AI’s Moral Economy of Memory: Consent, Debt, Justice

Courts put a price on past training while a major lab shifts to opt in with five year retention. Here is a practical playbook for consent, influence metering, compensation, and retention that rewards creators and sustains innovation.

This week, consent enters the room

For years, AI systems absorbed the open web and shadow libraries under a banner of progress. The implicit rule was scrape first, argue later. This week signaled a shift. On September 25, 2025, a federal judge in California preliminarily approved a $1.5 billion settlement in a landmark class action brought by authors over book datasets used for AI training. The approval is not final, but it shows that courts are ready to price certain forms of training and to police how those datasets were acquired. It turns a philosophical fight into a market reality. If training raised a model’s ability, that uplift now has a price attached, at least for some categories of data.

Only days later, one of the largest AI labs shifted the default around user data. Instead of assuming your chats are fair game for training, it introduced an explicit choice. If you opt in, your conversations can train future models and may be stored for five years. If you opt out, they will not be used for training and follow shorter retention windows. The default becomes a question, not a harvest. That reframe turns a silent taking into a visible trade.

Two signals in one week. A court converting authors’ claims into dollars. A lab converting user consent into access and retention. Together they invite a deeper question. If machines can remember, what do those memories owe?

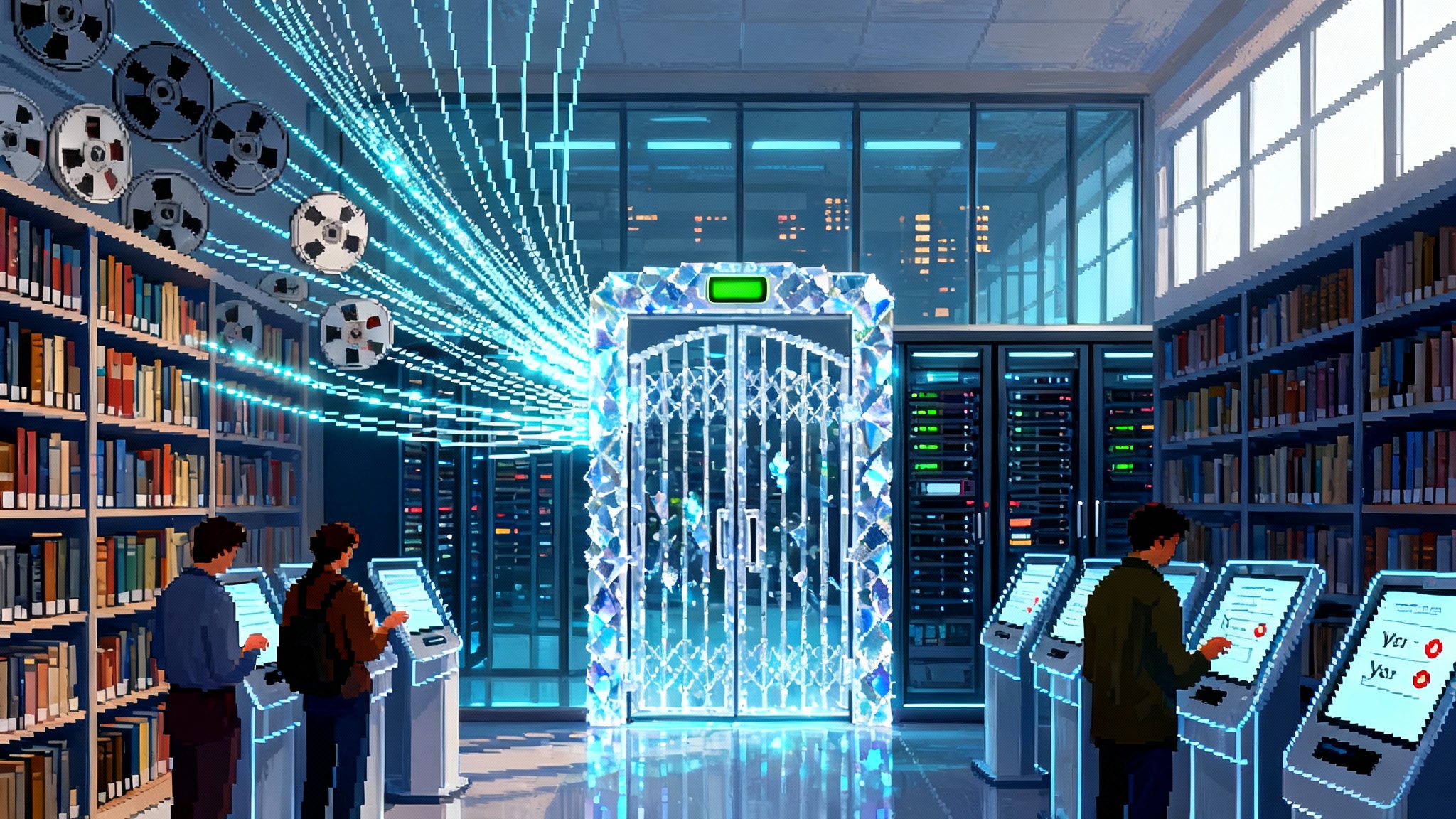

What it means for AI to remember

When we say a model learns, we compress a stack of mechanisms into a single word. There is raw storage that holds datasets. There are training runs that adjust billions of parameters so a model predicts the next token. There are retrieval systems that pull in documents at runtime, and there are safety systems that monitor for abuse. Memory exists across all of these layers.

Human memory feels like an inner room filled with books and fragments. Model memory is a probability field shaped by exposure. It does not memorize a paragraph the way a person does unless it is overfit or prompted to reproduce text that it saw. Yet memory still has a direction. Gradient steps imply a story of influence. If your book helped push the loss down, a slice of your labor lives inside the model’s capability. That is the moral center of this debate. Influence without acknowledgment is extraction. Influence with consent is partnership.

Learning versus copying is not just technical. It is a consent line.

The old argument tried to draw a clean border. Learning was portrayed as abstract and unowned. Copying was concrete and illegal. In practice the boundary is fuzzy. A model that repeats an author verbatim has copied. A model that never repeats yet uses patterns distilled from the author’s style has learned. Most real systems sit somewhere in the middle. That is why consent matters. Consent moves the argument from metaphysics to governance. With consent, the same gradient steps become legitimate. Without consent, they become a moral debt even when the law might call them fair use.

Consent also makes the public good legible. A museum can choose to let its digitized archives help train models in exchange for funding, attribution, or educator access. A news outlet can license content with terms that reinforce investigative work. A musician can opt in for a royalty per million tokens. No new theory of mind is required. What is required is a set of contracts and controls that respect the people behind the data. For a primer on consent models and common pitfalls, see our internal guide, data consent in AI practice.

Moral debts in machine memory

When a model’s ability to reason, summarize, code, or compose is materially increased by particular bodies of work, the model inherits a debt to those creators. Not a punitive debt, but a civic one. The settlement news hints at one way to settle such debts after the fact. The opt in policy hints at a better way to prevent them. A moral economy of memory would make these debts explicit and payable.

Think of three kinds of debts:

- Acquisition debt. If data was obtained without permission or against stated terms, the debt is owed even if later training is deemed lawful.

- Influence debt. If a specific corpus materially lifts a capability, rightsholders deserve a share of the value that capability enables.

- Stewardship debt. If a lab stores and processes data in ways that create risk, it owes clarity, minimization, and deletion rights commensurate with that risk.

These debts do not block innovation. They shape it.

Why consent changes the value question

Consent is not only a yes or no. It sets context. People and institutions agree to share under very different motives. Some want money. Some want visibility. Some want their work excluded from certain domains. Some want their contributions acknowledged in dashboards so their communities can see how their culture shapes the model.

Once consent is in the loop, value becomes a menu of exchanges. That is good for markets and good for culture. It recognizes that not all contributions are equal and not all contributors want the same thing. The five year retention window is part of that bargain. Longer retention increases both risk and potential future value, which justifies stronger controls and better compensation.

A social contract for cultural data

Here is a concrete sketch institutions and labs can adopt as AI becomes core infrastructure.

- Consent by default. Training on user generated or professionally produced content requires an explicit yes. No silent opt in. No prechecked boxes.

- Data minimization and local first. Collect only what you need. When possible, sample and aggregate before uploading. Prefer retrieval over training when a stable copy is sufficient.

- Transparent provenance. Every training batch ships with a signed manifest of sources, licenses, retention periods, and exclusion rules. The manifest is auditable by an independent body.

- Influence metering. Measure marginal contribution by corpus using holdout tests and attribution methods. Numbers do not need to be perfect to be fair. They must be consistent and visible. See our influence metering guide.

- Proportionate compensation. Blend a base rate for inclusion with a variable rate tied to measured influence. Niche expertise that unlocks safety, medical quality, or local knowledge earns a premium.

- Purpose constraints. Rightsholders can permit training for some applications and exclude others. A medical corpus can be used for clinical reasoning but not for ad targeting. Community archives can opt out of sensitive generation tasks.

- Retention ceilings and deletion rights. If consented data is held for five years, contributors can demand periodic reconsent or deletion after a shorter window. Deletion should propagate to training pipelines through scheduled refreshes when feasible.

- Attribution and feedback. Contributors get dashboards that show inclusion, estimated influence, and payouts. They can flag misuse and request red team tests on edge cases in their domain.

- Public interest carve outs. Libraries, research datasets, and endangered language corpora can participate under subsidized terms and stronger privacy controls. The goal is not to privatize knowledge, but to stop treating creative labor as a free raw material.

- Spillover duty of care. If a model trained on a sensitive corpus becomes a target for extraction, the lab must invest in output filtering, watermarking, and access controls proportionate to the risk borne by contributors.
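
To make the influence metering item concrete, here is a minimal sketch of one possible approach: leave-one-corpus-out ablation. It assumes a lab can score a model trained on any subset of corpora against a held-out benchmark. The `toy_evaluate` function, the corpus names, and every number in it are invented stand-ins for that expensive step, not measurements of anything.

```python
from typing import Callable, Dict, FrozenSet


def leave_one_out_lift(
    corpora: FrozenSet[str],
    evaluate: Callable[[FrozenSet[str]], float],
) -> Dict[str, float]:
    """Marginal lift per corpus: score with all corpora minus score without it."""
    full_score = evaluate(corpora)
    return {
        name: full_score - evaluate(corpora - {name})
        for name in sorted(corpora)
    }


def toy_evaluate(included: FrozenSet[str]) -> float:
    # Hypothetical stand-in for "train on these corpora, then score a
    # held-out benchmark". The weights below are illustrative only.
    quality = {"textbooks": 6.0, "news": 3.0, "forum": 1.0}
    return 50.0 + sum(quality[name] for name in included)


lifts = leave_one_out_lift(
    frozenset({"textbooks", "news", "forum"}), toy_evaluate
)
for name, lift in lifts.items():
    print(f"{name}: {lift:+.1f} benchmark points")
```

The toy evaluator is additive, so the lifts simply recover its weights. Real training is not additive, and retraining once per corpus is costly, which is why the method matters less than applying the same method consistently and publishing it.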

How to build this without slowing innovation

There is a fear that consent, compensation, and retention controls will suffocate progress. In practice the opposite is more likely. Clear rules crowd in investment. Businesses prefer predictable input costs over legal fog. Here are pragmatic pieces that can be deployed now.

- Machine readable licenses. Every dataset carries a small JSON sidecar that specifies consent, retention, and purpose limits. Pipelines enforce these at load time.

- Clearinghouses. Neutral organizations collect opt ins from creators, manage payouts, and provide audit logs. This arrangement already exists in music licensing and can extend to text and images.

- Attribution telemetry. Labs run periodic ablation studies that estimate each corpus’s marginal lift on benchmark suites. The telemetry feeds a rate card, not a courtroom. We outline one approach in our memory ledger framework.

- Retention lockers. Storage systems enforce per source retention automatically. When the clock runs out, the data and its derivatives are queued for exclusion in the next training refresh.

- Redaction and protected classes. Before data enters a training job, automated tools scrub sensitive attributes and remove content marked as excluded by communities at risk.
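
As an illustration of the license sidecar and retention locker ideas above, the following sketch shows what a load-time check might look like. The sidecar schema, its field names, and the `may_load` helper are all hypothetical; no existing standard is implied. The 1825 day retention value approximates a five year window.

```python
import json
from datetime import date, timedelta

# Hypothetical sidecar; field names are illustrative, not a standard.
SIDECAR = json.loads("""
{
  "source": "example-archive",
  "consent": "opt_in",
  "consented_on": "2025-10-01",
  "retention_days": 1825,
  "allowed_purposes": ["clinical_reasoning", "general_training"]
}
""")


def may_load(sidecar: dict, purpose: str, today: date) -> bool:
    """Return True only if consent, purpose, and retention all permit use."""
    if sidecar.get("consent") != "opt_in":
        return False  # no explicit yes, no training
    if purpose not in sidecar.get("allowed_purposes", []):
        return False  # purpose constraint set by the rightsholder
    consented_on = date.fromisoformat(sidecar["consented_on"])
    expires = consented_on + timedelta(days=sidecar["retention_days"])
    return today <= expires  # past the retention clock, exclude the data


print(may_load(SIDECAR, "general_training", date(2026, 1, 1)))
print(may_load(SIDECAR, "ad_targeting", date(2026, 1, 1)))
print(may_load(SIDECAR, "general_training", date(2031, 1, 1)))
```

The check fails closed: a missing consent field or an unlisted purpose excludes the data rather than admitting it.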

None of this requires perfect measurement. It requires good faith, repeatable methods, and the willingness to be audited.

Pricing memory in practice

Prices anchor norms. If a court sets a dollar figure per book for past use, markets will quickly triangulate rates for forward use. The figures will vary by domain: technical textbooks that boost reasoning, investigative archives that teach fact patterns, journalism that improves currency and coverage, and community corpora that grant access to underrepresented dialects. The key is to avoid one price to rule them all.

A workable model looks like this:

- Base inclusion fee. Reflect the cost of curation and the willingness to participate.

- Variable uplift fee. Tie payments to measured contribution for target capabilities. Treat it as a share of the memory that matters for the tasks where value is created.

- Safety bonus. Reward sources that reduce harms or improve robustness on critical edge cases.

- Retention multiplier. Increase the rate when contributors allow longer storage windows and future retraining, with tiered bands and opt down rights.
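
The four components above can be combined in many ways. The sketch below shows one possible combination, with loudly stated assumptions: the tier boundaries, the multiplier values, the choice to apply the multiplier to the whole payout, and every number in the example are invented for illustration.

```python
def retention_multiplier(retention_years: float) -> float:
    """Hypothetical tiered bands: longer permitted retention earns a higher rate."""
    if retention_years <= 1:
        return 1.0
    if retention_years <= 3:
        return 1.25
    return 1.5


def payout(
    base_fee: float,
    measured_lift: float,
    uplift_rate: float,
    safety_bonus: float,
    retention_years: float,
) -> float:
    """Base fee plus variable uplift plus safety bonus, scaled by retention band."""
    # Negative measured lift never reduces the payout below the base fee.
    variable_fee = max(measured_lift, 0.0) * uplift_rate
    subtotal = base_fee + variable_fee + safety_bonus
    return subtotal * retention_multiplier(retention_years)


example = payout(
    base_fee=100.0,      # flat inclusion fee
    measured_lift=6.0,   # benchmark points attributed to the corpus
    uplift_rate=50.0,    # currency units per point of lift
    safety_bonus=0.0,
    retention_years=5,
)
print(example)
```

With these made-up inputs the payout is 600.0: a base of 100 plus 300 in uplift, scaled by the 1.5 multiplier for the longest band. A contributor who opts down to one year would receive 400.0 for the same measured lift.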

For small creators the goal is not to nickel and dime, but to make dignity visible. A predictable payout, even if modest, beats the feeling that your life’s work dissolved into a gradient with no trace.

Addressing common objections

- What about fair use? Fair use will continue to apply, especially to research and noncommercial contexts. Consent and compensation are not rejections of fair use. They are a better fit for industrial scale systems that internalize value from others’ work.

- What about open culture? Open licenses remain vital. Consent can coexist with openness. People can say yes and choose permissive terms. The difference is that the yes should be deliberate, not assumed.

- What about the commons? The answer is governance. If we want a robust knowledge commons, we can fund shared datasets openly and treat them as civic infrastructure with strong privacy protections and no extractive exclusivity.

- What about global inequality? Wealthy institutions may monetize while others cannot. This is why we need subsidized participation for public interest datasets, language revitalization projects, and resource poor creators. A share of commercial payouts should fund these.

- What about friction and speed? Consent systems add overhead. So did copyright collecting societies, spam filters, and security reviews. We built them because the alternative was worse.

What changed is not only policy. It is posture.

A judge asked the market to assign a price to memory gathered without permission. A lab asked users for permission and offered a longer retention window in return. Those moves do not settle the debate, but they change its center of gravity. From now on, every serious player will need to answer three questions:

- How do you obtain consent for the data that shapes your model?

- How do you measure and compensate influence from specific corpora?

- How do you match retention periods to real risk, with clear rights to reverse course?

The industry can respond with more scraping, more litigation, and more public distrust. Or it can treat memory as a social contract that must be earned, kept, and renewed.

A closing proposal: the memory ledger

Let us establish a norm. No major model release without a memory ledger. The ledger lists data sources by category, consent status, retention window, and contribution score. It is not a perfect map of the training universe, but it gives society a handle. It allows creators to see where they stand and to enter the conversation with power. It gives users a choice and a clock. It gives labs a shield and a compass.
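
For readers who want to picture the ledger as a published artifact, here is a minimal sketch of what its entries could look like. The `LedgerEntry` type, its field names, and the sample rows are hypothetical; they only mirror the four attributes named above.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class LedgerEntry:
    category: str              # e.g. "books", "user_chats"
    consent_status: str        # e.g. "opt_in", "licensed", "public_domain"
    retention_days: int        # how long the source data may be held
    contribution_score: float  # output of whatever metering method is published


def render_ledger(entries: List[LedgerEntry]) -> str:
    """Serialize the ledger as JSON, highest contribution first."""
    ordered = sorted(entries, key=lambda e: e.contribution_score, reverse=True)
    return json.dumps([asdict(e) for e in ordered], indent=2)


# Sample rows are invented for illustration.
ledger = render_ledger([
    LedgerEntry("user_chats", "opt_in", 1825, 2.5),
    LedgerEntry("books", "licensed", 3650, 6.0),
])
print(ledger)
```

Publishing by category rather than by individual work keeps the ledger readable while still giving creators and auditors something specific to question.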

If you build AI, ask not only what your model can remember. Ask what its memory owes. If you create, ask not only whether your work will be learned from. Ask how it will be valued and how that value will be made visible. If you govern, design rules that reward consent, protect the commons, and measure influence where it counts.

That is the moral economy of memory. It starts with yes or no. It matures into how much, for how long, and for what purpose. This week the line moved in the right direction. Hold it there, then build on it.