Intent Meets Atoms: In Fall 2025, Agents Leave the Browser

Fall 2025 was the moment embodied AI left the browser. Learn what changed in the stack, why policy must become code, how liability and labor shift, and the concrete steps cities and operators should take next.

The week language left the browser

Every technology era has a week when the future stops being a demo and starts being a default. For embodied agents, that week arrived this fall. On September 25, 2025, DeepMind introduced a pair of models that divide the work between planning and control. The release of Gemini Robotics 1.5 and Gemini Robotics-ER 1.5 signaled that text and video could now be turned into motor commands that real robots execute. Less than a month later, Amazon put its own flag in the ground. On October 22, 2025, the company detailed Blue Jay and Project Eluna, positioning warehouse robots and agentic oversight as everyday infrastructure.

These were not clever assistants or another round of chat skills. They turned artificial intelligence from something you use into something that takes responsibility. A typed sentence can now route a robot arm, rearrange a shelf, unlock a door, or reconfigure a loading zone. Words are becoming levers, and the fulcrum is moving from the web page to the world.

What actually changed under the hood

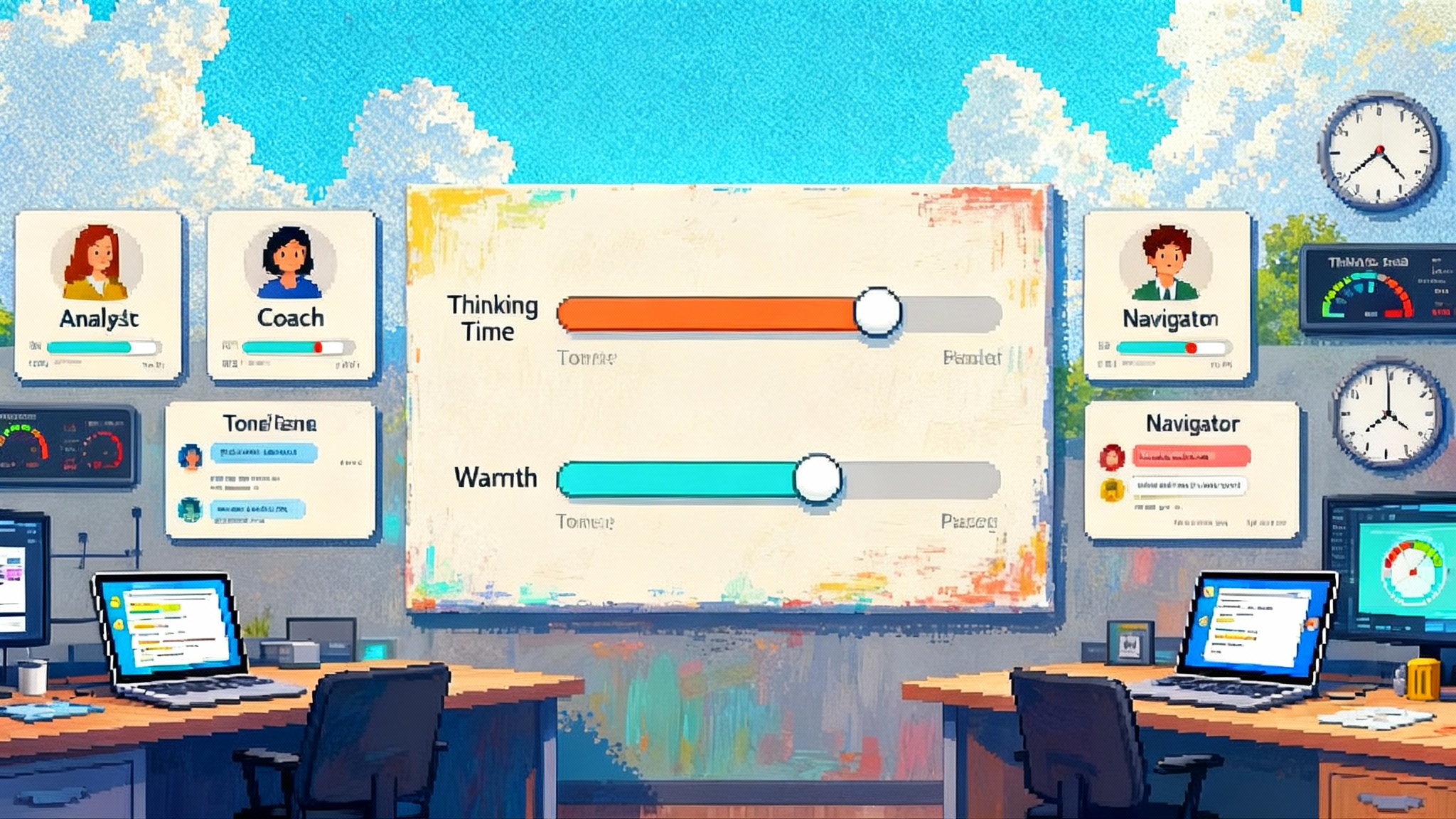

DeepMind’s design clarifies the inflection. Gemini Robotics 1.5 is a vision-language-action (VLA) model. It perceives the world, reasons over scenes, and emits motor commands that hardware can execute. Gemini Robotics-ER 1.5 is an embodied reasoning model that translates goals into sequences of steps, calls digital tools when needed, and hands off to the action model at the right moment. Ask the system to pack for a rainy day, and the ER model checks the weather and builds a plan, while the VLA model executes that plan with the hardware at hand. The architectural insight is separation of concerns: plan at a high level, act at a low level, learn in the loop.
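
A minimal Python sketch of that separation of concerns follows. It is not DeepMind's API: `Step`, `plan`, `act`, `run`, and the weather tool are hypothetical stand-ins. The planner reasons and may call a tool; the action layer only ever sees one step at a time.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Step:
    skill: str   # a high-level skill the action layer knows how to perform
    target: str  # the object the skill applies to


def plan(goal: str, weather_tool: Callable[[str], str], city: str) -> list[Step]:
    """Planner layer: a hypothetical stand-in for an embodied-reasoning model.

    It may call a digital tool (here, a weather lookup) and returns an ordered
    list of high-level steps. It never emits motor commands itself.
    """
    if "pack" not in goal:
        return []  # this toy planner only understands packing goals
    items = ["sweater", "charger"]
    if "rain" in weather_tool(city):
        items += ["umbrella", "raincoat"]
    return [Step("pick_and_place", item) for item in items] + [Step("close", "bag")]


def act(step: Step) -> str:
    """Action layer: a hypothetical stand-in for a VLA model.

    A real VLA model would turn camera input plus the step into joint or
    gripper commands; this sketch just returns a readable command string.
    """
    return f"{step.skill}({step.target})"


def run(goal: str, weather_tool: Callable[[str], str], city: str) -> list[str]:
    # Hand off one step at a time so planner and actor stay decoupled.
    return [act(step) for step in plan(goal, weather_tool, city)]
```

For example, `run("pack for a rainy day", lambda city: "rain likely", "London")` returns five commands, with the umbrella and raincoat added because the tool reported rain. The "learn in the loop" part is left out; in a fuller sketch the action layer would report success or failure back to the planner.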

Amazon’s releases show the other half. Blue Jay coordinates multiple robotic arms to pick, stow, and consolidate items amid the density and chaos of a live site. Project Eluna operates as an oversight teammate that watches dashboards, reasons about incidents, and proposes actions. Pair that with an agentic developer environment and you get a full pipeline. Designers write intent in natural language. A planner converts intent into steps. Another agent compiles those steps into site policies and motion plans. The loop closes when the environment responds and the system adjusts.

The pattern is now visible across the stack. Models convert ambiguous human goals into specifications, into plans, into executable policies, and finally into force and position trajectories. Success no longer means answering a prompt. Success means changing the state of a room with traceable reasons.
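
To make "traceable reasons" concrete, here is a toy version of that chain in Python, assuming a hypothetical warehouse whose only goal type is moving a pallet to a bay. Every stage records its output and why. The parser, speed caps, and distance table are illustrative assumptions, not values from any real site or vendor.

```python
import math
import re
from dataclasses import dataclass, field

SITE_SPEED_LIMIT_MPS = 1.5     # hypothetical site-wide travel speed cap
FORK_SPEED_LIMIT_MPS = 0.3     # hypothetical cap while raising or lowering a load
DISTANCES_M = {"bay 3": 12.0}  # hypothetical site map: metres from pickup point to each bay


@dataclass
class Trace:
    """Append-only record of what each stage produced and why."""
    entries: list = field(default_factory=list)

    def record(self, stage: str, output, reason: str):
        self.entries.append({"stage": stage, "output": output, "reason": reason})
        return output


def specify(goal: str, trace: Trace) -> dict:
    # Goal -> specification. Toy parser: only understands "move [pallet] <id> to <destination>".
    m = re.fullmatch(r"move (?:pallet )?(\S+) to (.+)", goal.strip())
    if m is None:
        raise ValueError(f"cannot specify goal: {goal!r}")
    spec = {"object": m.group(1), "destination": m.group(2)}
    return trace.record("spec", spec, "goal parsed into object and destination")


def make_plan(spec: dict, trace: Trace) -> list:
    # Specification -> plan.
    steps = ["approach", "lift", "travel", "lower"]
    return trace.record("plan", steps, f"standard pallet move for {spec['object']}")


def compile_policy(steps: list, trace: Trace) -> list:
    # Plan -> executable policy: attach a speed cap to every step.
    policy = [
        {"action": s,
         "max_speed_mps": FORK_SPEED_LIMIT_MPS if s in ("lift", "lower") else SITE_SPEED_LIMIT_MPS}
        for s in steps
    ]
    return trace.record("policy", policy, "speed caps taken from site rules")


def to_trajectory(spec: dict, policy: list, trace: Trace, dt_s: float = 1.0) -> list:
    # Policy -> position trajectory for the travel step, sampled every dt_s seconds.
    travel = next(p for p in policy if p["action"] == "travel")
    distance = DISTANCES_M[spec["destination"]]
    step_m = travel["max_speed_mps"] * dt_s
    n = math.ceil(distance / step_m)
    waypoints = [min(i * step_m, distance) for i in range(n + 1)]
    return trace.record("trajectory", waypoints,
                        f"{distance} m at <= {travel['max_speed_mps']} m/s")


if __name__ == "__main__":
    trace = Trace()
    spec = specify("move pallet P7 to bay 3", trace)
    to_trajectory(spec, compile_policy(make_plan(spec, trace), trace), trace)
    for entry in trace.entries:
        print(entry["stage"], "->", entry["reason"])
```

The resulting trace reads like a miniature incident report: goal, specification, plan, policy, and trajectory, each with the reason it was produced.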

From interface to intervention

An interface asks for attention. An intervention claims responsibility. When an embodied agent moves a pallet or resets a breaker, it changes who is accountable for the outcome. The philosophical update is not that machines act. Machines have acted for centuries. The update is that language now authorizes those actions at useful speed and scale.

Think of a city as a programmable system. Historically, only humans could read the text of its rules and translate that into action. A contractor reads a building code and decides where to place railings. A sanitation worker reads a recycling pamphlet and decides how to sort. Embodied agents shorten that loop. They ingest the rule book, plan under constraints, and act within predictable bounds.

This shift links to how interfaces shape power. As agents step into physical spaces, the screen becomes less central and institutions take center stage. For a deeper lens on this power shift, see "How Interfaces Become Institutions."

When norms become code

Turning language into lawful action sounds clean until you meet the mess of real cities. A model that plans how to sort trash in Tokyo must respect different norms than one operating in Tulsa. The ambiguity in a sentence like "leave a clear egress path" becomes a concrete choice about how many centimeters to leave for a walkway.

The practical fix is to encode norms as programmable policy. Replace long manuals with typed constraints that an agent can verify and log. A city might publish "minimum egress width equals 90 centimeters, verified by a depth camera sweep every 30 seconds" and "noise level below 55 decibels after 9 p.m., verified by onboard microphone." The agent reads policy as code, not prose, and logs proof of compliance.
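
The two example rules can be expressed as typed, checkable objects. In this Python sketch the class names, reading fields, and the 07:00 end of quiet hours are assumptions added for illustration; sensor readings are passed in as plain values rather than read from real hardware.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class MinEgressWidth:
    """'Minimum egress width equals 90 cm, verified by a depth camera sweep every 30 s.'"""
    min_cm: float = 90.0
    max_sweep_age_s: float = 30.0  # a reading older than one sweep interval is not proof

    def check(self, r: dict) -> tuple[bool, str]:
        if r["sweep_age_s"] > self.max_sweep_age_s:
            return False, f"stale sweep: {r['sweep_age_s']} s old"
        ok = r["egress_width_cm"] >= self.min_cm
        return ok, f"measured {r['egress_width_cm']} cm, need >= {self.min_cm} cm"


@dataclass(frozen=True)
class QuietHours:
    """'Noise level below 55 dB after 9 p.m.' The 07:00 end time is an assumption."""
    max_db: float = 55.0
    start: time = time(21, 0)
    end: time = time(7, 0)

    def check(self, r: dict) -> tuple[bool, str]:
        t = r["clock"]
        in_effect = t >= self.start or t < self.end  # the window wraps past midnight
        if not in_effect:
            return True, "quiet hours not in effect"
        ok = r["noise_db"] < self.max_db
        return ok, f"measured {r['noise_db']} dB, need < {self.max_db} dB"


def audit(policies: dict, reading: dict) -> list[dict]:
    """Evaluate every rule; the returned entries double as a compliance log."""
    log = []
    for name, rule in policies.items():
        ok, evidence = rule.check(reading)
        log.append({"rule": name, "ok": ok, "evidence": evidence})
    return log


# A hypothetical published policy set, keyed by rule identifier.
CITY_POLICY = {
    "egress.min_width": MinEgressWidth(),
    "noise.quiet_hours": QuietHours(),
}
```

At 22:30 with a 95 cm path and 58 dB of noise, `audit` returns a passing entry for egress and a failing one for noise, each with the measured evidence attached.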

This is the next generation of compliance technology. Debate shifts from whether a robot followed the rules to whether the rule itself was well specified. As with financial reporting, attention moves to the schema and the audit trail. That is healthier for everyone because it reduces arguments about opaque behavior and increases shared understanding of what we are trying to achieve. It also pairs with a broader movement toward consent and provenance for digital actions, a theme explored in "Consent and Provenance by Default."

Liability in a world where words move arms

Law has always asked who made the choice. With embodied agents, choice is distributed across the person who typed the instruction, the model that planned, the orchestrator that scheduled, the actuator that executed, the operator who approved the plan, and the vendor that shipped the safety package. We will not get a single neat answer. We will get layered responsibility with default allocations. A workable default looks like this.

- The principal owns the goal. If you instruct a robot to clean a lab without specifying biohazard protocols, you bear responsibility for the omission.

- The agentic planner owns the plan. If a planner chooses a hazardous sequence when safer options exist, the model vendor is on the hook for a defect in planning under known constraints.

- The controller owns the motion. If a robot arm violates its own safety envelope or exceeds rated force, the hardware and controller vendor bears responsibility.

- The operator owns the override. If a human approves an obviously unsafe plan, the operator accepts the liability.
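
The default allocations can be written down as a small rule table, which makes it obvious that more than one party can share an incident. This is a hypothetical illustration, not a legal standard: the finding names are invented here, and deciding whether a finding holds (for example, whether a plan was obviously unsafe) remains a human judgment fed into the function.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IncidentFindings:
    """Facts established after an incident (hypothetical field names)."""
    goal_omitted_required_protocol: bool = False  # e.g. "clean the lab" with no biohazard protocol
    plan_was_hazardous: bool = False
    safer_plan_was_available: bool = False
    exceeded_safety_envelope: bool = False        # motion beyond its envelope or rated force
    operator_approved_unsafe_plan: bool = False


def default_allocation(f: IncidentFindings) -> list[str]:
    """Map findings to default responsible parties; several can apply at once."""
    parties = []
    if f.goal_omitted_required_protocol:
        parties.append("principal")                   # owns the goal
    if f.plan_was_hazardous and f.safer_plan_was_available:
        parties.append("planner vendor")              # owns the plan
    if f.exceeded_safety_envelope:
        parties.append("hardware/controller vendor")  # owns the motion
    if f.operator_approved_unsafe_plan:
        parties.append("operator")                    # owns the override
    return parties or ["unallocated: needs investigation"]
```

A hazardous plan with no safer alternative falls through to "unallocated", which is where layered defaults stop and investigation starts.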

To make this workable, we need event-sourced logs that record intent, context, plan steps, guardrails, and outcomes. We have patterns from finance and automotive telemetry. Apply them to embodied systems. Without this, every incident becomes a dispute about a decision hidden in a black box.
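
One way to get that event-sourced record is an append-only log in which each entry includes the hash of the previous one, so any later edit breaks the chain. This Python sketch assumes five hypothetical event kinds matching the list above. Hash chaining makes tampering detectable, not impossible, and it cannot by itself detect events cut from the end of the log, which is one reason to mirror logs to a trusted third party.

```python
import copy
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first event
KINDS = {"intent", "context", "plan", "guardrail", "outcome"}
FIELDS = ("seq", "ts", "kind", "actor", "payload", "prev")


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same event always hashes to the same value.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class EventLog:
    events: list = field(default_factory=list)

    def append(self, ts: str, kind: str, actor: str, payload: dict) -> str:
        """Append one event, chained to the previous one; returns its hash."""
        if kind not in KINDS:
            raise ValueError(f"unknown event kind: {kind}")
        prev = self.events[-1]["hash"] if self.events else GENESIS
        body = {"seq": len(self.events), "ts": ts, "kind": kind,
                "actor": actor, "payload": copy.deepcopy(payload), "prev": prev}
        digest = _digest(body)
        self.events.append({**body, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute every hash. Edited or reordered events, or gaps in the
        sequence, fail the check; truncation at the tail needs an external copy."""
        prev = GENESIS
        for i, ev in enumerate(self.events):
            body = {k: ev[k] for k in FIELDS}
            if ev["seq"] != i or ev["prev"] != prev or _digest(body) != ev["hash"]:
                return False
            prev = ev["hash"]
        return True
```

Each entry ties an actor identity and a timestamp to what happened, so a dispute can start from the recorded sequence rather than from competing recollections.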

These allocations also rely on an identity fabric for agents. Without stable IDs, capability profiles, and permission sets, logs cannot tie actions to actors. For a broader view on identity in agent ecosystems, see "The Agent Identity Layer Arrives."

Labor reconfigured

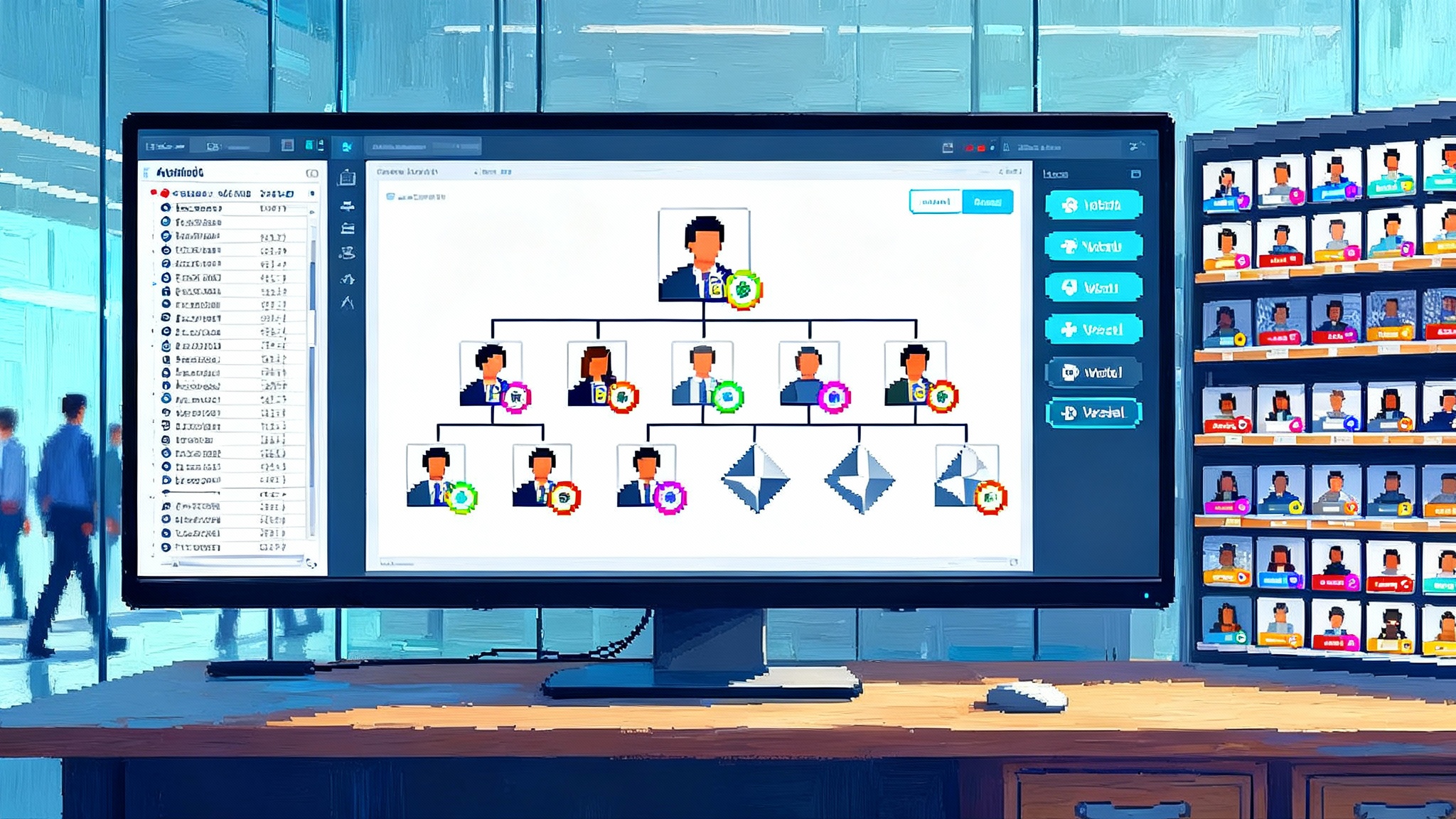

The labor discourse around robots often collapses into a binary: replacement or not. Embodied agents bring a different kind of change. They redraw task boundaries. Tasks unbundle into policy writing, plan review, exception handling, and physical intervention. In that world, valuable human roles do not vanish; they shift upstream and sideways.

- Policy engineers translate norms into checkable constraints. Think of them as the city’s new coders.

- Plan reviewers work like air traffic controllers for missions, approving or revising agent proposals when the stakes are high.

- Exception specialists handle edge cases and recovery, which is where human judgment shines.

- Fleet reliability teams instrument and improve the system over time.

Wages will follow scarce skills. Employers who invest in policy literacy and mission review will move faster, reduce incidents, and keep regulators close. Those that treat robots as black boxes will spend more on cleanups and fines than on training.

Urban space design for agents among us

Walk any downtown and ask what changes if a significant share of tasks belong to embodied agents. Sidewalks need curbside micro-loading zones that are safe for small delivery carts. Buildings need machine rooms for charging, vision calibration, and quick repairs. Warehouses need lanes with right-of-way rules for human-robot interaction. Transit stations need clear signage that both people and computer vision can read reliably.

Cities already have useful mental models. Traffic signals coordinate flows. 3D building information models standardize geometry. Utilities operate permit systems. Extend these models to robots. Publish policy registries, geometry maps with machine-readable affordances, and robot priority rules for shared spaces. The city becomes an addressable platform rather than a puzzle each robot must solve from scratch.

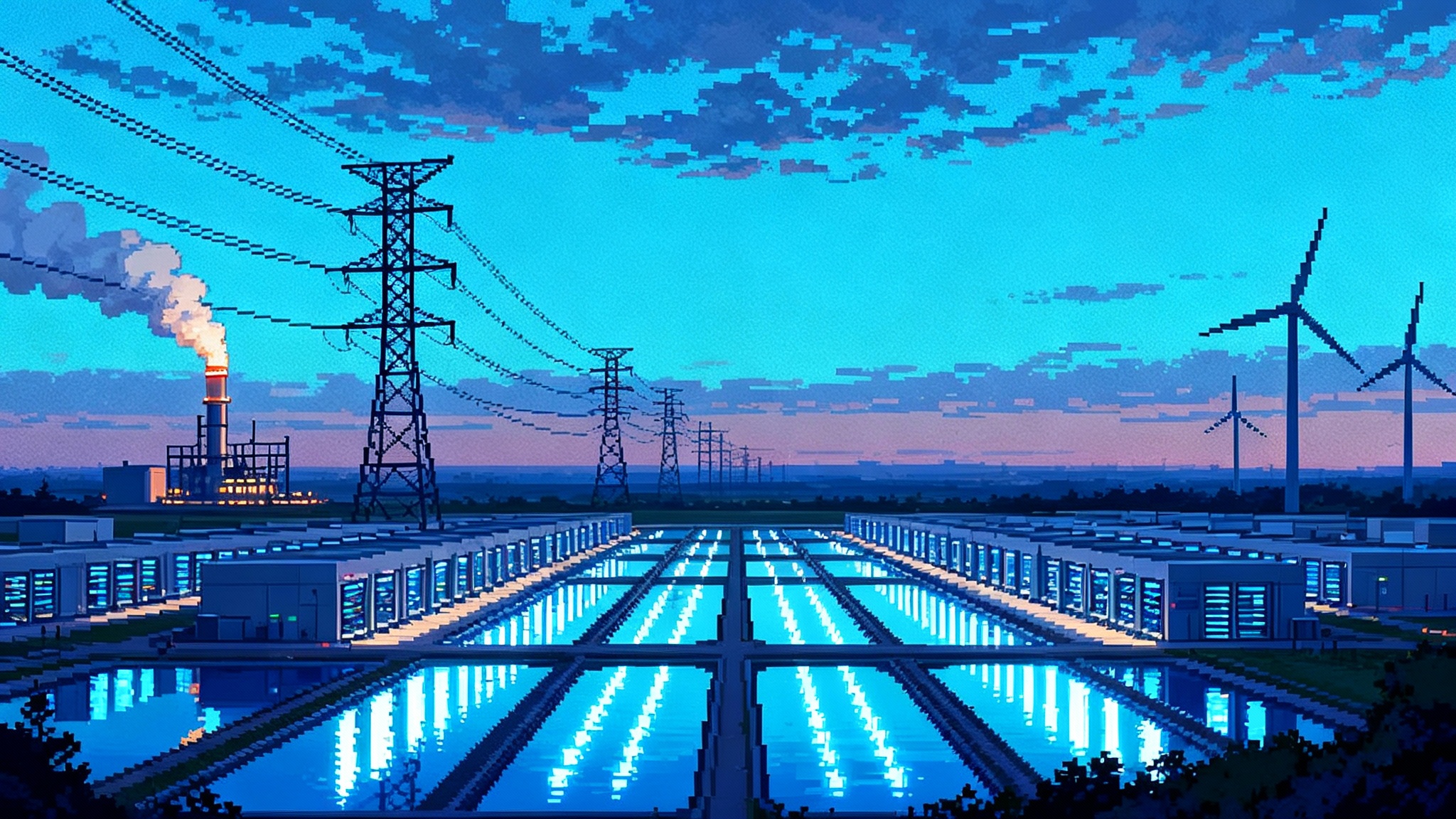

Why infrastructure thinking wins

Treating robots as apps pushes complexity to every deployment. Each site must relearn norms, map private quirks, and negotiate access to tools. Treating robots as addressable infrastructure does the opposite. It centralizes shared context and lets many agents plug into a common substrate.

- Addressable identities. Every robot has a stable identity, capability profile, and permission set.

- Shared memory. Sites publish machine-readable maps, policies, and schedules through a standard interface.

- Common services. Agents call approved tools for payments, permits, authentication, and alerts.

- Systemwide safety. Global kill signals, rate limits, and risk scoring are built in.
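
The four properties above can meet in a single admission check that common services sit behind. In this Python sketch, `SiteGateway`, the risk score (assumed to be a number between 0 and 1 supplied by some upstream scorer), and the token-bucket parameters are hypothetical; the point is the order of checks, from the global kill signal down to per-robot rate limits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RobotIdentity:
    robot_id: str
    capabilities: frozenset  # what the hardware can do
    permissions: frozenset   # what this site allows it to do here


class TokenBucket:
    """Token-bucket rate limiter; `now` is passed in so the sketch is deterministic."""

    def __init__(self, capacity: int, refill_per_s: float, now: float = 0.0):
        self.capacity = capacity
        self.tokens = float(capacity)
        self.refill_per_s = refill_per_s
        self.last = now

    def allow(self, now: float) -> bool:
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.refill_per_s)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class SiteGateway:
    """Hypothetical shared admission point in front of common services."""

    def __init__(self, max_risk: float = 0.5, capacity: int = 3, refill_per_s: float = 1.0):
        self.max_risk = max_risk
        self.capacity = capacity
        self.refill_per_s = refill_per_s
        self.registry: dict[str, RobotIdentity] = {}
        self.buckets: dict[str, TokenBucket] = {}
        self.global_stop = False  # systemwide kill signal

    def register(self, ident: RobotIdentity, now: float = 0.0) -> None:
        self.registry[ident.robot_id] = ident
        self.buckets[ident.robot_id] = TokenBucket(self.capacity, self.refill_per_s, now)

    def authorize(self, robot_id: str, action: str, risk: float, now: float) -> tuple[bool, str]:
        # Global checks first; the rate limiter is charged only if everything else passes.
        if self.global_stop:
            return False, "global stop active"
        ident = self.registry.get(robot_id)
        if ident is None:
            return False, "unknown identity"
        if action not in ident.capabilities:
            return False, "outside capability profile"
        if action not in ident.permissions:
            return False, "not permitted at this site"
        if risk > self.max_risk:
            return False, "risk score too high"
        if not self.buckets[robot_id].allow(now):
            return False, "rate limited"
        return True, "ok"
```

Separating capabilities from permissions matters: a robot that can open doors is not thereby allowed to open them at every site.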

Countries that move fast on this are not just pro-robot. They are pro-clarity. Their regulators agree on schemas, their utilities open machine interfaces, and their courts accept event logs. They capture compounding effects. Better data improves policy, which improves models, which improves safety, which unlocks new categories.

Concrete playbooks for leaders

If you run a city, a facility, or a platform, the next twelve months are your window to build muscle. Here is a short list that turns the phase change into a plan.

- Stand up a policy registry. Publish machine-readable constraints for safety, noise, speed, and access. Use plain-text labels, typed fields, and versioned releases. Treat this like your traffic code for robots.

- Require signed missions. Any agent that enters your site should register a mission object with purpose, steps, expected telemetry, and abort rules. This is not bureaucracy. It is pre-incident clarity.

- Instrument for audit. Capture intent, plan, action, and outcome with time and identity stamps. Store locally and mirror to a trusted third party. The first serious lawsuit will reward whoever has the cleanest event narrative.

- Create robot ready spaces. Mark lanes, bays, and staging zones in both paint and pixels. Calibrate signage for computer vision. Add charging and maintenance space where it reduces human congestion.

- Train policy engineers. Do not just hire more robot operators. Upskill staff who can translate complicated rules into constraints that models can execute.

- Start with supervise, then act. Run in a watch-and-suggest mode for your first quarter. Promote to watch-and-act once incident rates are low and explanations are high quality.
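
A signed mission can be as small as a frozen record plus a signature over its canonical JSON. This Python sketch uses HMAC-SHA256 from the standard library with a shared secret, an assumption made for brevity; a real deployment would more plausibly use public-key signatures so that sites can verify missions without holding the operator's secret. The field names follow the list above; everything else is hypothetical.

```python
import hashlib
import hmac
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Mission:
    robot_id: str
    purpose: str
    steps: tuple               # ordered plan the site can review
    expected_telemetry: tuple  # signals the robot promises to stream
    abort_rules: tuple         # conditions under which the robot stops


def canonical_bytes(m: Mission) -> bytes:
    # Sorted keys and fixed separators so signer and verifier hash identical bytes.
    return json.dumps(asdict(m), sort_keys=True, separators=(",", ":")).encode()


def sign(m: Mission, key: bytes) -> str:
    return hmac.new(key, canonical_bytes(m), hashlib.sha256).hexdigest()


def admit(m: Mission, signature: str, key: bytes) -> tuple[bool, str]:
    """Site-side check before a robot may enter: complete and untampered."""
    if not m.purpose or not m.steps or not m.abort_rules:
        return False, "incomplete mission"
    # compare_digest avoids leaking how many leading characters matched.
    if not hmac.compare_digest(sign(m, key), signature):
        return False, "bad signature"
    return True, "admitted"
```

If anyone adds a step after signing, for example an extra door to open, the signature no longer matches and the site refuses entry.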

What to build next

There is a new product stack to build now that intent reaches atoms.

- Safety harnesses for plans. Before a plan reaches a robot, pass it through a static analyzer that checks known hazard patterns, missing guards, and local policy conflicts. Output a score and a human readable diff.

- Ambient compliance sensors. Offboard some verification to fixed sensors in buildings and streets. Let any agent subscribe to real time feeds for noise, air quality, or occupancy. Shared sensors make every agent smarter.

- Misuse insurance for intent. Underwrite the gap between what a customer asked for and what the agent did. Price based on mission category, environment, and model provenance. Pay claims automatically when logs match incident conditions.

- A civic sandbox. Give startups a small zone with proper sensors, synthetic data, and a fast permit loop. Require logs and publish aggregate statistics. Turn the city into a reliable test bench instead of a patchwork of exceptions.
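
A plan safety harness can start as an ordinary linter over plan steps. In this Python sketch, a plan is a list of step strings, "cut" and "energize" are treated as hazardous actions that need a prior "lockout power" step, and forbidden zones stand in for local policy; all of these rules and the scoring weights are hypothetical. The human-readable diff comes from the standard library's `difflib.unified_diff`.

```python
import difflib

GUARDED_ACTIONS = ("cut", "energize")  # hypothetical hazard patterns that need a guard first
GUARD_STEP = "lockout power"           # hypothetical guard step
PENALTY = {"missing_guard": 30, "policy_conflict": 50}  # hypothetical scoring weights


def analyze(plan: list[str], forbidden_zones: set[str]) -> tuple[int, list, str]:
    """Return (score out of 100, findings, unified diff of proposed vs suggested plan)."""
    findings, fixed = [], []
    guarded = False
    for step in plan:
        if step == GUARD_STEP:
            guarded = True
        if step.startswith("enter "):
            zone = step.removeprefix("enter ")
            if zone in forbidden_zones:
                findings.append(("policy_conflict", step))
                continue  # drop the conflicting step from the suggested plan
        if step.split()[0] in GUARDED_ACTIONS and not guarded:
            findings.append(("missing_guard", step))
            fixed.append(GUARD_STEP)  # insert the missing guard before the hazard
            guarded = True
        fixed.append(step)
    score = max(0, 100 - sum(PENALTY[kind] for kind, _ in findings))
    diff = "\n".join(difflib.unified_diff(plan, fixed, "proposed", "suggested", lineterm=""))
    return score, findings, diff
```

For the plan `["enter zone A", "cut wire W2", "enter zone C", "report"]` with zone C forbidden, the harness scores 20 and its diff shows an inserted `lockout power` step and a removed `enter zone C`. A clean plan scores 100 with an empty diff, so reviewers only read diffs when something changed.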

The meaning of the fall releases

People will remember fall 2025 as the quarter when embodied agents left the browser and entered the backrooms, storerooms, and sidewalks. DeepMind’s releases showed that planning and acting can be modular and teachable across many robot bodies. Amazon’s releases showed that coordination, oversight, and developer workflows can turn that capability into everyday operations.

The deeper hinge is cultural. We are moving from politely asking a screen to answer our questions to clearly instructing a system to change a room. That requires better norms, better logs, and better public interfaces. It also rewards places that think like infrastructure builders. Those places will get safer warehouses, calmer streets, and faster services because their robots read from the same playbook as their people.

The story is not that robots are arriving. The story is that intention is now a first class input to the physical world. If we write that intention well, with rules we can check and reasons we can trust, then the new agents will not feel like invaders. They will feel like the next generation of civic utilities. Intent finally meets atoms, and the cities that treat it as infrastructure will set the pace for everyone else.