The Personality Turn: GPT-5.1 Makes Persona the Platform

GPT-5.1 reframes AI around persona, not just capability. By pairing Instant and Thinking styles with tone controls and metered reasoning, teams can specify how an assistant behaves, what it refuses, and how hard it thinks before it speaks.

Breaking: AI's new default is persona

OpenAI's GPT-5.1 changes the center of gravity in human-AI design. Instead of meeting a single generic assistant, users now meet a configurable self that has habits, boundaries, and a thinking budget. In ChatGPT, this shift shows up as two model styles. The Instant model aims for conversational speed on everyday questions. The Thinking model slows down and writes out more structured reasoning on harder problems. OpenAI also made tone and formatting controls more accessible, so people can tune warmth, brevity, and structure across chats. OpenAI's official notes on GPT-5.1 introduce the update.

This is not just about sounding friendly. Persona governs how an AI allocates thought, when it defers, what it refuses, and how it explains itself. That turns persona into a real contract between model and user. After GPT-5.1, models are not only smart. They are someone in your workflow.

From mode switch to self switch

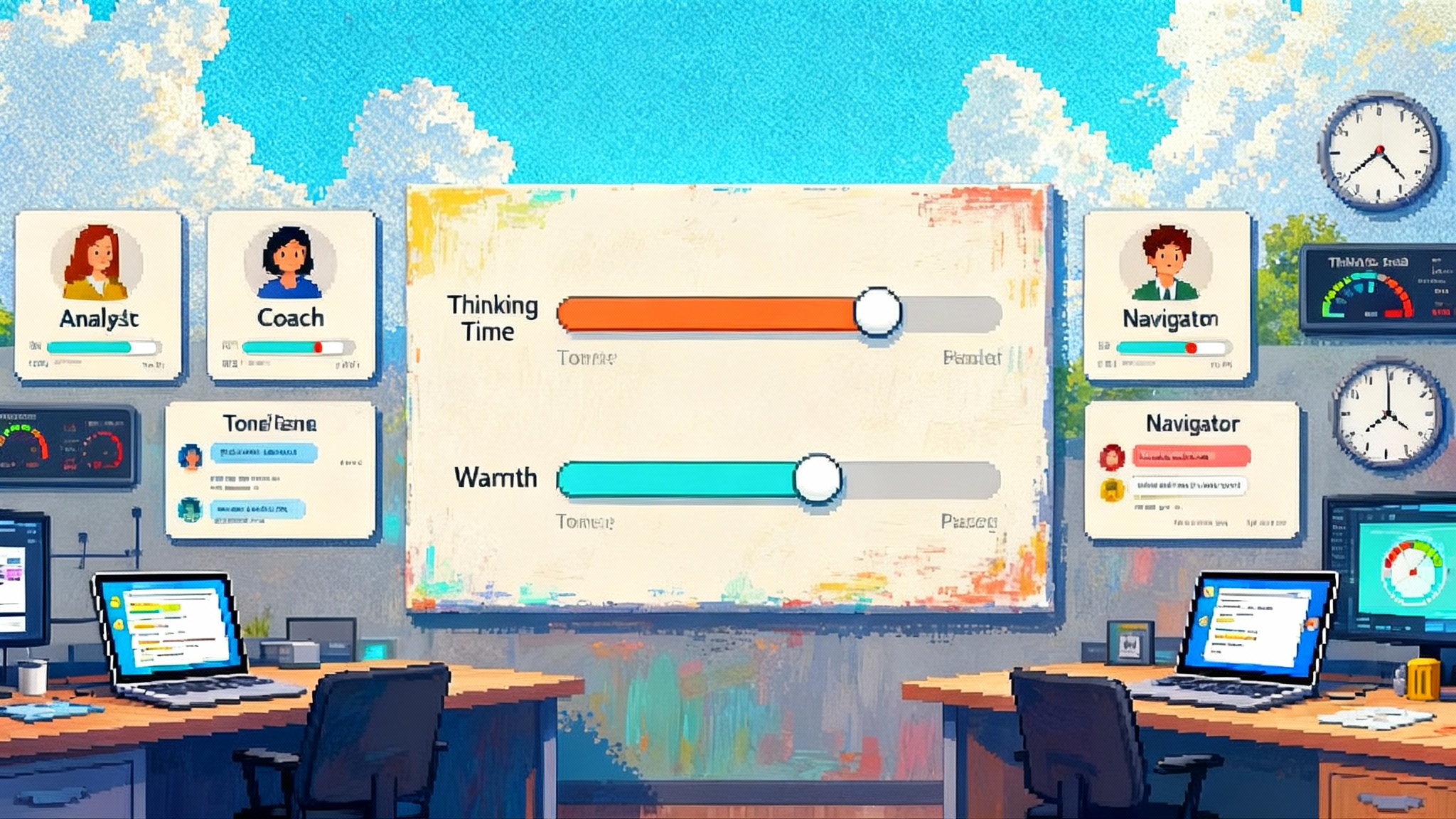

Most people will not toggle Instant or Thinking by hand. GPT-5.1 can auto-route many requests. What matters is the behavior envelope that emerges. Instant feels brisk and social, good at following instructions quickly. Thinking stays with complex tasks longer, writes down interim reasoning, and weighs trade-offs before it answers.

Add tone controls on top and what you experience is not just a mode. It is a voice with habits. A persona. OpenAI seeded this earlier with preset personalities in ChatGPT. GPT-5.1 takes the next step by turning warmth, concision, and structural preferences into first-class settings that travel with you across contexts. Once those settings persist, they stop being mere style. They become identity.

This aligns with a broader industry pattern we have tracked, where agents are moving from tools to actors with roles, histories, and explicit responsibilities. If you want the bigger picture on that trend, see our internal perspective on the agent identity layer. Persona is how that identity becomes legible to users and auditable by teams.

The mechanics behind the mask

Two levers do most of the work under the hood.

- Metacognitive throttling. The model decides how much time to spend thinking before it speaks. With GPT-5.1, developers can also push that throttle down or up. OpenAI's developer update describes adaptive reasoning and a new no-reasoning mode, which signals that cognition is becoming a resource teams can meter, budget, and explain. See the adaptive reasoning details in that update.

- Style and affect controls. These set the social frame. Warmth, terseness, structure, and emoji use create expectations for empathy, authority, and distance. Turn warmth up and the model may mirror more, apologize more, and use supportive language. Turn it down and you get crisp briefs, fewer hedges, and faster handoffs.

Together, these levers let teams package not only capability, but also values and expectations. That is why persona is becoming the interface and the promise. You are not only choosing what the model can do. You are agreeing on how it will treat you while it does it.

Persona as contract: trust, consent, accountability

Consider three concrete cases where the contract is the product.

- Health triage. A clinic deploys a front-door assistant that must be calm, clear, and never overstep medical advice. The persona needs high empathy but strict boundaries on reassurance and diagnosis. The contract states what the assistant will do and what it will never do. The clinic sets a conservative thinking budget for triage and a higher one for post-visit summaries.

- Finance. A retail brokerage offers two advisory personas. The Coach uses warm encouragement, short checklists, and low thinking budgets for basic account tasks. The Analyst uses a cooler tone, more citations, and higher thinking budgets for research summaries that include model confidence and not just recommendations. Users opt into each persona with clear consent, including how their data trains future recommendations.

- Education. A reading tutor persona adapts tone by grade level. For early readers, it celebrates small wins, surfaces phonics tips, and uses gentle corrections. For older students, it switches to Socratic questioning and stricter rubric mapping. Thinking budgets are capped during live practice to keep pacing snappy, but raised during feedback sessions to generate richer commentary tied to standards.

In each case, trust follows the persona more than the brand. Users consent to a way of being helped. Teams can audit the persona against the promise they made.

Why this becomes the next platform layer

Platforms stabilize around a core abstraction. Operating systems give you processes and files. Browsers give you the document and the origin. LLM platforms began with tokens and prompts. After GPT-5.1, the core abstraction shifts to persona packages that combine tone, policy, and metered cognition.

This is not cosmetic. Persona packages do five important jobs.

- Compress defaults. Instead of long system prompts or brittle prompt hacks, a persona captures behavior, tone, escalation rules, refusal patterns, and thinking budgets in one reusable unit.

- Create predictable touch. Human teams can rely on consistent bedside manner, consistent handoffs, and consistent error disclosures. That is critical in support, compliance, and care.

- Attach to rights. A persona can claim it will not retain certain data, will watermark critical steps, and will escalate to a human under defined conditions. Behavior becomes policy you can audit.

- Expose levers to users. People can grant or withhold consent for higher thinking budgets or for more affective language in exchange for better accuracy or faster service.

- Enable marketplaces. Once a persona is a package, it can be versioned, attested, rented, or sold. That creates supply and differentiation that do not reduce to raw model size.
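To make the idea of a reusable unit concrete, here is a minimal sketch of what a persona package could look like as data. The `PersonaPackage` class and every field name in it are assumptions made for illustration, not a format published by OpenAI or any vendor.

```python
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class PersonaPackage:
    """Hypothetical persona bundle: tone, policy, and metered cognition."""
    name: str
    version: str
    tone: dict                    # e.g. {"warmth": "high", "brevity": "high"}
    forbidden_tasks: tuple        # refusal patterns
    escalation_rules: tuple       # conditions that hand off to a human
    thinking_budget_tokens: int   # ceiling on reasoning tokens per request

    def to_json(self) -> str:
        # Sorted keys give a stable serialization that can be diffed or hashed.
        return json.dumps(asdict(self), sort_keys=True)


# Example values are invented for illustration.
coach = PersonaPackage(
    name="coach",
    version="1.0.0",
    tone={"warmth": "high", "brevity": "high"},
    forbidden_tasks=("individual investment advice",),
    escalation_rules=("user reports account fraud",),
    thinking_budget_tokens=512,
)
```

Because the package is plain data with a version string, it can be reviewed, diffed between releases, and attached to a contract, which is what the five jobs above depend on.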

If you have been thinking of AI as infrastructure, this will feel familiar. We argued in our piece on intelligence as utility that teams should build pipes, not prompts. Persona packages are the fittings that let those pipes deliver a consistent, auditable flow of help.

The first marketplaces for task-specific selves

Expect early marketplaces to look like a blend of app stores and staffing agencies. Instead of downloading a tool, you will hire a self.

- Legal and compliance bundles. A Policy Paralegal persona for regulated industries that is tuned for citations, conservative interpretations, and automatic redlines. Vendors will sell it with indemnification tiers and a performance bond that pays out if the persona violates a stated refusal rule.

- Care bundles. A Calm Navigator for clinical paperwork and scheduling. It uses high empathy but has strict boundaries against diagnosis. Providers will choose vendors based on measured rates of false reassurance and escalation accuracy.

- Field operations bundles. A Line Supervisor persona that runs with minimal affect, prioritizes safety checklists, and spends thinking budget on step validation rather than prose. It reports exceptions in a terse, timestamped format that fits radio and wearable devices.

These bundles will not be simple style presets. They will include verified safety policies, data minimization rules, and preset thinking budget ceilings. Vendors will compete on uptime, clarity of refusal behavior, and the cost per unit of reasoning, not just on benchmark scores.

Design patterns for building with selves

If you are designing with GPT-5.1 today, five patterns will help you ship faster and safer.

- State the promise, not just the prompt. Write a one-page persona promise that any user can understand. Include tone, allowable and forbidden tasks, escalation rules, and the default thinking budget. Put this on the settings screen or contract.

- Separate tone controls from duty controls. Do not let warmth override policy. For example, the assistant can mirror feelings, but must still refuse to diagnose. Test for failures where a friendly tone becomes unsafe advice.

- Meter cognition explicitly. Choose a default thinking budget in tokens or seconds. Raise it only for tasks that show measured gains. Show the user when you raise it and why. Let them opt out.

- Define consent for affect. Ask users if they want more supportive language or more scannable output. Offer a "just the facts" toggle that keeps comfort phrases to a minimum.

- Log persona decisions. Keep a structured trace of when the model invoked more thinking, when it refused, and which style rules were applied. You will need this for audits and user appeals.
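Two of the patterns above, separating duty from tone and logging persona decisions, can be sketched together. Everything here is hypothetical: the `PersonaTrace` schema, the `respond` function, and the keyword list stand in for a real policy engine, which would not rely on simple keyword matching.

```python
from dataclasses import dataclass, field


@dataclass
class PersonaTrace:
    """Structured record of persona decisions for one session (assumed schema)."""
    events: list = field(default_factory=list)

    def log(self, kind: str, detail: str) -> None:
        self.events.append({"kind": kind, "detail": detail})


FORBIDDEN = ("diagnose", "prescribe")   # duty controls (illustrative keywords)
WARM_PREFIX = "I'm sorry you're dealing with this. "


def respond(request: str, warmth: bool, trace: PersonaTrace) -> str:
    # The duty check runs first, so no tone setting can bypass a refusal.
    hit = next((w for w in FORBIDDEN if w in request.lower()), None)
    if hit:
        trace.log("refusal", f"matched forbidden task: {hit}")
        body = "I can't help with that, but I can connect you with a clinician."
    else:
        body = "Here is the scheduling information you asked for."
    # Tone is applied afterwards and only changes phrasing, never the decision.
    if warmth:
        trace.log("style", "warm prefix applied")
        body = WARM_PREFIX + body
    return body
```

The ordering is the design choice that matters: the refusal decision is made before any style rule is consulted, and both steps leave an entry in the trace for later audits.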

These patterns will need a control surface. That is why the governance side matters. For a deeper dive on adversarial conditions and safety posture, consider our note on the control stack. Persona only works as a platform layer if you can cap its thinking, prove its provenance, and review its decisions.

Pitfalls to avoid

- Sycophancy disguised as care. Warmth can mask agreement. Test personas with adversarial prompts that pressure the model to please. Penalize surface empathy that overrides refusal policies.

- Hidden costs of thought. More thinking can help, but not always. Treat thinking budgets like any other resource. Measure accuracy gains versus latency and cost. If your Analyst persona spends twice as many tokens to reach the same conclusion, cap it.

- Style drift across contexts. A persona that is charming in chat may be noisy in a spreadsheet or too verbose in a wearable. Tune separate output profiles for different surfaces.

- Consent theater. It is easy to ask for a style preference and then ignore it. Instrument adherence and show users where their preferences were applied.
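For the hidden cost of thought, one way to decide where to cap a budget is to compare measured accuracy at each budget level and move up only when the gain is worth it. This is a sketch under assumptions: the function name, the `min_gain` threshold, and the sample numbers are all invented for illustration.

```python
def smallest_sufficient_budget(results: dict[int, float], min_gain: float = 0.02) -> int:
    """Return the smallest thinking budget that later budgets fail to beat by min_gain.

    `results` maps a thinking budget (in tokens) to accuracy measured on an eval set.
    """
    budgets = sorted(results)
    chosen = budgets[0]
    for budget in budgets[1:]:
        # Move up only when the larger budget shows a meaningful accuracy gain.
        if results[budget] - results[chosen] >= min_gain:
            chosen = budget
    return chosen


# Made-up measurements: accuracy flattens out after 512 tokens.
measured = {256: 0.81, 512: 0.88, 1024: 0.885, 2048: 0.89}
print(smallest_sufficient_budget(measured))  # 512
```

In this example the Analyst persona would be capped at 512 tokens, since spending four times as much buys only one more point of accuracy.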

The standards we will need next

If persona becomes the platform layer, we will need shared expectations and proofs. Three standards can emerge quickly.

- Persona provenance

  - What it is: A signed persona card that includes authorship, version history, last review date, linked policies, and the hash of the behavior template used in production.

  - Why it matters: Users and regulators can verify that the persona they see is the one that was certified. Vendors can publish update notices when a refusal rule changes.

  - How to start: Use a simple, signed manifest format in your build pipeline that captures tone settings, refusal policies, and thinking budget ceilings. Expose a read-only copy in product settings.
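A signed manifest can start very small. The sketch below uses Python's standard `hmac` and `hashlib` modules. The manifest fields and the demo key are assumptions, and HMAC is a shared-secret scheme, so a manifest that outside parties must verify would need an asymmetric signature instead.

```python
import hashlib
import hmac
import json


def sign_manifest(manifest: dict, key: bytes) -> str:
    # Canonical JSON (sorted keys, fixed separators) so identical content
    # always produces the same signature.
    payload = json.dumps(manifest, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_manifest(manifest: dict, key: bytes, signature: str) -> bool:
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(sign_manifest(manifest, key), signature)


# Hypothetical persona card; all field names are illustrative.
behavior_template = "You are a calm navigator. Never diagnose."
manifest = {
    "persona": "calm-navigator",
    "version": "1.2.0",
    "tone": {"warmth": "high", "brevity": "medium"},
    "refusal_policies": ["diagnosis", "medication dosing"],
    "thinking_budget_ceiling_tokens": 1024,
    "template_sha256": hashlib.sha256(behavior_template.encode()).hexdigest(),
}
key = b"demo-key-not-for-production"
signature = sign_manifest(manifest, key)
```

Any change to a refusal policy or budget ceiling changes the signature, so a silent edit to the persona fails verification.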

- Safety limits on affect

  - What it is: A rubric for emotional language with measurable bounds. For example, maximum counts of reassurance phrases per 1,000 tokens, or a cap on implied certainty in sensitive domains.

  - Why it matters: Style is not neutral. Affect can reduce or inflate perceived authority. Limits keep personas from drifting into therapy or coercion without consent.

  - How to start: Define an internal taxonomy of comfort, apology, and praise phrases. Add unit tests that fail when a persona exceeds the allowed rate in a given context.
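A rate check of this kind fits in a few lines. The phrase list below is a placeholder taxonomy, and whitespace-separated words are used as a rough stand-in for model tokens. Both are assumptions for the sake of the sketch.

```python
COMFORT_PHRASES = ("i'm sorry", "don't worry", "you're doing great")  # assumed taxonomy


def reassurance_rate(text: str) -> float:
    """Comfort phrases per 1,000 whitespace-delimited words."""
    words = text.split()
    if not words:
        return 0.0
    lowered = text.lower()
    hits = sum(lowered.count(phrase) for phrase in COMFORT_PHRASES)
    return 1000 * hits / len(words)


def check_affect(text: str, max_per_1k: float) -> bool:
    # True when the text stays within the allowed reassurance rate.
    return reassurance_rate(text) <= max_per_1k
```

A unit test can then assert `check_affect(sample_output, limit)` for each domain, with a stricter limit where implied certainty is risky.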

- Thinking budgets

  - What it is: A transparent meter for model cognition. Default budgets by task class, with user-visible upgrades. Budgets include token caps, time caps, and tool use limits.

  - Why it matters: Reasoning is becoming an explicit resource. Users should know when more of it is being spent on their behalf, and why.

  - How to start: Ship a visible indicator when a persona raises its thinking level. Log the reason and show it in a session report. Provide an account-level policy that caps total thinking use per day.
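The account-level cap and session report could be prototyped as a small meter object. `ThinkingMeter` and its fields are hypothetical names. A production version would also need per-day resets and persistence, which are left out here.

```python
from dataclasses import dataclass, field


@dataclass
class ThinkingMeter:
    """Tracks reasoning spend against an account-level daily cap (illustrative)."""
    daily_cap_tokens: int
    spent_tokens: int = 0
    report: list = field(default_factory=list)

    def request(self, tokens: int, reason: str) -> bool:
        # Deny any raise that would exceed the daily cap; record either outcome
        # so the session report shows what was asked for and why.
        granted = self.spent_tokens + tokens <= self.daily_cap_tokens
        if granted:
            self.spent_tokens += tokens
        self.report.append({"tokens": tokens, "reason": reason, "granted": granted})
        return granted
```

The `report` list is what a user-facing session report would render, including the requests that were denied.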

What companies should do this quarter

- Product leads. Pick two core workflows and define paired personas, one warm and fast, one cool and deliberate. Ship them with clear promises and let users switch. Measure task success, not just satisfaction.

- Engineering. Implement a thinking budget manager. Start with three classes: basic, complex, and critical. Tie each to token and time caps and record overrides. Provide an interface so support can review a session's thinking trace.

- Compliance and safety. Write an affect policy. Include examples of acceptable and unacceptable phrasing by domain. Specify the escalation triggers that override tone preferences.

- Sales and partnerships. Prepare a menu of personas with clear pricing. Include tiers by thinking budget and by safety indemnity. Offer a trial where customers can A/B test personas on their own data.
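For the engineering item, a first cut of a budget manager with three task classes might look like the sketch below. The class names follow the text, but the cap values, the `Budget` type, and the `BudgetManager` interface are assumptions, and real caps should come from measurement.

```python
from dataclasses import dataclass
from enum import Enum


class TaskClass(Enum):
    BASIC = "basic"
    COMPLEX = "complex"
    CRITICAL = "critical"


@dataclass(frozen=True)
class Budget:
    max_tokens: int
    max_seconds: float


# Illustrative caps only.
DEFAULTS = {
    TaskClass.BASIC: Budget(256, 2.0),
    TaskClass.COMPLEX: Budget(2048, 15.0),
    TaskClass.CRITICAL: Budget(8192, 60.0),
}


class BudgetManager:
    def __init__(self):
        self.overrides = []  # audit trail that support can review

    def budget_for(self, task_class, override=None, reason=""):
        # An override replaces the class default and is always recorded.
        if override is not None:
            self.overrides.append(
                {"class": task_class.value, "budget": override, "reason": reason}
            )
            return override
        return DEFAULTS[task_class]
```

Calling `budget_for(TaskClass.BASIC)` returns the default cap, while passing an `override` with a `reason` returns the new cap and leaves a record in `overrides`.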

What this means for creators and workers

For creators, persona packaging is a new way to differentiate. A writer agent that is not only good at outlines but has a specific editorial voice and ethical code is easier to hire and review. For workers, persona can make delegation less stressful. You are not just sending a task into a void. You are handing it to a known character with known habits.

This reframes accountability. If the Coach persona fails to escalate a risky situation, you can audit the promise, the thinking budget, and the refusal rules that were active at the moment. That is easier to govern than a single, shape-shifting assistant that has no declared self.

The deeper shift

We are watching intelligence become social by default. The switch that matters is no longer just model size or speed. It is the self you select for the job and the budget you grant it to think. GPT-5.1 made that practical with two levers that map to human expectations: how the assistant sounds and how hard it tries before it speaks.

The result is a new platform layer. When you can meter thought and standardize affect, you can sell, regulate, and trust personas. Markets will form. Standards will mature. Teams will tune their fleets of selves the way they once tuned fleets of servers. The winners will not be the models that sound the friendliest or think the longest. They will be the ones that keep their promises and spend cognition where it counts.

Closing thought

We got used to asking what a model can do. The more useful question now is who it should be for a given task and how much thinking it should spend to do it. Choose the self, set the budget, and hold it to the promise. That is the practical future that GPT-5.1 just made possible.