When Electrons Constrain Intelligence: AI’s Grid Treaty

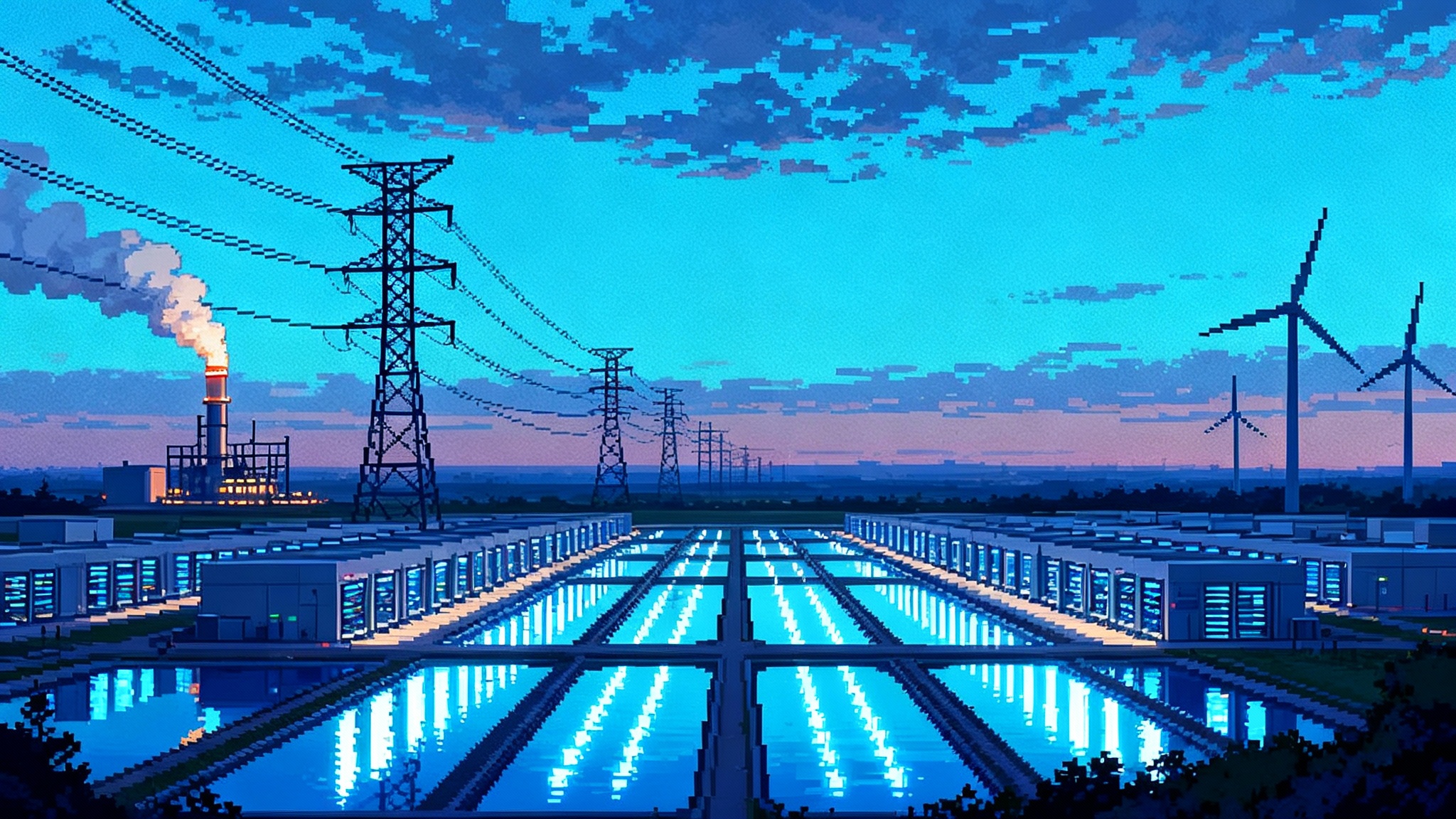

AI is colliding with the limits of the U.S. power grid. The next moat is energy aware AI that schedules training, inference, and memory against real time electricity, negotiating with the grid to stay fast and resilient.

Breaking: AI quietly signed a deal with physics

Something shifted this year. The story of artificial intelligence stopped being only about bigger models and faster silicon and became a story about transformers of another sort. Substations. Transmission lines. Gas turbines and nuclear fuel rods. In 2025, the grid stepped into the AI roadmap.

The headline moment was not a new benchmark. It was a new relationship. Google disclosed that it would curtail certain machine learning workloads when regional demand spiked, via formal agreements with Indiana Michigan Power and the Tennessee Valley Authority. In plain language, a hyperscaler agreed to throttle noncritical AI jobs to help keep the lights on. That is a quiet treaty between datacenters and the power system, and it sets a precedent for how model operators will coexist with physical limits. The company’s move was reported in Reuters coverage of Google's utility deals.

At the same time, states moved to add serious new capacity. Texas accelerated loans from its Texas Energy Fund for fast, dispatchable projects, including a 1,350 megawatt natural gas plant in Ward County that is slated to support the ERCOT region later this decade. It is the kind of scale that shows how policy is now reshaping compute supply in watts, not just tokens. See the Texas announcement of the 1,350 MW loan.

Together, these moves reveal the new rules of the game. Compute is no longer just chips and cooling. It is power purchase agreements, capacity auctions, and demand response contracts. The constraint that matters is not only memory bandwidth or interconnect topology. It is electrons.

Why 2025 forced the issue

Three forces converged.

- Load arrived faster than concrete can cure. Utilities from the Midwest to the Mid Atlantic reported multi-gigawatt interconnection queues for datacenters and heavy industry. Capacity auctions in major markets priced higher, reflecting a scarcity premium for guaranteed availability. Transmission lines take years to permit and build, and big thermal plants take even longer. AI procurement cycles move in months.

- Datacenter growth concentrated in a few hubs. Northern Virginia, parts of Ohio and Indiana, Central Texas, and the Pacific Northwest saw overlapping surges in large loads. Local grid stress became a gating factor for where new clusters could land.

- Hyperscalers started to act like power companies. Multi-gigawatt renewable portfolios, long term nuclear partnerships, and grid service pilots shifted from experiments to planning assumptions. This is not corporate social responsibility. It is supply chain.

You can feel the result in a simple anecdote: a model deployment that once required capacity planning inside a cloud region now requires discussions with a utility about summer peak events and ramp rates. Success looks like latency targets that survive a July heat wave.

The next moat: energy aware AI

The new advantage is not only who has the most accelerators. It is who uses them with the power system in mind. Energy aware AI treats electricity as a first class input that is variable in time, price, carbon intensity, and availability. Instead of assuming flat, infinite power, it optimizes training, inference, and memory against a live feed of grid conditions.

Here is what that looks like in practice.

- Training that moves with price and carbon. Pretraining runs are split into interruptible shards with clear checkpoints. When the local grid is tight or expensive, the scheduler pauses noncritical shards and migrates them to zones where power is cheaper and the grid has more headroom. When wind is strong at night in the Midwest or solar is peaking in the Southwest, the system surges.

- Inference that honors service tiers. Critical, low latency inference for safety or payments rides on reserved, uncurtailable power. Batch summarization or analytics joins a flexible tier that can be rate limited or delayed by seconds to minutes when the grid needs relief.

- Memory that budgets electrons. Vector databases and caches are tuned for power. Freshness windows, replication factors, and search depth adjust to a budget that includes energy price and congestion. The system chooses between recomputing embeddings and retrieving them based on both time and power cost.
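The recompute-or-retrieve choice in the last bullet can be sketched as a small cost comparison. This is a minimal illustration, not a production planner: the `Option` type, the `latency_weight` parameter, and every number below are assumptions made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    """One way to obtain an embedding (all values are illustrative)."""
    name: str
    latency_ms: float   # expected time to produce the result
    energy_wh: float    # expected energy drawn, in watt-hours


def blended_cost(option: Option, price_per_kwh: float, latency_weight: float) -> float:
    """Dollar cost: energy at the current price plus a dollar penalty per millisecond."""
    energy_cost = (option.energy_wh / 1000.0) * price_per_kwh
    return energy_cost + latency_weight * option.latency_ms


def choose(options, price_per_kwh: float, latency_weight: float = 1e-6) -> Option:
    """Pick the option with the lowest blended cost at the current energy price."""
    return min(options, key=lambda o: blended_cost(o, price_per_kwh, latency_weight))


# Hypothetical profile: retrieval is slower but frugal, recomputation is fast but power hungry.
retrieve = Option("retrieve", latency_ms=40.0, energy_wh=0.02)
recompute = Option("recompute", latency_ms=15.0, energy_wh=0.30)

cheap_hours = choose([retrieve, recompute], price_per_kwh=0.05)
tight_hours = choose([retrieve, recompute], price_per_kwh=0.50)
```

With these made-up numbers the planner recomputes when power is cheap and falls back to retrieval when the price rises. A real system would measure both latency and energy per operation instead of assuming them.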

Think of it like air travel. There are guaranteed seats and standby seats. There are red eye flights that exist because nighttime demand is different. Energy aware AI uses an equivalent yield management playbook for compute.

Architecture for a grid literate stack

Energy awareness needs a stack that speaks both model and market. A pragmatic design uses three layers.

1) Signals and contracts

- Telemetry: Ingest locational marginal prices, real time load forecasts, and grid event signals from utilities or regional operators. Include heat index and renewable forecasts for the next 24 to 168 hours.

- Commitments: Register demand response programs, flexible load agreements, and site level curtailment rules. Each contract becomes a machine readable constraint with penalties and rewards.

- Carbon data: Track marginal emissions so the system can prefer clean windows when performance allows.
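One way to picture a contract as a machine readable constraint is a small record with a settlement rule. The sketch below is an assumption-heavy illustration: the `CurtailmentContract` fields, the settlement formula, and the dollar figures are hypothetical and do not reflect any real utility tariff.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CurtailmentContract:
    """Hypothetical flexible load agreement for one site."""
    site: str
    committed_reduction_mw: float  # load the site promised to shed during an event
    reward_per_mwh: float          # payment for delivered reduction
    penalty_per_mwh: float         # charge for any shortfall against the commitment

    def settle(self, delivered_mw: float, hours: float) -> float:
        """Net dollars for one event. Positive means the site is paid."""
        credited = min(delivered_mw, self.committed_reduction_mw)
        shortfall = max(self.committed_reduction_mw - delivered_mw, 0.0)
        return hours * (credited * self.reward_per_mwh - shortfall * self.penalty_per_mwh)


contract = CurtailmentContract(
    site="example-site",
    committed_reduction_mw=20.0,
    reward_per_mwh=100.0,
    penalty_per_mwh=300.0,
)

full_delivery = contract.settle(delivered_mw=20.0, hours=2.0)     # 4000.0
partial_delivery = contract.settle(delivered_mw=15.0, hours=2.0)  # 0.0
```

Encoding the penalty next to the reward lets a scheduler weigh the cost of missing a commitment against the value of the work it would have to pause.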

If your team is already building pipelines that treat AI as a network utility, this will feel familiar. It extends the mindset from the Intelligence as Utility playbook to include energy markets as first class inputs.

2) Schedulers and markets for jobs

- Training market: Every training job publishes its sensitivity to delay, checkpoint granularity, and acceptable migration overhead. The scheduler bids the job into different regions and timeslots based on power signals, similar to bidding a battery into an energy market.

- Inference market: Service tiers map to power tiers. Critical paths get a fixed allocation with headroom. Flexible calls use probabilistic service level agreements that degrade gracefully during declared grid events.

- Memory market: Caches and vector stores advertise their power cost per query and per gigabyte. A planner routes calls to the cheapest clean power at that moment, with replication rules to avoid hotspots.
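The training market idea can be sketched as a search over candidate regions and timeslots. This is a simplified illustration under stated assumptions: `Slot`, `TrainingJob`, and `best_slot` are hypothetical names, prices are forecasts supplied by the caller, and migration overhead is collapsed into a single dollar figure.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Slot:
    region: str
    start_hour: int        # hours from now
    price_per_mwh: float   # forecast energy price for the slot


@dataclass(frozen=True)
class TrainingJob:
    name: str
    home_region: str
    energy_mwh: float       # energy the job is expected to consume
    max_delay_hours: int    # latest acceptable start, in hours from now
    migration_cost: float   # dollars to move the job out of its home region


def best_slot(job: TrainingJob, slots: Sequence[Slot]) -> Optional[Slot]:
    """Cheapest feasible slot, counting energy cost plus any migration cost."""
    def total_cost(slot: Slot) -> float:
        move = 0.0 if slot.region == job.home_region else job.migration_cost
        return job.energy_mwh * slot.price_per_mwh + move

    feasible = [s for s in slots if s.start_hour <= job.max_delay_hours]
    return min(feasible, key=total_cost, default=None)


job = TrainingJob("pretrain-shard", home_region="A", energy_mwh=10.0,
                  max_delay_hours=6, migration_cost=200.0)
slots = [
    Slot("A", 0, 90.0),   # run now at home: 900
    Slot("B", 2, 60.0),   # migrate: 600 + 200 = 800
    Slot("A", 5, 75.0),   # wait at home: 750
    Slot("B", 12, 20.0),  # cheapest, but past the delay tolerance
]
choice = best_slot(job, slots)
```

Here the job waits five hours in its home region: the cheapest slot is outside its delay tolerance, and the migration cost makes the other region more expensive. A fuller version would also price carbon and checkpoint granularity.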

3) Model level controls

- Dynamic precision: Allow activation precision and quantization settings to step down during curtailment without violating accuracy budgets for the task.

- Mixture of experts as a power valve: Adjust expert activation rates to shed load while preserving output quality for top tasks.

- Elastic context: Shrink or expand context length and retrieval depth based on power budgets, with task aware prompts that preserve intent.
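These three controls can be combined into a small set of pre-measured operating profiles that a scheduler steps between. The sketch below assumes such profiles exist and were evaluated offline. The `Profile` fields and all of the power and quality numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Profile:
    """One operating point for a model (values would come from offline evals)."""
    name: str
    precision_bits: int
    active_experts: int
    context_tokens: int
    relative_power: float  # fraction of full-power draw
    task_quality: float    # measured quality on a task eval set, 0 to 1


def pick_profile(profiles: Sequence[Profile], power_budget: float,
                 quality_floor: float) -> Optional[Profile]:
    """Highest-quality profile that fits the power budget and meets the accuracy floor."""
    allowed = [p for p in profiles
               if p.relative_power <= power_budget and p.task_quality >= quality_floor]
    return max(allowed, key=lambda p: p.task_quality, default=None)


PROFILES = [
    Profile("full", 16, 8, 32768, relative_power=1.00, task_quality=0.92),
    Profile("reduced", 8, 4, 16384, relative_power=0.70, task_quality=0.90),
    Profile("minimal", 4, 2, 4096, relative_power=0.45, task_quality=0.84),
]

normal = pick_profile(PROFILES, power_budget=1.0, quality_floor=0.88)
curtailed = pick_profile(PROFILES, power_budget=0.75, quality_floor=0.88)
deep_curtailment = pick_profile(PROFILES, power_budget=0.5, quality_floor=0.88)
```

When no profile satisfies both limits the function returns `None`, which signals that the task should be deferred or moved instead of being served below its accuracy budget.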

None of this requires exotic science. It requires engineering discipline and interfaces that assume electricity is not a constant. The payoff is resilience. When heat waves or polar vortices arrive, the model does not just keep running. It keeps running responsibly.

A new role for datacenters: from load to grid asset

Demand response used to be for factories and aluminum smelters. In 2025, hyperscale datacenters began to participate at scale. The Google agreements referenced above target machine learning workloads precisely because they can be paused without breaking user facing services. Other operators are moving in the same direction, often through industry collaborations with utilities and research groups to standardize flexible designs and measurement.

As programs mature, datacenters can provide more than simple curtailment.

- Fast frequency response with onsite batteries: Inverter based storage can inject or absorb power on subsecond timescales, stabilizing local frequency excursions.

- Black start assistance: Large sites with turbines or fuel cells can help restart a grid segment after an outage.

- Thermal flexibility: Chilled water loops and phase change storage can shift cooling load by tens of minutes, creating safe buffers during short grid events.

The important point is that datacenters can be good grid neighbors without sacrificing their core mission. The examples above do not ask a search engine to go dark. They ask that nonurgent AI tasks be polite.

Market mechanisms for compute availability

Once electricity scarcity is explicit, compute availability needs markets that price it. The cloud already offers spot instances that can be reclaimed. The next step is to tie those reclaim events to transparent grid signals and to standardize service tiers that line up with power conditions.

- Grid linked spot: Instances can be discounted when local reserves are healthy, then automatically throttled or reclaimed when reserves drop below a threshold. Customers see event windows in advance, not a surprise termination.

- Compute futures: Enterprises buy guaranteed training windows ahead of time in specific regions, bundled with the associated power guarantees. The price reflects both chip scarcity and expected grid tightness.

- Energy indexed pricing: For certain flexible tiers, inference rates float with a published energy index for that region. Customers who pick these tiers benefit from lower costs most of the year and help stabilize the grid during peaks.
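Two of these mechanisms reduce to simple rules, sketched below. Everything here is an assumption for illustration: the pass-through fraction, the floor and cap multipliers, and the reserve margin thresholds are invented, and no cloud provider's actual pricing is implied.

```python
def indexed_rate(base_rate: float, energy_index: float, baseline_index: float,
                 pass_through: float = 0.5, floor_multiplier: float = 0.5,
                 cap_multiplier: float = 2.0) -> float:
    """Inference price that floats with a regional energy index.

    Only a fraction (pass_through) of the index movement reaches the customer,
    and the resulting multiplier is clamped so bills stay predictable.
    """
    ratio = energy_index / baseline_index
    multiplier = 1.0 + pass_through * (ratio - 1.0)
    multiplier = min(max(multiplier, floor_multiplier), cap_multiplier)
    return base_rate * multiplier


def spot_action(reserve_margin: float, throttle_below: float = 0.10,
                reclaim_below: float = 0.05) -> str:
    """Map a grid reserve margin (as a fraction) to an action for grid linked spot capacity."""
    if reserve_margin < reclaim_below:
        return "reclaim"
    if reserve_margin < throttle_below:
        return "throttle"
    return "run"


off_peak_rate = indexed_rate(base_rate=0.002, energy_index=40.0, baseline_index=50.0)
peak_rate = indexed_rate(base_rate=0.002, energy_index=300.0, baseline_index=50.0)
actions = [spot_action(m) for m in (0.12, 0.08, 0.03)]
```

In this sketch a 20 percent drop in the index lowers the rate by 10 percent, while an extreme spike is capped at twice the base rate. Publishing the thresholds in advance is what turns a surprise termination into a scheduled event window.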

These mechanisms reward software that can pause, checkpoint, and resume. That creates a flywheel for energy aware engineering. For teams building the commercial layer around this, the economics rhyme with our view in “payments become AI policy.”

Rethinking alignment: intent that respects infrastructure

Alignment is usually framed as getting models to follow human preferences. The power crunch adds another layer. We also need alignment with infrastructure safety and reliability.

- Safety: Throttle background AI tasks before hospitals face brownouts. That should be part of every incident playbook.

- Fairness: Avoid shifting flexible jobs in ways that consistently export noise or emissions to the same communities. Explain where jobs move and why.

- Transparency: When curtailment affects service levels, surface it. Users should know when a flexible tier is in effect and how it protects the grid.

This is not a moral abstraction. It is a clear operational ethic: first, do not destabilize the system you depend on. For teams designing controls and governance, see how this complements the mindset in “the control stack arrives.”

How to build an energy aware program now

For hyperscalers

- Publish a workload flexibility catalog: Classify internal jobs by tolerance to delay, checkpointing, and precision changes. Tie these classes to explicit grid events and contract terms at each site.

- Integrate curtailment into the control plane: Make grid events a native signal for schedulers, autoscalers, and feature flags, not a side channel.

- Invest in onsite flexibility that avoids emissions spikes: Batteries, thermal storage, and clean firm power reduce reliance on diesel while giving the grid headroom.

- Make energy a first class SLI: Set targets for curtailment response time, recovery time, and work loss during events, and track them alongside latency and availability.
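The energy SLIs in the last bullet can be computed from a simple event log. This is a minimal sketch: the `CurtailmentEvent` fields and the choice of mean and maximum as aggregates are assumptions, and a real program would likely track percentiles as well.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass(frozen=True)
class CurtailmentEvent:
    """Timestamps in seconds for one grid event (field names are hypothetical)."""
    signal_at: float     # grid signal received
    reduced_at: float    # load reached its curtailed target
    released_at: float   # grid released the event
    restored_at: float   # workload back to pre-event throughput
    steps_lost: int      # training steps that had to be redone


def energy_slis(events: Sequence[CurtailmentEvent]) -> Dict[str, float]:
    """Aggregate response time, recovery time, and work loss across events."""
    if not events:
        raise ValueError("at least one event is required")
    n = len(events)
    return {
        "mean_response_s": sum(e.reduced_at - e.signal_at for e in events) / n,
        "mean_recovery_s": sum(e.restored_at - e.released_at for e in events) / n,
        "max_steps_lost": max(e.steps_lost for e in events),
    }


log = [
    CurtailmentEvent(0.0, 30.0, 3600.0, 3720.0, steps_lost=120),
    CurtailmentEvent(0.0, 50.0, 1800.0, 1860.0, steps_lost=80),
]
slis = energy_slis(log)
```

Reporting these next to latency and availability makes it possible to set targets, for example a response time under one minute, and to see regressions after scheduler changes.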

For model developers

- Design for pause and resume: Build checkpointing into every long run with clear maximum work loss targets. Treat checkpoints as artifacts with retention and integrity policies.

- Make power visible: Log energy, carbon, and curtailment events alongside loss curves and latency metrics. If engineers can see it, they will optimize it.

- Tune for elastic context and precision: Treat context length, retrieval depth, and quantization as dynamic controls rather than fixed knobs. Measure task level quality impacts so the scheduler can trade accuracy and watts with confidence.

- Harden migration paths: Package training and inference jobs to migrate across zones with deterministic startup, avoiding cold start penalties that erase energy savings.
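The pause and resume discipline can be illustrated with a toy training loop. This sketch simulates steps and checkpoints with counters only. `RunState`, `train`, and the `interrupted` callback are hypothetical stand-ins for a real trainer, checkpoint store, and grid signal.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class RunState:
    step: int = 0
    last_checkpoint: int = 0


def train(state: RunState, total_steps: int, checkpoint_every: int,
          interrupted: Callable[[int], bool]) -> int:
    """Advance until done or interrupted. Returns the number of steps lost.

    On interruption the run rolls back to the last checkpoint, so the work
    loss is bounded by checkpoint_every - 1 completed steps.
    """
    while state.step < total_steps:
        if interrupted(state.step):
            lost = state.step - state.last_checkpoint
            state.step = state.last_checkpoint  # the resume point after the event
            return lost
        state.step += 1  # stand-in for one optimizer step
        if state.step % checkpoint_every == 0:
            state.last_checkpoint = state.step  # stand-in for saving weights
    return 0


state = RunState()
lost_steps = train(state, total_steps=100, checkpoint_every=10,
                   interrupted=lambda step: step == 37)
resume_point = state.step
train(state, total_steps=100, checkpoint_every=10, interrupted=lambda step: False)
```

In this example a curtailment at step 37 costs seven steps and the run resumes from step 30. Choosing `checkpoint_every` is then a direct way to set the maximum work loss target.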

For utilities and regulators

- Standardize large load tariffs with flexibility terms: Make the rules clear for interconnection, compensation, and measurement and verification of performance.

- Create fast lanes for sites that commit to grid services: If a datacenter offers guaranteed demand response and telemetry, exchange that for streamlined approvals where safe.

- Share better signals: Publish machine readable, location specific alerts and forecasts that let schedulers act early rather than during emergencies.

- Reward clean flexibility: Compensate sites that pair curtailment with low carbon backup resources, not diesel standbys that shift the problem.

For startups

- Build the energy aware scheduler: A control plane that bids workloads across time, region, and power conditions will sit at the center of this stack.

- Offer an energy indexed inference gateway: Expose simple service tiers that map to grid conditions and provide a uniform interface across clouds.

- Create the memory optimizer for power: Dynamic cache and vector store tuning that targets watts as well as latency will have immediate buyers.

- Sell measurement and verification: Provide trusted telemetry and proofs of curtailment performance that utilities can rely on for settlement.

What could go wrong

- Rebound effects: Efficiency gains can expand total demand. Counter with hard budgets and public progress reporting.

- Equity trade offs: Flexible load may move to regions with lower prices but higher emissions or local sensitivities. Plan with community input and disclose routing policies.

- Fragmented standards: If each utility uses different telemetry formats and event codes, integration slows. Industry groups can publish shared schemas and test kits.

- Cyber risk: More grid to datacenter integration means more surfaces to secure. Treat grid signals as critical infrastructure traffic with strong authentication and isolation.

The near future: power adaptive models by default

If you are building models today, assume that electricity is uneven in time and space. Assume that regulators will prefer sites that help during peaks. Assume that partners will ask for proof that your jobs can pause and move.

The winners will not just run bigger models. They will run models that know how to wait, where to run, and how to share. They will schedule training to chase clean, cheap hours. They will route inference to where the grid has headroom. They will treat memory as a power sensitive resource. They will negotiate, in real time, with physics.

That negotiation is the quiet treaty of 2025. Intelligence that thrives within limits is not weaker. It is wiser. The moat is not only in chips and data. It is in the software that treats electrons as a scarce, strategic input, and designs accordingly.

The punchline

We used to say that models should align with humans. Keep saying it. Now add a second line: models must align with the grid. When electrons constrain intelligence, the smartest systems learn to cooperate. That is how AI grows without tripping the circuit it runs on.