Intelligence as Utility: Build the Pipes, Not the Prompts

OpenAI’s seven year, 38 billion dollar pact with Amazon marks a shift from model demos to dependable delivery. The winners will build an AI utility with peering, portability, safety, and SLAs you can trust.

The moment intelligence crossed the utility line

On November 3, 2025, OpenAI and Amazon Web Services announced a seven year, 38 billion dollar partnership to run OpenAI’s advanced workloads on Amazon’s infrastructure. The announcement did not read like a product launch. It read like a utility buildout. In one stroke, the story shifted from model heroics to pipes, substations, and service guarantees. This is the tell that the frontier has moved. See the OpenAI and AWS partnership for the official framing.

On November 6, 2025, OpenAI went further and stated that access to advanced artificial intelligence should become a foundational utility on par with electricity or clean water. That framing matters. Utilities are expected to be dependable, interoperable, and priced with clarity. You do not haggle with your faucet. You trust that when you open it, water flows, clear and safe. The same expectation is now taking hold for intelligence: turn the tap, get high quality reasoning, reliably and at scale. Read the commitment to an AI as foundational utility.

If you still think the frontier lives in one more clever prompt or one more fireworks demo, you are behind the curve. The winners will not be whoever squeezes the last five points of benchmark score. The winners will be those who deliver utility grade intelligence: multi provider capacity, predictable quality, clean failover, measured safety, and contracts you can run a business on.

The grid, not the gadget

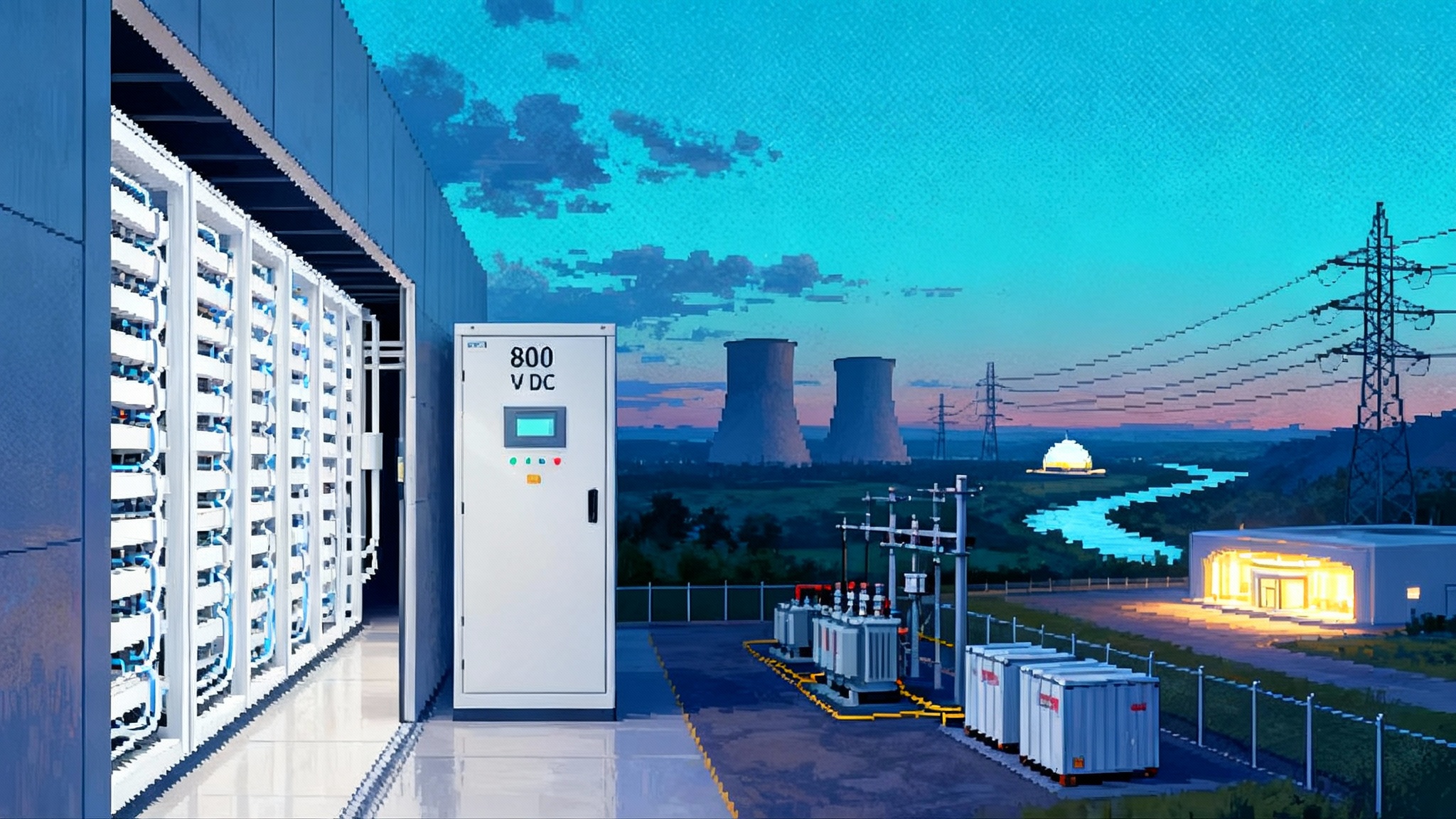

Think of artificial intelligence today like a power grid coming online. The model training clusters are your generation plants. The model hosting layers are your substations. The orchestration and context stacks are the last mile into homes and factories. For a few years, we were transfixed by the shiny turbines. Now we care about the poles, the wires, and the meters.

What makes a grid a grid is not just more megawatts. It is how the network absorbs spikes and failures, how it balances regions, how it bills fairly, and how it lets independent producers connect without drama. The OpenAI and AWS deal is significant not only because of the dollar figure, but because it normalizes the idea that intelligence delivery must be multi sited, multi vendor, and engineered for traffic patterns that look like real life, not lab benchmarks. This is the shift from product thinking to infrastructure thinking.

If the grid metaphor resonates, it is because we are already seeing the rest of the stack form. Interfaces are becoming the new runtime, as we argued in interfaces are the new infrastructure. Energy constraints are reshaping deployment decisions, a theme we explored in AI’s grid reckoning begins. The common thread is that the value is moving into the network, not the node.

The new frontier: polycloud AI peering

Polycloud means more than hedging spend across two vendors. It means your application can route each request to whichever provider currently offers the best mix of price, latency, quality, safety, and energy profile, then fail over without a human in the loop. AI peering is the missing connective tissue. It is the practice of establishing direct, private pathways and commercial arrangements among intelligence providers, much like internet peering among networks or energy exchanges among power pools.

What AI peering looks like in practice:

- Private interconnects among providers at neutral facilities so requests and data do not hairpin across the public internet. Think of modern colocation hubs as the substations where model clusters exchange capacity.

- A routing plane that selects a provider per request using live telemetry: token throughput, ninety ninth percentile latency, refusal rates, and safety filter triggers.

- A settlement layer so compute suppliers can charge fairly for partial work. If a request runs for three seconds on Provider A and then fails over to Provider B for two seconds, both are compensated. No finger pointing.

- Safety peering. Providers can call each other’s classifiers and red team tools in the path, with transparent logs. Safety is not a bolt on after output; it is a first class peering service.

The point is not to play clouds against each other. The point is to keep intelligence available and predictable, the way phone calls and power stay up even when an individual carrier or plant has a bad day.

Standardize the pipes, not the prompts

We have spent two years inventing house dialects of prompt templates. That was fine when the market was small and volatile. Now it is a liability. The prompts themselves are not the asset. The asset is the pipe: stable, well defined interfaces, schemas, and behaviors that survive a model swap or a provider change.

What to standardize:

- APIs and schemas. Use structured input and output with strong typing so requests are portable. If your system relies on a paragraph of prose instructions, you will rewrite it for each model. If your system defines a tool call with a JSON Schema contract and validation tests, it will travel.

- Tooling protocol. Standardize how your agent calls tools, passes credentials, and streams intermediate results. Treat tools as first party citizens, not prompt hacks.

- Trace context. Adopt a common trace and span model so you can follow a request across providers. You should be able to answer, for any identifier, what model produced which tokens at what time, using which tools and what filters.

- Safety signals. Define a shared set of safety events that any provider must emit: refusal reasons, jailbreak detection, and policy categories triggered. If you cannot observe it, you cannot guarantee it.

What not to standardize:

- The exact wording of prompts. Language will evolve. Models will shift. Let providers compete on how well they interpret the same clean, structured intent.

This is where governance meets engineering. The ability to observe, constrain, and explain behavior across vendors echoes themes from the control stack arrives. If you build the pipes well, you do not need to hold the prompts still.

Portability that actually works

Portability is not a press release. It is a set of engineering choices that keep you from waking up trapped. Here is a practical checklist.

- Model routers as a first class service. Route by task, not by provider. For every task, maintain a champion and a challenger. The champion handles 90 percent of traffic; the challenger handles 10 percent. Promote based on measured business outcomes, not gut feel.

- Test suites that travel with your prompts. For every intent, track a small corpus of inputs, expected shapes of outputs, and red line behaviors. When you switch providers, you are not guessing; you are running tests.

- Semantic feature flags. If a provider’s safety filter tightens and starts refusing critical requests, flip a flag that changes the safety preprocessing pipeline or the model choice without a redeploy.

- Context packaging. Treat external knowledge and tools as portable assets. Store them with versioned schemas and clear permissions. The less your system depends on proprietary embeddings or opaque memories, the easier it is to move.

- Execution sandboxes. If your agents execute code, make the runtime portable with strict resource budgets. Do not depend on a provider specific sandbox. Constrain compute, network, and file system access the same way everywhere.

A concrete example: a customer support agent that must never tell a user to reboot a medical device. You encode that rule once as a safety contract and as a set of tests. Whether the request lands on Amazon Web Services, Microsoft Azure, Google Cloud, or a specialist host, you validate the output against the same tests. If a provider begins to drift, your router sees the failures, fails traffic away, and raises a ticket.

Reliability that is worthy of a utility

A Service Level Agreement is a contract that defines measurable reliability and performance. If intelligence is a utility, Service Level Agreements must become boring and precise. Here is a blueprint that customers should ask for, and providers should be ready to sign.

- Availability. 99.95 percent monthly uptime for the control plane and 99.9 percent for model inference in each region. Publish planned maintenance windows and avoid global correlated downtime.

- Latency. For streaming outputs, first token time under 400 milliseconds at the ninety fifth percentile and under 900 milliseconds at the ninety ninth percentile for standard tasks. For non streaming tasks, time to complete under two seconds at the ninety fifth percentile for requests under a fixed token budget.

- Quality guardrails. Providers should publish stable task level metrics that track refusal rate, hallucination rate under a standard test set, and tool call correctness. No one number captures quality, but a dashboard of simple rates makes drift visible.

- Safety uptime. If your safety classifier is down, your API is down. Customers deserve a safety Service Level Agreement alongside availability. Publish a safety incident log with time to detect and time to remediate.

- Data handling. Clear sections on retention, training use, and deletion timelines. Data residency commitments per region and proof of isolation between tenants.

- Incident response. A clock starts the moment an incident impacts customers. Customers receive an initial notice within 15 minutes, hourly updates during mitigation, and a full postmortem within five business days with corrective actions and owners.

On the customer side, adopt Service Level Objectives. A Service Level Objective is an internal reliability target tied to user experience. Pair each objective with an error budget, the tolerated slice of failure. Spend it consciously. If latency exceeds the objective, rate limit proactively or move traffic to simpler models. Reliability is a product choice, not an afterthought.

Safety is an always on service

In a utility model, safety is not a document. It is a service with endpoints, logs, and uptime. Treat safety models, classifiers, and red team tools as peers in the call path. Expose versioned policies and categories as structured signals. Emit refusal reasons, jailbreak detections, and remediation actions as first class telemetry.

Design for safety interoperability. If one provider detects a risky instruction and another provider has the best classifier for that category, your routing plane should be able to call it with low latency and auditable results. Bring the best safety anywhere to every request, and measure it with the same rigor as latency and availability.

Energy, siting, and the real world

Turning intelligence into a utility has physical consequences. Data centers do not run on ideas; they run on electricity and cooling. The industry is converging on multi gigawatt campuses and new power procurement models. Expect siting to favor regions with abundant zero carbon power, flexible grid interconnects, and clear water usage policies.

Portability meets the physical world here, too. If providers peer in neutral facilities near transmission and fiber backbones, customers get lower latency and lower egress fees. If providers publish the carbon intensity per million tokens generated, customers can route sensitive workloads not only on price and quality, but also on energy impact. That turns sustainability from a slide into a dial you can turn. For a deeper view on the energy shift, revisit AI’s grid reckoning begins.

A 90 day plan to build the pipes

You can move from aspiration to practice in a quarter. Use this as a playbook, then adapt it to your product and risk posture.

Days 1 to 30: instrument and isolate

- Introduce a request id that travels across every system involved in a response. Propagate it through your APIs, your tool calls, your logs, and your analytics. Make every event traceable.

- Wrap your prompts in tests that assert structure and safety. Start with ten cases per task. Put them in version control.

- Separate your orchestration layer from any single provider’s SDK. Use simple, portable interfaces. Keep provider specific features behind adapters.

- Stand up a first draft of your safety telemetry. Emit structured refusal events, jailbreak flags, and category tags. Store them alongside performance metrics.

Days 31 to 60: route and rehearse

- Deploy a model router that can split traffic between at least two providers and at least two model families.

- Establish a failure drill. Once a week, block exit to your primary provider in staging and watch your system fail over automatically. Fix what breaks and publish the findings to your team.

- Add a safety budget to your existing error budgets. If refusal or jailbreak rates exceed thresholds, turn down temperature or move to a safer model. Automate the switch.

- Run comparative tests against your fixed corpus for portability. Promote or demote the champion based on outcome rates that your product actually cares about.

Days 61 to 90: contract and commit

- Negotiate Service Level Agreements that match how your product works. Ask for safety uptime and first token time, not just vague availability. Put financial credits behind them.

- Document your portability runbook. If a provider announces a long outage, who flips the flags, where is the checklist, and how do you prove to leaders and regulators that you stayed within policy.

- Publish a small, privacy safe transparency report on your own use of artificial intelligence. Show that you measure quality, safety, and carbon impact. Your partners will trust what you measure.

What this means for vendors and regulators

Vendors:

- Treat cross provider routing as a feature, not a threat. If you make it easy to bring traffic in and out, more customers will come. A rising tide fills the grid.

- Offer a clean, standard interface for tools. Make it trivial for a customer to point the same tool at your models and a rival’s models. Compete on quality and price.

- Publish a safety service and a billable meter for it. If customers can pay for stronger filters and get uptime guarantees, they will.

Regulators and industry bodies:

- Run interoperability tests. Define a voluntary test suite for portability and safety. Offer a public badge for vendors that pass.

- Encourage peering. Just as phone number portability unlocked competition without breaking service, encourage model portability without constraining innovation.

- Ask for real incident reporting. Treat major artificial intelligence outages like major cloud outages: timely notices, shared learnings, and corrective action tracking.

Identity and provenance will anchor trust

As traffic moves across providers and borders, identity becomes essential. You need to know which agent acted, under what policy, with which tools, and on whose behalf. You need authorization that travels with the request and provenance that survives multi hop flows. The scaffolding for this is forming in the agent identity layer. Bring those ideas into your portability plan early, because retrofitting identity is harder than adding a new model.

The takeaway

The past few years rewarded teams that could coax magic out of a single model. The next few years will reward teams that deliver dependable intelligence under load, across providers, with safety you can verify and contracts you can trust. The OpenAI and AWS partnership on November 3, 2025 and the November 6 utility framing are not just news. They are permission to stop improvising and start engineering.

Build the utility fast. Standardize the pipes, not the prompts. When you do, your applications will stop acting like lab demos and start acting like the lights in your kitchen. You will flip the switch, and it will simply work. That is how intelligence becomes a utility, and that is when the real economy level gains arrive.