Electrons Over Parameters: AI’s Grid Reckoning Begins

AI’s next bottleneck is electricity, not parameters. From fusion pilots and nuclear extensions to 800 volt direct current and demand response, the winners will treat power procurement as core product strategy.

The breakthrough that changed the conversation

In late July 2025, a quiet project in Washington state turned into a loud signal for the entire industry. Helion Energy began building a fusion plant intended to feed power to Microsoft data centers later this decade. The groundbreaking moved fusion from press releases to concrete, rebar, and procurement schedules. The timeline is still uncertain, as fusion timelines often are, but the intent is clear. The next constraint on artificial intelligence is measured in megawatts and hours, not in benchmark points. For the key public details, see the Reuters report on Helion’s construction start and Microsoft’s plan to buy its output.

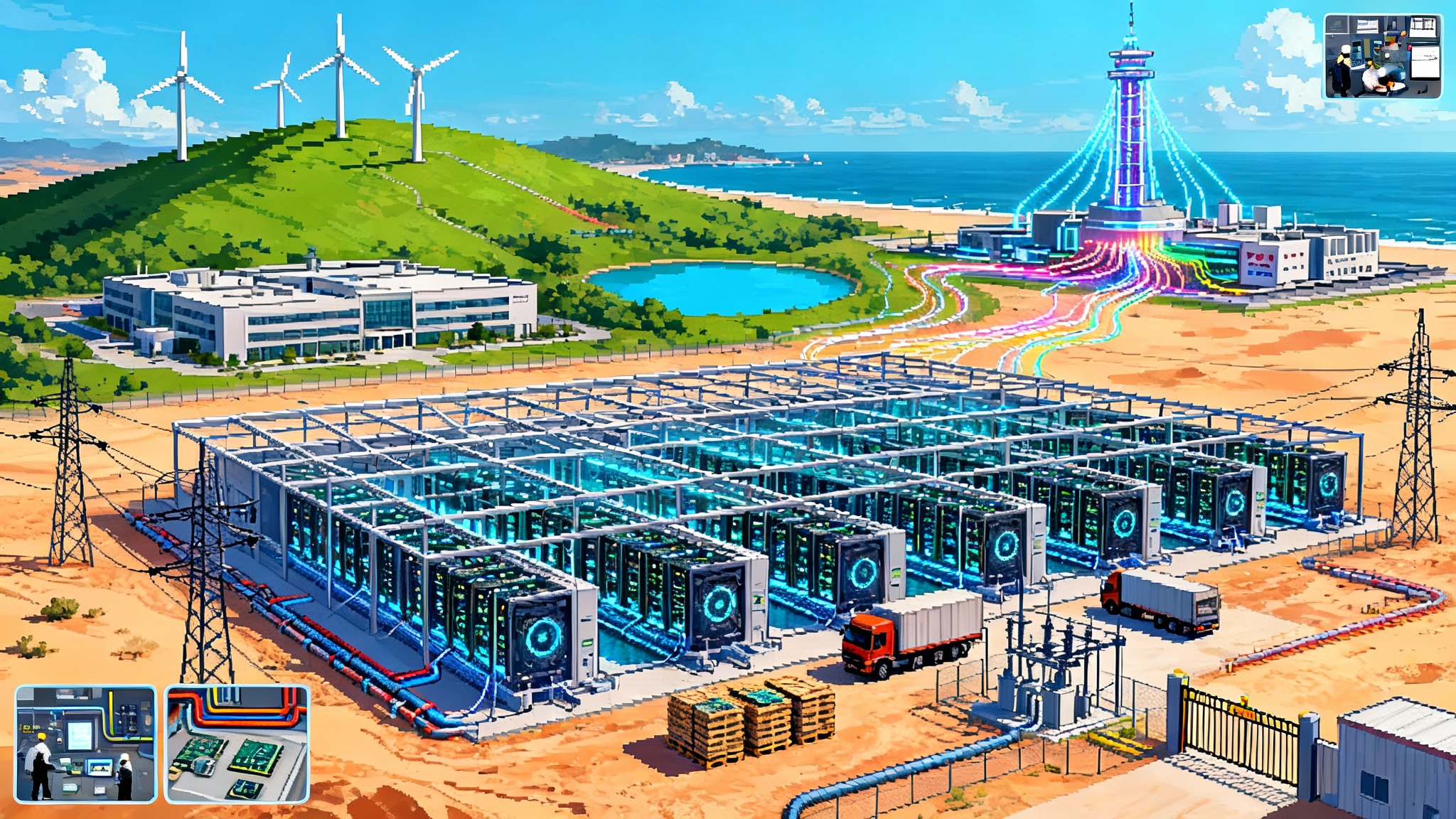

This is not a one-off. Across the United States and Europe, hyperscalers spent mid-2025 stitching together long-duration and firm power, from conventional reactors to advanced nuclear designs, and from geothermal baseload to flexible storage. The result is a new kind of arms race. The scarce resource is not only talent or silicon. It is electrons that can be delivered precisely when 100,000 accelerators ask for current at once.

The quiet energy arms race

Think of a modern AI facility as a factory that turns electricity into answers. Parameters still matter, but every incremental improvement in model quality now raises the ceiling on required energy. Hyperscalers are reacting with bespoke power portfolios that look more like utility balance sheets than tech procurement lists.

- Nuclear as a service. Multi-decade deals with utilities that operate existing reactors signal that keeping licensed plants online can be cheaper and faster than building new gas peakers for reliability. Several cloud providers are negotiating similar instruments with utilities across PJM, ERCOT, and the Tennessee Valley Authority.

- Geothermal as base fabric. Enhanced geothermal projects can contribute round-the-clock, carbon-free energy to the same grid that powers nearby data centers. They offer a template for firming portfolios without waiting for perfect weather.

- Storage and on-site resilience. Battery systems are no longer just for ride-through. Operators are beginning to pair supercapacitors and batteries with subsecond control so that racks can ride GPU load spikes without yanking on the grid. The point is not only sustainability. It is stability.

If the last decade was about signing wind and solar power purchase agreements to meet annual targets, the next decade is about matching real-time demand with real-time supply, and about owning enough dispatch rights to keep training jobs from stalling at 2 a.m.

Why thermodynamics beats benchmarks

Every generation of model has outgrown the last generation’s power envelope. A single rack that once drew 10 kilowatts now asks for 120 kilowatts. Vendors talk seriously about one megawatt racks by the end of the decade. The reason is simple physics. To move more data between more chips at higher frequencies, you need more power. To reject the heat that power creates, you need more cooling. To avoid wasting energy, you must reduce conversion losses in the power path. The machine learning curve has met the first law of thermodynamics.

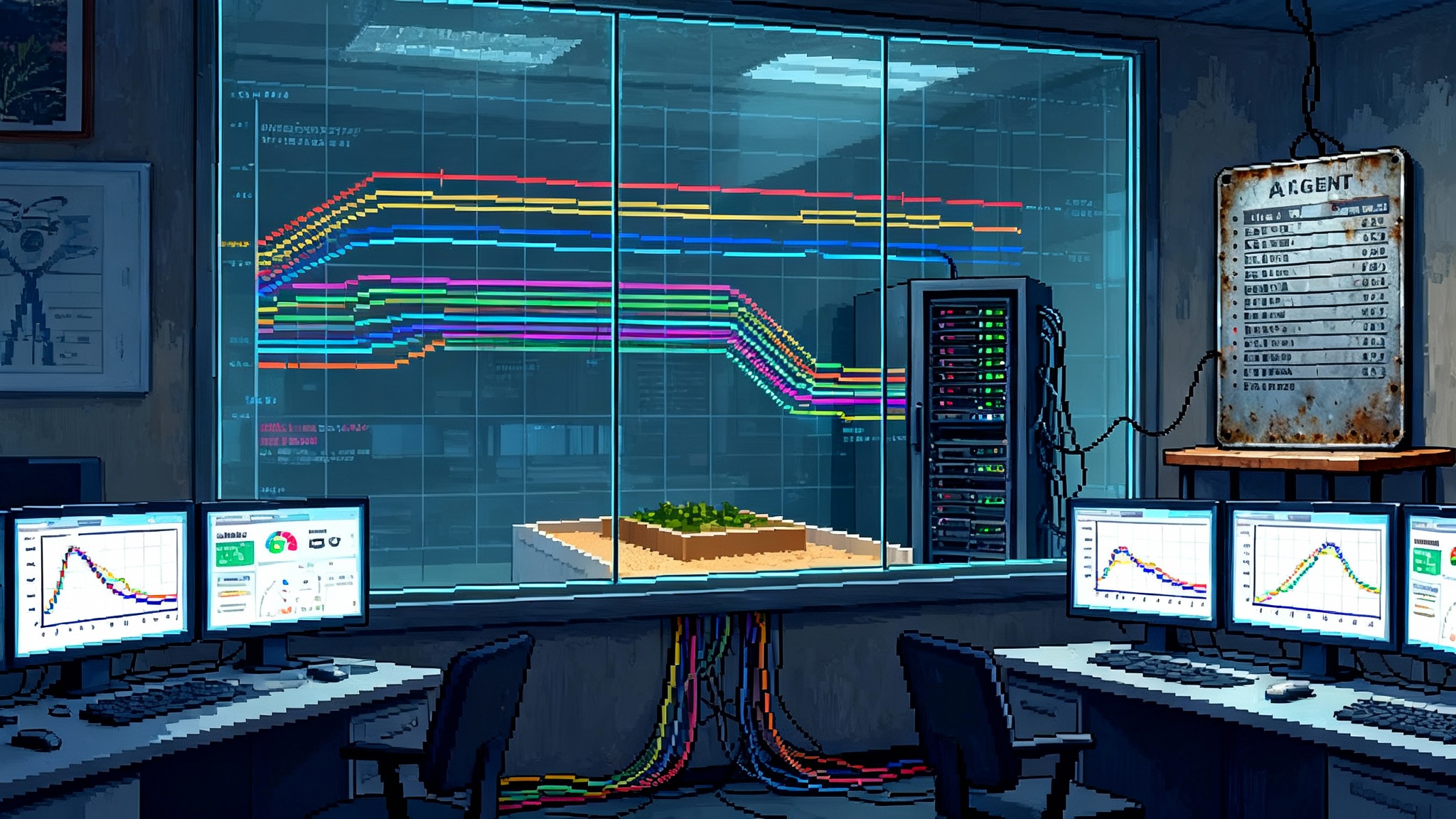

Benchmarks still matter for bragging rights, but watts per token and joules per sample are becoming the operating metrics that drive total cost and availability. When the grid cannot deliver, your cluster does not care that your perplexity improved by 0.2. It cares that the breaker tripped. This shift fits the argument we outlined in our post-benchmark era analysis. The scoreboard is changing from leaderboards to load curves.

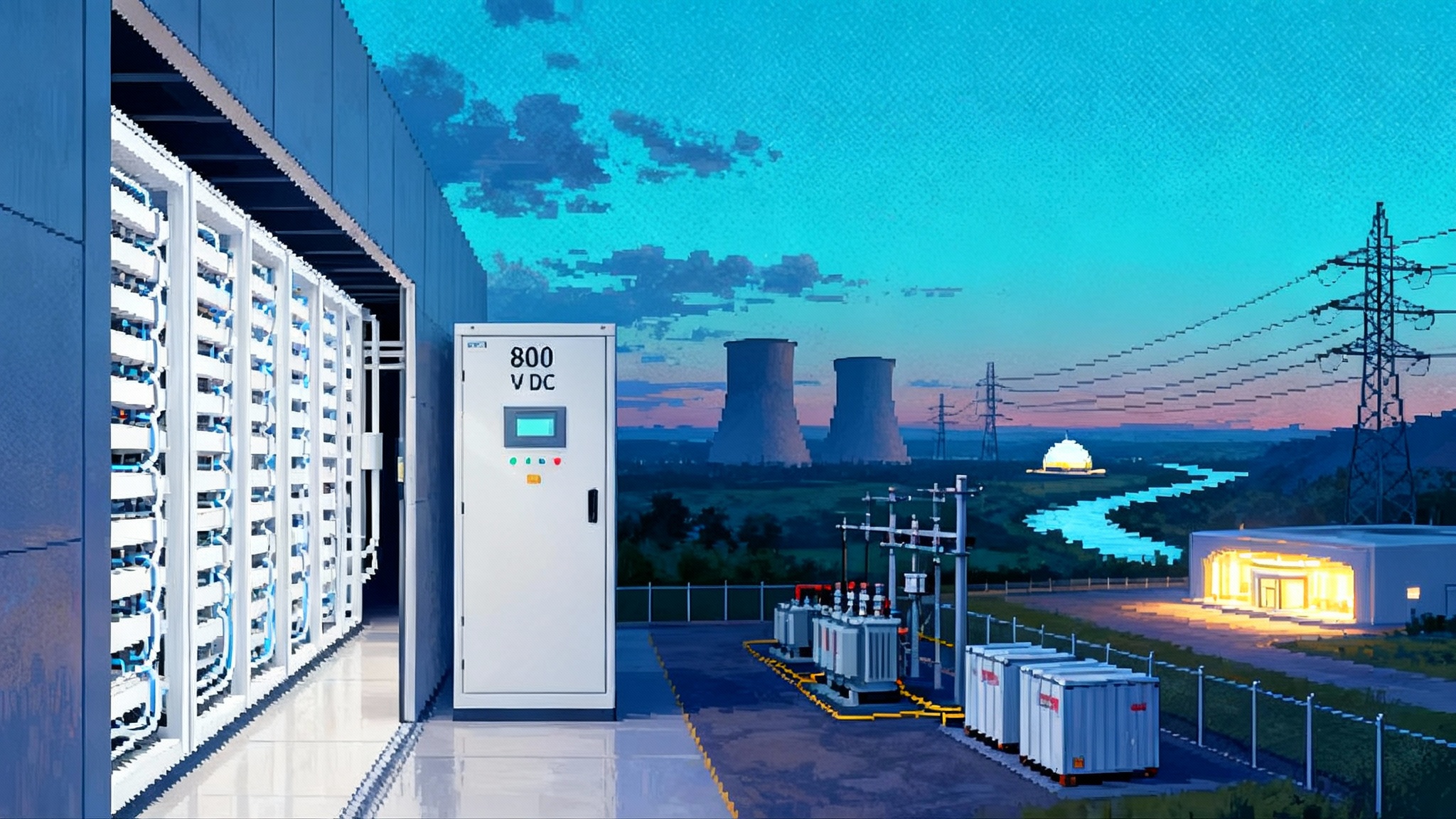

The 800 volt turning point

This year, the industry stopped pretending that a 48 volt bus could scale to the new era. Companies across the power stack announced support for 800 volt direct current distribution inside data centers, a shift that looks technical but is strategic. Fewer conversion stages mean less waste and less heat. Higher voltage means lower current for the same power, which means thinner copper and fewer bottlenecks. Most important, it means the power system can track the fast transients of GPU loads without collapsing.
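The current argument is plain arithmetic, and a short sketch makes it concrete. The example below uses the 120 kilowatt rack figure mentioned earlier; the conductor resistance is a hypothetical placeholder chosen only to show the scaling, not a measured value, and the function names are illustrative.

```python
def bus_current_amps(power_w: float, voltage_v: float) -> float:
    """Current needed to deliver power_w at voltage_v (I = P / V)."""
    return power_w / voltage_v


def resistive_loss_w(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Heat dissipated in a conductor of the given resistance (I^2 * R)."""
    current = bus_current_amps(power_w, voltage_v)
    return current ** 2 * resistance_ohm


RACK_POWER_W = 120_000           # 120 kW rack, the figure used in this article
ASSUMED_RESISTANCE_OHM = 0.0001  # hypothetical 0.1 milliohm path, illustration only

for volts in (48, 800):
    amps = bus_current_amps(RACK_POWER_W, volts)
    loss = resistive_loss_w(RACK_POWER_W, volts, ASSUMED_RESISTANCE_OHM)
    print(f"{volts:>3} V bus: {amps:,.0f} A, {loss:,.2f} W lost in the conductor")

# For the same conductor, loss scales with the square of the voltage ratio.
loss_ratio = (
    resistive_loss_w(RACK_POWER_W, 48, ASSUMED_RESISTANCE_OHM)
    / resistive_loss_w(RACK_POWER_W, 800, ASSUMED_RESISTANCE_OHM)
)
print(f"48 V loses about {loss_ratio:.0f}x more than 800 V over the same path")
```

At 48 volts the rack needs 2,500 amps; at 800 volts it needs 150. In practice designers spend part of that margin on thinner copper rather than taking the full loss reduction, which is the trade the paragraph above describes.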

NVIDIA made the pivot explicit by detailing an 800 volt architecture, with solid-state transformers at the perimeter, central rectification, and direct current distribution feeding rack-level converters down to the chips. Partners across semiconductors, power gear, and data center infrastructure quickly lined up to support the design. For a technical overview from the source, see NVIDIA’s own explanation of 800 volt DC.

If you prefer a metaphor, imagine the old 48 volt approach as a city that forces every truck to unload and reload its cargo at each neighborhood boundary. The 800 volt plan is an expressway that runs close to each home, with just one small transfer at the end. Less idling, fewer handoffs, lower risk of delay.

What changes inside the building

- Centralized conversion. Move heavy conversion to the perimeter where it is easier to service, cool, and monitor. Convert once at high efficiency, then distribute direct current to rows.

- Transient fidelity. High-voltage buses respond more gracefully to sudden load steps from coordinated GPU activity. That keeps clocks up and error rates down when training pushes the system.

- Serviceability. Clear procedures for hot swap at the row and rack level reduce downtime. Expect standardized lockout and tagout for energized racks, with dedicated training and test bays.
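To see why the number of conversion stages matters, it helps to multiply the stage efficiencies together. The sketch below is a minimal illustration: the per-stage efficiencies are hypothetical round numbers, not vendor data, and the two stage lists are assumptions made up for the comparison.

```python
from math import prod


def chain_efficiency(stage_efficiencies):
    """End-to-end efficiency of conversion stages connected in series."""
    return prod(stage_efficiencies)


def heat_w(input_power_w, stage_efficiencies):
    """Power turned into heat across the whole conversion chain."""
    return input_power_w * (1.0 - chain_efficiency(stage_efficiencies))


# Hypothetical per-stage efficiencies, for illustration only.
MORE_STAGES = [0.98, 0.97, 0.96, 0.97, 0.95]   # many handoffs between grid and chip
FEWER_STAGES = [0.98, 0.98, 0.97]              # perimeter conversion plus a rack stage

INPUT_POWER_W = 1_000_000  # 1 MW drawn at the wall, an assumed figure

for label, stages in (("more stages", MORE_STAGES), ("fewer stages", FEWER_STAGES)):
    eff = chain_efficiency(stages)
    print(f"{label}: {eff:.1%} end to end, {heat_w(INPUT_POWER_W, stages):,.0f} W as heat")
```

Because the losses compound, removing a stage helps twice: less energy is wasted, and the cooling plant has less heat to reject.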

Demand response becomes a feature, not a patch

For years, data centers were treated as must-serve loads with little flexibility. That story is changing. Operators are now contracting for demand response as a first-class feature. The new goal is to shape the compute curve to the supply curve, without sacrificing reliability or throughput.

- Shiftable work. Non-real-time jobs, like data augmentation and some pretraining phases, can be time-shifted into hours with surplus wind or low wholesale prices. Schedulers can do this without hurting latency-sensitive inference.

- Flexible baseload. With 800 volt direct current and central power conversion, operators can add energy storage at the perimeter and modulate the direct current bus for seconds to minutes. This gives the grid headroom during frequency events while keeping racks stable.

- Compute shedding with guarantees. Instead of a binary on or off request from the grid operator, new contracts specify ramp rates, minimum compute floors, and compensation bands tied to the value of avoided outages. This turns a data center campus into a finely tunable resource, not a simple burden.

What changed is the level of coordination between grid operators and campus operators. The grid asks for help, and the campus responds with predictable power modulation, because the power train and the job scheduler were designed to support it.
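A contract with ramp rates and a minimum compute floor can be read as a small control rule. The sketch below is one possible interpretation, not the terms of any real tariff: the function name, the per-minute time step, and all of the numbers are assumptions for illustration.

```python
def ramp_to_target(current_mw, requested_mw, floor_mw, max_ramp_mw_per_min, minutes):
    """Return per-minute power setpoints that move toward the grid's request.

    The campus never drops below floor_mw (the contracted compute floor) and
    never changes by more than max_ramp_mw_per_min in a single minute.
    """
    if max_ramp_mw_per_min <= 0:
        raise ValueError("ramp rate must be positive")
    target = max(requested_mw, floor_mw)  # honor the floor even if the grid asks for less
    level = current_mw
    setpoints = []
    for _ in range(minutes):
        delta = target - level
        # Clamp the step to the contracted ramp rate in either direction.
        step = max(-max_ramp_mw_per_min, min(max_ramp_mw_per_min, delta))
        level += step
        setpoints.append(level)
    return setpoints


# Hypothetical event: the grid asks a 100 MW campus to drop to 40 MW,
# but the contract guarantees a 60 MW floor and a 10 MW per minute ramp.
plan = ramp_to_target(current_mw=100, requested_mw=40, floor_mw=60,
                      max_ramp_mw_per_min=10, minutes=6)
print(plan)
```

In this example the campus steps down 10 MW per minute, settles at the 60 MW floor, and holds there. A job scheduler would then decide which workloads to pause or slow to stay under each setpoint.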

The new moat: power procurement and grid integration

The competitive advantage of the next two years will come from how well a company can secure, shape, and control energy. That advantage becomes a moat when three conditions hold: long-term firm supply is locked in, interconnection is fast-tracked, and the campus can act like a miniature utility.

- Supply. Multi-decade contracts with nuclear operators and advanced geothermal developers hedge against price volatility. Where possible, companies co-invest to extend the life of existing reactors or help underwrite advanced projects. These deals can include first call rights on output during grid stress.

- Interconnection. The hardest part of any new campus is not the land. It is the substation, the queue, and the permits. Teams that learn to navigate regional transmission organization processes, negotiate cost share for new lines, and stage substation buildouts in parallel with facility construction will outrun competitors who wait at the back of the line.

- Campus utility. With on-site storage, perimeter power electronics, and 800 volt direct current distribution, operators can provide frequency response, voltage support, and black start assistance. That can earn grid revenue and community goodwill while improving uptime.

This is also a governance shift. Once power becomes the bottleneck, the procurement team becomes a core part of AI strategy. The site development lead is no longer a facilities manager. They are a grid diplomat whose work determines launch dates and revenue.

For companies building outside the classic North American and European hubs, these choices intersect with national industrial policy. Our take on the state capacity side of the story is captured in the sovereign AI factory buildout.

What this means for model builders and product teams

Teams who design and deploy models will feel these power decisions in daily work. Expect new constraints and new opportunities.

- Budget by energy, not only tokens. Training budgets will increasingly express a hard cap in megawatt-hours. This encourages better curriculum design, more efficient optimizers, and smarter checkpointing.

- Scheduling with price signals. Cluster schedulers will expose real-time energy price and carbon intensity as inputs. Model owners can choose to run in greener or cheaper windows when possible, and pay a premium only when necessary.

- Hardware and topology as power policy. Choice of interconnect, memory, and parallelism strategy will be judged partly by watts per token. Designs that minimize data movement will get first call on constrained power windows.

The organizational implication is simple. Resource allocation will not be a single global queue. It will be a portfolio of compute pools that reflect cost, carbon, and firmness. This is already visible in the move to multi-cloud and multi-region strategies, which we covered as poly-cloud breakout dynamics.
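The energy budget and price-signal ideas above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the hourly prices are invented, the job is assumed to draw constant power and to need one contiguous window, and both function names are hypothetical rather than part of any real scheduler.

```python
def cheapest_window(hourly_prices, duration_h):
    """Return (start_hour, summed_price) of the cheapest contiguous window."""
    if duration_h <= 0 or duration_h > len(hourly_prices):
        raise ValueError("duration must fit inside the price horizon")
    window = sum(hourly_prices[:duration_h])
    best_start, best_total = 0, window
    # Slide the window one hour at a time, updating the running sum.
    for start in range(1, len(hourly_prices) - duration_h + 1):
        window += hourly_prices[start + duration_h - 1] - hourly_prices[start - 1]
        if window < best_total:
            best_start, best_total = start, window
    return best_start, best_total


def within_energy_budget(avg_power_mw, duration_h, budget_mwh):
    """Check a job's estimated energy against a hard cap in megawatt-hours."""
    return avg_power_mw * duration_h <= budget_mwh


# Hypothetical day-ahead prices in dollars per MWh for the next eight hours.
prices = [50, 42, 30, 18, 22, 35, 60, 75]
start, total = cheapest_window(prices, duration_h=3)
job_power_mw = 20  # assumed constant draw for the job

print(f"start at hour {start}, energy cost ${total * job_power_mw:,}")
print("fits budget:", within_energy_budget(job_power_mw, 3, budget_mwh=80))
```

The same search works with carbon intensity in place of price, or with a weighted blend of the two if the model owner wants a single knob.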

How utilities and regulators can turn this into a win

The surge in compute demand creates stress, but it also creates a once-in-a-generation chance to modernize the grid. Three levers matter most.

- Standardize flexible load contracts. Define templates for ramp rates, response times, telemetry, and settlement to let campuses participate in ancillary services without bespoke negotiations.

- Prioritize firming portfolios. Reward projects that add clean firm capacity or extend the life of plants that provide inertia and voltage control. Streamline relicensing where safety and community conditions are met.

- Build shared infrastructure. Encourage substation co-location zones near transmission backbones, with clear interconnection timelines. Treat data center clusters as planned load, with coordinated transmission upgrades rather than ad hoc hookups.

The short version: treat AI campuses as grid actors. Measure their performance and pay for it, just as you would any other flexible plant.

Design principles for the 800 volt era

The move to 800 volt direct current is not only a wiring diagram update. It is a system design philosophy.

- Fewer conversions, fewer failures. Every conversion stage is a heat source and a failure point. Centralize heavy conversion at the perimeter, then convert once more near the chip.

- Thermal first. Liquid cooling, warm water loops, and heat reuse to district systems should be baseline. Thermal design determines power density, which determines revenue per square foot.

- Measure the right metrics. Track end-to-end efficiency from wall plug to model. Report watts per token, joules per sample, and energy cost per million tokens. Publish the numbers so software and model design teams can optimize for them.

- Protect service windows. Design maintenance procedures and training so that technicians can swap boards safely in energized racks with clear boundaries and interlocks.
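The metrics named in the list above reduce to simple unit conversions. In the sketch below, "watts per token" is read as average wall-plug power divided by throughput in tokens per second, which works out to joules per token; that reading is an assumption, as are the throughput and electricity price used in the example.

```python
JOULES_PER_KWH = 3_600_000  # 1 kWh = 3.6 million joules


def joules_per_token(avg_wall_power_w, tokens_per_s):
    """Energy per token: watts divided by tokens per second."""
    if tokens_per_s <= 0:
        raise ValueError("throughput must be positive")
    return avg_wall_power_w / tokens_per_s


def energy_cost_per_million_tokens(avg_wall_power_w, tokens_per_s, usd_per_kwh):
    """Electricity cost of producing one million tokens."""
    joules = joules_per_token(avg_wall_power_w, tokens_per_s) * 1_000_000
    return joules / JOULES_PER_KWH * usd_per_kwh


# Hypothetical rack: 120 kW measured at the wall (including cooling and
# conversion overhead), serving 60,000 tokens per second at $0.08 per kWh.
jpt = joules_per_token(120_000, 60_000)
cost = energy_cost_per_million_tokens(120_000, 60_000, 0.08)
print(f"{jpt:.2f} J per token, ${cost:.4f} per million tokens")
```

Measuring power at the wall rather than at the chip is what makes this an end-to-end number, since it captures conversion and cooling overhead along with the accelerators.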

What to watch through 2026

- Fusion pilot milestones. Helion’s buildout will hit regulatory and engineering gates. Each pass will accelerate copycats and new financing models. Each delay will push more capital toward proven nuclear and geothermal.

- Nuclear extensions and uprates. Expect more deals that support license extensions and small capacity uprates at existing reactors, paired with guaranteed offtake from hyperscalers.

- 800 volt reference designs. By 2026, the market will settle on a few hardened topologies for direct current distribution, safety, and serviceability. The leaders will publish maintenance procedures that let technicians swap boards safely in an energized rack.

- Compute aware tariffs. Utilities will experiment with data center specific tariffs that reward predictable flexibility. The best will integrate telemetry from the campus to verify performance without adding overhead to operators.

A concrete playbook for operators

If you run an AI infrastructure team, here is a simple list of moves that work today.

- Build an energy balance sheet. Map hourly demand against firm supply for the next five years. Buy or develop enough firm resources to cover the base. Use demand response and storage for the rest.

- Stand up an 800 volt pilot row. Do not wait for the perfect spec. Work with vendors to deploy one row of racks with perimeter conversion, direct current distribution, and safe service procedures. Gather data and train staff.

- Integrate the scheduler with energy. Expose price and carbon signals to job submission. Give model owners a knob for speed versus cost. Celebrate teams that hit targets with fewer megawatt-hours.

- Treat the substation as a product. Assign a product manager to interconnection. Track milestones, dependencies, and risks like any launch. Your substation is your most important release of the year.

- Partner locally. Offer grid services, heat reuse, and workforce training. Communities that see value will help you clear permits and attract talent.
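The energy balance sheet in the first step of the playbook can start as a plain comparison of two hourly series. The sketch below is a minimal version under stated assumptions: the four-hour demand and supply figures are invented, and a real balance sheet would cover every hour of a multi-year horizon.

```python
def energy_balance(demand_mw, firm_supply_mw):
    """Compare hourly demand with firm supply and summarize the shortfall.

    Each list holds one value per hour, so a gap in MW for one hour
    equals the same number of MWh of uncovered energy.
    """
    if len(demand_mw) != len(firm_supply_mw):
        raise ValueError("demand and supply must cover the same hours")
    gaps = [max(0, d - s) for d, s in zip(demand_mw, firm_supply_mw)]
    return {
        "shortfall_mwh": sum(gaps),
        "peak_gap_mw": max(gaps, default=0),
        "hours_short": sum(1 for g in gaps if g > 0),
    }


# Hypothetical four-hour slice of a campus forecast.
demand = [80, 90, 120, 110]
firm = [100, 100, 100, 100]
summary = energy_balance(demand, firm)
print(summary)
```

The peak gap sizes the storage or demand response capacity needed, while the shortfall in megawatt-hours sizes its duration.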

A note on risk management

- Do not assume firm supply will arrive on time. Stage contingency plans that include temporary generation and portable storage for commissioning.

- Insure for curtailment. Structure contracts that pay when interconnection or transmission delays force you to underutilize a campus.

- Audit power paths. Validate direct current bus stability under worst-case load steps. Invest in metering at every boundary so you can trace losses and fix them.
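Metering at every boundary pays off because losses can then be attributed to a specific segment. The sketch below shows the bookkeeping; the meter names and readings are hypothetical, and it assumes all meters are read at the same moment along a single power path.

```python
def segment_losses(readings):
    """Attribute losses to each segment between consecutive meters.

    readings is an ordered list of (meter_name, power_kw) pairs,
    starting at the grid side and ending at the load.
    """
    losses = []
    for (up_name, up_kw), (down_name, down_kw) in zip(readings, readings[1:]):
        losses.append((f"{up_name} -> {down_name}", up_kw - down_kw))
    return losses


# Hypothetical simultaneous readings along one power path, in kW.
readings = [
    ("substation", 1000.0),
    ("rectifier output", 980.0),
    ("row bus", 975.0),
    ("rack input", 960.0),
]

losses = segment_losses(readings)
worst_segment, worst_kw = max(losses, key=lambda item: item[1])
for name, kw in losses:
    print(f"{name}: {kw:.1f} kW lost")
print(f"largest loss: {worst_segment} ({worst_kw:.1f} kW)")
```

A negative loss on any segment is a sign of a metering or clock alignment problem rather than free energy, so it is worth flagging in a real audit.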

The human and environmental stakes

Moving gigawatts of new load onto the grid is not only a technical challenge. It is a social license question. Communities care about water, noise, views, and jobs. The new governance layer around AI factories must include transparent reporting on water use, carbon intensity, and local benefits. The operators that treat community trust as part of uptime will earn time and optionality when they need it most.

There is also a real environmental upside. If the industry uses its buying power to extend the life of clean firm plants and to commercialize next-generation geothermal and advanced nuclear, the result can be a grid that is cleaner and stronger for everyone, not just for cloud customers. That is a path out of the false choice between more compute and more emissions.

The line that now runs through everything

For a decade, we measured AI progress by model size, parameter count, and leaderboard wins. In 2025 the industry admitted what energy teams already knew. The valuable line is the one that runs from a substation to a busbar to a chip. Companies that can secure clean, reliable power, distribute it at 800 volts with minimal waste, and knit it into the rhythm of the grid will outship those who only count parameters.

The reckoning is not punishment. It is clarity. The next leap in AI will not come from a clever trick alone. It will come from designing systems where thermodynamics and algorithms cooperate. That is how you build a factory that turns electrons into intelligence without burning through the planet or the patience of the grid. The winners will be the ones who learn, quickly and concretely, to treat power as the most important model parameter of all.