Sovereign AI Goes Industrial as Nations Build AI Factories

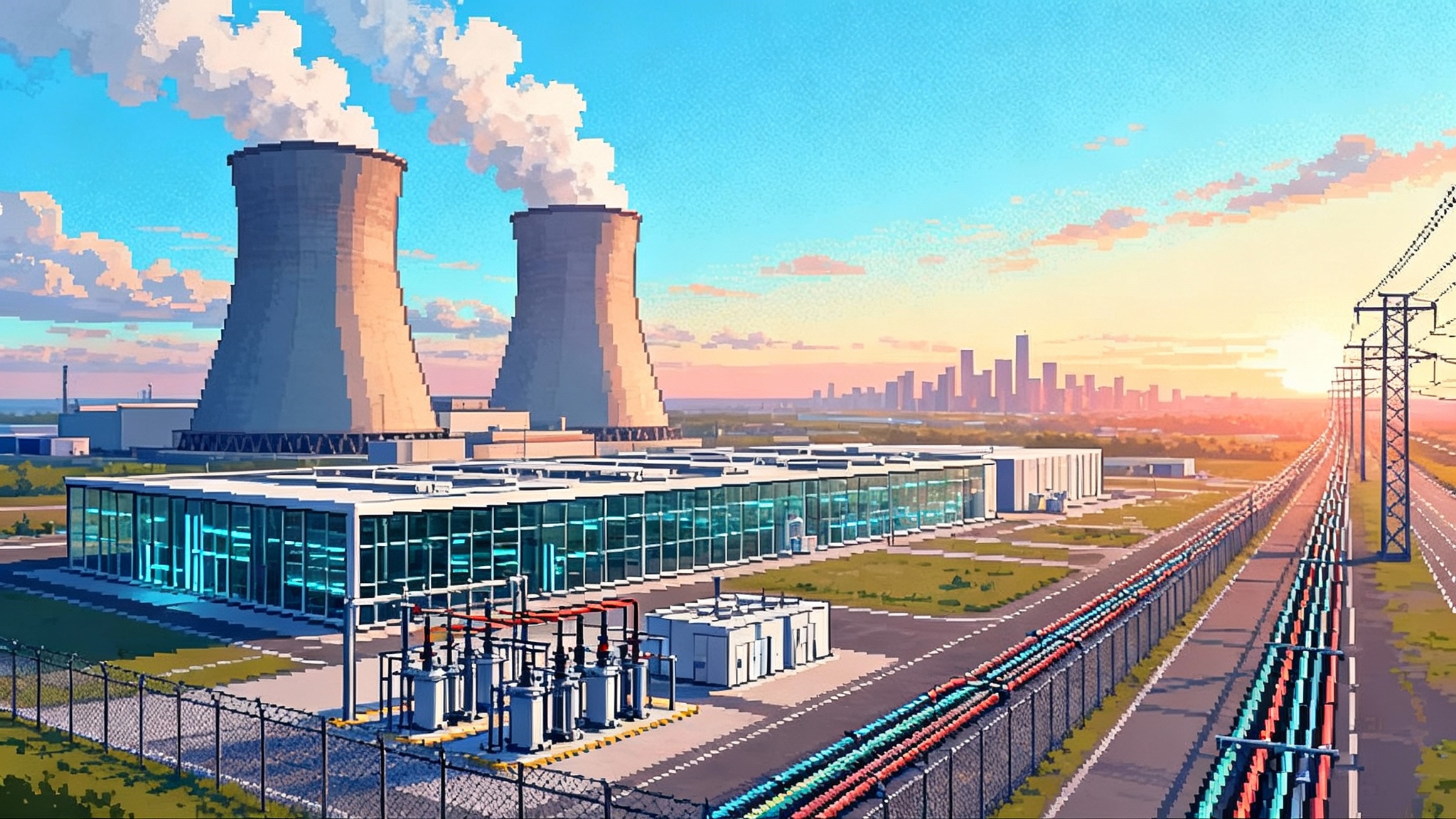

In 2025, Saudi Arabia, the United Kingdom, and South Korea began treating AI as state infrastructure. AI factories, sovereign models, and compute pacts are turning power, chips, and data into durable national capability.

The month intelligence became an industry

Something real shifted in late spring 2025. What had felt like a software footrace between labs started to look like a buildout of national infrastructure. Governments did not just buy cloud credits. They mapped land, booked power, reserved chips, and drafted compliance perimeters. Leaders used the phrase "AI factory" deliberately, signaling that intelligence would be produced the way nations once produced steel, cars, and refined fuel.

Think of an AI factory as a 20th-century assembly plant. Chips are the machine tools, power is the fuel, data is the raw steel, and alignment is the quality standard. The output is not sedans but reasoning capacity, delivered as models and agents. Bringing that production line home turns AI from a product that lives in someone else’s cloud region into a capability measured in megawatts, uptime, and audit logs.

Saudi Arabia’s compute-to-energy strategy

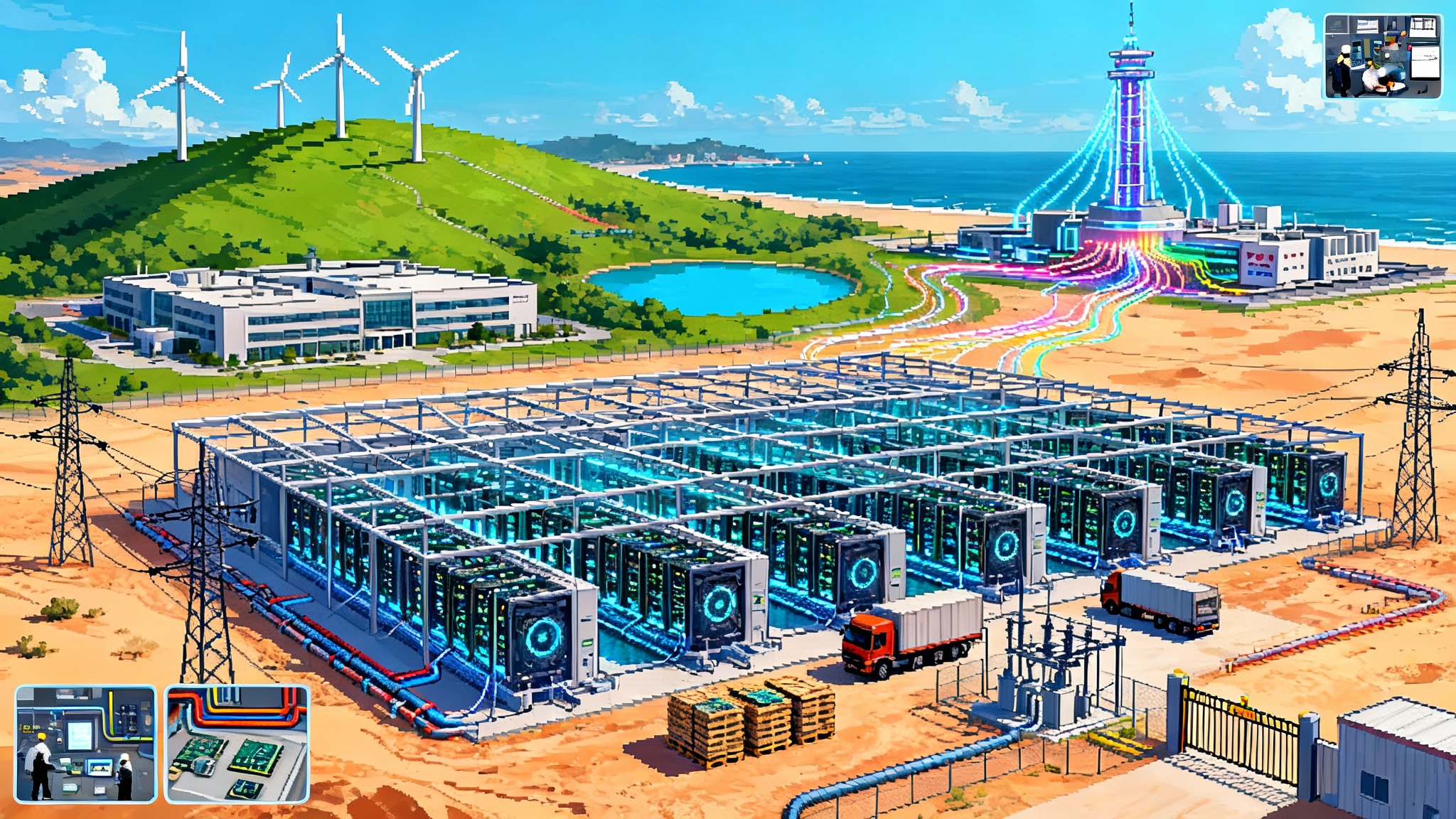

Saudi Arabia offered the clearest early signal. Through a new platform aimed at national AI buildouts, the kingdom announced plans for training clusters that start at around 18,000 next-generation accelerators and scale toward multi-hundred-megawatt campuses over a five-year horizon. The idea is simple and ambitious: convert abundant energy into digital intelligence, export spare cycles to the regional market, and make compute, rather than hydrocarbons, a core lever of development.

The strategy rests on two advantages:

- Affordable, scalable power. Training and large-scale inference have become energy businesses, and data centers are the conversion machinery. Regions with cheap, reliable, and clean power can price intelligence competitively.

- Industrial policy capacity. A sovereign buyer can secure chip allocations, fast-track permits, pre-wire grid upgrades, and negotiate multi-decade power contracts. That compresses timelines from years to quarters.
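The claim that training has become an energy business can be made concrete with back-of-envelope arithmetic. The sketch below estimates facility power for a cluster and the electricity cost of producing a million tokens. Every input (per-accelerator draw, PUE, serving throughput, electricity prices) is a hypothetical assumption chosen for illustration, not a figure reported for any of these projects.

```python
# Back-of-envelope sketch: cluster power draw and electricity cost per token.
# All numeric inputs are hypothetical assumptions, not reported project figures.


def facility_power_mw(accelerators: int, kw_per_accelerator: float, pue: float) -> float:
    """Facility draw in MW: IT load (accelerators plus their share of hosts and
    networking) multiplied by power usage effectiveness (PUE) for cooling and losses."""
    it_load_kw = accelerators * kw_per_accelerator
    return it_load_kw * pue / 1000.0


def energy_cost_per_million_tokens(tokens_per_second: float, facility_kw: float,
                                   usd_per_kwh: float) -> float:
    """Electricity cost in USD to produce one million tokens at steady throughput."""
    kwh_per_token = facility_kw / (tokens_per_second * 3600.0)
    return kwh_per_token * 1_000_000 * usd_per_kwh


if __name__ == "__main__":
    # Assumption: ~1.5 kW per accelerator including host share, PUE of 1.2.
    mw = facility_power_mw(18_000, kw_per_accelerator=1.5, pue=1.2)
    print(f"Estimated facility draw: {mw:.1f} MW")

    # Assumption: a hypothetical 10 kW serving node sustaining 5,000 tokens/s.
    for price in (0.05, 0.15):  # USD per kWh: cheap grid vs. expensive grid
        cost = energy_cost_per_million_tokens(5_000, 10.0, price)
        print(f"${price:.2f}/kWh -> ${cost:.4f} per million tokens")
```

Under these assumptions the 18,000-accelerator starting point lands in the tens of megawatts, so a multi-hundred-megawatt campus implies roughly an order of magnitude more hardware. Electricity cost per token scales linearly with the price of power, which is why cheap grids can undercut on price.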

There are real risks. Domestic demand might lag early capacity and create oversupply. Export controls can also change the rules midstream. Yet if licensing regimes tie permission to site governance and security telemetry, then compliance becomes a comparative advantage. Nations that can operate high assurance facilities keep the licenses and the chips flowing.

For readers tracking the power side of this story, we previously argued that AI’s next bottleneck is power. That constraint is exactly why regions using energy strategy as AI strategy are moving first.

The United Kingdom’s sovereign compute by design

The United Kingdom pursued a different but complementary path. Rather than chase generic cloud capacity, Britain began to encode compute into national planning. Growth zones were identified, public research access was scoped, and sensitive workloads were slated to run under British law with domestic audit rights. In parallel, private builders committed to dedicated AI campuses, including sites in Greater London designed to scale from roughly 50 to 90 megawatts as new accelerator generations arrive.

Three design choices stand out in the UK model:

- Jurisdictional clarity. Certain public services and regulated industries will require training, fine-tuning, and inference to happen onshore, under local compliance and audit. This is not digital mercantilism. It is legal hygiene for finance, health, and safety-critical sectors.

- Shared capacity with reserved lanes. Public labs and universities gain time on high-end compute they could not finance alone, while reserved slices protect national projects such as biomedical research and climate modeling.

- Planned siting. Designated zones with prearranged grid upgrades, water reuse plans, and liquid cooling allowances compress multi-year permitting into months. Compute becomes a land-use plan, not a tactical procurement.
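The "shared capacity with reserved lanes" idea maps onto a familiar scheduling pattern: guaranteed floors plus proportional sharing of whatever is left. The sketch below is one hypothetical way to allocate a period's GPU-hours. The lane names, reservation sizes, and the rule that unused reservations flow back to the shared pool are illustrative assumptions, not a description of how any UK program actually schedules work.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Lane:
    name: str
    reserved: float  # guaranteed GPU-hours this period (hypothetical floor)
    demand: float    # GPU-hours actually requested this period


def allocate(capacity: float, lanes: list[Lane]) -> dict[str, float]:
    """Serve each lane up to its reservation first, then split leftover capacity
    among lanes with unmet demand, in proportion to that unmet demand."""
    if sum(lane.reserved for lane in lanes) > capacity:
        raise ValueError("reservations exceed total capacity")

    # Step 1: honor reserved floors (a lane never gets more than it asked for).
    alloc = {lane.name: min(lane.demand, lane.reserved) for lane in lanes}

    # Step 2: unused reservations plus unreserved capacity form a shared pool.
    leftover = capacity - sum(alloc.values())
    unmet = {lane.name: lane.demand - alloc[lane.name]
             for lane in lanes if lane.demand > alloc[lane.name]}
    total_unmet = sum(unmet.values())

    # Step 3: proportional share of the pool, capped so no lane exceeds its demand.
    if total_unmet > 0 and leftover > 0:
        fraction = min(1.0, leftover / total_unmet)
        for name, need in unmet.items():
            alloc[name] += need * fraction
    return alloc


if __name__ == "__main__":
    lanes = [
        Lane("biomedical", reserved=200, demand=150),
        Lane("climate", reserved=150, demand=300),
        Lane("universities", reserved=0, demand=900),
    ]
    for name, hours in allocate(1_000, lanes).items():
        print(f"{name:>12}: {hours:7.1f} GPU-hours")
```

In this example the biomedical lane needs less than its floor, so its unused 50 hours join the shared pool. A stricter design could instead hold reserved capacity idle so national projects get instant access, at the cost of utilization.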

The result looks like a template others can copy. Anchor sensitive workloads in-country, specialize private builders around siting and cooling, and connect both to a public mandate for research access.

South Korea’s sovereign foundation models

In August 2025, South Korea moved from aspiration to execution. Ministries selected national consortia, headed by major technology firms, to build sovereign foundation models in Korean, trained on Korean data, and aimed at Korean public services and industries. Licensing is flexible where it can be: open-weight releases where safety allows, and service models where it does not. The rationale is straightforward. Language, law, and norms shape how models behave. A country that wants reliable results for its citizens needs models that speak its vernacular and reflect its institutions.

This approach offers a preview of values-forward modeling at scale. In education, a sovereign model can embed national curricula and exam formats at pretraining time, not only through downstream prompts. In healthcare, a multimodal model tuned on properly consented domestic imaging archives can improve accuracy for diseases and demographics prevalent at home. The bet is that alignment is not one-size-fits-all. It is a product of data, evaluators, and the downstream institutions that certify acceptable behavior.

From cloud product to state capability

For a decade, the center of gravity was cloud software. Models were consumed through APIs, trained wherever power was cheapest and chips were fastest. In 2025, training, tuning, and hosting began to migrate into the category of national capability. Four forces explain the shift:

- Scarcity moved upstream. Allocations for advanced accelerators are booked long before delivery. States can bargain at that level in ways most firms cannot.

- Energy is strategic. Multi-hundred-megawatt campuses need multi-decade power. That means grid planning and permitting, which are inherently political.

- Data residency hardened. Courts, regulators, and citizens demand that sensitive data be processed under domestic rules, which changes where models live.

- Security became physical. National-security-grade access control and telemetry are easier to guarantee when the state is the owner of last resort.

Once capability sits with the state, geopolitics follows.

Model treaties, export controls, and compute pacts

If the last energy era was organized by pipelines and production quotas, this one is cohering around three instruments.

- Model treaties. Bilateral or club-style agreements harmonize evaluation methods and reporting for general-purpose models. They establish minimum test suites for misuse, set red-teaming procedures, and commit partners to share results under defined confidentiality. Think of them as passports for models, with compliance certificates that travel with the weights and their version hashes.

- Export controls. Controls are evolving from blanket restrictions to sieves. Licenses are coupled to site-level assurances, uptime of security tooling, and audit rights. The same chip can be lawful in one data center and restricted in another, depending on governance. The scarcest commodity is not only chips. It is credible compliance that keeps licenses flowing. See our view on attestation in Attested AI becomes default.

- Compute pacts. The new counterpart to power purchase agreements bundles long-term chip supply, energy contracts, land use, and security rules into one instrument. Pacts often include reciprocal commitments on workforce training and public-interest capacity. They turn model demand into shovel-ready projects that a grid operator, a lender, and a cabinet can sign.
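The passport metaphor in model treaties, combined with export licenses tied to site governance, suggests a simple deployment gate: check that the weights match a certified hash, that the required evaluations were passed, and that the hosting site meets license conditions. The sketch below is hypothetical throughout. The field names, the 99.9% telemetry-uptime threshold, and the use of an HMAC shared key in place of a real public-key signature (such as Ed25519) are simplifying assumptions that keep the example standard-library only.

```python
from __future__ import annotations

import hashlib
import hmac
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ModelPassport:
    """Hypothetical compliance certificate that travels with a model version."""
    model_id: str
    version: str
    weights_sha256: str
    passed_suites: tuple[str, ...]
    issuer: str


@dataclass(frozen=True)
class SiteAssurance:
    """Hypothetical site-level conditions an export license might require."""
    telemetry_uptime: float  # fraction of the period security telemetry was live
    audit_rights: bool


def digest(weights: bytes) -> str:
    return hashlib.sha256(weights).hexdigest()


def sign(passport: ModelPassport, issuer_key: bytes) -> str:
    # Canonical JSON so signer and verifier hash identical bytes.
    # HMAC is a stand-in here; a real scheme would use public-key signatures.
    payload = json.dumps(asdict(passport), sort_keys=True).encode()
    return hmac.new(issuer_key, payload, hashlib.sha256).hexdigest()


def may_deploy(weights: bytes, passport: ModelPassport, signature: str,
               issuer_key: bytes, required_suites: set[str],
               site: SiteAssurance, min_uptime: float = 0.999) -> tuple[bool, str]:
    """Return (allowed, reason) for deploying these weights at this site."""
    if not hmac.compare_digest(sign(passport, issuer_key), signature):
        return False, "passport signature invalid"
    if digest(weights) != passport.weights_sha256:
        return False, "weights do not match certified hash"
    missing = required_suites - set(passport.passed_suites)
    if missing:
        return False, f"missing evaluations: {sorted(missing)}"
    if not site.audit_rights or site.telemetry_uptime < min_uptime:
        return False, "site governance below license conditions"
    return True, "ok"


if __name__ == "__main__":
    key = b"treaty-partner-demo-key"  # hypothetical shared key
    weights = b"\x00\x01 example weight bytes"
    passport = ModelPassport("demo-model", "1.0", digest(weights),
                             ("misuse-core", "red-team-v1"), "example-safety-institute")
    sig = sign(passport, key)
    good_site = SiteAssurance(telemetry_uptime=0.9995, audit_rights=True)
    weak_site = SiteAssurance(telemetry_uptime=0.95, audit_rights=True)
    print(may_deploy(weights, passport, sig, key, {"misuse-core"}, good_site))
    print(may_deploy(weights, passport, sig, key, {"misuse-core"}, weak_site))
```

The same certified weights are allowed at the first site and refused at the second purely because of site governance, which is the "sieve" behavior the export-control bullet describes.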

Taken together, these tools are building a federated AI internet. Instead of one global model accessed through a few hyperscale clouds, many national and sectoral models will interoperate through gateways. Some will be open weights for diffusion. Others will be licensed services with hard audit trails. The federation will not be tidy. It will be stitched together by translation layers, compatibility profiles, and policy bridges that look a lot like telecom roaming did in the 1990s.

Acceleration, divergence, and where value accrues

Competition at this altitude does something paradoxical. It speeds up capability and diffusion because buyers are large, decisions are political, and capital is patient. You can negotiate a 200-megawatt power allocation faster when the cabinet prioritizes it. You can justify tens of thousands of accelerators when the treasury treats them like strategic stockpiles. You can get frontier models into schools and clinics sooner when the state is also the anchor tenant.

Speed creates divergence. Alignment will fork along cultural and legal lines. A tax agency may require models that never exceed a strict hallucination threshold when generating notices. A media regulator may demand watermarking not accepted elsewhere. A defense ministry may insist on latency and control that drive procurement toward local hosting even when a public cloud would be cheaper. The likely outcome is a world of compatible but not identical models. That is healthy if we build the right bridges.

Where will value accrue as the landscape evolves? Three layers matter most:

- Energy-compute hubs. Regions that pair cheap, clean power with cooling and transmission capacity will collect persistent rents. Think of them as intelligence refineries. Their success depends on grid upgrades, water reuse, and siting policy, not clever prompts.

- Model stewards. Institutions that evaluate and certify models for cross-border use will become the notaries of the AI economy. Their marks will unlock markets. Ministries, safety institutes, and third-party labs will compete to be the trusted stamp.

- Data trusts and domain owners. Whoever assembles high value, lawful datasets with clear governance will decide which sovereign models become indispensable. Hospital consortia, agricultural boards, and financial regulators can build moats by curating clean, consented data. For a deeper look at the licensing turn, see the consent layer for licensed training.

Concrete moves for policymakers and builders

The policy to-do list is actionable. Start small and compound.

- Sign reciprocal evaluation memoranda. Agree on a shared test suite, formats for reporting, and emergency revocation procedures. Start with two partners and grow to a club. That makes model treaties real rather than rhetorical.

- Treat compute like a utility. Require developers of large campuses to file integrated energy and water plans, heat reuse strategies, and grid balancing services. In return, commit to fast-track permits and long-term price certainty.

- Build sovereign data trusts. Fund public institutions to assemble domain-specific corpora with robust consent and clear licensing for both closed and open models. Require lineage tracking and publish machine-readable documentation so model stewards can certify provenance.

- Create public-interest offtake. Reserve slices of national capacity for universities, small firms, and public agencies. Publish transparent queues, prices, and service-level targets. This builds a local AI economy rather than only hosting global workloads.

- Incentivize open-weight baselines. Where safety allows, support open-weight sovereign models to seed ecosystems. Pair them with guardrail toolkits, audit trails, and safe fine-tuning recipes so small teams can build responsibly.
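The data-trust recommendation calls for lineage tracking and machine-readable documentation. One minimal way to picture that is a manifest recording a content hash, a consent basis, and the permitted model types for each source, serialized as JSON so stewards can re-verify provenance. The schema below (field names, the consent vocabulary, and the "open"/"closed" license tags) is a hypothetical illustration, not an existing standard.

```python
from __future__ import annotations

import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass
class SourceRecord:
    source_id: str
    sha256: str              # content hash lets a steward re-verify the exact bytes
    consent_basis: str       # e.g. "explicit-opt-in" (hypothetical vocabulary)
    licensed_for: list[str]  # model types allowed to train on it: "open", "closed"


@dataclass
class DatasetManifest:
    dataset_id: str
    steward: str
    sources: list[SourceRecord] = field(default_factory=list)

    def add(self, source_id: str, content: bytes, consent_basis: str,
            licensed_for: list[str]) -> None:
        """Record a source by hash rather than copying the data into the manifest."""
        digest = hashlib.sha256(content).hexdigest()
        self.sources.append(SourceRecord(source_id, digest, consent_basis, list(licensed_for)))

    def usable_for(self, model_type: str) -> list[str]:
        """Sources with a recorded consent basis that permit this model type."""
        return [s.source_id for s in self.sources
                if s.consent_basis and model_type in s.licensed_for]

    def to_json(self) -> str:
        """Machine-readable documentation; sorted keys keep the output stable."""
        return json.dumps(asdict(self), sort_keys=True, indent=2)


if __name__ == "__main__":
    manifest = DatasetManifest("national-imaging-v1", "example-hospital-consortium")
    manifest.add("scan-batch-001", b"<imaging bytes>", "explicit-opt-in", ["closed", "open"])
    manifest.add("scan-batch-002", b"<more bytes>", "explicit-opt-in", ["closed"])
    print(manifest.usable_for("open"))  # only the first batch permits open-weight training
    print(manifest.to_json())
```

Recording hashes instead of raw data keeps the manifest publishable even when the underlying corpus is restricted, while still letting a model steward confirm that a training run used exactly the documented sources.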

Companies have a matching list.

- Localize compliance from day one. Build to the strictest club you want to sell into, not the loosest one you can pass.

- Partner with grid operators early. Power is now a procurement partner, not a line item.

- Choose domains with sovereign data. Find markets where a curated dataset exists but a champion model does not. Become the standard model for shipping, pathology, tax compliance, or another vertical that a nation is actively enabling.

- Design for attestation. Assume verifiable provenance, secured supply chains, and runtime integrity checks are table stakes. That posture aligns with the direction described in Attested AI becomes default.

- Prepare for the agent era. As sovereign infrastructure matures, firms will operate like software runtimes where agents coordinate workflows under tight controls. We explored this shift in the firm turns into a runtime.

Culture will change with the infrastructure

Sovereign models will not only reshape supply chains. They will shape culture. A schoolchild will learn from a tutor that speaks in local idioms and teaches to local curricula. A small business will file taxes with an agent trained on national rules and agency guidance rather than generic templates. A journalist will interrogate a model that cites domestic case law and local precedent. The texture of the internet will tilt toward places where compute and data are co-located, and national cultures will become more legible in AI-mediated life.

None of this needs to fragment the web. Federation works when we build standards for compatibility and norms for safety. That is why model treaties, export regimes bound to site governance, and compute pacts that include public capacity matter. They convert political will into working infrastructure without breaking the network effects that made AI useful in the first place.

The bottom line

Mid-2025 marked the moment sovereign AI became industrial strategy. Saudi Arabia treated compute like a refinery input and started building. The United Kingdom scoped sovereign workloads and locked in sites and chips. South Korea committed to national models tuned to its language, law, and industries. The race will accelerate capability and diffusion. It will also force hard choices about openness, alignment, and who captures the rents of intelligence. If the last century’s power was measured in barrels, the next century’s will be measured in model tokens per kilowatt-hour. The smart countries will make sure those tokens are minted on their soil and spendable across borders.