Proof-of-Compute Is Here: Attested AI Becomes Default

Confidential AI moved from policy talk to cryptographic proof. Learn how compute passports, TEEs, and GPU attestation are reshaping data deals, compliance, and platform moats across the AI stack in 2025.

Breaking news: the runtime became the trust model

Something foundational shifted in the second half of 2025. Major clouds rolled out confidential AI runtimes that pair hardware Trusted Execution Environments with cryptographic attestation across CPUs and GPUs. On Google Cloud, confidential virtual machines that attach NVIDIA H100 GPUs now support driver and device verification, including remote GPU attestation that returns signed claims you can present to a counterparty or regulator. The practical steps, including how to use NVIDIA’s verifier, are in the Google Cloud GPU attestation guidance within its confidential computing documentation.

The headline is not only that confidential AI became widely available, but that the locus of trust moved. For high stakes AI, the default answer is no longer "trust a policy binder." It is "prove where and how cognition ran."

From policy to proof

Trusted Execution Environments, or TEEs, are secure compute zones that encrypt memory and restrict privileged access so cloud operators, hypervisors, and neighboring tenants cannot peek. What changed in 2025 is that CPU TEEs met GPU device attestation, and clouds braided both into an ordinary developer workflow. The result is a verifiable chain from boot to model execution that lets you ask and answer new questions with math, not memos:

- Did this job run inside a TEE with the measurements I expect?

- Which driver and firmware actually touched my model weights and prompts?

- Which GPU device and microcode were in the loop?

- Was the run fresh, or is someone replaying an old token?

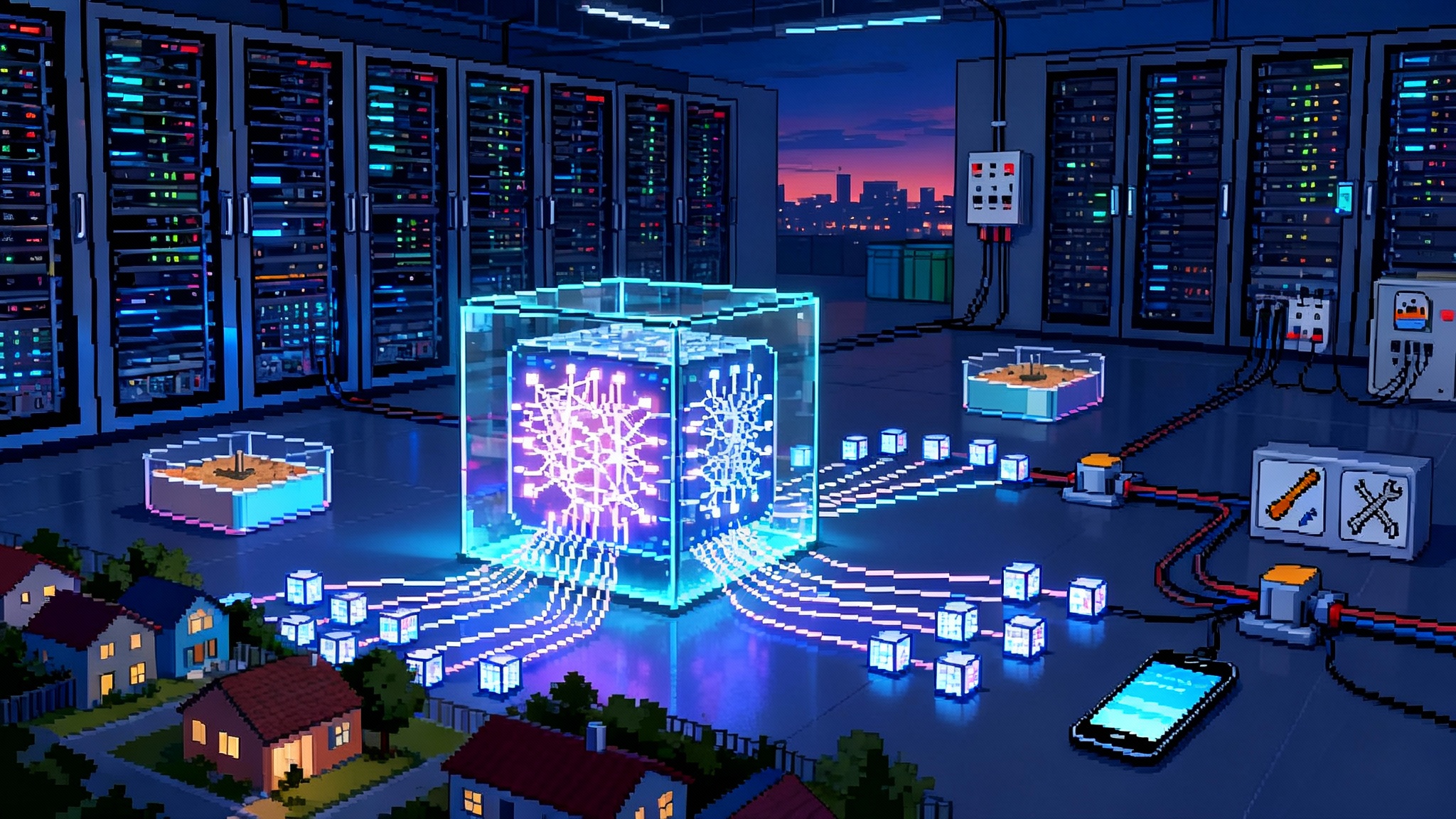

In practice, the chain looks like this. At launch, the CPU TEE measures firmware and kernel, exposing a signed report. The GPU driver sets up an authenticated session with the device and collects evidence about the GPU stack. A remote verifier issues a signed attestation token that encodes claims like device identity, firmware version, driver lineage, and certificate status. Your service only releases the decryption key for the model or data if the claims match a policy you define.
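The last step of that chain, releasing a key only when claims match a policy, can be sketched in a few lines of Python. This is a minimal illustration, not any vendor's API: the claim names (`vm_measurement`, `gpu_firmware`, `driver_version`, `cert_status`) and the `ReleasePolicy` type are hypothetical, and the sketch assumes the token's signature was already verified before the claims reach this function.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReleasePolicy:
    """Hypothetical relying-party policy; field names are illustrative."""
    expected_vm_measurement: str
    allowed_gpu_firmware: frozenset
    allowed_driver_versions: frozenset


def should_release_key(claims: dict, policy: ReleasePolicy) -> bool:
    """Return True only when every claim matches the policy.

    Assumes the token signature was verified upstream. Missing claims
    come back as None and fail the comparison, so the gate fails closed.
    """
    return (
        claims.get("vm_measurement") == policy.expected_vm_measurement
        and claims.get("gpu_firmware") in policy.allowed_gpu_firmware
        and claims.get("driver_version") in policy.allowed_driver_versions
        and claims.get("cert_status") == "valid"
    )


policy = ReleasePolicy(
    expected_vm_measurement="sha256:abc123",
    allowed_gpu_firmware=frozenset({"fw-1.2.3"}),
    allowed_driver_versions=frozenset({"drv-550.1"}),
)
good_claims = {
    "vm_measurement": "sha256:abc123",
    "gpu_firmware": "fw-1.2.3",
    "driver_version": "drv-550.1",
    "cert_status": "valid",
}
stale_claims = dict(good_claims, driver_version="drv-old")

print(should_release_key(good_claims, policy))   # True
print(should_release_key(stale_claims, policy))  # False
```

The useful property is that an absent or unexpected claim never opens the gate; the policy lists what is allowed rather than what is forbidden.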

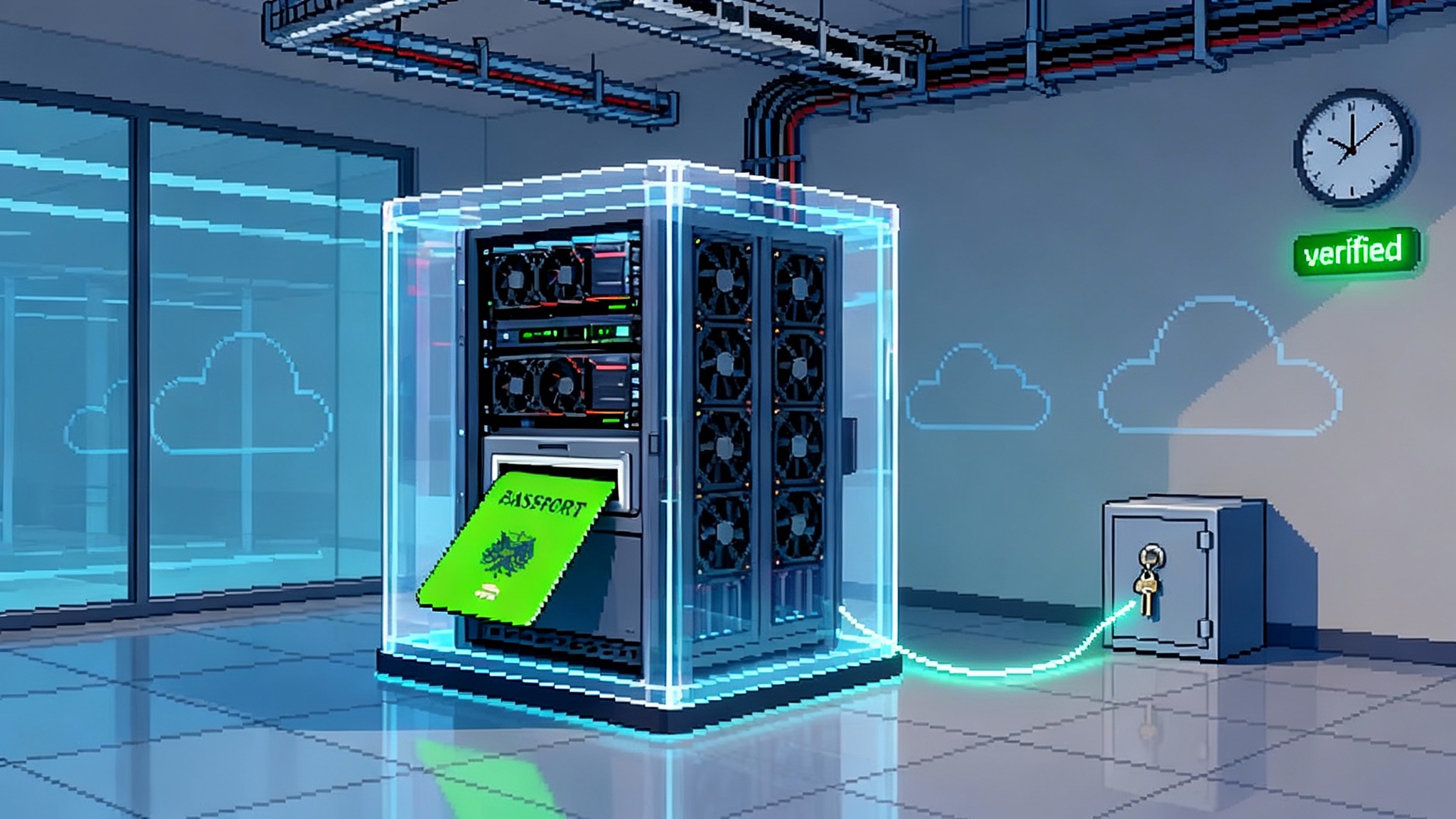

If that sounds like a passport check at an airport, that is the right mental model. Data, weights, and prompts sit behind a border control. The attestation token is the passport. The verification service is the customs officer. If the stamps are wrong or expired, the gate never opens.

Why now, and why GPUs mattered

CPU TEEs matured first. But most AI runs on accelerators, and until this year the proof story for the device doing the heavy lifting was thin. Two ingredients matured in 2025:

- Vendor attestation services that sign device claims. NVIDIA’s Remote Attestation Service issues verifiable tokens about a specific GPU and its software stack, aligned to the Entity Attestation Token standard so downstream systems can parse claims without bespoke code. See the NVIDIA Remote Attestation Service documentation for details.

- Cloud runtimes that stitch CPU and GPU claims into one policy gate. That gives developers a practical way to say: only release my key when the VM and the GPU both match these versions with these revocation states, and when the request carries a nonce proving freshness.
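The freshness requirement in that last point is worth making concrete. One common pattern, sketched here under assumptions, is for the relying party to issue a random nonce, require the attestation token to echo it, and accept each nonce only once within a short window. The `NonceRegistry` class below is hypothetical and keeps state in memory; a real deployment would need shared, durable storage.

```python
import secrets
import time


class NonceRegistry:
    """Hypothetical in-memory registry of single-use freshness nonces."""

    def __init__(self, ttl_seconds: float = 60.0):
        self._ttl = ttl_seconds
        self._issued = {}  # nonce -> monotonic time of issue

    def issue(self) -> str:
        """Create a nonce for the attester to embed in its evidence."""
        nonce = secrets.token_hex(16)
        self._issued[nonce] = time.monotonic()
        return nonce

    def consume(self, nonce: str) -> bool:
        """Accept a nonce at most once, and only within the TTL."""
        issued_at = self._issued.pop(nonce, None)
        if issued_at is None:
            return False  # never issued, or already used (replay)
        return (time.monotonic() - issued_at) <= self._ttl


registry = NonceRegistry(ttl_seconds=60.0)
nonce = registry.issue()
print(registry.consume(nonce))  # True: first presentation
print(registry.consume(nonce))  # False: replay of the same nonce
```

Popping the nonce on first use is what defeats replay: a captured token carries a nonce the registry no longer recognizes.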

Once that door opened, patterns that were once academic became operational.

Compute passports explained

A compute passport is a signed envelope of attestations that proves where and how a model ran. It is not a marketing badge. It is a machine verifiable set of claims tied to a run. You can attach it to a job record, a payment, or a compliance filing.

A minimal compute passport typically includes:

- Who attested what. The verifier identity and public key, plus references to vendor endorsement roots.

- What ran and where. Measurements of the VM, kernel, and container, plus the GPU device identifier and firmware versions.

- When and with what policy. A nonce or timestamp so the token cannot be replayed, and a statement that this run satisfied the relying party policy.

- Optional bindings. Hashes of model artifacts or config blobs so the passport is materially tied to the cognitive action.

Think of it as a packing list for cognition. If you have it, you can answer not only that a result exists, but also that it was produced inside the boundaries you negotiated.
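As a rough sketch of what such an envelope could look like in code, the example below models the four groups of fields as a dataclass and signs a canonical JSON encoding. Everything here is an assumption for illustration: the `ComputePassport` field names are invented, and HMAC with a shared key stands in for the asymmetric signature a real verifier would use, purely so the example runs on the Python standard library.

```python
import hashlib
import hmac
import json
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class ComputePassport:
    """Hypothetical passport layout; not a standard schema."""
    verifier_id: str      # who attested
    vm_measurement: str   # what ran and where
    gpu_device_id: str
    gpu_firmware: str
    nonce: str            # freshness
    issued_at: int        # Unix seconds
    policy_id: str        # policy the run satisfied
    model_sha256: str     # optional binding to the model artifact


def canonical_bytes(passport: ComputePassport) -> bytes:
    """Stable encoding so signer and checker hash identical bytes."""
    return json.dumps(
        asdict(passport), sort_keys=True, separators=(",", ":")
    ).encode("utf-8")


def sign(passport: ComputePassport, key: bytes) -> str:
    # Stand-in only: a real verifier would sign with a private key.
    return hmac.new(key, canonical_bytes(passport), hashlib.sha256).hexdigest()


def verify(passport: ComputePassport, signature: str, key: bytes) -> bool:
    return hmac.compare_digest(sign(passport, key), signature)


key = b"demo-only-shared-secret"
passport = ComputePassport(
    verifier_id="verifier.example",
    vm_measurement="sha256:abc123",
    gpu_device_id="gpu-0001",
    gpu_firmware="fw-1.2.3",
    nonce="5f2c9a",
    issued_at=1757000000,
    policy_id="policy-v7",
    model_sha256=hashlib.sha256(b"model weights placeholder").hexdigest(),
)
signature = sign(passport, key)
tampered = replace(passport, gpu_firmware="fw-0.0.1")

print(verify(passport, signature, key))  # True
print(verify(tampered, signature, key))  # False
```

Because the model hash sits inside the signed bytes, changing either the artifact binding or any measurement invalidates the signature.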

Privacy preserving data deals that do not require trust falls

Before this wave, the standard advice for sensitive data was to build a clean room and appoint auditors. Today, you can structure a data deal so that the model owner never sees the raw data and the data owner never sees the raw model. The deal is enforced by cryptography, not a clause.

Here is a concrete template:

- The data owner encrypts a dataset and stores it in the cloud. The decryption key sits in a key manager that only releases keys to workloads presenting a valid compute passport.

- The model owner ships a container image with the model and logic to run a fixed evaluation or fine tune. The image hash is listed in the policy.

- The cloud launches a confidential GPU VM. At startup, it produces CPU and GPU attestations. The remote verifier issues a signed token.

- The relying party checks that the token matches the expected measurements and that revocation lists are clean. If everything matches, it asks the key manager to release the data key to that enclave for that session only.

- The job runs, produces an output artifact and a signed compute passport. Payment and release of results are conditioned on verifying the passport.

No one had to trust an operator promise. Everyone trusted a proof.
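The key manager's side of that template can be sketched as a small class. This is an assumption-heavy illustration rather than any real key management API: `KeyManager`, the token fields (`signature_verified`, `image_hash`, `revoked`, `session_id`), and the in-memory session set are all hypothetical.

```python
class KeyManager:
    """Hypothetical key manager that releases a data key once per session."""

    def __init__(self, data_key: bytes, allowed_image_hashes: set):
        self._data_key = data_key
        self._allowed = set(allowed_image_hashes)
        self._released_sessions = set()

    def release(self, token: dict) -> bytes:
        """Return the data key only for a verified, in-policy, new session."""
        if token.get("signature_verified") is not True:
            raise PermissionError("token signature not verified")
        if token.get("image_hash") not in self._allowed:
            raise PermissionError("image hash not listed in policy")
        # Require an explicit False so a missing claim fails closed.
        if token.get("revoked") is not False:
            raise PermissionError("revocation status missing or revoked")
        session = token.get("session_id")
        if not session or session in self._released_sessions:
            raise PermissionError("session missing or key already released")
        self._released_sessions.add(session)
        return self._data_key


manager = KeyManager(b"dataset-key", {"sha256:img-1"})
token = {
    "signature_verified": True,
    "image_hash": "sha256:img-1",
    "revoked": False,
    "session_id": "session-42",
}
print(manager.release(token))  # b'dataset-key'
```

A second call with the same `session_id` raises `PermissionError`, which is the "for that session only" clause expressed as code.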

Verifiable compliance instead of compliance theater

Most AI governance today reads like a stack of policy binders. They are useful but weak at the exact moment when it matters most, which is when the model touches sensitive inputs. Attested AI lets you turn regulatory clauses into runtime checks.

- Residency. A passport can bind the run to a specific region and cluster, satisfying data localization rules that are otherwise hard to prove.

- Role separation. Keys only release if the job ran in a mode with no administrative access, preventing sticky fingers from lifting prompts or weights.

- Lifecycle controls. Policies can require that the GPU driver and firmware are within a supported window, with no known critical vulnerabilities.

- Incident response. Because passports carry concrete measurements and timestamps, you can rapidly scope what ran where and when, without combing through a sea of opaque logs.

Regulators can ask for proof that a given cohort's data never left a boundary or that a particular model version only ran in approved conditions. You hand them a stack of compute passports and the policy that produced them.
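Scoping an incident from stored passports is mostly a filter. The sketch below assumes passports are kept as simple records with hypothetical `job_id`, `gpu_firmware`, and `issued_at` fields, and answers the question "which jobs ran on this firmware during this window?"

```python
from datetime import datetime, timezone


def runs_in_scope(passports, firmware, start, end):
    """Return sorted job IDs that ran on `firmware` within [start, end)."""
    return sorted(
        p["job_id"]
        for p in passports
        if p["gpu_firmware"] == firmware and start <= p["issued_at"] < end
    )


# Illustrative records; field names and values are assumptions.
passports = [
    {"job_id": "job-1", "gpu_firmware": "fw-1.2.3",
     "issued_at": datetime(2025, 9, 1, 12, tzinfo=timezone.utc)},
    {"job_id": "job-2", "gpu_firmware": "fw-1.2.4",
     "issued_at": datetime(2025, 9, 2, 12, tzinfo=timezone.utc)},
    {"job_id": "job-3", "gpu_firmware": "fw-1.2.3",
     "issued_at": datetime(2025, 10, 5, 12, tzinfo=timezone.utc)},
]
window_start = datetime(2025, 9, 1, tzinfo=timezone.utc)
window_end = datetime(2025, 10, 1, tzinfo=timezone.utc)

print(runs_in_scope(passports, "fw-1.2.3", window_start, window_end))
# ['job-1']
```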

The new platform moat: attestation networks

Attestation sounds like a feature, but it behaves like a network. Several roles form the fabric:

- Device vendors publish endorsements and revocation information for firmware and drivers.

- Cloud verifiers validate evidence and mint tokens under their keys.

- Token brokers and relying parties create policy and gate secrets based on the claims.

Whoever sits in the verifier seat accrues power. If your keys only open for tokens minted by specific verifiers, you implicitly centralize trust. Over time, this looks like certificate authorities for compute. There is a race on to become the default trust root for confidential GPU runs.

Expect moats to form around:

- Strong device coverage. Verifiers that understand many GPU generations and microcode quirks will be more useful.

- Token distribution. The easier it is to present tokens to services like key management, identity, or payment rails, the more valuable the verifier.

- Multi cloud portability. Developers will avoid lock in by accepting tokens minted by several verifiers and normalizing claims across vendors. This will become table stakes for large buyers.
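Normalizing claims across verifiers can be as simple as a per-issuer mapping into one internal schema. The sketch below is illustrative only: the issuer names, the vendor-side claim names, and the `normalize` helper are all invented, and a real token would have its signature checked against the issuer's key before any mapping happens.

```python
# Hypothetical per-verifier maps: vendor claim name -> internal claim name.
CLAIM_MAPS = {
    "verifier-a.example": {"gpu_fw": "gpu_firmware", "drv": "driver_version"},
    "verifier-b.example": {
        "firmwareVersion": "gpu_firmware",
        "driverVersion": "driver_version",
    },
}


def normalize(token: dict) -> dict:
    """Map a verifier-specific token onto the internal claim schema."""
    issuer = token.get("iss")
    mapping = CLAIM_MAPS.get(issuer)
    if mapping is None:
        raise ValueError(f"untrusted verifier: {issuer!r}")
    missing = [name for name in mapping if name not in token]
    if missing:
        raise ValueError(f"token is missing claims: {missing}")
    return {internal: token[vendor] for vendor, internal in mapping.items()}


token_a = {"iss": "verifier-a.example", "gpu_fw": "fw-1.2.3", "drv": "drv-550.1"}
token_b = {
    "iss": "verifier-b.example",
    "firmwareVersion": "fw-1.2.3",
    "driverVersion": "drv-550.1",
}

print(normalize(token_a) == normalize(token_b))  # True
```

The allow list of issuers in `CLAIM_MAPS` is also where the centralization described above becomes visible: each entry is a trust root you have chosen to accept.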

If you are building a platform, your attestation story is now part of your go to market, not just your hardening guide. For a broader view of how infrastructure advantages compound, see how [AI platform moat](/compute-eats-the-grid-power-becomes-the-ai-platform-moat) dynamics emerge when power and placement dominate.

What this does not solve

Attestation does not attest that your code is correct or your prompts are safe. It does not prevent a buggy model from making a mistake. It proves that the run happened inside a defined envelope. You must still:

- Pin container and model hashes so you know exactly what you executed.

- Scan supply chain inputs so the thing you measure is the thing you intended to ship.

- Treat passports like secrets. Tokens can be replayed if you do not use nonces and time bounds.

- Watch for side channels. TEEs lower risk; they do not erase physics. Follow vendor guidance for noisy neighbors and memory access patterns.

The good news is that these are tractable engineering chores, not unsolved mysteries.
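Hash pinning, the first chore on that list, is a good example of how small these tasks are. The sketch below uses only the Python standard library; the helper names `sha256_file` and `matches_pin` are made up for illustration, and the pinned digest is assumed to come from your own policy file.

```python
import hashlib
import hmac


def sha256_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large model artifacts fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_pin(path: str, pinned_hex: str) -> bool:
    """Compare the file's digest to a pinned hex digest, case-insensitively."""
    return hmac.compare_digest(sha256_file(path), pinned_hex.strip().lower())
```

A launcher would call `matches_pin` on the model file and refuse to start the job when it returns `False`.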

A builder playbook for adopting attested AI

If you need practical steps, start here.

- Choose your base TEE and GPU stack. Pick a confidential VM type that supports GPU device attestation on your target cloud. Read the vendor attestation docs and confirm the exact claims you can verify. For Google Cloud and NVIDIA, the Google Cloud GPU attestation guidance and the NVIDIA remote attestation service show the flow end to end.

- Define a human readable policy, then encode it. List acceptable driver and firmware versions, allowed regions, and the image hashes you will run. Turn that into a policy that your verifier enforces. Keep the policy file in version control alongside application code.

- Gate everything with passports. Make data and model keys conditional on a valid token. The simplest path is to integrate your key manager with an attestation check so secrets only decrypt inside a matching run.

- Stamp every job. At the end of each run, record the token and bind it to the output object. If you cannot show a passport, you cannot ship the artifact.

- Build change control around proofs. When you update drivers, firmware, or images, update the policy and cut a new allow list. Treat it like a schema migration. Roll forward with staged policies and verify that the old policy is formally retired.

- Test for failure modes. Expire a token early and verify your services fail closed. Rotate a driver to a revoked version in a sandbox and confirm that the key path stays locked. Simulate a replay attack and ensure your nonce and timestamp logic catches it.

- Plan for cross cloud runs. Normalize claims from different verifiers into a common format so you can move workloads without rewriting policy logic. Consider a broker that maps claims across vendors and clouds.

- Connect provenance to output. Bind the passport to content provenance tags so downstream consumers can verify origin. As content authenticity systems evolve, they will link back to the compute evidence that produced an output. For more on this convergence, see why content authenticity systems are becoming an API surface.
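The failure-mode tests in the playbook are easy to automate. The sketch below pairs a toy gate with the three checks named above: an expired token, a revoked driver, and a replayed nonce. The `gate` function, the token fields (`exp`, `driver_version`, `nonce`), and the revoked-driver list are all hypothetical stand-ins for whatever your real key path uses.

```python
import time

REVOKED_DRIVERS = {"drv-535.0"}  # illustrative revocation list
_seen_nonces = set()             # in-memory replay cache for the demo


def gate(token: dict, now: float = None) -> bool:
    """Return True only for an unexpired, unrevoked, never-seen token."""
    now = time.time() if now is None else now
    try:
        if now >= token["exp"]:
            return False  # expired
        if token["driver_version"] in REVOKED_DRIVERS:
            return False  # revoked driver
        if token["nonce"] in _seen_nonces:
            return False  # replay
    except (KeyError, TypeError):
        return False      # malformed token: fail closed
    _seen_nonces.add(token["nonce"])
    return True


token = {"exp": 2000.0, "driver_version": "drv-550.1", "nonce": "n-1"}
print(gate(token, now=1000.0))                         # True
print(gate(token, now=1000.0))                         # False: replay
print(gate(dict(token, nonce="n-2"), now=3000.0))      # False: expired
print(gate(dict(token, nonce="n-3", driver_version="drv-535.0"),
           now=1000.0))                                # False: revoked
print(gate({}, now=1000.0))                            # False: malformed
```

Passing `now` explicitly keeps the expiry test deterministic, so it can run in CI without sleeping or mocking the clock.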

How governance and procurement change

Procurement usually asks vendors for policies, audit letters, and penetration test summaries. With compute passports, buyers can ask for something sharper:

- Show that your inference path only unlocks with a valid GPU device attestation.

- Prove that your fine tuning jobs ran in a region locked TEE with no operator access.

- Provide a sample passport for a production job, along with the policy that accepted it.

Regulatory language will catch up too. Expect filings that attach attestation tokens alongside model documentation. Expect insurance underwriters to price coverage with discounts for attested runs. The oversight function will shift from paper checks to protocol checks, which aligns with the rise of AI market gatekeepers who assess practice rather than posture.

The next twelve months: what to watch

- Widening device support. Expect attestation coverage for new accelerator generations and more granular driver claims.

- Better developer ergonomics. SDKs that fetch evidence, verify tokens, and talk to key services will reduce boilerplate so teams can adopt without a platform rewrite.

- Cross verifier portability. Relying parties will push for mappable claims so a token minted in one cloud can be accepted in another with minimal friction.

- Tighter content provenance links. Expect downstream authenticity systems to reference compute passports so the origin of an output can be traced back to a verifiable run.

Each of these is incremental, but together they make the confidential runtime the default for workloads where privacy, IP protection, or regulatory control matter.

A final note for skeptics

There is a temptation to think of all this as ceremony. It is not. Anyone who has operated a regulated workload knows how much time is burned on controls that do not actually control. Attested AI replaces several pages of paperwork with a check that is either true or false. That is progress you can measure.

Conclusion: the proof snowball is rolling

This year’s rollouts did not merely add features. They reset the trust stack for AI. When your data and models are guarded by a policy that only cryptographic proof can satisfy, you ship faster and argue less. You close data deals that were previously impossible because the barrier was the lack of verifiable boundaries. And you turn governance into something a system can enforce instead of a binder that sits on a shelf.

Proof of compute is not a slogan. It is a habit. The sooner teams adopt compute passports and wire their secrets to unlock only for attested runs, the sooner AI can move from policy promises to proof that stands up in daylight.