The UI Becomes the API: Agents Learn to Click After Gemini 2.5

Google’s Gemini 2.5 Computer Use turns every pixel into a programmable surface. Agents can click, type, and scroll like people, collapsing the gap between UI and API. Here is how products, safety, and moats will change next.

Breaking: the interface is now programmable

On October 7, 2025, Google DeepMind announced the Gemini 2.5 Computer Use model, a system that lets agents operate software through the screen itself. Instead of calling a neat application programming interface, the model receives a screenshot, proposes a click or keystroke, executes the step in a browser, and loops until the job is done. Google reports internal production use and a developer preview. In practical terms, the user interface has become a programmable surface, as described in the official Gemini 2.5 Computer Use announcement.

For years, teams waited for universal APIs to unlock automation. Gemini 2.5 changes the calculus. If a person can complete a task with a mouse and keyboard, an agent can increasingly do the same, with supervision and policy.

From API walls to clickable streets

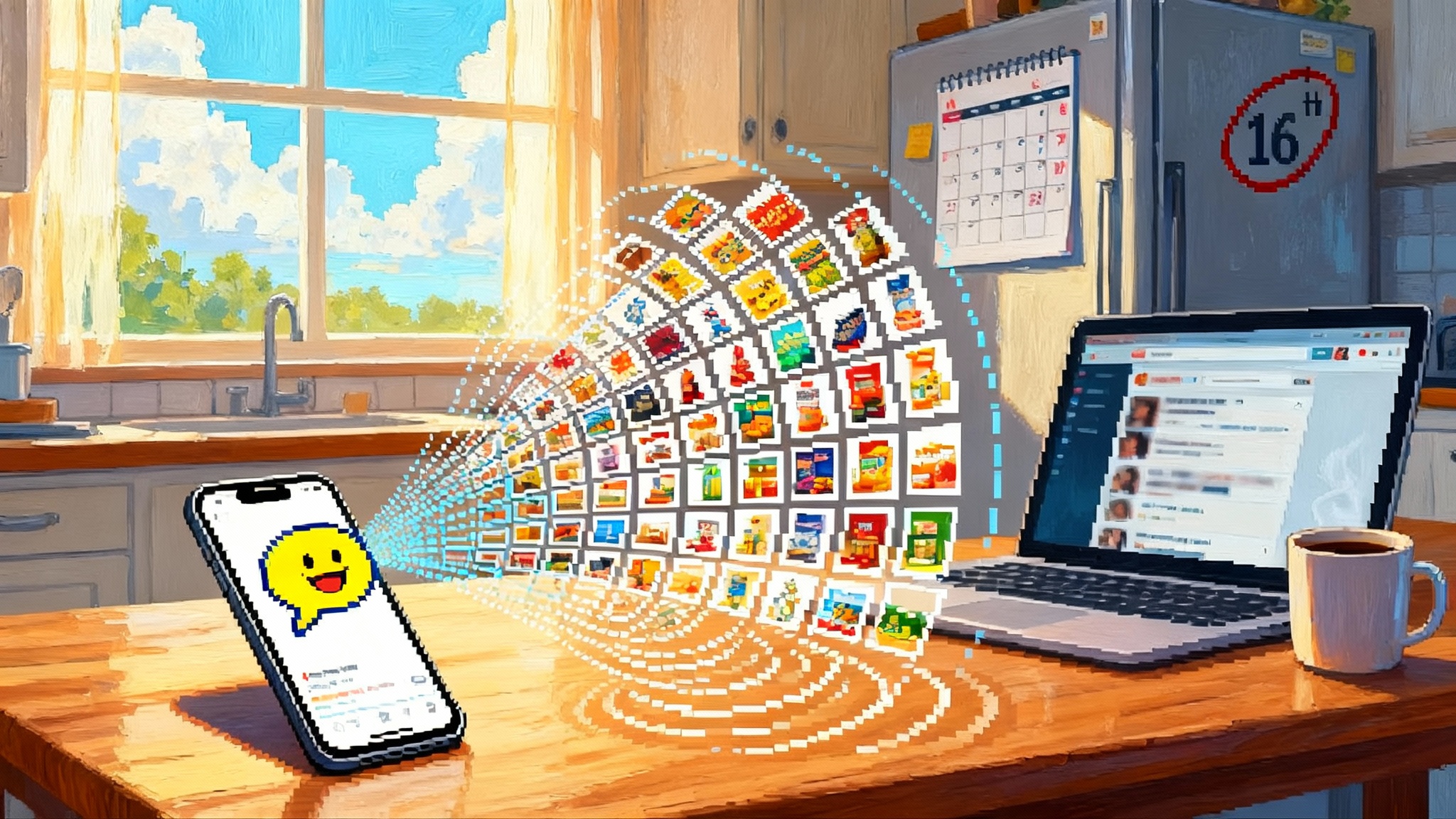

Software has long had two neighborhoods. On one side, the tidy avenues of API endpoints, where developers trade structured requests and responses. On the other, a bustling city of screens, forms, tables, and dialogs that only a person could navigate reliably. Companies built API moats around their data, and everyone else came in through the front door like a human user.

Agents that can click, type, scroll, and drag turn side streets into throughways. A claims specialist no longer needs a brittle scraper to move numbers from a portal into a core system. A researcher can direct an agent to extract citations from a journal site that never offered export. A customer team can reconcile billing across two legacy dashboards that never received integration funding. The agent sees what a person sees, acts as a person acts, and leaves behind an auditable trail that looks like a careful human session.

This does not kill APIs. It narrows the gap between human access and automated access. When an interface is built for people, it is now implicitly usable by machines that behave like people.

What Gemini 2.5 actually unlocks

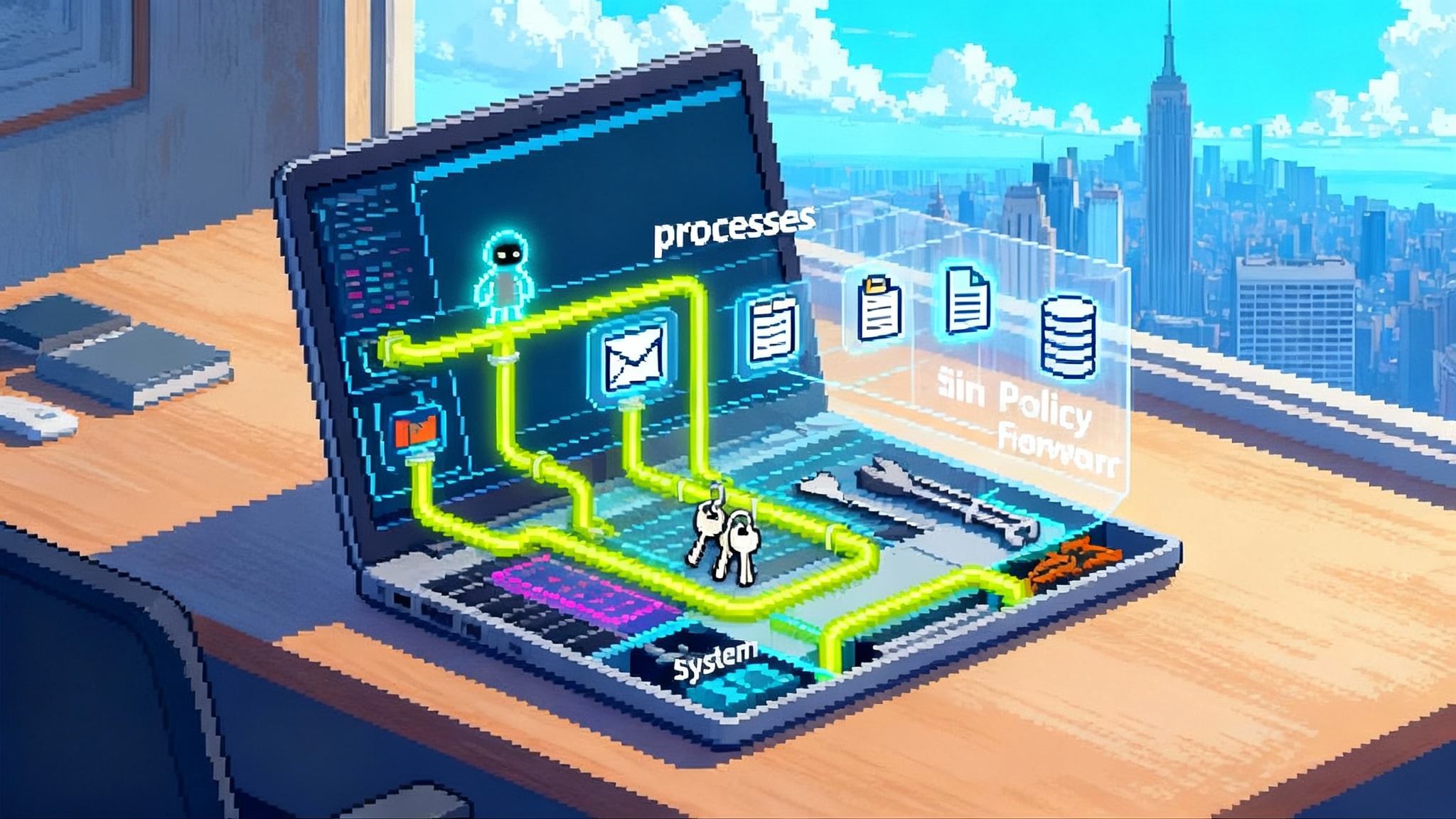

Under the hood, Computer Use exposes a set of predefined UI actions. Developers send a screenshot and a goal, the model proposes an action, a runner executes the action in a browser, then returns a fresh screenshot. In practice, that means:

- Agents can complete multi-step workflows behind logins that previously blocked pure scraping.

- They adapt when layouts shift slightly, because they reason over pixels and text rather than brittle selectors alone.

- They can be supervised at the level that matters: which element was clicked, which field was filled, which modal was dismissed.

It is still a preview, and it works best in a browser. It requires an execution harness that opens pages, captures screenshots, and applies clicks and keystrokes. Even so, the direction is unmistakable: operating a computer is becoming a first-class capability, not a parlor trick.
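The loop described above is small enough to sketch. What follows is a minimal illustration, not the Gemini API: `Action`, `Runner`, `run_agent`, `FakeRunner`, and `scripted_proposer` are hypothetical names, and the model is replaced by a scripted stand-in so the control flow can be run without a browser.

```python
from dataclasses import dataclass
from typing import Callable, List, Protocol


@dataclass(frozen=True)
class Action:
    """One proposed UI step. Field names are illustrative, not a vendor schema."""
    kind: str          # "click", "type", "scroll", or "done"
    x: int = 0
    y: int = 0
    text: str = ""


class Runner(Protocol):
    """Hypothetical execution harness that wraps a browser."""
    def screenshot(self) -> bytes: ...
    def execute(self, action: Action) -> None: ...


# A proposer maps (goal, screenshot, history) to the next action.
Proposer = Callable[[str, bytes, List[Action]], Action]


def run_agent(goal: str, propose: Proposer, runner: Runner,
              max_steps: int = 20) -> List[Action]:
    """Loop screenshot -> propose -> execute until the proposer says "done"."""
    history: List[Action] = []
    for _ in range(max_steps):
        shot = runner.screenshot()
        action = propose(goal, shot, history)
        if action.kind == "done":
            return history
        runner.execute(action)
        history.append(action)
    # A hard step cap keeps a confused agent from looping forever.
    raise RuntimeError(f"goal not reached within {max_steps} steps")


class FakeRunner:
    """In-memory stand-in for a browser, used only to exercise the loop."""
    def __init__(self) -> None:
        self.executed: List[Action] = []

    def screenshot(self) -> bytes:
        return f"frame-{len(self.executed)}".encode()

    def execute(self, action: Action) -> None:
        self.executed.append(action)


def scripted_proposer(goal: str, shot: bytes, history: List[Action]) -> Action:
    """Stand-in for the model: replays a fixed two-step script, then stops."""
    script = [Action("click", x=120, y=48), Action("type", text="Q3 report")]
    return script[len(history)] if len(history) < len(script) else Action("done")
```

Calling `run_agent("find the Q3 report", scripted_proposer, FakeRunner())` returns the two scripted actions in order. In a real harness the proposer would call a model and the runner would drive a sandboxed browser, but the step cap and the recorded history are worth keeping either way.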

Parallel moves confirm the pattern

Google is not alone in pushing agents toward the screen. Earlier this year, OpenAI described an agent loop that perceives pixels, plans steps, and acts with a virtual mouse and keyboard. Its write-up of the OpenAI Computer-Using Agent outlines the perceive-plan-act cycle and the safeguards around it. Anthropic and Microsoft have introduced computer use features inside sandboxed browsers. Robotic process automation veterans such as UiPath and Automation Anywhere are weaving modern language models into familiar click-to-automate flows. The pattern is converging: screenshots in, clicks out, with human confirmation for sensitive steps.

The cross-lab momentum matters because it signals a new interface contract for automation rather than a niche feature.

The boundary between user and automation collapses

When an agent behaves like a user, your sign in flow, your shopping cart, your settings panel, and your export menu function as de facto APIs. Every label, icon, tooltip, and tab name becomes part of an implicit contract with the agent.

Three shifts follow:

- Precision over polish. Copy crafted for tone must now be crafted for meaning. Button text that says Got it may delight a person, but an agent needs Confirm or Dismiss to avoid ambiguity.

- Stability over surprise. Delightful microinteractions that move targets around can confuse policies that verify safe agent behavior. Renaming tabs, relocating primary actions, or toggling layouts without semantic signals becomes an automation regression.

- Observability over opacity. When a person complains, support can ask for a screen recording. When an agent misfires, the product must log structured events: which region was visible, which element was clicked, which validation rule fired. Without that, you cannot debug at agent speed.
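The first shift, precision over polish, is the kind of rule a build step can check. Here is a rough sketch of a label linter built on Python's standard `html.parser`. The `AMBIGUOUS` set and the `lint_buttons` helper are illustrative assumptions, not an established standard, and a real check would cover more elements than `<button>`.

```python
from html.parser import HTMLParser
from typing import List, Optional

# Labels that read fine to a person but say little about the action (illustrative).
AMBIGUOUS = {"got it", "done", "ok"}


class ButtonLabelLinter(HTMLParser):
    """Flags <button> elements with a vague or missing accessible name."""

    def __init__(self) -> None:
        super().__init__()
        self.findings: List[str] = []
        self._in_button = False
        self._aria: Optional[str] = None
        self._text: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "button":
            self._in_button = True
            self._aria = dict(attrs).get("aria-label")
            self._text = []

    def handle_data(self, data):
        if self._in_button:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag != "button" or not self._in_button:
            return
        self._in_button = False
        # Prefer aria-label when present, otherwise fall back to visible text.
        label = (self._aria or "".join(self._text)).strip()
        if not label:
            self.findings.append("button has no accessible name")
        elif label.lower() in AMBIGUOUS:
            self.findings.append(f"ambiguous label: {label!r}")


def lint_buttons(html: str) -> List[str]:
    """Return one finding per problematic button, in document order."""
    linter = ButtonLabelLinter()
    linter.feed(html)
    linter.close()
    return linter.findings
```

Running `lint_buttons` over a page fragment with a Got it button, a Save changes button, and an icon-only button flags the first and third and passes the second.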

Agent-symmetric product design

An agent-symmetric interface treats humans and agents as first-class citizens. It does not turn your app into a developer console. It makes the UI readable, predictable, and governable by software and people.

A practical starter checklist:

- Use visible, stable text that maps to function. Prefer Save changes over Done.

- Expose semantic roles and accessible attributes. ARIA landmarks and labels help people who use assistive technology, and agents can read the same metadata.

- Avoid visually identical buttons with different outcomes. If one action is destructive and the other is safe, reflect the difference in color, label, and position.

- Provide explicit state on the page. If a filter is active, show it as a tag with text, not a subtle dot.

- Make critical actions idempotent or reversible. Agents that retry should not double charge a card.

- Offer a scoped consent dialog for automation. When an agent is detected, present narrow permissions and an expiring session token.
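The idempotency item in the checklist deserves a concrete shape, because retries are the norm for agents. One common approach is an idempotency key: the client sends a unique key with each logical request, and the server replays the stored result when it sees the key again. The sketch below is a toy in-memory version. `ChargeService` and its fields are hypothetical, and a production system would persist keys and expire them.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ChargeService:
    """Toy payment endpoint; names and shapes are hypothetical."""
    _results: Dict[str, dict] = field(default_factory=dict)
    charges_made: int = 0

    def charge(self, idempotency_key: str, card: str, cents: int) -> dict:
        # A replayed key returns the stored result instead of charging again.
        if idempotency_key in self._results:
            return self._results[idempotency_key]
        self.charges_made += 1
        result = {
            "charge_id": f"ch_{self.charges_made}",
            "card": card,
            "cents": cents,
        }
        self._results[idempotency_key] = result
        return result
```

If an agent submits the same checkout twice with the key `order-1042`, the second call returns the first charge and `charges_made` stays at 1. A new order with a new key is charged normally.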

If you are building orchestration layers, consider how this trend rhymes with the rise of protocol-centric control surfaces such as MCP. For a deeper dive on that idea, see how MCP turns AI into an OS layer.

Safety moves from prompts to interfaces

In the first wave of agent tools, safety lived primarily inside prompts and policy documents. That is necessary but not sufficient. Once agents operate UIs, safety shifts into interface governance. The app itself enforces what is allowed.

Treat terms of service like physics, not poetry. If your rules prohibit automated creation of unlimited accounts, the interface should rate limit signups per device or session, require validated contact information, and throttle repeated identical actions. The rule is not just text. It is enforced where the agent acts.

Practical controls include:

- Rate limits and action budgets bound to sessions, not only to user identifiers.

- Consentful clicks that require explicit confirmation for high-risk steps such as money movement or data deletion.

- Human verification puzzles used sparingly at risk boundaries rather than everywhere.

- Pixel-anchored attestations. When an agent submits a transaction, the client can package a signed summary of the visible region and the action taken.

- Continuous authentication on sensitive screens, such as a biometric check before a wire transfer even if the session is still warm.

These are the same defensive patterns mature teams already use for fraud and abuse, adapted to a world where many legitimate sessions will be agent-driven.
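As one illustration of the first control, here is a minimal sliding-window action budget keyed by session rather than by user. `SessionActionBudget` is a hypothetical name, the limits are placeholders, and the caller passes in the clock value so the behavior is easy to test.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class SessionActionBudget:
    """Allows at most `max_actions` per session within a sliding time window."""

    def __init__(self, max_actions: int, window_seconds: float) -> None:
        self.max_actions = max_actions
        self.window_seconds = window_seconds
        self._events: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, session_id: str, now: float) -> bool:
        events = self._events[session_id]
        # Drop timestamps that have aged out of the window.
        while events and now - events[0] >= self.window_seconds:
            events.popleft()
        if len(events) >= self.max_actions:
            return False
        events.append(now)
        return True
```

With a budget of two actions per ten seconds, a session's third action inside the window is refused while a different session is unaffected. Once the oldest action ages out, the first session can act again.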

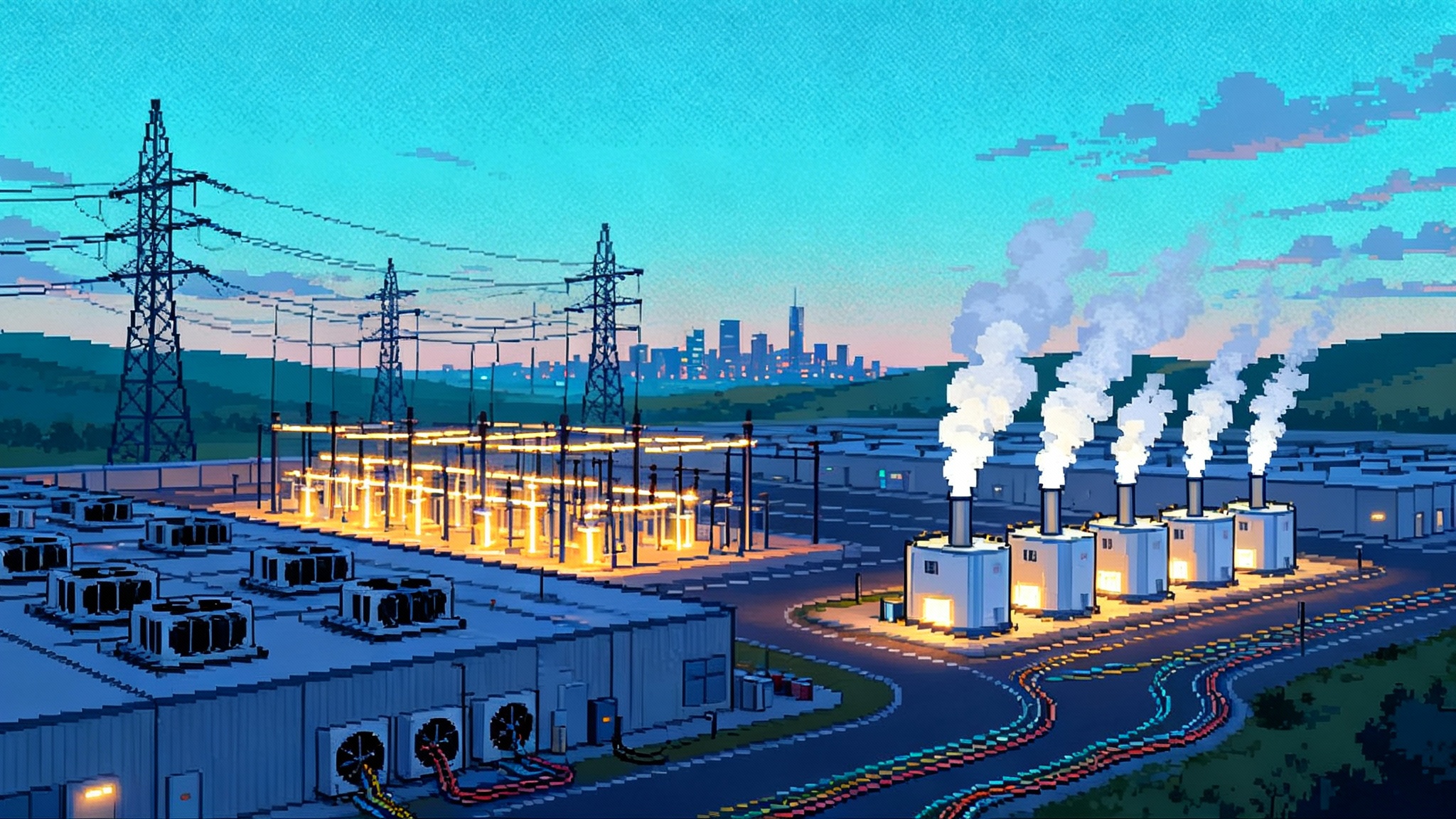

The near-term stack that will emerge

Expect three layers to congeal around UI-native agents:

- Agent-readable UX standards. A lightweight schema that rides in the DOM and native components to label primary actions, risk levels, identity scope, and confirmation requirements. It should be incremental, lintable, and backward compatible with accessibility.

- Transaction attestations. When an agent executes an action, the runner packages the goal text, a screenshot hash, the element identifier, and a signed statement of intent. Servers verify the attestation before honoring irreversible changes. That yields clean audit trails and defenses against replay or spoofing.

- Mediation layers. Between the model and the app lives a broker that translates goals into clicks, enforces policy, and asks for human approval at the right moments. It caches routines such as "file an expense from a receipt," with safeguards baked in. Over time, these mediators will look like operating systems for agent work.

This does not require a new universal protocol. These are pragmatic extensions to existing interfaces, which is why this shift will move faster than prior interoperability pushes.
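To make the attestation layer concrete, here is a hedged sketch of what a runner might sign and a server might verify. No attestation format exists yet, so everything here is an assumption: the field names, the `sign_attestation` and `verify_attestation` helpers, and the use of an HMAC over canonical JSON with a shared secret. A real deployment might prefer asymmetric signatures so the server never holds the signing key.

```python
import hashlib
import hmac
import json
from typing import Set


def _canonical(payload: dict) -> bytes:
    """Serialize deterministically so signer and verifier hash the same bytes."""
    return json.dumps(payload, sort_keys=True, separators=(",", ":")).encode()


def sign_attestation(key: bytes, goal: str, screenshot: bytes,
                     element_id: str, nonce: str) -> dict:
    """Package goal text, screenshot hash, and element id, then sign them."""
    payload = {
        "goal": goal,
        "screenshot_sha256": hashlib.sha256(screenshot).hexdigest(),
        "element_id": element_id,
        "nonce": nonce,  # unique per action; lets the server reject replays
    }
    signature = hmac.new(key, _canonical(payload), hashlib.sha256).hexdigest()
    return {"payload": payload, "signature": signature}


def verify_attestation(key: bytes, attestation: dict, seen_nonces: Set[str]) -> bool:
    """Accept only an untampered payload whose nonce has not been used before."""
    payload = attestation["payload"]
    expected = hmac.new(key, _canonical(payload), hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how much of the signature matched.
    if not hmac.compare_digest(expected, attestation["signature"]):
        return False
    if payload["nonce"] in seen_nonces:
        return False
    seen_nonces.add(payload["nonce"])
    return True
```

A valid attestation verifies once. Submitting it a second time fails the nonce check, and changing the goal text after signing fails the signature check. Those two failures correspond to the replay and spoofing defenses mentioned above.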

What teams should do now

Cross-functional execution beats waiting for a standard. Here is a focused plan by role:

- Product management: pick three high-value flows that customers regularly perform by hand. Define success as an agent completing each flow through the screen with no custom API. Measure time to completion and failure modes, then publish an automation readiness score.

- Design and research: run moderated agent sessions just like human usability tests. Instrument cursor heatmaps and time to first click on primary actions. Replace ambiguous labels, reduce cluttered panels, and increase visible state.

- Platform engineering: provide a hosted, sandboxed browser with logging and replay. Expose a simple internal runner that any team can call with a goal, a URL, and a policy. Capture per-action telemetry and store short-lived video snippets to aid debugging.

- Security and compliance: move from blanket automation bans to scoped permissions. Introduce allow lists, session-level secrets, and action rate ceilings. Add attestation checks on money movement, credential changes, and data exports. Update your terms with specific, testable behaviors that your app enforces.

- Legal and risk: define what constitutes consent for agent-driven actions. When a user authorizes an assistant to act for them, record the delegation and tie it to the attestation payload. Clarify liabilities across the user, assistant provider, and the app.

- Customer operations: build a playbook for agent incidents. If an agent loops on a form error or locks itself out, support should revoke the agent session token and unblock the affected account with a standard recovery flow.
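The policy that platform and security teams hand to a runner can start very small. The sketch below assumes a hypothetical `Policy` with a host allow list and a set of action names that always need human confirmation. The action names and the `evaluate` helper are illustrative only.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet
from urllib.parse import urlsplit


class Decision(Enum):
    ALLOW = "allow"
    CONFIRM = "confirm"  # pause and ask a human before proceeding
    DENY = "deny"


@dataclass(frozen=True)
class Policy:
    """Scoped permissions for one agent session (hypothetical shape)."""
    allowed_hosts: FrozenSet[str]
    confirm_actions: FrozenSet[str]


def evaluate(policy: Policy, url: str, action_name: str) -> Decision:
    """Deny off-list hosts, require confirmation for sensitive actions."""
    host = urlsplit(url).hostname or ""
    if host not in policy.allowed_hosts:
        return Decision.DENY
    if action_name in policy.confirm_actions:
        return Decision.CONFIRM
    return Decision.ALLOW
```

With `billing.example.com` on the allow list and `transfer_funds` marked for confirmation, opening an invoice is allowed, a transfer pauses for approval, and any action on an unlisted host is denied outright. Because the host check runs first, an off-list host is denied even when the action would otherwise only need confirmation.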

If you are turning playbooks into durable capabilities, connect this work to how playbooks become enterprise memory. The combination of reliable routines and agent readable UIs shortens the path from intent to outcome.

The long tail gets unlocked

The excitement is not only about flagship apps. It is about the long tail of tools that never prioritized integrations. School portals, county record systems, boutique SaaS, and internal dashboards become automatable with modest design improvements and a mediation layer. Benefits compound quickly. Teams gain better testability, more reliable operations, and new growth where customers bring their own assistants.

There will be rough edges. Agents will misread icons, fail on infinite scrolls, and get tripped by odd dialog timing. That is why the shift to interface governance matters. It gives us controls that do not rely on peeking inside a model. We set guardrails at the one boundary we fully own, the screen.

Benchmarks, limits, and honest expectations

Early results point to improved performance on web and mobile control tasks, and the available action set already covers everyday work, from navigation and scrolling to form entry and drag interactions. Latency and reliability still vary by task complexity and network conditions. Browser-centric approaches remain more robust than full operating system control, which is why hosted runners and sandboxed environments are the default place to start.

Treat previews as previews. Keep agents away from irreversible operations without human confirmation. Isolate credentials. Expect to iterate on strategies that translate high level goals into compact, verifiable steps. If you measure success by cycle time and error rate rather than demo magic, you will ship improvements without taking on hidden risk.

A note on business models and moats

If your moat relied on the absence of an API, plan to rebuild it. Defensibility will shift toward quality, brand, community, and the friction a competitor must bear to deliver the same outcome with an agent. The lowest friction wins. That reality pushes vendors to embrace agent-symmetric design, publish clear policies that agents can follow, and offer first-party mediation layers that make automation safe and reliable.

Vendors that lean in will gain distribution. If your app is easy for agents to operate, assistants will recommend it more often, workflows will include it more naturally, and customers will treat it as the default surface for a task. On the governance side, expect buyers to scrutinize evidence and attestations of safe automation. For how oversight evolves as markets mature, see the lens on new AI market gatekeepers.

The next twelve months

By this time next year, expect to see:

- A draft standard for agent-readable UI roles that travels with accessibility metadata. Nothing ornate, just enough to tag primary actions, risk levels, and required confirmations.

- Widely adopted transaction attestations for money movement and identity changes. Formats will start vendor-specific, then converge.

- Enterprise policy packs for agent governance that combine allow lists, rate limits, and screen-level approvals. Procurement will add these to security questionnaires.

- Marketplace listings where apps advertise automation readiness grades and supported agent patterns, similar to how they advertise single sign-on or data residency today.

Conclusion: click to accelerate

We spent a decade waiting for perfect APIs to make software programmable. The reality is simpler. The screen is the universal protocol. Gemini 2.5 puts that protocol within reach of any developer who can host a browser and follow a loop. Other labs show the same path with their own guardrails.

The strategic choice is between resisting and redesigning. Resisting may buy time, then fail when a competitor ships a safer, faster mediated path. Redesigning takes work, then compounds into better products and happier customers. Make the UI readable to agents and people. Move safety into the interface where it can be measured. Add attestations so actions are traceable. Ship a mediation layer so humans stay in control.

Do this, and you do not need new protocols or sweeping alliances. You can unlock the long tail of software next quarter by inviting agents to click like the rest of us. The UI has become the API. Treat it that way and the future of automation arrives on your screen.