Protocol Eats the App: MCP Turns AI Into an OS Layer

Two quiet moves changed the AI roadmap. Anthropic connected Claude to Microsoft 365 over MCP, and Google brought MCP to Gemini. As agents shift from apps to protocols, MCP turns AI into an operating layer where policy and safety are code.

The week protocols ate the app

Two announcements, separated by months but aligned in intent, redrew the AI roadmap. On October 16, 2025, Anthropic unveiled a Microsoft 365 connector for Claude that runs over the Model Context Protocol. In practical terms, Claude can now work across Outlook, SharePoint, Teams, and OneDrive through a standards-based bridge rather than product-specific glue code. The move stated a simple product truth: the protocol is the product. Anthropic made that case plainly in its announcement of the Microsoft 365 connector.

Back in May 2025 at Google I/O, Google added support for Model Context Protocol tools in the Gemini API and SDKs. This placed one of the largest AI platforms on the same wire format that Anthropic champions. When two rivals agree on a pipe, the pipe starts to look like the platform. Google framed MCP as part of a broader push for agent ecosystems in Gemini, as noted in its Google I/O recap on MCP compatibility.

If 2023 and 2024 were the era of model worship, late 2025 is the moment we begin optimizing the interface between models and the world. The app is no longer the center. The protocol is.

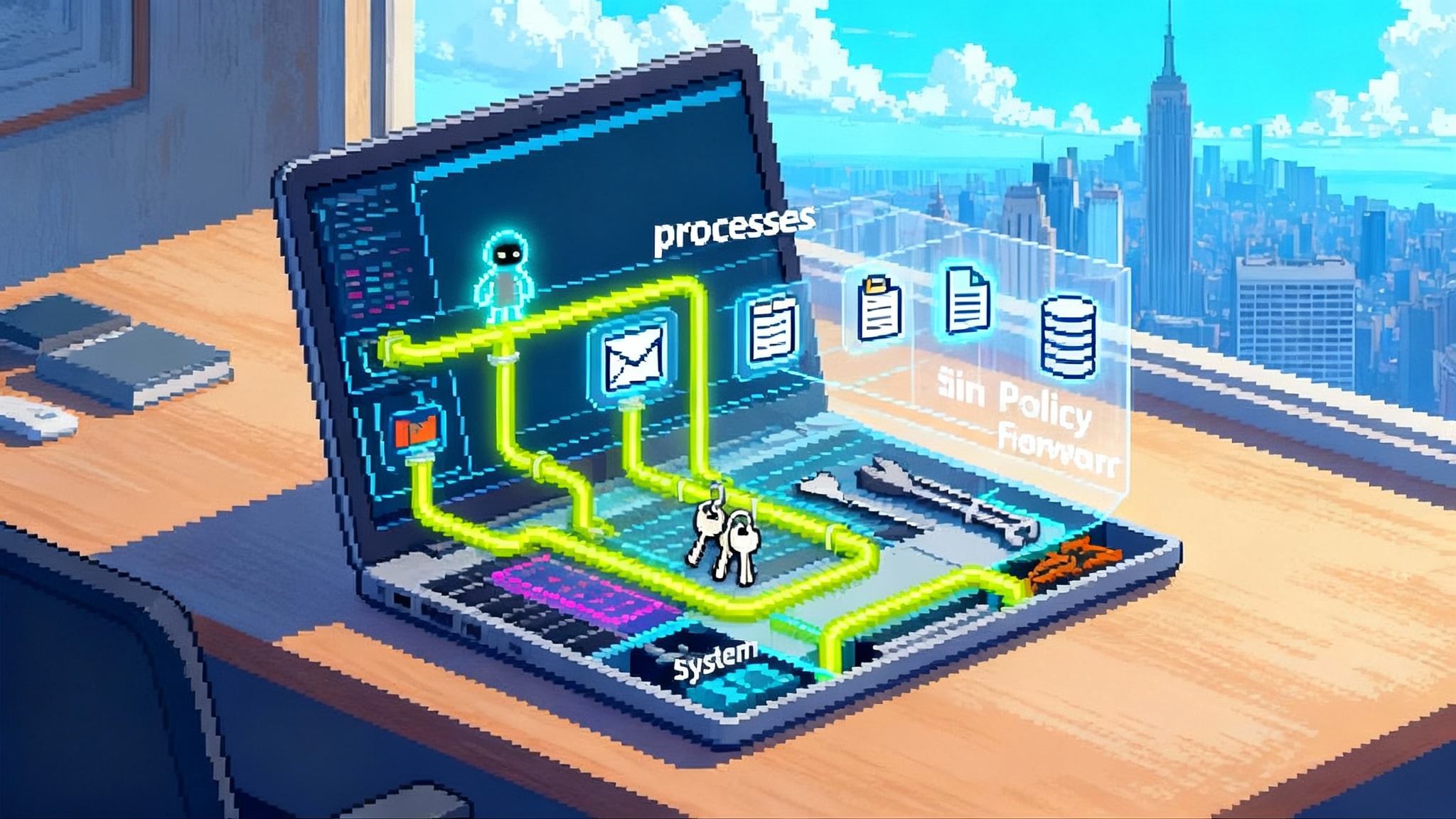

What MCP is, in one picture

Think of an operating system. Processes run code. When they need the outside world, they make system calls. The operating system checks permissions, performs the action, and returns a result. Model Context Protocol makes agents feel like processes and makes external tools feel like system calls.

- The agent is a process with memory, a goal, and a thread of execution.

- A tool is a system call with a typed capability such as read_email, query_docs, schedule_event, or file_search.

- The protocol is the kernel interface that governs discovery, authentication, calling, streaming, and results.

With MCP, you do not hardcode one-off integrations inside your app. You describe capabilities behind a stable contract and let compliant agents discover and call them. It is the difference between plugging a toaster into a wall outlet and soldering its wires directly into the electrical panel.
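To make the system-call analogy concrete, here is a minimal sketch of one tool contract and a dispatcher for it. It borrows the shape of MCP's JSON-RPC 2.0 messages (a `tools/list` request for discovery, a `tools/call` request for invocation, and tools described by `name`, `description`, and a JSON Schema `inputSchema`), but it is not the official MCP SDK: transport, session initialization, authentication, and full schema validation are omitted, and the `read_email` tool with its in-memory mailbox is hypothetical.

```python
import json

# Hypothetical in-memory data standing in for a real mailbox backend.
MAILBOX = [
    {"id": 1, "subject": "Q3 forecast", "from": "cfo@example.com"},
    {"id": 2, "subject": "Offsite agenda", "from": "ops@example.com"},
]

def read_email(limit: int) -> list:
    """Return up to `limit` messages (subjects and senders only)."""
    return MAILBOX[:limit]

# The stable contract: a name, a description, and a JSON Schema for the inputs.
TOOLS: dict = {
    "read_email": {
        "description": "Read recent email subjects and senders.",
        "inputSchema": {
            "type": "object",
            "properties": {"limit": {"type": "integer", "minimum": 1}},
            "required": ["limit"],
        },
        "handler": read_email,  # server-side only, never published to agents
    }
}

def handle(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 request and return the serialized response."""
    req = json.loads(raw)
    req_id, method, params = req.get("id"), req.get("method"), req.get("params", {})
    if method == "tools/list":
        # Discovery: publish each contract, but never the handler behind it.
        tools = [{"name": n, "description": t["description"], "inputSchema": t["inputSchema"]}
                 for n, t in TOOLS.items()]
        return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": {"tools": tools}})
    if method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        args = params.get("arguments", {})
        # Only the schema's required-field check is enforced in this sketch.
        if tool is None or any(k not in args for k in tool["inputSchema"]["required"]):
            err = {"code": -32602, "message": "unknown tool or missing arguments"}
            return json.dumps({"jsonrpc": "2.0", "id": req_id, "error": err})
        output = tool["handler"](**args)
        result = {"content": [{"type": "text", "text": json.dumps(output)}], "isError": False}
        return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})
    err = {"code": -32601, "message": f"method not found: {method}"}
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "error": err})
```

The agent sees only the published contract, never the handler, so the mailbox backend can be replaced without touching any agent that calls it.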

Intelligence as negotiation

A productive way to reason about agentic systems is to treat intelligence as negotiation. Goals, constraints, capabilities, and risk all meet at a boundary. Protocols make that negotiation explicit and testable.

- Capability offer: a tool declares what it can do, the inputs it requires, the outputs it returns, and the potential cost or side effects.

- Request proposal: an agent proposes a call with specific parameters, context, and justification.

- Gatekeeping check: a policy engine or human verifies that the request is allowed for this identity with this purpose right now.

- Execution and receipt: the tool executes and returns results along with a receipt that can be logged, audited, and revoked if needed.

In a world of implicit integrations, this negotiation is hidden in bespoke code. In a world of protocols, it is out in the open. That is how you scale capability and responsibility without rewriting everything for each vendor.
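The four steps map naturally onto a few data types and one gate function. The sketch below assumes hypothetical names (`CapabilityOffer`, `Proposal`, `Receipt`, `gate`, `execute`) and uses a simple allowlist of (identity, tool) pairs where a real deployment would call a policy engine; calls with side effects additionally require a named human approver.

```python
import hashlib
import json
import time
from dataclasses import dataclass, field
from typing import Callable, Optional

@dataclass(frozen=True)
class CapabilityOffer:
    """Step 1: what a tool declares about itself."""
    name: str
    inputs: tuple
    side_effects: bool   # True if the call changes state in the world

@dataclass
class Proposal:
    """Step 2: the call an agent proposes, with its reason."""
    identity: str
    tool: str
    params: dict
    justification: str
    approved_by: Optional[str] = None   # human sign-off, if any

@dataclass
class Receipt:
    """Step 4: a loggable record binding the call to its output."""
    proposal: Proposal
    output: object
    digest: str
    issued_at: float = field(default_factory=time.time)

def gate(offer: CapabilityOffer, p: Proposal, allowed: set) -> bool:
    """Step 3: may this identity make this call, with these inputs, for a stated reason?"""
    return (
        p.tool == offer.name
        and (p.identity, offer.name) in allowed
        and set(p.params) == set(offer.inputs)
        and bool(p.justification.strip())
        and (not offer.side_effects or p.approved_by is not None)
    )

def execute(offer: CapabilityOffer, p: Proposal, allowed: set, handler: Callable) -> Receipt:
    if not gate(offer, p, allowed):
        raise PermissionError(f"{p.identity} denied {p.tool}")
    output = handler(**p.params)
    # Hash the call and its output together so the receipt can be audited later.
    record = {"identity": p.identity, "tool": p.tool, "params": p.params, "output": output}
    digest = hashlib.sha256(json.dumps(record, sort_keys=True).encode()).hexdigest()
    return Receipt(p, output, digest)
```

Because the gate is a pure function of the offer, the proposal, and the allowlist, it can be unit-tested independently of any model.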

Consent and identity as capability tokens

Legacy permissions revolve around the app. You grant a monolithic application access to your data and hope it behaves. Protocolized agents flip the model. You grant capability tokens to identities and roles, and those tokens unlock specific system calls. It looks like capability-based security from operating systems, applied to tools that speak a shared language.

- Identity carries the minimum set of capabilities needed to act. A sales agent identity might hold tokens for read_email_subjects, read_calendar_slots, and draft_email but not send_email.

- Consent is granular and time bound. You can issue a token that expires after one hour or after a single use.

- Delegation is explicit. A manager can delegate a limited capability token to a project agent for a week.

- Revocation is deterministic. You can claw back tokens and instantly reduce blast radius.
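The four properties above can be sketched with an in-process token service. Everything here is an assumption for illustration: `TokenService`, its methods, and the capability names are hypothetical, MCP does not define this API, and a real service would persist tokens and look them up by id rather than trusting the object a caller passes in. Delegation can only narrow a token's scopes and lifetime, and revoking a parent token also invalidates everything delegated from it.

```python
import secrets
import time
from dataclasses import dataclass
from typing import Optional

@dataclass
class Token:
    token_id: str
    holder: str
    scopes: frozenset
    expires_at: float
    uses_left: Optional[int]        # None: unlimited uses until expiry
    parent: Optional[str] = None    # id of the token this was delegated from

class TokenService:
    def __init__(self) -> None:
        self.tokens: dict = {}
        self.revoked: set = set()

    def issue(self, holder: str, scopes: set, ttl_s: float,
              uses: Optional[int] = None, parent: Optional[str] = None) -> Token:
        tok = Token(secrets.token_hex(8), holder, frozenset(scopes),
                    time.time() + ttl_s, uses, parent)
        self.tokens[tok.token_id] = tok
        return tok

    def delegate(self, parent: Token, holder: str, scopes: set, ttl_s: float,
                 uses: Optional[int] = None) -> Token:
        # Attenuation: a delegated token never exceeds its parent's scopes or lifetime.
        if not set(scopes) <= parent.scopes:
            raise PermissionError("cannot delegate scopes the parent does not hold")
        ttl_s = min(ttl_s, parent.expires_at - time.time())
        return self.issue(holder, scopes, ttl_s, uses, parent=parent.token_id)

    def revoke(self, token: Token) -> None:
        self.revoked.add(token.token_id)

    def _revoked_in_chain(self, tok: Optional[Token]) -> bool:
        # A token is dead if it, or any token it was delegated from, is revoked.
        while tok is not None:
            if tok.token_id in self.revoked:
                return True
            tok = self.tokens.get(tok.parent) if tok.parent else None
        return False

    def check(self, token: Token, holder: str, capability: str) -> bool:
        ok = (
            token.holder == holder
            and capability in token.scopes
            and time.time() < token.expires_at
            and (token.uses_left is None or token.uses_left > 0)
            and not self._revoked_in_chain(token)
        )
        if ok and token.uses_left is not None:
            token.uses_left -= 1        # consume one use of a limited token
        return ok
```

For example, a manager holding `send_email` can delegate only `read_calendar_slots` and `draft_email` to a project agent for a week; revoking the manager's token then cuts off the agent as well, which is the blast-radius reduction described above.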

This design suits the enterprise reality where compliance teams care less about which model you used and more about who did what, when, with which authority. It also connects to a broader shift in organizations where skills become enterprise memory and policy is encoded directly in the systems that do the work.

Why alignment on MCP matters

A protocol gains power from convergent adoption. Anthropic has made MCP the spine for Claude’s tooling and connectors. Google chose to expose MCP tool definitions in the Gemini API and SDKs. That means a tool vendor can write one MCP server and reach both ecosystems without bespoke adapters. It also means buyers can experiment with multiple models while keeping a stable interface to their data and actions.

This is not altruism. It is supply and demand. Tool builders want one contract to reach many agents. Platform owners want many tools to make their agents useful. Customers want portability and control. MCP provides a workable least common denominator today.

The center of gravity moves from apps to protocols

Here is the practical impact of protocolization.

- Switching costs drop. If your tool surface is defined in MCP, you can test two agents on the same stack without a rewrite. The model becomes a replaceable part rather than the hub.

- Distribution flips. Tools that speak the protocol can be discovered and used by many agents. Adoption is no longer hostage to one storefront.

- Safety becomes programmable. You can define policy at the call boundary rather than scattering it across many app integrations.

- Procurement gets simpler. You can evaluate an agent by pointing it at a staging MCP server and seeing whether it respects capability boundaries.

We have seen this movie before. The open web beat proprietary portals by standardizing on HTTP and HTML. Payments became portable when networks aligned on a few rails and tokenization standards. In each case, protocols shifted power away from app silos toward a shared substrate, where competition moved to quality, latency, security, and price.

Safety that compiles

AI safety is often framed as vibes. Protocolization makes it code. When every action is a typed call with explicit scope, you can enforce and audit safety in concrete ways.

- Preconditions: deny or downscope requests that do not include required context, purpose strings, or human approval.

- Rate and budget controls: cap risky calls per identity per hour, or against a fixed budget per session.

- Structured red teaming: replay recorded call sequences against updated policies to find gaps.

- Principle of least privilege: provision identities with the smallest token set required for the task.

- Verifiable receipts: bind outputs to inputs with signed receipts that downstream systems must verify before acting further.
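Preconditions, budget controls, and replay-based red teaming fit in a few dozen lines once every call is typed. The sketch below is illustrative only: the `Call` record, the `HIGH_RISK` set, and the two policy versions are hypothetical. It evaluates each call against a purpose-string precondition, a human-approval precondition for high-risk tools, and a per-identity hourly cap, then replays a recorded log under an old and a new policy to list every decision that changed.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Optional

HIGH_RISK = {"send_email", "delete_file"}   # hypothetical risk classification

@dataclass(frozen=True)
class Call:
    identity: str
    tool: str
    purpose: str                  # purpose string supplied with the request
    approved_by: Optional[str]    # human approver, if any
    hour: int                     # hour bucket used for rate limiting

Policy = Callable[[list], list]

def make_policy(max_risky_per_hour: int, require_approval: bool) -> Policy:
    """Build a policy that returns one decision per call, in order."""
    def evaluate(calls: list) -> list:
        admitted = Counter()      # (identity, hour) -> high-risk calls allowed so far
        decisions = []
        for c in calls:
            risky = c.tool in HIGH_RISK
            if not c.purpose.strip():
                decisions.append("deny:no_purpose")       # precondition
            elif risky and require_approval and c.approved_by is None:
                decisions.append("deny:needs_approval")   # precondition
            elif risky and admitted[(c.identity, c.hour)] >= max_risky_per_hour:
                decisions.append("deny:rate_cap")         # budget control
            else:
                if risky:
                    admitted[(c.identity, c.hour)] += 1
                decisions.append("allow")
        return decisions
    return evaluate

def replay_diff(log: list, old: Policy, new: Policy) -> list:
    """Replay a recorded call log under two policies; return every decision that changed."""
    return [(c, a, b) for c, a, b in zip(log, old(log), new(log)) if a != b]

# A recorded session, replayed against a tightened policy.
log = [
    Call("sales-agent", "read_email", "triage inbox", None, hour=9),
    Call("sales-agent", "send_email", "follow up with lead", None, hour=9),
    Call("sales-agent", "send_email", "confirm meeting", "manager", hour=9),
]
policy_v1 = make_policy(max_risky_per_hour=5, require_approval=False)
policy_v2 = make_policy(max_risky_per_hour=1, require_approval=True)
for call, before, after in replay_diff(log, policy_v1, policy_v2):
    print(call.tool, before, "->", after)   # prints: send_email allow -> deny:needs_approval
```

Replaying recorded traces this way makes a policy change behave like a regression test: the diff shows exactly which past calls the new rule would have blocked or admitted.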

This is safety with mechanisms. It complements model-level safeguards and content filters by governing the boundary where the model touches the world. For readers tracking the maturation of AI risk disciplines, the move resembles the shift toward an aviation-style safety era with shared incident patterns and testable controls.

Developer leverage over the next 12 months

Expect four changes by the end of 2026 that materially expand developer leverage.

- One adapter, many agents: Building an MCP server becomes the default way to expose a business capability to agents. A single implementation can serve Gemini, Claude, and other platforms that adopt the contract.

- Policy as code for AI actions: Teams standardize on policy engines that evaluate MCP requests with data-aware rules. Allowlists, purpose strings, and risk scores become first-class conditions in call admission.

- Tool marketplaces measured by receipts: Instead of app stores measured by downloads, we will see catalogs measured by successful, safe MCP calls, with pricing attached to call classes and outcomes.

- Portable agent profiles: Agent identities and their capability bundles will travel across vendors. A developer will package a task graph and a token manifest and run it on multiple model backends with minimal rework.

For builders, this dovetails with a simple operating principle: invest in stable contracts and policy, and spend less time on custom integrations. The cost curve will favor teams that can swap models without touching the tool layer, and organizations that turn process into reusable skills that serve as enterprise memory.

The stack that emerges

As MCP adoption spreads, expect the emergence of a common runtime stack. It will look less like a single product and more like a set of opinionated layers.

- Capability registry: a discovery index where MCP servers publish contracts and where agents subscribe by domain or risk profile.

- Policy firewall: a gate that validates every call, enriches context, and enforces budgets, approvals, and audit trails.

- Routing broker: a mediator that sends calls to the best model or tool instance based on latency, cost, or compliance rules.

- Receipt ledger: a tamper-evident store for call receipts that other systems can verify before acting.

- Observability: traces, redaction, and replay focused on tool effect and data movement, not only prompts and tokens.
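The routing broker is the easiest of these layers to sketch. Assuming each backend advertises a region, a cost per call, and a recent p95 latency (all hypothetical fields, names, and numbers), the broker first filters on compliance rules and a latency budget, which are hard constraints, and only then ranks the survivors on a weighted score of cost and latency, which are preferences. The default weights are arbitrary.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Backend:
    name: str
    region: str             # where the backend processes data
    cost_per_call: float    # illustrative units
    p95_latency_ms: float   # recent 95th-percentile latency

def route(backends: list, allowed_regions: set, latency_budget_ms: float,
          cost_weight: float = 1.0, latency_weight: float = 0.01) -> Optional[Backend]:
    """Filter on hard constraints first, then pick the lowest weighted score."""
    eligible = [
        b for b in backends
        if b.region in allowed_regions and b.p95_latency_ms <= latency_budget_ms
    ]
    if not eligible:
        return None  # fail closed: no compliant route means no call
    return min(eligible,
               key=lambda b: cost_weight * b.cost_per_call + latency_weight * b.p95_latency_ms)

# Hypothetical backends and numbers, for illustration only.
BACKENDS = [
    Backend("model-a-us", "us", cost_per_call=0.020, p95_latency_ms=900),
    Backend("model-b-eu", "eu", cost_per_call=0.015, p95_latency_ms=1200),
    Backend("model-c-eu", "eu", cost_per_call=0.030, p95_latency_ms=400),
]

print(route(BACKENDS, {"eu"}, latency_budget_ms=1500).name)  # model-c-eu with the default weights
```

Filtering before scoring keeps compliance rules from being traded away for a cheaper or faster route, and returning `None` instead of a best-effort fallback makes the broker fail closed.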

Vendors will compete for slices of this stack. Cloud providers will pitch managed registries and firewalls. Security companies will sell policy as code tuned for MCP. Dev toolchains will integrate receipt-aware testing. The winners will not be those with the largest model alone. They will be the ones who make the protocol economy safe, fast, and boring.

A practical playbook for the next 90 days

If you own a product that could benefit from agents, here is a specific plan.

- Inventory capabilities: list the top ten tasks an agent should perform for your users. Define each as a verb with inputs, outputs, and side effects.

- Ship an MCP server: implement those verbs behind MCP with clear schemas and example calls. Host it with proper authentication and rate limits.

- Wrap consent in tokens: adopt capability tokens tied to your roles. Start with read-only calls, then graduate to action calls with human approval flows.

- Add a policy firewall: choose or build a policy engine that evaluates request context, risk signals, and user intent. Log every decision.

- Test with two agents: wire your MCP server to two different agent platforms. Document where each struggles. Use that learning to adjust prompts and policies rather than rewriting integrations.

- Instrument receipts: store signed receipts and build a small verifier that checks them before any downstream action.
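A small receipt signer and verifier might look like the following. It uses HMAC-SHA256 over a canonical JSON encoding, all from the Python standard library (`hmac`, `hashlib`, `json`); the shared key, the receipt fields, and the function names are assumptions for illustration. Because HMAC is symmetric, anyone who can verify can also sign, so a production system would more likely use asymmetric signatures and fetch keys from a key management service.

```python
import hashlib
import hmac
import json
import time

SIGNING_KEY = b"demo-key-rotate-me"   # hypothetical; load from a secret store in practice

def _canonical(body: dict) -> bytes:
    # Sorted keys and fixed separators so the same data always serializes identically.
    return json.dumps(body, sort_keys=True, separators=(",", ":")).encode()

def sign_receipt(tool: str, inputs: dict, output: dict, identity: str) -> dict:
    body = {
        "tool": tool,
        "identity": identity,
        "inputs_sha256": hashlib.sha256(_canonical(inputs)).hexdigest(),
        "output_sha256": hashlib.sha256(_canonical(output)).hexdigest(),
        "issued_at": int(time.time()),
    }
    sig = hmac.new(SIGNING_KEY, _canonical(body), hashlib.sha256).hexdigest()
    return {"body": body, "sig": sig}

def verify_receipt(receipt: dict, output: dict) -> bool:
    """Check the signature, then check that the output in hand is the one the receipt covers."""
    expected = hmac.new(SIGNING_KEY, _canonical(receipt["body"]), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, receipt["sig"]):
        return False
    return receipt["body"]["output_sha256"] == hashlib.sha256(_canonical(output)).hexdigest()

def downstream_action(receipt: dict, output: dict) -> str:
    # Refuse to act on any result whose receipt does not verify.
    if not verify_receipt(receipt, output):
        raise ValueError("receipt verification failed; refusing to act")
    return f"acting on {receipt['body']['tool']} result"
```

`hmac.compare_digest` is used instead of `==` so the signature comparison does not leak timing information.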

Complete these steps and you will have a portable, auditable interface that works today and gains value as the agent ecosystem grows around it.

Power dynamics in a protocol world

Protocols flatten some hierarchies and create new ones.

- From app silos to service providers: Tools that do one thing well can be adopted faster if they speak the protocol. You do not need to win a storefront to get distribution. You need to pass a policy gate and deliver value per call.

- From model lock-in to model marketplaces: Buyers will run multiple models behind the same capability surface. Expect procurement to push for swap tests where two models run blind on production traces while the policy firewall keeps risk in check.

- From opaque integrations to auditable effects: Compliance teams will demand receipts for high-impact actions. Vendors that ship receipt support and verifiers will win regulated buyers.

- From broad scopes to scoped trust: Capability tokens make it easy to give an agent a narrow path to value. Products that default to narrow scopes will see faster approvals.

This does not erase the advantages of scale. Large platforms still own identity, device reach, and default distribution. But the economic rent moves up and down the stack. Contracts and policy take a larger share of strategic attention.

Performance and reliability

Protocol layers add both visibility and potential failure modes. The answer is to design for them.

- Prefer asynchronous tools for long-running tasks. Return a receipt immediately, then poll or wait for a callback when the task completes.

- Cache read-only results with scoped keys to avoid wasteful repeated calls.

- Use idempotent write calls with replay protection so retries do not duplicate actions.

- Measure not only tokens and latency but also successful tool outcomes per minute.
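The caching and idempotency advice can be sketched together in one gateway object. The `ToolGateway` class, its method names, and the scope strings are hypothetical, and the read cache has no expiry or invalidation, which a real deployment would need. Read results are cached under a key that includes the caller's scope; writes require a caller-supplied idempotency key, and a retry with the same key returns the original result instead of repeating the side effect.

```python
import hashlib
import json
from typing import Callable

class ToolGateway:
    """Hypothetical gateway that sits between agents and MCP tools."""

    def __init__(self) -> None:
        self.read_cache: dict = {}
        self.write_log: dict = {}   # write key -> (argument fingerprint, result)
        self.side_effects = 0       # number of writes actually executed

    @staticmethod
    def _key(*parts: object) -> str:
        return hashlib.sha256(json.dumps(parts, sort_keys=True).encode()).hexdigest()

    def read(self, scope: str, tool: str, args: dict, fn: Callable) -> object:
        # The caller's scope is part of the cache key, so one identity never
        # receives results that were fetched under another identity's permissions.
        key = self._key(scope, tool, args)
        if key not in self.read_cache:
            self.read_cache[key] = fn(**args)
        return self.read_cache[key]

    def write(self, idempotency_key: str, tool: str, args: dict, fn: Callable) -> object:
        # Replay protection: a retry with the same key returns the stored result
        # instead of performing the side effect a second time.
        key = self._key(tool, idempotency_key)
        fingerprint = self._key(args)
        if key in self.write_log:
            stored_fingerprint, result = self.write_log[key]
            if stored_fingerprint != fingerprint:
                raise ValueError("idempotency key reused with different arguments")
            return result
        result = fn(**args)
        self.side_effects += 1
        self.write_log[key] = (fingerprint, result)
        return result
```

Rejecting a reused key with different arguments matters: otherwise a buggy retry could silently return the result of an unrelated earlier write.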

Handled correctly, the protocol layer does not become a bottleneck. It becomes a coordination point that makes systems more predictable.

The next 12 months, concretely

By this time next year, three practices are likely to be normal rather than novel.

- Cross-vendor agent runs: a single task graph with capability tokens will run on Claude one week and Gemini the next, with changes focused on policy tuning rather than rewrites. Human supervisors will remain in the loop to edit critical actions, reinforcing the pattern in which humans edit and models write.

- Composable enterprise search: search across email, files, and internal wikis will be delivered as an MCP tool that any compliant agent can use, gated by identity and purpose strings.

- Regulated rollouts: auditors will sample receipts for high-impact actions and tie them to user consent trails. Passing an audit will look more like passing a test suite than writing memos.

These are the boring, necessary wins that make an agent economy sustainable.

The conclusion: the interface becomes the industry

The app was once the product. The cloud then made the service the product. Now the protocol is becoming the product. Anthropic connected Claude to everyday work through MCP. Google put MCP on the critical path for Gemini developers. The result is that agents look more like operating system processes than chatbots, tools look more like system calls than integrations, and consent and identity look more like capability tokens than permanent grants.

Those are not slogans. They are mechanisms you can ship. Standardize the boundary and you gain choice, safety, and speed. Ignore it and the market will make the choice for you. Build for the protocol layer now, and you will own a durable asset when the agent operating layer becomes the default way software gets things done.