The Consent Collapse: When Chat Becomes an Ad Signal

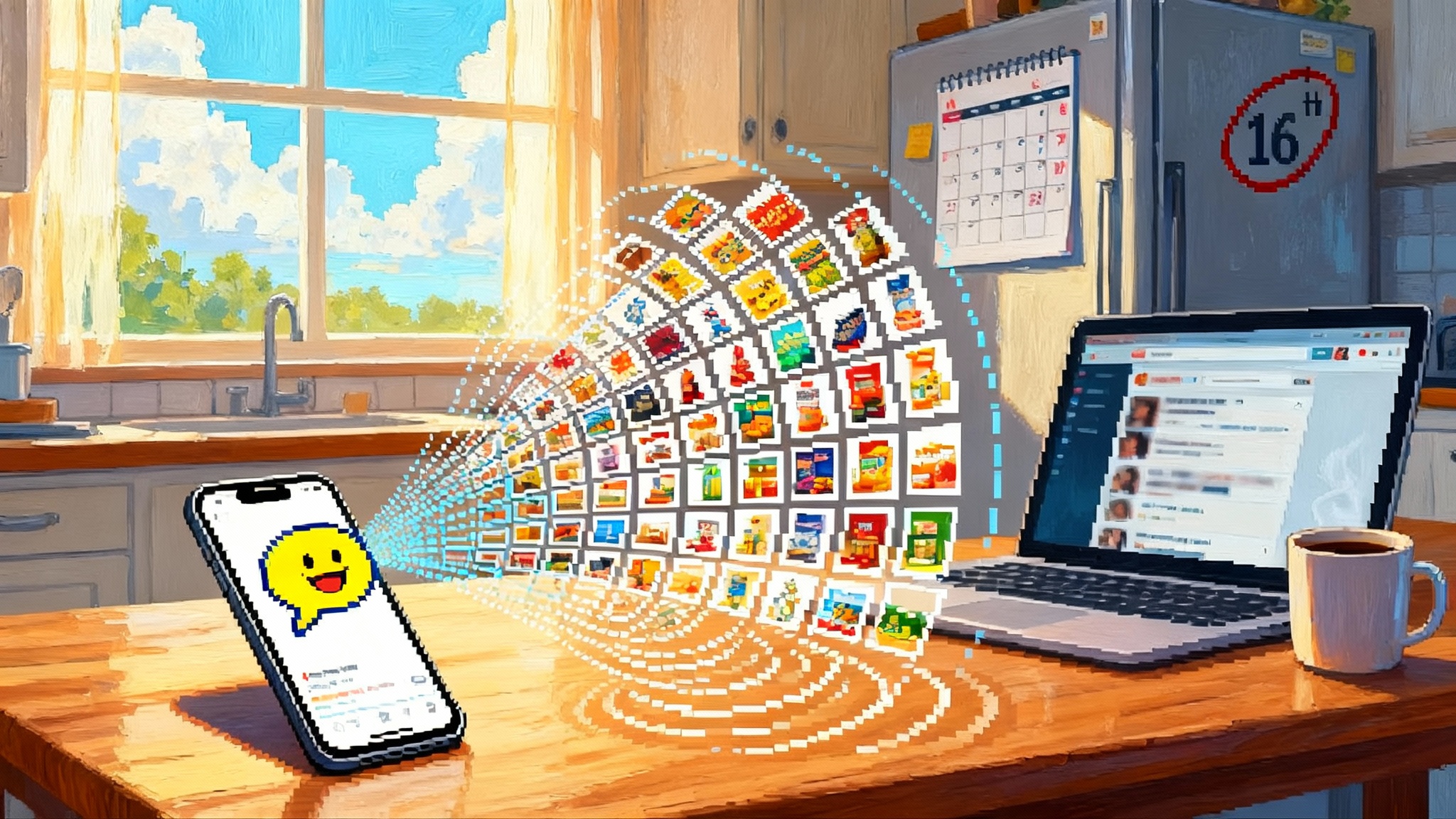

Starting December 16, Meta will use conversations with Meta AI to personalize feeds and ads. This shift treats assistant chats as ad signals, raising urgent questions for trust, consent, and how we design agents people can believe in.

Breaking change: your chats are now ad signals

On October 1, 2025, Meta announced that it will begin using people’s conversations with Meta AI to personalize what they see across Facebook, Instagram, and other Meta apps, including ads, starting December 16, 2025. The company framed this as a way to make feeds feel more relevant and to reduce content people do not care about. You can read the details in Meta’s October 1 announcement.

The short version is simple. If you ask Meta AI about hiking, you should expect more hiking in your life. Not just posts and reels, but also ads. Meta says it will not use certain sensitive topics for ads, and it reminds people that they can adjust Ads Preferences. What Meta does not offer is a true opt out of this new signal. That detail matters because it shifts the default from private assistance to commercial input. Reuters reported that there is no opt out.

What exactly changed

- Conversations with Meta AI are now inputs for personalization systems across multiple Meta surfaces.

- Unless a region is excluded at launch, the new signal becomes part of ranking and targeting without a separate, explicit opt in.

- People can manage some categories in Ads Preferences, but the top level choice to keep chat signals out of ads is not offered at launch.

Why this feels different from clicks

A chat is not a click. People experience conversations with assistants as closer to a private note than a public gesture. They contain verbs, constraints, and time frames that reveal more than a Like ever could. That gulf between felt privacy and system use is where trust can fray.

The consent collapse

Consent collapses when the context of an action suggests one meaning to a person but the system maps it to another. In a chat window, the intent is to ask for help. The system’s interpretation is to extract signals that shape attention supply across an entire platform. The social contract of assistance flips. A whisper to a helpful agent now speaks to the billboard factory behind the feed.

Here are the mechanisms behind the collapse:

- Contextual shift: a conversation that feels private produces public-facing outcomes in the feed and ad stack.

- Default enrollment: personalization from AI chats begins without a direct, affirmative choice about that new signal type.

- Cross surface propagation: what you say to a bot influences what you see elsewhere. Meta notes that if you have not linked an app to Accounts Center, interactions there do not personalize other apps, but many people link accounts for convenience.

- Semantic expansion: a single chat about hiking near Denver can imply budget, location, fitness level, and travel intent, which all become inputs for ranking and ads.

Assistants as adtech surfaces

This decision reframes assistants from tools you summon to surfaces that harvest intent. That changes product design in three ways.

- Queries become inventory. A chat about a ski trip is now a pre click signal that can be auctioned across ad and recommendation systems.

- Safety policies become targeting rules. The list of sensitive categories that Meta says it will not use for ads aligns with legal constraints rather than with user expectations. People may believe that therapy, debt, fertility, or immigration topics are private, yet the boundary for what counts as sensitive is set by policy.

- Memory becomes retargeting. If a system retains chat context, even temporarily, that context seeds future impressions. Ephemeral memory is still a memory for ranking systems.

A useful metaphor is a smart notepad that lives in your pocket. You jot ideas for a weekend. Later, the city seems to bend around your plans. Storefronts highlight ski gear. Your friends’ posts about powder days bubble up. The notepad did not sell your notes directly. It whispered to the city’s signage team.

What changed and why it matters

Meta’s rationale is straightforward. Interactions with content already shape your feed. Interactions with AIs are another kind of engagement, so they should count. The product story is coherence and relevance across surfaces. In practice, three consequences follow.

- Precision increases. Chat language is richer than a Like. It carries verbs, nouns, constraints, and time frames. A request that mentions kid friendly hikes near Boulder next Saturday is a stronger intent signal than clicking a photo of a mountain.

- Privacy expectations diverge from platform rules. Many people feel that assistant queries are closer to search terms or even to journal entries. Treating them like feed taps will not land well unless the interface makes the trade visible at the moment of disclosure.

- Trust fragments. Once people learn that chats steer ads, the content of their questions shifts. They will self-censor or move sensitive questions to other tools. That creates selection bias in what assistants learn and undermines their usefulness.

The notice problem

Meta says it will notify people on October 7, and the change goes live on December 16. That timing is generous compared to past industry moves, but the core tension remains. Most notices are abstract. They disclose purposes and list controls, yet they do not meet people at the point of disclosure. The critical moment is the line where a person types “I am anxious about a new job” or “I want to plan a trip with my father before his surgery.” If the system intends to use parts of that line to steer ads, the interface should offer a timely, intelligible choice.

Designers know how to build timely consent. Two examples show what good could look like.

- Inline scoping chips. When a person asks for hiking tips, show chips like Use to improve feed and Use to improve ads, both off by default. If toggled on, keep a small, persistent indicator in the thread that shows the current scope.

- One conversation, one purpose. Let people mark a thread as Personal Research, Work, or Private. Use strict rules to constrain where signals from that thread are allowed to travel.

If you are building agents, this aligns with the idea that protocols should carry intent and control, not just data. For a deeper look at that shift, see how agent capabilities can move into the stack in Protocol Eats the App.

Pressure on UI and safety policies

Personalization without an opt out puts new pressure on three product layers.

- Input layer. People need to know what will happen before they speak. A plain language preamble above the composer can help. So can microcopy that appears only when a query mentions obvious commercial intent like flights or boots.

- Memory layer. Platforms should show a visible memory panel with a per item scope. If a detail is memorized for personalization, keep it editable and exportable. If it expires, show the schedule. This is consistent with treating saved knowledge as skills rather than undifferentiated data, a pattern we discussed in Playbooks, Not Prompts.

- Safety layer. Today’s sensitive categories are policy lists. The real problem is a semantic neighborhood around those categories. Even if politics is excluded, a chat about voter registration drives civic intent into the system. The safety bar should consider adjacency, not only enumerated topics.

Regional and cross app nuance

Rollout details matter. Reporting indicates the update will not apply in the European Union, the United Kingdom, or South Korea at launch. People will start receiving notifications on October 7, and signals will begin shaping experiences on December 16. Interactions can flow across Meta apps if accounts are linked in Accounts Center. If WhatsApp is not linked, a WhatsApp chat will not personalize your Instagram feed. That is a useful constraint for people who want to compartmentalize. For readers following regional governance, the move will likely meet very different scrutiny in jurisdictions that treat AI infrastructure as public interest. Background on that trend is in From Rules to Rails.

What builders should do now

Accelerationist teams want to ship. You can ship fast without borrowing trust. Here is a playbook that turns consent into a product advantage.

Build consentful agents

- Per prompt scoping. Present an obvious choice to use the current message for feed improvement, for ads, for model quality, or not at all. Remember the choice per thread.

- Memory by request only. Do not auto save anything. Let the user pin facts. Label each pinned item with a scope tag.

- Transparent transformations. If you turn a chat into a topic signal, show the extracted phrase and let the user edit or delete it.
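
The three practices above can be sketched in a few lines. This is a minimal illustration, not a reference design: the names `Scope`, `ThreadConsent`, `ExtractedSignal`, and `usable_signals` are hypothetical, and the sketch assumes that consent is stored per thread, that every scope starts off, and that extracted phrases are shown to the person before any downstream use.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Set


class Scope(Enum):
    """Hypothetical uses a message may be released to."""
    FEED = "feed"
    ADS = "ads"
    MODEL_QUALITY = "model_quality"


@dataclass
class ThreadConsent:
    """Consent remembered per thread. Nothing is granted by default."""
    thread_id: str
    granted: Set[Scope] = field(default_factory=set)

    def grant(self, scope: Scope) -> None:
        self.granted.add(scope)

    def revoke(self, scope: Scope) -> None:
        self.granted.discard(scope)

    def allows(self, scope: Scope) -> bool:
        return scope in self.granted


@dataclass
class ExtractedSignal:
    """A topic phrase derived from a chat, visible to and deletable by the user."""
    phrase: str
    deleted: bool = False


def usable_signals(
    consent: ThreadConsent, signals: List[ExtractedSignal], scope: Scope
) -> List[str]:
    """Return phrases that may flow to `scope`; empty unless the user opted in."""
    if not consent.allows(scope):
        return []
    return [s.phrase for s in signals if not s.deleted]
```

In this sketch a fresh thread releases nothing, granting the feed scope does not release anything to ads, and a phrase the person deleted never leaves the thread regardless of scope.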

Go local first where it counts

- On device reranking. Keep intent extraction and reranking on device when feasible. Ship a small on device embedding model for topic detection. Sync only coarse, non sensitive summaries when necessary. For teams exploring model choices, see why smaller models can be the better trade in The Good Enough Frontier.

- Edge caches with clear expiry. Store short lived intent locally for 24 to 72 hours and let people purge it with a single tap.
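
A short-lived local cache with a one-tap purge might look like the sketch below. It is an in-memory illustration only: `LocalIntentCache` is a hypothetical name, the 48 hour default is simply a value inside the 24 to 72 hour range suggested above, and a real client would also need to handle persistence and secure deletion.

```python
import time
from typing import Callable, Dict, List


class LocalIntentCache:
    """Holds intent phrases on the device and forgets them after a fixed TTL."""

    def __init__(
        self,
        ttl_seconds: float = 48 * 3600,  # assumed default within the 24-72 hour range
        clock: Callable[[], float] = time.time,  # injectable so expiry can be tested
    ) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._expiry_by_intent: Dict[str, float] = {}

    def put(self, intent: str) -> None:
        """Store an intent; storing it again restarts its timer."""
        self._expiry_by_intent[intent] = self._clock() + self._ttl

    def active(self) -> List[str]:
        """Drop expired entries, then return the remaining intents sorted by name."""
        now = self._clock()
        self._expiry_by_intent = {
            intent: expiry
            for intent, expiry in self._expiry_by_intent.items()
            if expiry > now
        }
        return sorted(self._expiry_by_intent)

    def purge(self) -> None:
        """The single-tap purge: forget everything immediately."""
        self._expiry_by_intent.clear()
```

Passing the clock in as a parameter is a design choice for testability; it lets expiry behavior be checked without waiting for real time to pass.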

Give users a data vault

- User owned storage. Place long term memory in a private vault the person controls, even if the app hosts it. Access via a fine grained permission model and a visible audit trail.

- Purpose binding. Tag every vault item with the purpose that justified its collection. Only allow downstream use if the purpose matches. If a new purpose emerges, ask again.
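
Purpose binding and the audit trail can be made concrete with a small sketch. Everything here is hypothetical (`Vault`, `VaultItem`, `PurposeMismatch`, and the purpose strings); the assumption is that each item records the purposes the person approved and that every read attempt, allowed or not, is logged.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


class PurposeMismatch(Exception):
    """Raised when a caller asks for an item under a purpose nobody approved."""


@dataclass
class VaultItem:
    value: str
    purposes: Set[str]  # purposes the person has explicitly approved


@dataclass
class Vault:
    items: Dict[str, VaultItem] = field(default_factory=dict)
    # Each entry is (key, requested purpose, whether access was allowed).
    audit_log: List[Tuple[str, str, bool]] = field(default_factory=list)

    def store(self, key: str, value: str, purpose: str) -> None:
        """Save an item bound to the purpose that justified collecting it."""
        self.items[key] = VaultItem(value, {purpose})

    def approve(self, key: str, purpose: str) -> None:
        """Record that the person was asked again and agreed to a new purpose."""
        self.items[key].purposes.add(purpose)

    def read(self, key: str, purpose: str) -> str:
        """Return the value only if `purpose` matches; log the attempt either way."""
        item = self.items[key]
        allowed = purpose in item.purposes
        self.audit_log.append((key, purpose, allowed))
        if not allowed:
            raise PurposeMismatch(f"{key!r} was not collected for {purpose!r}")
        return item.value
```

Logging denied reads alongside allowed ones is deliberate in this sketch: a visible audit trail is most useful when it also shows what was attempted and refused.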

Rethink monetization

- Shift from behavioral to declared intent. Give people a clear way to state interests and time frames, then compensate them with higher quality results or discounts. Declared intent beats inferred intent for both sides.

- Offer a paid mode. Some users will pay to keep assistant chats out of ad systems. Validate this with pricing tests rather than speculation.

A 30-60-90 day blueprint

Days 0 to 30: ship visibility

- Add a thread level scope selector with defaults set to Do not use for ads.

- Implement a memory panel that lists every saved fact with its scope and expiry.

- Instrument a telemetry stream that records each consent decision without logging message content.
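
One way to record consent decisions without logging message content is to give the event type no field that could hold content. The sketch below assumes a hypothetical `ConsentEvent` schema and a `record_consent` helper that emits one JSON line per decision; it also assumes the thread identifier is a pseudonymous ID rather than anything derived from the conversation.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class ConsentEvent:
    """Deliberately has no field for message text, so content cannot be logged."""
    thread_id: str  # assumed to be a pseudonymous identifier
    scope: str      # for example "feed" or "ads"
    granted: bool
    timestamp: str  # ISO 8601, UTC


def record_consent(
    thread_id: str, scope: str, granted: bool, now: Optional[datetime] = None
) -> str:
    """Serialize one consent decision as a JSON line for the telemetry stream."""
    moment = now if now is not None else datetime.now(timezone.utc)
    event = ConsentEvent(thread_id, scope, granted, moment.isoformat())
    return json.dumps(asdict(event), sort_keys=True)
```

A caller would invoke `record_consent("t1", "ads", False)` when a person declines the ads scope and append the returned line to whatever sink the team already uses.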

Days 31 to 60: ship control

- Add per prompt scoping chips for feed and ads, both off by default.

- Integrate an on device lightweight intent extractor. Keep commercial intent detection local.

- Release a vault export in machine readable format with a human readable summary.

Days 61 to 90: ship value

- Build a declared interest form with simple time bounds like Next 7 days and Next 30 days.

- Offer a paid privacy mode that disables ad signal generation and uses only declared interests for sponsored content.

- Publish an annual transparency note that lists top intents captured, average retention time, and percentage of consents by scope.
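
The declared interest form with time bounds reduces to a small data structure. The following is a sketch under stated assumptions: `DeclaredInterest` and `active_topics` are hypothetical names, and the window is modeled as a whole number of days counted from the moment of declaration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass(frozen=True)
class DeclaredInterest:
    """An interest the person stated directly, valid for a fixed window."""
    topic: str
    declared_at: datetime
    window_days: int  # for example 7 or 30, matching the form's options

    def is_active(self, now: datetime) -> bool:
        """True from the declaration time until the window closes."""
        expires_at = self.declared_at + timedelta(days=self.window_days)
        return self.declared_at <= now < expires_at


def active_topics(interests: List[DeclaredInterest], now: datetime) -> List[str]:
    """Topics that may inform sponsored content right now, in declaration order."""
    return [i.topic for i in interests if i.is_active(now)]
```

Because expiry is computed from the declaration itself, an interest stops influencing results once its window closes without any cleanup job having to run.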

Measuring consent as a feature

If consent becomes a product feature rather than a checkbox, it can be measured. These metrics are practical, not vanity.

- Consent acceptance rate per scope over time

- Percentage of threads with scope changes after the first message

- Opt down events after people see an ad that appears tied to a chat topic

- Memory edits and deletes per thousand active users

- Model performance on tasks that do not require personal data versus those that do

- Net present value of declared intent campaigns versus inferred intent campaigns
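
Two of these metrics are simple enough to compute directly from consent events. The sketch below assumes a hypothetical input shape, a list of `(scope, granted)` pairs with one pair per consent prompt shown, and hypothetical helper names.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def acceptance_rate_by_scope(events: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of consent prompts accepted, computed separately for each scope."""
    shown: Dict[str, int] = defaultdict(int)
    accepted: Dict[str, int] = defaultdict(int)
    for scope, granted in events:
        shown[scope] += 1
        if granted:
            accepted[scope] += 1
    return {scope: accepted[scope] / shown[scope] for scope in shown}


def per_thousand_active_users(event_count: int, active_users: int) -> float:
    """Normalize a raw count, such as memory edits, per thousand active users."""
    if active_users <= 0:
        raise ValueError("active_users must be positive")
    return 1000 * event_count / active_users
```

Running `acceptance_rate_by_scope` over daily or weekly batches gives the trend over time that the first metric calls for.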

The hard questions product leaders must ask

- Are we making this choice at the right moment, in context, or burying it in a settings page?

- Would a reasonable person expect this chat to influence ads?

- Can we ship a paid mode that turns off ad signal generation entirely, not only hides it?

- If we lost all chat derived signals tomorrow, would the product still be good?

- Do we have a clean boundary between model improvement, feed personalization, and ad targeting, and can the user see and control each boundary?

What this means for the ecosystem

If chat becomes the new cookie, everything upstream of the ad stack will try to capture conversational intent. Vendors will build chat plugins that specialize in ad friendly signals. Browsers will start surfacing local intent controls. Regulators will look at consent drift in conversational interfaces. Researchers will test whether people change behavior when told that chats influence ads.

There is also a strategic fork. One path treats assistants as another watchful surface in a broader attention market. The other path treats assistants as personal software that sometimes participates in commerce. The first path optimizes for extraction. The second optimizes for declared, time bound intent that the person authorizes.

A practical note for people using Meta apps

If you want to compartmentalize, do not link every app to Accounts Center. Keep sensitive queries out of assistant chats altogether until controls mature. Use Ads Preferences to blunt obvious categories, but know that the new chat signal is not optional at launch. If you need an assistant for private matters, use one that keeps inference local or offers a paid mode that guarantees no ad signal generation.

Conclusion: rebuild trust with design, not slogans

Meta’s move is a watershed. It clarifies how platforms see assistants. They are not only tools for you. They are tools for the system to learn from you. That shift can either corrode trust silently or become the pressure that forces better agent design. Builders should choose the latter. Ship agents that treat consent as a living part of the interface. Keep inference local when you can. Put long term memory in a vault the user controls. Make each new use visible and revocable in the moment it matters. If we do this well, chat does not have to become the new cookie. It can become the place where software finally learns to ask first, use less, and prove why.