Compute Eats the Grid: Power Becomes the AI Platform Moat

AI’s scarcest input is not chips; it is electricity. Amazon is backing modular nuclear in Washington, Georgia may add about 10 gigawatts, S&P sees demand tripling by 2030, and DOE is backing grid upgrades.

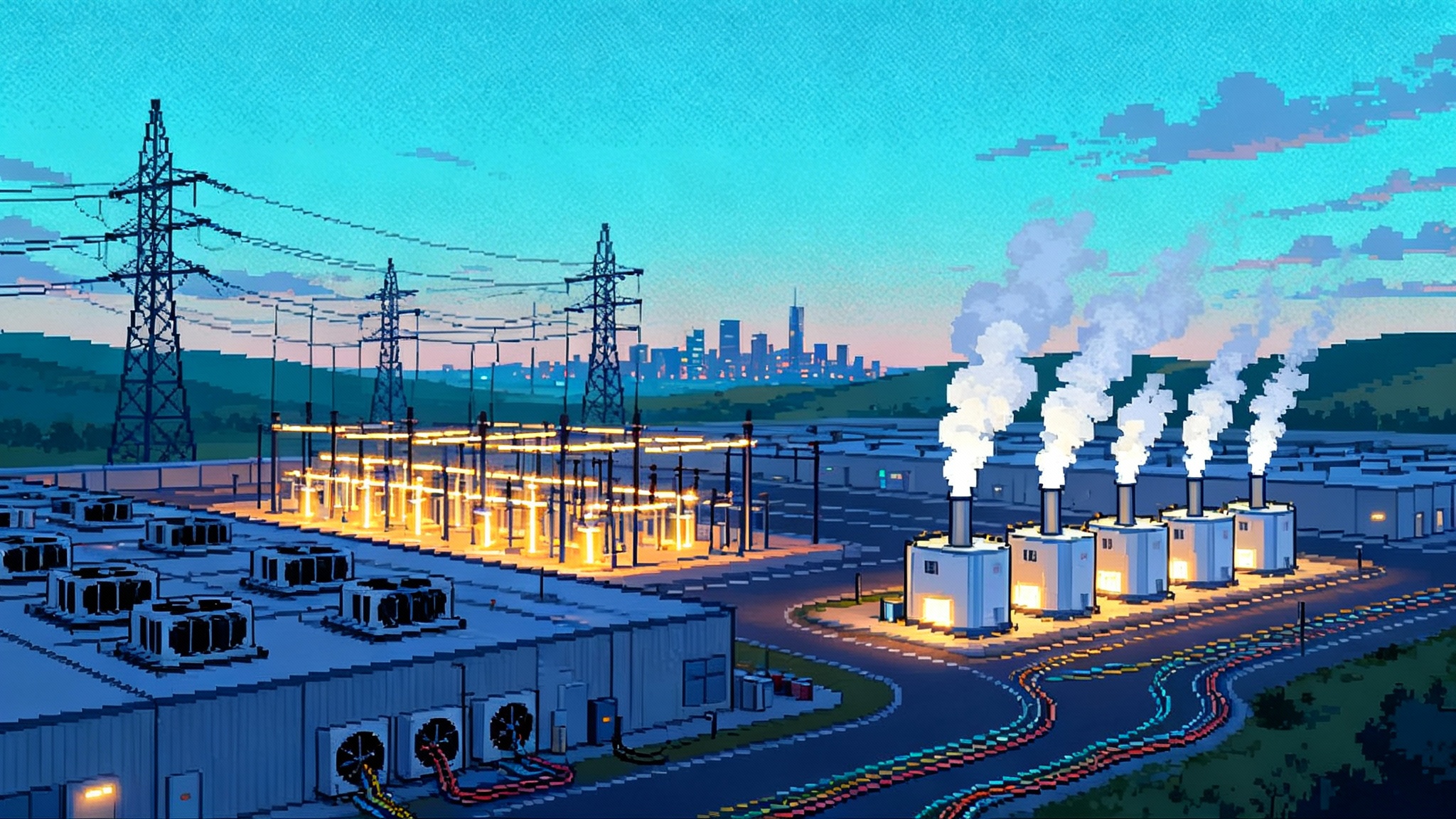

Breaking point: the platform moat turns into electrons

In the span of a single week, the center of gravity in artificial intelligence shifted from code to current. On October 16, 2025, Amazon revealed plans with Energy Northwest to co-develop a modular nuclear facility in Richland, Washington, aimed at feeding cloud and AI workloads. Also on October 16, 2025, the U.S. Department of Energy finalized a multistate transmission upgrade loan guarantee for American Electric Power. By October 19, 2025, Georgia’s push to add roughly 10 gigawatts to meet surging data center demand dominated headlines and regulatory agendas. All of this followed a fresh S&P data center forecast released on October 14, 2025 that expects U.S. grid demand from data centers to nearly triple by 2030. The message is blunt: access to dependable, low-cost, low-carbon electrons is becoming the new platform moat.

This is not a thought experiment. It is industrial policy being drafted in real time around substations, rights of way, and interconnection queues. As agentic AI systems scale, capital and compute are beginning to behave like energy-seeking organisms. They migrate toward places with power density, fast interconnects, and regulatory clarity.

The constraint has moved: from models to megawatts

For a decade, the biggest bottlenecks in machine learning were algorithms and the size of training runs. Today the rate limiter is electricity delivered where and when it is needed. A single cutting-edge accelerator can draw hundreds of watts at full tilt. Multiply that by tens of thousands of accelerators, then add cooling and power distribution, and you approach the load of a small city concentrated in a few buildings. A modern campus can easily require hundreds of megawatts, and unlike many industrial loads, it draws that power 24 hours a day with minimal downtime.
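The arithmetic behind that "small city" comparison can be sketched in a few lines. This is a back-of-envelope estimate, not a sizing method: the 700 W per accelerator, the 1.5x server overhead (CPUs, memory, networking), and the 1.3 power usage effectiveness (PUE) are illustrative assumptions, not figures from the sources cited here.

```python
HOURS_PER_YEAR = 8760


def campus_load_mw(accelerators: int,
                   watts_per_accelerator: float,
                   server_overhead: float = 1.5,
                   pue: float = 1.3) -> float:
    """Estimate total facility load in megawatts.

    server_overhead: assumed multiplier for the rest of each server
        (CPUs, memory, networking) on top of the accelerator itself.
    pue: assumed power usage effectiveness, i.e. total facility power
        divided by IT power (covers cooling and power distribution).
    """
    it_load_watts = accelerators * watts_per_accelerator * server_overhead
    return it_load_watts * pue / 1_000_000


def annual_energy_mwh(load_mw: float, load_factor: float = 1.0) -> float:
    """Annual energy in MWh for a load that runs around the clock."""
    return load_mw * HOURS_PER_YEAR * load_factor


if __name__ == "__main__":
    load = campus_load_mw(accelerators=100_000, watts_per_accelerator=700)
    print(f"Facility load: {load:.1f} MW")
    print(f"Annual energy: {annual_energy_mwh(load):,.0f} MWh")
```

Under these assumptions, 100,000 accelerators land in the low hundreds of megawatts, which is consistent with the campus scale described above. Changing any one assumption moves the answer proportionally.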

This is why grid physics, not just Moore’s law, now shapes the frontier. The grid is not a tap you can simply open wider. New generation takes years. Transmission can take longer. Substation capacity is finite. Queues for interconnection are measured in years. Equipment lead times for big transformers and switchgear can be two years or more. In that environment, a guaranteed, schedulable source of clean electricity becomes more defensible than a new chip tapeout or a clever optimizer.

Agentic systems as energy-seeking organisms

Think of agentic AI as a colony of industrious ants. Ants do not care about property lines; they care about food sources and the easiest paths to reach them. In the same way, compute clusters spread along the paths of least resistance: spare transmission capacity, substation headroom, and friendly interconnection rules. If a region cannot deliver stable megawatts quickly, the workloads will march elsewhere.

That pattern is visible now. Northern Virginia strained under unprecedented loads in recent years, so operators fanned out to Ohio, Georgia, and Texas. Utilities are upgrading thousands of miles of lines to move more power without adding new corridors. State commissions are rewriting tariffs and queue rules to ration scarce megawatts and protect residential customers. In many places, the single most important asset is no longer land or fiber; it is a reserved slot at a substation.

This migration rhymes with our earlier analysis of how governance and standards become control points. When market power shifts, new gatekeepers emerge. For a deeper look at that pattern inside AI, see our take on the new AI market gatekeepers.

Amazon’s nuclear signal, and why it matters

On October 16, 2025, Energy Northwest named its planned small modular reactor campus the Cascade Advanced Energy Facility and published concept images for the site in Richland. Amazon is working with Energy Northwest and X-energy on an initial phase of four Xe-100 reactors, with potential expansion to a dozen units over time. It is early days: licensing and financing must still clear federal and state processes. Yet Amazon’s move reframes the scoreboard. It tells every hyperscaler, chipmaker, and model lab that the next defensible advantage is firm, carbon-free power close to where the bits live. Read the details in Energy Northwest’s Cascade release.

The specifics are less important than the template. Corporate buyers are moving from buying green certificates to catalyzing new steel in the ground. They are treating generation as part of the compute stack. That mindset is going to spread to geothermal, advanced nuclear, long-duration storage, and even decarbonized natural gas with carbon capture where policy allows.

The new stack: from land and fiber to power and permits

The classic site-selection checklist started with land, fiber, water, and tax incentives. The new version begins with power. Not just nameplate capacity, but firm delivery dates, quality of service, and the legal rights to interconnect. Queue position is now an asset. So is the ability to control your own destiny with behind-the-meter power and on-site distribution.

- Substation adjacency beats cheap land. If you are within a few miles of a high-capacity substation, you control your destiny. If your site depends on a new transformer that will arrive in 2027, you do not.

- Transmission rights are a moat. Long-term access to a congested corridor lets you place more load on the same geography. This is why utilities are upgrading existing lines as fast as they can permit crews and equipment.

- Regulatory clarity saves years. States with transparent interconnection studies and performance-based ratemaking can move from intent to energized meters far faster than jurisdictions with opaque processes.

This is also a protocol story. As compute orchestration stretches across regions and resource types, the interface becomes the operating system. We explored this shift in how a protocol layer turns AI into an OS for infrastructure.

How the load evolves: from blunt to flexible

Training loads are intense and relatively schedulable. Inference is continuous but can be steered. As agentic systems mature, the grid will see more elasticity in when and where compute runs, even at very large scale. That opens a path to treat compute like a grid participant rather than a passive customer.

Practical examples:

- Carbon-aware orchestration: training that pauses when local marginal emissions spike and resumes when cleaner power is available, while workloads spill to regions with spare wind or nuclear.

- Curtailable inference tiers: low-urgency agents accept a five-minute delay during local peaks in return for lower prices, while high-urgency traffic pays a premium for guaranteed power quality.

- Thermal flywheels: campuses oversize chilled water or phase-change storage to shave electrical peaks without adding generators.

Each tactic turns megawatts into a controllable variable. It also gives utilities confidence to serve large loads without overbuilding peakers that will be stranded later.
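As a rough illustration of the carbon-aware orchestration idea in the list above, the sketch below walks an hourly forecast of marginal emissions and runs a deferrable training job only in hours at or below a threshold. The function name, the forecast values, and the threshold are all hypothetical; a real scheduler would also have to handle checkpointing costs, deadlines, and forecast error.

```python
from typing import List, Tuple


def schedule_training(emissions_forecast: List[float],
                      hours_needed: int,
                      threshold: float) -> Tuple[List[int], int]:
    """Pick the hours in which a deferrable job should run.

    emissions_forecast: hypothetical marginal emissions per hour
        (for example gCO2 per kWh), indexed from hour 0.
    hours_needed: compute hours the job still requires.
    threshold: run only when the forecast is at or below this value.

    Returns the list of hours to run in and the number of hours that
    could not be placed within the forecast window.
    """
    run_hours: List[int] = []
    for hour, intensity in enumerate(emissions_forecast):
        if len(run_hours) == hours_needed:
            break  # job is fully scheduled
        if intensity <= threshold:
            run_hours.append(hour)  # clean enough: run
        # otherwise the job stays paused for this hour
    remaining = hours_needed - len(run_hours)
    return run_hours, remaining


if __name__ == "__main__":
    forecast = [520, 480, 300, 250, 610, 700, 280, 260]  # made-up values
    hours, remaining = schedule_training(forecast, hours_needed=3, threshold=300)
    print("run in hours:", hours, "unplaced hours:", remaining)
```

The same loop works with a price forecast in place of emissions, which is one way to picture the curtailable inference tiers: the threshold becomes the price a low-urgency tier is willing to pay.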

Utilities as core AI infrastructure

The accelerationist move is to treat utilities like part of the AI stack and to give them the tools to deliver safely and quickly. That means three things in practice.

- Fast-track zero-carbon power that matches AI’s duty cycle

Advanced nuclear and geothermal offer high capacity factors and stable output. Solar plus batteries is valuable, especially for inference, but training farms want a round-the-clock profile. When buyers lean in with pre-construction commitments and standardized contracts, they unlock cheaper capital and shorter timelines. Modular nuclear is not the only answer, but the underlying principle is universal: align corporate purchases with the specific shape of the load you will place on the grid.

- Make dynamic pricing the default for large compute

Most big campuses still pay tariffs designed for factories. The grid needs compute-native rates that expose real-time costs. A data center should see a price signal that reflects the cost of turning on a gas peaker at 6 p.m. and the savings from shifting training to 2 a.m. For regulators, that means approving tariffs with transparent locational and temporal components. For operators, it means building scheduling into the platform and promising measurable flexibility to the utility.

- Tie power purchase agreements to compute milestones

A power purchase agreement, often called a PPA, can be more than a fixed block of megawatt-hours. Tie contract tranches to compute deployment milestones and commissioning dates. When a cluster goes live, the next tranche of firm power activates. When a model’s footprint scales down, power can be resold into the market. That creates discipline for both sides and reduces the risk that ratepayers subsidize speculative builds.
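One way to picture a milestone-tied contract is as a list of tranches that each activate when a named deployment milestone is met. The sketch below is purely illustrative: the `Tranche` type, the milestone names, and the megawatt figures are hypothetical and do not describe any actual agreement.

```python
from dataclasses import dataclass
from typing import Iterable, Set


@dataclass(frozen=True)
class Tranche:
    milestone: str   # hypothetical milestone label, e.g. "cluster-1-live"
    firm_mw: float   # firm power that activates once the milestone is met


def contracted_mw(tranches: Iterable[Tranche],
                  completed_milestones: Set[str]) -> float:
    """Total firm power active given the milestones reached so far."""
    return sum(t.firm_mw for t in tranches
               if t.milestone in completed_milestones)


def resale_mw(active_mw: float, actual_load_mw: float) -> float:
    """Surplus power available to resell when load falls below contract."""
    return max(0.0, active_mw - actual_load_mw)


if __name__ == "__main__":
    ppa = [
        Tranche("cluster-1-live", 100.0),
        Tranche("cluster-2-live", 150.0),
        Tranche("cluster-3-live", 200.0),
    ]
    active = contracted_mw(ppa, {"cluster-1-live", "cluster-2-live"})
    print("active firm power (MW):", active)
    print("resellable (MW):", resale_mw(active, actual_load_mw=180.0))
```

Keeping activation and resale as separate calculations mirrors the two sides of the discipline described above: the buyer does not pay for power before the cluster exists, and unused power has a defined path back to the market.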

Georgia as a bellwether

Georgia’s current cycle shows the stakes. The state’s utility has asked regulators to certify roughly 10 gigawatts of new resources in the wake of a forecast heavily influenced by data centers. Supporters point to jobs and long-term growth. Critics worry about shifting costs to households and locking in gas infrastructure. The correct path is neither a rubber stamp nor a blanket moratorium. It is a binding playbook that assigns costs to the creators of the load, sets zero-carbon targets for new capacity, and rewards flexibility that keeps peaker use down.

Put differently: if you want priority access to scarce megawatts, bring your own solutions. Bring a firm zero-carbon plan. Bring a demand response commitment. Bring local benefits like waste heat reuse or workforce pipelines that make neighbors feel like stakeholders rather than bystanders.

Transmission is the silent kingmaker

Generation gets headlines, but transmission decides how fast the future arrives. The Department of Energy’s freshly finalized loan guarantee for a multistate upgrade by American Electric Power is a reminder that line upgrades can add capacity years before entirely new corridors get sited. Reconductoring, advanced power flow controls, dynamic line ratings, and substation automation can all squeeze more electrons through the same steel.

Here is the policy unlock: treat proven upgrades as the first dollar spent, then reserve new corridors for places where no amount of optimization will do. Pair that with permitting reform that sets clear federal-state timelines, and you turn a seven-year build into a three-year one. For a governance perspective on how public infrastructure choices set competitive rails, see our piece on public AI infrastructure rails.

Financing the new moat

For model labs and cloud providers, power is now a balance sheet decision. The leaders will create energy subsidiaries that can originate projects, hold long-dated contracts, and arbitrage across geographies and technologies. Expect a wave of compute-tied infrastructure funds that finance firm power in exchange for offtake linked to specific clusters. Expect joint ventures with utilities where buyers co-fund distribution upgrades in return for guaranteed interconnection dates.

The numbers can work. Firm, zero-carbon power with long-term buyers attracts lower-cost capital than speculative merchant plants. Standardized contracts, shared designs, and preapproved vendor lists compress timelines and lower risk. The lesson from content delivery networks applies: when you standardize and repeat, what looked bespoke becomes a kit.

A practical playbook, role by role

- For hyperscalers and model labs: build an electricity portfolio, not just a renewable certificate portfolio. Mix firm zero-carbon, variable renewables, and storage. Allocate training to regions with spare capacity and high clean fractions. Publish a flexibility budget so utilities can plan around real reductions in peak demand.

- For startups: make energy a product feature. If your agentic system can tolerate latency or deferral, expose that to customers and price it. Buyers will choose slightly slower agents that run on cleaner, cheaper electrons once that value is visible.

- For utilities: create a compute tariff with real-time granularity, clear performance credits for flexibility, and penalties for nonperformance. Offer a fast lane for projects that commit to specific zero-carbon shares and on-site backup that reduces local system risk.

- For regulators: require compute-tied PPAs to include milestones, clawbacks, and cost-allocation rules that shield residential customers from speculative load. Approve reconductoring and grid-enhancing technologies as default options. Set clear, short windows for interconnection studies with standardized data requirements.

- For investors: evaluate data center real estate as an energy asset first. Underwrite queue position, substation proximity, and access to firm power ahead of tenant mix. The winners will look more like independent power producers with fiber than warehouses with servers.

What success looks like

If the sector gets this right, the United States can expand the frontier of AI without social blowback. The power mix tilts toward zero carbon faster because demand growth comes with ironclad offtake. Transmission upgrades unlock stranded wind and sunshine. Data centers become good grid citizens, able to flex when the system is stressed. Households see stable bills because new loads shoulder their true costs and reduce the need for expensive peakers.

The alternative is a world where queues stretch to the horizon, neighborhoods revolt, and model deployment slows because someone could not find 300 megawatts within 200 miles of a fiber hub. That is not inevitable. We can choose the faster path.

The conclusion: build the moat where the volts are

The last platform wars were fought in software, then silicon. The next one wraps around transformers, switchyards, and rights of way. Amazon’s nuclear move, Georgia’s push for capacity, S&P’s demand forecast, and the Department of Energy’s transmission boost all point the same direction. The real advantage is not just faster chips or smarter agents. It is priority access to clean, firm, and fairly priced electricity where compute wants to live.

Treat utilities as core AI infrastructure, make dynamic pricing the norm, and write compute-tied power purchase agreements that add steel without adding backlash. Do that, and the frontier keeps expanding at the speed of electrons and the pace of public consent.