Charter Alignment: OpenAI’s Foundation-to-PBC Pivot and AGI Gates

OpenAI’s shift to a foundation-controlled public benefit corporation rewires how frontier AI is financed and released, from Microsoft’s refreshed pact to an AGI verification panel that gates commercialization and shapes accountability.

The week corporate governance became an alignment tool

On October 28, 2025, OpenAI completed a restructuring that placed a new public benefit corporation under the control of a nonprofit foundation and refreshed its long-term agreement with Microsoft. The updated pact introduces an independent panel to validate any future claims that OpenAI has achieved artificial general intelligence, and it reframes economics and product rights for the next stage of growth. In simple terms, a foundation now sets the constitution for a powerful commercial entity, while a contract embeds a safety gate into how breakthroughs are monetized and deployed, as detailed in Reuters reporting on the restructure.

If last year was about model checkpoints and evals inside labs, this year adds charters and covenants outside them. Alignment by charter has entered the stack. Not as a slogan, but as legal plumbing that can move billions of dollars and decide who ships what, when.

A new alignment layer: charters plus verification panels

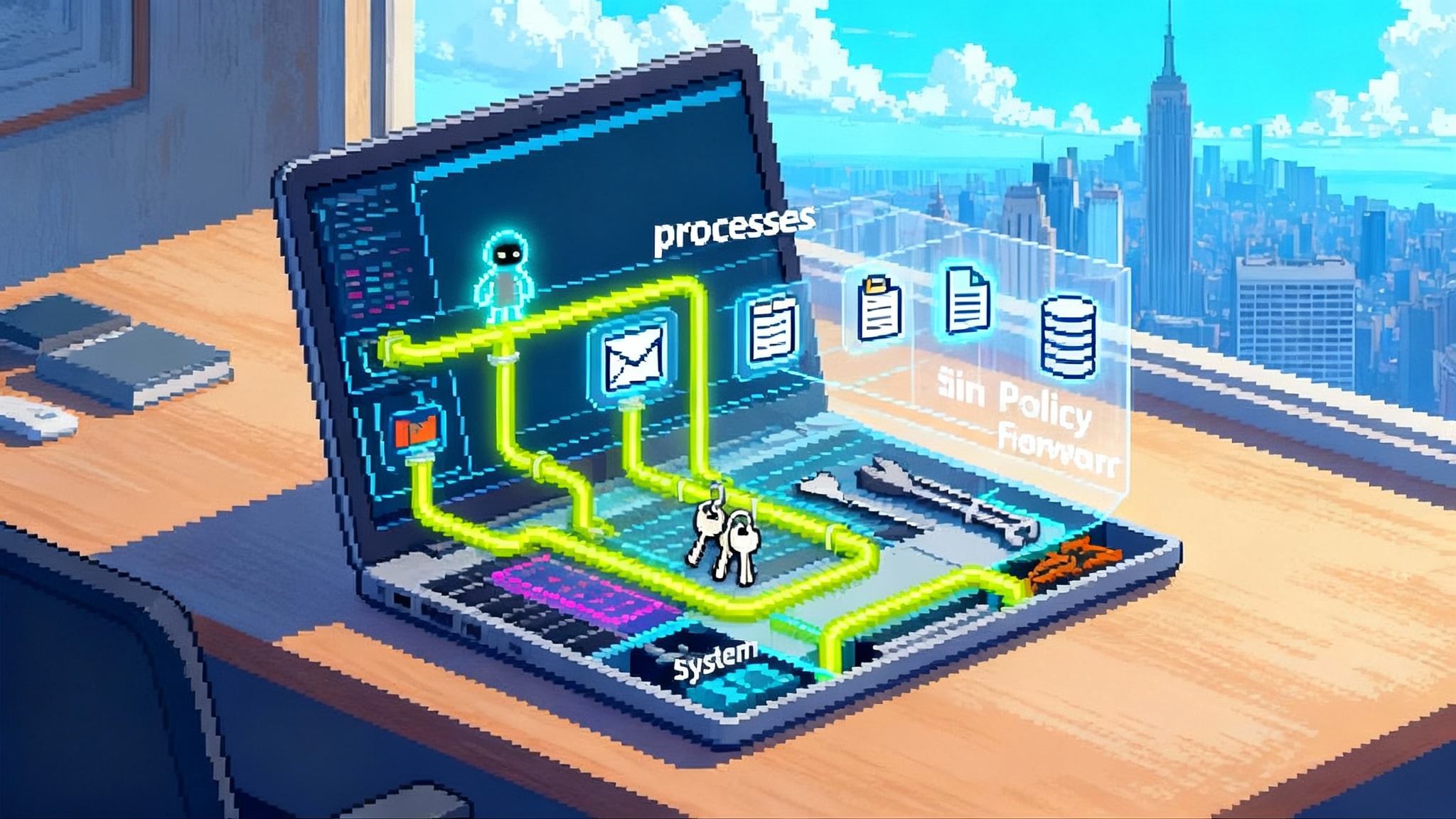

Think of the foundation as the operating system for mission and guardrails. It writes the rules for the public benefit corporation, appoints directors, and sets the public purpose that management must pursue alongside profits. The Microsoft agreement then functions like an application that runs on this operating system, codifying the conditions under which advanced systems can be commercialized. One crucial element is the AGI verification panel, an independent group empowered to validate extraordinary capability claims before the deal’s most valuable rights toggle on. Reuters notes that this panel acts as a hinge for rights and revenue flows, rather than a publicity badge.

This is not traditional compliance. It is corporate constitutionalism. Instead of waiting for a regulator to define AGI or police every release, the company and its largest partner pre-commit to a private certification process. The incentives attach directly to revenue, compute access, and distribution rights.

A useful metaphor is the relationship between building codes and private inspection. Cities set the baseline, but developers cannot collect rent until a private inspector signs off. Here, the foundation charter sets the purpose and oversight, while the panel’s sign-off acts like a certificate of occupancy for the frontier of capability.

This private governance layer also rhymes with the shift we explored in our piece on new AI market gatekeepers. When the power to say yes or no to scale moves from internal labs to verifiers that sit at arm’s length, audits begin to feel less like paperwork and more like market infrastructure.

Why the split unlocks capital and speeds deployment

- Public benefit corporation status widens the pool of potential investors. The previous capped-profit structure under a nonprofit parent constrained growth financing and made large secondary sales awkward. The new structure can issue equity and debt at scale, so large cloud and hardware commitments can be financed without constantly renegotiating around nonprofit constraints. Reuters reports that Microsoft retains a significant minority stake and long-term product rights while relinquishing some rights that previously narrowed OpenAI’s options, a design that invites fresh capital on more flexible terms.

- The foundation’s controlling role reassures cautious partners. Many enterprises do not want to underwrite a lab that could pivot overnight. A foundation that can hire and fire directors, demand benefit reports, and define the mission narrows the room for surprises from management. That lowers counterparty risk and speeds large deployments.

- The AGI verification panel makes model risk easier to price. Investors and strategic partners can write contracts with clearer contingencies: compute prepayments, minimum spend obligations, and milestone-based warrants can reference panel determinations rather than vague internal thresholds. This lowers the legal friction of closing very large deals quickly, and it fits the market’s drift from glossy demos toward verifiable thresholds.
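A clause that references a panel determination instead of an internal threshold can be modeled as a lookup on the panel’s recorded outcome. The following is a minimal hypothetical sketch. The determination states, the class and method names, the $10 billion base commitment, and the 1.5x step-up multiplier are all illustrative assumptions and are not drawn from the OpenAI and Microsoft agreement.

```python
from dataclasses import dataclass
from enum import Enum


class PanelDetermination(Enum):
    """Hypothetical outcomes an independent verification panel might record."""

    NOT_REVIEWED = "not_reviewed"
    CLAIM_REJECTED = "claim_rejected"
    CLAIM_VERIFIED = "claim_verified"


@dataclass(frozen=True)
class ComputeCommitment:
    """Hypothetical minimum-spend clause keyed to a panel determination."""

    base_minimum_spend: float  # assumed annual minimum spend before any verification
    step_up_multiplier: float  # assumed multiplier applied once the panel verifies a claim

    def minimum_spend(self, determination: PanelDetermination) -> float:
        """Apply the step-up only on a recorded panel verification, never on an internal claim alone."""
        if determination is PanelDetermination.CLAIM_VERIFIED:
            return self.base_minimum_spend * self.step_up_multiplier
        return self.base_minimum_spend


if __name__ == "__main__":
    clause = ComputeCommitment(base_minimum_spend=10e9, step_up_multiplier=1.5)
    for outcome in PanelDetermination:
        print(outcome.value, clause.minimum_spend(outcome))
```

The trigger is an enumerated outcome rather than a capability score. Both parties can therefore price the contingency without first agreeing on where an internal threshold sits.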

The accountability relocation

Public benefit corporations sit in a middle lane between traditional nonprofits and pure profit-seeking firms. Directors retain a duty to stockholders, but they are also required to pursue the specific public benefit chosen in the charter. That creates two critical enforcement channels:

- Board governance with mission locks. The foundation can control a majority of board seats or hold special veto rights, which lets it enforce the charter when profit-only incentives run hot. If a release plan or partner contract conflicts with the benefit purpose, directors must weigh the mission and can be held to account by the foundation, by qualifying stockholders, and in some jurisdictions by the state attorney general.

- Public benefit reporting. Regular benefit reports and third-party assessments create a record that can be compared against stated safety and societal goals. These disclosures become the performance scoreboard for civil society and customers. If the reports read like decoration, the legitimacy cost climbs, and so does litigation risk.

In effect, accountability shifts from regulators who act after the fact to boards and charters that act in advance. The Microsoft agreement strengthens that shift by baking a private certification step into commercialization itself, according to Reuters reporting on the restructure.

What rivals now have to decide

- Anthropic is already a public benefit corporation, with a Long-Term Benefit Trust designed to elect a majority of its board. The OpenAI move pressures Anthropic to consider whether foundation control would give it more financing flexibility without diluting its safety governance. If it keeps the trust structure, expect it to copy the idea of verification triggers in large commercial contracts.

- Google DeepMind lives inside a public company. Alphabet can still adopt charter-like commitments by inserting safety triggers into cloud and advertising contracts. For example, large distribution agreements for Gemini features could condition rollout to younger users on specific eval thresholds reviewed by an independent committee.

- Meta and the open model ecosystem face a different question: if you do not control downstream use, what is the equivalent of a verification panel? One answer is a panel that certifies releases against a defined capability schema before weights go public. It would be combined with a post-release incident escrow, where a percentage of cloud credits and partnership cash flows is held back until safety metrics stabilize. For deeper context on forks and distribution, see our piece on who governs forkable cognition.

- Mistral, xAI, and other challengers must choose whether to trade some autonomy for cheaper capital and broader distribution. Verification panels and benefit charters can be pitched to investors as risk reducers that lower the cost of capital. If a rival can raise money two percentage points more cheaply because its structure carries a safety premium, you are on the back foot.
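The post-release incident escrow proposed for open-weight releases can be sketched as a holdback rule on partner cash flows. This is a minimal illustration under assumed parameters. The class name, the 20% holdback rate, the incident-rate threshold, and the three-period stabilization window are all hypothetical and are not terms from any real agreement.

```python
from dataclasses import dataclass


@dataclass
class IncidentEscrow:
    """Hypothetical holdback of partner cash flows until safety metrics stabilize."""

    holdback_rate: float = 0.20        # assumed share of each payment withheld
    max_incident_rate: float = 0.01    # assumed acceptable incident rate per period
    stable_periods_required: int = 3   # assumed consecutive compliant periods before release
    held: float = 0.0
    released: float = 0.0
    stable_streak: int = 0

    def receive_payment(self, amount: float) -> float:
        """Withhold the escrow share and return the portion paid out immediately."""
        withheld = amount * self.holdback_rate
        self.held += withheld
        return amount - withheld

    def report_period(self, incident_rate: float) -> float:
        """Record one reporting period and return any amount released from escrow."""
        if incident_rate <= self.max_incident_rate:
            self.stable_streak += 1
        else:
            self.stable_streak = 0  # any breach restarts the stabilization clock
        if self.stable_streak >= self.stable_periods_required and self.held > 0:
            payout, self.held = self.held, 0.0
            self.released += payout
            return payout
        return 0.0


if __name__ == "__main__":
    escrow = IncidentEscrow()
    paid_now = escrow.receive_payment(1_000_000)
    # The breach in the second period resets the streak, so release waits for three clean periods.
    for rate in [0.005, 0.02, 0.004, 0.003, 0.002]:
        escrow.report_period(rate)
    print(paid_now, escrow.held, escrow.released)
```

Resetting the streak on any breach makes this escrow deliberately conservative. A real contract might instead use a rolling average of incident rates.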

How investors should read the new structure

Investors now have a clearer term sheet menu:

- Milestone finance tied to evaluation gates. Debt or structured equity can be released in tranches that unlock when pre-agreed external evals are passed. Combine this with covenants that escalate safety spending when model capability grows faster than expected.

- Compute supply agreements as collateral. Long-dated cloud commitments can secure financing if they reference verification triggers. If the panel certifies AGI-like capabilities, a step-up clause can increase minimum compute spend, which raises the value of receivables. For a view of how power and supply shape leverage, revisit our piece arguing that power becomes the AI platform moat.

- Golden share and purpose vetoes. Minority investors worried about mission drift can ask the foundation to hold a golden share that vetoes changes to the public benefit. Alternatively, investors can require a two-key release process where both the board and an independent ethics committee approve frontier launches.

- Incident-linked economics. Tie revenue sharing and cloud credits to incident rates reported in a public safety dashboard. If real-world misuse rises, economics shift toward safety remediation and away from expansion until metrics recover.
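Two of these term-sheet ideas, tranches gated on external evals and incident-linked economics, reduce to simple rules that a deal model could encode. The sketch below is illustrative only. The eval names, tranche sizes, threshold, function names, and the linear shift from expansion to remediation are hypothetical assumptions and do not come from any disclosed agreement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tranche:
    """A financing tranche that unlocks only when every listed external eval has passed."""

    amount: float
    required_evals: frozenset[str]


def releasable_amount(tranches: list[Tranche], passed_evals: set[str]) -> float:
    """Sum the tranches whose required evals are a subset of the evals passed so far."""
    return sum(t.amount for t in tranches if t.required_evals <= passed_evals)


def split_revenue_share(pool: float, incident_rate: float, threshold: float) -> tuple[float, float]:
    """Split a revenue-share pool into (expansion, remediation) amounts.

    At or below the threshold the whole pool funds expansion. Above it, the
    remediation portion grows linearly with the relative excess over the
    threshold and is capped at the full pool (an assumed schedule).
    """
    if incident_rate <= threshold:
        return pool, 0.0
    excess = (incident_rate - threshold) / threshold
    remediation = pool * min(1.0, excess)
    return pool - remediation, remediation


if __name__ == "__main__":
    tranches = [
        Tranche(50_000_000, frozenset({"bio_misuse", "cyber_uplift"})),
        Tranche(100_000_000, frozenset({"bio_misuse", "cyber_uplift", "autonomy"})),
    ]
    print(releasable_amount(tranches, {"bio_misuse", "cyber_uplift"}))
    print(split_revenue_share(10_000_000, incident_rate=0.015, threshold=0.01))
```

Expressing each tranche as a set of required evals keeps the conditions precedent explicit. Adding a new gate then means adding a name to a set, not renegotiating a formula.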

The European overlay: GPAI obligations meet private alignment

Since August 2, 2025, providers of general-purpose AI models placed on the European Union market must satisfy new obligations. These include technical documentation and a copyright policy. Models classified as posing systemic risk face additional duties, including risk management and serious-incident reporting. The Commission has also published guidance and a voluntary code of practice that providers can use to demonstrate compliance. These public obligations sit neatly alongside private charters and verification panels. See the Commission’s overview of General-purpose AI obligations under the AI Act.

Here is the practical interaction:

- If your corporate charter requires independent evaluation before major capability jumps, your EU incident reporting and systemic risk assessments become easier to evidence. The panel’s methods and results can be appended to your model’s technical documentation.

- If your Microsoft-style agreement ties commercial rights to a verification panel, your European partners can treat that as a compliance anchor. It does not replace the law, but it can reduce their secondhand liability by showing that significant releases are pre-vetted against known risks.

- The voluntary code of practice can map onto your board calendar. For example, make the benefit report coincide with the code’s transparency chapter, and require the verification panel to review the copyright chapter’s training data disclosures before approval.

Companies that treat the EU regime as paperwork will overspend. Those that use charters and panels to hardwire compliance into release and revenue gates will save time and reduce legal ambiguity.

The near-term design questions that now matter

1) Who certifies AGI, and by what test

- Option A: A standing, independent nonprofit lab with rotating membership, funded by a levy on large cloud contracts. It publishes test suites, cross-lab benchmarks, and incident summaries. To avoid capture, members recuse themselves when their own lab is under review, and the levy is distributed in proportion to time spent on cross-checks.

- Option B: An accredited network of evaluators, similar to financial auditors. The foundation or a partner picks from a roster. Evaluators are licensed against a public methodology and can lose accreditation if they miss material risks. Conflict-of-interest rules would mirror audit rotation and partner cooling-off periods.

- Option C: A treaty-like recognition system. If Model A passes at Evaluator 1 in the United States under Method Alpha, the EU AI Office recognizes the result if Alpha matches its risk schema. This reduces duplicative tests and creates a competitive market for better methodologies.

Key principle: the panel should certify operational claims that matter to contracts, not abstract status debates. It should gate access to distribution, safety budgets, and compute scale, not confer bragging rights.
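Option C’s recognition step can be read as a coverage check. A result obtained under one method is accepted only for the risk categories that method covers, and only the uncovered categories need fresh testing. The category names, method coverage, and function names below are hypothetical placeholders. Neither the EU AI Office nor any evaluator has published a schema in this form.

```python
# Hypothetical risk schema required by the recognizing authority.
REQUIRED_CATEGORIES = frozenset({"cbrn", "cyber_offense", "loss_of_control", "manipulation"})

# Hypothetical coverage of two evaluation methods.
METHOD_COVERAGE: dict[str, frozenset[str]] = {
    "Alpha": frozenset({"cbrn", "cyber_offense", "loss_of_control", "manipulation", "privacy"}),
    "Beta": frozenset({"cbrn", "cyber_offense"}),
}


def categories_to_retest(method: str, required: frozenset[str] = REQUIRED_CATEGORIES) -> list[str]:
    """Return the required categories the method does not cover, in sorted order."""
    coverage = METHOD_COVERAGE.get(method, frozenset())
    return sorted(required - coverage)


def is_recognized(method: str, required: frozenset[str] = REQUIRED_CATEGORIES) -> bool:
    """A result is fully recognized only when no required category is left uncovered."""
    return not categories_to_retest(method, required)


if __name__ == "__main__":
    for m in ("Alpha", "Beta", "Gamma"):
        print(m, is_recognized(m), categories_to_retest(m))
```

Partial recognition is where the savings come from. A result under Method Beta still spares the cross-border retest of the two categories it already covers.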

2) How are public-benefit mandates enforced in practice

- Build the mandate into director pay. Tie long-term compensation to safety metrics and social objectives, not only revenue. Metrics might include adversarial robustness improvements, external red-team coverage, and reductions in measured misuse.

- Require an annual negative release decision. At least once per year, the board must publicly explain a material capability it chose not to ship, the evidence behind the decision, and what would change that conclusion. The point is to show that the benefit mandate actually bites.

- Create a user ombuds office with power to escalate noncompliance directly to the foundation board. Give it a budget and data access. Measure its independence by the number of times it triggers board review.
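The first proposal ties director compensation to safety metrics and not only revenue, which amounts to a weighted scorecard. In this sketch, the metric names, the weights, and the normalization of each metric to a score between 0 and 1 are all hypothetical. A real plan would need independently measured inputs.

```python
# Hypothetical scorecard weights; safety-related metrics carry 60% of the total.
WEIGHTS: dict[str, float] = {
    "revenue_growth": 0.4,
    "adversarial_robustness": 0.2,
    "external_red_team_coverage": 0.2,
    "measured_misuse_reduction": 0.2,
}


def award_fraction(scores: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Return the fraction of the target long-term award earned, from normalized scores in [0, 1]."""
    missing = weights.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    for name, value in scores.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"score for {name} must be in [0, 1], got {value}")
    return sum(weight * scores[name] for name, weight in weights.items())


if __name__ == "__main__":
    # Maximal revenue with no safety progress earns only the revenue weight.
    revenue_only = {name: 0.0 for name in WEIGHTS} | {"revenue_growth": 1.0}
    print(award_fraction(revenue_only))
```

With these assumed weights, revenue alone can never earn more than 40% of the target award. That cap is how the mandate bites in the pay plan.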

3) How can capital commitments encode safety without throttling progress

- Use conditions precedent, not blanket freezes. For example, a cloud prepayment unlocks when an external eval demonstrates that a new reasoning capability meets misuse and robustness thresholds on a pre-registered test suite.

- Step up safety spend with capability, not revenue. For each order of magnitude increase in training compute, automatically allocate an additional fixed percentage of operating expense to red teaming, sandboxing, and third-party evals.

- Design a circuit breaker that respects customers. If the panel flags an unacceptable risk, pause high-risk features for new users while maintaining critical enterprise continuity. Offer credits to affected customers and publish a remediation roadmap with target dates.
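The step-up rule and the circuit breaker can both be stated precisely. The sketch rests on several hypothetical parameters that do not come from the source:

- a 5% base safety share of operating expense;
- 2 additional percentage points for each tenfold increase in training compute over a 10^22 FLOP reference run;
- a 50% ceiling on that share;
- a breaker that pauses panel-flagged, high-risk features for new users and lets only existing, business-critical enterprise workloads continue.

```python
def orders_of_magnitude(compute_flop: int, reference_flop: int) -> int:
    """Count whole tenfold increases of compute over the reference, using exact integer math."""
    if compute_flop <= 0 or reference_flop <= 0:
        raise ValueError("compute values must be positive")
    orders = 0
    threshold = reference_flop * 10
    while compute_flop >= threshold:
        orders += 1
        threshold *= 10
    return orders


def safety_opex_share(compute_flop: int, reference_flop: int = 10**22,
                      base_pct: float = 5.0, step_pct: float = 2.0,
                      cap_pct: float = 50.0) -> float:
    """Percentage of operating expense reserved for red teaming, sandboxing, and third-party evals."""
    share = base_pct + step_pct * orders_of_magnitude(compute_flop, reference_flop)
    return min(share, cap_pct)


def feature_available(high_risk: bool, panel_flagged: bool,
                      new_user: bool, enterprise_critical: bool) -> bool:
    """Circuit breaker: pause flagged high-risk features except for existing critical enterprise use."""
    if not (high_risk and panel_flagged):
        return True  # breaker not tripped for this feature
    return enterprise_critical and not new_user


if __name__ == "__main__":
    for flop in (10**22, 10**24, 10**25):
        print(flop, safety_opex_share(flop))
    print(feature_available(True, True, new_user=True, enterprise_critical=True))
```

Counting orders of magnitude with integers avoids floating-point edge cases at exact powers of ten. The cap keeps the schedule from consuming the whole budget at extreme scales.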

What to watch next

- The composition and governance of the AGI verification panel. Independence will hinge on who appoints members, how conflicts are managed, and whether dissenting opinions are published. Look for fixed terms, budget independence, and transparent methodologies.

- Whether other labs copy the contract gate. We should see at least one more hyperscaler-lab agreement that references external evals as revenue triggers. Watch for language that ties distribution rights to panel sign-offs, not just to vague safety aspirations.

- How the EU AI Office treats private certification. If the Office starts to accept panel outputs as partial evidence for systemic risk duties, expect a wave of convergence on a few trusted eval frameworks.

- Board behavior at the foundation level. Early elections, special committees on safety, and public benefit vetoes will reveal whether the foundation is more than a brand wrapper.

The deeper signal

OpenAI’s October 28 restructuring and refreshed Microsoft agreement show that alignment is no longer only a technical discipline. It is also a corporate design problem, solved with charters, covenants, and third-party panels that can move money and throttle launches. That design unlocks unprecedented capital and can accelerate deployment. It also relocates accountability from distant regulators to boards and benefit charters that sit much closer to the decisions. If this model spreads, frontier AI will be governed by a blend of public rules and private constitutions. The challenge now is to make those constitutions real, with teeth, and to ensure the panels certify what matters most: whether society is actually safer when the next capability ships.