Once the Weights Are Out: Who Governs Forkable Cognition?

Open weights turned advanced models into forkable engines that anyone can run, tune, and ship. This piece maps the new power centers, shows how safety moves to the edge, and outlines a practical runtime and compliance playbook for 2025.

2025's breakpoint: when weights left the lab

In 2025, a quiet but irreversible shift took hold. The industry stopped debating whether open models would matter and began living with the consequences of their spread. OpenAI publicly signaled an open-weight release for the summer, then delayed it twice as anticipation surged among developers. The signal alone changed the room: it acknowledged that open weights were not a sideshow but a central tool. The first shoe dropped with that admission, captured early on in a Wired explainer on OpenAI's open-weight plan. The second shoe was the delay itself, a reminder that once weights leave a lab the decision cannot be walked back, which is exactly why labs hesitate before letting them go.

While OpenAI hesitated, others shipped. Meta released Llama 4 as open weights, seeding millions of developer laptops and edge servers with new capabilities and fresh obligations. For a crisp snapshot of the moment, see Reuters on Meta releasing Llama 4 as open weights. DeepSeek doubled down on openness with permissive licensing and a steady drumbeat of releases that invited auditing, distillation, and remixing. Alibaba planted a clear flag by licensing its Qwen3 models, including Qwen3-Next, under Apache 2.0, which let enterprises adopt them without unusual legal overhead.

If you work with models, you felt the ground move. When a company publishes weights, it publishes a capability that anyone can fork, audit, or recombine. In that world, centralized policy loses leverage relative to distributed engineering. Safety becomes an edge problem. Compliance becomes ecosystem design. Competitive moats migrate from model access to deployment excellence, data flywheels, and distribution power.

This piece takes a pragmatic, slightly accelerationist stance: assume propagation, not prohibition. The question is not whether advanced cognition will spread. It already has. The question is how to operate responsibly when cognition is forkable.

Forkable cognition, in plain terms

Think of a model as an engine block. An API is like renting time on someone else's dyno. Open weights are more like owning the engine blueprint and a crate motor. You can drop it into your car, shim it, retune it, and bolt on your own safety gear. You also inherit the responsibilities of fuel quality, brakes, and roll cage.

Open weights are not the same as open source in the classic sense. In most cases you get the trained parameters, not the entire training data or full build scripts. Still, the practical effect is massive. Engineers can:

- Run models locally to keep sensitive data in place.

- Fine-tune rapidly with domain data.

- Inspect and profile behavior under real workloads.

- Blend models, distill, or add reasoning scaffolds.

The reason 2025 feels like a breakpoint is that the quality floor of open weights rose enough to meet mainstream needs. Meta put powerful models into the commons. DeepSeek's permissive licensing made remix culture unavoidable. Alibaba's Apache 2.0 choices made legal teams comfortable. This is not theoretical. It is happening at scale across consumer apps, enterprise systems, and research labs.

To ground the claim about mainstream reach, remember that Llama 4 did not just appear as a code repository. It also arrived prewired into products used by billions, a signal that open weights can travel through the largest distribution channels on Earth. Distribution and openness are no longer in tension. They now reinforce each other.

What changes when weights are everywhere

The obvious change is access. The deeper change is where control lives.

- Control moves from platform policy to deployment practice. If you can run a capable model offline, the most effective controls are the ones that ship with the deployment. This elevates runtime safety, hardware governance, and post-deployment observability.

- Compliance shifts from point-in-time checks to supply chain discipline. You are no longer vetting a single cloud interface. You are vetting a model artifact, the adapters attached to it, the tools it can call, and the logs it emits. That shift echoes the rise of the new AI market gatekeepers, where audits and runtime proofs matter more than headline benchmarks.

- Competition moves toward operating leverage. When core weights are public, defensibility depends on how well you deploy, where you can distribute, and what private or synthetic data advantage you can compound over time.

Safety becomes edge engineering

A world of forkable cognition demands that safety become a property of the runtime, not just the provider. That means modular safety that travels with the model and keeps working when the internet is off.

Here is a concrete, shippable stack you can assemble today:

- Guarded tool use. Route all external actions through a broker that enforces allowlists, rate limits, and structured approvals. For example, file-system writes, database mutations, and network posts should be distinct capabilities, each with its own consent and logging.

- Layered input filters. Combine lightweight lexical filters with compact classifiers and a local retrieval layer of banned prompts, known exploits, and jailbreak patterns. Make the filters hot-swappable so communities can update them without waiting on a new model.

- Risk adapters. Attach small, trainable adapters that suppress or reshape responses in high-risk domains such as chemical synthesis, bio protocols, or social manipulation. Because a released base model cannot be recalled or patched in place, adapters give you a way to evolve safety behavior without retraining the core.

- Execution sandboxes. When the model writes code or calls tools, run those actions in restricted containers with time, memory, and network budgets. Log every system call. Treat code generation as you would untrusted plugins.

- Watermark actions, not words. Content watermarking is brittle once people can fine-tune the model. Instead, watermark the action trail: sign tool calls and attach provenance to every state change. If something goes wrong, you can prove who did what, when, and with which model build.

- Observability at the edge. Stream structured traces of prompts, tool calls, and outcome flags into a local buffer, then sync to a central store when online. Build playbooks that trigger on specific patterns, such as repeated failed jailbreaks or attempts to access restricted tools. This playbook mindset aligns with how playbooks become enterprise memory.
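To make the layered input filter concrete, here is a minimal Python sketch of its first layer, assuming patterns are distributed as a small JSON document. The `block_patterns` key, the `LayeredFilter` class, and the `length_layer` placeholder are all hypothetical. Reloading the JSON swaps the lexical rules without touching the model; a compact classifier or a retrieval lookup would slot in as another callable in `layers`.

```python
import json
import re
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# A pluggable layer inspects a prompt and returns a reason to block, or None.
Layer = Callable[[str], Optional[str]]


@dataclass
class LayeredFilter:
    """Hypothetical layered input filter: cheap lexical patterns first,
    then any extra layers (for example a small local classifier)."""
    patterns: List[re.Pattern] = field(default_factory=list)
    layers: List[Layer] = field(default_factory=list)

    def load_patterns(self, spec_json: str) -> None:
        # Patterns are data, not weights: reloading this JSON hot-swaps
        # the lexical layer without retraining or redeploying the model.
        spec = json.loads(spec_json)
        self.patterns = [re.compile(p, re.IGNORECASE) for p in spec["block_patterns"]]

    def check(self, prompt: str) -> List[str]:
        """Return every reason the prompt was flagged; an empty list means pass."""
        reasons = [f"pattern:{p.pattern}" for p in self.patterns if p.search(prompt)]
        for layer in self.layers:
            reason = layer(prompt)
            if reason is not None:
                reasons.append(reason)
        return reasons


def length_layer(prompt: str) -> Optional[str]:
    # Placeholder where a compact classifier would go; it only flags
    # prompts longer than an arbitrary 4,000 characters.
    return "too_long" if len(prompt) > 4000 else None


filt = LayeredFilter(layers=[length_layer])
filt.load_patterns(json.dumps({"block_patterns": [r"ignore (all|previous) instructions"]}))
print(filt.check("Please ignore previous instructions."))  # ['pattern:ignore (all|previous) instructions']
print(filt.check("Summarize this contract."))              # []
```

Keeping the rules in data rather than code is the design choice that matters here: a shared exchange can publish a new pattern file, and every deployment picks it up on the next reload.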

For organizations that want a one-page plan, implement the following minimum viable controls in any environment that runs open weights:

1) Safety broker in front of tools and data.

2) Local red team pack that exercises common jailbreaks weekly.

3) Model manifest with license, version, hash, adapter list, and approved capabilities.

4) Signed audit logs shipped off host.
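The first control in that list, a safety broker in front of tools and data, fits in a few dozen lines of Python. Treat this as an illustration under stated assumptions rather than a reference design: the capability names (`fs.write`, `db.mutate`, `net.post`), the `SafetyBroker` and `Capability` classes, and the sliding-window rate limit are all hypothetical choices. The shape is what matters: the model asks for a named capability, and the broker enforces the allowlist, the rate budget, and consent before anything runs, logging every outcome.

```python
import time
from collections import deque
from dataclasses import dataclass, field
from typing import Any, Callable, Deque, Dict, List, NoReturn


@dataclass
class Capability:
    name: str                     # e.g. "fs.write", "db.mutate", "net.post"
    max_calls: int                # calls allowed per window
    window_s: float               # window length in seconds
    needs_approval: bool = False  # require explicit consent before running


class PolicyError(Exception):
    """Raised when the broker refuses a tool call."""


@dataclass
class SafetyBroker:
    """Hypothetical broker: the model never calls tools directly. Every
    request names a capability and is checked against policy first."""
    capabilities: Dict[str, Capability]
    approve: Callable[[str, dict], bool]      # consent hook (human or policy)
    clock: Callable[[], float] = time.monotonic
    log: List[dict] = field(default_factory=list)
    _recent: Dict[str, Deque[float]] = field(default_factory=dict, init=False)

    def call(self, cap_name: str, args: dict, run: Callable[[dict], Any]) -> Any:
        cap = self.capabilities.get(cap_name)
        if cap is None:                       # allowlist: unknown means denied
            self._deny(cap_name, args, "not_allowlisted")
        now = self.clock()
        recent = self._recent.setdefault(cap_name, deque())
        while recent and now - recent[0] >= cap.window_s:
            recent.popleft()                  # forget calls outside the window
        if len(recent) >= cap.max_calls:
            self._deny(cap_name, args, "rate_limited")
        if cap.needs_approval and not self.approve(cap_name, args):
            self._deny(cap_name, args, "not_approved")
        recent.append(now)                    # count the call before running it
        result = run(args)
        self._record(cap_name, args, "allowed")
        return result

    def _deny(self, cap_name: str, args: dict, reason: str) -> NoReturn:
        self._record(cap_name, args, f"denied:{reason}")
        raise PolicyError(f"{cap_name}: {reason}")

    def _record(self, cap_name: str, args: dict, outcome: str) -> None:
        self.log.append({"t": self.clock(), "capability": cap_name,
                         "args": args, "outcome": outcome})


broker = SafetyBroker(
    capabilities={
        "fs.write": Capability("fs.write", max_calls=10, window_s=60),
        "net.post": Capability("net.post", max_calls=2, window_s=60, needs_approval=True),
    },
    approve=lambda cap, args: False,  # deny-by-default consent hook for the demo
)
print(broker.call("fs.write", {"path": "notes.txt"}, lambda a: "written"))  # written
```

Denials raise `PolicyError` and still leave a log entry, so refused attempts show up in the same trail as successful calls, which is exactly what the observability playbooks need to trigger on.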

Compliance becomes ecosystem design

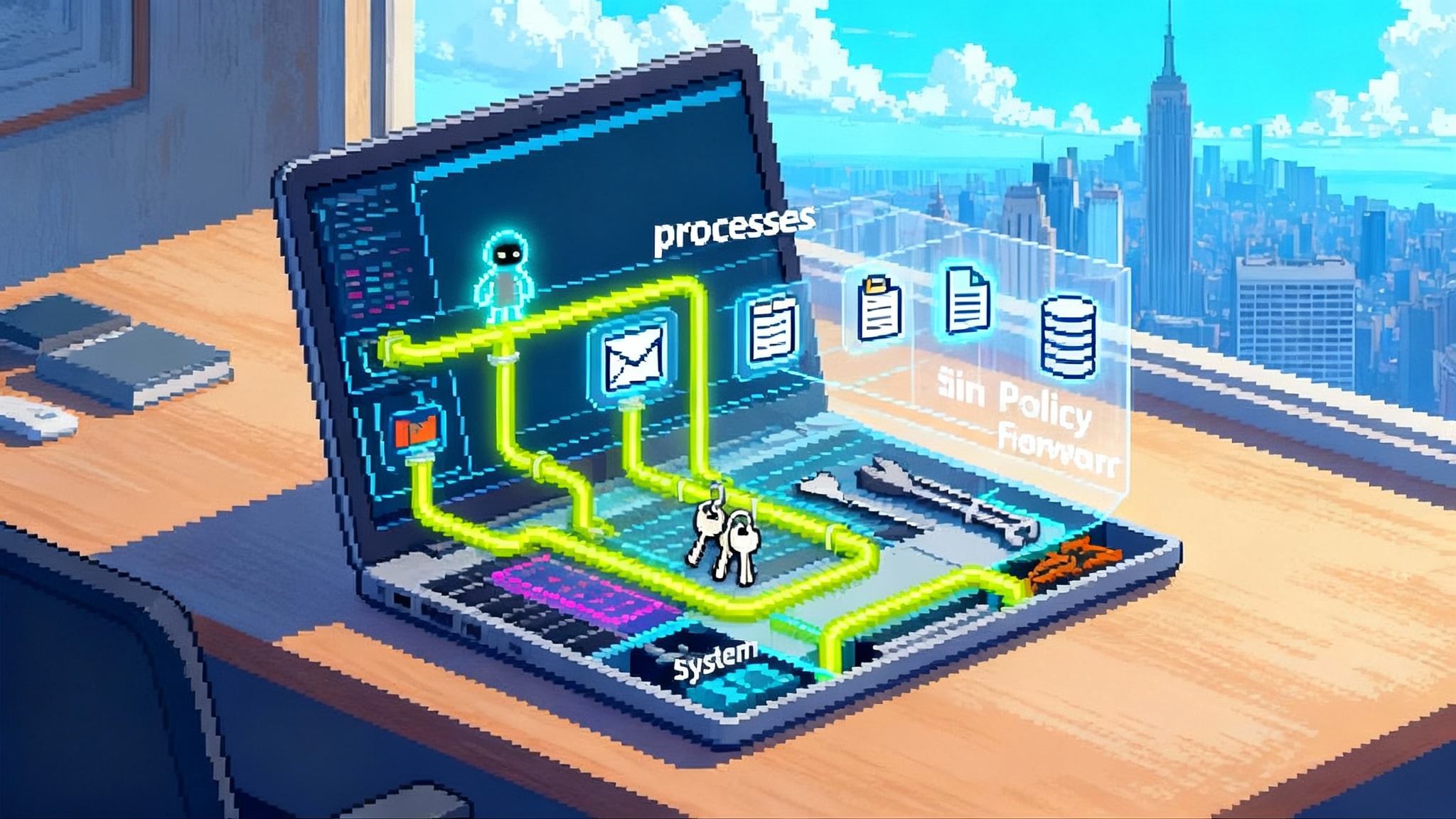

Traditional compliance imagines a single vendor you can certify. Open weights distribute responsibility across a supply chain of model files, adapters, tool plugins, and orchestrators. That requires composable compliance.

- Treat models like packages. Every model artifact should ship with a manifest that includes cryptographic hashes, license, training disclosure, known hazards, and operational limits. Store manifests in a registry that supports pinning and rollback.

- Make the governance surface part of your software. Provide toggles for dangerous capabilities and publish their default state. If your model can call a browser or a shell, that is not a footnote. It is a central risk surface that needs controls switched on by default and tamper-evident logs.

- Adopt shared red teaming. Join or form an exchange where participants contribute new jailbreaks, high risk prompts, and evaluation datasets. Update your filters and adapters from that exchange on a regular cadence.

- Use licenses strategically, but do not rely on them for runtime safety. Licenses set expectations and move friction to where you want it. Apache 2.0, community licenses, and custom terms all have a place. Just remember that once weights are out, license compliance is civil enforcement after the fact, not a technical brake before the fact.
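Treating models like packages can start small. The Python sketch below assumes a hypothetical manifest schema (`ModelManifest`) and an in-memory `ManifestRegistry`; a real registry would persist manifests and sign them. It shows the three mechanics the bullet calls for: a SHA-256 hash that must match the weights file before loading, pinning a specific version, and rolling back to the previous pin.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class ModelManifest:
    """Hypothetical manifest schema; field names are illustrative."""
    name: str
    version: str
    sha256: str                              # hex digest of the weights file
    license: str                             # e.g. "Apache-2.0"
    training_disclosure: str
    known_hazards: Tuple[str, ...] = ()
    adapters: Tuple[str, ...] = ()           # ids or hashes of safety adapters
    approved_capabilities: Tuple[str, ...] = ()


def sha256_file(path: str, chunk_size: int = 1 << 20) -> str:
    # Stream in chunks so multi-gigabyte weights never sit fully in memory.
    h = hashlib.sha256()
    with open(path, "rb") as fh:
        for block in iter(lambda: fh.read(chunk_size), b""):
            h.update(block)
    return h.hexdigest()


@dataclass
class ManifestRegistry:
    """In-memory registry: every published version plus a pin history."""
    published: Dict[str, Dict[str, ModelManifest]] = field(default_factory=dict)
    pins: Dict[str, List[str]] = field(default_factory=dict)

    def publish(self, m: ModelManifest) -> None:
        self.published.setdefault(m.name, {})[m.version] = m

    def pin(self, name: str, version: str) -> None:
        if version not in self.published.get(name, {}):
            raise KeyError(f"{name}@{version} was never published")
        self.pins.setdefault(name, []).append(version)

    def rollback(self, name: str) -> str:
        # Drop the current pin and return the one it replaced.
        history = self.pins.get(name, [])
        if len(history) < 2:
            raise ValueError(f"no earlier pin for {name}")
        history.pop()
        return history[-1]

    def verify(self, name: str, weights_path: str) -> ModelManifest:
        # Refuse weights whose hash differs from the pinned manifest.
        m = self.published[name][self.pins[name][-1]]
        if sha256_file(weights_path) != m.sha256:
            raise ValueError(f"hash mismatch for {name}@{m.version}")
        return m
```

Calling `verify` at load time turns the manifest from documentation into a gate: a swapped or corrupted weights file fails before it ever serves a request.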

Regulators can meet this moment by focusing on outcomes and interfaces:

- Require signed manifests and action level provenance for sensitive deployments. You do not need to ban open weights to demand traceability.

- Encourage standard safe inference runtimes that implement allowlists, budget controls, and logging. Make certification about the runtime and the operating procedures, not the underlying model's brand name. Efforts to standardize agent interfaces echo the momentum of MCP turning AI into an OS layer.

- Publish domain-specific hazard catalogs, then test for mitigations. A hospital does not need the same safety kit as a game studio. Give each sector a target list and a test harness.
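A sector test harness can be as simple as running each catalog entry through the model and scoring refusals per hazard. In this Python sketch, `HazardCase`, the per-sector targets, and the `is_refusal` check are assumptions; prefix-based refusal detection is a crude stand-in for a proper grader, and the prompts are placeholders rather than real hazard content. Comparing two runs also flags regressions between red team cycles.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class HazardCase:
    hazard_id: str   # entry in a sector's hazard catalog, e.g. "persuasion"
    prompt: str      # test prompt (placeholders here, not real hazard content)


def refusal_rates(model: Callable[[str], str], catalog: List[HazardCase],
                  is_refusal: Callable[[str], bool]) -> Dict[str, float]:
    """Fraction of prompts per hazard that the model declined."""
    totals: Dict[str, int] = {}
    refused: Dict[str, int] = {}
    for case in catalog:
        totals[case.hazard_id] = totals.get(case.hazard_id, 0) + 1
        if is_refusal(model(case.prompt)):
            refused[case.hazard_id] = refused.get(case.hazard_id, 0) + 1
    return {h: refused.get(h, 0) / n for h, n in totals.items()}


def below_target(rates: Dict[str, float], targets: Dict[str, float]) -> List[str]:
    # A hazard with no test cases counts as 0.0: missing coverage is a failure.
    return sorted(h for h, t in targets.items() if rates.get(h, 0.0) < t)


def regressions(current: Dict[str, float], previous: Dict[str, float],
                tolerance: float = 0.0) -> List[str]:
    # Hazards whose refusal rate dropped since the last run.
    return sorted(h for h, r in current.items()
                  if h in previous and r < previous[h] - tolerance)


def toy_model(prompt: str) -> str:
    # Stand-in "model" that refuses only the chemistry placeholders.
    return "I can't help with that." if prompt.startswith("chem") else "Sure."


catalog = [HazardCase("chem", "chem-1"), HazardCase("chem", "chem-2"),
           HazardCase("persuasion", "pers-1")]
rates = refusal_rates(toy_model, catalog, lambda r: r.startswith("I can't"))
hospital_targets = {"chem": 0.99, "persuasion": 0.9}   # hypothetical sector targets
print(below_target(rates, hospital_targets))           # ['persuasion']
```

Because targets are just a dictionary per sector, a hospital and a game studio can share the harness while holding the model to different bars.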

Moats migrate: access gives way to deployment, data, and distribution

When everyone can download similar weights, winning means:

- Deployment mastery. Get more capability per watt and per dollar. Ship quantization that holds quality, caching that reduces token costs, and compilers that make multimodal models feel snappy on edge devices. If your model boots fast, streams gracefully, and survives spotty networks, you will win users.

- Data flywheels with consent. Build private corpora that your customers opt into, then fine-tune adapters that capture their domain language, tools, and edge cases. The moat is not mysterious. It is a compounding loop of permissioned data improving outcomes.

- Distribution everywhere. Put the model in the places where people already work and talk. Messaging apps, office suites, terminals, design tools, and field devices. Llama 4 rode WhatsApp and Instagram. Qwen's Apache 2.0 variants rode enterprise channels and even mobile runtimes. The weights are table stakes. The distribution is the differentiator.

If you are a founder deciding where to invest, use a simple rule: for every dollar spent training a base model, spend at least as much hardening the runtime and twice as much on distribution and developer experience.
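Spelled out, and reading "twice as much" as twice the training spend (our interpretation of the rule), let T be base-model training, R runtime hardening, D distribution and developer experience, and B the total budget:

```latex
R \ge T, \quad D \ge 2T
\;\Longrightarrow\;
B = T + R + D \ge 4T
\;\Longrightarrow\;
\frac{T}{B} \le \frac{1}{4}
```

Under that reading, training takes at most a quarter of the budget. At the boundary, a budget of 10 million splits into 2.5 million for training, 2.5 million for the runtime, and 5 million for distribution.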

Case studies in the new balance of power

- Meta's Llama 4. The headline is not only that Meta released open weights. It is how they traveled. Llama 4 upgrades landed in Meta AI assistants across the company's apps while the same weights propagated to developer platforms. That bridge from consumer scale to developer hacking accelerated the pace at which safety practices, license debates, and performance questions had to be answered in the open.

- DeepSeek's openness as a force multiplier. Releasing under permissive terms created a rapid culture of distillation, auditing, and benchmarking. Critics argue that weights without full datasets are not truly open. Practically, the effect was dramatic. A global community started treating reasoning models as forkable infrastructure rather than a black box service. It also put price pressure on everyone else by showing that competitive cognition could be trained and run far below the assumed cost curve.

- Alibaba's Apache 2.0 posture. By licensing Qwen3-Next and related models under a standard permissive license, Alibaba made it painless for enterprises to treat these models like any other open component. That does not magically solve safety, but it smooths procurement and legal review, which is often the real barrier to adoption.

Each example shows the same thing. Once weights are out, governance moves closer to the people who do the deploying. The world stops waiting for a single platform's policy update and starts shipping adapters, manifests, and safe runtimes.

Responsibility, once cognition can be cloned

What does responsible look like when anyone can copy, audit, and recombine the engine block?

- Responsibility is traceable action. If your model can act, every action should be signed, rate limited, and explainable. The audit log is your immune system.

- Responsibility is opt-in learning. If you collect data for fine-tuning or retrieval, treat consent as a product feature. Clear knobs, clear value, and clear exits beat a fuzzy privacy policy.

- Responsibility is hazard-first design. Do not debate abstract risk. Enumerate domain hazards, publish your mitigations, and test them in public. If you ship to hospitals, publish your emergency stop procedures. If you ship to schools, publish your persuasion limits and monitoring approach.

To operationalize this, adopt three switches that travel with any serious deployment of open weights:

- Hardware switch: drivers that can block certain system calls or device control from model-initiated tools when risk thresholds are exceeded.

- Tool switch: a broker that requires human approval or a second model's vote for high impact actions such as wire transfers or server reconfigurations.

- Liability switch: an escrowed liability pool that pays for independent audits and covers harms up to a published cap, funded by a small tax on each inference above a threshold.
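The tool and liability switches are the most code-shaped of the three. Below is a Python sketch under explicit assumptions: the `HIGH_IMPACT` action names, the `ToolSwitch` class, and the rule that a human decision overrides the reviewer model are hypothetical design choices, and the fee function uses integer cents with made-up numbers purely for illustration. The hardware switch lives in drivers and is out of scope for a snippet.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical names for actions that always need a second opinion.
HIGH_IMPACT = {"wire_transfer", "server_reconfigure"}


@dataclass
class ToolSwitch:
    # Human reviewer: True/False is a decision, None means no one answered.
    human: Callable[[str, dict], Optional[bool]]
    # Second model acting as a reviewer; must return an explicit vote.
    reviewer_model: Callable[[str, dict], bool]

    def authorize(self, action: str, args: dict) -> bool:
        if action not in HIGH_IMPACT:
            return True                      # low-impact actions pass this switch
        decision = self.human(action, args)
        if decision is not None:
            return decision                  # a human decision overrides the model
        return self.reviewer_model(action, args)


def pool_contribution_cents(inferences: int, threshold: int, fee_cents: int) -> int:
    """Liability-pool fee: a flat charge on each inference above the threshold."""
    return max(0, inferences - threshold) * fee_cents


switch = ToolSwitch(
    human=lambda action, args: None,                          # nobody on call
    reviewer_model=lambda action, args: args.get("amount", 0) < 1_000,
)
print(switch.authorize("wire_transfer", {"amount": 5_000}))          # False
print(pool_contribution_cents(1_500, threshold=1_000, fee_cents=2))  # 1000
```

A human "no" always wins, and when no human answers, the action still needs an explicit yes from the reviewer model, so silence never counts as approval.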

These are not slogans. They are buildable features. They move the conversation from ideology to implementation.

How to build with open weights in 2025

A concrete checklist for teams embracing the new reality:

- Pick a license and stick to it. Apache 2.0 if you want the fewest surprises. If you ship a community license, publish a plain-English summary of what is allowed and where.

- Publish a model manifest. Include the model hash, license, training cutoff, supported modalities, context length, known hazards, and the list of attached safety adapters. Treat the manifest like a lockfile in modern package managers.

- Attach a safe inference runtime. Do not let the model call the world directly. Route tools and data through a broker that enforces budgets, allowlists, and approvals.

- Build a red team schedule. Run weekly automated jailbreak suites and monthly human red team sessions. Track regressions over time and publish summaries.

- Instrument everything. Ship signed logs of prompts, tool calls, and outcomes. Encrypt logs at rest. Provide customers with their own logs by default.

- Make it fast and friendly. Open weights without a great developer experience will lose to a slightly weaker model that is easy to deploy, cheap to run, and pleasant to build with.
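Signed logs and the "watermark actions, not words" idea from the safety stack can share one mechanism: sign each tool call and chain it to the previous entry. This Python sketch uses a shared-secret HMAC from the standard library as a stand-in; an asymmetric scheme such as Ed25519 would let auditors verify without holding the key. `SignedActionLog` and its entry fields are hypothetical. Chaining makes edits and mid-log deletions detectable, but truncating the tail is only caught by comparing against the copy already shipped off host.

```python
import hashlib
import hmac
import json
from typing import List


class SignedActionLog:
    """Hypothetical append-only action log. Each entry is HMAC-signed and
    carries the previous entry's signature, so edits break the chain."""

    def __init__(self, key: bytes, model_build: str) -> None:
        self._key = key                  # keep this off the model host
        self.model_build = model_build   # provenance: which build acted
        self.entries: List[dict] = []

    def append(self, actor: str, action: str, args: dict, ts: float) -> dict:
        prev = self.entries[-1]["sig"] if self.entries else "genesis"
        body = {
            "actor": actor,
            "action": action,
            "args": json.loads(json.dumps(args)),  # copy so later caller edits don't leak in
            "ts": ts,
            "model_build": self.model_build,
            "prev": prev,
        }
        entry = {**body, "sig": self._sign(body)}
        self.entries.append(entry)
        return entry

    def _sign(self, body: dict) -> str:
        # Canonical JSON (sorted keys, no spaces) so identical content
        # always produces identical bytes to sign.
        msg = json.dumps(body, sort_keys=True, separators=(",", ":")).encode()
        return hmac.new(self._key, msg, hashlib.sha256).hexdigest()

    def verify(self) -> bool:
        prev = "genesis"
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "sig"}
            if body.get("prev") != prev:
                return False             # an entry was removed or reordered
            if not hmac.compare_digest(entry["sig"], self._sign(body)):
                return False             # an entry's content was altered
            prev = entry["sig"]
        return True


log = SignedActionLog(key=b"demo-key", model_build="example-model@sha256:abc123")
log.append("agent-1", "fs.write", {"path": "report.txt"}, ts=1.0)
log.append("agent-1", "net.post", {"url": "https://example.com/hook"}, ts=2.0)
print(log.verify())  # True
```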

Teams that already live this way treat their runtime as a product, not a glue script. They pin model versions, keep adapter trees tidy, and use staged rollout strategies that include holdout canaries. They have playbooks for incident response that begin with action revocation and continue with targeted adapter changes rather than full retrains.
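Staged rollout with holdout canaries mostly comes down to deterministic routing between pinned builds. A minimal Python sketch, assuming hypothetical build identifiers and a salt that changes per rollout: hashing the user ID keeps each user on the same build across requests, users outside the canary bucket stay on the stable pin as the comparison group, and setting `canary_pct` to zero is an instant rollback.

```python
import hashlib


def route(user_id: str, stable: str, canary: str, canary_pct: float,
          salt: str = "rollout-1") -> str:
    """Send roughly canary_pct percent of users to the canary build.

    Hashing (salt, user_id) gives each user a fixed bucket in 0..9999, so
    the same user always lands on the same pinned build for this rollout.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    return canary if bucket < canary_pct * 100 else stable


STABLE = "example-model@1.4.2"   # hypothetical pinned builds
CANARY = "example-model@1.5.0"
print(route("user-42", STABLE, CANARY, canary_pct=5.0))
```

Changing the salt for each rollout reshuffles who lands in the canary, so the same users are not always the first to see a new build.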

The real governance question

Who governs when the weights are everywhere? The honest answer is that governance becomes multilayered and local by design. National rules will matter for distribution and liability. Platform policies will matter for app stores and cloud marketplaces. But the decisive layer will be the runtime you ship and the ecosystem you cultivate around it. That is where safety is real, where compliance is testable, and where innovation compounds.

If you need a single mental model, picture three concentric rings. The outer ring is public policy and sector standards. The middle ring is platform policy and distribution channels. The inner ring is the runtime in your hands: the broker, the adapters, the logs, the tests, the playbooks. Most of the leverage sits in the inner ring, and it is the part you control directly.

The moment to decide

You cannot put spilled seeds back in the packet. Open weights will keep sprouting in new places, cross pollinating ideas and capabilities. That is not a reason to panic. It is a reason to design for the world we have. If 2024 was the year of closed demos and careful previews, 2025 is the year of open engines and local brakes. The next advantage belongs to teams that treat safety as an engineering discipline at the edge, treat compliance as an ecosystem they can design, and treat distribution and data as the real moats. Once the weights are out, power reorganizes around those who can ship responsibly at scale. That is the work now, and it is work worth doing.