Licensed Memory Arrives: AI's Shift to Clean Data Supply

AI is moving from scrape-first habits to rights-cleared memory with warranties, recalls, and lineage. Here is how licensed data supply chains reshape models, procurement, and the products customers will trust.

The moment the web turned into a warehouse

This year felt different. Instead of arguing in circles about fair use, the market moved. Across 2025, a stack of rulings, settlements, procurement updates, and agency playbooks started drawing clearer lines between lawful training and unlawful acquisition. The tone changed from debate to documentation. Buyers began to ask where knowledge came from, what rights accompany it, and how to unwind it if something goes wrong. In short, model memory started to look like inventory.

For a decade, builders lived by a simple creed: scrape first, apologize later. That culture treated data as air. Oxygen is free until a firefighter needs a certified tank. In 2025, enterprises started asking for tanks. The request is not philosophical. Buyers want provenance, warranties, and a right of recall. Sellers want predictable revenue and indemnification. Regulators want audit trails that survive a courtroom. The result is a supply chain.

What actually changed

The most important shift is a distinction. Training on material that was lawfully acquired and used under a valid license is not the same as acquiring material unlawfully in the first place. Courts and regulators did not bless any and every use. They pushed industry to separate acquisition, permission, and purpose. That sounds bureaucratic. It is actually a blueprint for scale.

- Acquisition asks: how did the dataset enter your house?

- Permission asks: what are you allowed to do with it?

- Purpose asks: what exactly did you do with it?

When all three align, training or retrieval can proceed. When one fails, the pipeline is at risk. This simple triangle translates legal theory into engineering constraints and commercial contracts.
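To make the triangle concrete, here is a minimal Python sketch of a gate that checks all three before a source is used. `SourceRights` and `may_use` are hypothetical names for illustration. A real system would populate them from contracts and ingestion logs, not from hand-set flags.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SourceRights:
    """Hypothetical per-source record of acquisition and permission facts."""
    lawfully_acquired: bool        # acquisition: how did it enter your house?
    licensed_uses: frozenset[str]  # permission: e.g. {"training", "retrieval"}


def may_use(rights: SourceRights, purpose: str) -> tuple[bool, str]:
    """Check acquisition, then permission, against the intended purpose."""
    if not rights.lawfully_acquired:
        return False, "acquisition failed: not lawfully acquired"
    if purpose not in rights.licensed_uses:
        return False, f"permission failed: license does not cover {purpose!r}"
    return True, "acquisition, permission, and purpose align"


news = SourceRights(lawfully_acquired=True, licensed_uses=frozenset({"retrieval"}))
print(may_use(news, "retrieval")[0])  # → True
print(may_use(news, "training")[0])   # → False
```

The order of checks is a design choice. Failing acquisition short-circuits everything else, which keeps the two questions separate in the logs.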

Public bodies reinforced the message. Agencies and court systems began publishing AI use rules and, in some cases, approved tool lists. That one procurement habit forces vendors to prove where knowledge came from, to log usage, and to show that answers can be traced to inspectable sources. A procurement officer who once asked for latency now asks for lineage.

From weights to memory markets

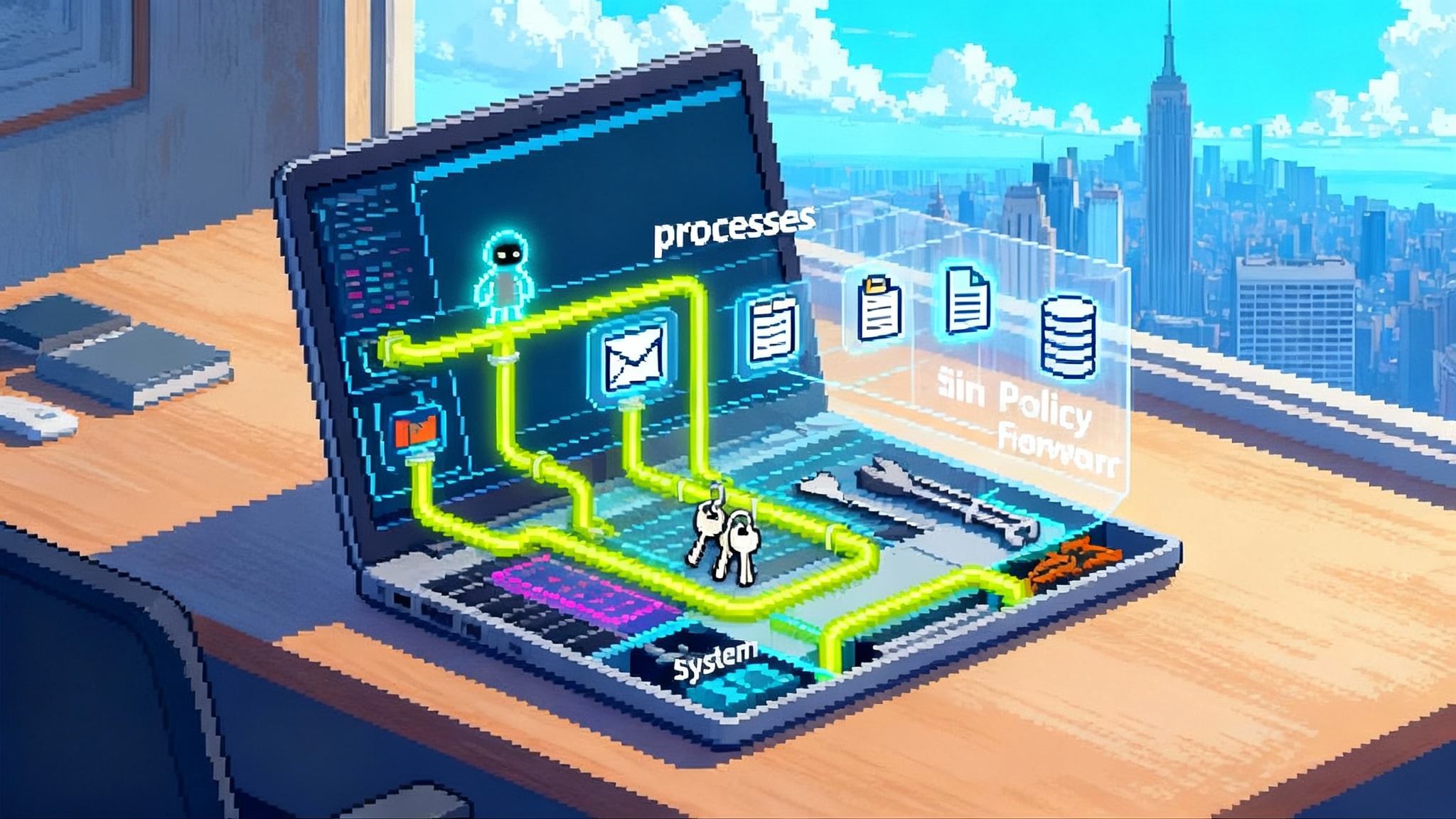

For years, the field obsessed over weights as if neural parameters were the whole product. Weights still matter. But the customer experience, safety profile, and legal position are now dominated by the memory that surrounds those weights. Retrieval-augmented generation (RAG) turned this from philosophy into practice. A system fetches snippets from a memory store and uses them to answer. That store can be a slush pile of whatever the crawler found, or a rights-cleared corpus with a ledger.

Here is how a memory market operates in practice:

- A model provider negotiates a catalog license that specifies ingestion scope, caching rules, and answer display limits.

- An ingestion pipeline writes embeddings to a vector database, partitioned into zones labeled by license and purpose.

- A policy engine enforces retrieval constraints so that regulated users can only access zones permitted for their role.

- Logs capture source identifiers, timestamps, and license versions used for each answer.

- If a rightsholder revokes a subset, the provider removes those items from active recall, reindexes, and publishes a change log.
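The retrieval and logging steps above can be sketched in a few dozen lines of Python. This sketch rests on loud assumptions. The zone names, roles, and `ZonedMemory` class are hypothetical. Keyword overlap stands in for real vector similarity so the example runs without an embedding model. What matters is the ordering: the policy filter runs before ranking, and every answer writes a log entry with source identifiers and license versions.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class MemoryItem:
    source_id: str
    text: str
    zone: str             # license/purpose label, e.g. "licensed-clinical"
    license_version: str


@dataclass
class ZonedMemory:
    items: list[MemoryItem] = field(default_factory=list)
    # Policy: which memory zones each role may read (hypothetical roles).
    role_zones: dict[str, set[str]] = field(default_factory=dict)
    log: list[dict] = field(default_factory=list)

    def retrieve(self, role: str, query: str) -> list[MemoryItem]:
        allowed = self.role_zones.get(role, set())
        # Enforce the policy before ranking so forbidden zones are never candidates.
        candidates = [it for it in self.items if it.zone in allowed]
        # Stand-in for vector similarity: naive keyword overlap.
        terms = set(query.lower().split())
        hits = [it for it in candidates if terms & set(it.text.lower().split())]
        # Per-answer audit trail: who asked, when, and which licensed sources answered.
        self.log.append({
            "role": role,
            "query": query,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "sources": [(it.source_id, it.license_version) for it in hits],
        })
        return hits


mem = ZonedMemory(
    items=[
        MemoryItem("guideline-17", "sepsis fluid guidance", "licensed-clinical", "v3"),
        MemoryItem("forum-9", "sepsis tips from a forum", "open-web", "n/a"),
    ],
    role_zones={"clinician": {"licensed-clinical"}},
)
hits = mem.retrieve("clinician", "sepsis guidance")
print([h.source_id for h in hits])  # → ['guideline-17']
print(mem.log[-1]["sources"])       # → [('guideline-17', 'v3')]
```

A role with no policy entry gets an empty set of zones. Unknown callers retrieve nothing instead of everything.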

The product team calls this a recall. The legal team calls it compliance. The finance team calls it cost of goods sold.

Once memory is a product, new players step forward. News agencies, scientific publishers, image libraries, audio labels, stock houses, and archives can package their catalogs as clean memory with clear rights. Data brokers evolve into memory syndicators. Cloud providers offer clean rooms that enforce purpose constraints during training and retrieval. The winning bundle is not just speed and capacity. It is a bill of materials for what your system knows.

Content Credentials and chain of custody

Every supply chain rises or falls on chain of custody. Who touched what, when, and under which controls. The creative industries spent years building the C2PA Content Credentials standard to encode authorship, tools, and transformations in a portable manifest. That work is moving to the center of the AI pipeline.

Picture a scanner at the loading dock of a factory. Every crate passes under a camera. The system reads a digital seal, checks a registry, and writes an entry to a ledger. The AI equivalent is an ingest service that verifies Content Credentials, records a cryptographic hash, extracts license terms, and maps them to policy labels. The model does not absorb an anonymous blob. It absorbs a row in a memory table that says: use allowed for training, snippet display up to N characters, license expires on date X, attribution required, indemnified up to amount Y.
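Here is a minimal Python sketch of that loading-dock step. It assumes Content Credentials verification happens in a separate component, such as a C2PA validator, that returns a pass/fail result. This code does not parse C2PA manifests itself. `LicenseTerms`, `MemoryRow`, `ingest`, the label names, and the supplier name are all hypothetical. The sketch hashes the content with SHA-256 and converts license terms into policy labels a retrieval layer can enforce.

```python
import hashlib
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class LicenseTerms:
    training_allowed: bool
    snippet_max_chars: int      # 0 means no snippet display
    expires: date
    attribution_required: bool
    indemnity_cap_usd: int


@dataclass(frozen=True)
class MemoryRow:
    sha256: str
    supplier: str
    labels: frozenset[str]
    terms: LicenseTerms


def ingest(content: bytes, supplier: str, terms: LicenseTerms,
           credentials_verified: bool, today: date) -> MemoryRow:
    # credentials_verified is assumed to come from an external C2PA validator.
    if not credentials_verified:
        raise ValueError("rejected: Content Credentials did not verify")
    if terms.expires < today:
        raise ValueError("rejected: license already expired")
    labels = set()
    if terms.training_allowed:
        labels.add("training")
    if terms.snippet_max_chars > 0:
        labels.add("snippet-display")
    if terms.attribution_required:
        labels.add("attribution-required")
    return MemoryRow(
        sha256=hashlib.sha256(content).hexdigest(),  # content fingerprint for the ledger
        supplier=supplier,
        labels=frozenset(labels),
        terms=terms,
    )


terms = LicenseTerms(True, 300, date(2026, 12, 31), True, 1_000_000)
row = ingest(b"article body", "Example Publisher", terms,
             credentials_verified=True, today=date(2025, 6, 1))
print(sorted(row.labels))  # → ['attribution-required', 'snippet-display', 'training']
```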

That unlocks the magic words buyers want to hear: warranty and recall. Sellers can warrant ownership and licensing. Buyers can demand a recall if a batch later proves to include infringing works or sensitive data. Because the parts list exists, the system can remove the batch, reindex, and publish a change history without tearing down the entire model.
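Because each item carries a batch identifier, a recall can be a targeted operation on the memory layer. Here is a minimal Python sketch. It assumes a hypothetical `MemoryStore` that keeps item text, a simple term index standing in for a vector index, and an append-only change log. The batch and item identifiers are invented for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class MemoryStore:
    # source_id -> (batch_id, text); a real store would also hold embeddings.
    items: dict[str, tuple[str, str]] = field(default_factory=dict)
    index: dict[str, set[str]] = field(default_factory=dict)  # term -> source_ids
    change_log: list[dict] = field(default_factory=list)

    def reindex(self) -> None:
        """Rebuild the retrieval index from the items that remain."""
        self.index = {}
        for sid, (_, text) in self.items.items():
            for term in set(text.lower().split()):
                self.index.setdefault(term, set()).add(sid)

    def recall_batch(self, batch_id: str, reason: str) -> list[str]:
        """Remove every item from one batch, reindex, and log the change."""
        removed = sorted(sid for sid, (b, _) in self.items.items() if b == batch_id)
        for sid in removed:
            del self.items[sid]
        self.reindex()  # memory changes; model weights are untouched
        self.change_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "action": "recall",
            "batch": batch_id,
            "reason": reason,
            "removed": removed,
        })
        return removed


store = MemoryStore(items={
    "img-1": ("batch-2025-03", "harbor at dawn"),
    "img-2": ("batch-2025-03", "harbor at night"),
    "img-3": ("batch-2025-04", "city skyline"),
})
store.reindex()
removed = store.recall_batch("batch-2025-03", "supplier revoked rights")
print(removed)                   # → ['img-1', 'img-2']
print("harbor" in store.index)   # → False
```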

The rise of the AI bill of materials

We already have model cards and data cards, but those describe more than they guarantee. An AI bill of materials converts description into a contract surface. It lists the datasets that shaped a model or populate its memory. It lists license terms, supplier names, warranty coverage, revocation pathways, expiration dates, and the security controls used during handling. It references audits. It presents change history in a diff format a regulator can read and a purchaser can compare across vendors.
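Here is a minimal Python sketch of the machine-readable side. It serializes a list of entries to JSON and diffs two versions, reporting added, removed, and changed line items. The field names are hypothetical and do not follow a published schema. A production bill of materials would more likely target an existing SBOM format such as SPDX or CycloneDX, both of which have added AI and dataset support in recent versions.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class BomEntry:
    dataset: str
    supplier: str
    license: str
    warranty: str
    expires: str      # ISO 8601 date
    revocation: str   # how the supplier signals a revocation


def to_json(entries: list[BomEntry]) -> str:
    """Serialize entries in a stable order so versions diff cleanly."""
    return json.dumps([asdict(e) for e in sorted(entries, key=lambda e: e.dataset)], indent=2)


def diff(old: list[BomEntry], new: list[BomEntry]) -> list[str]:
    """Report removed (-), added (+), and changed (~) line items by dataset name."""
    before = {e.dataset: asdict(e) for e in old}
    after = {e.dataset: asdict(e) for e in new}
    changes = []
    for name in sorted(before.keys() | after.keys()):
        if name not in after:
            changes.append(f"- {name}")
        elif name not in before:
            changes.append(f"+ {name}")
        elif before[name] != after[name]:
            fields = [k for k in after[name] if after[name][k] != before[name][k]]
            changes.append(f"~ {name}: {', '.join(fields)}")
    return changes


v1 = [BomEntry("guidelines", "Medical Society", "retrieval", "ownership",
               "2026-01-31", "revoke feed")]
v2 = [BomEntry("guidelines", "Medical Society", "retrieval", "ownership",
               "2027-01-31", "revoke feed"),
      BomEntry("journal-articles", "Publisher", "retrieval, 500-char snippets",
               "ownership", "2026-06-30", "email notice")]
print(diff(v1, v2))  # → ['~ guidelines: expires', '+ journal-articles']
```

Sorting by dataset name in both functions keeps the JSON and the diff stable across runs. That stability is what makes versions comparable across vendors.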

If this sounds familiar, it should. Software is going through a similar transition with software bill of materials guidance. A credible AI bill of materials borrows the same muscle memory while adding license boundaries, purpose constraints, and recall guarantees.

Here is how a bill of materials gets used. A hospital buys a clinical assistant. The buyer asks for the memory bill. The vendor provides a signed document with entries like: clinical guidelines licensed from a medical society with monthly updates, articles licensed from a publisher with a display cap, internal policies from the hospital under a business associate agreement, and a sandbox of deidentified notes with documented deidentification methods. Each line item carries a supplier warranty and an expiration date. If any item is revoked, the vendor commits to shut off retrieval, reindex within a defined window, and notify users whose answers may have been affected. The hospital can now compare vendors by memory quality, not just demo flair.

Related dynamics are reshaping how products get past the market gate. As argued in the piece on the new AI market gatekeepers, benchmarks are giving way to audits and attestations. The bill of materials is the artifact those auditors will request.

How procurement will change

Public sector buying often sets the tone for the private sector. With more agencies formalizing AI use rules, procurement teams are asking new questions:

- Show us the ingestion controls and evidence of content provenance checks.

- Show us the recall procedure and the service level you will meet.

- List your suppliers and their warranties. Do you indemnify, and at what cap?

- Provide per-answer audit trails that include source identifiers and license versions.

- Offer a quarantine mode for disputed sources and a process to rehydrate answers.

These are boring questions, which is why they are powerful. They turn AI into equipment. Equipment can be certified.

Expect to see service levels tied to data as well as compute. Not only uptime and latency, but also recall time, label accuracy, and contamination rate. Expect tiered pricing for clean memory versus open memory. Expect regulated buyers to standardize templates for bills of materials and to require third-party audits. When that happens, the cheapest system with the largest model will often lose to the cleanest system with the safest memory. The market will not reward clever prompts when what it needs is chain of custody.
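Data service levels only mean something if they are computed from logs. Here is a minimal Python sketch, assuming each logged answer records the memory zones it drew from. The contamination rate defined here is the share of answers citing any source outside the permitted zones. That is one plausible definition, not an industry standard.

```python
from datetime import datetime, timedelta


def contamination_rate(answers: list[dict], permitted_zones: set[str]) -> float:
    """Share of logged answers that cited at least one source outside permitted zones."""
    if not answers:
        return 0.0
    bad = sum(1 for a in answers if any(z not in permitted_zones for z in a["zones"]))
    return bad / len(answers)


def recall_within_sla(requested: datetime, completed: datetime, sla: timedelta) -> bool:
    """True if a recall finished inside the contractual window."""
    return completed - requested <= sla


log = [{"zones": ["licensed"]}, {"zones": ["licensed", "open-web"]},
       {"zones": []}, {"zones": ["licensed"]}]
print(contamination_rate(log, {"licensed"}))  # → 0.25
print(recall_within_sla(datetime(2025, 5, 1), datetime(2025, 5, 7),
                        timedelta(days=5)))    # → False
```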

What builders should do now

You cannot bolt this on in a week. Start with your highest risk or highest volume memory, then work outward. A practical checklist:

- Inventory what your system knows. Separate base weights, fine-tunes, and retrieval memory. For each memory source, record acquisition method, license scope, renewal date, and supplier warranty.

- Add an ingest gateway that verifies content provenance signals. Hash all inputs. Store hashes and license terms alongside embeddings.

- Implement policy labels and retrieval constraints. Make it impossible for a query to pull from a memory zone that the license does not allow.

- Log source identifiers and license versions for every retrieval answer. Keep logs for the life of the contract. Provide a user-facing "disclose sources" mode.

- Build a recall switch. Given a list of identifiers, remove those items from active retrieval, purge caches, reindex, and push a change log to customers.

- Generate a machine-readable bill of materials and a human-readable summary. Update both with every content refresh. Tie audit controls to these artifacts.

- Align contracts with engineering. Map warranty language and indemnity caps to specific controls and metrics. If your contract promises a five-day recall, instrument it.

- Price clean memory as a line item. Track cost per retrieved thousand tokens the same way you track inference cost per generated thousand tokens.
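The last item can be sketched as a per-customer ledger. Here is a minimal Python example. It assumes retrieval events already carry a token count and that a single flat price applies per thousand retrieved tokens. The customer names and the price are made up for illustration, and real pricing may be tiered by memory zone.

```python
from collections import defaultdict


def memory_cost_by_customer(retrievals: list[tuple[str, int]],
                            price_per_1k: float) -> dict[str, float]:
    """Sum retrieved tokens per customer and price them per thousand tokens."""
    tokens: dict[str, int] = defaultdict(int)
    for customer, n_tokens in retrievals:
        tokens[customer] += n_tokens
    return {c: round(t / 1000 * price_per_1k, 4) for c, t in tokens.items()}


events = [("acme", 1200), ("acme", 800), ("globex", 500)]
print(memory_cost_by_customer(events, price_per_1k=0.02))  # → {'acme': 0.04, 'globex': 0.01}
```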

If you are building enterprise skills, revisit how you package knowledge. Internal playbooks are increasingly the product. See how playbooks become enterprise memory and bring those principles to your retrieval design.

What rights holders can build

Rights holders are not stuck in a posture of complaint. They can turn catalogs into licensed memory products that sell.

- Package works into predictable bundles with a clear scope for training and retrieval.

- Publish update feeds and revoke feeds. Offer sandbox evaluation corpora with reference answers.

- Provide Content Credentials with signatures. Offer liability warranties priced into the license.

- Tag content with safety and suitability labels so buyers can enforce purpose restrictions automatically.

Think in stock-keeping units (SKUs) instead of lawsuits. A publisher can offer a current events memory with a latency promise and a permitted snippet display length. A scientific society can offer a peer-reviewed memory with a tag that blocks use in unsupported indications. A photo agency can offer a model-safe image memory with explicit releases for training and generation. When the pitch shifts from threats to service levels, buyers show up with budgets.

Risks and false comfort

There are hazards in this new era.

- Compliance theater. It is easy to publish a handsome bill of materials that tells a story but does not connect to controls. Auditors should spot check by reproducing answers and verifying that logged sources match permitted memory zones.

- Cost. Clean data costs money. Many teams will try to hedge by running a small clean corpus next to a large gray corpus. Over time, that gray memory becomes toxic. As recalls grow, the operational burden eats the savings. Pick a side and commit.

- Equity. If licensing dollars only flow to the largest catalogs, the long tail gets squeezed. Market designers should encourage collective licensing for smaller creators with transparent splits, simple terms, and fast payout.

- Quality. Licensed does not mean correct. A bill of materials proves you bought it fairly, not that it is true. Keep evaluation and calibration separate, and connect quality metrics to specific suppliers so you can pay more for accurate sources and less for noisy ones.
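Connecting quality to suppliers can start as simple bookkeeping over an evaluation set. Here is a minimal Python sketch. It assumes each evaluated answer has already been judged correct or incorrect and attributed to the supplier of its cited source. The supplier names are hypothetical.

```python
from collections import defaultdict


def supplier_accuracy(evals: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of evaluated answers judged correct, grouped by cited supplier."""
    totals: dict[str, list[int]] = defaultdict(lambda: [0, 0])  # [correct, total]
    for supplier, correct in evals:
        totals[supplier][0] += int(correct)
        totals[supplier][1] += 1
    return {s: c / n for s, (c, n) in totals.items()}


evals = [("Publisher A", True), ("Publisher A", True), ("Publisher A", False),
         ("Broker B", False), ("Broker B", True)]
acc = supplier_accuracy(evals)
print(acc["Broker B"])  # → 0.5
```

Keeping this separate from the licensing ledger matches the point above. The bill of materials says you bought a source fairly, and this table says whether it earns its price.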

Second order effects

As memory becomes an asset with provenance and price, model strategy will change. Expect more compact base models that excel when paired with high-quality, rights-cleared memory. Expect vertical models with deep, licensed memory that defeat general models in regulated settings. Expect a new role on product teams: the memory supply lead, a person who thinks in catalogs and contracts as much as in loss functions.

Insurance and finance will follow. Insurers will underwrite liability based on the quality of the bill of materials and the presence of recall controls. Lenders will value datasets as collateral when licenses include transfer provisions. Private equity will roll up fragmented catalogs into memory syndicates with guaranteed update schedules. Rating agencies will score memory portfolios for recall risk and contamination rate.

On the technical side, the retrieval layer will mature. Token accounting will appear for memory just as it did for inference. You will measure cost per retrieved thousand tokens and allocate that cost per customer or per feature. You will forecast license renewals the way you forecast cloud bills. You will verify that your safety filters and negative prompts apply to the memory zones they are supposed to govern. In parallel, protocol-level coordination will matter more as teams wire together tools and data sources. The argument that MCP (the Model Context Protocol) turns AI into an OS layer will feel concrete when policy and provenance flow through every call.
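Forecasting renewals the way you forecast cloud bills can start with a date filter over the license ledger. Here is a minimal Python sketch. It assumes a hypothetical mapping from license name to expiration date and renewal cost. The names and figures are illustrative only.

```python
from datetime import date, timedelta


def renewals_due(licenses: dict[str, tuple[date, float]], today: date,
                 horizon_days: int) -> list[tuple[str, date, float]]:
    """List licenses expiring within the horizon, soonest first, with renewal cost."""
    cutoff = today + timedelta(days=horizon_days)
    due = [(name, exp, cost) for name, (exp, cost) in licenses.items()
           if today <= exp <= cutoff]
    return sorted(due, key=lambda row: row[1])


licenses = {
    "news-feed": (date(2025, 9, 15), 40_000.0),
    "journals": (date(2026, 3, 1), 120_000.0),
    "stock-images": (date(2025, 8, 15), 15_000.0),
}
due = renewals_due(licenses, today=date(2025, 7, 1), horizon_days=90)
print([name for name, _, _ in due])    # → ['stock-images', 'news-feed']
print(sum(cost for _, _, cost in due))  # → 55000.0
```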

What this means for startups and incumbents

Startups finally have a wedge against giant models. Assemble a specialized, licensed memory that a general provider cannot easily copy. Sell trust as a bundle of contracts and controls, not as a slide with a lock icon. Start your go-to-market with a bill of materials tailored to a vertical buyer.

Incumbents have a different edge. You already own proprietary data, distribution, and compliance teams. Package internal knowledge as memory with clear terms and ship it to your own products first. Then consider external licensing with strict zones. Do not throw it into a global model without a ledger. Once it leaks, it does not come back.

The scoreboard two years from now

By 2027, expect league tables for memory portfolios. Vendors will compete on provenance coverage, recall speed, indemnity caps, and contamination rates. Courts and agencies will maintain living registries of approved tools and memories with time-stamped approvals. Creative unions will operate collective licensing portals with transparent analytics. Universities will license classroom memories that refresh each term with faculty review. Big cloud providers will sell clean-room templates with prewired policy checks for common content licenses.

Consumers will not read bills of materials. They will feel the difference. Systems with licensed memory will hallucinate less and attribute more. They will answer with confidence that can be traced. When something goes wrong, they will issue a recall notice rather than a blog post. The vibe will feel less like a demo and more like a product.

Conclusion: better knowledge with a paper trail

The old internet rewarded speed and scale without receipts. The new market rewards traceable knowledge. The 2025 inflection points nudged the field over the line. Teams are separating training from acquisition, upgrading ingestion with Content Credentials, and exposing a bill of materials that turns memory into inventory. Once models must prove where their knowledge came from, the winners will do more than ship the best weights. They will own the cleanest, most licensable memory, and they will move knowledge work from a crawl of unknowns to a supply chain you can trust.