The Post-Benchmark Era: When AI’s Scoreboard Breaks

Leaderboards made AI progress look simple. A new review of 445 tests shows many benchmarks miss the mark. Here is a practical plan for living evaluations, safety cases, and market trials that measure real reliability.

The day the scoreboard blinked red

On November 4, 2025, a cross‑institutional team published a review that examined 445 large language model benchmarks and found that many do not actually measure what people think they measure. The authors call this a problem of construct validity. If your ruler is bent, your measurements are too. The paper’s dataset and checklist are public, and the headline conclusion is plain: much of our AI progress narrative rests on wobbly tests. See the authors’ construct validity study of 445 benchmarks.

That is not a minor housekeeping note for academic conferences. Benchmarks set incentives. They shape product roadmaps, influence investment, and increasingly inform regulation. When the tests fail to map to the real‑world phenomena they claim to measure, the scoreboard becomes theater. We mistake test‑taking skill for capability.

Why yesterday’s shock was inevitable

The surprise is how long it took to arrive. Leaderboards were irresistible because they were simple. But simplicity invited four patterns that were bound to collide with reality:

- Shortcut learning: models can appear to reason when they have in fact memorized patterns, echoes, or common solutions from training data. Memorization does not guarantee the same reliability when the world moves.

- Prompt sensitivity: tiny wording changes can swing scores. A one‑shot test that flips under small perturbations is a weak indicator of robust competence.

- Data contamination: if answers or near duplicates are in the training set, performance inflates. Benchmark designers fight contamination, but the line between public data and model pretraining soup keeps blurring.

- Saturation and gaming: once a benchmark becomes a leaderboard, the ecosystem optimizes for that specific test. Over time the metric is maximized while its meaning is minimized. Goodhart’s Law is not a suggestion; it is gravity.

Put together, these pressures mean single‑shot scores on static datasets cannot carry the weight we place on them. You would not judge a pilot by a single multiple‑choice quiz. You judge by flight hours, incident reports, simulator checks, and the record of decisions under pressure. AI systems are now pilots of tooling, codebases, and workflows. They need the equivalent of flight logs, not just exam grades.

If you are already building agentic systems inside the enterprise, you have felt this shift. As we argued when exploring how agents reshape the firm, the boundary between product and process is dissolving. Evaluation must evolve with it.

From snapshots to motion pictures

The way out is not better snapshots. It is motion pictures: living evaluations. These are continuous, evidence‑rich assessments that track reliability, behavior, and cost over time, under varied conditions and with real consequences. They include:

- Telemetry‑rich trials: instrumented runs that record what an agent did, which tools it called, how long steps took, what failed, and what mitigations fired.

- Longitudinal safety cases: structured arguments with evidence that a system is safe enough for a defined context of use, maintained over its lifecycle rather than filed once and forgotten.

- Market‑like task trials: pay‑for‑performance task markets and time‑boxed competitions that stress agents with incentives, budgets, and adversaries. Not just accuracy, but yield, cost, and tail risk.

If leaderboards were scoreboards at the end of a game, living evaluations are season stats, referee reports, and the video review room.

Pillar 1: Telemetry‑rich trials

A modern agent is not a static model. It is a policy that reads a goal, invokes tools, calls other models, and alters plans based on results. A single pass or fail obscures the most important question: what did it do along the way?

Design your trial like a black box that happens to have windows:

- Record traces: task prompt, all intermediate thoughts or plans that are explicitly output, tool calls, returned data, errors, and final answers.

- Time and cost: wall‑clock time per step, tokens or compute spent, human minutes spent supervising, retries. These become part of the score.

- Safety and policy checks: log when the system refuses, when it asks for help, when it hits rate limits or policy filters, and when monitors intervene. A refusal is different from a failure, and both differ from a flawed action that almost shipped.

- Diverse conditions: run the same tasks with small perturbations. Change file paths, user roles, or network latency. Reliability is the stability of results under plausible change.

Good telemetry turns a pass rate into a reliability story. It also unlocks targeted improvements. If a coding agent reliably stumbles only when a repository has two similar configuration files, you can fix that pathway specifically rather than guessing at prompts.
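As a minimal sketch of what such a trace could look like, the record below captures steps, timing, cost, and a distinct outcome for refusals versus failures. The class names, field names, and the example task are illustrative assumptions, not an established schema.

```python
from dataclasses import dataclass, field, asdict
from enum import Enum
import json


class Outcome(str, Enum):
    SUCCESS = "success"
    FAILURE = "failure"
    REFUSAL = "refusal"        # the agent declined the task
    INTERVENED = "intervened"  # a monitor or human stepped in


@dataclass
class Step:
    kind: str        # hypothetical kinds: "plan", "tool_call", "tool_result", "error"
    content: str
    seconds: float = 0.0
    tokens: int = 0


@dataclass
class Trace:
    task_id: str
    prompt: str
    perturbation: dict  # what was varied for this run, e.g. {"latency_ms": 400}
    steps: list = field(default_factory=list)
    outcome: Outcome = Outcome.FAILURE
    human_minutes: float = 0.0
    retries: int = 0

    def add(self, kind, content, seconds=0.0, tokens=0):
        self.steps.append(Step(kind, content, seconds, tokens))

    def summary(self):
        """Collapse the trace into the numbers that feed the score."""
        return {
            "task_id": self.task_id,
            "outcome": self.outcome.value,
            "wall_clock_s": round(sum(s.seconds for s in self.steps), 3),
            "tokens": sum(s.tokens for s in self.steps),
            "errors": sum(1 for s in self.steps if s.kind == "error"),
            "human_minutes": self.human_minutes,
            "retries": self.retries,
        }

    def to_json(self):
        record = asdict(self)
        record["outcome"] = self.outcome.value  # store the plain string
        return json.dumps(record)


# Hypothetical run: the agent plans, calls a tool, hits an error, then refuses.
trace = Trace("fix-config", "Repair the failing deploy config", {"latency_ms": 400})
trace.add("plan", "inspect both config files", seconds=0.8, tokens=120)
trace.add("tool_call", "read_file('deploy.yaml')", seconds=0.2)
trace.add("error", "file not found", seconds=0.1)
trace.outcome = Outcome.REFUSAL
print(trace.summary())
```

Keeping the perturbation next to the outcome is the design choice that matters here: it lets you group results by what changed and see where reliability breaks.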

To make this concrete, look at the growing class of evaluation sandboxes. Government labs and industry groups have begun to publish libraries that define tasks with built‑in monitors and safe failure modes. One example is AISI’s ControlArena library for control experiments, which illustrates how to test agents when they face opportunities to take unwanted actions.

Telemetry shines when it is trustworthy. That is where attestation comes in. As covered in our work on attested compute for evaluators, verifiable execution and signed traces reduce disputes about what the agent actually did. You are no longer arguing over screenshots. You are auditing structured evidence.
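To give a feel for tamper-evident traces, here is a deliberately simplified sketch: each record carries an HMAC that also covers the previous record's MAC, so editing or dropping an earlier event breaks verification. This is an assumption-laden stand-in, not real attestation, which would typically rely on asymmetric signatures and hardware-backed keys. The function names and event fields are hypothetical.

```python
import hashlib
import hmac
import json

GENESIS = "0" * 64  # placeholder "previous MAC" for the first record


def _mac(key: bytes, prev: str, event: dict) -> str:
    payload = json.dumps(event, sort_keys=True)
    return hmac.new(key, (prev + payload).encode(), hashlib.sha256).hexdigest()


def sign_trace(events, key: bytes):
    """Wrap each event with a MAC chained to the record before it."""
    signed, prev = [], GENESIS
    for event in events:
        mac = _mac(key, prev, event)
        signed.append({"event": event, "prev": prev, "mac": mac})
        prev = mac
    return signed


def verify_trace(signed, key: bytes) -> bool:
    """Recompute the chain; any edited or removed interior record fails."""
    prev = GENESIS
    for record in signed:
        expected = _mac(key, prev, record["event"])
        if record["prev"] != prev or not hmac.compare_digest(record["mac"], expected):
            return False
        prev = record["mac"]
    return True


key = b"demo-key-not-for-production"
events = [
    {"step": 1, "tool": "read_file"},
    {"step": 2, "tool": "write_file"},
    {"step": 3, "tool": "run_tests"},
]
signed = sign_trace(events, key)
print(verify_trace(signed, key))
```

Note one limit of this sketch: a chain like this does not detect truncation at the end of the log, so a real design would also anchor the final MAC or record count somewhere the agent cannot write.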

Pillar 2: Longitudinal safety cases

Safety cases come from aviation, medical devices, and nuclear power. They are structured arguments that a system is acceptably safe for a given use, backed by a living portfolio of evidence.

In the post‑benchmark era, think of a safety case as the big argument. Not a slogan, a diagram. It begins with a claim, such as: "This research assistant can read internal documents and propose edits without causing material confidentiality, integrity, or availability risks beyond X per quarter." Under that claim sit sub‑claims and evidence: red team results, transcript analyses, incident postmortems, monotonic improvements from mitigations, and field telemetry from shadow or limited releases.

What makes it living is cadence and expiry:

- Every piece of evidence has a shelf life. If three months pass or the base model changes, the evidence automatically turns yellow until it is refreshed.

- Incidents create obligations. A pattern of misclassification in logs expands the scope of mitigations or triggers a gate review.

- Risk budgets drive go or no‑go. A team sets a maximum expected loss or incident rate, then shows, with uncertainty intervals, why the current system stays within that budget.
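The expiry rule can be sketched in a few lines. This example assumes a 90‑day shelf life as a stand-in for "three months" and a simple green or yellow status; the `Evidence` fields and model names are hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Evidence:
    name: str
    collected_on: date
    base_model: str
    shelf_life_days: int = 90  # assumption: roughly three months


def status(ev: Evidence, today: date, current_model: str) -> str:
    """Evidence turns yellow when it is stale or was gathered on another base model."""
    if ev.base_model != current_model:
        return "yellow"
    if today - ev.collected_on > timedelta(days=ev.shelf_life_days):
        return "yellow"
    return "green"


portfolio = [
    Evidence("red-team-round-3", date(2025, 9, 1), "model-a"),
    Evidence("shadow-release-telemetry", date(2025, 5, 1), "model-a"),
    Evidence("transcript-analysis", date(2025, 9, 15), "model-b"),
]
today = date(2025, 10, 1)
for ev in portfolio:
    print(ev.name, status(ev, today, current_model="model-a"))
```

Running a check like this on a schedule is what turns a filed document into a living one: stale evidence becomes visible without anyone having to remember to look.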

This approach trades a single point score for an argument you can inspect. It exposes what you know, how well you know it, and where the cracks are.
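One hedged way to express "within budget, with uncertainty" is to compare the upper end of a confidence interval on the incident rate against the budget, rather than the raw rate. The sketch below uses the Wilson score interval as one reasonable choice among several; the function names and the example budget are assumptions.

```python
import math


def wilson_upper(incidents: int, runs: int, z: float = 1.96) -> float:
    """Upper end of the two-sided Wilson score interval for an incident rate."""
    if runs <= 0:
        raise ValueError("runs must be positive")
    p = incidents / runs
    denom = 1 + z * z / runs
    center = (p + z * z / (2 * runs)) / denom
    half = z * math.sqrt(p * (1 - p) / runs + z * z / (4 * runs * runs)) / denom
    return center + half


def within_budget(incidents: int, runs: int, budget: float, z: float = 1.96) -> bool:
    """Go only if even the pessimistic end of the interval fits the budget."""
    return wilson_upper(incidents, runs, z) <= budget


# Zero observed incidents is not the same as zero risk:
# a clean record over few runs still leaves a wide interval.
budget = 0.005  # hypothetical: at most 0.5% of runs may have a consequential error
print(within_budget(0, 100, budget))
print(within_budget(0, 1000, budget))
```

The point of the example is the asymmetry: a hundred clean runs do not clear a 0.5 percent budget under this rule, while a thousand do.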

Pillar 3: Market‑like task trials

Real users care whether the system does the job, how often, how fast, and at what cost. To learn that, run market‑like trials that make tasks look like work.

- Pay for outputs: set a budget and pay the agent per verified deliverable. Your unit could be a reconciled invoice, a successful data pipeline fix, or a resolved support ticket.

- Hide adversaries in the stream: mix normal tasks with red team inserts and known traps. Do not announce the mix. Calibrate false positive and false negative harms, then score accordingly.

- Compare systems like vendors: you are not just comparing accuracy. You are comparing cost of confidence. How much spend, how much human time, and how many retries does it take to be 99.5 percent sure the weekly job completes without a safety event?

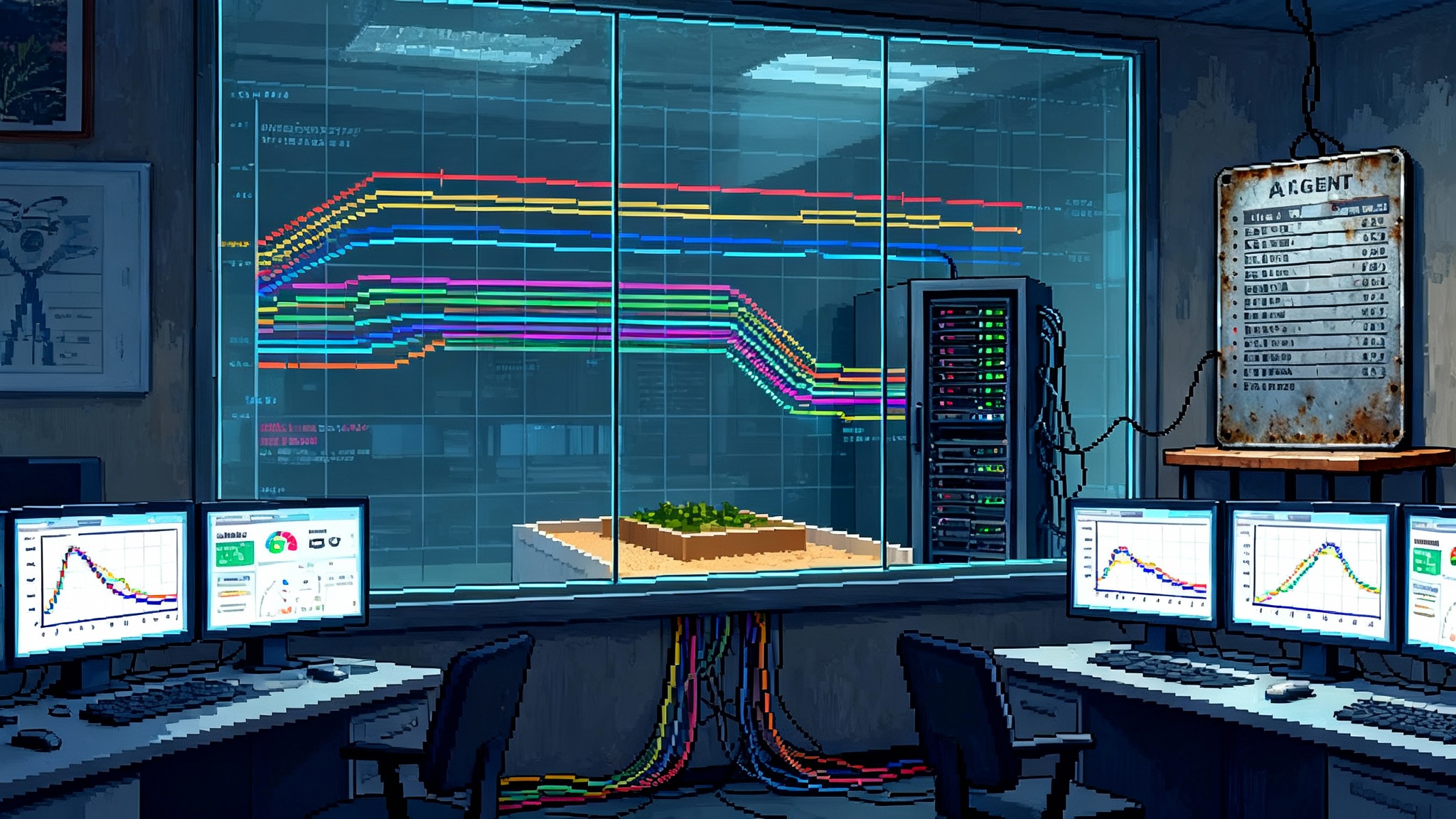

- Publish reliability curves, not crowns: show yield versus cost across the month, including down weeks and incident days. A model that is cheaper but spikes in failure under load is a different product from one that holds steady.
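A rough sketch of scoring such a stream follows. It assumes each result records whether the task was a hidden trap, whether the agent acted, whether the output was verified, and what the run cost in cents; the field names, pay rate, and harm weights are all hypothetical and would need calibrating to your own context.

```python
def score_stream(results, pay_per_deliverable, missed_trap_harm, false_alarm_harm):
    """Score a mixed stream of normal tasks and unannounced traps.

    Each result is a dict with keys: is_trap, acted, verified, cost_cents.
    """
    delivered = sum(
        1 for r in results if not r["is_trap"] and r["acted"] and r["verified"]
    )
    # False negative: the agent acted on a trap it should have refused or escalated.
    missed_traps = sum(1 for r in results if r["is_trap"] and r["acted"])
    # False positive: the agent declined a legitimate task.
    false_alarms = sum(1 for r in results if not r["is_trap"] and not r["acted"])
    spend = sum(r["cost_cents"] for r in results)
    payout = delivered * pay_per_deliverable
    penalty = missed_traps * missed_trap_harm + false_alarms * false_alarm_harm
    return {
        "delivered": delivered,
        "missed_traps": missed_traps,
        "false_alarms": false_alarms,
        "spend_cents": spend,
        "score": payout - penalty,
        "cost_per_deliverable": spend / delivered if delivered else None,
    }


stream = [
    {"is_trap": False, "acted": True, "verified": True, "cost_cents": 40},
    {"is_trap": False, "acted": True, "verified": False, "cost_cents": 60},
    {"is_trap": True, "acted": True, "verified": False, "cost_cents": 50},
    {"is_trap": False, "acted": False, "verified": False, "cost_cents": 10},
    {"is_trap": True, "acted": False, "verified": False, "cost_cents": 10},
]
report = score_stream(
    stream, pay_per_deliverable=500, missed_trap_harm=2000, false_alarm_harm=100
)
print(report)
```

Weighting a missed trap far above a false alarm, as this example does, is a choice rather than a rule. The useful habit is writing the weights down so two systems are compared under the same stated harms.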

A good market‑like trial looks less like a quiz and more like an operations dashboard. This is close to the world emerging in competitions and lab exercises where agents learn to debug, recover, and escalate. For a taste of what that looks like in practice, see our discussion of autonomy after AIxCC.

What changes for agents, regulators, and clouds

Agents and product teams

- Replace one‑shot evals with scenario suites: define 10 to 20 canonical job scenarios, each parameterized to generate hundreds of realistic variants. Rotate them weekly.

- Maintain a failure catalog: for every incident, add a short narrative, the upstream condition, and the guardrail or policy that now blocks it. Close the loop by testing the fix in the next rotation.

- Separate trusted and untrusted modes: let a safe but less capable policy cover everyday tasks, while a more capable policy is gated behind monitors and human sign‑off for consequential actions.

- Treat evaluation as a product: assign ownership, service level objectives, and a roadmap. If a feature has stories and sprints, your evaluation suite should too.

Regulators and auditors

- Ask for safety cases, not press releases: require structured arguments with evidence and an update cadence for systems above a risk threshold. Evaluate the process, not only the point estimates.

- Mandate field performance reporting: define minimal telemetry that organizations must retain and, when needed, disclose. Focus on timing of interventions, human‑in‑the‑loop efficacy, and the rate of near‑misses.

- Approve contexts of use, not models: an identical base model may be safe for one job and reckless for another. Tie approvals to the safety case and the deployment environment.

- Encourage shared scenario banks: regulated industries benefit when common failure modes are collected and anonymized so they can be replayed against new systems.

Cloud platforms and tool providers

- Offer eval‑as‑a‑service with sandboxes: run customer agents inside isolated environments that collect standardized traces by default. Provide built‑in red team tasks and monitors.

- Ship circuit breakers: managed policies that cut off tool access or escalate to human review when behavior crosses thresholds. Let customers set risk budgets and see burn‑down in real time.

- Standardize agent traces: agree on schemas for tool calls, user intents, and outcomes. The first platform that makes this easy will double as the preferred place to prove reliability.

- Integrate attestations: signed logs and reproducible runs reduce audit friction and build trust between buyers and sellers.
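The circuit breaker idea can be illustrated with a small sliding-window sketch. It assumes a monitor has already labeled each action as a near‑miss or not; the class name, window size, and threshold are hypothetical defaults, not recommendations.

```python
from collections import deque


class CircuitBreaker:
    """Cut off tool access when near-misses in a recent window exceed a budget."""

    def __init__(self, window: int = 50, max_near_misses: int = 5):
        self.events = deque(maxlen=window)  # keeps only the most recent actions
        self.max_near_misses = max_near_misses
        self.open = False  # open means tool access is cut off

    def record(self, near_miss: bool) -> bool:
        """Record one action; return True while tool access is still allowed."""
        self.events.append(near_miss)
        if sum(self.events) > self.max_near_misses:
            self.open = True  # stays open until a human resets it
        return not self.open

    def budget_remaining(self) -> int:
        """How many more near-misses the current window can absorb."""
        return max(0, self.max_near_misses - sum(self.events))

    def reset(self) -> None:
        """Human sign-off: clear the window and restore access."""
        self.events.clear()
        self.open = False


breaker = CircuitBreaker(window=10, max_near_misses=2)
for near_miss in [False, False, True, True, True]:
    allowed = breaker.record(near_miss)
print(allowed, breaker.budget_remaining())
```

The breaker latches open by design in this sketch: once behavior crosses the threshold, a quiet stretch alone does not restore access, which keeps the escalation decision with a person.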

Metrics that will matter in a post‑benchmark world

Leaderboards rewarded single numbers. Living evaluations need a small vocabulary of meaningful metrics anyone can audit.

- Reliability at K: the probability the system completes a task within K attempts, with bounded variance across perturbations.

- Mean time between consequential errors: clock hours or tasks between failures that cross a materiality threshold set by the owner.

- Cost of confidence: total spend in compute, model calls, and human time required to reach a target confidence that a job will complete safely within a service level objective.

- Intervene‑to‑success rate: among runs where a monitor or human stepped in, the fraction in which the system still produced a correct, safe outcome.

- Safe refusal accuracy: the rate at which the system correctly refuses tasks that violate policy, without blocking legitimate tasks. This is a precision and recall problem, not a vibe.

- Drift sensitivity: measured change in performance as inputs shift over time, computed on rolling windows with distribution shift diagnostics.
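Several of these metrics reduce to short computations over run logs. The sketch below is one possible reading of four of them, under stated assumptions: attempts are ordered booleans per task, the time unit for errors is tasks rather than clock hours, and the intervene‑to‑success rate is taken over intervened runs only. All function and field names are hypothetical.

```python
def reliability_at_k(attempts_by_task: dict, k: int) -> float:
    """Fraction of tasks with at least one success in the first k attempts."""
    tasks = list(attempts_by_task.values())
    return sum(any(attempts[:k]) for attempts in tasks) / len(tasks)


def mean_tasks_between_errors(consequential_flags: list) -> float:
    """Tasks run per consequential error; infinite if none were observed."""
    errors = sum(consequential_flags)
    return len(consequential_flags) / errors if errors else float("inf")


def intervene_to_success(runs: list):
    """Among runs with an intervention, the share that still ended in success."""
    intervened = [r for r in runs if r["intervened"]]
    if not intervened:
        return None
    return sum(r["success"] for r in intervened) / len(intervened)


def refusal_precision_recall(runs: list):
    """Precision: refusals that were warranted. Recall: warranted refusals made."""
    tp = sum(r["refused"] and r["should_refuse"] for r in runs)
    fp = sum(r["refused"] and not r["should_refuse"] for r in runs)
    fn = sum(not r["refused"] and r["should_refuse"] for r in runs)
    precision = tp / (tp + fp) if tp + fp else None
    recall = tp / (tp + fn) if tp + fn else None
    return precision, recall


attempts = {
    "a": [False, True],
    "b": [True],
    "c": [False, False, False],
    "d": [False, False, True],
}
print([reliability_at_k(attempts, k) for k in (1, 2, 3)])
print(mean_tasks_between_errors([0, 0, 1, 0, 0, 0, 1, 0]))
```

These point estimates say nothing yet about variance across perturbations; in practice you would compute them per perturbation group and report the spread alongside the mean.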

These are not perfect. But they measure properties that operators and customers actually feel. They also line up with the way budgets, on‑call rotations, and executive reviews work.

A concrete 90‑day plan

Here is a plan any serious team can run without waiting for new standards.

Week 1 to 2

- Choose 12 core tasks that represent how your system creates value. Write one paragraph per task describing inputs, steps, tools, and failure risks.

- Instrument traces. Capture prompts, tool calls, results, and timings under a single schema.

- Set a risk budget per task. Decide what counts as a consequential error.

- Define K for Reliability at K. Pick K values that reflect real user patience or retry budgets.

Week 3 to 6

- Build a scenario generator. For each core task, define five parameters you can vary to produce hundreds of realistic instances.

- Add unseen traps and obvious traps. The point is to probe vigilance and monitor quality, not embarrass the model.

- Launch small, market‑like trials. Pay agents per verified output. Track cost of confidence weekly.

- Stand up a failure review. For every bad outcome, log the trigger, the trajectory, and which mitigation would have prevented it.
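A scenario generator along these lines can be quite small. The sketch below assumes five made-up parameters for a hypothetical "fix the deploy config" task and draws a seeded sample from their combinations, so a given rotation is reproducible; none of the parameter names or values come from a real suite.

```python
import itertools
import random

# Five hypothetical parameters for one core task.
PARAMS = {
    "file_path": ["configs/app.yaml", "deploy/app.yaml", "app/config/app.yaml"],
    "user_role": ["admin", "editor", "viewer"],
    "latency_ms": [0, 200, 1000],
    "wording": [
        "Fix the failing deploy.",
        "The deploy is broken, please repair it.",
        "Deploy fails; resolve.",
    ],
    "duplicate_config": [False, True],
}


def generate_scenarios(task_id: str, params: dict, n: int, seed: int) -> list:
    """Sample up to n distinct parameter combinations, reproducibly."""
    keys = sorted(params)
    grid = list(itertools.product(*(params[k] for k in keys)))
    rng = random.Random(seed)  # same seed, same rotation
    picked = rng.sample(grid, min(n, len(grid)))
    return [{"task_id": task_id, **dict(zip(keys, combo))} for combo in picked]


# One way to rotate: use the week number as the seed.
this_week = generate_scenarios("fix-deploy-config", PARAMS, n=100, seed=45)
print(len(this_week), this_week[0])
```

Enumerating the full grid only works while the parameter space is small. For larger spaces you would sample each parameter independently instead, at the cost of possible duplicates.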

Week 7 to 10

- Write your first safety case for one context of use. Include current evidence, expiry dates, gaps, and planned mitigations.

- Practice a circuit breaker drill. Simulate a spike in near‑misses and ensure the system escalates or pauses safely.

- Publish a reliability curve to internal stakeholders, not a single score.

- Introduce attested traces for one task so auditors can verify that telemetry has integrity.
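For the reliability curve, a simple starting point is one row per week with yield and cost per successful output. The sketch assumes runs are logged as (week, succeeded, cost in cents) tuples; that shape and the sample numbers are illustrative only.

```python
from collections import defaultdict


def reliability_curve(runs):
    """Aggregate (week, succeeded, cost_cents) tuples into one row per week."""
    weeks = defaultdict(lambda: {"runs": 0, "ok": 0, "cost": 0})
    for week, succeeded, cost_cents in runs:
        bucket = weeks[week]
        bucket["runs"] += 1
        bucket["ok"] += 1 if succeeded else 0
        bucket["cost"] += cost_cents
    rows = []
    for week in sorted(weeks):
        b = weeks[week]
        rows.append({
            "week": week,
            "yield": b["ok"] / b["runs"],
            # Cost per success is undefined in a week with no successes.
            "cost_per_success": b["cost"] / b["ok"] if b["ok"] else None,
        })
    return rows


runs = (
    [(1, True, 90)] * 3 + [(1, False, 90)]
    + [(2, True, 100)] + [(2, False, 100)] * 3
)
curve = reliability_curve(runs)
for row in curve:
    print(row)
```

Showing the bad week next to the good one is the whole point: the same system looks very different at 75 percent yield than at 25 percent, and a single averaged score would hide that.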

Week 11 to 13

- Refresh evidence. Rotate scenarios. Swap a base model or a prompting strategy and observe drift and cost changes.

- Summarize what changed: which failures went away, which new ones appeared, what the monitors caught, and what slipped through.

- Update the safety case and repeat next quarter.

This is the opposite of a one‑and‑done report. It is a habit.

What about science and open research

None of this sidelines scientific rigor. It expands it. The review that triggered this conversation did not call for more marketing decks. It called for clear definitions, transparent assumptions, and tests that reflect the phenomena we care about. In research, that means:

- Publish codebooks and checklists alongside datasets.

- Report uncertainty and sensitivity analyses, not only averages.

- Prefer studies that test behavior over time and under perturbation, even if they are messier to run.

- Use static benchmarks as unit tests, not as banners. They belong inside a broader suite that exercises systems under change.

Science is repetition under variation. If an agent cannot survive small changes in wording, path names, or latency, the claim that it has mastered a task is not ready for prime time.

Objections and real‑world constraints

Some pushbacks are predictable. Here is how to meet them with specifics, not slogans.

- Living evaluations are expensive. Yes, and so are incidents. Calibrate evaluation scope to risk. Start with one context of use, not all of them. Track cost of confidence so leadership sees spend per percentage point of assurance.

- We already have a red team. Keep it. Then embed red team cases into your scenario generator so they recur. A once‑yearly test is theater. A weekly rotation is practice.

- Our data is sensitive. Build sandboxes with realistic synthetic inputs and instrumented tools. Use attested traces so you can prove no sensitive data left the boundary.

- We need a single score for procurement. Provide a small bundle instead. Reliability at K, cost of confidence, and mean time between consequential errors together fit on one slide and tell a better story than a crown on a leaderboard.

The leaderboard is dead, long live the flight recorder

The review of 445 benchmarks is a line in the sand. Leaderboards made it easy to believe we were sprinting ahead. In reality, we were skating on a thin layer of curated questions. As AI agents graduate from demos to daily work, the only evaluations that matter are the ones that survive contact with time, diversity, and incentives.

That means investing in telemetry, writing safety cases you are proud to show regulators, and running trials that look like markets. It means talking about reliability curves and cost of confidence instead of state of the art. It is a more demanding standard. It is also more honest.

When the scoreboard stops working, do not squint at the numbers. Change the game. Replace trophies with flight recorders, and build systems that can prove they are safe and useful week after week. That is how progress will be judged after the benchmark collapse.