Self-Healing Software Is Here: After AIxCC, Autonomy Leads

At the AI Cyber Challenge finals, autonomous systems found real flaws in widely used code and shipped working fixes in minutes. Here is how self-healing software reshapes pipelines, registries, governance, and the economics of zero days.

The line we just crossed

At the finals of the United States government’s AI Cyber Challenge, a two year competition run by the Defense Advanced Research Projects Agency, autonomous systems did something defenders have wanted for decades. They found real vulnerabilities in widely used code and produced working patches on their own. DARPA named Team Atlanta the winner and said multiple teams released their cyber reasoning systems as open source for immediate use by defenders. The program’s results included dozens of patched flaws across tens of millions of lines of code, with some fixes arriving in under an hour from discovery. The tempo changed in public, measurable ways, and the tools are now in the commons. DARPA’s results announcement is the official record of the inflection point.

The implication is larger than a single contest. We have crossed from manual, calendar driven repair to machine time defense. That change pulls on everything around it, from how we design pipelines and package registries to how we govern open source communities and assign liability. If defenders can generate and land high confidence fixes within minutes, the half life of a zero day vulnerability begins to shrink. Offense relies on windows of opportunity. Autonomy narrows those windows.

From Patch Tuesday to machine minutes

For twenty years, the rhythm of software security was set by human schedules. Vendors batched fixes into monthly drops. Open source maintainers worked through queues after work. Enterprises ran staged rollouts on maintenance weekends. The cadence made sense given human limits. It also gave attackers a predictable clock to work against.

Machine generated patching shifts that clock. In the finals, systems analyzed large codebases, generated patches, and paired them to the right vulnerabilities faster than human teams could triage bug reports. Performance was scored on speed and accuracy, not volume, so the systems had to submit precise, testable changes rather than shotgun edits. When a patch arrives minutes after discovery, the attacker’s playbook changes. Exfiltration plans must execute immediately. Persistence gets harder. Exploit resale value falls.

There is a historical parallel in the move from batch to streaming data processing. Once data could be acted on as it arrived, entire industries redesigned their operations. Security is in a similar moment. The unit of work is no longer a ticket. It is an event, and the repair can be an automated action.

If you have been following our discussions about turning organizations into execution environments, this shift will feel familiar from the argument in Agent OS Arrives: The Firm Turns Into a Runtime. Security joins that operating model when patches become continuous, signed, and automatically propagated.

Software as an immune system

Security teams have described defense with biological metaphors for years. Now the analogy is practical. Think of an application as a body with a circulating immune system.

- Sensors act like antigen detectors. They notice suspicious inputs or malformed packets.

- Reasoning engines act like T cells. They recognize a pattern of harm and decide how to respond.

- Patch generators synthesize antibodies. In code, that might mean a bounds check, a type change, or a safe parsing routine.

- Deployment pipelines act like the bloodstream. They deliver the fix to the right places with canaries and rollbacks.

This is not hand waving. The challenge results showed that automated systems can take a report generated by fuzzing or static analysis, prove the bug is exploitable, and emit a minimal change that neutralizes it. That is an immune response that does not wait for a help desk ticket or a quarterly sprint.

What the new agents actually do

To make the idea concrete, consider what an agentic patch bot looks like inside a modern continuous integration and continuous delivery pipeline. Terms first. Continuous integration means every change is automatically built and tested. Continuous delivery means approved changes ship quickly and safely.

Here is a basic flow an autonomous agent can execute today using the building blocks most teams already have:

- Intake and triage

  - Subscribe to vulnerability feeds such as the Open Source Vulnerabilities database and internal scanners.

  - Look for matches against known dependencies and link them to specific files and functions.

- Reproduce and prove

  - Spin up an isolated environment to replay the failing input or fuzz case.

  - Produce a proof of vulnerability that a reviewer or another bot can verify.

- Generate and test a patch

  - Propose a minimal code change, often a few lines, and regenerate relevant tests.

  - Run the full test suite plus targeted security tests.

- Prepare a safe rollout

  - Create a signed pull request with a clear diff and proof artifacts.

  - Stage a canary rollout, monitor key metrics, and watch for regressions.

- Land or back out

  - If health checks pass, merge and propagate the fix.

  - If anything looks odd, automatically roll back and escalate to a human.
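The flow above can be sketched as a small decision function. This is a minimal illustration, not how any AIxCC system is built: `run_patch_flow`, `FlowResult`, and the three callables are hypothetical names, and each callable stands in for a real stage (sandboxed reproduction, the test suite, canary monitoring) that your pipeline would supply.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class FlowResult:
    outcome: str                          # "merged", "rolled_back", or "rejected"
    log: List[str] = field(default_factory=list)


def run_patch_flow(
    reproduce: Callable[[], bool],        # hypothetical: replays the failing input
    tests_pass: Callable[[], bool],       # hypothetical: full suite plus security tests
    canary_healthy: Callable[[], bool],   # hypothetical: canary health checks
) -> FlowResult:
    """Walk one finding through reproduce -> test -> canary -> land or back out."""
    result = FlowResult(outcome="rejected")

    # Reproduce and prove: without a proof, nothing moves forward.
    if not reproduce():
        result.log.append("could not reproduce; escalate to a human")
        return result
    result.log.append("proof of vulnerability recorded")

    # Generate and test: a failing candidate never reaches rollout.
    if not tests_pass():
        result.log.append("candidate patch failed tests; escalate to a human")
        return result
    result.log.append("patch passed tests; signed pull request opened")

    # Land or back out, based on canary health.
    if canary_healthy():
        result.outcome = "merged"
        result.log.append("canary healthy; merged and propagated")
    else:
        result.outcome = "rolled_back"
        result.log.append("canary unhealthy; rolled back and escalated")
    return result
```

Every exit path that is not a clean merge ends with an escalation entry in the log, which keeps the human in the loop exactly where the flow says it should be.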

If this sounds like Dependabot or Renovate, that is the right mental model, but the remit is broader. Instead of bumping versions, the bot edits application code and library internals. It does not merely advise; it acts, within guardrails that you define.

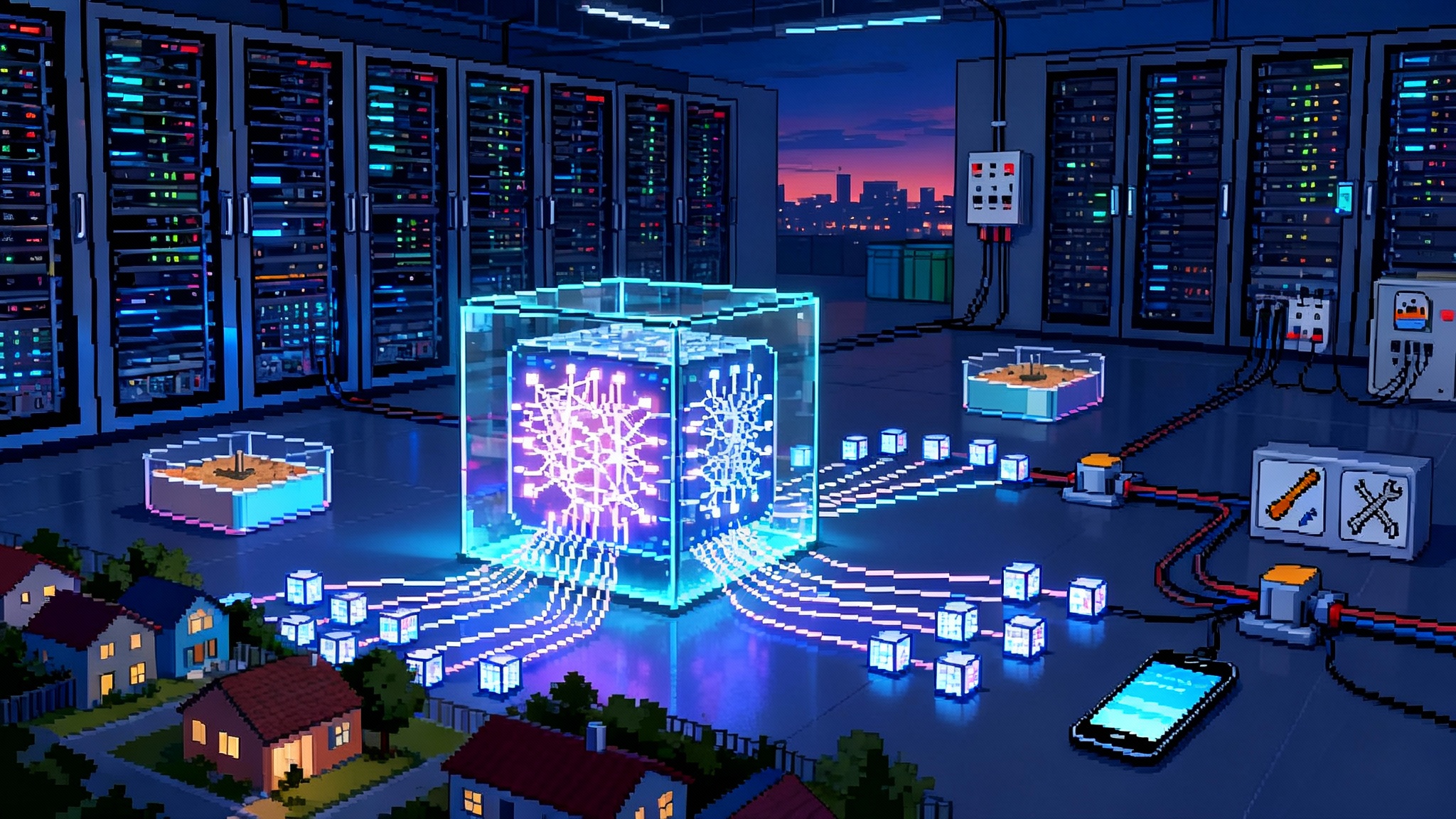

Package registries as public health systems

Package registries such as npm, PyPI, Maven Central, and crates.io function like public health hubs. They are where infections can spread, and they are also where herd immunity can begin. Autonomy changes how registries can behave.

- Quarantine and auto fix lanes. When a new vulnerability is filed against a package, the registry can route it into a quarantine lane that accepts automated patches. Maintainers review and approve, but the agent does the clerical work.

- Backport campaigns. Agents can generate backports to older supported versions where most users actually live. This reduces the pressure to do risky urgent upgrades.

- Pre publish scanning. Before a new version goes live, registry side agents run the same reasoning engines used in enterprise pipelines. If a serious issue is found, the publish halts and a patch pull request is created.

- Signed, attestable repairs. Every automated change is signed, linked to a reproducible build, and bundled with provenance documents, so downstream consumers can trust the fix.
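To make the last item concrete, here is a minimal sketch of a repair attestation that binds a patch to a build and an agent identity. It is an assumption-heavy toy: the field names and the functions `make_attestation` and `verify_attestation` are hypothetical, and the HMAC with a shared key is only a standard library stand-in for the asymmetric signatures and provenance formats a real registry would use.

```python
import hashlib
import hmac
import json


def make_attestation(patch_diff: str, build_digest: str, agent_id: str, key: bytes) -> dict:
    """Bundle a patch digest, a build digest, and an agent identity, then sign the bundle."""
    statement = {
        "agent_id": agent_id,
        "patch_sha256": hashlib.sha256(patch_diff.encode("utf-8")).hexdigest(),
        "build_digest": build_digest,
    }
    # Canonical JSON (sorted keys) so signer and verifier hash identical bytes.
    payload = json.dumps(statement, sort_keys=True).encode("utf-8")
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"statement": statement, "signature": signature}


def verify_attestation(attestation: dict, patch_diff: str, key: bytes) -> bool:
    """Check that the signature is valid and that it covers this exact patch."""
    statement = attestation["statement"]
    payload = json.dumps(statement, sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    patch_digest = hashlib.sha256(patch_diff.encode("utf-8")).hexdigest()
    digest_ok = statement["patch_sha256"] == patch_digest
    # compare_digest avoids leaking information through timing differences.
    return digest_ok and hmac.compare_digest(expected, attestation["signature"])
```

A downstream consumer would refuse any automated fix whose attestation fails this check, whether because the diff was altered after signing or because the signer is not one it trusts.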

None of this requires a new theory of software. It requires wiring the same capabilities used in the challenge into the supply chain we already rely on, with clear rules of engagement for human maintainers.

For more on why verifiable artifacts matter, see how attestation becomes a default in Proof of Compute Is Here. Self healing code is more credible when every action can be traced and verified.

The ethics of agents editing the code commons

Open source is a commons managed by people who care. Introducing autonomous editors raises practical ethics questions that are more specific than abstract worries about artificial intelligence.

- Consent and control. Maintainers must be able to opt in to automated edits, set rate limits, and define what kinds of changes agents may propose.

- Attribution and accountability. Every automated change should carry cryptographic identity and detailed logs, so credit flows to the right people and investigations have evidence.

- Triage discipline. A wave of mediocre machine patches would burn out maintainers. Registries and forges need quality gates that reject noisy or speculative edits.

- Safety against new supply chain attacks. An adversary could hide a backdoor in a superficially helpful patch. Mandatory secondary review by an independent agent, plus human spot checks, reduces this risk.
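Consent and control can be expressed as a small, checkable policy. The sketch below assumes a hypothetical `AgentPolicy` that a maintainer might declare for a repository; the field names and the change kind labels are illustrative, not a convention any forge or registry uses today.

```python
from dataclasses import dataclass
from typing import FrozenSet, Tuple


@dataclass(frozen=True)
class AgentPolicy:
    opted_in: bool                   # maintainers must explicitly enable agents
    max_proposals_per_day: int       # rate limit to protect reviewer attention
    allowed_kinds: FrozenSet[str]    # e.g. {"bounds_check", "input_validation"}


def may_propose(policy: AgentPolicy, kind: str, proposals_today: int) -> Tuple[bool, str]:
    """Return (allowed, reason) for one proposed automated change."""
    if not policy.opted_in:
        return False, "maintainers have not opted in"
    if kind not in policy.allowed_kinds:
        return False, f"change kind '{kind}' is not permitted"
    if proposals_today >= policy.max_proposals_per_day:
        return False, "daily rate limit reached"
    return True, "ok"
```

Returning a reason alongside the decision gives the agent something to log, which supports the accountability goal as well as the consent one.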

Ethics here means designing for respect. Agents do the toil. Humans make the calls that set project direction and taste. The goal is to free maintainers from the grind so they can do the creative work that attracted them in the first place.

Liability and governance will shift

When a serious safety technology becomes widely available, regulators and courts tend, over time, to treat failure to use it as evidence of negligence. Think of automatic emergency braking in cars. Software is likely to follow the same arc.

- Standard of care. Once autonomous patching is widely available, a hospital that delays a critical fix for days because it still relies on hand processed tickets will have to defend that choice.

- Auditability. Autonomy must produce complete, tamper evident logs. If an automated change introduces a defect, clear records let insurers and courts allocate responsibility.

- Safe harbors. Policy makers can speed adoption by creating safe harbors for organizations that implement certified autonomous patching and disclose incidents quickly.

- Procurement pressure. Critical infrastructure contracts can require that vendors expose machine actionable interfaces for patch bots and supply provenance data with every update.

The governance tone should be firm and enabling, not punitive. The public interest is best served by rapid, verifiable repair, paired with clear expectations and transparent reporting.

Why shortening the half life of zero days flips the balance

Attackers thrive on dwell time, the period between first exploitation and effective remediation. If an exploit lives for weeks, it pays to build reliable chains, integrate them into crimeware kits, and resell them. If a fix lands in hours and is automatically propagated, the economics change.

- Lower resale value. A vulnerability that is fixed quickly cannot be packaged into long lived campaigns, which reduces incentives to hoard it.

- Fewer soft targets. As autonomous repairs roll through package registries and managed platforms, the pool of unpatched systems shrinks faster, so botnets grow more slowly.

- Harder privilege escalation. Many serious incidents rely on chaining a fresh bug with a known but unpatched weakness. Faster patching removes the old stepping stones.

Speed alone is not a panacea. Visibility, segmentation, and backups still matter. But turning a week of exposure into an afternoon changes the shape of risk.
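A rough calculation shows why the shape changes. The sketch below takes the half life metaphor literally and assumes the share of unpatched systems decays exponentially after a fix exists. That model, and the one week and four hour figures, are illustrative assumptions rather than measurements from the challenge.

```python
import math


def unpatched_fraction(hours_elapsed: float, half_life_hours: float) -> float:
    """Share of systems still exposed, assuming exponential decay after a fix exists."""
    return 0.5 ** (hours_elapsed / half_life_hours)


def mean_exposure_hours(half_life_hours: float) -> float:
    """Average time a system stays exposed under the same model: half life / ln 2."""
    return half_life_hours / math.log(2)


if __name__ == "__main__":
    # Assumed half lives: one week for calendar driven patching, four hours for automated.
    for label, half_life in (("weekly cadence", 168.0), ("machine time", 4.0)):
        still_exposed = unpatched_fraction(24.0, half_life)
        print(f"{label}: {still_exposed:.1%} exposed after 24h, "
              f"mean exposure {mean_exposure_hours(half_life):.1f}h")
```

With these illustrative numbers, a one week half life leaves roughly 91 percent of systems exposed after a day, while a four hour half life leaves under 2 percent. An attacker planning a campaign faces a very different pool of targets in each case.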

What autonomous by default looks like in critical infrastructure

The phrase sounds bold. In practice it means building guardrail rich pipelines where automation handles the first response and humans supervise.

- Two key control. Security and operations must each grant a policy that allows agents to land patches in critical systems. Either party can pause automation at any time.

- Canary everything. Every change deploys to a small slice of the fleet first, with automatic rollback if error budgets are exceeded.

- Separate safety layers. Pair code fixes with runtime mitigations such as configuration sandboxing or input rate limiting, so a rushed fix is not the only defense.

- Practice outages. Run game days where the agent proposes and lands a patch in a realistic scenario. Humans watch the telemetry and rehearse intervention.

- Human escape hatches. A red button to stop autonomous changes is always visible, staffed, and tested.
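The first two guardrails and the red button combine into one small gate. In this sketch the names `CanaryStats` and `rollout_decision` are hypothetical, and the error budget is modeled as a simple error rate threshold, which is a simplification of how error budgets are usually tracked.

```python
from dataclasses import dataclass


@dataclass
class CanaryStats:
    requests: int
    errors: int


def rollout_decision(
    security_approved: bool,
    operations_approved: bool,
    paused: bool,
    canary: CanaryStats,
    error_budget: float,
) -> str:
    """Return "hold", "rollback", or "promote" for an automated patch."""
    # Red button and two key control: either party can stop the agent.
    if paused or not (security_approved and operations_approved):
        return "hold"
    # No canary traffic yet means no evidence, so do not promote.
    if canary.requests == 0:
        return "hold"
    error_rate = canary.errors / canary.requests
    return "rollback" if error_rate > error_budget else "promote"
```

The order of the checks matters: the pause and approval checks run before any canary data is consulted, so a healthy canary can never override a human stop.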

This is not about replacing people. It is about making human judgment the exception handler rather than the main loop.

A practical playbook you can apply now

You do not need to be a federal program winner to start. Here is a sequence any large organization can follow in the next quarter.

- Establish patch automation policy

  - Define which repositories and environments permit agentic changes.

  - Require signed commits and provenance attestations for every automated fix.

- Wire the pipeline

  - Insert an agent stage after static analysis and fuzzing.

  - Feed the agent proofs and require it to attach its own proofs to each patch.

- Tighten the loop with registries

  - Subscribe to registry advisories and enable backporting for supported versions.

  - For internal registries, add quarantine and auto fix lanes with maintainer approval.

- Build observability and rollback

  - Instrument health checks specific to security fixes, not just generic error rates.

  - Prefill rollback plans, including cache and configuration resets.

- Run an autonomy fire drill

  - Choose a known low risk bug and let the agent land the fix end to end.

  - Capture lessons and refine guardrails before tackling higher stakes code.

If you want a deeper view of the compute economics that make this practical, the drop in reasoning cost we explored in The Price of Thought Collapses explains why defenders can afford to run many tests and many candidate patches in parallel.

Open sourcing the engines that learned to heal

A pragmatic reason to be optimistic is that the systems that just demonstrated machine time repair are being released to the public as open source. That means enterprises, small businesses, and open source projects can adopt and adapt the same techniques without negotiating one off licenses or waiting for a vendor roadmap. In the run up to the finals, DARPA also set the expectation that finalists would open source their systems under an Open Source Initiative approved license, which lowers the barrier to experimentation. DARPA’s scoring and release note explains the open source plan.

Open source release matters for a second reason. It lets independent researchers audit how these systems decide, test, and patch. That scrutiny is how the community will spot blind spots, tune models for different languages, and harden the guardrails that prevent harmful changes.

Culture change for maintainers and platform teams

Autonomy introduces new habits.

- Merge etiquette. Automated pull requests should come with lightweight, standardized templates that make review quick. Large, multi file edits should be split into atomic changes.

- Budgeting for safety. Project leads should reserve time not only for feature work but for agent policy updates and simulation. That time protects the project when the next big incident hits.

- Shared language. Security, platform, and application engineers should agree on a small vocabulary that describes what an agent is allowed to do in each repository. Ambiguity is the enemy of safe automation.

These are small moves, but they build trust between humans and their new teammates.

What could go wrong and how to prevent it

Every new capability creates new failure modes. The right question is how to make those failures safe, visible, and rare.

- False fixes. An agent might silence a symptom without curing the bug. Countermeasure: require a proof of vulnerability before the patch and a proof of non vulnerability after.

- Performance regressions. A correct fix could slow a critical path. Countermeasure: extend canary checks to include latency and throughput gates.

- Model poisoning. If training data includes malicious patterns, agents could learn harmful habits. Countermeasure: use curated corpora for code repair tasks and test agents on red teamed benchmarks.

- Abuse of automation. An attacker might try to use your agents as a backdoor deployment path. Countermeasure: hardware backed signing keys and strict identity checks for every agent action.
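The false fix countermeasure is easy to show with a toy. The sketch below uses a deliberately simplified over-read bug: every function name is hypothetical, and a Python slice over a simulated memory region stands in for what would be a memory safety flaw in C. The gate accepts a patch only if the proof triggers before the patch, fails after it, and normal behavior is preserved.

```python
from typing import Callable

Echo = Callable[[bytes, int], bytes]
SECRET = b"SECRET"  # adjacent data the caller must never see


def echo_vulnerable(payload: bytes, declared_len: int) -> bytes:
    memory = payload + SECRET
    return memory[:declared_len]          # trusts the caller's declared length


def echo_patched(payload: bytes, declared_len: int) -> bytes:
    return payload[:min(declared_len, len(payload))]   # clamps to the real length


def echo_false_fix(payload: bytes, declared_len: int) -> bytes:
    if declared_len == 64:                # silences one reported input only
        return payload
    return echo_vulnerable(payload, declared_len)


def proof_of_vulnerability(echo: Echo) -> bool:
    """True if any over-long declared length returns more than the 2 byte payload."""
    return any(len(echo(b"hi", n)) > 2 for n in (3, 8, 64))


def regression_ok(echo: Echo) -> bool:
    """Normal requests must still behave as before."""
    return echo(b"hello", 5) == b"hello" and echo(b"hello", 2) == b"he"


def accept_patch(before: Echo, after: Echo) -> bool:
    # Proof must hold before the patch, fail after it, and behavior must be preserved.
    return proof_of_vulnerability(before) and not proof_of_vulnerability(after) and regression_ok(after)
```

Here `accept_patch(echo_vulnerable, echo_patched)` passes, while `accept_patch(echo_vulnerable, echo_false_fix)` is rejected because the proof still triggers on inputs the false fix did not special case. The gate is only as good as the proof, so a proof that exercises a single input would let the false fix through.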

None of these risks are a reason to stall. They are engineering prompts.

The road ahead

After a public line is crossed, the practical work begins. The research community will publish the benchmarks and the audits. Vendors will plug reasoning engines into familiar tools. Registries will experiment with quarantine lanes and backport campaigns. Regulators will ask what a reasonable deployment of autonomy looks like for a hospital, a pipeline operator, or a cloud platform.

There is a high level lesson hiding in the details. Security gets better when we move toil to the fastest safe loop and reserve human attention for choices that shape the system. The AI Cyber Challenge showed that the fastest safe loop for a large class of software defects is no longer human. It is an agent that can prove a bug, propose a tight fix, test it without mercy, and ship it with a safety net.

Adopt that loop, watch your mean time to repair collapse, and you will feel a change in posture. Incidents become shorter and less dramatic. Release fear subsides. Maintainers get their evenings back. Offense loses the clock it counted on. That is what it looks like when software heals itself.