From Apps to Actors: The Agent Identity Layer Arrives

Software is graduating from apps you open to actors you manage. This guide maps the agent identity layer, from badges and policy to stores and teamwork protocols, and offers playbooks you can deploy today.

Breaking: software just learned to carry a badge

In a burst of spring announcements, the big platforms quietly shipped the prerequisites for a new category. Microsoft unveiled multi-agent orchestration, an Agent Store, and Entra Agent ID, describing how agents can be provisioned and governed like employees. The company also flagged emerging standards for agent-to-agent communication and tool access. That entire bundle turned a concept into a roadmap, and it did so in public, with dates and previews. If you want a single post that captures the shift, start with Microsoft’s own write-up of the Build 2025 Copilot and Entra updates.

Meanwhile, Google framed the consumer-side arc. It is rolling out an Agent Mode for Gemini that learns multi-step tasks through a method it calls teach and repeat, bringing an operating-system-level assistant closer to daily reality. It paired that with advances to Project Mariner, its computer-use agent. Google also said its application programming interfaces will speak common protocols for agent tools and teamwork. For details, see its I/O recap, the Gemini Agent Mode and Mariner post, which moved Mariner toward Agent Mode and committed to open interfaces.

OpenAI pushed action forward in professional workflows. Operator turned the browser into an execution surface, while Deep Research normalized long-running, agentic investigations that plan, search, verify, and synthesize before producing work product. These are not demos. They are design choices that change how people and software divide labor. If you are building the connective tissue behind agents, the advice to build the pipes, not the prompts has never been more relevant.

The one-sentence shift

Software is graduating from apps you open to actors you manage. The last era treated programs like tools that responded to prompts. The next era treats agents like teammates who carry identity, follow policies, leave audit trails, and collaborate with each other under human supervision.

What is the agent identity layer?

Think about your company directory. Every person has an identity, a role, a set of permissions, and an employment history. The agent identity layer applies the same logic to software that can act.

- Identity: a durable identifier for each agent, issued by the organization. In Microsoft’s case this is Entra Agent ID, which assigns agents an identity the same way employees get an account.

- Credentials: keys, tokens, certificates, and attestations that prove the agent is who it claims to be and is running the code it claims to run.

- Roles and least privilege: policy-defined scopes that bound what an agent can see and do, down to a single database table or a single action endpoint.

- Audit trail: an append-only log of every decision, tool call, and side effect, tied to timestamps and resource identifiers.

- Reputation: a record of performance and compliance that travels with the agent through upgrades and redeployments.

When these pieces exist together, an agent is not just a chat surface. It is a subject in your security model and a line item in your org chart.

The three primitives that changed in 2025

1) Credentials that work like badges

Entra Agent ID is the emblem of a larger movement: treat agents as first-class identities. The benefit is not only control, it is legibility. When an agent pulls a customer record or books a shipment, the log shows which agent did it, under which role, using which credential, and with what justification prompt. Pair that with modern attestation so you can prove the agent binary and configuration that ran. Now you can investigate incidents, rotate secrets, quarantine misbehavior, and offboard agents the same way you offboard a contractor.

A simple pattern to adopt today:

- Create a separate identity for each agent, not a shared service account.

- For every permission, ask whether read-only is enough. Default to read-only. Add write or transaction scopes only when a human approves a specific task type.

- Issue short-lived tokens, rotate them often, store them in a managed secret vault, and bind them to the agent’s attested runtime.
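The pattern above can be sketched in a few lines. This is a minimal illustration, not Entra Agent ID's API: `issue_token`, `verify_token`, and `SIGNING_KEY` are hypothetical names, the key is hard-coded only for the demo, and runtime attestation is left out entirely.

```python
from __future__ import annotations

import base64
import hashlib
import hmac
import json
import time

# Hypothetical demo key. In practice this would live in a managed secret vault.
SIGNING_KEY = b"demo-only-key"


def issue_token(agent_id: str, scopes: list[str], ttl_seconds: int = 300,
                now: float | None = None) -> str:
    """Return a signed token bound to one agent, its scopes, and an expiry."""
    issued = time.time() if now is None else now
    claims = {"sub": agent_id, "scopes": sorted(scopes), "exp": issued + ttl_seconds}
    payload = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode())
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return payload.decode() + "." + signature


def verify_token(token: str, required_scope: str, now: float | None = None) -> bool:
    """Check the signature first, then the expiry and the requested scope."""
    payload, _, signature = token.rpartition(".")
    expected = hmac.new(SIGNING_KEY, payload.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(signature, expected):
        return False
    claims = json.loads(base64.urlsafe_b64decode(payload))
    current = time.time() if now is None else now
    return current < claims["exp"] and required_scope in claims["scopes"]
```

Each agent gets its own subject and its own scope list, so a read-only agent simply never holds a token that verifies against a write scope, and a leaked token expires on its own.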

2) Stores that surface reputation, not just discovery

Agent stores are appearing inside productivity suites. They look like app stores, but the key field is not marketing copy, it is a work history. Expect versioned capabilities, organization-level ratings, incident flags, and callout cards that display policy compliance. If your finance team installs an Accounts Payable Agent, the store entry should show supported systems, default roles, data residency, and example logs for a dry run.

For creators, an agent store is also a distribution channel for roles. A sales-ops agent can ship with hardened playbooks for common tasks, plus tests and sample datasets. The store becomes a marketplace of repeatable jobs, not just a list of chatbots.

3) Teamwork protocols that turn agents into crews

Multi-agent orchestration is crossing from research into product. You can now assemble a small crew, each with a declared specialty and a contract for how to escalate to humans. Two standards matter here. First, an agent-to-agent protocol, often called A2A, that lets agents address and message each other with typed intents inside a safe boundary. Second, a tool access standard. The Model Context Protocol, introduced by Anthropic and embraced by the majors, gives agents a consistent way to reach tools, data, and services. Together, A2A and MCP mean a scheduling agent can ask a pricing agent for a quote, which then calls a finance tool, without custom plumbing for each pairing.

The payoff is not magic. It is maintainability. When every agent integrates through the same socket, you can swap a model, upgrade a connector, or add a new coworker without rewiring the whole system. For deeper context on attack surfaces and guardrails as these protocols spread, see our control stack overview.
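To make the single-socket idea concrete, here is a toy sketch of the scheduling, pricing, and finance example. It does not reproduce the real A2A or MCP wire formats. `Intent`, `Bus`, `pricing_agent`, and `finance_tool_unit_price` are all hypothetical names, and the price table is made up for the demo.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple


@dataclass(frozen=True)
class Intent:
    """A typed message from one agent to another."""
    sender: str
    recipient: str
    kind: str                      # for example "quote.request"
    payload: dict = field(default_factory=dict)


class Bus:
    """Routes intents to registered handlers and rejects anything unregistered."""

    def __init__(self) -> None:
        self._handlers: Dict[Tuple[str, str], Callable[[Intent], dict]] = {}

    def register(self, agent: str, kind: str, handler: Callable[[Intent], dict]) -> None:
        self._handlers[(agent, kind)] = handler

    def send(self, intent: Intent) -> dict:
        handler = self._handlers.get((intent.recipient, intent.kind))
        if handler is None:
            raise LookupError(f"{intent.recipient} does not accept {intent.kind}")
        return handler(intent)


def finance_tool_unit_price(sku: str) -> float:
    """Stand-in for a tool reached through a shared tool interface."""
    return {"SKU-1": 12.5}[sku]


def pricing_agent(intent: Intent) -> dict:
    """Answer a quote request by calling the finance tool."""
    unit_price = finance_tool_unit_price(intent.payload["sku"])
    return {"total": unit_price * intent.payload["quantity"]}


bus = Bus()
bus.register("pricing", "quote.request", pricing_agent)
quote = bus.send(Intent("scheduler", "pricing", "quote.request",
                        {"sku": "SKU-1", "quantity": 4}))
```

The scheduler knows only the recipient name and the intent kind. Swapping the pricing agent or the finance tool means re-registering one handler, not rewiring the caller.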

Consumers are getting teach and repeat

On phones, the change shows up as teach and repeat. Instead of telling an assistant to search listings every morning, you show it once in a browser, then it repeats with your constraints. It can ask for consent before purchases, schedule tours, place holds, and summarize options in your preferred format. The most important shift is not the click speed, it is memory. The agent keeps context across sessions within guardrails so it can complete tasks you would otherwise abandon. If you are designing these experiences, study memory as product strategy and design retention rules up front.

Teach and repeat is the easiest way to understand why governance matters. If an assistant remembers tasks, it needs identity and a diary. That is what the new identity layer provides. Without it, you are teaching a ghost.

From prompts to policies

Prompts are still useful for exploration. They are a brittle foundation for accountability. Policies travel further. A policy describes what an agent is allowed to do, where, and under what conditions. It can include a runbook for how to escalate or seek consent. It can deny categories of side effects by default and require human confirmation for irreversible actions.

Here is a plain checklist to convert a brittle prompt into a robust assignment:

- Write the job as a role. Example: Customer Refund Agent. Scope: refunds under 100 dollars, under 30 days since purchase, United States only.

- Map the role to minimal permissions. Read orders, write to refunds table, write to email templates. No access to credit card data.

- Define escalation rules. If the refund request is over 100 dollars, missing required details, or outside policy, create a ticket and tag a human owner.

- Log everything. Include the input, the plan, the tool calls, the outputs, and the timestamped approvals.

- Add a dry run mode and make it the default in new environments.

Policies turn agents into predictable coworkers instead of interesting demos.
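The Customer Refund Agent from the checklist can be written down as a small policy function. This is a sketch under assumptions: `RefundRequest`, `decide`, and the constant names are hypothetical, and a request for exactly 100 dollars or exactly 30 days is treated as out of scope because the role says "under".

```python
from dataclasses import dataclass

MAX_AMOUNT_USD = 100        # scope: refunds under 100 dollars
MAX_DAYS = 30               # scope: under 30 days since purchase
ALLOWED_COUNTRIES = {"US"}  # scope: United States only


@dataclass(frozen=True)
class RefundRequest:
    amount_usd: float
    days_since_purchase: int
    country: str


def decide(request: RefundRequest, dry_run: bool = True) -> dict:
    """Return a refund decision, escalating anything outside the role's scope."""
    reasons = []
    if request.amount_usd >= MAX_AMOUNT_USD:
        reasons.append("amount at or over limit")
    if request.days_since_purchase >= MAX_DAYS:
        reasons.append("purchase too old")
    if request.country not in ALLOWED_COUNTRIES:
        reasons.append("country out of scope")
    if reasons:
        # Out of policy: hand off to a human owner instead of acting.
        return {"action": "escalate", "executed": False, "reasons": reasons}
    # In policy: only perform the side effect when dry run is switched off.
    return {"action": "refund", "executed": not dry_run, "reasons": []}
```

Dry run is the default argument, so a new environment has to opt in to real side effects explicitly.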

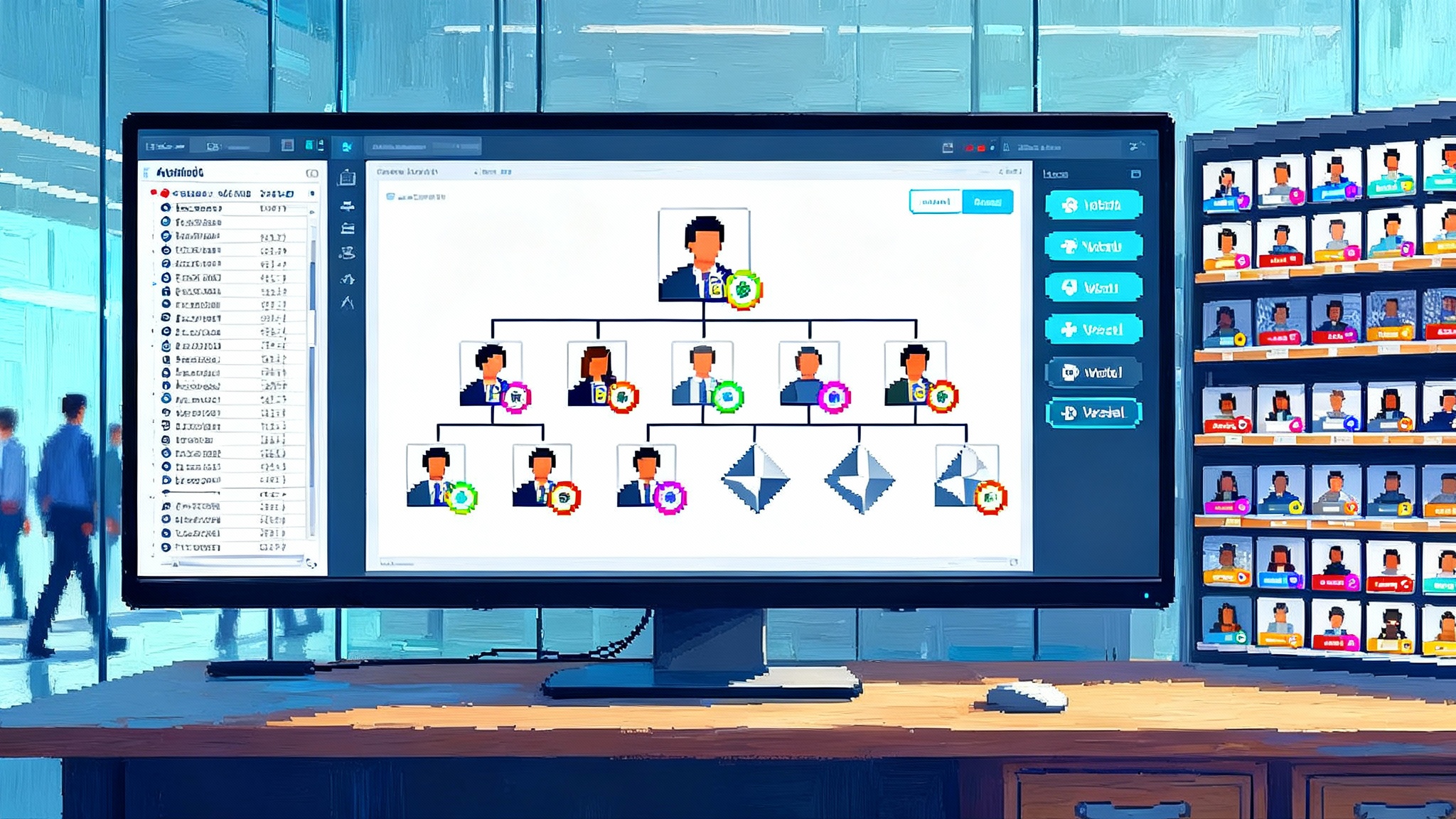

Org charts gain nonhuman teammates

Treat agents as hires and the rest follows. You will onboard them with a ticket that creates their identity, assigns a role, and provisions a workstation if they need a browser. You will give them a manager who owns outcomes and reviews logs. You will add them to the on-call rotation for the systems they touch. You will offboard them when a project ends, revoking access and archiving their artifacts.

Look for three quick wins:

- Back office. Claims intake, returns, or benefits enrollment have well-scoped policies and high volumes. An agent can triage and complete a large fraction of cases while raising edge cases to people with context.

- Knowledge work. Analyst and researcher roles already exist inside productivity suites. Start by feeding them your existing templates and examples so their outputs mirror your house style.

- Sales and service. Let agents assemble proposals from a price book, then pass to humans for narrative and negotiation. In service, let agents draft updates and close simple tickets automatically.

Contracts evolve to cover agents

Procurement language is where norms harden. Expect agreements to add clauses that describe agent duties, required logs, and recourse.

- Duties: the agent’s tasks, the data and systems it may access, and the measurable outputs it must produce.

- Logs: retention period, redaction rules, and audit export formats. Require that every side effect is traceable to an agent identity and version.

- Recourse: what happens when the agent violates policy or harms a customer. Include freeze switches, rollback procedures, and indemnities tied to verifiable logs rather than screenshots of chat windows.

If your vendors ship agents, ask for their role definitions, default policies, attestation methods, and incident history. If they cannot provide those in writing, wait.

Accountability moves to action history

A good mental model is a flight recorder. Every agent that can spend money, change data, or talk to customers should keep a tamper-evident trace of its decisions and actions. That trace is how you:

- Explain outcomes to customers and regulators.

- Debug failures by replaying the plan and tool interactions.

- Improve performance by analyzing where it asked for help.

Do not outsource the recorder. Build or buy a standardized log format that captures inputs, plans, tool calls, outputs, model identifiers, and environment variables. Attach the agent’s identity and version to every record. You will use this for everything from customer support to compliance.
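One common way to make a trace tamper-evident is a hash chain, where each record commits to the one before it. The sketch below assumes that approach. `append_record`, `verify_chain`, and the field names are hypothetical, not a standard log format.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first record's predecessor


def _digest(body: dict) -> str:
    """Hash a record body using a stable JSON encoding."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_record(log: list, record: dict) -> dict:
    """Append a record that commits to the previous record's hash."""
    if record.keys() & {"hash", "prev_hash"}:
        raise ValueError("'hash' and 'prev_hash' are reserved field names")
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {**record, "prev_hash": prev_hash}
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """Recompute every hash; any edited or reordered record breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body.get("prev_hash") != prev_hash or _digest(body) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True
```

A record might carry `agent_id`, `agent_version`, `input`, `plan`, `tool_calls`, and `output`. A chain like this detects edits after the fact, but it does not stop someone from rewriting the whole log, so the latest hash still needs to be stored somewhere the agent cannot write.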

Practical playbooks, by role

For CTOs and heads of engineering

- Define an agent abstraction in your architecture. Treat agents as first-class services with identity, policy, telemetry, and tests.

- Adopt least privilege by default. Start all agents with read-only access and explicit dry run modes. Promote to write on approval.

- Standardize on an interface for tools and a message bus for agent-to-agent calls. This de-risks vendor swaps and makes testing easier.

- Create a test harness that includes synthetic data, chaotic interfaces, and failure injection for tools. Every new agent must pass it.
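Failure injection for tools can start very small. The sketch below wraps a tool so that a configurable fraction of calls time out, then checks that a toy agent step retries and escalates rather than guessing. `FlakyTool` and `agent_lookup` are hypothetical names, and the retry-then-escalate behavior is an assumption about what your harness would require.

```python
import random


class FlakyTool:
    """Wraps a tool function and raises TimeoutError on a fraction of calls."""

    def __init__(self, tool, failure_rate: float, seed: int = 0) -> None:
        self._tool = tool
        self._failure_rate = failure_rate
        self._rng = random.Random(seed)  # seeded so test runs are repeatable

    def __call__(self, *args, **kwargs):
        if self._rng.random() < self._failure_rate:
            raise TimeoutError("injected failure")
        return self._tool(*args, **kwargs)


def agent_lookup(tool, key: str, max_attempts: int = 3) -> dict:
    """Toy agent step: retry on timeouts, then escalate instead of guessing."""
    for _ in range(max_attempts):
        try:
            return {"status": "ok", "value": tool(key)}
        except TimeoutError:
            continue
    return {"status": "escalated", "value": None}
```

Run the same agent against the wrapped tool at several failure rates. The passing condition is not that every task succeeds, it is that every failure ends in a logged escalation.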

For CISOs and security teams

- Extend zero trust. Agents must authenticate, authorize, and attest. Add them to identity governance, access reviews, and privileged access workflows.

- Instrument anomaly detection for agents. Track unexpected tool use, permission escalations, or spikes in side effects. Alert on drift.

- Separate secrets by agent and environment. No shared credentials. Automate rotation and bind secrets to attested runtimes.
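Tracking unexpected tool use can begin as a comparison against each agent's declared baseline. This is a deliberately simple sketch. `unexpected_tool_calls` and the tool names are hypothetical, and a production detector would also look at rates and timing.

```python
from __future__ import annotations

from collections import Counter


def unexpected_tool_calls(baseline: set[str], calls: list[str]) -> dict[str, int]:
    """Count calls to tools that are not in the agent's declared baseline."""
    return dict(Counter(call for call in calls if call not in baseline))


# Example: a refund agent that suddenly starts reading payment cards.
baseline = {"orders.read", "refunds.write"}
observed = ["orders.read", "cards.read", "refunds.write", "cards.read"]
drift = unexpected_tool_calls(baseline, observed)
```

An empty result means the agent stayed inside its role. Anything else is a candidate alert, keyed by tool name so the owner can see what drifted.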

For operations and product leaders

- Set service level objectives for agents. Example metrics: task success rate, mean time between escalations, and customer satisfaction for agent-initiated interactions.

- Put agents on the incident bridge. When an agent misbehaves, the owner joins, the agent is paused, the logs are captured, and the rollback playbook runs.

- Publish an internal roster. Everyone should know which agents exist, who owns them, what they can do, and how to request changes.
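Two of the example metrics above are easy to compute from the logs you are already keeping. The sketch assumes outcomes are recorded as booleans and escalations as timestamps in seconds. Both function names are hypothetical.

```python
from __future__ import annotations


def task_success_rate(outcomes: list[bool]) -> float:
    """Fraction of tasks that completed successfully."""
    if not outcomes:
        raise ValueError("no tasks recorded")
    return sum(outcomes) / len(outcomes)


def mean_time_between_escalations(escalation_times: list[float]) -> float | None:
    """Average gap between consecutive escalations, or None with fewer than two."""
    if len(escalation_times) < 2:
        return None
    ordered = sorted(escalation_times)
    gaps = [later - earlier for earlier, later in zip(ordered, ordered[1:])]
    return sum(gaps) / len(gaps)
```

For example, escalations at 0, 60, and 180 seconds give gaps of 60 and 120, so the mean is 90 seconds.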

What could go wrong, and how to avoid it

- Prompt injection and tool misuse: isolate agents in minimal sandboxes. Validate tool outputs. Add allowlists for actions with side effects. Use content filters on inputs before they reach tools.

- Privilege creep: run periodic access reviews for agents, exactly as you do for employees. Put time limits on elevated permissions. Require approvals for expansion.

- Autonomy cliffs: gate irreversible actions with consent. If the agent is about to spend money, ship physical goods, or change legal status, require a human or second agent to sign.

- Silent failures: never accept a result without a trace. If there is no log, it did not happen.
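The allowlist and consent gate fit in one small function. A minimal sketch, assuming hypothetical action names and a single approver field. A real gate would also verify who the approver is and log the decision.

```python
from __future__ import annotations

ALLOWED_ACTIONS = {"draft_email", "issue_refund"}  # everything else is denied
IRREVERSIBLE_ACTIONS = {"issue_refund"}            # these need a second signature


def authorize(action: str, approved_by: str | None = None) -> str:
    """Return 'deny', 'needs_approval', or 'allow' for a requested action."""
    if action not in ALLOWED_ACTIONS:
        return "deny"
    if action in IRREVERSIBLE_ACTIONS and not approved_by:
        return "needs_approval"
    return "allow"
```

Deny is the default branch. An action nobody thought to list cannot slip through.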

Protocols will pick the winners

Interoperability determines whether this ecosystem compounds or forks. The Model Context Protocol gives any compliant agent a standard way to call tools, read data, and leave traces. An agent-to-agent protocol defines how they coordinate, defer, and negotiate. Think of MCP and A2A like the container standards for shipping. Once ports agreed on container sizes, global trade scaled because cranes and ships could work with anything that fit. The same will happen with agents that fit the standard sockets for tools and teamwork.

The business outcome is lower integration cost and shorter time to automate a process. The technical outcome is fewer one-off connectors and more reusable building blocks. For organizations working at the policy edge, consider how logging and verification meet procurement and audit in our take on compliance as a moat.

Reputation is the new product page

When anyone can publish an agent, reputation separates production-ready from risky. A credible reputation model will track:

- Service history: total tasks, domains, and environments handled.

- Reliability: success rates by task type, mean time between escalations, and rollback frequency.

- Compliance: number of policy violations, severity, and time to remediate.

- Verification: attestation status for the runtime and model versions, plus the status of third-party audits.

Make these signals visible in your internal agent store. Reward teams that publish strong policies and logs by routing more work to their agents. Retire agents that cannot sustain a clean record.

The near-term social contract

Granting agents minimal identity rights unlocks safe autonomy at scale. The rights are simple:

- A durable ID so actions are attributable.

- Least privilege so damage is bounded.

- Attestations so you can trust the runtime that acted.

- A right to log, which is really an obligation to be logged.

Once you adopt these, accountability shifts from prompt engineering to verifiable action history. Disputes are not about what someone typed into a text box, they are about what an agent, under a defined role, actually did.

The next twelve months

- Every platform will ship first-party identity for agents and deeper store primitives. Expect richer policy templates and store-level attestation checks.

- Enterprises will add agent access reviews to quarterly governance. Procurement will add agent clauses. Auditors will ask for standardized traces.

- Tool makers will expose MCP-compatible endpoints so that one integration serves many models. A2A will move from library to baseline capability inside team collaboration surfaces.

- Agent reliability metrics will be tracked like uptime, and vendors will publish them the way they publish service health today.

The bottom line

We just crossed a line. Agents now come with badges, tool sockets, and team radios. That changes your job. It changes product roadmaps, security policies, and contracts. The fastest path is not to wait, it is to formalize your own identity layer, publish roles and policies, and start your store with a small set of agents that already have a manager and a metric. When software becomes someone on the team, the organizations that write down how that someone works will move first and avoid the mess. The rest will be debugging screenshots.