Interfaces Become Institutions: How GUI AIs Rewire Power

As AI agents learn to see and click like people, the screen stops being a skin and becomes governance. Explore how UI treaties, clickstream audits, and accessibility reshape power, policy, and product strategy in 2026 and beyond.

Breaking: the interface just became the institution

By early November 2025, a quiet but profound shift had landed. Google showed that a general model can look at a screen, decide what to do, and then click and type until the task is complete. Instead of relying only on private engineering hooks, the agent works through the same pixels people see. Google detailed its Gemini Computer Use model for agents, which began rolling out to developers in October. The framing sounds simple. The implications are structural.

When artificial intelligence systems can operate graphical interfaces at scale, interface design stops being cosmetic. It becomes the de facto governance layer for capability, safety, and market power. For two decades, platform strategy meant owning the application programming interface. The new contest is about owning the screen, the focus ring, the permission prompt, the accessibility tree, and the replayable record of what happened between clicks.

From APIs to pixels

Agents like Gemini Computer Use do not wait for a product team to expose a field or automate a workflow. They see a page, comprehend the layout and labels, then execute an action plan: open, scroll, click, type, submit, repeat. The system works in a loop. It receives a task, a screenshot, and a history of prior actions. It proposes the next step, then re-evaluates after the interface changes. That loop lets an agent fill a form, adjust filters, or move through pages behind a login.
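The observe, propose, act loop described above can be sketched in a few lines. This is a minimal, hypothetical illustration and not Google's API: `FakeScreen`, `Action`, and `propose_next_action` are invented stand-ins for a real screenshot source, a real model call, and a real input driver.

```python
from dataclasses import dataclass, field


@dataclass
class Action:
    kind: str          # "click", "type", or "done" (hypothetical action vocabulary)
    target: str = ""   # label of the element to act on
    text: str = ""     # text to type, if any


@dataclass
class FakeScreen:
    """Hypothetical stand-in for a real browser: a form with two labeled fields."""
    fields: dict = field(default_factory=lambda: {"Name": "", "Email": ""})
    submitted: bool = False

    def screenshot(self) -> dict:
        # A real agent receives pixels; this toy returns a structured snapshot instead.
        return {"fields": dict(self.fields), "submitted": self.submitted}

    def execute(self, action: Action) -> None:
        if action.kind == "type":
            self.fields[action.target] = action.text
        elif action.kind == "click" and action.target == "Submit":
            self.submitted = True


def propose_next_action(task: dict, shot: dict, history: list) -> Action:
    """Hypothetical policy standing in for the model: fill empty fields, then submit.
    `history` mirrors the inputs described above, though this toy policy ignores it."""
    if shot["submitted"]:
        return Action("done")
    for label, value in shot["fields"].items():
        if not value:
            return Action("type", label, task[label])
    return Action("click", "Submit")


def run_agent(task: dict, screen: FakeScreen, max_steps: int = 10) -> list:
    history = []
    for _ in range(max_steps):
        shot = screen.screenshot()                         # observe the current interface
        action = propose_next_action(task, shot, history)  # propose the next step
        if action.kind == "done":
            break
        screen.execute(action)                             # act, then re-evaluate next iteration
        history.append(action)
    return history


if __name__ == "__main__":
    screen = FakeScreen()
    steps = run_agent({"Name": "Ada", "Email": "ada@example.com"}, screen)
    print([(s.kind, s.target) for s in steps])
    # [('type', 'Name'), ('type', 'Email'), ('click', 'Submit')]
```

Replacing `propose_next_action` with a real model call and `FakeScreen` with a real browser driver is where the actual engineering lies; the loop shape itself stays the same.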

This sounds like convenience. It is also a change in who holds the keys. The API once decided which partners could extend a product and which could not. If a field or action was not exposed, you were locked out. With computer-using models, the interface itself becomes the programmable surface. Any clickable item becomes addressable. The cost of an integration shifts from months of engineering to hours of prompt design, policy definition, and testing.

Benchmarks and early internal deployments suggest the approach works well on the web and on mobile flows that mirror human behavior, including long tails that no one ever formalizes as an API. Inside large companies, the same techniques already support interface testing and automation. The direction is clear. The agent does what a person would do, only faster and with perfect memory.

Why the UI becomes a governance layer

When an AI is the operator, the user interface is not just a veneer. It is a rulebook that enforces what is possible, what is safe, and what is permitted. Three mechanisms make the interface into an institution:

- Representation. The accessibility tree, roles, labels, and states become a shared language between the agent and the application. If a button has the right role and a clear label, the agent can reason about it. If an input is marked as high risk, the agent can pause for extra confirmation.

- Orchestration. Dialogs, confirmations, and permission prompts become policy gates. Just as an API can require a token with certain scopes, a UI can require a human in the loop, a one-time code, or a double-checked intent before the agent proceeds.

- Accountability. The clickstream is an event log. Every action can be recorded, signed, and audited to establish who did what, when, and under which policy. The interface provides the observable trace that structured integrations never expose by default.
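To make the Representation point concrete, here is a minimal sketch of an agent resolving a target by role and label in an accessibility-tree-like structure, and pausing when a node is flagged as high risk. The `Node` shape and the `high_risk` flag are assumptions for illustration; real accessibility APIs expose roles, names, and states, but no standard "high risk" property is implied.

```python
from dataclasses import dataclass, field
from typing import Iterator, Optional


@dataclass
class Node:
    role: str                      # e.g. "button", "textbox"
    label: str                     # accessible name
    high_risk: bool = False        # hypothetical flag, not a standard ARIA property
    children: list = field(default_factory=list)


def walk(node: Node) -> Iterator[Node]:
    """Depth-first traversal of the tree."""
    yield node
    for child in node.children:
        yield from walk(child)


def find(root: Node, role: str, label: str) -> Optional[Node]:
    """Resolve a target by role and accessible name."""
    for node in walk(root):
        if node.role == role and node.label == label:
            return node
    return None


def plan_click(root: Node, label: str) -> str:
    target = find(root, "button", label)
    if target is None:
        return "not found"         # missing or unlabeled controls are invisible to this lookup
    if target.high_risk:
        return "pause for confirmation"
    return "click"


page = Node("main", "Account", children=[
    Node("button", "Save"),
    Node("button", "Delete account", high_risk=True),
    Node("button", ""),            # an icon-only button with no accessible name
])
```

The unlabeled icon button in `page` can never be targeted by name, which is the practical cost of weak semantics.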

If this sounds like governance, that is because it is. The interface is where platforms negotiate capability, safety, and power with the agents that want to act.

The end of pure API gatekeeping

APIs will not vanish. They will coexist with agents that are comfortable working on screens. But API gatekeeping loses exclusivity. If a platform denies a partner an endpoint, the partner can dispatch an agent to do the job through the interface. The decision shifts from whether there is an endpoint to whether the interface allows it under published constraints.

This invites a new contract that does not require a business development team. Call it a UI treaty.

- The platform publishes an action policy in human- and machine-readable form. It states which flows are allowed for agents, which require extra confirmation, and which are prohibited.

- The agent declares its identity and capabilities and commits to log every step.

- The platform specifies rate limits in terms of page loads and critical actions, and it reserves the right to revoke privileges if audits fail or abuse is detected.
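A machine-readable action policy of the kind described above might look like the following sketch. The field names (`flows`, `limits`, `max_page_loads_per_min`, `max_critical_actions_per_hour`) and the flow names are hypothetical; no published standard for UI treaties is implied.

```python
import json

# Hypothetical published policy; a platform might serve something like this as JSON.
POLICY_JSON = """
{
  "flows": {
    "search_catalog": "allowed",
    "update_shipping_address": "confirm",
    "change_account_owner": "prohibited"
  },
  "limits": {"max_page_loads_per_min": 30, "max_critical_actions_per_hour": 5}
}
"""


def decide(policy: dict, flow: str, confirmed: bool = False) -> str:
    """Return 'proceed', 'needs_confirmation', or 'deny' for a requested flow."""
    rule = policy["flows"].get(flow, "prohibited")   # unknown flows default to deny
    if rule == "allowed":
        return "proceed"
    if rule == "confirm":
        return "proceed" if confirmed else "needs_confirmation"
    return "deny"


def within_limits(policy: dict, page_loads_last_min: int, critical_last_hour: int) -> bool:
    """True if one more page load and one more critical action stay within the published limits."""
    limits = policy["limits"]
    return (page_loads_last_min < limits["max_page_loads_per_min"]
            and critical_last_hour < limits["max_critical_actions_per_hour"])


policy = json.loads(POLICY_JSON)
```

Defaulting unknown flows to "deny" is a deliberate choice in this sketch: a treaty that fails closed is easier to audit than one that silently permits whatever it forgot to list.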

A UI treaty is lighter than an API agreement yet more structured than scraping. It accepts that the surface of interaction is the interface itself, and it codifies how agents must behave there. This aligns with the emerging agent identity layer, in which identity and provenance travel with every action an agent performs.

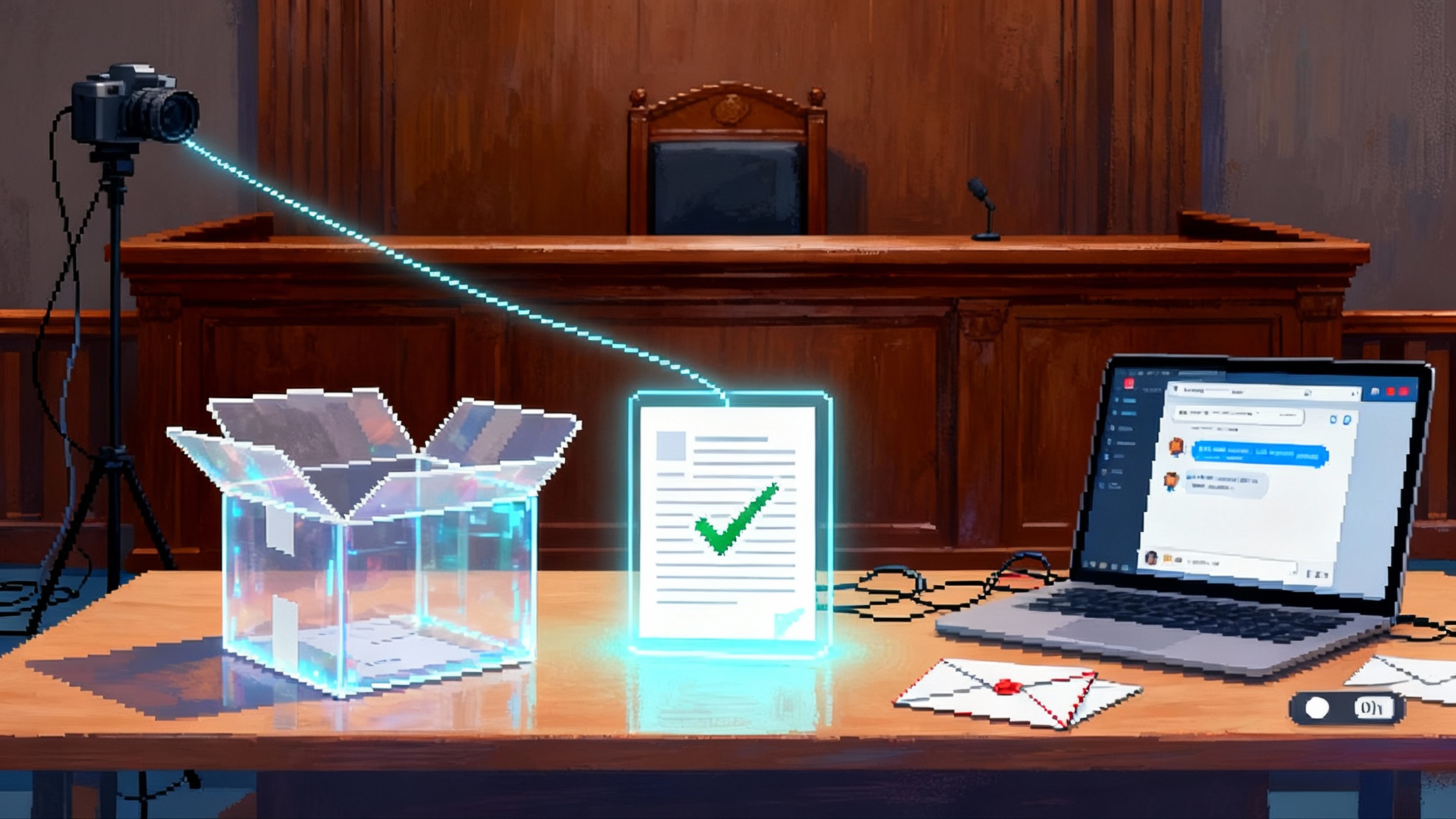

Clickstream audits as a first-class control

If an agent can control the screen, then we need an audit trail that people can trust. Clickstream audits make every step legible. Imagine a signed transcript of an interaction: at 10:14:03 the agent clicked Transfer, typed 500 dollars, selected Savings, and confirmed with a one-time code. That record can be hashed, timestamped, and attached to a case or a customer profile.
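One way to make such a transcript tamper-evident is to chain each entry to the previous one by hash and sign it with a key. The sketch below uses HMAC-SHA256 from Python's standard library as a stand-in for a real signature scheme; the entry fields and the shared key are assumptions. A production system would more likely use asymmetric signatures and an external timestamping service.

```python
import hashlib
import hmac
import json

KEY = b"demo-key-not-for-production"   # hypothetical shared secret


def _body(prev: str, step: dict) -> bytes:
    # Canonical serialization so the same step always hashes the same way.
    return json.dumps({"prev": prev, "step": step}, sort_keys=True).encode()


def append(log: list, step: dict) -> None:
    """Append a step, binding it to the previous entry's hash and signing it."""
    prev = log[-1]["hash"] if log else "0" * 64
    body = _body(prev, step)
    log.append({
        "prev": prev,
        "step": step,
        "hash": hashlib.sha256(body).hexdigest(),
        "sig": hmac.new(KEY, body, hashlib.sha256).hexdigest(),
    })


def verify(log: list) -> bool:
    """Recompute every hash and signature. An edited or reordered entry, or one removed
    from the middle, fails; detecting truncation of the tail needs the final hash stored elsewhere."""
    prev = "0" * 64
    for entry in log:
        if entry["prev"] != prev:
            return False
        body = _body(prev, entry["step"])
        if hashlib.sha256(body).hexdigest() != entry["hash"]:
            return False
        expected = hmac.new(KEY, body, hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected, entry["sig"]):
            return False
        prev = entry["hash"]
    return True


log = []
append(log, {"t": "10:14:03", "action": "click", "target": "Transfer"})
append(log, {"t": "10:14:05", "action": "type", "target": "Amount", "value": "500"})
append(log, {"t": "10:14:07", "action": "select", "target": "Savings"})
append(log, {"t": "10:14:12", "action": "confirm", "method": "one-time code"})
```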

For regulated industries, this is more than convenience. It is compliance. Financial teams already review trades with detailed logs. Healthcare teams track exactly who accessed a chart. Agents that act through interfaces will need similar logs, with clear schemas for action types, selectors, screenshots, redaction policies, and human approvals. Tool vendors will compete on the reliability, security, and searchability of these logs.

Clickstream audits also change incentives. If a platform wants to encourage responsible automation, it can grant higher limits and faster performance to agents that submit verifiable logs and pass randomized spot checks. In a world of computer-using models, the threat of silent abuse drops because the path of interaction is visible by design. This dovetails with a broader shift toward consent and provenance by default.
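The incentive described above, where higher limits go to agents that submit verifiable logs and pass randomized spot checks, could be expressed as a simple tiering rule. The tier names, sample size, and thresholds below are illustrative assumptions, not figures from any platform.

```python
import random


def spot_check(session_ids: list, passes_review, sample_size: int, seed: int) -> float:
    """Randomly sample sessions and return the fraction that pass review."""
    rng = random.Random(seed)                 # seeded so the audit sample can be reproduced
    sample = rng.sample(session_ids, min(sample_size, len(session_ids)))
    if not sample:
        return 0.0
    return sum(1 for s in sample if passes_review(s)) / len(sample)


def assign_tier(submits_signed_logs: bool, pass_rate: float) -> dict:
    """Map audit behavior to hypothetical rate-limit tiers."""
    if not submits_signed_logs:
        return {"tier": "basic", "critical_actions_per_hour": 2}
    if pass_rate >= 0.98:
        return {"tier": "trusted", "critical_actions_per_hour": 50}
    if pass_rate >= 0.90:
        return {"tier": "standard", "critical_actions_per_hour": 10}
    return {"tier": "suspended", "critical_actions_per_hour": 0}
```

Agents that do not submit signed logs are never suspended here, only capped, which keeps the unaudited path open but narrow.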

Accessibility by default becomes a moat

The fastest way to make a UI legible to an agent is to make it legible to everyone. Clear labels, consistent roles, proper focus management, and deterministic navigation are not just good for people who use assistive technology. They are also the markup layer agents use to reason.

Companies that invest in accessibility will enjoy compound advantages. Their flows will be easier for agents to traverse. Their error states will be easier to recover from. Their metrics will be easier to audit. Over time, accessible semantics become a distribution advantage. If an online store’s interface is agent-friendly, enterprise customers may prefer it because their own agents can comparison shop, reconcile invoices, and file disputes without bespoke integrations.

We should expect professional-grade accessibility to become table stakes for business software, with standard test suites that evaluate agent success rates across the top tasks in a given vertical. Documentation will include an agent compatibility badge alongside browser support.

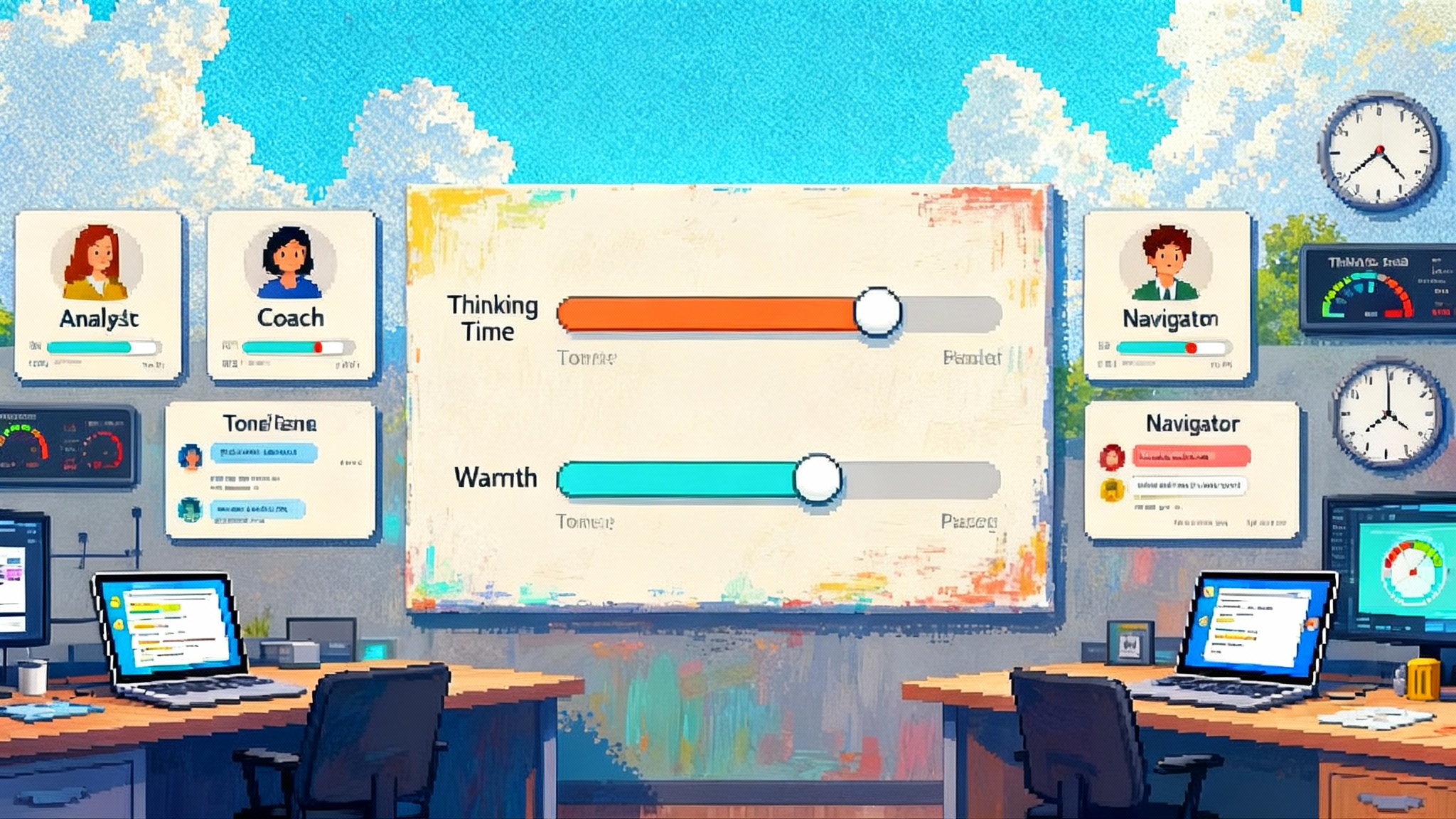

The operating system becomes a policy engine

Operating systems have had automation hooks for years. What changes now is the default posture and the policy sophistication required. When many users rely on agents to run their software, operating systems must arbitrate who can capture screens, who can click on behalf of a user, and how confirmations are staged. Expect three shifts:

- Scoped automation permissions. Instead of granting blanket screen control, permissions attach to named applications, windows, or even view hierarchies. A user can allow an assistant to operate the browser but not system settings, or to operate a specific domain and nothing else.

- Human confirmation primitives. An OS-native confirmation dialog that is agent-resistant appears for high-risk actions like wire transfers or policy changes. It may require a biometric or a second device, and it will be designed explicitly to withstand attempts by an agent to click it away.

- Execution sandboxes. Agents run in constrained environments where they can observe and act only through brokered channels. Think of a driver that translates high-level intents like "select the Billing tab" into safe, verifiable clicks.
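Scoped permissions and a brokered execution channel can be combined in one small sketch: the broker accepts a high-level intent, checks it against grants scoped to an application and domain, and only then resolves it to a concrete click. The `Grant` structure, the intent strings, and the element map are hypothetical; real operating systems expose different permission models.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Grant:
    app: str                       # e.g. "browser"
    domain: Optional[str] = None   # None means any domain within the app


class Broker:
    """Hypothetical broker: agents never click directly, they submit intents."""

    def __init__(self, grants: list, element_map: dict):
        self.grants = grants
        # Maps (app, domain, intent) to a concrete, verifiable target selector.
        self.element_map = element_map

    def permitted(self, app: str, domain: str) -> bool:
        return any(g.app == app and (g.domain is None or g.domain == domain)
                   for g in self.grants)

    def execute(self, app: str, domain: str, intent: str) -> str:
        if not self.permitted(app, domain):
            raise PermissionError(f"no grant for {app} on {domain}")
        selector = self.element_map.get((app, domain, intent))
        if selector is None:
            raise LookupError(f"intent not resolvable: {intent!r}")
        return f"click {selector}"   # a real broker would dispatch the input event here


broker = Broker(
    grants=[Grant("browser", "billing.example.com")],
    element_map={("browser", "billing.example.com", "select the Billing tab"): "#tab-billing"},
)
```

Here the user has allowed the assistant to operate one domain in the browser and nothing else, so any request against system settings fails before an input event is ever produced.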

When operating systems bake these ideas into their security model, the competition between platforms becomes a contest of interaction constraints. The platform with the clearest, safest, and most developer-friendly agent policies will attract the next wave of software.

Safety shifts left to the interface

One practical insight from Google’s rollout is that safety checks can happen per step, not only per session. The model proposes a click or a keystroke, an external service evaluates risk, and only then does the action execute. Google also outlined Gemini 2.5 security updates at I/O 2025, including defenses against indirect prompt injection during agent operation.

This stepwise supervision model will spread. Security teams will define risk classes for actions that agents can take. Editing a calendar event might pass silently. Initiating a payment might require an out-of-band confirmation. Changing account ownership might be blocked unless a human supervisor co-signs. The interface becomes both tripwire and safety valve.
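The stepwise supervision model can be sketched as a gate that classifies each proposed action before it executes. The three risk classes mirror the examples above (calendar edit, payment, ownership change); the action names, the mapping, and the confirmation callbacks are assumptions for illustration.

```python
from enum import Enum
from typing import Callable


class Risk(Enum):
    LOW = "low"            # e.g. editing a calendar event: passes silently
    HIGH = "high"          # e.g. initiating a payment: needs out-of-band confirmation
    CRITICAL = "critical"  # e.g. changing account ownership: blocked without a co-signer


# Hypothetical classification table; unknown actions are treated as CRITICAL.
RISK_CLASSES = {
    "edit_calendar_event": Risk.LOW,
    "initiate_payment": Risk.HIGH,
    "change_account_owner": Risk.CRITICAL,
}


def gate(action: str,
         confirm_out_of_band: Callable[[str], bool],
         supervisor_cosigns: Callable[[str], bool]) -> bool:
    """Return True if the proposed action may execute now."""
    risk = RISK_CLASSES.get(action, Risk.CRITICAL)
    if risk is Risk.LOW:
        return True
    if risk is Risk.HIGH:
        return confirm_out_of_band(action)
    return supervisor_cosigns(action)
```

The callbacks are where an agent-resistant confirmation would live, for example a prompt on a second device that the agent cannot see or click.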

UI treaties in practice

What does a UI treaty look like between an agent builder and a platform in 2026?

- Identity and provenance. The agent identifies itself with a signed token. Each step in the clickstream ties to that identity and to the end user or service account.

- Action classes and guardrails. The platform publishes a list of action classes for high-risk flows. An agent must either avoid these classes or route them through human confirmation.

- Rate and complexity limits. Instead of raw requests per minute, the treaty defines limits for screen transitions, critical actions, and cumulative risk scores that factor in the sensitivity of the page.

- Redress and remediation. If audits show misuse, the platform can downgrade the agent’s privileges, suspend automation for a domain, or require re-verification with new keys and attestations.

- Transparency hooks. The platform provides a read-only log feed so customers can reconcile what their agent attempted with what actually occurred.
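The rate and complexity limits above, which count screen transitions, critical actions, and a cumulative risk score weighted by page sensitivity, could be enforced by a small per-session budget. The caps, risk values, and sensitivity weights are hypothetical treaty terms chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class TreatyLimits:
    max_transitions: int = 100
    max_critical_actions: int = 5
    max_risk_score: float = 10.0


@dataclass
class Budget:
    limits: TreatyLimits
    transitions: int = 0
    critical_actions: int = 0
    risk_score: float = 0.0

    def charge(self, is_transition: bool, is_critical: bool,
               action_risk: float, page_sensitivity: float) -> bool:
        """Charge one action against the session budget.
        Returns False, leaving the budget unchanged, if any limit would be exceeded."""
        new_transitions = self.transitions + int(is_transition)
        new_critical = self.critical_actions + int(is_critical)
        # Risk accumulates as the action's risk scaled by how sensitive the page is.
        new_risk = self.risk_score + action_risk * page_sensitivity
        if (new_transitions > self.limits.max_transitions
                or new_critical > self.limits.max_critical_actions
                or new_risk > self.limits.max_risk_score):
            return False
        self.transitions, self.critical_actions, self.risk_score = (
            new_transitions, new_critical, new_risk)
        return True
```

Because rejected actions are not charged, an agent that hits the ceiling can still finish low-risk steps on low-sensitivity pages instead of being cut off entirely.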

This is not science fiction. It is the next logical step from robotic process automation, only the scope is the public web and the tool is a general-purpose model rather than a brittle script. The commercial edge goes to those who make their treaties legible and testable.

New moats for a new era

Model capabilities will continue to leapfrog one another. Durable advantage will come from what surrounds the model. Three moats will matter most:

- Semantic stability. Interfaces that maintain stable roles, labels, and layout semantics will be more controllable by agents. Breaking changes should come with deprecation windows and diffable maps of what moved.

- Auditable interaction. First-class clickstream logs that are tamper-evident will become part of service-level agreements. Vendors will sell storage, analytics, and anomaly detection for agent activity.

- Accessibility by default. Teams that design with accessibility from the beginning will win on reliability and distribution. The same semantics that support people also support agents at scale.
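The "diffable maps of what moved" mentioned under semantic stability could start as a comparison of two accessibility snapshots keyed by a stable identifier. The snapshot format (element id mapped to role, label, and location path) is an assumption; a real pipeline would extract it from the rendered accessibility tree.

```python
def semantic_diff(before: dict, after: dict) -> dict:
    """Compare two snapshots of {element_id: {"role", "label", "path"}} and report changes."""
    report = {
        "removed": sorted(before.keys() - after.keys()),
        "added": sorted(after.keys() - before.keys()),
        "relabeled": [],
        "role_changed": [],
        "moved": [],
    }
    for eid in sorted(before.keys() & after.keys()):
        old, new = before[eid], after[eid]
        if old["label"] != new["label"]:
            report["relabeled"].append((eid, old["label"], new["label"]))
        if old["role"] != new["role"]:
            report["role_changed"].append((eid, old["role"], new["role"]))
        if old["path"] != new["path"]:
            report["moved"].append((eid, old["path"], new["path"]))
    return report
```

A report like this could feed the weekly breaking-change summary and open a deprecation window whenever `removed`, `relabeled`, or `role_changed` is non-empty.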

Each moat converts what used to be a compliance checkbox into a growth engine. If your roadmap includes customer facing automation, build these moats early and treat them as product features, not paperwork.

Competitive dynamics: who gains and who adapts

- Platforms with strong browsers and permission systems gain leverage. They can set the norms for confirmation dialogs, action classes, and agent identity. Browser vendors will compete on agent ergonomics the way they once competed on JavaScript speed.

- Enterprise systems with clear semantics become the backbone for automation. Core platforms for CRM, finance, and procurement will export agent-optimized flows and market their agent readiness to win deals.

- Accessibility toolchains and test harnesses become hot properties. If your toolkit can evaluate an agent’s success through complex flows and produce human-readable audits, you will be pulled into procurement cycles that used to go to observability and security vendors.

Incumbents that rely on obscure interfaces, hidden state, or anti automation measures will face pressure. They can delay the shift only so long before customers ask why their agents keep failing at the same tasks employees do daily. The market will reward clarity and punish opacity.

What to do next

For product leaders

- Publish a UI treaty. Start with your top ten tasks. For each, declare whether agents are allowed, what confirmations are required, and which action classes are high-risk. Provide examples and test cases.

- Stabilize semantics. Audit your accessibility tree. Assign owners to roles and labels. Ship a weekly report on breaking changes, with clear rollback plans.

- Instrument the clickstream. Build or buy a logging pipeline that captures every agent action with redaction. Make it queryable by customer, agent identity, task, and risk class. Connect it to your incident response flow.

For designers and researchers

- Design for an audience of people and agents. Label elements unambiguously. Avoid state that is invisible to the accessibility tree. Treat error messages as structured data, not just text.

- Create confirmation patterns that are agent-resistant but human-friendly. Use human-centric signals, secondary devices, and time delays for truly high-risk actions.

- Prototype with agents in the loop. Add agent walkthroughs to usability studies, alongside human sessions. Capture success rates and blockers in both modes.

For security and compliance teams

- Move from request-level to action-level controls. Build policies that evaluate each proposed click or keystroke in context.

- Define a schema for audit logs. Include action type, selector, before and after screenshots, outcome, risk score, and confirmation status. Make the schema public to customers.

- Write an incident playbook for agent actors. Revoke tokens, reduce privileges, and rerun sessions from logs to reproduce outcomes. Tie remediation to treaty terms.
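A public audit-log schema covering the fields listed above might be sketched as a typed record plus a redaction step applied before storage. The field names, the redaction rule, and the screenshot references (stored as content hashes rather than images) are assumptions, not an established standard.

```python
import hashlib
import re
from dataclasses import dataclass, asdict
from typing import Optional

CARD_NUMBER = re.compile(r"\b\d{13,19}\b")   # crude pattern, for illustration only


@dataclass
class AuditRecord:
    agent_id: str
    action_type: str             # e.g. "click", "type"
    selector: str
    typed_value: Optional[str]
    screenshot_before: str       # content hash of the stored "before" screenshot
    screenshot_after: str        # content hash of the stored "after" screenshot
    outcome: str                 # e.g. "success", "error"
    risk_score: float
    confirmation: str            # e.g. "none", "out_of_band", "cosigned"


def screenshot_ref(png_bytes: bytes) -> str:
    """Reference a screenshot by content hash so the log itself stays small."""
    return "sha256:" + hashlib.sha256(png_bytes).hexdigest()


def redact(record: AuditRecord) -> dict:
    """Return a storable copy with sensitive typed values masked; the input is not modified."""
    data = asdict(record)
    if data["typed_value"] is not None:
        data["typed_value"] = CARD_NUMBER.sub("[REDACTED]", data["typed_value"])
    return data
```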

For marketers and data leaders

- Expect agents in the funnel. Buying committees will deploy agents to collect quotes, compare terms, and test your onboarding flows. Treat agents as first-class visitors.

- Measure agent experience. Build dashboards for agent completion rates, time to success, and confirmation friction. Optimize them as you do human conversion.

- Plan for conversational channels. As AI chats replace tracking pixels, the best signal will come from intent-rich dialogues tied to verifiable actions.
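The agent-experience dashboard described above reduces to a few aggregates over session records. The record shape (`completed`, `seconds`, `confirmations`) is a hypothetical example; the metrics are completion rate, median time to success among completed sessions, and average confirmation prompts per session as a proxy for friction.

```python
from statistics import median


def agent_experience(sessions: list) -> dict:
    """Summarize agent sessions: completion rate, time to success, confirmation friction."""
    if not sessions:
        return {"completion_rate": 0.0, "median_seconds_to_success": None,
                "confirmations_per_session": 0.0}
    done = [s for s in sessions if s["completed"]]
    return {
        "completion_rate": len(done) / len(sessions),
        "median_seconds_to_success": median(s["seconds"] for s in done) if done else None,
        "confirmations_per_session": sum(s["confirmations"] for s in sessions) / len(sessions),
    }
```

Using the median rather than the mean keeps one stalled session from dominating the time-to-success figure.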

For policymakers and standards bodies

- Encourage transparency with standardized agent identity and action-level logging. Customers should be able to verify who acted and under which policy.

- Promote accessibility benchmarks that reflect agent performance. Evaluate whether labels and roles enable consistent agent behavior across top tasks.

- Support interoperable confirmation protocols. High-risk actions should be gated consistently across platforms, with clear human override paths.

A near term forecast

- UI treaties become common in 2026. Large platforms begin to publish agent policies the way they publish content policies today.

- Clickstream audits roll into enterprise procurement. Buyers ask vendors to supply agent activity logs as part of due diligence and to sign SLAs that include audit integrity.

- Accessibility earns budget as a growth lever. Teams that once fought for compliance funding are charged with unlocking agent adoption instead.

- Operating systems advertise agent-grade controls. Permissions get finer, confirmations get smarter, and sandboxes get stricter.

By the time we look back, the shift will feel obvious. Agents will not be special. They will be how work gets done across departments and devices.

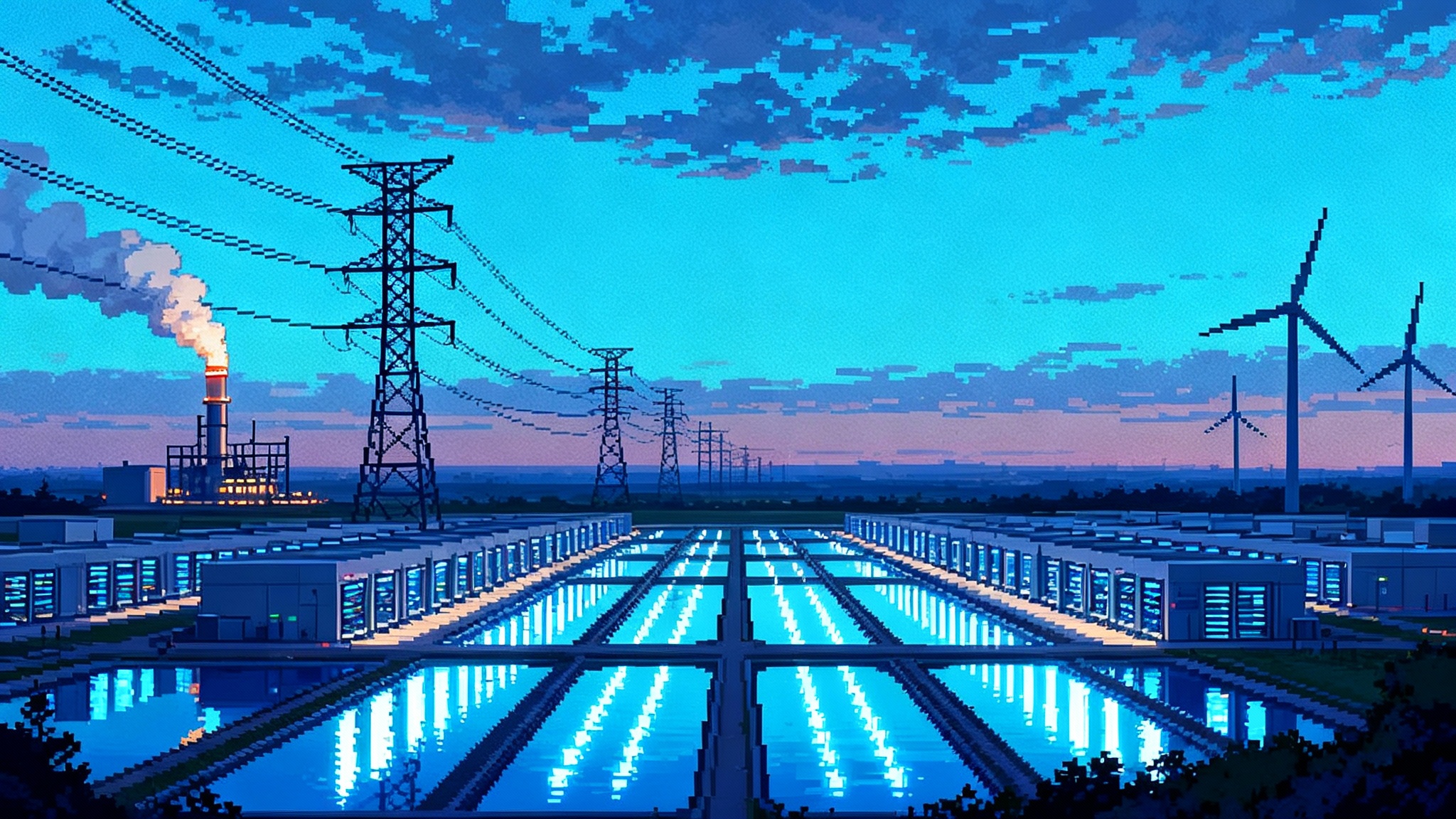

The bigger picture

Google’s Computer Use push is not the only route to agentic software, but it normalizes a simple idea: if the interface is good enough for people, it should be good enough for software. When that premise becomes standard, the interface stops being a front end. It becomes a constitution written in labels, roles, prompts, and logs.

That is why this release matters. It marks the moment when model weights stopped being the whole story. The new source of power lives where philosophy meets ergonomics, where safety is enacted one click at a time, and where market structure is shaped by how a dialog behaves.

We are about to build the next generation of software around that reality. The thoughtful teams will not wait for a standard to be forced upon them. They will publish their treaties, stabilize their semantics, and light up their audits. Then they will let the agents in. If they do it well, the interface will not just be a surface. It will be the institution that keeps the new software economy running on time.