2025: When AI Flips to Consent and Provenance by Default

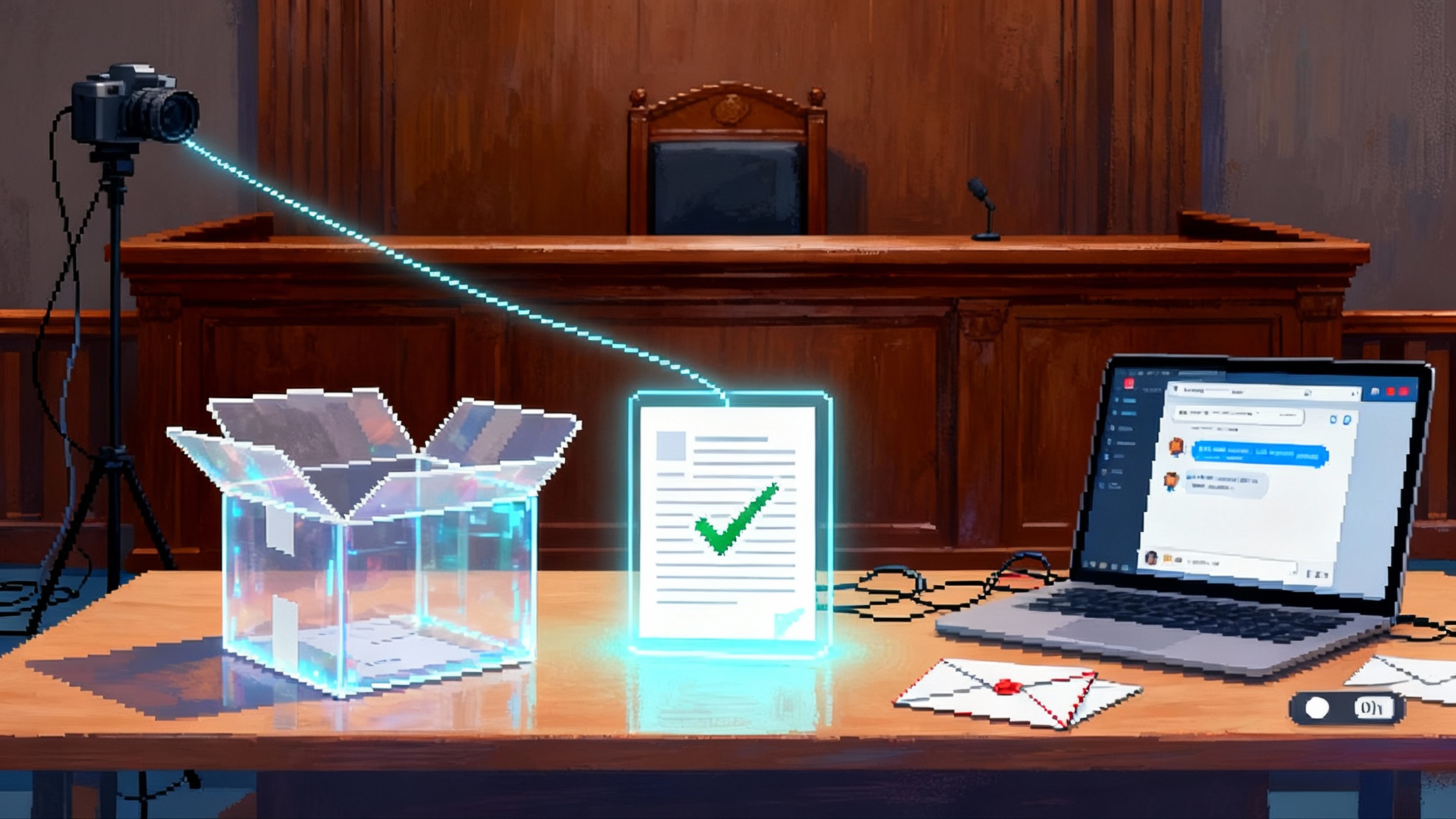

A courtroom fight over millions of chat logs made one lesson clear in 2025: AI must ask permission and prove its work. Teams that ship trusted memory, proof-carrying outputs, and auditable agents will win.

The courtroom spark that changed the tempo

On November 12, 2025, a fight in federal court over a request for roughly twenty million ChatGPT chat logs made the invisible visible. For years, logs were treated like harmless exhaust from people exploring real questions with artificial intelligence. That hearing forced everyone to picture what twenty million private conversations look like when treated as evidence, and to ask whether the industry’s instinct to collect first and reason later can survive a world of subpoenas, settlements, and scrutiny.

The debate was not about whether logs exist. They always do. It was about what those logs contain, who controls them, and how long they should live. The scale itself was the story. A request that large is not a fishing rod; it is a trawl net. It raised a blunt question for every builder of agents and assistants: if your product’s memory were dropped on the courtroom floor tomorrow, would you be proud of what is inside, and could you prove that each piece belongs there?

From train first to ask first, prove always

Over the last decade, many builders treated the open web as a commons for model training. Companies scraped text, images, audio, and code to fuel ever larger models. Complaints and lawsuits gathered but rarely changed the default posture. That default is collapsing in 2025 because incentives shifted on three fronts at once.

First, authors, newsrooms, and rights holders stepped up coordinated pressure for deals and settlements. The economics of training data moved from silent appropriation to active licensing. Second, regulators began to enforce rather than only signal. The Federal Trade Commission reframed deepfake misrepresentation as consumer harm and business liability. If you want one page that captures the tone shift, read the FTC’s explainer on its impersonation rule; the rule turned impersonation from a nuisance into a compliance risk that can shut down launches.

Third, provenance technology that once felt academic is landing in production. The Coalition for Content Provenance and Authenticity, known as C2PA, moved beyond still images into broadcast-grade video pipelines. Newsrooms, camera manufacturers, and cloud platforms now test signed capture, chain-of-custody metadata, and cryptographic manifests as ordinary steps in their workflows. For the curious, the C2PA spec overview explains the signed ingredient list that lets a piece of media be verified at every hop.

Put those forces together and you get a new default. The old playbook said ship fast, apologize later, and patch consent retroactively. The new playbook says your product must negotiate consent up front and carry its own receipts. The practical outcome is not slower software. It is faster trust.

Provenance grows up: broadcast-grade authenticity

When a video camera stamps each frame with a cryptographic signature, it is the media equivalent of a tamper-evident seal on a medicine bottle. You can open it, but everyone will know. The C2PA approach combines signed manifests with a timeline of edits so a journalist, a platform, or a court can see whether a clip came from a specific camera, whether the audio track was replaced, or whether an editor cropped out a detail. That is what broadcast grade means in 2025. It is not a sticker in the corner of the screen. It is a verifiable paper trail.
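The tamper-evident timeline can be sketched as a hash chain: each edit record stores a digest of the record before it, so changing any earlier step breaks every link after it. This is a minimal Python sketch under simplifying assumptions, not the C2PA manifest format; the names `EditRecord`, `append_edit`, and `verify_chain` are hypothetical. A real pipeline would also sign each record with a key tied to the camera or editor, because an unsigned chain can simply be recomputed by whoever rewrites it.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class EditRecord:
    action: str        # hypothetical step name, e.g. "capture" or "crop"
    actor: str         # hypothetical identity of the camera or editor
    content_hash: str  # SHA-256 of the media bytes after this step
    prev_digest: str   # digest of the previous record ("" for the first)

    def digest(self) -> str:
        # Canonical JSON so identical records always hash to the same value.
        return sha256_hex(json.dumps(asdict(self), sort_keys=True).encode())


def append_edit(chain: list, action: str, actor: str, media: bytes) -> None:
    prev = chain[-1].digest() if chain else ""
    chain.append(EditRecord(action, actor, sha256_hex(media), prev))


def verify_chain(chain: list, final_media: bytes) -> bool:
    """True only if every link is intact and the last record matches the media."""
    prev = ""
    for record in chain:
        if record.prev_digest != prev:
            return False  # an earlier record was altered, removed, or reordered
        prev = record.digest()
    return bool(chain) and chain[-1].content_hash == sha256_hex(final_media)


chain: list = []
append_edit(chain, "capture", "camera-001", b"raw frames")
append_edit(chain, "crop", "editor-a", b"cropped frames")
print(verify_chain(chain, b"cropped frames"))  # True
print(verify_chain(chain, b"swapped frames"))  # False
```

Rewriting the first record (say, to claim a different camera) changes its digest, so the second record's `prev_digest` no longer matches and verification fails.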

The same logic is coming to language models and agents. A response will not only say something; it will show how it knows. If a model claims it used a licensed encyclopedia paragraph and a company style guide, the response will carry machine-verifiable links to those artifacts, plus signed timestamps showing that they were available at generation time. Think of it as a receipt that ships with every answer. This receipt is a small file with proofs, not a banner of logos. It travels with the output and can be checked offline.

The design stack of 2025: consent and provenance by default

Three product patterns are emerging as table stakes. Each one aligns incentives, not slogans.

1) Ephemeral recall as the default

Memory once meant saving everything forever. That is efficient for machines and dangerous for people. Ephemeral recall flips the mental model. The system learns in session, proposes to save only what the user expressly approves, and forgets on a schedule.

Here is how it looks in practice:

- Memory is scoped to a project, not to a person. A recruiting agent remembers candidates only inside the role it is filling, then purges that memory when the role closes.

- The first time the system wants to remember something, it asks. The prompt is not a wall of legalese. It is a one-sentence request that states the benefit, the retention period, and the cost of refusal. For example: “Save your meeting preferences for two weeks to shorten scheduling by five minutes per meeting.”

- Retention is budgeted. Teams set a maximum number of tokens or days per memory class. When the budget is full, older items roll off automatically. The default is to forget.

- Users can open a memory ledger that shows what is stored, who accessed it, and when it will be deleted. The ledger supports one-tap revoke. Deletion is not a request into a black hole. It is a visible action with an immediate effect.

This design reduces discovery risk, lowers storage cost, and turns consent from a blanket checkbox into a living dial.
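The four behaviors above can be sketched as a small in-memory store. This is a minimal Python sketch with hypothetical names (`MemoryStore`, `remember`, `recall`, `revoke`) and hypothetical budget values: memory is keyed to a project scope, nothing is saved without explicit approval, each memory class has a budget in items and days where the oldest items roll off first, and a ledger records every save, read, expiry, and deletion. A production system would also persist the ledger and enforce deletion across replicas and backups, which this sketch does not attempt.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class MemoryItem:
    scope: str         # the project this memory belongs to, not the person
    memory_class: str  # e.g. "preferences"
    value: str
    expires_at: datetime


@dataclass
class MemoryStore:
    # Hypothetical budgets: memory class -> (max items kept, max days kept).
    budgets: dict
    items: list = field(default_factory=list)
    ledger: list = field(default_factory=list)  # (time, event, actor, scope, value)

    def _log(self, now, event, actor, item):
        self.ledger.append((now, event, actor, item.scope, item.value))

    def _drop(self, doomed, now, event, actor):
        doomed_ids = {id(i) for i in doomed}
        self.items = [i for i in self.items if id(i) not in doomed_ids]
        for item in doomed:
            self._log(now, event, actor, item)

    def remember(self, scope, memory_class, value, user_approved, now) -> bool:
        """Store only with explicit approval; by default, nothing is kept."""
        if not user_approved or memory_class not in self.budgets:
            return False
        max_items, max_days = self.budgets[memory_class]
        item = MemoryItem(scope, memory_class, value, now + timedelta(days=max_days))
        self.items.append(item)
        self._log(now, "saved", "user", item)
        # When the class budget is full, the oldest items roll off first.
        same_class = [i for i in self.items if i.memory_class == memory_class]
        overflow = len(same_class) - max_items
        if overflow > 0:
            self._drop(same_class[:overflow], now, "rolled_off", "system")
        return True

    def recall(self, scope, accessor, now) -> list:
        """Expire stale items, then return this scope's memories, logging each read."""
        expired = [i for i in self.items if i.expires_at <= now]
        self._drop(expired, now, "expired", "system")
        found = [i for i in self.items if i.scope == scope]
        for item in found:
            self._log(now, "read", accessor, item)
        return [i.value for i in found]

    def revoke(self, scope, now) -> None:
        """One-tap revoke, also used when a project closes: delete the whole scope."""
        self._drop([i for i in self.items if i.scope == scope], now, "deleted", "user")


now = datetime(2025, 1, 1)
store = MemoryStore(budgets={"preferences": (2, 14)})
store.remember("trip-planning", "preferences", "aisle seat", True, now)
store.remember("trip-planning", "preferences", "red-eye flights", False, now)  # declined
print(store.recall("trip-planning", "travel-agent", now))                       # ['aisle seat']
print(store.recall("trip-planning", "travel-agent", now + timedelta(days=15)))  # []
```

Keying memory by scope rather than by user is what makes "purge when the role closes" a single `revoke` call.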

2) Proof-carrying outputs

The future of AI outputs looks like a signed parcel. The content is the front of the parcel. On the back is a small sealed pouch of proofs. A proof answers questions that matter to buyers and regulators without blowing up user experience.

What goes inside the pouch:

- Provenance: Which models and datasets were involved, with signed identities and version numbers.

- Policy compliance: Which policies were evaluated at generation time, which ones matched, and what mitigations ran. If a healthcare agent avoids summarizing protected health information, the proof notes the policy and the filter that triggered.

- License status: Whether the output is derived from licensed sources, fair use excerpts, or public domain. If a paragraph is styled after a paid reference, the proof carries the license hash and expiration date.

- Safety filters: Whether content filters or red-team checks fired, with anonymized reasoning traces that can be independently verified.

The key is portability. A proof object can be stapled to a chatbot response, an image, a video, a spreadsheet formula, or an autonomous action. It is small, cacheable, and verifiable by third-party auditors without phoning home. Buyers can put a verifier in their procurement checklist the way they already scan software components for license conflicts. This is how AI becomes intelligence as utility instead of a bundle of marketing claims.
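One way to make the pouch concrete is a small JSON body that binds the claims to a hash of the output and is then authenticated as a whole. This sketch rests on several assumptions: the claim fields mirror the four bullets above but are not a published schema, all names and values are placeholders, and it uses HMAC-SHA256 from Python's standard library only to stay self-contained. Because HMAC needs a shared secret, it does not give the offline third-party verification described above; that would require a public-key signature scheme such as Ed25519, with the verifier holding only the public key.

```python
import hashlib
import hmac
import json


def canonical(obj) -> bytes:
    # Stable serialization so signer and verifier authenticate identical bytes.
    return json.dumps(obj, sort_keys=True, separators=(",", ":")).encode()


def make_pouch(output: str, claims: dict, key: bytes) -> dict:
    """Bind the claims to this exact output, then MAC the whole body."""
    body = {"output_sha256": hashlib.sha256(output.encode()).hexdigest(), **claims}
    return {"body": body, "tag": hmac.new(key, canonical(body), hashlib.sha256).hexdigest()}


def verify_pouch(output: str, pouch: dict, key: bytes) -> bool:
    expected = hmac.new(key, canonical(pouch["body"]), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, pouch["tag"]):
        return False  # the pouch was altered after it was issued
    # The pouch must describe this output, not one it was copied from.
    return pouch["body"]["output_sha256"] == hashlib.sha256(output.encode()).hexdigest()


# Hypothetical claims; model names, dataset IDs, and policy IDs are placeholders.
claims = {
    "provenance": {"model": "example-model", "version": "1.2.0",
                   "datasets": ["licensed-encyclopedia@2025-06"]},
    "policies": [{"id": "no-phi-summaries", "matched": False, "mitigation": None}],
    "license": {"status": "licensed",
                "license_sha256": hashlib.sha256(b"example license text").hexdigest(),
                "expires": "2026-06-30"},
    "safety": {"filters_fired": []},
}
key = b"demo-key-not-for-production"
answer = "The museum opens at 9 a.m. on weekdays."
pouch = make_pouch(answer, claims, key)
print(verify_pouch(answer, pouch, key))        # True
print(verify_pouch(answer + "!", pouch, key))  # False
```

Hashing the output into the body is the design choice that matters: without it, a valid pouch could be stapled onto a different answer.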

3) Machine-readable policy cards

The web had robots.txt to ask crawlers to slow down or stay away. Artificial intelligence needs a richer version to express what is allowed, what is paid, and what must be proven. A policy card is a signed document published by a creator, a publisher, or a data platform. It says, for example, that an outlet allows summarization within a daily rate limit, bans style mimicry, offers paid fine-tuning access at a posted price, and requires that any output citing its material include a specific attribution string.

A policy card is more than a signal. It is machine-actionable. Agents read it, negotiate, and conform in real time. If a card requires payment, the agent can attach an escrowed consent token that unlocks access. If the card disallows training but allows search, the agent shapes its requests accordingly. The card can also include standard fields for minors’ content, biometric data, or sensitive categories like medical records.
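Here is a minimal sketch of how an agent might turn a card into a decision before acting, assuming the card has already been fetched and its signature checked. No standard policy card schema exists today, so the field names (`allow`, `deny`, `paid`, `daily_limit`, `attribution`) and the `decide` function are hypothetical. Actions the card does not mention are refused, which keeps the agent on the side of explicit offers.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    outcome: str           # "allow", "deny", "requires_payment", or "rate_limited"
    attribution: str = ""  # attribution string the output must carry, if any


def decide(card: dict, action: str, used_today: int = 0,
           consent_token: Optional[str] = None) -> Decision:
    attribution = card.get("attribution", "")
    if action in card.get("deny", []):
        return Decision("deny")
    if action in card.get("paid", {}):
        # A real agent would verify the token's signature, scope, and expiry,
        # not merely check that one was supplied.
        return Decision("allow", attribution) if consent_token else Decision("requires_payment")
    rule = card.get("allow", {}).get(action)
    if rule is None:
        return Decision("deny")  # anything the card does not grant is refused
    limit = rule.get("daily_limit")
    if limit is not None and used_today >= limit:
        return Decision("rate_limited")
    return Decision("allow", attribution)


# A hypothetical card for the outlet described above.
card = {
    "publisher": "news.example",
    "allow": {"summarize": {"daily_limit": 100}, "search": {}},
    "deny": ["train", "style_mimicry"],
    "paid": {"fine_tune": {"price_usd": 5000}},
    "attribution": "Source: Example News",
}
print(decide(card, "summarize", used_today=3))  # Decision(outcome='allow', attribution='Source: Example News')
print(decide(card, "train").outcome)             # deny
print(decide(card, "fine_tune").outcome)         # requires_payment
```

Checking `deny` before anything else means a card that both bans and prices an action still refuses it.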

Policy cards move the conversation away from vague claims of fair use and toward explicit offers. They also connect directly to the agent identity layer, because identity, authorization, and audit need a shared way to represent who can do what, for how long, and with what proof attached.

A concrete example: an auditable travel agent

Picture a travel agent that can read your email, scan your calendar, and book flights with a corporate card. Under the 2024 mindset, you would connect all accounts and hope the vendor is careful. Under the 2025 mindset, three things change.

- Ephemeral recall: The agent proposes to remember your favorite departure times and seat preferences for seven days. It shows the expected time saved and lets you opt out entirely or shorten the window. The memory ledger lists these preferences with a countdown clock to deletion.

- Proof-carrying outputs: When the agent drafts the itinerary, it includes a proof pouch. It shows that it read three specific emails, that all three came from the work domain, that no personal accounts were accessed, and that payment was limited to the approved vendor list. Your finance team can verify the proof using a standard tool without contacting the vendor.

- Policy cards: The airline’s card allows dynamic pricing queries for corporate accounts within a daily rate limit and offers bonus features if the agent shares route flexibility windows. Your company’s card says no personal data may be stored beyond seven days and requires a consent token for accessing messages tagged confidential. The agent complies with both.
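The checks behind that proof pouch can be sketched as plain predicates over what the agent actually did. This Python sketch assumes hypothetical inputs: a log of message IDs and senders the agent read, the vendor it paid, the retention it requested, and an optional consent token. The seven-day ceiling and the confidential-tag rule come from the company card in the example; the domain, vendor, and function names are placeholders. Recording the message IDs alongside the pass/fail results is what lets a finance team confirm exactly which emails were read.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class EmailRead:
    message_id: str
    sender: str
    tags: frozenset = frozenset()


def audit_booking(emails, vendor: str, retention_days: int,
                  consent_token: Optional[str], *, work_domain: str,
                  approved_vendors: set, max_retention_days: int = 7) -> dict:
    touched_confidential = any("confidential" in e.tags for e in emails)
    checks = {
        "work_domain_only": all(e.sender.endswith("@" + work_domain) for e in emails),
        "approved_vendor": vendor in approved_vendors,
        "retention_within_limit": retention_days <= max_retention_days,
        # Confidential mail may be read only if a consent token was presented.
        "confidential_has_consent": consent_token is not None or not touched_confidential,
    }
    return {"messages_read": [e.message_id for e in emails],
            "checks": checks,
            "passed": all(checks.values())}


reads = [EmailRead("m1", "travel@corp.example"),
         EmailRead("m2", "boss@corp.example"),
         EmailRead("m3", "hr@corp.example", frozenset({"confidential"}))]
report = audit_booking(reads, "AirlineA", 7, "token-123",
                       work_domain="corp.example",
                       approved_vendors={"AirlineA", "HotelB"})
print(report["passed"])  # True
```

In a full system this report would become the body of the signed proof pouch rather than being trusted on its own.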

The result is not a slower booking. It is a faster yes from security and procurement, because the product proves what it will and will not do.

Why trust makes winners faster

Trust pays in cycle time. Teams that can prove provenance and consent hold fewer meetings and ship more updates because the risk review is not guesswork. Their products pass audits faster because proofs are standardized. Their sales cycles shrink because buyers can verify claims in a sandbox before signing.

Trust also pays in partnerships. Platforms are more willing to grant real-time access to data when they can enforce policy cards and receive proof-carrying logs in return. Media companies prefer agents that carry receipts. Cloud providers will privilege workloads that stamp provenance at capture and encrypt proofs at rest. Even insurers are beginning to price liability based on the presence of verifiable controls, not slide decks.

This is also how distribution changes. As search shifts toward direct answers, the products that can show how they know will be ranked higher, cached longer, and shared more. The winners of this shift to an answer economy will be those that deliver proof bundles with every response.

Consider two companies building sales agents. One scrapes freely, stores indefinitely, and offers a marketing page that says “responsible uses only.” The other ships with ephemeral recall, policy card negotiation, and proofs attached to every summary. The first team will spend months negotiating exceptions and writing custom promises for each buyer. The second team will point to a standard verifier and start the pilot next week.

A pragmatic playbook for builders

You do not need a new research lab to make this shift. You need a few concrete decisions and a willingness to show your work.

- Map your memory. Draw a simple diagram of where your agent stores any user data, how long it lives, and what it is for. Set retention budgets by data type, not by database. Delete by default.

- Build a memory ledger. Expose what is stored, who touched it, and when it is scheduled for deletion. Make revoke work in seconds. Treat deletion as an active feature, not a support ticket.

- Stamp provenance at capture. If you handle media, adopt signed capture on devices and in ingestion pipelines. If you handle text, sign the ingestion manifest with dataset identities and policies. Prefer standards like C2PA for media and keep keys in hardware-backed vaults.

- Ship proofs with outputs. Start simple. Include a signed model version, a dataset manifest, and a policy compliance summary. Publish a verifier as an open-source tool so buyers can check without calling you.

- Parse and respect policy cards. Teach your agent to fetch, cache, and honor machine-readable rules from publishers and partners. If a card denies training but allows on-the-fly reasoning, enforce that distinction in code and in logs.

- Make consent obvious. Use plain-language prompts that explain the win for the user, the retention period, and the controls. Offer a no-memory mode that still works well.

- Measure trust like a product metric. Track the time from dataset discovery to signed approval, the number of output proofs that customers actually verify, and the median time to complete a revoke. Publish these numbers.

- Red-team your discovery risk. Stage a mock subpoena for logs and see what falls out. If the answer is too much, lower your retention budgets and tighten scopes.
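A mock subpoena can start as a script that asks what a discovery request dated today would reach. This Python sketch assumes each stored record carries a data type and a creation time, that retention budgets are set in days per data type (the values and names here are hypothetical), and that deletion jobs actually enforce those budgets. Any data type without a budget is counted as kept forever, because in practice nothing deletes it.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Record:
    data_type: str       # e.g. "chat_log", "preferences"
    created_at: datetime


# Hypothetical retention budgets in days, set by data type rather than by database.
RETENTION_DAYS = {"chat_log": 30, "preferences": 14}


def still_retained(record: Record, budgets: dict, as_of: datetime) -> bool:
    days = budgets.get(record.data_type)
    if days is None:
        return True  # no budget means nothing ever deletes it: a finding
    return as_of - record.created_at < timedelta(days=days)


def mock_subpoena(records, budgets: dict, as_of: datetime) -> Counter:
    """Count, per data type, the records a request dated `as_of` would reach."""
    return Counter(r.data_type for r in records if still_retained(r, budgets, as_of))


as_of = datetime(2025, 6, 1)
records = [
    Record("chat_log", as_of - timedelta(days=5)),
    Record("chat_log", as_of - timedelta(days=90)),
    Record("preferences", as_of - timedelta(days=20)),
    Record("debug_trace", as_of - timedelta(days=400)),  # never budgeted
]
print(dict(mock_subpoena(records, RETENTION_DAYS, as_of)))
# {'chat_log': 1, 'debug_trace': 1}
```

If the report shows too much, tighten a budget or add one for the unbudgeted type and rerun; here, a 7-day budget for `debug_trace` removes that finding.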

What changes for platforms and regulators

Platforms can amplify this shift with a few clear moves:

- Standardize consent tokens. Define a portable token that proves a user granted a scope, with an expiration and a revocation endpoint. If a user revokes consent, the token becomes invalid everywhere at once.

- Build a consent clearinghouse for small creators. Let photographers, writers, and podcasters publish policy cards and receive payments for narrow uses without signing enterprise deals.

- Require proofs for privileged access. If a partner wants firehose or batch access, demand proof-carrying outputs in return and rate-limit based on provenance quality, not only traffic.
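A portable consent token can be sketched as signed claims (user, scope, expiry, token ID) plus a revocation check that every verifier performs. In this Python sketch, which uses hypothetical names, HMAC-SHA256 stands in for the signature and an in-memory set stands in for the revocation endpoint. A real cross-platform token would more likely use a format such as a JSON Web Token signed with a public-key algorithm, so verifiers never hold the signing secret, and revocation only takes effect everywhere at once if every verifier consults the shared revocation service before accepting a token.

```python
import base64
import hashlib
import hmac
import json
import uuid


class ConsentAuthority:
    """Issues and checks consent tokens; names and token layout are hypothetical."""

    def __init__(self, key: bytes):
        self._key = key
        self.revoked: set = set()  # stands in for a shared revocation endpoint

    def _sign(self, payload: str) -> str:
        return hmac.new(self._key, payload.encode(), hashlib.sha256).hexdigest()

    def issue(self, user: str, scope: str, ttl_seconds: float, now: float) -> str:
        claims = {"jti": uuid.uuid4().hex, "sub": user, "scope": scope,
                  "exp": now + ttl_seconds}
        payload = base64.urlsafe_b64encode(
            json.dumps(claims, sort_keys=True).encode()).decode()
        return payload + "." + self._sign(payload)

    def _claims(self, token: str) -> dict:
        payload, _, signature = token.partition(".")
        # Compare as bytes so malformed, non-ASCII input fails cleanly.
        if not hmac.compare_digest(self._sign(payload).encode(), signature.encode()):
            raise ValueError("invalid signature")
        return json.loads(base64.urlsafe_b64decode(payload))

    def revoke(self, token: str) -> None:
        self.revoked.add(self._claims(token)["jti"])

    def check(self, token: str, scope: str, now: float) -> bool:
        """Accept only a genuine, unexpired, unrevoked token for this exact scope."""
        try:
            claims = self._claims(token)
        except ValueError:
            return False
        return (claims["scope"] == scope and now < claims["exp"]
                and claims["jti"] not in self.revoked)


authority = ConsentAuthority(b"demo-key-not-for-production")
token = authority.issue("user-42", "read:calendar", ttl_seconds=3600, now=0)
print(authority.check(token, "read:calendar", now=10))  # True
authority.revoke(token)
print(authority.check(token, "read:calendar", now=10))  # False
```

Revoking by token ID rather than by user lets a person withdraw one scope without invalidating every other grant they have made.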

Regulators can enable speed without guessing at code:

- Offer a safe harbor for proofs. If a company ships proof-carrying outputs, honors policy cards, and deletes on schedule, it qualifies for reduced penalties for first-time incidents that are disclosed quickly.

- Fund open verification tools. Independent verifiers reduce vendor lock-in and let buyers choose without fear. Public money spent on verification can pay for itself through fewer enforcement actions.

- Encourage procurement standards. When agencies buy agents, require proofs, ephemeral recall, and policy card compliance. The market will follow.

Limits and open questions

No tool is a magic shield. Watermarks and provenance metadata can both be stripped, though signed provenance makes any alteration of the content it covers detectable. Proof systems can add latency, though smart caching and parallel verification can keep experiences fast. Policy cards can fragment if every publisher invents a new dialect, which is why the industry should rally around a small shared schema.

Retention budgets require care. Forgetting too quickly can make assistants feel amnesic. Forgetting too slowly invites risk. The right balance depends on context. A medical agent needs stricter defaults than a movie recommendation bot. Users also need clarity. A good rule is that any person should be able to answer two questions at any moment: what does this system remember about me, and how do I make it forget?

There is also a cultural change. Teams must treat consent and provenance as product features, not as compliance chores. That means prototypes should include memory ledgers, proof pouches, and policy card parsers from day one. When those elements are in the scaffolding, they shape better decisions everywhere else.

The fastest way to move is to be trusted

The court fight over twenty million chat logs was a mirror. It reflected how much private life has moved into conversational systems, and how fragile the old defaults look when exposed to daylight. It also marked the moment when a new approach became obvious. Consent first. Proofs always. Memory that forgets unless invited to remember.

The companies that embrace this shift will not move slowly. They will move confidently. They will close deals faster, pass audits faster, and integrate with partners faster because the burden of proof is not rhetorical; it is cryptographic. In 2025, artificial intelligence stops being a mystery box and starts being a signed parcel. The winners will be the teams that ship privacy-preserving memory and auditable agents, and they will move faster precisely because they can be trusted.