Agentic BI Arrives: Inside Aidnn and the End of Dashboard Sprawl

Aidnn, a new agentic analytics assistant from Isotopes AI, plans tasks, fetches data, and explains results. Here is how agentic BI can end dashboard sprawl, along with the evaluation criteria, integration patterns, and safeguards that make adoption work.

Why Agentic BI Matters Right Now

Every enterprise knows the feeling. You open your analytics portal and face a maze of dashboards that seemed useful when they were created but now compete for attention, contradict one another, and rarely answer the question you actually have. The cost is real. Leaders wait for analysts to build yet another view. Analysts copy and paste the same logic into different tools. Teams lose trust when the numbers do not align.

Agentic BI aims to break this pattern. Instead of making end users hunt through a library of prebuilt views, an agentic analytics assistant plans a task, fetches the data it needs, analyzes that data, and explains the result with citations to both logic and lineage. Aidnn, from Isotopes AI, is an example of this shift. It does not simply visualize. It reasons, executes, and then teaches you what happened.

In this piece, we use Aidnn to frame what agentic BI is, how to judge solutions, where to integrate them, what to watch for, and how to run a pragmatic pilot that delivers measurable impact without losing governance.

From Dashboards To Decisions

Dashboards were built for a world where questions changed slowly. You standardized a handful of KPIs, published them, and taught people where to click. That world is gone. Business questions now change weekly, sometimes daily, driven by constant experimentation and shifting customer signals. When the question shifts faster than your dashboard backlog, dashboard sprawl is the inevitable result.

Agentic BI addresses this by moving up the stack. The agent listens to a business goal or question, picks the right data sources, composes a plan, executes queries or transformations, evaluates the quality of its own output, and explains the answer with plain language and references. The unit of value is no longer a static chart. It is a solved task with an auditable trail.

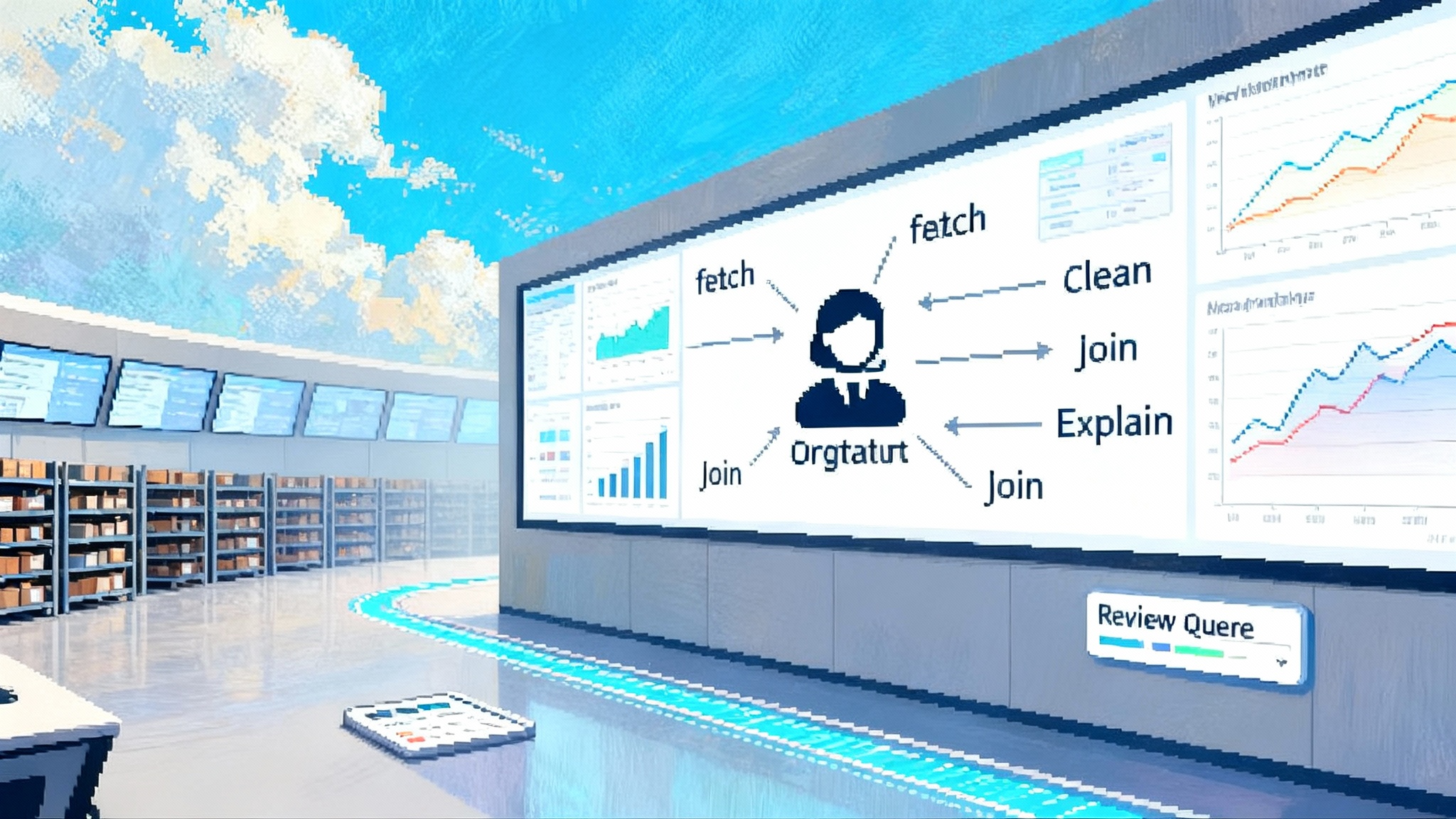

What Makes Aidnn Agentic

Products differ, but Aidnn illustrates three essential capabilities:

- Planning
  - Translates a natural language goal into a multi-step plan.
  - Locates relevant tables, metric definitions, and policies.
  - Chooses methods to join, aggregate, and validate data.

- Execution
  - Runs queries against approved data sources.
  - Applies statistical or machine learning techniques when needed.
  - Handles retries, fallbacks, and guardrails if a step fails.

- Explanation
  - Summarizes the result in plain language.
  - Links back to the query logic and the underlying metric definitions.
  - Surfaces limitations, confidence levels, and assumptions.

This plan-execute-explain loop is the heart of agentic BI. Get it right and you displace many dashboards with one assistant that can answer a broad range of questions while staying within policy.
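To make the loop concrete, here is a minimal Python sketch of plan, execute, and explain with retries and a fallback. It is not Aidnn's implementation: `Step`, `Trace`, `execute`, and `explain` are hypothetical names, the plan is hard-coded rather than generated from a goal, and the warehouse timeout is simulated.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

Context = dict  # shared scratchpad passed from step to step

@dataclass
class Step:
    name: str
    run: Callable[[Context], Context]
    fallback: Optional[Callable[[Context], Context]] = None
    max_attempts: int = 2

@dataclass
class Trace:
    events: list = field(default_factory=list)  # auditable record of what happened

def execute(steps: list, ctx: Context, trace: Trace) -> Context:
    """Run each planned step, retrying and then falling back on failure."""
    for step in steps:
        for attempt in range(1, step.max_attempts + 1):
            try:
                ctx.update(step.run(ctx))
                trace.events.append(f"{step.name}: ok on attempt {attempt}")
                break
            except Exception as exc:  # record the failure, then retry
                trace.events.append(f"{step.name}: attempt {attempt} failed ({exc})")
        else:  # every attempt failed
            if step.fallback is None:
                raise RuntimeError(f"{step.name} failed and has no fallback")
            ctx.update(step.fallback(ctx))
            trace.events.append(f"{step.name}: fallback used")
    return ctx

def explain(goal: str, ctx: Context, trace: Trace) -> str:
    """Plain-language answer that cites the execution trace."""
    lines = [f"Goal: {goal}", f"Answer: {ctx.get('answer')}", "Trace:"]
    lines += [f"  - {event}" for event in trace.events]
    return "\n".join(lines)

# Hypothetical hard-coded plan for "total revenue last week".
def fetch_primary(ctx):
    raise TimeoutError("warehouse timeout")  # simulate a failing source

def fetch_replica(ctx):
    return {"rows": [120.0, 80.0, 50.0]}

def aggregate(ctx):
    return {"answer": sum(ctx["rows"])}

steps = [Step("fetch", fetch_primary, fallback=fetch_replica), Step("aggregate", aggregate)]
trace = Trace()
ctx = execute(steps, {}, trace)
print(explain("Total revenue last week", ctx, trace))
```

The trace is kept separate from the answer on purpose: the explanation step can then cite every retry and fallback instead of hiding them.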

How Agentic BI Differs From Traditional BI

- Unit of delivery: Traditional BI delivers charts and dashboards. Agentic BI delivers solved tasks with traceable logic.

- Scope of reasoning: Traditional BI relies on human analysts to decide which joins, filters, and metrics to apply. Agentic BI can propose and execute those decisions, then justify them.

- Change management: Traditional BI requires tickets and development cycles to add new views. Agentic BI adapts to new questions by re-planning on demand.

- Governance: Traditional BI centralizes governance in modeling layers and dashboard approvals. Agentic BI must embed governance within the agent’s planning and execution steps.

Architecture Patterns You Will See

You can expect an agentic BI stack to include the following layers:

- Data access: Connections to warehouses, lakes, and operational stores, gated by service accounts and row-level security.

- Semantic layer: Metric definitions, entity relationships, and data contracts that give the agent a stable vocabulary.

- Policy and guardrails: Rules for PII handling, query cost ceilings, rate limits, and tiered approvals for sensitive domains.

- Planning engine: A reasoning module that turns a goal into a sequence of data and analysis steps.

- Tooling adapters: Query runners, vector search for documentation, feature libraries for statistical tests, and charting functions.

- Self-evaluation: Checks that confirm query correctness, sampling, bias detection, and consistency with metric definitions.

- Explanation layer: Natural language reports with citations to queries, models, and definitions.

Aidnn’s promise is to unify these layers so an end user can ask a business question and receive a vetted answer that is both useful and auditable.
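One way to picture the semantic and policy layers is as data the planner consults before any step runs. In this minimal Python sketch, the metric catalog, the `Policy` fields (PII columns, a per-query scan ceiling in GB, restricted tables that need approval), and `check_query` are all hypothetical; the scan estimate is assumed to come from a dry run or planner estimate.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Metric:
    name: str
    expression: str      # canonical formula, owned by the metric owner
    source_table: str
    owner: str

@dataclass(frozen=True)
class Policy:
    pii_columns: frozenset        # columns the agent may never select
    max_scan_gb: float            # per-query cost ceiling
    restricted_tables: frozenset  # sensitive domains needing tiered approval

# Hypothetical semantic layer with two governed metrics.
SEMANTIC_LAYER = {
    "net_revenue": Metric("net_revenue", "SUM(amount) - SUM(refunds)", "orders", "finance"),
    "headcount_cost": Metric("headcount_cost", "SUM(salary)", "payroll", "people_ops"),
}

def check_query(metric_name: str, columns: list, estimated_scan_gb: float,
                policy: Policy, approved: bool = False) -> list:
    """Return policy violations for a planned query; an empty list means it may run."""
    metric = SEMANTIC_LAYER.get(metric_name)
    if metric is None:
        # Unknown vocabulary: confirm the term with the user instead of guessing.
        return [f"unknown metric {metric_name!r}; ask the user to clarify"]
    violations = []
    pii = sorted(set(columns) & policy.pii_columns)
    if pii:
        violations.append("PII columns requested: " + ", ".join(pii))
    if estimated_scan_gb > policy.max_scan_gb:
        violations.append(
            f"estimated scan {estimated_scan_gb} GB exceeds ceiling {policy.max_scan_gb} GB")
    if metric.source_table in policy.restricted_tables and not approved:
        violations.append(f"table {metric.source_table!r} requires tiered approval")
    return violations

policy = Policy(frozenset({"email", "phone"}), 50.0, frozenset({"payroll"}))
print(check_query("net_revenue", ["region", "email"], 12.0, policy))
```

Returning every violation, rather than stopping at the first, gives the explanation layer a complete list of reasons a step was blocked.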

Evaluation Criteria You Can Trust

Before you pilot any agentic BI system, establish criteria you can measure. The following checklist has worked well for early adopters:

- Task success rate: Percentage of user questions answered correctly on the first attempt, as judged by subject matter experts and by consistency checks.

- Time to insight: Median time from question to useful answer, compared to your current BI process.

- Plan quality: Are the generated steps logically sound, consistent with metric definitions, and efficient on compute?

- Explainability: Can the agent show the exact queries, assumptions, and definitions it used? Are caveats stated clearly?

- Policy adherence: Does the agent respect data classification, PII rules, and cost limits without human intervention?

- Recovery and resilience: When a plan fails, does the agent correct itself or fall back gracefully?

- Reusability: Can you save resolved tasks as reusable playbooks or automations for others?

- User trust: Do business users prefer the agent over dashboards for exploratory questions? Track adoption and satisfaction.

Early Integration Patterns In The Wild

Agentic BI does not replace your stack overnight. The early value often appears in focused patterns:

- Analyst copilot: Embed the agent in your SQL workbench. Let it propose joins, edge-case checks, and test scaffolds, then show the resulting queries for review.

- Metric helpdesk: Put the agent in front of your semantic layer. When someone asks what a metric means or how it is calculated, the agent answers with the official definition and example queries.

- Decision brief generator: Feed the agent a goal and a time window. It composes a short brief with key drivers, charts, and recommended follow-ups.

- Postmortem assistant: For incidents or KPI dips, the agent assembles a timeline, relevant metrics, and likely contributing factors.

- Live demo partner: If your sales team already experiments with AI-driven presentations, connect the agent to curated demo datasets so it can field open-ended questions. We explored a related pattern in our piece on how AI reps join your demos.

- Marketplace-aware workflows: If your org discovers analytics add-ons or data skills through marketplaces, align the agent’s tools and permissions accordingly. See our take on the rise of AI marketplaces for adoption lessons.

Risks And How To Govern Them

No agent should roam freely across your data estate. Treat agentic BI like any powerful platform and design for control from day one.

- Scope creep: Start with a bounded domain. Limit the agent to datasets with strong ownership and clear definitions. Expand only after you hit success thresholds.

- Silent divergence: Agents can produce plausible but incorrect answers. Require self-checks that compare results against precomputed metrics or sample queries. Log and review discrepancies.

- Policy violations: Bake policy into planning. The agent should read and apply rules about PII, row-level access, and cost caps as first-class constraints.

- Query cost explosions: Set budgets per user, per domain, and per task. Add query simulation so the agent can estimate cost before it runs heavy steps.

- Overfitting to natural language: Encourage users to pin definitions and filters rather than relying only on conversational phrasing. Teach the agent to confirm ambiguous terms.

- Shadow analysis: Without lineage, it is hard to trust a result. Require every answer to include the executed query, metric versions, and data freshness.

- Human factors: Analysts may worry about replacement. Position the agent as a force multiplier. Use it to clear backlog and elevate analysts to design and oversight.
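Two of these controls, self-checks against precomputed metrics and layered budgets, can be sketched in a few lines of Python. The 1 percent tolerance, the cap values, and the `self_check` and `BudgetLedger` names are assumptions for illustration; the estimated cost is assumed to come from the query simulation step mentioned above.

```python
import math
from collections import defaultdict

discrepancy_log: list = []  # reviewed by humans, per the silent-divergence control

def self_check(metric: str, agent_value: float, reference_value: float,
               rel_tol: float = 0.01) -> bool:
    """Compare an agent result with a precomputed reference; log any mismatch."""
    ok = math.isclose(agent_value, reference_value, rel_tol=rel_tol)
    if not ok:
        discrepancy_log.append(
            {"metric": metric, "agent": agent_value, "reference": reference_value})
    return ok

class BudgetLedger:
    """Tracks estimated spend against per-user, per-domain, and per-task caps."""

    def __init__(self, caps: dict):
        self.caps = caps                 # e.g. {"user": 10.0, "domain": 25.0, "task": 4.0}
        self.spent = defaultdict(float)  # keyed by (scope, identifier)

    def try_charge(self, user: str, domain: str, task: str, estimated_cost: float) -> bool:
        """Admit the query only if it fits every budget; otherwise charge nothing."""
        keys = [("user", user), ("domain", domain), ("task", task)]
        if any(self.spent[k] + estimated_cost > self.caps[k[0]] for k in keys):
            return False
        for k in keys:
            self.spent[k] += estimated_cost
        return True

ledger = BudgetLedger({"user": 10.0, "domain": 25.0, "task": 4.0})
print(ledger.try_charge("ana", "marketing", "t1", 3.0))  # True
print(ledger.try_charge("ana", "marketing", "t1", 2.0))  # False: task t1 would exceed 4.0
```

Checking all three scopes before charging any of them means a rejected query never leaves partial spend behind.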

A Pilot Playbook That Works

A good pilot is small enough to run fast and big enough to matter. Here is a four-week blueprint, preceded by a preparation week (Week 0), that has produced reliable results.

Week 0: Preparation

- Pick a single business domain with high question velocity and strong data ownership, for example, digital marketing or support operations.

- Assemble a pilot squad: 1 product owner, 1 domain SME, 1 data engineer, 1 analytics lead, and 5 to 10 representative users.

- Freeze the metric catalog for the pilot’s scope. Finalize names, formulas, and owners.

Week 1: Guardrails and onboarding

- Connect only the pilot datasets. Enforce row-level policies and cost caps.

- Seed the agent with your metric definitions and documentation.

- Run dry tests using synthetic questions to exercise planning and error handling.

Week 2: Real questions under supervision

- Collect 50 to 100 real user questions. Classify them by type: descriptive, diagnostic, predictive, or prescriptive.

- Let the agent answer under human review. Score task success, time to insight, and plan quality.

- Create a backlog of improvements to definitions, metadata, and prompts.

Week 3: Autonomy with spot checks

- Allow the agent to answer low-risk questions without pre-approval, while logging everything.

- For medium-risk questions, require a human sign-off before the result is published.

- Track adoption and satisfaction. Interview users about trust and clarity.
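The Week 3 rules (low risk publishes without pre-approval, medium risk waits for sign-off, everything is logged) amount to a small routing table. This Python sketch is hypothetical: the `classify` rule is a placeholder each pilot would replace with its own criteria, and holding high-risk answers for an analyst is an assumption, since the playbook does not cover that tier.

```python
from dataclasses import dataclass, field
from enum import Enum

class Risk(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"

def classify(touches_sensitive_data: bool, shared_outside_team: bool) -> Risk:
    """Hypothetical tiering rule; a real pilot would define its own criteria."""
    if touches_sensitive_data:
        return Risk.HIGH
    if shared_outside_team:
        return Risk.MEDIUM
    return Risk.LOW

@dataclass
class Router:
    audit_log: list = field(default_factory=list)     # every answer, whatever its tier
    published: list = field(default_factory=list)
    review_queue: list = field(default_factory=list)  # medium risk awaiting sign-off

    def route(self, answer_id: str, risk: Risk) -> str:
        self.audit_log.append((answer_id, risk.value))
        if risk is Risk.LOW:
            self.published.append(answer_id)     # no pre-approval needed
            return "published"
        if risk is Risk.MEDIUM:
            self.review_queue.append(answer_id)  # a human signs off first
            return "awaiting_signoff"
        return "held_for_analyst"  # assumption: high risk stays out of autonomous mode

    def approve(self, answer_id: str) -> None:
        """Record a human sign-off and publish the answer."""
        self.review_queue.remove(answer_id)
        self.published.append(answer_id)

router = Router()
print(router.route("q1", classify(False, False)))  # published
```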

Week 4: Wrap-up and go-no-go

- Compare pilot metrics to your baseline. Did task success exceed 80 percent on the first attempt? Did time to insight drop by at least 50 percent for routine questions?

- Document governance policies and playbooks for scale-out. Identify next domains.

- Decide whether to expand, pause, or iterate.
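The Week 4 thresholds reduce to simple arithmetic: first-attempt success rate must exceed 0.80, and 1 minus the ratio of pilot to baseline median time must be at least 0.50. This Python sketch uses illustrative numbers, not real pilot data, and `go_no_go` is a hypothetical helper; it only flags whether expansion is supported, leaving pause versus iterate to the team.

```python
from statistics import median

def go_no_go(first_attempt_correct: list, pilot_minutes: list, baseline_minutes: list,
             success_threshold: float = 0.80, min_time_drop: float = 0.50) -> dict:
    """Compare pilot results with the baseline using the Week 4 thresholds."""
    success_rate = sum(first_attempt_correct) / len(first_attempt_correct)
    time_drop = 1 - median(pilot_minutes) / median(baseline_minutes)
    meets_success = success_rate > success_threshold  # "exceed 80 percent"
    meets_speed = time_drop >= min_time_drop          # "at least 50 percent"
    return {
        "success_rate": success_rate,
        "time_drop": time_drop,
        "meets_success": meets_success,
        "meets_speed": meets_speed,
        # Expansion needs both; pause versus iterate stays a team decision.
        "recommend_expand": meets_success and meets_speed,
    }

# Illustrative numbers: 42 of 50 routine questions correct on the first attempt,
# median pilot time of 4 minutes against a 45-minute baseline median.
result = go_no_go([True] * 42 + [False] * 8,
                  pilot_minutes=[3, 4, 5, 6, 2],
                  baseline_minutes=[30, 45, 60, 20, 90])
print(result["success_rate"], round(result["time_drop"], 3), result["recommend_expand"])
# prints: 0.84 0.911 True
```

Medians are used rather than means so that a handful of slow, unusual questions does not dominate the comparison.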

Metrics That Prove Business Impact

You do not need exotic KPIs to prove value. Focus on a small set you can measure every week.

- Cycle time: Median time from question to accepted answer. Target 2 to 5 minutes for routine tasks.

- Analyst throughput: Number of tasks resolved per analyst per week. Track with and without the agent.

- Rework rate: Percentage of answers revised due to policy or logic errors. Trend it downward with self-checks and improved definitions.

- Cost per answer: Query compute and human time per resolved task.

- User adoption: Share of exploratory questions that go to the agent rather than static dashboards.

- Data trust: Survey-based confidence score tied to explanation clarity and lineage completeness.

Use these metrics to guide incentives. For example, reward teams that contribute high-quality metric definitions and documentation that directly raise task success.
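Four of these metrics (cycle time, rework rate, cost per answer, and adoption share) can be computed from a simple weekly task log, as in this minimal Python sketch. The `ResolvedTask` fields, dollar units, and the hourly rate used to price human time are assumptions; analyst throughput and the survey-based trust score need data this sketch does not model.

```python
from dataclasses import dataclass
from statistics import median

@dataclass
class ResolvedTask:
    minutes_to_accepted: float  # question asked -> answer accepted
    revised: bool               # revised because of a policy or logic error
    compute_cost: float         # query spend for the task, in dollars (assumed)
    human_minutes: float        # analyst or reviewer time spent

def weekly_metrics(tasks: list, agent_questions: int,
                   dashboard_questions: int, hourly_rate: float) -> dict:
    """Roll one week of resolved tasks into cycle time, rework, cost, and adoption."""
    n = len(tasks)
    total_cost = sum(t.compute_cost + t.human_minutes * hourly_rate / 60 for t in tasks)
    return {
        "cycle_time_min": median(t.minutes_to_accepted for t in tasks),
        "rework_rate": sum(t.revised for t in tasks) / n,
        "cost_per_answer": total_cost / n,
        "adoption_share": agent_questions / (agent_questions + dashboard_questions),
    }

# Illustrative week, not real data.
week = [
    ResolvedTask(3, False, 0.40, 6),
    ResolvedTask(5, True, 1.10, 12),
    ResolvedTask(2, False, 0.20, 3),
    ResolvedTask(4, False, 0.30, 9),
]
print(weekly_metrics(week, agent_questions=120, dashboard_questions=80, hourly_rate=60.0))
```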

Build Versus Buy

The build-buy decision is not binary. Most enterprises will buy a product like Aidnn to accelerate planning and explanation, then extend it with custom tools and domain knowledge.

Buy when:

- You want a working assistant in weeks rather than quarters.

- You value product-grade planning, self-evaluation, and policy tooling.

- You have limited platform engineering capacity.

Build when:

- You need deep customization across unusual data systems or regulatory regimes.

- You already operate a strong semantic layer, feature store, and policy engine.

- You aim to embed agentic BI as a core capability across multiple internal apps.

Hybrid is often best. Treat the agent as a platform. Standardize data contracts and policies once, then support multiple agents for different domains and personas.

Designing For Trust And Explainability

An agent that cannot show its work will not last. Design for explainability at three levels:

- Data level: Always show data sources, freshness, sampling, and row counts.

- Logic level: Display the queries, joins, filters, and computations used. Keep a versioned history.

- Narrative level: Present a concise explanation with uncertainties and next-best questions.

Encourage a culture of friendly skepticism. Users should be comfortable asking the agent to justify a step, to compare with a saved baseline, or to run a small counterfactual test.
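The three levels, together with the lineage requirement from the risks section (executed query, metric versions, data freshness), can be enforced as a completeness check before an answer is shown. In this Python sketch the `Answer` fields, the `missing_evidence` helper, and the 24-hour staleness limit are assumptions, and the sample values are invented for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

@dataclass
class Answer:
    # Data level
    sources: list
    freshness: Optional[datetime]  # when the underlying data was last loaded
    row_count: Optional[int]
    sampled: bool                  # displayed to the user; not validated here
    # Logic level
    queries: list                  # executed SQL, verbatim
    metric_versions: dict
    # Narrative level
    summary: str
    caveats: list

def missing_evidence(a: Answer, now: datetime,
                     max_age: timedelta = timedelta(hours=24)) -> list:
    """List what an answer still needs before it can be shown to users."""
    problems = []
    if not a.sources:
        problems.append("data: sources")
    if a.freshness is None:
        problems.append("data: freshness")
    elif now - a.freshness > max_age:
        problems.append("data: stale beyond max_age")
    if a.row_count is None:
        problems.append("data: row count")
    if not a.queries:
        problems.append("logic: executed queries")
    if not a.metric_versions:
        problems.append("logic: metric versions")
    if not a.summary.strip():
        problems.append("narrative: summary")
    if not a.caveats:
        problems.append("narrative: caveats or uncertainties")
    return problems

now = datetime(2024, 5, 1, 12, 0, tzinfo=timezone.utc)
answer = Answer(
    sources=["warehouse.orders"],
    freshness=now - timedelta(hours=2),
    row_count=1520,
    sampled=False,
    queries=["SELECT region, SUM(amount) FROM orders GROUP BY region"],
    metric_versions={"net_revenue": "v3"},
    summary="Net revenue rose in all regions week over week.",
    caveats=["Refunds posted after Sunday are not included."],
)
print(missing_evidence(answer, now))  # []
```

An empty list is the gate for publishing; anything else tells the agent, and the user, exactly which level of the explanation is incomplete.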

What The Near Future Looks Like

Agentic BI will not eliminate dashboards altogether. Instead, dashboards will evolve into living artifacts generated by agents, with controls that let teams pin canonical views while the agent handles the long tail of questions. Expect three shifts:

- Dynamic briefs: Instead of static pages, you will receive time-boxed briefs that summarize performance, anomalies, and recommended actions.

- Closed-loop actions: Agents will increasingly connect to operational tools. After explaining a trend, the agent can open a ticket, start an experiment, or schedule a follow-up analysis with guardrails.

- Shared playbooks: High-quality answers become reusable playbooks that others can run or adapt, creating a library of trustworthy analyses rather than a gallery of disconnected charts.

How To Get Started This Quarter

- Pick a domain with strong ownership and a clear backlog of unanswered questions.

- Clean up your metric definitions and document them in one place.

- Pilot an agent like Aidnn with strict policies and logging.

- Measure task success, time to insight, and user trust.

- Expand based on evidence, not hype.

If you already invest in AI across go-to-market workflows, treat agentic BI as the analytical counterpart of that shift. The same patterns that let AI reps join your demos apply inside your data organization. And if you source capabilities through marketplaces, the rise of AI marketplaces offers a clear map for curating agent tools and permissions at scale.

The Bottom Line

Agentic BI turns analytics from a library of static artifacts into a living assistant that plans, executes, and explains. Aidnn is a strong signal that this model is ready for enterprise pilots. Start small, govern tightly, and measure relentlessly. The payoff is fewer dashboards, faster answers, and higher trust.

When your business can ask a question in plain language and receive a reliable, fully explained answer in minutes, dashboard sprawl stops being a tax on attention and becomes a solved problem.