When Power Writes the Model: AI’s Thermodynamic Turn

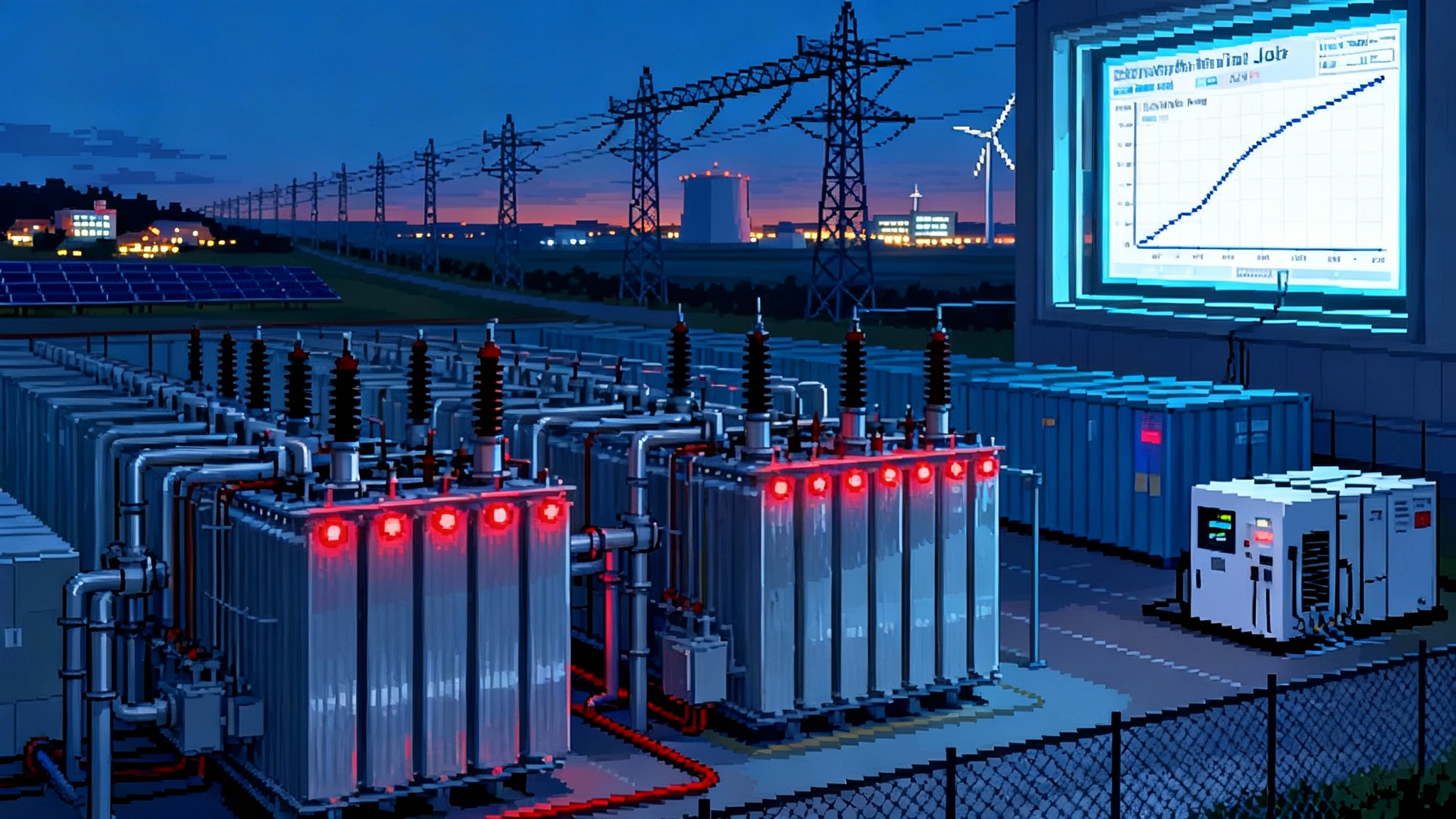

Frontier AI is hitting a new ceiling: electricity. Utilities, hyperscalers, and federal agencies are redesigning the grid around compute, turning megawatts, contracts, and siting into the real constraints on model scale and reliability.

Breaking news that changes the question

In late September 2025, the center of gravity for artificial intelligence shifted from chips to circuits. Several large utilities unveiled capital plans built for a new class of always-on load. Pacific Gas and Electric proposed tens of billions of dollars in transmission upgrades through 2030. CenterPoint sketched a 2026 to 2035 roadmap tied directly to surging data center demand and rising peak loads, a trend documented in new utility plans. At the same time, hyperscalers signed long-horizon capacity deals that lock in racks, power blocks, and delivery schedules. Some read less like cloud contracts and more like industrial procurement.

The federal posture followed suit. The U.S. Department of Energy advanced siting on federal lands for data centers colocated with new generation and transmission corridors, building on directives from earlier in the year. The signal was unambiguous. The country would accelerate grid and siting pathways for compute through designated DOE sites and solicitations.

The headline is simple. The decisive constraint on intelligence is now power, not parameters. We used to ask how many accelerators and how much memory we could marshal. Now the first question is where the megawatts will come from, at what price, with what curtailment rights, and under which interconnection queue.

Why power now sets the ceiling

Think of a frontier model as a steel mill for tokens. Training is a heat process. Inference is the rolling line. Parameters describe the furnace, but power availability and quality determine how hot and how often you can fire it. If the grid can only feed 80 percent of the heat you planned, you either shrink the batch, slow the cadence, or change the alloy. That is what power does to models.
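The 80 percent example can be made concrete with back-of-envelope arithmetic. This minimal sketch assumes that a run's energy is roughly power times time and that power scales linearly with active accelerators. Both are simplifications, and the function name and figures are hypothetical.

```python
def power_cap_tradeoffs(planned_mw: float, planned_days: float,
                        available_fraction: float) -> dict:
    """Two ways to absorb a power shortfall, assuming energy ~ power x time.

    Hypothetical helper. Real runs also lose efficiency when resharded,
    so treat these numbers as optimistic.
    """
    if not 0 < available_fraction <= 1:
        raise ValueError("available_fraction must be in (0, 1]")
    hours = planned_days * 24
    return {
        # Option A: keep the full run and stretch the calendar ("slow the cadence").
        "stretched_days": planned_days / available_fraction,
        # Option B: keep the calendar and shrink the run ("shrink the batch").
        "compute_fraction_on_schedule": available_fraction,
        "energy_on_schedule_mwh": planned_mw * available_fraction * hours,
        "planned_mwh": planned_mw * hours,
    }
```

For example, `power_cap_tradeoffs(100, 60, 0.8)` turns a 60-day, 100 MW plan into a 75-day run. Alternatively, it delivers 80 percent of the planned compute if the calendar holds. "Change the alloy" (a different architecture) is the option the arithmetic cannot capture.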

Four mechanics that now rule scale

- Topology shapes scale. Training across two campuses on opposite sides of a transmission bottleneck will see different availability, latency, and curtailment profiles than training behind one high-voltage substation. In a world where new 500-kilovolt lines take years to build, the physical map constrains feasible cluster size and, with it, the practical parameter budget.

- Contracts shape cadence. Take-or-pay energy clauses, minimum demand obligations, and penalties during system stress force operators to pick training windows and checkpoint intervals that respect grid realities. Scarcity windows create natural rhythms that favor curriculum-style training, staged fine-tuning, and scheduled retraining instead of continuous burn.

- Prices shape inference economics. Locational marginal prices, congestion charges, and resource adequacy costs convert every thousand tokens into a power line item. If your model runs at a node with frequent scarcity pricing, value per token falls unless your service can flex in time or colocate with firmed power.

- Curtailment shapes alignment choices. During stress events, the question becomes which workloads get priority. Some operators will privilege safety evaluators and red-team harnesses over noncritical generation. Others will throttle batch inference before touching real-time guardrails. Alignment becomes a resource allocation problem, not only a philosophical one.
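To see how a locational price becomes a per-token line item, here is a minimal sketch. The energy per token, the facility overhead (power usage effectiveness, PUE), and the price series are hypothetical placeholders, not measured figures.

```python
def power_cost_per_1k_tokens(joules_per_token: float, pue: float,
                             lmp_usd_per_mwh: float) -> float:
    """Electricity cost (USD) of serving 1,000 tokens at one nodal price.

    joules_per_token: accelerator energy per generated token (assumed input).
    pue: facility energy divided by IT energy.
    lmp_usd_per_mwh: locational marginal price at the serving node.
    """
    joules = joules_per_token * 1_000 * pue
    mwh = joules / 3.6e9  # 1 MWh = 3.6e9 joules
    return mwh * lmp_usd_per_mwh


def average_cost_per_1k_tokens(joules_per_token: float, pue: float,
                               hourly_lmps: list) -> float:
    """Average over an hourly price series, assuming flat token volume."""
    costs = [power_cost_per_1k_tokens(joules_per_token, pue, p) for p in hourly_lmps]
    return sum(costs) / len(costs)
```

Because cost is linear in price, a node that spends a few hours a day at scarcity prices can raise the average cost per token far more than its typical price suggests. Serving the same traffic at a calmer node, or shifting it in time, avoids that premium.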

The grid writes the roadmap

We can now read the grid as if it were a product requirements document for models.

- Model size. Cluster size will be capped by the megawatts that can sit behind a feasible point of interconnection without multiyear transmission work. That pushes builders toward mixture-of-experts and other sparse-activation designs that deliver scale without firing one monolithic dense model on every token.

- Training cadence. Utilities increasingly prefer predictable large loads that pre-declare maintenance-like windows. Expect quarterly or monthly training blocks that line up with shoulder seasons and renewable surpluses, plus emergency pause protocols built into training loops.

- Inference pricing. Live tokens will carry implicit time-of-use pricing. Providers that can time-shift low-priority inference to off-peak periods will undercut those that cannot, even if both use the same silicon. This is one reason the deliberation economy matters. If inference becomes a structured research loop, then schedulers can shift nonurgent deliberation to low-cost hours.

- Alignment and safety budget. When power bites, organizations will have to decide whether to keep alignment evaluators hot while allowing some product features to cool. That choice will be tested on the hottest day in August or the coldest night in January.
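The time-shifting idea behind inference pricing can be sketched as a greedy scheduler. Deferrable batches fill the cheapest hours that still have spare capacity before their deadlines. The job fields, the one-hour-per-job simplification, and the capacity model are hypothetical. The greedy order (earliest deadline first) is a heuristic, not an optimal solver.

```python
from dataclasses import dataclass


@dataclass
class BatchJob:
    name: str
    mwh: float          # energy the job needs (assumed to fit in one hour)
    deadline_hour: int  # last hour index (inclusive) the job may run in


def schedule_deferrable(jobs, hourly_prices, hourly_capacity_mwh):
    """Greedy time-shifting: place each job in its cheapest feasible hour.

    Jobs with earlier deadlines are placed first so they are not crowded
    out of their short window. Returns {job name: hour}. Jobs that cannot
    be placed are left out and must run at whatever the price is.
    """
    spare = list(hourly_capacity_mwh)
    plan = {}
    for job in sorted(jobs, key=lambda j: j.deadline_hour):
        last = min(job.deadline_hour + 1, len(hourly_prices))
        candidates = [h for h in range(last) if spare[h] >= job.mwh]
        if not candidates:
            continue
        best = min(candidates, key=lambda h: hourly_prices[h])
        spare[best] -= job.mwh
        plan[job.name] = best
    return plan
```

With prices of 90, 40, 30, and 120 dollars per megawatt-hour over four hours, a job due by hour 1 lands in hour 1, and a job due by hour 3 lands in the 30-dollar hour 2.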

Concentration risk, antitrust, and the new rate-base politics

Follow the money and the copper. Utilities recover capital by adding assets to the rate base and then earning a regulated return on them. If data-center-driven investments dominate new rate base, concentration risk is no longer only about a few model makers. It becomes a grid-wide exposure in which non-data-center customers bear part of the cost of serving very large digital loads.
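The rate-base mechanism follows the standard cost-of-service formula. A utility's annual revenue requirement is operating costs plus depreciation and taxes plus an allowed return on rate base. This sketch applies that formula and then splits the result across customer classes. The allocation shares and dollar figures are hypothetical. They only show how the split decides who pays for new assets.

```python
def revenue_requirement(rate_base: float, allowed_return: float,
                        o_and_m: float, depreciation: float, taxes: float) -> float:
    """Annual revenue requirement under cost-of-service ratemaking.

    allowed_return is the regulated rate of return applied to rate base.
    """
    return o_and_m + depreciation + taxes + allowed_return * rate_base


def allocate(requirement: float, class_shares: dict) -> dict:
    """Split a revenue requirement by allocation factors that sum to 1."""
    if abs(sum(class_shares.values()) - 1.0) > 1e-9:
        raise ValueError("allocation shares must sum to 1")
    return {cls: requirement * share for cls, share in class_shares.items()}
```

If the large-load class receives a smaller share than the costs it causes, the difference lands on the other classes. That mismatch is what dedicated large-load tariffs aim to prevent.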

Expect four developments.

- New tariffs and classes. Several utilities are proposing dedicated rate classes for high-load-factor customers above a clear size threshold. That lets cost allocation follow cost causation and gives commissions a lever to protect households and small businesses.

- Siting conditions tied to community benefit. Local commissions will require backup power standards, noise and air quality controls, and community energy investments as conditions of approval. Backup diesel fleets will face stricter testing windows and emissions limits. Fuel cells, batteries, and onsite renewables will become permitting sweeteners.

- Antitrust lenses on capacity hoarding. When capacity deals look like exclusive control over substations or long-duration firming, competition enforcers will ask whether incumbents are foreclosing rivals from power, not just from chips. This is part of the new politics of compute.

- Financial stress tests. Credit analysts and regulators will test plans against scenarios in which data center demand arrives later than promised or at lower load factors. The lesson from past booms is simple: align contract minimums, interconnection deposits, and rate recovery so that stranded assets are not socialized.
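The tariff and stress-test items meet in a single provision: a minimum demand charge in a dedicated large-load class. This sketch shows how such a floor works. The class threshold, the 80 percent minimum, the rates, and the function name are hypothetical.

```python
def large_load_monthly_bill(
    metered_peak_mw: float,
    contracted_mw: float,
    energy_mwh: float,
    demand_rate_usd_per_mw: float,
    energy_rate_usd_per_mwh: float,
    minimum_demand_fraction: float = 0.8,   # hypothetical floor
    class_threshold_mw: float = 25.0,       # hypothetical class cutoff
) -> dict:
    """Monthly bill under a hypothetical large-load rate class.

    Billed demand is floored at a fraction of contracted capacity. A
    customer whose load arrives late or light still pays for most of the
    capacity built for it, so the cost is not shifted to other classes.
    """
    if contracted_mw < class_threshold_mw:
        raise ValueError("below the large-load class threshold")
    billed_demand_mw = max(metered_peak_mw, minimum_demand_fraction * contracted_mw)
    demand_charge = billed_demand_mw * demand_rate_usd_per_mw
    energy_charge = energy_mwh * energy_rate_usd_per_mwh
    return {
        "billed_demand_mw": billed_demand_mw,
        "demand_charge": demand_charge,
        "energy_charge": energy_charge,
        "total": demand_charge + energy_charge,
    }
```

A campus that contracts for 100 MW but peaks at only 50 MW is still billed for 80 MW of demand under these assumptions. That floor is what keeps a slow ramp from becoming a stranded cost for everyone else.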

For builders, this landscape means power strategy is a board-level topic. For utilities and commissions, it means compute is now a rate-design and policy challenge, not simply another customer class.

An ethic of grid-scale power

There is a moral dimension to system-scale loads. A model that improves cancer screening while destabilizing the local grid is a net failure. The ethic is to internalize externalities at design time.

- Design for demand response. If your architecture cannot reduce load by 20 percent within five minutes without corrupting state, you are not grid-ready. This is a solvable engineering problem. Use snapshotting, staggered expert activation, and gradient accumulation pauses to create safe throttle points.

- Replace diesel with credible backup. The default stack of hundreds of diesel generators is increasingly indefensible. Battery-plus-fuel-cell hybrids, microturbines with strict run-hour limits, and thermal storage can carry a site through a four-hour curtailment without an air quality penalty.

- Share resilience. Where possible, build community microgrids that allow nearby critical facilities to island with you during outages. This turns a data center from a pure consumer into a resilience anchor.

- Price the externalities into the product. If your service requires firm power during scarcity, reflect that in customer pricing and disclosures. Fair prices improve social license.
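The first item's test is shedding 20 percent of load within five minutes without corrupting state. That can be framed as a planning problem over throttle points. This sketch assumes each throttle point reports the load it frees and the time it needs to reach a safe state, for example finishing a snapshot or draining a gradient accumulation step. The names and numbers are hypothetical. Taking the largest eligible actions first is a heuristic, not an optimal selection.

```python
from dataclasses import dataclass


@dataclass
class ThrottlePoint:
    name: str
    shed_mw: float          # load freed once the action completes
    seconds_to_safe: float  # time to reach a consistent state before shedding


def plan_shed(points, site_mw, target_fraction=0.20, deadline_s=300.0):
    """Pick throttle points that meet the shed target within the deadline.

    Only actions that can reach a safe state before the deadline are
    eligible. Among those, the largest are taken first to limit the number
    of disrupted workloads. Returns (chosen names, MW shed, target met).
    """
    needed = target_fraction * site_mw
    eligible = [p for p in points if p.seconds_to_safe <= deadline_s]
    chosen, shed = [], 0.0
    for p in sorted(eligible, key=lambda p: p.shed_mw, reverse=True):
        if shed >= needed:
            break
        chosen.append(p.name)
        shed += p.shed_mw
    return chosen, shed, shed >= needed
```

In this model, a full checkpoint that takes ten minutes never counts toward the five-minute target, however much load it would free. That is the design point the item makes: throttle points have to be built in advance.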

From data provenance to power provenance

We learned to document where training data came from. We now need the same discipline for energy. Power provenance answers four questions per workload: which sources produced the electrons, where the energy was generated, when it was consumed, and what rights were exercised over it.

A practical power provenance label should include:

- Source mix, time-stamped at five- to fifteen-minute intervals.

- Grid node, with congestion indicators and loss factors.

- Contract type, including any firming, storage, or curtailment clauses.

- Emissions intensity, both direct and marginal.

This label should flow through internal billing, customer invoices, and sustainability disclosures. It also unlocks new markets. If an enterprise buyer requires that its inference queries run on power below 200 grams of carbon dioxide per kilowatt-hour, a verified label lets you price and route those queries appropriately. Provenance also complements the policy terrain described in the invisible policy stack, where hidden rules and incentives can determine who gets access to firm power.
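A power provenance label can be carried as a small record attached to each metering interval. This sketch mirrors the four fields listed above and implements the routing check from the enterprise example. The field names and node identifiers are hypothetical. Testing the marginal intensity rather than the direct one is also an assumption. A real program would have to state which accounting basis the buyer's threshold uses.

```python
from dataclasses import dataclass, field


@dataclass
class PowerProvenanceLabel:
    interval_start: str          # ISO 8601 timestamp (format assumed)
    interval_minutes: int        # five to fifteen minutes per the label spec
    source_mix: dict             # fractions of supply, e.g. {"solar": 0.7, "gas": 0.3}
    grid_node: str               # pricing node identifier (hypothetical)
    congested: bool
    loss_factor: float
    contract_type: str
    contract_clauses: list = field(default_factory=list)  # firming, storage, curtailment
    direct_gco2_per_kwh: float = 0.0
    marginal_gco2_per_kwh: float = 0.0

    def __post_init__(self):
        if not 5 <= self.interval_minutes <= 15:
            raise ValueError("interval must be 5 to 15 minutes")
        if abs(sum(self.source_mix.values()) - 1.0) > 1e-6:
            raise ValueError("source mix fractions must sum to 1")


def eligible_nodes(labels, max_gco2_per_kwh=200.0, use_marginal=True):
    """Return grid nodes whose current label is below a buyer's carbon cap."""
    nodes = []
    for label in labels:
        intensity = (label.marginal_gco2_per_kwh if use_marginal
                     else label.direct_gco2_per_kwh)
        if intensity < max_gco2_per_kwh:
            nodes.append(label.grid_node)
    return nodes
```

A router could call `eligible_nodes` each interval and send the buyer's queries only to nodes on the returned list. Where no node qualifies, it would hold them or price the exception.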

Compute-for-kilowatts swaps

In the 2000s, data centers bought renewable energy certificates to offset consumption. In the 2010s and early 2020s, hyperscalers signed virtual power purchase agreements. The next step is bilateral swaps where compute providers deliver useful workloads back to the energy system in exchange for firm or cheap kilowatts.

Three deal forms are emerging.

- Tolling for compute. A generator provides a fixed block of megawatts behind the fence. The data center provides time-flexible batch inference or physics simulations that run when the plant needs its output absorbed. The strike is denominated in megawatt-hours; settlement is in tokens or cycles.

- Storage offtake as scheduling rights. A storage operator grants scheduling rights for a portion of its battery. You agree to reduce load or to absorb energy when the battery needs to hit a state-of-charge target. This lets you arbitrage five-minute price spikes without constant human intervention.

- Local flexibility markets. Municipal and cooperative utilities create markets where large loads bid to provide non-wires alternatives, from reactive power to frequency ride-through. A training cluster with modern power electronics and fast pause capability can sell those services.
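These deal forms share a simple core: a rule that maps each five-minute price to a load setpoint. This sketch is a threshold rule of that kind. The thresholds, the size of the flexible block, and the function names are hypothetical. Real settlement would follow the specific contract rather than raw spot prices.

```python
def flex_setpoint_mw(price_usd_per_mwh, normal_mw, flexible_mw,
                     curtail_above=300.0, absorb_below=0.0):
    """Target site load for one five-minute interval.

    Above the curtailment threshold, drop the flexible (deferrable) block.
    At or below the absorb threshold (e.g. negative prices during a
    surplus), pull deferrable work forward. Otherwise hold normal load.
    """
    if price_usd_per_mwh > curtail_above:
        return normal_mw - flexible_mw
    if price_usd_per_mwh <= absorb_below:
        return normal_mw + flexible_mw
    return normal_mw


def interval_cost_usd(prices, setpoints_mw):
    """Energy cost over five-minute intervals (MW x 5/60 h x $/MWh)."""
    return sum(p * mw * 5 / 60 for p, mw in zip(prices, setpoints_mw))
```

Over three intervals priced at 50, 400, and -10 dollars per megawatt-hour, a 100 MW site with a 20 MW flexible block follows setpoints of 100, 80, and 120 MW. It pays less than a site holding a flat 100 MW.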

The finance translation matters. Treat power as a portfolio with physical and financial legs. Hedge with a mix of long-term supply, congestion risk management, and demand flexibility. The goal is not only a low average price but also low, predictable volatility and guaranteed availability during the hours your customers value most.
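The portfolio view can be illustrated by blending a fixed-price hedge with spot exposure and comparing average cost and volatility. This minimal sketch uses made-up hourly prices. It ignores congestion hedges and basis risk between the hedge's settlement point and the data center's node, both of which matter in practice.

```python
from statistics import mean, pstdev


def hedged_costs(spot_prices, load_mw, hedge_fraction, hedge_price):
    """Hourly cost when a fixed share of load settles at a hedge price.

    hedge_fraction of each hour's energy is bought at hedge_price; the
    remainder settles at that hour's spot price.
    """
    return [
        load_mw * (hedge_fraction * hedge_price + (1 - hedge_fraction) * p)
        for p in spot_prices
    ]


def summarize(costs):
    """Mean and population standard deviation of hourly costs."""
    return {"mean": mean(costs), "stdev": pstdev(costs)}
```

Hedging 80 percent of load leaves only 20 percent exposed to spot prices. Hourly cost volatility therefore shrinks to one fifth of the unhedged level. Whether the average also falls depends on the hedge price relative to realized spot prices.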

Energy aware architectures

If power writes the model, then models must learn to read power.

- Budget by megawatts, not only by dollars. Give research teams a power envelope per experiment. Reward improvements in tokens per kilowatt-hour as heavily as improvements in tokens per second.

- Scheduling as a first-class discipline. Integrate locational marginal pricing and congestion forecasts into your job scheduler. When a node goes red, the scheduler should move experts to cooler sites or lower activation density without losing coherence.

- Safety and resilience as code. Build curtailment simulation into continuous integration. Every pull request should prove that the job can shed 10, 20, and 30 percent of its load gracefully.

- Preference learning under power constraints. Teach agents to prefer plans that fit within a known power budget. This is alignment for the physical world.

- Topology-aware checkpointing. Place checkpoints to minimize replay cost under expected outage patterns. In regions with afternoon scarcity, schedule longer steps earlier in the day and keep late-day steps short and easily resumable.

- Thermal and power co-design. Treat cooling and power as parts of a single control loop. If heat rejection capacity dips, activation density should respond before the hardware is forced to throttle.
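The "safety and resilience as code" item can be expressed as an ordinary test that runs in continuous integration. This sketch assumes a hypothetical job model: an ordered list of throttle steps, each flagged by whether it snapshots state before dropping load. A real harness would drive the actual training loop against a recorded curtailment trace. The sketch also includes the tokens-per-kilowatt-hour metric from the first item.

```python
from dataclasses import dataclass


@dataclass
class ThrottleStep:
    name: str
    shed_mw: float
    checkpoints_first: bool  # True if the step snapshots state before dropping load


def shed(steps, load_mw, fraction):
    """Apply throttle steps in order until `fraction` of load is shed.

    Returns (MW shed, True if every step used was state-safe).
    """
    target = fraction * load_mw
    total, safe = 0.0, True
    for step in steps:
        if total >= target:
            break
        total += step.shed_mw
        safe = safe and step.checkpoints_first
    return total, safe


def curtailment_drill(steps, load_mw, fractions=(0.10, 0.20, 0.30)):
    """Return the shed fractions the job fails to reach gracefully."""
    failures = []
    for f in fractions:
        mw, safe = shed(steps, load_mw, f)
        if mw < f * load_mw or not safe:
            failures.append(f)
    return failures


def tokens_per_kwh(tokens, energy_kwh):
    """Efficiency metric to report alongside tokens per second."""
    return tokens / energy_kwh
```

In CI, a pull request would pass only if `curtailment_drill(steps, load_mw)` returns an empty list. A change that introduces an unsafe throttle step then fails at the shed depth where that step is first needed.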

AI as a stabilizing grid actor

Artificial intelligence is not only a large load. It can be a grid tool.

- Forecasting and dispatch. High-resolution forecasts of load, renewables, and congestion can lower imbalance costs. If you own a fleet of clusters, offer your forecasting models as a service to the regional operator or your host utility.

- Virtual inertia and voltage support. Inverters and power electronics at scale can provide synthetic inertia and reactive power. With the right interconnection agreements, a campus can improve local power quality.

- Permit tech and siting acceleration. The permitting timeline is often the true constraint. Tooling that accelerates environmental review and interconnection paperwork shortens time to energization and lowers cost for everyone.
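Synthetic inertia proper responds to the rate of change of frequency. A simpler, related service a flexible load can offer is droop-based frequency response. Outside a small deadband, load moves in proportion to the frequency deviation: it drops when frequency falls and rises when frequency is high. This sketch assumes a 60 Hz system. The deadband, droop gain, and flexibility limit are illustrative. Actual settings would come from the interconnection agreement and regional market rules.

```python
def frequency_response_mw(freq_hz, base_mw, flexible_mw,
                          nominal_hz=60.0, deadband_hz=0.036,
                          droop_mw_per_hz=None):
    """Load setpoint under a droop rule with a deadband (load perspective).

    Below nominal frequency (supply short) the load backs off; above it,
    the load may absorb more. The adjustment is capped at +/- flexible_mw.
    """
    if droop_mw_per_hz is None:
        # Illustrative default: full flexible range over a 0.5 Hz excursion.
        droop_mw_per_hz = flexible_mw / 0.5
    deviation = freq_hz - nominal_hz
    if abs(deviation) <= deadband_hz:
        return base_mw
    # Respond only to the part of the deviation beyond the deadband.
    if deviation > 0:
        effective = deviation - deadband_hz
    else:
        effective = deviation + deadband_hz
    adjustment = max(-flexible_mw, min(flexible_mw, droop_mw_per_hz * effective))
    return base_mw + adjustment
```

The cap matters as much as the gain. A cluster can promise only the load it can shed without corrupting state, so `flexible_mw` should come from the same throttle-point planning used for demand response.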

These roles earn social license. Communities tolerate large loads that help keep the lights on, not only the ones that consume quietly behind a fence.

Scenarios for 2026 to 2030

Scenario 1, local gates on global ambition. State commissions and counties add siting conditions that require firm power and community benefits. Utilities implement dedicated tariffs for customers above 25 megawatts, with minimum demand charges and curtailment obligations. As a result, model builders favor fewer, very large, colocated campuses with onsite firm generation. Mixture-of-experts designs dominate because scaling a single giant dense model rarely fits interconnection timelines.

Scenario 2, nuclear colocation unlocks a step change. A handful of advanced nuclear projects reach commercial operation and sign behind-the-fence deals with hyperscalers. These sites prioritize training during off-peak regional demand and export power during system stress. The training cadence becomes seasonal. Parameter counts rise, but the more interesting change is variance reduction. Teams commit to fewer, better-scheduled giant runs because they can trust the power envelope.

Scenario 3, grid service markets mature. Regions expand markets for load flexibility, fast frequency response, and non-wires alternatives. Large clusters become paid stabilizers. Inference costs fall at those nodes because the grid pays the cluster to be available and flexible. Model companies shift some safety and evaluation workloads to these nodes to guarantee uptime during stress hours.

Scenario 4, antitrust and access rules on capacity. Regulators examine exclusive capacity deals that function like control over substations. Remedies require utilities and developers to publish transparent queues and to offer pro rata access to new capacity. The effect is a more competitive market for training slots and an acceleration of model diversity as more firms gain entry to firm power.

Scenario 5, municipal and cooperative renaissance. Cities and co-ops use federal tools and low-cost capital to build local campuses tied to universities and industry. They insist on power provenance, onsite storage, and community microgrids. These sites specialize in public sector models, health, and education. The result is a more polycentric map of intelligence.

What to do next

- If you build models, appoint a head of power strategy who sits with research, not only with facilities. Give that leader authority over experiment scheduling and model roadmaps. Ask for monthly tokens-per-kilowatt-hour metrics alongside loss curves. Tie promotion to improvement on both.

- If you run a utility, publish a transparent large-load queue, a template tariff for data centers, and a menu of paid grid services that a cluster can provide. Invite compute-for-kilowatts pilots that treat large loads as partners and that demonstrate measurable benefits for residential and small-business customers.

- If you regulate, tie approvals to power provenance, backup emissions limits, and measurable community benefits. Require curtailment-capable architectures as a condition of interconnection for very large loads.

- If you invest, underwrite builders who design for power constraints. Favor teams that show steady improvement in tokens per kilowatt-hour and that can earn grid service revenue to offset scarcity pricing.

The line that will age well

For a decade we argued about whether data or parameters mattered more. The grid just answered. Without electrons in the right place, at the right time, and under the right contracts, even the best model stays an idea. With credible power, smart siting, and architectures that respect physics, the next frontier becomes feasible and dependable. The grid is writing the model, and the builders who learn to read it will lead.