Claude joins Microsoft 365 Copilot: building multi-model agents

Microsoft is adding Claude to Microsoft 365 Copilot and Copilot Studio. Learn how multi-model routing changes agent design, governance, cost, and safety, with a practical blueprint you can implement this month.

What just changed and why it matters

Microsoft is expanding model choice inside Microsoft 365 Copilot by adding Anthropic's Claude to both the Researcher agent and Copilot Studio. In practical terms, model selection becomes a first-class control for enterprise builders who want the right tool for each step of a workflow rather than a one-size-fits-all default. In its announcement on expanding model choice in Microsoft 365 Copilot, Microsoft highlights Claude Sonnet 4 and Claude Opus 4.1 as options for deep reasoning, orchestration, and workflow automation inside the Microsoft 365 experience.

Why this matters for teams building agents:

- You can tune quality, latency, and cost per task by routing across models.

- Copilot Studio becomes a vendor-neutral surface where capabilities can be segmented by model.

- Governance shifts from a single-vendor policy to a portfolio approach that treats each external model as a distinct processor.

The rest of this guide shows where Claude appears in Microsoft 365, how to design a vendor-neutral agent stack, and what to watch for on governance, cost, and safety.

Where Claude shows up in Microsoft 365

There are two places you will notice first.

Researcher in Microsoft 365 Copilot

Researcher is a reasoning agent that synthesizes information from the web, trusted third-party data, and your Microsoft 365 work graph. With this update, you can start a session on Claude Opus 4.1 when your task calls for patient reasoning and structured synthesis. Selection is explicit, and organizations must opt in at the tenant level before users can choose Claude.

Good fits for Researcher on Claude:

- Complex synthesis and analysis that spans many sources

- Longer investigations, risk reviews, or scenario analysis

- Structured planning or summarization that benefits from disciplined reasoning

Copilot Studio

In Copilot Studio, Claude Sonnet 4 and Claude Opus 4.1 become model choices when you compose custom agents. You can assign a model per capability or per step, for example running orchestration and tool selection on one model and narrative drafting or structured outputs on another. It also gives you a place to run head-to-head tests that prove the business value of multi-model routing before you expand beyond a pilot.

Governance and data handling in plain language

There is one essential fact to internalize. When you enable Anthropic models inside Microsoft 365, your organization is choosing to send data to Anthropic for model processing. That processing occurs outside Microsoft-managed environments and outside Microsoft product terms. Microsoft documents this clearly in its admin guidance to connect to Anthropic’s AI models.

What this means in practice:

- Admin enablement is required. Anthropic models are opt-in at the tenant level.

- Treat Anthropic as a separate processor with its own audit surface and retention profile.

- Do not assume your existing Microsoft 365 DLP rules cover data after it leaves Microsoft boundaries. Use pre-processing redaction or policy-based routing to keep sensitive categories from leaving the boundary.

- Be transparent with users. Label when a session or agent uses Anthropic models and link to your internal data policy page.

What Claude is likely to be good at inside your workflows

The best multi-model stacks rely on task-to-model fit. Based on common enterprise patterns and published model positioning, these are strong starting bets for Claude inside Microsoft 365.

- Deep synthesis across many sources. Research memos, market landscapes, and diligence packets that favor careful reasoning and structured outputs.

- Policy-grounded summarization. Turning regulatory or policy text into checklists and decision briefs with citations to source passages.

- Instructions that require consistent structure. Forms, tables, compliance narratives, and data extraction that must follow a fixed schema.

- Long-running planning and decomposition. Multi-step plans, investigative flows, or scenario analysis where the model must reason step by step.

Start narrow, measure outcomes, and expand routing to Claude where it beats your default model on quality per cost.

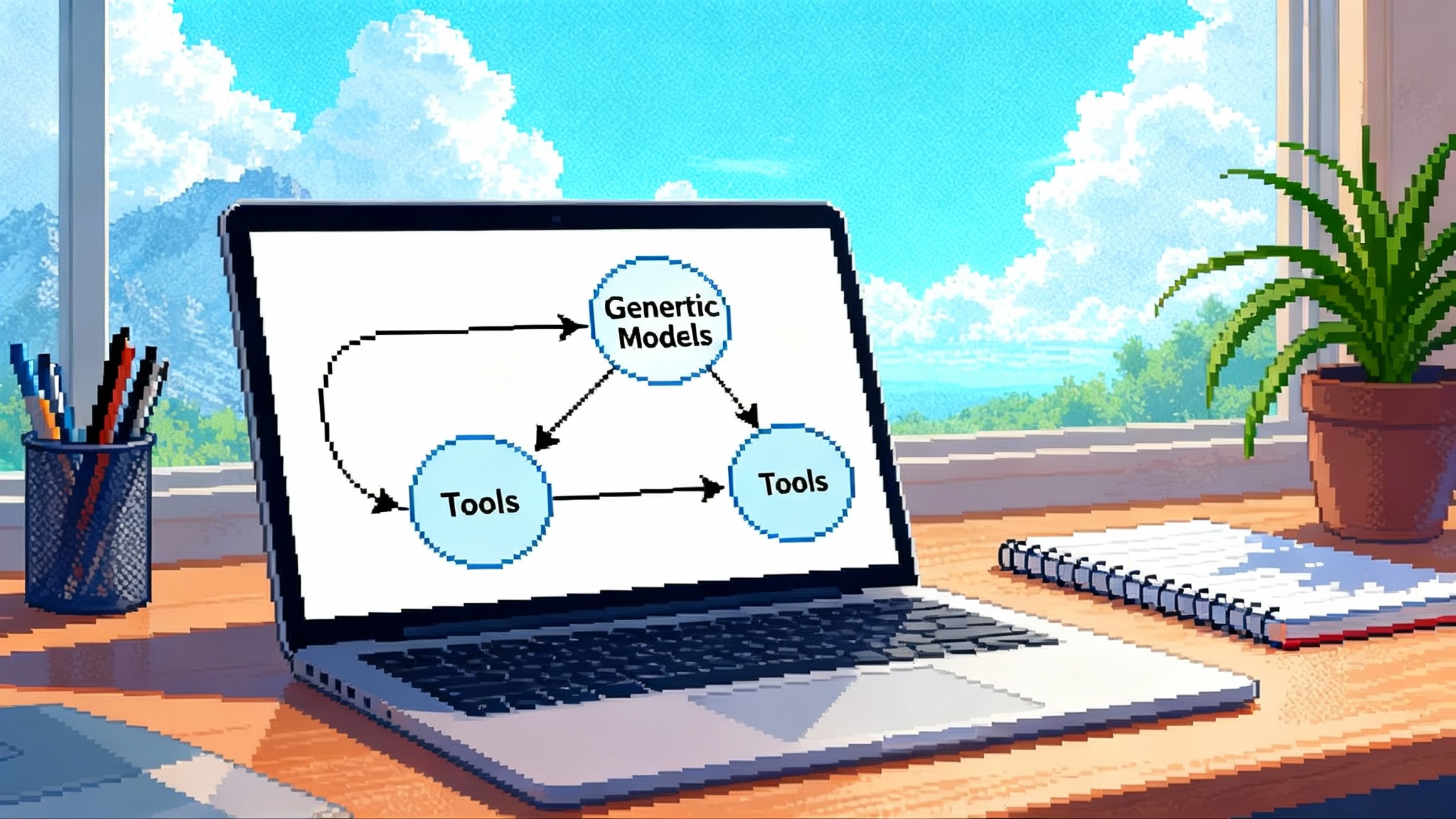

A practical routing blueprint for a vendor-neutral agent stack

The goal is simple. Send each task to the model that yields the best business outcome per dollar while meeting safety and latency constraints. Here is a blueprint you can implement in Copilot Studio today.

- Define task fingerprints

  - Write a short taxonomy for your agent's tasks. Example: triage, retrieval, analysis, drafting, critique, formatting, grounding, code generation, data extraction.

  - For each task, define target metrics: quality, expected token footprint, latency SLO, safety constraints, and failure modes.

- Build a model matrix

  - Rows are tasks, columns are candidate models. Include your OpenAI defaults and Anthropic's Claude variants.

  - For each cell, track offline evaluation scores, average latency, cost per accepted answer, and safety incident rate.

- Implement policy-based routing

  - Start with rules. Example: if the task is analysis, the token budget is high, and your policy requires strict refusals for ungrounded content, prefer Claude.

  - Add confidence thresholds. If the selected model returns low confidence or poor grounding, escalate to a stronger model or fall back to a deterministic tool flow.

- Add canary and shadow testing

  - Mirror a small percentage of production tasks to an alternative model without user exposure. Compare outcomes and promote when stable wins appear.

  - Use buckets per department or geography to respect data policies while you test.

- Observe, learn, and lock in

  - Instrument each step. Track time to first token, total tokens in and out, user correction rate, citation correctness, and safety filter triggers.

  - Lock a routing rule when you have two weeks of stable wins. Revisit when models or policies change.
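
The rule and confidence-threshold steps above can be sketched in a few lines of Python. This is a minimal illustration, not Copilot Studio's API: the model labels, the `TaskFingerprint` fields, the 8,000-token cutoff, and the 0.7 threshold are all assumptions made up for the example, and it presumes you can obtain some confidence or grounding score for each response.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TaskFingerprint:
    task: str               # e.g. "analysis", "drafting", "formatting"
    token_budget: int       # expected tokens in plus out
    strict_grounding: bool  # policy requires refusals for ungrounded content

# Hypothetical model labels; substitute whatever your tenant exposes.
LIGHT_MODEL = "lightweight"
DEFAULT_MODEL = "default-openai"
ANALYSIS_MODEL = "claude-opus-4.1"

# Escalation order when a response comes back with low confidence.
ESCALATION = {LIGHT_MODEL: DEFAULT_MODEL, DEFAULT_MODEL: ANALYSIS_MODEL}

def route(fp: TaskFingerprint, high_budget: int = 8000) -> str:
    """Rule-based first pass: pick a model label for a task fingerprint."""
    if fp.task == "analysis" and fp.token_budget >= high_budget and fp.strict_grounding:
        return ANALYSIS_MODEL
    if fp.task == "formatting":
        return LIGHT_MODEL
    return DEFAULT_MODEL

def next_step(model: str, confidence: float, threshold: float = 0.7) -> str:
    """Keep the model if confidence is acceptable, otherwise escalate.

    Returns "tool-flow" when no stronger model is left, standing in for
    the deterministic fallback.
    """
    if confidence >= threshold:
        return model
    return ESCALATION.get(model, "tool-flow")
```

Keeping the rules in plain data and small functions like this makes each routing change easy to review and to roll back.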

Designing agents in Copilot Studio with multi-model segments

Copilot Studio lets you orchestrate multi-step agents that call tools, ground to data, and invoke different models per capability. A pattern that works well looks like this.

- Orchestrator. Use a capable model for tool selection and plan decomposition. Start with your default OpenAI model or test Claude Sonnet 4 if your tool graph is complex.

- Retrieval and grounding. Keep this deterministic. Use Microsoft search connectors, SharePoint retrieval, or a vector index. Apply grounding first, then send the composed prompt to your selected model.

- Analysis segment. Route to Claude Opus 4.1 for multi-source synthesis when quality matters more than speed.

- Drafting segment. Choose the model that best matches the tone and style your users prefer. Measure edit distance between the model's draft and the user's final version as your quality proxy.

- Fact checking and cite verification. Implement an automatic second pass that verifies each citation against retrieved sources and flags low-confidence claims for review.

- Formatting and export. Use a lightweight model for templating or a code function that renders to Word, PowerPoint, or HTML to reduce token spend.

Tip: Keep prompts modular. One prompt per segment with clear input and output contracts. This makes it easy to test the same segment across models without rewriting the entire agent.
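
One way to picture "one prompt per segment with clear input and output contracts" is the sketch below. It is an illustration in plain Python rather than anything Copilot Studio provides: the `Segment` class, the model labels, the prompt text, and the `call_model` callback are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass, replace
from string import Template

@dataclass(frozen=True)
class Segment:
    name: str
    model: str               # hypothetical model label
    prompt: Template         # one prompt per segment
    inputs: tuple[str, ...]  # state keys the segment requires
    output_key: str          # state key the segment writes

    def render(self, state: dict) -> str:
        """Fill the prompt, failing loudly if the input contract is not met."""
        missing = [key for key in self.inputs if key not in state]
        if missing:
            raise KeyError(f"{self.name} is missing inputs: {missing}")
        return self.prompt.substitute({key: state[key] for key in self.inputs})

def run(segments, state, call_model):
    """Run segments in order; call_model(model, prompt) is supplied by the caller."""
    state = dict(state)  # do not mutate the caller's dict
    for seg in segments:
        state[seg.output_key] = call_model(seg.model, seg.render(state))
    return state

ANALYSIS = Segment(
    "analysis", "claude-opus-4.1",
    Template("Compare the sources below and list key risks.\n$sources"),
    ("sources",), "analysis",
)
DRAFTING = Segment(
    "drafting", "default-model",
    Template("Write a brief from this analysis.\n$analysis"),
    ("analysis",), "draft",
)

# Testing the same segment on another model is a one-field change.
ANALYSIS_ON_DEFAULT = replace(ANALYSIS, model="default-model")
```

Because the prompt and contract stay fixed, any difference in scores between `ANALYSIS` and `ANALYSIS_ON_DEFAULT` can be attributed to the model rather than to a rewritten prompt.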

Cost control without losing quality

Cost governance is simple in principle and tricky in practice. Adopt these controls from day one.

- Token budgets by task. For each task fingerprint, set a soft and hard token cap. Log overruns and investigate.

- Graduated model tiers. Use a cheaper default for low-risk tasks. Escalate to Claude or your strongest model when the task is high value or the model signals low confidence.

- Early stopping and compression. Ask for outlines before full drafts. Summarize large source sets before synthesis. Use retrieval to narrow context rather than dumping entire documents.

- Reuse and cache. Cache intermediate analyses and allow reuse across users when the source set is unchanged and the content is not sensitive.

- Outcome-based cost metric. Do not chase cost per token. Track cost per accepted answer or cost per task completed with zero edits.
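
The token-budget and outcome-based metric ideas above reduce to very little code. The sketch below is a hedged illustration: the class and function names are invented for the example, and the figures at the bottom are made-up numbers chosen only to show the arithmetic, not measurements of any real model.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TokenBudget:
    soft_cap: int
    hard_cap: int

    def check(self, tokens_used: int) -> str:
        """Classify a run against the soft and hard caps for its task."""
        if tokens_used > self.hard_cap:
            return "block"
        if tokens_used > self.soft_cap:
            return "log_overrun"
        return "ok"

def cost_per_accepted_answer(runs) -> float:
    """runs: iterable of (cost, accepted) pairs; rejected runs still cost money."""
    runs = list(runs)
    accepted = sum(1 for _, ok in runs if ok)
    if accepted == 0:
        return float("inf")
    return sum(cost for cost, _ in runs) / accepted

# Made-up illustration, costs in cents: ten runs per model.
cheap = [(2, i < 2) for i in range(10)]   # 2 of 10 answers accepted
strong = [(6, i < 8) for i in range(10)]  # 8 of 10 answers accepted
cheap_cpa = cost_per_accepted_answer(cheap)    # 20 / 2
strong_cpa = cost_per_accepted_answer(strong)  # 60 / 8
```

In this invented case the model that is three times more expensive per run is still cheaper per accepted answer, which is the reason to track the outcome metric instead of raw token cost.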

Safety and compliance in a multi-model world

When you use more than one model, safety controls must be layered and consistent.

- Pre-processing. Classify inputs by sensitivity. Redact PII or regulated data before sending to external models. Block categories that are not allowed to leave Microsoft-controlled boundaries.

- Policy-based routing. Route highly sensitive tasks to models that meet your data residency or contractual requirements. Keep a Microsoft-only path when required.

- Output moderation. Apply a uniform content policy and output filter after every model response. Normalize outputs to the same policy language.

- Grounding checks. Require citations for claims and verify those citations against your trusted data before the answer reaches the user.

- Audit and forensics. Log model choice, prompts, tool calls, retrieved sources, and user edits. Store enough detail to reconstruct incidents without storing unnecessary content.
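
As a rough sketch of the pre-processing and policy-based routing layers, the Python below redacts two PII patterns and keeps blocked categories on an internal path. Everything here is an assumption for illustration: the regexes are deliberately simple and would miss plenty of real-world PII, the `restricted` label and the path names are hypothetical, and a production setup would rely on a proper classifier or DLP service.

```python
import re

# Illustrative patterns only; not a substitute for a real DLP service.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Hypothetical sensitivity labels that must never leave the boundary.
BLOCKED_LABELS = {"restricted"}

def redact(text: str):
    """Replace each matched pattern with a placeholder; report what was found."""
    found = []
    for name, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[{name}]", text)
        if count:
            found.append(name)
    return text, found

def prepare(text: str, label: str):
    """Return (path, payload) for a request with a given sensitivity label."""
    if label in BLOCKED_LABELS:
        return "microsoft-only", text  # stays in boundary, no redaction needed
    clean, _ = redact(text)
    return "external", clean           # redacted copy may go to the external model
```

For example, `prepare("Mail jane.doe@contoso.com", "general")` yields an external payload with the address replaced by `[EMAIL]`, while anything labeled `restricted` is never prepared for an external model at all.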

Microsoft's guidance is explicit that enabling Anthropic sends data to a processor outside Microsoft audit and product terms. Review the linked document and align your controls and notices to that reality before you switch it on.

When to reach for Claude versus your default model

Try these decision heuristics during pilot:

- Choose Claude for long-form synthesis, complex multi-source analysis, and policy-grounded summarization with structured outputs.

- Choose your default OpenAI model for quick interactive drafting, chatty ideation, and tasks where latency and style are the priority.

- Mix them when the job has distinct phases. Example: analysis on Claude, drafting on your default model, formatting on a lightweight model.

- Keep a clear override. Let expert users pick the model per session in Researcher when they know the task profile better than your router.

Evaluation that survives model churn

Models update often. Build an evaluation harness that is boring and repeatable.

- Golden sets. Maintain a versioned set of tasks with gold answers and scoring rubrics. Include short tasks, long tasks, noisy inputs, and edge cases.

- Multi-metric scoring. Measure accuracy, citation correctness, hallucination rate, latency, and user edit distance. Weight metrics by business priority.

- Human-in-the-loop sampling. Review a fixed percentage of answers each week. Rotate reviewers across functions to avoid blind spots.

- Regression gates. No routing change ships unless it beats the incumbent on the primary metric without a material safety regression.
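
A harness along these lines can stay small. The sketch below shows weighted multi-metric scoring and a regression gate in Python. The weights, metric names, and tolerance are assumptions to replace with your own priorities, and `edit_similarity` uses the standard library's `difflib` similarity ratio as a stand-in for edit distance (higher means fewer user edits), not a true Levenshtein distance.

```python
from difflib import SequenceMatcher

# Illustrative weights; set these from your own business priorities.
WEIGHTS = {"accuracy": 0.5, "citation_correctness": 0.3, "edit_similarity": 0.2}

def edit_similarity(model_draft: str, user_final: str) -> float:
    """1.0 means the user kept the draft unchanged; lower means heavier edits."""
    return SequenceMatcher(None, model_draft, user_final).ratio()

def weighted_score(metrics: dict, weights: dict = WEIGHTS) -> float:
    """Combine per-metric scores (each in 0..1) into one number."""
    return sum(weight * metrics[name] for name, weight in weights.items())

def passes_gate(incumbent: dict, candidate: dict,
                primary: str = "accuracy",
                safety: str = "safety_incident_rate",
                tolerance: float = 0.0) -> bool:
    """Ship only if the candidate beats the incumbent on the primary metric
    and its safety incident rate is no worse than incumbent + tolerance."""
    beats_primary = candidate[primary] > incumbent[primary]
    no_safety_regression = candidate[safety] <= incumbent[safety] + tolerance
    return beats_primary and no_safety_regression
```

Running `passes_gate` against the same versioned golden set before every routing change is what keeps the harness boring and repeatable.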

Operating model and roles

- Product owner. Owns the task taxonomy, success metrics, and rollout plan.

- ML or prompt engineer. Designs prompts, tools, and routing rules. Maintains the model matrix.

- Data and security lead. Owns data classification, DLP, and redaction pipelines. Signs off on external processing enablement.

- Observability lead. Builds dashboards for quality, cost, and safety. Runs weekly scorecard reviews.

- Change manager. Communicates model changes to users and updates help content and disclosures.

A sample multi-model agent you can build this month

Agent: Market and risk research partner for your strategy team.

- Inputs. A list of target companies, your internal analyst notes, and a set of PDF reports from third-party providers.

- Plan.

  - Retrieval. Pull the latest filings, news summaries, and internal memos.

  - Analysis. Route to Claude Opus 4.1 for a structured comparison of market position, pricing power, moat, and key risks. Require citations to internal and external sources.

  - Drafting. Route to your default model to craft a crisp two-page brief per company in your house style.

  - Fact check. Auto-verify each citation and flag low-confidence claims for human review.

  - Export. Render briefs to Word and PowerPoint with a standard template and a one-page executive summary.

- Metrics. Acceptance rate without edits, citation correctness, average time to produce a brief, and cost per accepted brief.
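
The fact-check step can start as a simple exact-match pass. The Python sketch below assumes, purely for illustration, that the analysis segment returns each claim with a `source_id` and a verbatim `quote`, and that retrieved sources are available as a dict of plain text. Those field names are hypothetical, and paraphrased citations would need fuzzier matching than this.

```python
def _normalize(text: str) -> str:
    """Lowercase and collapse whitespace so line breaks do not cause misses."""
    return " ".join(text.lower().split())

def verify_citations(claims, sources):
    """Return the claims whose quoted passage is not found in the cited source.

    claims:  list of dicts with "text", "source_id", and "quote" keys
    sources: dict mapping source_id to the retrieved source text
    """
    flagged = []
    for claim in claims:
        source_text = _normalize(sources.get(claim["source_id"], ""))
        quote = _normalize(claim["quote"])
        if not quote or quote not in source_text:
            flagged.append(claim)  # send to human review
    return flagged
```

The share of claims that come back unflagged can feed the citation-correctness metric directly.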

Rollout plan that respects risk

- Week 1. Enable Anthropic in a sandbox tenant. Build two or three tasks in Copilot Studio that use Claude in one segment only. Stand up dashboards.

- Week 2. Pilot with a small user group in Researcher. Allow model selection per session. Collect edit distance and user ratings.

- Week 3. Expand to one production workflow that is low sensitivity and high value. Turn on canary shadowing for a more sensitive workflow.

- Week 4. Review metrics and safety incidents. Lock in routing rules that show stable wins. Publish a short internal guide that explains when to choose Claude.
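
For the canary shadowing in Week 3, a deterministic hash bucket is one common way to mirror a fixed percentage of tasks while respecting department boundaries. The sketch below is an assumption-laden illustration: the function name, the salt, and the department allowlist are invented for the example and are not a Copilot Studio feature.

```python
import hashlib

def in_shadow_sample(task_id: str, department: str, percent: float,
                     allowed_departments: set, salt: str = "shadow-v1") -> bool:
    """Decide whether a task is mirrored to the alternative model.

    The same task_id always gets the same answer, so reruns are comparable.
    Changing the salt reshuffles which tasks fall in the sample.
    """
    if department not in allowed_departments:
        return False  # data policy: this department is never mirrored
    digest = hashlib.sha256(f"{salt}:{task_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 10_000  # 0..9999
    return bucket < percent * 100  # percent=5 keeps buckets 0..499
```

Shadow responses should be logged for comparison only and never shown to the user, which is what keeps the test free of user exposure.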

Frequently asked builder questions

- Do I need to retrain users? A short primer helps. Teach how to pick the model in Researcher, how to start with an outline, and how to request citations.

- Will costs spike? They can if you move long documents without retrieval or if you always escalate to the strongest model. Set token budgets and use outlines first.

- What about data residency? Treat Anthropic as a separate processor. Use pre-processing controls for sensitive content and keep a Microsoft-only path for restricted data.

- How do I know routing works? Ship with dashboards and weekly reviews. If some tasks regress, roll back the rule and investigate.

Related reading from AgentsDB

If you are mapping the new boundaries of agent runtime and orchestration, these pieces provide helpful context from the rest of our blog.

- Learn how browser-native agents change the runtime with Claude for Chrome as the agent runtime.

- See how real-world transactions reshape agent evaluation in AP2 unlocks real commerce for agents.

- Understand how enterprise platforms are converging in GPT-5 arrives inside Agent Bricks.

The bottom line

Adding Claude to Microsoft 365 Copilot and Copilot Studio turns model choice into a daily design decision. It lets you route work to the model that fits the task and gives you new room to shape quality, latency, and cost. It also raises the bar for governance. You must treat each external model as a distinct processor with clear controls, auditing, and user transparency.

If you start with a simple routing blueprint, invest in evaluation and observability, and build a clear policy for data handling, your organization can get the upside of multi-model Copilot without surprises. The result is a vendor-neutral agent stack that works the way your business works, not the way a single model works.