Inside Algolia Agent Studio: From Demo to Enterprise Agents

Most AI agents shine in a demo, then crack in production. This review explains how Algolia Agent Studio tackles retrieval, orchestration, and observability so teams can ship reliable assistants within a focused 90-day plan.

Why this review matters now

If your team has ever wowed leadership with an AI agent demo, only to watch it stumble once real users, messy data, and incident tickets arrive, you know the gap. Prototypes look smart in a sandbox. Production demands correctness, speed, and governance you can prove. That gap usually opens in three places: brittle retrieval, orchestration that buckles as tools grow, and an observability hole that makes debugging guesswork.

In September 2025, Algolia introduced Agent Studio in public beta, pitching it as a retrieval-native platform that closes those gaps with a single runtime. The promise is straightforward: keep your data where it already lives, choose the model you prefer, and gain built-in tracing and experimentation so improvements are measurable. Algolia's official public beta announcement gives further context.

This review takes a product manager and platform engineer view. We explain what Agent Studio is, where it helps, where DIY can still win, and how to evaluate it in 90 days without risking long-term lock-in.

What Agent Studio is in plain language

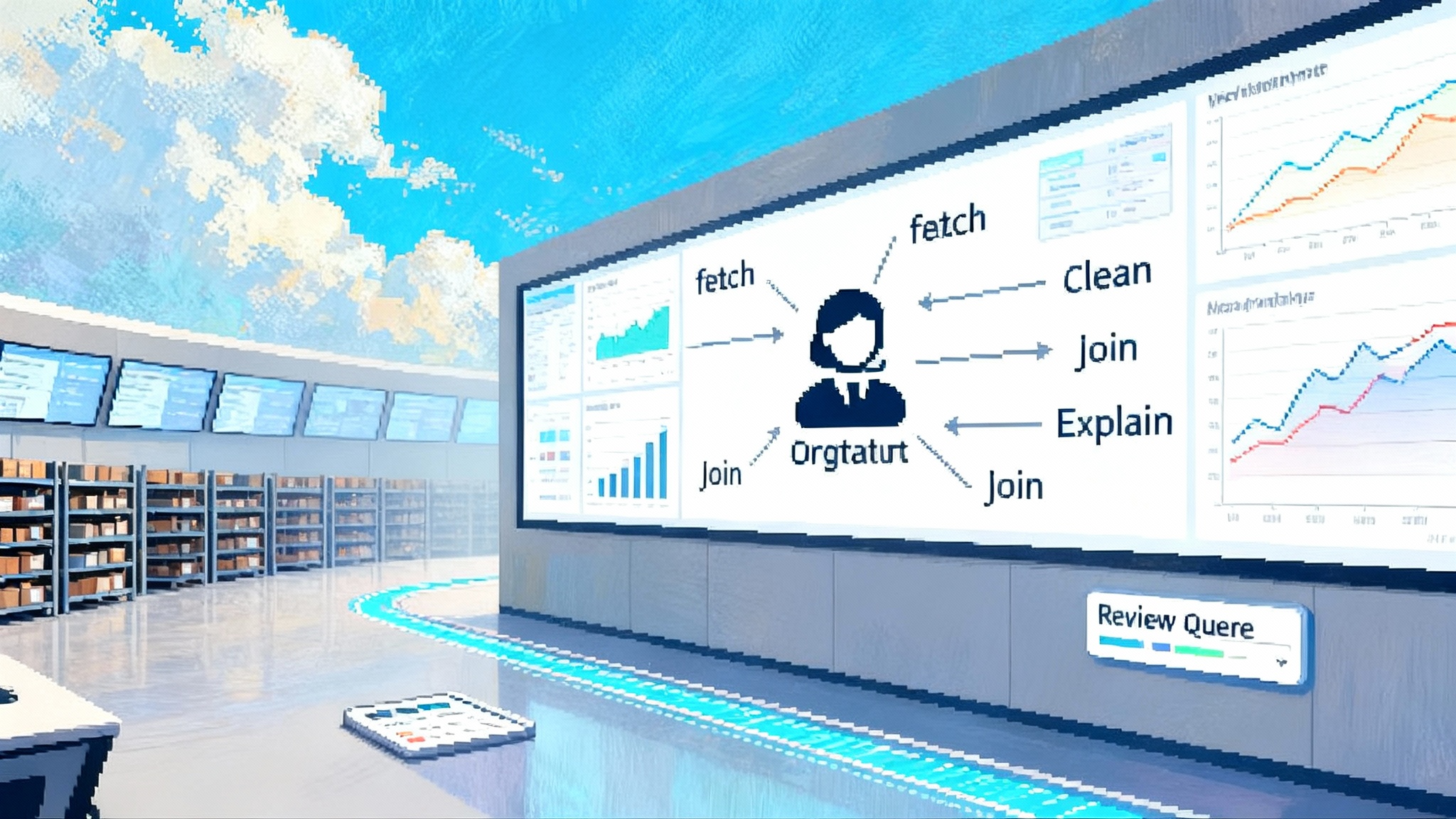

Agent Studio bundles three hard problems into a single opinionated stack:

- Retrieval you can trust. Hybrid keyword plus vector search aligned with your existing indices, rules, and personalization. Correctness starts with what the agent reads.

- Model-agnostic orchestration. A managed runtime chooses tools, assembles context, and calls your selected LLM without binding your app to a single provider.

- Built-in observability and experimentation. Every turn is traced. Tokens, latency, and tool outcomes are visible. A/B testing is first-class so you can prove progress.

In practice, you configure an agent in a dashboard, define its purpose, tools, and model provider, set regional controls, and ship. The runtime handles tool selection, executes calls with timeouts and retries, collects standardized traces, and surfaces metrics you can act on.

The retrieval-native difference

Most agent stacks start with a language model and bolt on retrieval. Agent Studio flips the order. That matters because model eloquence cannot fix bad context. If you index well, rank precisely, and keep freshness high, your agent feels reliable. If you treat retrieval as an afterthought, you spend your quarters chasing hallucinations.

Three design points stand out:

- Hybrid retrieval by default. Keyword matching gives you precision, filters, and exact terms. Vector semantics capture intent and synonyms when users do not type canonical names. Algolia merges the signals so you get broad recall with control. If you want to understand the mechanics, Algolia’s NeuralSearch overview explains combined vector and keyword search.

- Latency that holds under load. Search needs to return in milliseconds, not seconds, so the agent can afford multiple retrieval calls per turn without blowing your p95.

- Personalization and rules you already own. If your web or app search tunes relevance with rules or events, the agent can inherit that work rather than forcing a parallel RAG pipeline.

For teams whose proof of concept works on happy paths but breaks in edge cases, retrieval-centric design is the practical fix.
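To make the merging step concrete, here is a minimal Python sketch of reciprocal rank fusion, one common way to combine a keyword ranking with a vector ranking. It is an illustration only: this review does not document how Algolia merges the two signals internally, and the function name, the constant `k=60`, and the sample document IDs are all assumptions.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Merge several ranked lists of document IDs into one ranked list.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1. A higher fused score sorts first.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Sort by score descending; break ties by ID so output is deterministic.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


# Hypothetical results from a keyword query and a vector query.
keyword_hits = ["sku-123", "sku-456", "sku-789"]
vector_hits = ["sku-456", "sku-999", "sku-123"]
fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
```

Documents that rank well in both lists rise to the top, while documents found by only one retriever still appear lower down. That is the "broad recall with control" behavior described above.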

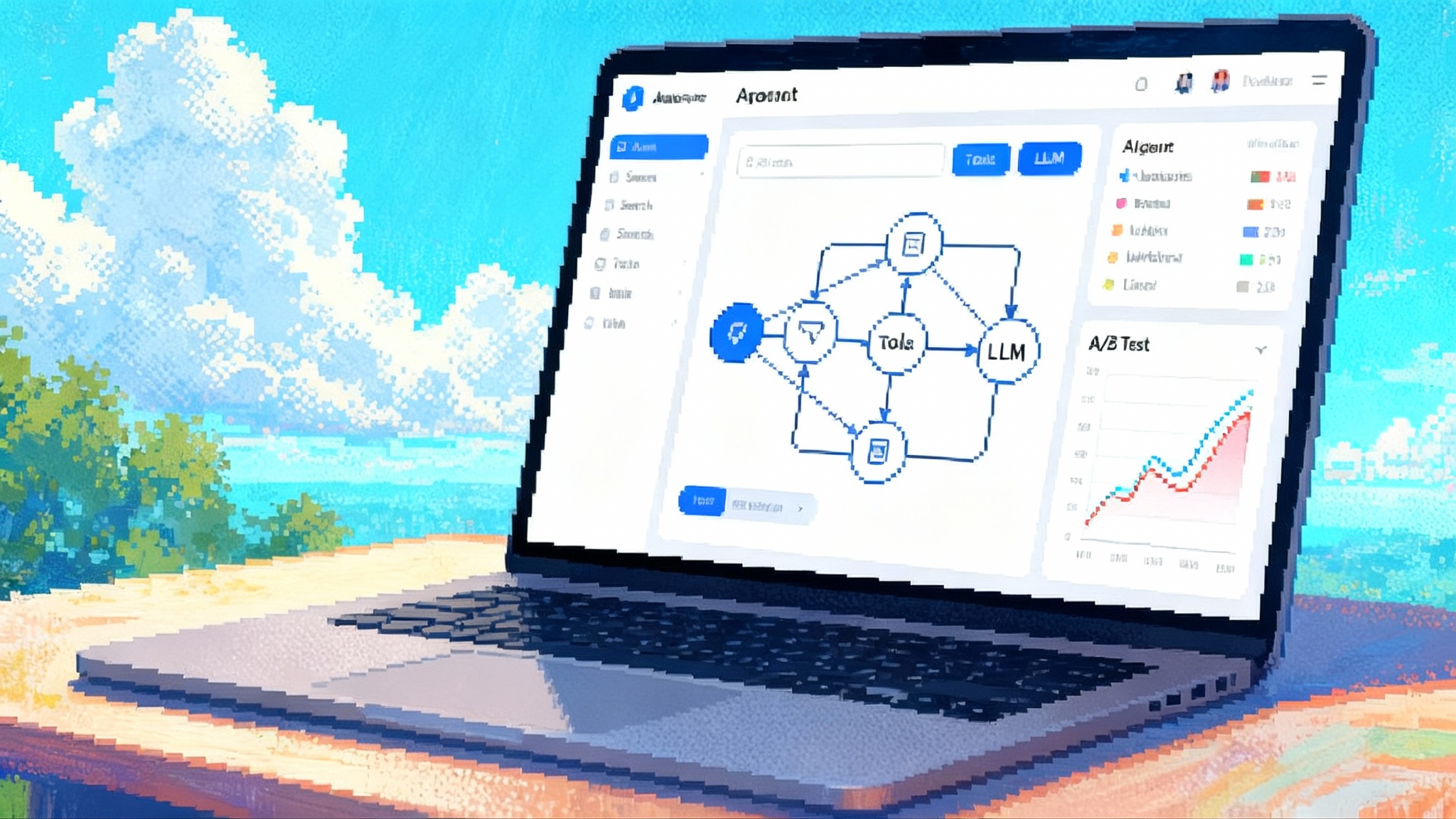

Orchestration without lock-in

Agent Studio is explicit about separation of concerns. Retrieval and policy controls live with Algolia. Model choice is up to you. Start with a popular hosted model, set a backup, and change providers as quality or costs evolve. The agent delegates generation while keeping tool selection, guardrails, and context assembly consistent. That decoupling reduces procurement friction and makes head-to-head model tests feasible without painful refactors.

Observability and evaluation built in

Prompting is not a one-and-done craft. Shipping a reliable agent depends on a tight feedback loop: trace, diagnose, fix, and measure. Agent Studio generates standardized traces for requests and tool calls, tracks tokens and latency, and supports variant testing. When an answer goes wrong, you can see the retrieved context, the tool outcomes, and the prompt that ran. When you improve retrieval or adjust a policy, you can measure whether exact answer rate or conversion truly moved.

If your current stack has tracing as a backlog item or scattered logs in three systems, this is the selling point to scrutinize first.

How it compares to a DIY stack

Open-source toolkits like LangChain or LlamaIndex paired with a vector database are powerful and flexible. You can assemble best-in-class components, keep full control, and avoid a single vendor bill. The trade-off is engineering lift and operational sprawl. Here is a pragmatic side-by-side comparison.

1) Retrieval

- DIY. You embed your corpus, pick a vector store, design hybrid search if you want keywords too, and implement re-ranking. You own synonyms, drifting taxonomies, filters, and the glue code that merges keyword hits with vector neighbors. You must tune for both relevance and latency.

- Agent Studio. Hybrid retrieval and ranking are first-class. Your indices, analytics, rules, and personalization flow into the agent. You still control your data, but you avoid building a parallel retrieval system just for agents.

2) Orchestration and tool calling

- DIY. You wire function calls, manage auth and rate limits, and handle failure modes across a growing tool surface. Adding a second model often duplicates adapters and logs.

- Agent Studio. Tools are defined with permissions and executed by a managed runtime that already knows how to pass context, enforce timeouts, and capture traces. Switching providers is a configuration step.

3) Observability and evaluation

- DIY. You pick or build tracing, log storage, dashboards, and an evaluation harness. This is tractable but time-consuming. Under load, missing traces multiply on-call pain.

- Agent Studio. Traces, metrics, and A/B tests live next to the runtime. The same system that executes the agent tells you what happened, where it slowed down, and what moved the needle.

4) Governance and safety

- DIY. You implement guardrails in prompts and code, manage secrets, build role-based access and redaction, and document data flows for compliance.

- Agent Studio. Policy controls are part of the platform. You configure tool permissions, define which data an agent can reach, and set provider regions. You still need a good policy model, but you do not build the scaffolding from scratch.

5) Cost and speed

- DIY. Spend is flexible but spiky. You pay for vector DB, LLM, and observability separately. The largest line item is engineering time, and it does not shrink as usage grows.

- Agent Studio. Pricing maps to search usage plus your LLM bill. If you already invest in Algolia search, standing up agents may have a small marginal cost compared to building a parallel RAG stack.

None of this means the open-source path is wrong. Many teams succeed with it, especially for research or highly bespoke workflows. The question is not ideology. It is speed to reliable value.

A 30-60-90 day evaluation plan

You want a go/no-go decision in 90 days with evidence, not vibes. Use this plan to create proof while preserving future choices.

Days 0 to 30 — make a thin slice real

Goals

- One production-like use case in staging with real data. Two or three tools wired. Clear success metrics.

Work plan

- Pick a high-leverage case: support answerer for known questions, in-product guide, or a commerce assistant for structured catalogs. Favor tasks where fresh retrieval is the primary value.

- Run a data audit: inventory indices, freshness SLAs, PII fields, rules, and personalization signals. Define public, internal, and restricted data zones.

- Plan retrieval: confirm which indices the agent will query. For unindexed sources, decide whether to index with Algolia now or bridge through a tool that fetches the source and writes to a temporary index.

- Configure the agent: write a crisp purpose prompt, list allowed tools, set provider and region. Enforce per-tool timeouts and retries. Define clear failure fallbacks.

- Set UX budgets: document p50 and p95 latency per turn, token ceilings, and user-facing error states.

- Build an offline set: sample 100 to 300 real questions, label expected outcomes, and mark sensitive cases. This guards you from chasing anecdotes.

- Establish baselines: exact answer rate, grounded citation rate if relevant, time to first token, full-turn latency, tool error rate, and human handoff rate.
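The baseline step can be sketched as a small report over the labeled offline set. This is a minimal illustration under stated assumptions: the `TurnResult` record and its fields are hypothetical, exact match is simplified to a case-insensitive string comparison, and percentiles use the nearest-rank method. Real traces would supply these values.

```python
import math
from dataclasses import dataclass


@dataclass
class TurnResult:
    """One evaluated question from the offline set (hypothetical shape)."""
    expected: str
    answer: str
    latency_ms: float
    tool_calls: int
    tool_errors: int
    handed_off: bool


def percentile(values, pct):
    """Nearest-rank percentile: smallest value with pct% of data at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def is_exact(result):
    # Simplified matching; production scoring usually needs a richer comparison.
    return result.answer.strip().lower() == result.expected.strip().lower()


def baseline_report(results):
    """Aggregate the baseline metrics named in the work plan."""
    n = len(results)
    latencies = [r.latency_ms for r in results]
    total_calls = sum(r.tool_calls for r in results)
    total_errors = sum(r.tool_errors for r in results)
    return {
        "exact_answer_rate": sum(is_exact(r) for r in results) / n,
        "p50_latency_ms": percentile(latencies, 50),
        "p95_latency_ms": percentile(latencies, 95),
        "tool_error_rate": total_errors / total_calls if total_calls else 0.0,
        "handoff_rate": sum(r.handed_off for r in results) / n,
    }
```

Running the same report after every retrieval or prompt change gives you a like-for-like comparison against the day-30 baseline.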

Deliverables

- A working thin slice with traces and dashboards, plus a baseline report on the offline set and a tiny live pilot.

Days 31 to 60 — deepen capability and govern it

Goals

- Expand the corpus and tools, add guardrails, and introduce memory. Begin controlled experiments.

Work plan

- Broaden data coverage: bring in two more sources. For semi-structured content, index fields that support both keyword and vector matching. For unstructured docs, define chunking and metadata that reflect business concepts.

- Implement policy-based governance: model who can ask what and which tools are callable. Use user roles, entitlements, and geographic restrictions. Add a suppression list for sensitive terms and a rule to escalate to a human or classic search.

- Introduce memory MVP: store short summaries of prior turns or user preferences in an index keyed by user or session. Start with opt-in storage and a short time to live. Retrieve memory via a tool call at session start.

- Launch A/B testing: define one variant that changes a retrieval parameter or a prompt instruction. Target a single KPI such as exact answer rate or conversion lift. Keep scope tight for clean attribution.

- Improve reliability: set stop-loss alarms if error rate or latency breaches thresholds. Write simple runbooks for on-call engineers with common failures and recoveries.
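The memory MVP in the work plan above comes down to three rules: store only for sessions that opted in, expire entries after a short time to live, and read once at session start. The sketch below shows those rules with an in-memory dictionary standing in for an index. The class and method names are hypothetical and no Algolia API is used.

```python
import time


class SessionMemory:
    """Opt-in, TTL-bounded memory keyed by session (illustrative only)."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock          # injectable so expiry can be tested
        self._opted_in = set()
        self._store = {}            # session_id -> (expires_at, summary)

    def opt_in(self, session_id):
        self._opted_in.add(session_id)

    def remember(self, session_id, summary):
        """Store a short summary; refuse if the session has not opted in."""
        if session_id not in self._opted_in:
            return False
        self._store[session_id] = (self.clock() + self.ttl_seconds, summary)
        return True

    def recall(self, session_id):
        """Return the stored summary, or None if it is missing or expired."""
        entry = self._store.get(session_id)
        if entry is None:
            return None
        expires_at, summary = entry
        if self.clock() >= expires_at:
            del self._store[session_id]  # enforce retention on read
            return None
        return summary
```

Keeping retention logic in one small module makes it easier to show compliance reviewers exactly where opt-in and expiry are enforced.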

Deliverables

- A governance spec with enforcement points, a minimal memory module with retention rules, and your first A/B test with power analysis.
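For the power analysis, a rough sample size per variant can be estimated with the standard normal approximation for comparing two proportions:

$$n \approx \frac{(z_{1-\alpha/2} + z_{1-\beta})^2 \,\bigl[p_1(1-p_1) + p_2(1-p_2)\bigr]}{(p_2 - p_1)^2}$$

The Python sketch below applies that formula. The function name and the example rates are assumptions for illustration, and the approximation is a planning aid rather than a substitute for a proper experiment design.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(p_control, p_variant, alpha=0.05, power=0.8):
    """Approximate sessions needed per arm to detect p_control -> p_variant.

    Uses a two-sided test at significance level alpha with the given power.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    effect = p_variant - p_control
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical goal: lift exact answer rate from 70% to 75%.
needed = sample_size_per_variant(0.70, 0.75)
```

With these example rates the estimate is about 1,250 sessions per variant. Smaller expected lifts need far more traffic, which is one reason to keep each test scoped to a single KPI.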

Days 61 to 90 — harden and decide

Goals

- Prove reliability at realistic load, widen experiments, and make a decision with confidence.

Work plan

- Load and chaos: drive traffic at expected peak plus a safety margin. Inject tool failures, timeouts, and provider rate limiting to confirm graceful degradation.

- Multi-model readiness: configure a second model and run a narrow head-to-head on the offline set plus a small live slice. Compare accuracy, latency, and cost. Validate before shifting traffic.

- Deepen evaluation: add longitudinal checks for frequently changing content. Track wrong answer categories and confirm that retrieval improvements reduce them.

- Security and compliance sign-off: document provider regions, data flows, retention, and opt-out paths. Confirm trace redaction for PII.

- Business decision: weigh engineering time saved, reliability results, and cost profile against the DIY alternative. Decide to ship widely, extend the trial, or pivot back.
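One way to confirm trace redaction during sign-off is to run sample traces through a redaction pass and inspect what comes out. The sketch below is a minimal illustration: the set of sensitive keys and the trace shape are hypothetical, and matching by key name alone will not catch PII embedded in free text.

```python
# Hypothetical list of field names treated as sensitive.
SENSITIVE_KEYS = {"email", "phone", "ssn"}
MASK = "[REDACTED]"


def redact(value):
    """Return a copy of a nested trace with sensitive fields masked."""
    if isinstance(value, dict):
        return {
            key: MASK if key.lower() in SENSITIVE_KEYS else redact(item)
            for key, item in value.items()
        }
    if isinstance(value, list):
        return [redact(item) for item in value]
    return value  # scalars pass through unchanged


# Hypothetical trace for one agent turn.
trace = {
    "turn": 3,
    "user": {"id": "u-42", "Email": "ana@example.com"},
    "tool_calls": [{"name": "lookup_order", "args": {"phone": "555-0100"}}],
}
safe_trace = redact(trace)
```

Because the function returns a copy, the original trace stays available to code paths that are entitled to see it, while logs and dashboards receive only the masked version.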

Deliverables

- A decision memo with evidence and a cutover plan that includes rollback steps and first-week success thresholds.

A first integration blueprint

Hand this to an engineer and a product manager to get moving without guesswork.

1) Provision and configure

- Connect your app to the Agent Studio dashboard. Define the agent purpose, allowed tools, and chosen provider. Set regional options to match data residency requirements.

2) Index and instrument

- Confirm indices are healthy and include the fields users actually ask about. Send click and conversion signals so personalization is available. Tag documents with roles or entitlements where access varies by user type.

3) Wire the UI

- Start with a simple chat panel or an inline answer component. Present clear error states and offer a quick handoff to traditional search or a human when confidence is low.

4) Guardrails

- Enforce timeouts and retries per tool. Add a suppression dictionary and escalation rules. Redact sensitive fields from traces by default.
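If you also wrap tools in your own code, per-tool timeouts and retries might look like the sketch below. It is an assumption-laden illustration, not Agent Studio's runtime: `call_tool`, `ToolFailed`, and the default values are hypothetical. The timeout only stops waiting for the result. The worker thread keeps running until the tool returns, so the tools themselves should also set their own network timeouts.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from concurrent.futures import TimeoutError as FutureTimeout

# Shared pool so a timed-out call does not block the caller on cleanup.
_POOL = ThreadPoolExecutor(max_workers=4)


class ToolFailed(Exception):
    """Raised when a tool keeps failing after all retries."""


def call_tool(fn, *args, timeout_s=2.0, retries=2, backoff_s=0.1,
              sleep=time.sleep):
    """Run fn(*args) with a per-attempt timeout and exponential backoff."""
    last_error = None
    for attempt in range(retries + 1):
        future = _POOL.submit(fn, *args)
        try:
            return future.result(timeout=timeout_s)
        except FutureTimeout:
            last_error = f"timeout after {timeout_s}s"
            future.cancel()  # only effective if the call has not started
        except Exception as exc:  # tool raised; record it and retry
            last_error = repr(exc)
        if attempt < retries:
            sleep(backoff_s * 2 ** attempt)
    raise ToolFailed(f"gave up after {retries + 1} attempts: {last_error}")
```

Catching `ToolFailed` at the call site is where the fallback belongs, for example a handoff to traditional search.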

5) Evaluate and iterate

- Run the offline set daily. Keep one live A/B test running at all times, even if tiny. Let metrics, not anecdotes, drive prompt and retrieval changes. Maintain a changelog of every tweak and its measured impact.

Avoid locking into a single LLM

Agent Studio aims to be model-agnostic, but your integration choices determine real portability. Four patterns help:

- Version prompts outside code. Use platform prompt management so you can swap models without a redeploy.

- Standardize output schemas. If your UI expects JSON with specific fields, validate and repair outputs in the runtime before they reach the client.

- Separate retrieval from generation. Treat retrieved context as a structured artifact you can log, inspect, and replay. This makes head-to-head evaluation straightforward.

- Test for regressions. Use your offline set and a small live slice before migrating traffic to a new provider.
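The "standardize output schemas" pattern can be sketched as a validate-and-repair step between the model and the client. The schema, field names, and function below are hypothetical. The repair strategy is deliberately conservative: extract the outermost JSON object, then replace missing or wrongly typed fields with empty defaults.

```python
import json

# Hypothetical contract the UI expects: field name -> required Python type.
SCHEMA = {"answer": str, "citations": list}


def validate_and_repair(raw):
    """Return (payload, problems); payload always matches SCHEMA."""
    problems = []
    data = {}
    # Models often wrap JSON in prose, so keep only the outermost object.
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        problems.append("unparseable")
    else:
        try:
            data = json.loads(raw[start:end + 1])
        except json.JSONDecodeError:
            problems.append("unparseable")
    payload = {}
    for field, expected_type in SCHEMA.items():
        value = data.get(field)
        if isinstance(value, expected_type):
            payload[field] = value
        else:
            payload[field] = expected_type()  # empty default: "" or []
            if "unparseable" not in problems:
                problems.append(field)
    return payload, problems
```

Logging the `problems` list per provider gives you a cheap portability signal: a candidate model that needs frequent repairs will show up before you shift traffic to it.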

For a wider look at how agentic workflows are reshaping analytics and product experiences, see our related pieces on agentic BI and on the role of AI reps in product demos. If you are exploring distribution, our piece on the rise of AI marketplaces offers useful perspective.

What to watch during a pilot

During your first 90 days, four risk areas deserve extra scrutiny.

- Freshness and drift. Retrieval quality decays as content changes. Track freshness by source and build alerts for stale indices. Add longitudinal checks for volatile pages like pricing or policy.

- Tool reliability. One flaky tool can sink your p95. Add circuit breakers, idempotency guards, and fallbacks per tool. Trace every failure with a reason code that shows up in dashboards.

- Policy gaps. Over-permissive tools or data access often slip through early pilots. Start restrictive, expand carefully, and log denied calls to find legitimate needs.

- Cost surprises. Token usage and tool fan-out can creep. Set per-turn token ceilings and default to smaller models for non-critical steps. Use cached results for predictable lookups.
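For the tool reliability risk, a circuit breaker with a fallback and a reason code can be sketched in a few lines. All names, thresholds, and reason codes here are hypothetical, and a managed runtime may already provide equivalent behavior. The sketch applies to tools you host yourself.

```python
import time


class CircuitBreaker:
    """Opens after repeated failures, then allows a trial call after a pause."""

    def __init__(self, failure_threshold=3, reset_after_s=30.0,
                 clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after_s = reset_after_s
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def allow(self):
        if self.opened_at is None:
            return True
        # Half-open: permit one trial call once the cool-down has elapsed.
        return self.clock() - self.opened_at >= self.reset_after_s

    def record_success(self):
        self.failures = 0
        self.opened_at = None

    def record_failure(self):
        self.failures += 1
        if self.failures >= self.failure_threshold:
            self.opened_at = self.clock()


def guarded_call(breaker, fn, fallback):
    """Return (result, reason_code) so dashboards can count each outcome."""
    if not breaker.allow():
        return fallback, "circuit_open"
    try:
        result = fn()
    except Exception:
        breaker.record_failure()
        return fallback, "tool_error"
    breaker.record_success()
    return result, "ok"
```

While the circuit is open, the flaky tool is skipped entirely, so its latency stops counting against your p95 and the reason code makes the degradation visible.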

Where Agent Studio fits best

You will likely see the most benefit if:

- Your team already uses Algolia search and wants to extend that investment to agents.

- Retrieval quality is the bottleneck in your proof of concept, and hybrid search would reduce wrong answers.

- You need integrated observability to move from guesswork to measured improvements.

- Procurement and compliance prefer model choice with regional controls.

DIY may remain a strong choice if:

- You have a dedicated platform team and want full control across every layer.

- Your retrieval needs are minimal or highly bespoke, with little overlap to a unified index.

- You aim to run entirely on your own infrastructure with no managed services.

In practice, a hybrid approach is common. Many teams keep an internal DIY stack for research and niche workflows while using a managed platform for mainstream, user-facing features that must scale.

The bottom line

Agent Studio packages the messy parts of production agents into a single runtime that respects model choice and existing search investments. Hybrid retrieval grounds answers in the right data. Managed orchestration trims the cost of stitching tools together. Built-in tracing and A/B testing turn gut feelings into evidence. Compared with a pure open-source stack, you trade some flexibility for speed and lower operational risk. With a focused 30-60-90 evaluation, you can learn quickly whether that trade pays off for your product and your team.