The New AI Arms Race Is Bandwidth: Memory, Packaging, Fiber

The next leap in AI will be won by those who control memory bandwidth, advanced packaging, and fiber. As recall and I/O become the bottleneck, power shifts to suppliers of high bandwidth memory (HBM), advanced packaging, and networks. Here is what changes and why it matters.

The week bandwidth became the story

In late September and early October 2025, the center of gravity in artificial intelligence shifted from model size to the movement of data. On September 22, 2025, OpenAI and Nvidia announced a plan to deploy at least 10 gigawatts of Nvidia systems, a scale that turns the phrase AI factory into a literal program of record. On October 1, 2025, Reuters reported that Samsung and SK Hynix will supply memory for OpenAI’s proposed Stargate complex. Those two announcements describe a simple but consequential pivot. The next frontier is not parameter count. It is memory bandwidth, advanced packaging, and fiber.

This change is intuitive to anyone who has tried to run serious agents at scale. You can expand a model’s parameters, but if it cannot retrieve, move, and combine information at speed, it stalls. The drama is no longer in how many neurons a model simulates. It is in how quickly the right facts reach those neurons and how fast results get back out to other machines, users, and tools. In practice, intelligence is now bottlenecked by recall and I/O.

Why model size is no longer the frontier

The industry learned to grow models by feeding them data and capital. That era continues, but the payoff curve is bending. Adding parameters without fixing memory and interconnect constraints is like installing a bigger engine in a car whose fuel line is a straw. The engine has potential. The acceleration does not arrive.

Modern training and inference hammer three limits at once:

- The rate of recall from fast memory near the chip

- The ability to coordinate many accelerators through low latency links

- The speed of moving context, embeddings, and results across racks, rooms, and regions

When those three are tight, bigger models wait on memory and networking. You can feel this every day. Long-context chat slows when the system must scan, shard, and fetch. Multimodal agents pause when large assets traverse saturated links. Even well-tuned inference clusters sit idle while waiting for data to arrive.
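A back-of-the-envelope roofline calculation shows why bigger models wait on memory. The sketch below assumes that during token-by-token decoding every step streams all model weights from HBM once, ignores KV-cache traffic and any overlap between phases, and uses hypothetical round numbers (a 100-billion-parameter model stored at 2 bytes per parameter, 4 TB/s of HBM bandwidth, 1,000 TFLOP/s of peak compute) rather than figures for any specific product.

```python
def ridge_point(peak_flops_per_s: float, mem_bytes_per_s: float) -> float:
    """Roofline ridge point: FLOPs per byte needed before compute, not memory, becomes the limit."""
    return peak_flops_per_s / mem_bytes_per_s


def decode_intensity(batch: int, bytes_per_param: float) -> float:
    """Approximate FLOPs per byte of weight traffic during decoding.

    Each generated token costs about 2 FLOPs (a multiply and an add) per parameter,
    and one pass over the weights is shared by every sequence in the batch.
    KV-cache reads are ignored, so this is an optimistic estimate.
    """
    return 2 * batch / bytes_per_param


def decode_tokens_per_s(params: float, bytes_per_param: float, batch: int,
                        mem_bytes_per_s: float) -> float:
    """Upper bound on aggregate decode rate when streaming weights is the only cost."""
    seconds_per_step = params * bytes_per_param / mem_bytes_per_s
    return batch / seconds_per_step


if __name__ == "__main__":
    # Hypothetical, illustrative numbers; not a specific accelerator.
    peak, hbm = 1e15, 4e12
    print("ridge point (FLOP/byte):", ridge_point(peak, hbm))          # 250.0
    print("batch-1 intensity (FLOP/byte):", decode_intensity(1, 2))   # 1.0
    print("batch-1 tokens/s ceiling:", decode_tokens_per_s(100e9, 2, 1, hbm))
    print("same, with 2x bandwidth:", decode_tokens_per_s(100e9, 2, 1, 2 * hbm))
```

Under these assumptions, batch-1 decoding does about 1 FLOP per byte moved, far below the ridge point of 250, so the chip spends most of each step waiting on memory. The tokens-per-second ceiling (roughly 20 here) doubles when bandwidth doubles and does not move at all when peak FLOPs double. Batching raises intensity, which is one reason serving systems batch aggressively.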

If you have followed our view on platform dynamics, this pivot fits a broader pattern. As models proliferate, the shape of competition moves up the stack to coordination, policy, and infrastructure. For more on how non-obvious layers govern outcomes, see our analysis of the invisible policy stack.

The physics of recall and I/O

The fastest memory lives on the chip. The next fastest sits beside it in stacked towers of high bandwidth memory. Then come layers of pooled memory, solid state drives, and the network where storage and compute meet. Each extra centimeter of wire adds latency. Each connector challenges signal integrity. Each hop consumes power.

High bandwidth memory stacks DRAM dies vertically (commonly 8 or 12 in current parts) and connects the stack to the accelerator through a silicon interposer. Think of a skyscraper attached to a train station, where short local elevators move people much faster than buses across town. The skyscraper cannot house everyone, which is why capacity matters as much as speed. You need both a tall building and enough units inside.
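The width of those short elevators is what makes HBM fast. As a worked example using the JEDEC HBM3 figures of a 1024-bit interface per stack and up to 6.4 Gb/s per pin, peak bandwidth per stack works out as follows:

```latex
B_{\text{stack}} = \frac{w \times r}{8}
  = \frac{1024\ \text{pins} \times 6.4\ \text{Gb/s per pin}}{8\ \text{bits/byte}}
  = 819.2\ \text{GB/s}
```

An accelerator carrying n such stacks has roughly n × B_stack of peak memory bandwidth. Capacity is a separate axis: it depends on die density and stack height, not on the interface width in this formula.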

Advanced packaging determines how these towers meet compute. Approaches such as 2.5D interposers and chiplets bring memory and processing close together and enable wider, faster links. The choice of interconnect technology between chips and across boards decides how many accelerators can act as one machine. Inside a server or rack you see proprietary links such as Nvidia’s NVLink, built for very low latency and high throughput. Across servers you see Ethernet variants and specialized fabrics such as InfiniBand. Across the building you see fiber. Each step up the hierarchy adds distance that must be beaten back with better materials, smarter topologies, and more power.

Memory hierarchy for agents

A useful way to reason about agent performance is to map it to a memory hierarchy:

- Hot context near the accelerator: prompts, tools, and the immediate working set

- Pooled or shared memory for a team of agents: short term artifacts, intermediate code, partial plans

- Object storage and databases: cold but durable knowledge, logs, and civic data sets

Great systems do not treat this hierarchy as invisible. They promote and demote information intentionally and instrument every movement. When they do, cost curves bend and quality rises.
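A minimal sketch of intentional promotion and demotion, assuming a simple least-recently-used policy and three illustrative tiers (hot, pooled, cold). The class name, tier names, and capacities are hypothetical; the point is that every read and every movement between tiers is counted.

```python
from collections import OrderedDict


class TieredMemory:
    """Toy three-tier agent memory: hot (near the accelerator), pooled (shared), cold (durable).

    Capacities, tier names, and the LRU policy are illustrative assumptions.
    Every value lives in cold storage; hot and pooled hold cached copies.
    """

    def __init__(self, hot_capacity: int, pooled_capacity: int):
        self.capacity = {"hot": hot_capacity, "pooled": pooled_capacity}
        self.hot: OrderedDict = OrderedDict()
        self.pooled: OrderedDict = OrderedDict()
        self.cold: dict = {}
        # Telemetry: where each read was served, plus every movement between tiers.
        self.stats = {"hot": 0, "pooled": 0, "cold": 0, "miss": 0,
                      "promote": 0, "demote": 0}

    def put(self, key, value):
        """Durable write; drop cached copies so no tier serves a stale value."""
        self.cold[key] = value
        self.hot.pop(key, None)
        self.pooled.pop(key, None)

    def get(self, key):
        if key in self.hot:
            self.stats["hot"] += 1
            self.hot.move_to_end(key)  # mark as most recently used
            return self.hot[key]
        if key in self.pooled:
            self.stats["pooled"] += 1
            value = self.pooled.pop(key)
        elif key in self.cold:
            self.stats["cold"] += 1
            value = self.cold[key]
        else:
            self.stats["miss"] += 1
            return None
        self._promote(key, value)
        return value

    def _promote(self, key, value):
        """Move an item into the hot tier, demoting the least recently used item if full."""
        self.stats["promote"] += 1
        self.hot[key] = value
        if len(self.hot) > self.capacity["hot"]:
            old_key, old_value = self.hot.popitem(last=False)
            self.stats["demote"] += 1
            self.pooled[old_key] = old_value
            if len(self.pooled) > self.capacity["pooled"]:
                # Dropping from pooled loses nothing: cold still holds the durable copy.
                self.pooled.popitem(last=False)
```

The `stats` dictionary is the design choice that matters here: it makes every hit, miss, promotion, and demotion visible, so tier sizes can be tuned per task from measurements rather than guesses.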

Who controls the bottlenecks now

Follow the physics and you find the new centers of power:

- HBM suppliers: Samsung and SK Hynix lead today, with Micron pulling hard and new entrants racing to catch up. Stacked memory is not a commodity part. Yields are difficult, supply chains are deep, and the capital cost is vast. When HBM allocation is tight, entire training schedules slip.

- Advanced packaging houses: Taiwan Semiconductor Manufacturing Company (TSMC) has a strong position in interposer and chiplet lines, notably its CoWoS packaging. Intel Foundry and major outsourced assembly and test firms such as ASE and Amkor are expanding capacity. The constraint is not only lithography. It is bonding, bumping, underfill chemistries, and thermal design that can handle concentrated heat.

- Optical and network providers: The backbone is fiber, from campus-scale rings to long-haul and subsea routes. Co-packaged optics bring light closer to the switch. Broadcom and Marvell supply key silicon. Corning pulls glass. Cloud providers operate regional fabrics that behave like private internets. If the fabric saturates, your model’s memory feels smaller because it cannot fetch and exchange what it needs in time.

In that map the headlines make sense. The OpenAI and Nvidia plan aims to turn 10 gigawatts into usable intelligence. That implies accelerators and the memory stacks that feed them, plus networks that let thousands of chips behave like one computer. OpenAI wants Stargate to feel like a single system, not a scattered archipelago. Memory and bandwidth become the keels of the ship.

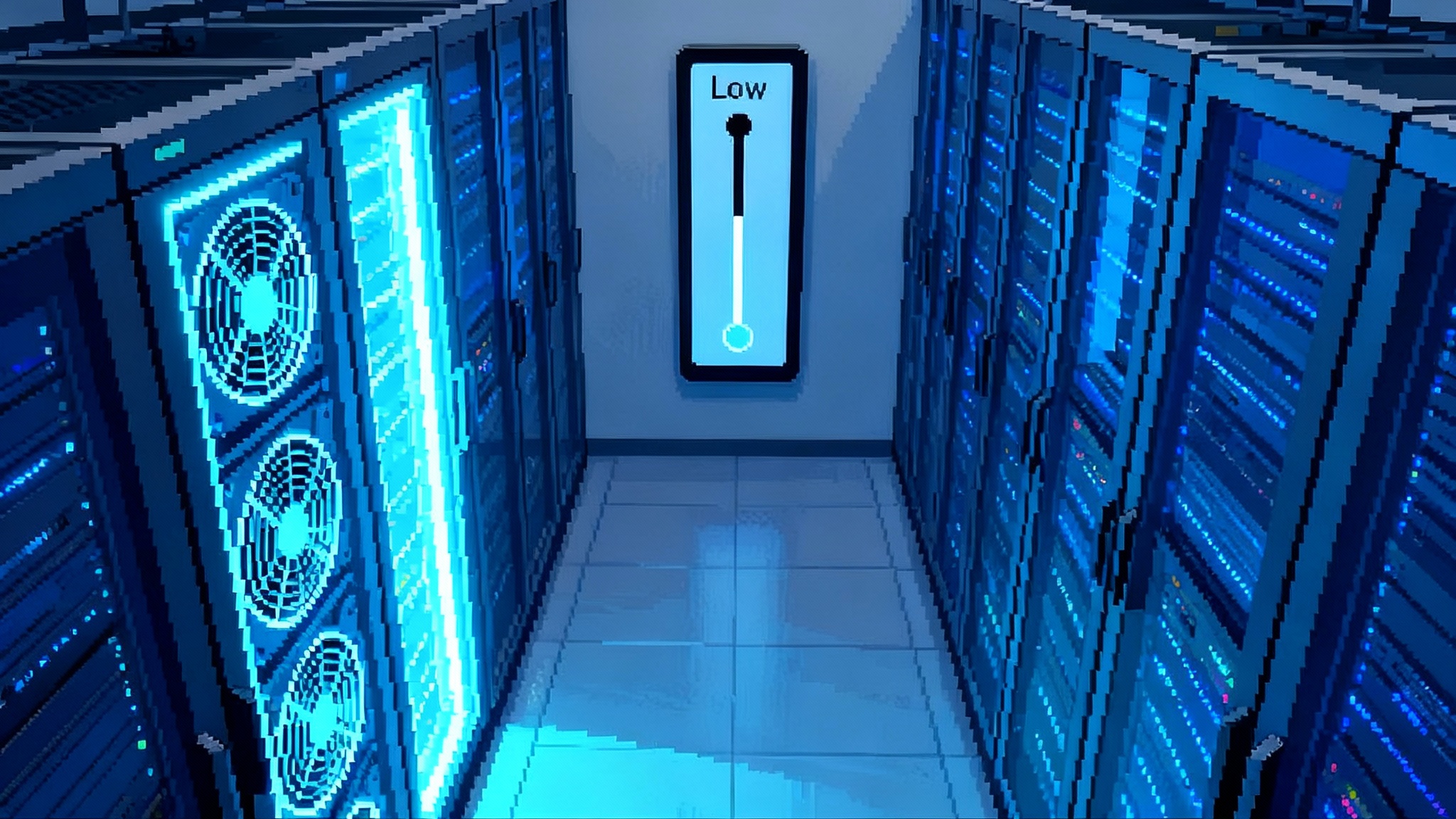

A simple test: where the time really goes

If you want to probe the bottleneck, run a day in the life of a serious agent fleet. Give ten thousand agents the same long knowledge base. Ask them to resolve conflicts in real time, write and test code, assist users, and sync to a cross-region vector store. Profile where the time and energy go.

You will likely see bursts of math, but the long tail belongs to waiting on memory and the network. The system is smart, yet it is often hungry for recall. The punchline is practical. If memory bandwidth doubles and interconnect latencies fall, quality can go up even if the base model stands still. Tool use improves because context is fresher. Safety improves because the agent can retrieve and check before it acts. Cost per useful answer falls because the cluster wastes fewer cycles idling.
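The same experiment can be approximated with a crude cost model before any profiler runs. This sketch assumes a single agent step whose compute, near-memory reads, and network fetches happen one after another with no overlap (a pessimistic simplification); the `Request` and `Cluster` fields and all numbers are hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Request:
    flops: float            # arithmetic work for one agent step
    hbm_bytes: float        # bytes read from near-chip memory
    net_bytes: float        # bytes fetched over the network (e.g. a remote vector store)
    net_round_trips: int    # sequential network round trips


@dataclass(frozen=True)
class Cluster:
    flops_per_s: float
    hbm_bytes_per_s: float
    net_bytes_per_s: float
    rtt_s: float            # latency of one network round trip


def time_breakdown(req: Request, c: Cluster) -> dict:
    """Seconds spent per resource, assuming no overlap between phases."""
    return {
        "compute": req.flops / c.flops_per_s,
        "memory": req.hbm_bytes / c.hbm_bytes_per_s,
        "network": req.net_bytes / c.net_bytes_per_s + req.net_round_trips * c.rtt_s,
    }


def waiting_share(req: Request, c: Cluster) -> float:
    """Fraction of the step spent waiting on memory and network rather than computing."""
    t = time_breakdown(req, c)
    return (t["memory"] + t["network"]) / sum(t.values())


if __name__ == "__main__":
    # Hypothetical request and cluster, chosen only to illustrate the shape of the result.
    req = Request(flops=1e12, hbm_bytes=2e11, net_bytes=1e8, net_round_trips=4)
    base = Cluster(flops_per_s=1e15, hbm_bytes_per_s=4e12, net_bytes_per_s=1e10, rtt_s=0.005)
    faster = replace(base, hbm_bytes_per_s=8e12, rtt_s=0.0025)  # 2x HBM, half the RTT
    for name, cluster in (("base", base), ("2x HBM, half RTT", faster)):
        t = time_breakdown(req, cluster)
        print(name, {k: round(v, 4) for k, v in t.items()},
              f"waiting {waiting_share(req, cluster):.0%}")
```

With these made-up inputs, compute is about 1 ms of an 81 ms step. Doubling bandwidth and halving round-trip latency cuts the step to about 46 ms without touching the model, which is the sense in which cost per useful answer falls.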

This is one reason we have argued for plural architectures and interoperability rather than a single giant brain. Diversity in models and routing only works if recall is fast and common across the fleet. For the strategic case behind that diversity, revisit our take on portfolios of minds.

The philosophical and civic stakes

Civilizations become what they can remember and retrieve. The ancient Library of Alexandria mattered because it condensed a world of recall into a place where scholars could combine ideas. The early web felt magical because everything was linkable, fast enough, and open by default.

Today’s agents are cultural machines. They learn, summarize, and decide. Their character is shaped by what they can reach in time. If the only memory they can consult is the one owned by the platform that hosts them, you get narrow minds. If they can recall from civic memory that is fast and permissionless, you get plural minds that can argue with their hosts.

This turns bandwidth into a civic issue. A slow or gated recall layer is a quiet form of control. The more society relies on agents to mediate work and public debate, the more we should treat recall speed and reach as a public value, similar to water systems or roads. The capacity to remember together is not just a technical metric. It is self-determination.

The accelerationist playbook

Accelerating AI is not only about bigger models. It is about building the memory and fabric that let intelligence flow. Here is a concrete plan that companies and policymakers can execute in parallel.

1) Treat bandwidth as public works

- National and regional fiber programs: Build dark fiber rings around data center clusters and between them. Lower the barrier for new entrants to lease wavelengths rather than entire strands. Incentivize co-packaged optics manufacturing close to the clusters to reduce cost and lead time.

- Rights-of-way modernization: Simplify trenching and pole access for fiber and power. Co-design fiber routes with transmission lines and substations so power and bandwidth scale together.

- Open access meet-me infrastructure: Expand neutral interconnection hubs where cloud providers, carriers, and civic networks exchange traffic at low cost. Raise the baseline performance of the public internet so small developers can build memory-rich software without a private backbone.

- Measured outcomes: Track median and tail latencies from edge to data center by region. Publish heat maps and set targets, as cities set goals for commute times or air quality.
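Measuring median and tail latency by region needs very little machinery. A minimal sketch, assuming latency samples arrive as hypothetical `(region, milliseconds)` pairs and using the nearest-rank percentile definition with a single illustrative p99 target:

```python
import math
from collections import defaultdict


def nearest_rank(sorted_ms: list, p: float) -> float:
    """Nearest-rank percentile of an already sorted list (p in 0-100)."""
    rank = math.ceil(p * len(sorted_ms) / 100)
    return sorted_ms[max(rank, 1) - 1]


def latency_report(samples, p99_target_ms: float) -> dict:
    """Median and tail latency per region from (region, milliseconds) samples.

    Region labels and the single p99 target are illustrative assumptions;
    a real program would likely set targets per region and per workload.
    """
    by_region = defaultdict(list)
    for region, ms in samples:
        by_region[region].append(ms)
    report = {}
    for region, values in sorted(by_region.items()):
        values.sort()
        p99 = nearest_rank(values, 99)
        report[region] = {
            "n": len(values),
            "p50_ms": nearest_rank(values, 50),
            "p99_ms": p99,
            "meets_target": p99 <= p99_target_ms,
        }
    return report
```

Tracking the tail matters because agents chain many fetches. If an agent makes 50 sequential recalls, the chance that at least one lands beyond the p99 is 1 − 0.99^50, or about 39 percent, so a healthy median can hide a slow experience.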

2) Design memory-centric agent stacks

- Put retrieval first: Start with the memory graph, not the model. Define how facts, episodes, code, and preferences are indexed and reconciled. Use retrieval-augmented generation (RAG) as the default path and write back useful updates to improve the graph on every interaction.

- Build a layered memory hierarchy: Near the accelerator, keep hot context and tools. In pooled memory, keep shared working sets for teams of agents. In object storage, keep cold but durable knowledge. Make promotion and demotion between layers explicit so cost and latency can be tuned per task.

- Co-design with packaging constraints: Assume near memory is expensive but fast. Train agents to make a small set of hot tokens do most of the work. This is cache design with richer structure and feedback.

- Plan for distributed recall: Treat agent-to-agent communication as a substrate, not an afterthought. Use compact representations for facts and claims that can be validated cheaply. Reward agents that resolve disagreements by fetching and checking, not by voting.

- Instrument everything: Treat memory misses and cross-server retries as first-class telemetry. Build dashboards where product leaders watch recall and I/O as carefully as they watch accuracy.
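One way to make facts and claims cheap to validate is to ship each claim with a pointer to its evidence and a fingerprint of that evidence. The sketch below assumes a hypothetical shared store reachable through a `fetch` function and uses SHA-256 fingerprints; it checks that the cited evidence is unchanged (provenance), not that the statement is true.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Claim:
    """A compact, shareable fact: a statement plus a pointer to and fingerprint of its evidence."""
    subject: str
    statement: str
    source_id: str          # hypothetical key into shared or pooled memory
    evidence_sha256: str    # digest of the evidence bytes when the claim was written


def fingerprint(evidence: bytes) -> str:
    return hashlib.sha256(evidence).hexdigest()


def make_claim(subject: str, statement: str, source_id: str, evidence: bytes) -> Claim:
    return Claim(subject, statement, source_id, fingerprint(evidence))


def still_supported(claim: Claim, fetch: Callable[[str], Optional[bytes]]) -> bool:
    """Re-fetch the cited evidence and check it is unchanged. Verifies provenance, not truth."""
    evidence = fetch(claim.source_id)
    return evidence is not None and fingerprint(evidence) == claim.evidence_sha256


def resolve(claims: list, fetch: Callable[[str], Optional[bytes]]) -> dict:
    """Settle disagreements per subject by fetching and checking, not by majority vote."""
    result: dict = {}
    for claim in claims:
        if still_supported(claim, fetch):
            result.setdefault(claim.subject, []).append(claim.statement)
    return result
```

In this design, two agents repeating a claim written against an outdated document lose to a single claim whose evidence still matches, which is the fetch-and-check behavior the list above rewards.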

3) Build policy around a right to remember

- A user right to durable recall: People should have the right to instruct their agents to remember and to retrieve across services at speed. Portability must include indexes and embeddings, not only raw files.

- Transparent memory budgets: Platforms should reveal retention windows, eviction policies, and recall bandwidth guarantees. If an agent promises to be your outboard brain, it should state how big, how fast, and how long that brain remembers.

- Civic memory funds: Cities and universities can sponsor fast public knowledge bases for law, health, transit, and local history. Make them permissionless to read and affordable to call at high rates. This is a library card for machines.

- Safety by retrieval: Prefer verify-then-act patterns. If an agent is about to take a consequential action, it should prove that it could retrieve and check the relevant facts quickly. This flips safety from static filters to active recall.
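A verify-then-act gate can be expressed as a small wrapper. This is a minimal sketch under stated assumptions: the function names, the `retrieve` and `check` callables, and the 200 ms default budget are all hypothetical, and the only claim is the ordering of retrieve, check, then act.

```python
import time
from typing import Any, Callable, Optional


def verify_then_act(
    action: Callable[[dict], Any],
    required_facts: list,
    retrieve: Callable[[str], Optional[Any]],
    check: Callable[[str, Any], bool],
    budget_s: float = 0.2,
    clock: Callable[[], float] = time.monotonic,
):
    """Run `action` only if every required fact is retrieved and checked within budget_s.

    Returns ("done", result) or ("refused", reason). The default budget is illustrative.
    """
    start = clock()
    evidence = {}
    for fact in required_facts:
        value = retrieve(fact)
        if value is None:
            return ("refused", f"could not retrieve {fact}")
        if not check(fact, value):
            return ("refused", f"check failed for {fact}")
        evidence[fact] = value
        if clock() - start > budget_s:
            return ("refused", "verification exceeded the latency budget")
    # Every fact was fetched and checked in time: act on the gathered evidence.
    return ("done", action(evidence))
```

Refusing when the budget is exceeded is a deliberate choice in this sketch: a slow recall layer makes the agent more cautious instead of letting it act on stale context.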

For a deeper look at the power and energy constraints underneath these choices, our piece on AI’s thermodynamic turn outlines how watts and heat shape what software can become.

What this means for companies and investors

- Hyperscalers: The winning cloud will be the one with the easiest path to memory-rich applications. Expect aggressive builds of fiber, optics, and packaging partnerships that let clusters operate as one giant computer. Pricing will evolve to reflect recall latency, not just compute minutes.

- Chipmakers: The scoreboard now includes memory controllers, interposers, and optical interfaces. Success depends as much on coordinating with HBM suppliers and packaging houses as on core chip design. Product marketing must shift to whole-system throughput per watt.

- Carriers and data center operators: Neutral meet-me rooms, on-ramps to private backbones, and campus-scale optical networks are core revenue opportunities. If a campus can advertise low tail latency for recall-heavy workloads, it becomes a magnet for agent startups.

- Software builders: Do not wait for new hardware. Architect agent stacks around memory budgets now. Give users clear knobs for speed versus cost. Teach agents to compress, summarize, and cache with discipline, then publish the gains. Make recall latency part of every product review.

Signals to watch next

- HBM capacity roadmaps: Follow announcements on new stacks, yields, and expansions in South Korea, the United States, and Japan. Watch delivery lead times. When HBM gets tight, entire programs slip.

- Packaging lead times: If interposer and chiplet assembly slots are booked, chip launches lag even when wafers are ready. Track capital plans by major outsourced assembly and test firms and new facilities near front-end fabs.

- Co-packaged optics adoption: When large switches move light onto the package, cluster sizes can grow without blowing the power budget. This is a quiet unlock for very large training runs and real-time multi-agent systems.

- Memory pooling standards: Compute Express Link and related approaches can stretch memory across nodes. If software makes pooled memory feel natural to agents, we get a smoother path from prototype to planetary scale.

- Public fiber builds: Cities that deploy dense fiber and neutral meet points will see faster growth in AI startups that need recall. Expect the map of AI hubs to correlate with fiber and power more than with tax breaks.

A note on the economics

A 10 gigawatt target is breathtaking because it ties software ambition to hard constraints. Power is one obvious limit. The others are the cost and delivery of memory stacks and interconnects that must arrive in lockstep. When memory supply is tight, accelerators sit underutilized. When optics supply is tight, racks cannot be tied together at the needed speeds. This is why partnerships now stretch from model labs to fabs, packaging lines, and fiber routes. Capital follows the bottleneck.

For builders this changes risk management. Securing memory and packaging capacity early can be as strategic as securing chip allocation. For policymakers it suggests that incentives boosting HBM and packaging capacity may yield more societal benefit than chasing one more front-end fab alone. A balanced portfolio of power, memory, packaging, and fiber delivers the most throughput per dollar.
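The "capital follows the bottleneck" argument can be made concrete with a toy fixed-proportions (Leontief) model, in which every running accelerator needs a fixed amount of each input and the scarcest input caps the cluster. The input names, per-accelerator needs, and supply figures below are hypothetical; real clusters allow more substitution than this.

```python
import math


def usable_accelerators(supply: dict, need_per_accelerator: dict):
    """How many accelerators can actually run, and which input is binding.

    Assumes a min-of-inputs model: each running accelerator consumes fixed
    amounts of every input, so the scarcest input sets the count.
    """
    limits = {k: supply[k] / need_per_accelerator[k] for k in need_per_accelerator}
    binding = min(limits, key=limits.get)
    return math.floor(limits[binding]), binding


if __name__ == "__main__":
    # Hypothetical per-accelerator needs and supplies.
    need = {"accelerators": 1, "hbm_stacks": 8, "optical_ports": 2, "kilowatts": 1}
    supply = {"accelerators": 1000, "hbm_stacks": 6000, "optical_ports": 1600, "kilowatts": 1500}
    print(usable_accelerators(supply, need))                            # (750, 'hbm_stacks')
    print(usable_accelerators({**supply, "accelerators": 2000}, need))  # (750, 'hbm_stacks')
    print(usable_accelerators({**supply, "hbm_stacks": 8000}, need))    # (800, 'optical_ports')
```

In this toy model, buying a thousand more accelerators adds nothing while memory is short, and relieving memory immediately exposes optics as the next constraint. That is the intuition behind funding power, memory, packaging, and fiber as a balanced portfolio.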

The human scale of recall

There is a personal dimension to all of this. People adopt agents when those agents remember them. Not only faces and schedules, but projects, drafts, and half-formed ideas. That bond is built on recall bandwidth. If an agent can retrieve the right paragraph from last spring’s notebook while you are in the flow, it feels like a partner. If it spins a beachball, it feels like a toy.

Designers can honor that feeling by making memory visible and malleable. Show what the agent now considers hot. Let users pin memories to the fast tier and see the cost. Let them export the index that makes their work life coherent. Help them teach the agent to forget with intent so that memory is not only long, but wise.

Conclusion: the age of recall

The late September news puts a fine point on it. The next tenfold leap will not come from raw model size alone. It will come from stacked memory that feeds hungry chips, from packaging that shrinks distance, and from fiber that turns continents into coherent computers. As that happens, intelligence begins to look less like a single giant brain and more like a nervous system, with signals racing across a body we are still building.

Treat bandwidth as public works. Build agent stacks that start with memory. Write policy that gives people a right to remember. Do this, and the promise of those 10 gigawatts becomes more than an engineering milestone. It becomes the foundation of a civic memory we own together.