Tiger Data’s Agentic Postgres unlocks safe instant parallelism

Tiger Data’s Agentic Postgres pairs copy-on-write forks with built-in BM25 and vector search, plus native MCP endpoints. Teams get zero-copy sandboxes, faster retrieval, and safer promotions for production agents.

The breakthrough: a database agents can actually use

For two years, agent demos have dazzled while real systems stalled on data plumbing. Agents that plan, code, and reason still hesitate at the boundary of production tables. They need context from scattered stores, safe places to test, and predictable ways to act. A Postgres-first approach called Agentic Postgres pushes that boundary back by shipping copy-on-write forks, hybrid search, and first-class endpoints for the Model Context Protocol.

The headline is simple. Instead of bolting a vector store and a feature service onto your primary database, you use the database your software already trusts. With copy-on-write forks, teams and agents get safe instant parallelism. With built-in BM25 and vectors, retrieval becomes both precise and forgiving. With MCP endpoints, schemas turn into skills that any compatible runtime can discover and govern. You can read the technical pitch on the Agentic Postgres overview, then map it to your stack with the blueprint below.

Safe instant parallelism explained

Imagine your production database as a master cookbook. Every experiment should happen on a photocopy, not on the original. Copy-on-write forks make that pattern immediate. A fork appears as a full clone at creation, yet only changed blocks consume storage. The effect is profound:

- Risky migrations or experiments run in parallel without waiting on slow snapshots.

- Debugging happens on perfectly reproducible environments, captured at a moment in time.

- Successful changes flow back through a policy gate, while forks remain available for audit and replays.

This is what safe instant parallelism feels like in practice. Evals that used to subsample fixtures can run on full-fidelity copies. Multi-agent workflows that previously serialized on one staging database can fan out across dozens of forks. The cost model works because forks are created in seconds and you pay only for their deltas.
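To make the photocopy analogy concrete, here is a minimal in-memory sketch of copy-on-write forking. It is a toy model, not how Agentic Postgres stores data: the `Database` and `_Layer` classes, the block ids, and the byte strings are all hypothetical. The point it illustrates is that a fork copies nothing at creation, sees a frozen point-in-time view, and only accounts for the blocks it changes.

```python
from typing import Dict, Optional


class _Layer:
    """An immutable set of blocks shared by every fork created on top of it."""

    def __init__(self, blocks: Dict[int, bytes], base: Optional["_Layer"]):
        self.blocks = blocks
        self.base = base


class Database:
    """Toy block store with copy-on-write forks (illustrative only)."""

    def __init__(self, blocks: Optional[Dict[int, bytes]] = None,
                 base: Optional[_Layer] = None):
        self._delta: Dict[int, bytes] = dict(blocks or {})  # private writes
        self._base = base                                   # shared, frozen history

    def fork(self) -> "Database":
        # Freeze the current state into a shared layer. No block is copied:
        # parent and child point at the same frozen layer and both start
        # with empty private deltas.
        frozen = _Layer(self._delta, self._base)
        self._base, self._delta = frozen, {}
        return Database(base=frozen)

    def write(self, block_id: int, data: bytes) -> None:
        self._delta[block_id] = data  # shared layers are never modified

    def read(self, block_id: int) -> bytes:
        if block_id in self._delta:
            return self._delta[block_id]
        layer = self._base
        while layer is not None:
            if block_id in layer.blocks:
                return layer.blocks[block_id]
            layer = layer.base
        raise KeyError(block_id)

    def delta_bytes(self) -> int:
        """Bytes held by this database's own writes since its last fork point."""
        return sum(len(data) for data in self._delta.values())


prod = Database({0: b"recipes", 1: b"prices"})
fork = prod.fork()
fork.write(1, b"prices-v2")     # experiment on the photocopy
prod.write(0, b"recipes-new")   # production keeps moving
print(fork.read(0), fork.read(1), prod.read(1), fork.delta_bytes())
```

The fork still reads the original `recipes` block even though production has moved on, and its storage footprint is only the one block it rewrote.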

What copy-on-write unlocks for teams

Forks seem like a developer convenience. They are actually an organizational unlock.

- Evals with teeth: spin a fork per run, seed fixtures or mask fields, and record prompts, plans, and diffs. When a metric improves, replay the exact state to verify the win.

- Incident forensics: capture a fork at the failing state. Attach a read-only agent to inspect query plans, hot indexes, or schema drift without touching production.

- Safer learning loops: let agents propose transformations in their own forks. A policy engine runs static checks, unit tests, data quality scans, and cost projections before anything promotes.

Treat every job as a short-lived environment that can be diffed, audited, and promoted. The less time humans spend orchestrating staging environments, the more time they spend improving relevance, reliability, and latency.

Built-in hybrid search inside Postgres

Agentic systems rise or fall on retrieval. Hybrid search pairs lexical signals with semantic vectors to balance precision and recall. In this model, the database exposes both sides as first-class capabilities: BM25 ranking for keyword relevance and vector retrieval for semantic similarity. Because both live in Postgres, an agent can pull a candidate set with vectors, re-rank with BM25, then perform relational joins in the same transaction.

- BM25 rewards rare and discriminative terms. That matters when names, identifiers, or error strings carry sharp meaning.

- Vectors recover paraphrases and synonyms that lexical search alone would miss.

- Blended scoring gets high recall without losing the ability to land on the exact record you meant.

The result is fewer hallucinations and faster answers. There is no hop to an external store and no side channel to reconcile. Your operational data is already warm, your indexes evolve in one place, and your audit trail remains centralized.
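A small sketch can show why blending the two signals helps. The code below implements textbook Okapi BM25 and cosine similarity in plain Python and mixes them with a weight `alpha`. It does not use the database's search functions; the documents, the two-dimensional "embeddings", and the `blend` helper are assumptions made up for illustration, and the max-normalized weighted sum is just one simple way to combine scores.

```python
import math
from collections import Counter
from typing import List, Sequence


def bm25_scores(query: Sequence[str], docs: Sequence[Sequence[str]],
                k1: float = 1.5, b: float = 0.75) -> List[float]:
    """Okapi BM25 score of each tokenized document for a tokenized query."""
    n = len(docs)
    avg_len = sum(len(d) for d in docs) / n
    doc_freq = Counter(term for d in docs for term in set(d))
    scores = []
    for d in docs:
        tf = Counter(d)
        score = 0.0
        for term in query:
            if tf[term] == 0:
                continue
            # Rare terms receive a larger inverse document frequency.
            idf = math.log(1 + (n - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5))
            length_norm = k1 * (1 - b + b * len(d) / avg_len)
            score += idf * tf[term] * (k1 + 1) / (tf[term] + length_norm)
        scores.append(score)
    return scores


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def blend(lexical: List[float], semantic: List[float], alpha: float = 0.5) -> List[float]:
    """Weighted sum after scaling BM25 into [0, 1] by its maximum."""
    top = max(lexical) or 1.0
    return [alpha * (l / top) + (1 - alpha) * s for l, s in zip(lexical, semantic)]


def rank(scores: List[float]) -> List[int]:
    return sorted(range(len(scores)), key=lambda i: (-scores[i], i))


docs = [
    "checkout failed with error e4012".split(),
    "payment was declined at the register".split(),
    "user updated their profile picture".split(),
]
embeddings = [[0.9, 0.1], [0.8, 0.3], [0.0, 1.0]]   # made-up vectors
query_tokens, query_vec = ["error", "e4012"], [0.8, 0.35]

lexical = bm25_scores(query_tokens, docs)
semantic = [cosine(query_vec, e) for e in embeddings]
print(rank(lexical), rank(semantic), rank(blend(lexical, semantic)))
```

In this toy corpus the vector side alone prefers the paraphrase about a declined payment, while the blended ranking lands on the record that contains the exact error code and still keeps the paraphrase in second place.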

For teams tracking the journey from flashy demo to reliable product, this pattern echoes the industry shift we covered in Manus 1.5 signals the shift to production agents and the emergence of memory layers in The Memory Layer Arrives.

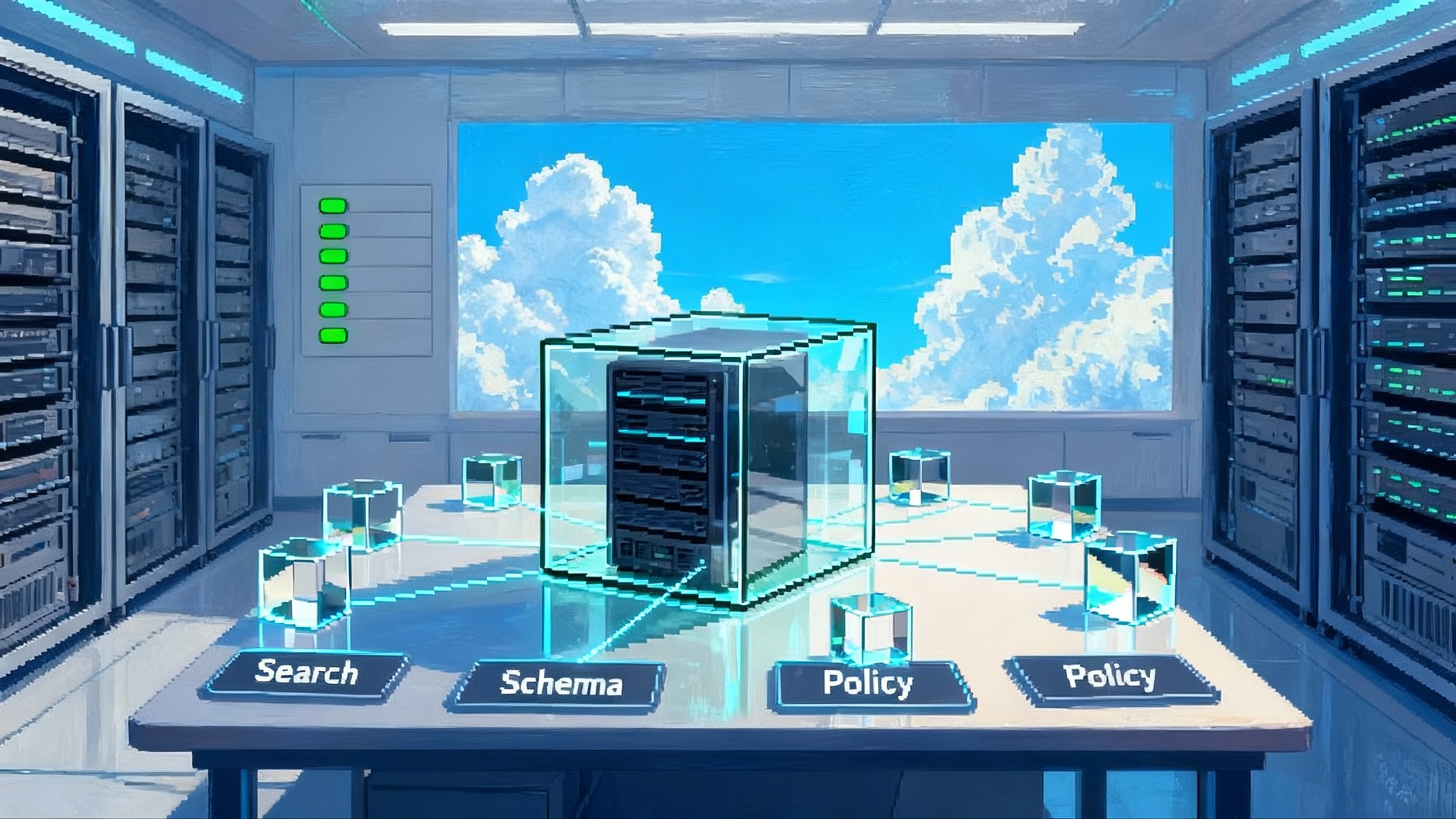

MCP endpoints turn schemas into skills

Connectivity is not enough. Agents need typed discovery, permissions, and repeatable actions. The Model Context Protocol provides that surface. MCP is an open specification that standardizes how applications expose tools and data to language models. It has become a common dial tone across agent runtimes, from local assistants to hosted SDKs. You can study the details in the Model Context Protocol documentation.

When the database ships with built-in MCP servers, three things happen:

- Discovery improves: agents list tools, tables, and schemas with metadata rather than guessing prompts or crawling readmes.

- Safety becomes explicit: an MCP server can require authorization and gate individual tools by policy. You can expose read-only queries publicly while keeping writes behind approvals.

- Reuse increases: skills become portable. The same MCP endpoint that powers a developer assistant can back a production support agent.

In short, MCP turns your database into a catalog of skills with predictable behavior. You do not wire a dozen bespoke adapters. Your agent runtime connects to the server and learns what it can do.
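The discovery and gating ideas above can be sketched without any MCP SDK. The registry below is hypothetical: `Tool`, `ToolRegistry`, the `writes` flag, and the in-memory `TABLES` are invented for illustration, and only the descriptor keys `name`, `description`, and `inputSchema` are meant to loosely mirror how MCP describes tools. A real server would speak the protocol over a transport and authenticate callers properly.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass(frozen=True)
class Tool:
    name: str
    description: str
    input_schema: Dict[str, Any]              # JSON Schema for the arguments
    writes: bool                              # server-side flag used for gating
    handler: Callable[[Dict[str, Any]], Any]


class ToolRegistry:
    def __init__(self) -> None:
        self._tools: Dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        self._tools[tool.name] = tool

    def list_tools(self, can_write: bool) -> List[Dict[str, Any]]:
        """Describe only the tools this caller is allowed to invoke."""
        return [
            {"name": t.name, "description": t.description, "inputSchema": t.input_schema}
            for t in self._tools.values()
            if can_write or not t.writes
        ]

    def call(self, name: str, arguments: Dict[str, Any], can_write: bool) -> Any:
        tool = self._tools[name]
        if tool.writes and not can_write:
            raise PermissionError(f"{name} requires write approval")
        return tool.handler(arguments)


TABLES: Dict[str, Dict[int, str]] = {"orders": {}, "customers": {}}


def _upsert(args: Dict[str, Any]) -> Dict[str, int]:
    TABLES[args["table"]][args["id"]] = args["value"]
    return {"rows_affected": 1}


registry = ToolRegistry()
registry.register(Tool("list_tables", "List table names",
                       {"type": "object", "properties": {}},
                       False, lambda args: sorted(TABLES)))
registry.register(Tool("upsert", "Insert or update one row",
                       {"type": "object", "required": ["table", "id", "value"]},
                       True, _upsert))

print([t["name"] for t in registry.list_tools(can_write=False)])
```

A read-only caller never even sees `upsert` in its listing, and a direct call to it fails with `PermissionError`, which is the behavior you want a policy layer to make explicit.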

Why this beats today’s fragmented stacks

A typical 2024–2025 agent stack looked like this:

- Primary operational database for source of truth.

- A vector database for embeddings and similarity search.

- A feature store to materialize signals for ranking, personalization, and evals.

- A cache for hot paths and a blob store for artifacts.

- Several bespoke services to adapt schemas to each agent and orchestrator.

Teams made that mosaic work for demos, then ran into real limits:

- Synchronization drift: embeddings and features lag behind source tables. Update jobs help but still miss fresh writes.

- Latency tax: every hop adds cumulative delay, which hurts agents that chain retrieval and planning.

- Safety gaps: write paths scatter across services with inconsistent controls, making audits painful.

- Operational overhead: each system has its own scaling, backups, and failure modes. Incident response turns into bingo.

Agentic Postgres collapses that surface area:

- Hybrid search lives next to your tables.

- Forks replace a zoo of staging and shadow environments with precise, quick clones.

- MCP servers present schemas and skills through a stable protocol instead of one-off adapters.

Keep a feature store for large offline learning if it earns its keep. But for agents that read, write, and learn continuously, a single database envelope reduces drag.

If your team is already shipping governed agent workflows, the pattern pairs well with the trends we explored in Governed Autonomy Ships.

Reference architecture teams can ship in 2026

Below is a pragmatic blueprint that leans into forks per job, MCP-exposed schemas and skills, and policy-guarded promotions. Everything rests on Postgres. The edges are where the work is.

1) Core data plane

- Highly available Postgres cluster with point-in-time recovery.

- Read replicas for heavy query workloads driven by agents.

- Hybrid search extensions enabled, with vector indexing for embeddings and BM25 for text fields.

- Declarative migrations managed in source control so every change is visible to policy checks.

2) Fork controller

- Service that creates zero-copy forks of the primary or a replica, including schema, data, indexes, and relevant artifacts.

- Metadata tracks parent hash, creation time, lifecycle state, and storage deltas.

- Default time-to-live caps cost, with automated purge or archive when policy demands it.
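As a rough sketch of the bookkeeping a fork controller might keep, the snippet below tracks the metadata listed above and retires forks whose time-to-live has passed. The `ForkRecord` fields, the state names, and the `retain_for_audit` flag are assumptions for illustration rather than an actual controller schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Iterable, List


@dataclass
class ForkRecord:
    fork_id: str
    parent_hash: str
    created_at: datetime
    ttl: timedelta
    delta_bytes: int = 0
    state: str = "active"            # active -> archived or purged
    retain_for_audit: bool = False   # hypothetical policy flag

    def expired(self, now: datetime) -> bool:
        return now >= self.created_at + self.ttl


def sweep(forks: Iterable[ForkRecord], now: datetime) -> List[str]:
    """Retire expired forks and return the ids whose state changed."""
    changed = []
    for fork in forks:
        if fork.state == "active" and fork.expired(now):
            fork.state = "archived" if fork.retain_for_audit else "purged"
            changed.append(fork.fork_id)
    return changed


start = datetime(2026, 1, 5, 9, 0, tzinfo=timezone.utc)
forks = [
    ForkRecord("eval-1", "abc123", start, timedelta(hours=2)),
    ForkRecord("incident-7", "abc123", start, timedelta(hours=2), retain_for_audit=True),
    ForkRecord("backfill-3", "abc123", start, timedelta(days=1)),
]
print(sweep(forks, start + timedelta(hours=3)), [f.state for f in forks])
```

Running the sweep on a schedule keeps the default cheap: forks disappear unless a policy explicitly asks for them to be archived.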

3) MCP layer

- Built-in MCP servers expose read-only and write-scoped tools. Read-only tools include query, explain, list tables, and search. Write tools include apply migration, upsert, and create index.

- Discovery endpoints describe tables, columns, constraints, and actions in machine-readable form. Tool descriptions include safety policies and audit tags.

4) Policy engine and auditor

- Policy as code governs who can spawn forks, what tools are allowed, and what promotes.

- Checks include static analysis on SQL, data quality tests, lineage impact, and cost projections.

- Every tool call is logged with a fork identifier, agent identity, prompt hash, and timestamp. Logs are immutable and queryable for investigations and eval analytics.
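One way to approximate immutable, queryable logs is a hash chain, where each entry commits to the one before it so that later edits are detectable. This is a swapped-in technique for illustration, not a description of how the product stores audit data; the field names follow the list above and the helper names are hypothetical.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64


def _digest(body: Dict[str, Any]) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_entry(log: List[Dict[str, Any]], fork_id: str, agent: str,
                 prompt: str, tool: str, timestamp: str) -> Dict[str, Any]:
    """Append a tool call record that commits to the previous entry."""
    body = {
        "fork_id": fork_id,
        "agent": agent,
        "tool": tool,
        "timestamp": timestamp,
        "prompt_hash": hashlib.sha256(prompt.encode()).hexdigest(),
        "prev_hash": log[-1]["entry_hash"] if log else GENESIS,
    }
    entry = dict(body, entry_hash=_digest(body))
    log.append(entry)
    return entry


def verify(log: List[Dict[str, Any]]) -> bool:
    """Return True only if no entry was altered, removed, or reordered."""
    prev = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if body["prev_hash"] != prev or _digest(body) != entry["entry_hash"]:
            return False
        prev = entry["entry_hash"]
    return True


audit_log: List[Dict[str, Any]] = []
append_entry(audit_log, "fork-42", "pricing-agent", "draft a migration", "query", "2026-01-05T09:00:00Z")
append_entry(audit_log, "fork-42", "pricing-agent", "apply it", "apply_migration", "2026-01-05T09:05:00Z")
print(verify(audit_log))
```

Storing only the prompt hash keeps sensitive prompt text out of the log while still letting an investigator match a logged call to a recorded prompt.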

5) Orchestrator and job registry

- The orchestrator issues one fork per job. It passes credentials scoped to that fork and maps MCP tools to that fork’s endpoint.

- On job completion, the orchestrator signals the policy engine to evaluate outcomes and decide next steps.
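Scoping credentials to a single fork can be sketched with a signed claim. The token format, the `issue_token` and `authorize` helpers, and the assumption that job and fork ids contain no colons are all invented for this example; a production system would more likely use the database's own roles or a standard token format.

```python
import hashlib
import hmac


def issue_token(secret: bytes, job_id: str, fork_id: str) -> str:
    """Return a credential that is only valid for one job on one fork."""
    claim = f"{job_id}:{fork_id}"
    signature = hmac.new(secret, claim.encode(), hashlib.sha256).hexdigest()
    return f"{claim}:{signature}"


def authorize(secret: bytes, token: str, target_fork: str) -> bool:
    """Accept the token only if it is authentic and names the target fork."""
    try:
        job_id, fork_id, signature = token.rsplit(":", 2)
    except ValueError:
        return False
    expected = hmac.new(secret, f"{job_id}:{fork_id}".encode(), hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how much of the signature matched.
    authentic = hmac.compare_digest(signature.encode(), expected.encode())
    return authentic and fork_id == target_fork


SECRET = b"orchestrator-demo-secret"   # placeholder, never hard-code real secrets
token = issue_token(SECRET, "job-17", "fork-a")
print(authorize(SECRET, token, "fork-a"), authorize(SECRET, token, "fork-b"))
```

Because the fork id is part of the signed claim, a credential leaked from one job cannot be replayed against production or against another job's fork.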

6) Promotion and merge path

- Promotion is a controlled write path. If a job proposes schema changes or data writes, the engine generates a migration plan or diff bundle.

- Human reviewers approve or reject. On approval, the orchestrator applies the plan during a maintenance window or as an online migration per policy.
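The promotion path can be pictured as a small decision function: automated checks first, then a human. The sketch below is deliberately naive. The regular expressions are a stand-in for real static analysis (they are not a SQL parser), and the `Proposal` type and the three outcome strings are assumptions for illustration.

```python
import re
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Proposal:
    fork_id: str
    statements: List[str]
    approved_by: Optional[str] = None


def static_check(sql: str) -> List[str]:
    """Flag a couple of obviously risky statement shapes."""
    text = sql.strip().rstrip(";")
    problems = []
    if re.match(r"drop\s+table\b", text, re.IGNORECASE):
        problems.append("drops a table")
    if re.match(r"(delete|update)\b", text, re.IGNORECASE) and not re.search(
        r"\bwhere\b", text, re.IGNORECASE
    ):
        problems.append("modifies every row (no WHERE clause)")
    return problems


def decide(proposal: Proposal) -> str:
    findings = [p for s in proposal.statements for p in static_check(s)]
    if findings:
        return "rejected"        # automated policy failed, no human time spent
    if proposal.approved_by is None:
        return "needs_review"    # checks passed, waiting on a reviewer
    return "promote"


safe = Proposal("fork-42", ["ALTER TABLE prices ADD COLUMN currency text;"])
print(decide(safe))
safe.approved_by = "dana"
print(decide(safe), decide(Proposal("fork-43", ["DELETE FROM prices;"], approved_by="dana")))
```

Note that approval never overrides a failed check in this sketch: a reviewer can only promote what the policy engine already considers safe.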

7) Observability and cost guardrails

- Metrics cover fork counts, storage deltas, query latencies, index health, and tool success rates.

- Budgets throttle new forks or restrict vector-heavy queries when cost or tail latency exceeds thresholds.
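A guardrail like this can start as a plain admission check. The thresholds, the `Budget` fields, and the two request kinds below are hypothetical; the sketch only shows the shape of the decision, with storage deltas standing in for cost.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Budget:
    max_active_forks: int
    max_delta_bytes: int
    max_p99_latency_ms: float


def admit(kind: str, active_forks: int, delta_bytes: int,
          p99_latency_ms: float, budget: Budget) -> bool:
    """Decide whether a new fork or a vector-heavy query may proceed."""
    over_cost = delta_bytes > budget.max_delta_bytes
    over_latency = p99_latency_ms > budget.max_p99_latency_ms
    if over_cost or over_latency:
        return False                                   # any breach throttles both kinds
    if kind == "new_fork":
        return active_forks < budget.max_active_forks  # forks also have a count cap
    if kind == "vector_query":
        return True
    raise ValueError(f"unknown request kind: {kind}")


budget = Budget(max_active_forks=50, max_delta_bytes=200 * 1024**3, max_p99_latency_ms=250.0)
print(admit("new_fork", 12, 40 * 1024**3, 120.0, budget))
print(admit("vector_query", 12, 40 * 1024**3, 400.0, budget))
```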

8) Data protection

- Mask sensitive fields at fork creation using a domain catalog of masking rules.

- Provide a redaction tool in the MCP layer so agents can request deidentified slices when their role does not justify cleartext.
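Masking at fork creation amounts to mapping column names to transformations. The catalog below is a made-up example: the column names, the `tokenize` and `redact` helpers, and the sample rows are illustrative, and the unsalted hash is a simplification (a real deployment would want a keyed hash so tokens cannot be guessed from known inputs).

```python
import hashlib
from typing import Any, Callable, Dict, Iterable, List


def tokenize(value: str) -> str:
    """Replace a value with a stable token so joins on it still line up."""
    return "tok_" + hashlib.sha256(value.encode()).hexdigest()[:12]


def redact(value: str) -> str:
    return "***"


# Hypothetical domain catalog: column name -> masking rule.
MASKING_RULES: Dict[str, Callable[[str], str]] = {"email": tokenize, "ssn": redact}


def mask_rows(rows: Iterable[Dict[str, Any]],
              rules: Dict[str, Callable[[str], str]]) -> List[Dict[str, Any]]:
    """Return masked copies of the rows; the originals are left untouched."""
    return [
        {col: rules[col](val) if col in rules and val is not None else val
         for col, val in row.items()}
        for row in rows
    ]


rows = [
    {"id": 1, "email": "ana@example.com", "ssn": "123-45-6789", "plan": "pro"},
    {"id": 2, "email": "ana@example.com", "ssn": None, "plan": "free"},
]
for masked in mask_rows(rows, MASKING_RULES):
    print(masked)
```

Deterministic tokens preserve the fact that two rows share an email address, which keeps evals and debugging meaningful without exposing the cleartext value.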

9) Eval and learning loop

- Each release includes an eval job that runs on a fork seeded with fresh production data.

- The eval reports relevance, accuracy, latency, and cost per skill.

- Learning jobs write candidate feature tables or prompts into forks that never promote automatically.
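The relevance part of an eval report can be as simple as precision at k over a graded query set. The sketch below uses invented document ids and relevance judgments, and the `passes_gate` rule (no relevance regression and no cost regression) is one possible promotion criterion, not a prescribed one.

```python
from typing import Dict, List, Set


def precision_at_k(ranked_ids: List[int], relevant_ids: Set[int], k: int = 5) -> float:
    """Fraction of the top k results that are judged relevant."""
    return sum(1 for doc_id in ranked_ids[:k] if doc_id in relevant_ids) / k


def mean_precision(runs: Dict[str, List[int]], judgments: Dict[str, Set[int]], k: int = 5) -> float:
    return sum(precision_at_k(runs[q], judgments[q], k) for q in judgments) / len(judgments)


def passes_gate(baseline: float, candidate: float,
                baseline_cost: float, candidate_cost: float) -> bool:
    return candidate >= baseline and candidate_cost <= baseline_cost


# Hypothetical graded judgments and two ranked result lists per query.
judgments = {"refund policy": {1, 3, 5}, "error e4012": {8}}
baseline_run = {"refund policy": [3, 9, 1, 7, 4], "error e4012": [2, 6, 8, 4, 9]}
candidate_run = {"refund policy": [3, 1, 5, 9, 7], "error e4012": [8, 2, 6, 4, 9]}

base = mean_precision(baseline_run, judgments)
cand = mean_precision(candidate_run, judgments)
print(base, cand, passes_gate(base, cand, baseline_cost=1.0, candidate_cost=0.9))
```

Because each eval runs on its own fork, the same judgments can be replayed against the exact data state that produced a score.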

A day in the life with forks, skills, and policy

- 9:00 a.m. A product manager requests a pricing migration prototype. The orchestrator spawns a fork. The agent scaffolds a migration file, runs it on the fork, fills sample rows, and verifies referential integrity. MCP policy limits writes to the fork only.

- 10:30 a.m. A support analyst investigates a customer incident. They attach a read-only agent to a fork captured at the time of failure. The agent uses BM25 to find a rare error string, then a vector query to surface similar failures. It produces a report with explain plans and suggested index changes.

- 1:00 p.m. An eval run tests a new reranker. It uses hybrid search in Postgres, grades relevance on a fork seeded with the last seven days of data, and posts a diff showing improved precision at top five without cost regression.

- 3:00 p.m. Promotion window. Two migrations that passed policy and review are applied to production. The forks are archived with immutable logs so the team can replay any decision.

No heroic glue code. No nervous merges into a staging database that looks nothing like prod. Just forks, skills, and policies.

Migration path from vector plus feature store

You can adopt this pattern incrementally.

- Start with read paths. Move hybrid retrieval into Postgres. Keep the external vector database hot, but point one agent at the in-database vector index and BM25 to validate latency and relevance.

- Bring MCP online. Expose read-only tools first. Audit every tool call for a week before enabling write tools on forks.

- Introduce forks per job for schema changes and data backfills. Keep promotions manual at first. Measure storage deltas and fork lifetimes to set budget alerts.

- Retire the external vector database only when in-database relevance meets or exceeds current benchmarks. Keep a dual write period if you need an escape hatch.

- Keep the feature store for large offline computations. Agents that need fresh features can compute them in their fork, then publish through the same policy gate.

This path trades a risky big bang for measurable wins in safety, latency, and operator sanity.

Tradeoffs and the sharp edges to plan for

No system eliminates tradeoffs. A forkable Postgres with hybrid search and MCP shifts where you pay. Plan for the following:

- Storage pressure: forks are cheap at first, then grow if agents churn on large tables. Enforce budgets, retention policies, and auto-compaction after runs.

- Cross-fork semantics: cross-fork joins are not supported. If agents expect to merge data from two forks, they must copy or coordinate upstream. Design jobs so reads and writes stay within a single fork.

- Vector drift and index hygiene: embeddings evolve as models change. Record the model version alongside each stored embedding, reindex during low-traffic windows, and store dual embeddings during migrations so agents can query both.

- Relevance tuning: BM25 and vectors need tuning for your corpus. Establish evaluation datasets, graded relevance, and per-domain scoring parameters. Do not let agents self-tune relevance in production without human oversight.

- MCP security: a standard protocol is not a security policy. Apply least privilege on every tool, require human confirmation above thresholds, and log inputs and outputs for every tool call. Make policy evaluation explicit and reviewable.

These are manageable if you treat forks as first-class resources, retrieval as a product, and MCP as an enterprise surface with owners.
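The dual-embedding idea from the list above can be sketched as a store keyed by document and model version. Everything here is hypothetical, including the `EmbeddingStore` class, the version labels, and the tiny vectors; in Postgres this would more plausibly be a table with a model version column. The sketch shows the two properties that matter during a migration: queries only compare vectors from the same model, and you can measure how far the backfill has progressed.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class EmbeddingStore:
    def __init__(self) -> None:
        self._vectors: Dict[Tuple[str, str], List[float]] = {}

    def put(self, doc_id: str, model_version: str, vector: List[float]) -> None:
        self._vectors[(doc_id, model_version)] = vector

    def coverage(self, model_version: str) -> float:
        """Share of known documents that already have this model's embedding."""
        all_docs = {doc for doc, _ in self._vectors}
        done = {doc for doc, version in self._vectors if version == model_version}
        return len(done) / len(all_docs) if all_docs else 0.0

    def search(self, query: Sequence[float], model_version: str, top_k: int = 3) -> List[str]:
        # Never compare a query against vectors produced by a different model.
        scored = [
            (cosine(query, vec), doc)
            for (doc, version), vec in self._vectors.items()
            if version == model_version
        ]
        scored.sort(key=lambda pair: (-pair[0], pair[1]))
        return [doc for _, doc in scored[:top_k]]


store = EmbeddingStore()
store.put("doc-a", "v1", [1.0, 0.0])
store.put("doc-b", "v1", [0.0, 1.0])
store.put("doc-a", "v2", [0.9, 0.1, 0.0])   # backfill in progress
print(store.coverage("v2"), store.search([1.0, 0.1], "v1"), store.search([1.0, 0.0, 0.0], "v2"))
```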

What to watch as the ecosystem matures

- Better indexing in Postgres: expect more native vector indexes and smarter vacuum tuning for hybrid workloads. The tradeoff between recall and write throughput should narrow.

- Stronger MCP governance: standardized capability descriptors, portable audit metadata, and risk tiers will make endpoints easier to reason about in regulated environments.

- Fork-aware observability: tracing and metrics systems will learn to treat forks as first-class spans so teams can attribute latency and cost to specific jobs.

The bottom line

Agentic Postgres is a clear signal that agents are moving from toy demos to resilient, data-native systems. Copy-on-write forks deliver safe instant parallelism. Hybrid search inside Postgres removes a class of synchronization and latency problems. MCP endpoints turn schemas into discoverable, policy-gated skills.

You can ship this pattern in 2026 with parts that exist today. Start with retrieval. Add MCP for typed discovery and tool gating. Shift risky changes into forks and make promotions boring. The teams that turn forks, skills, and policy into muscle memory will set the pace for the next wave of agent-powered software.