From prompts to production: Caffeine’s autonomous app factory

Caffeine turns a plain English prompt into a live, shippable web app by coordinating specialist agents across planning, code, tests, and deployment. Explore how its factory model compresses launch cycles and where it still struggles.

What just launched and why it matters

On July 15, 2025, Dfinity opened early access to Caffeine, an agent-driven platform that turns a plain English prompt into a running web application that can be shipped to users in real time. The company framed Caffeine as a prompt-to-production system rather than a code generator, which implies first-class support for deployment, routing, identity, payments, logs, versioning, and security. In short, the claim is not better autocomplete. It is a coordinated set of agents that take a business goal and assemble the app, data, and infrastructure needed to deliver it. You can read the original announcement in Dfinity opened early access to Caffeine.

If you have watched the evolution from autocomplete to code copilots to hosted agent platforms, Caffeine is the next rung. Instead of a single assistant that suggests code, it orchestrates a crew of specialists. Imagine a film set rather than a solo videographer. One agent writes the product spec, another scaffolds front end and back end, another sets up authentication and data models, another writes tests, and another manages deployment. The user stays in the director’s chair, steering with prompts and corrections.

From copilots to autonomous factories

To see what changes, compare two workflows.

Traditional copilot workflow:

- You describe a feature to an engineer.

- The engineer writes code with a copilot’s help.

- Operations engineers set up infrastructure, domains, certificates, and deployment.

- Security reviews and tests run.

- You ship once all pieces fit.

Caffeine-style workflow:

- You describe the finished product and its constraints.

- Agents synthesize a plan, ask clarifying questions, and begin to build.

- Within the same environment, they generate code, data schemas, integration glue, and tests.

- They deploy to a runtime that is already integrated with identity, storage, and an app registry.

- You refine through conversation, like a product manager working with a multidisciplinary team that never sleeps.

This shift is not only about speed. It also moves the locus of coordination. Copilots improve developer throughput inside a function. Autonomous builders coordinate across functions. That is why the idea resonates with founders who juggle many roles.

For context on how multi-agent coding is becoming more native to developer tools, see how Cursor 2.0 makes agents native. Caffeine extends that direction by binding the builder to a runtime that can ship instantly.

How Caffeine compresses ship cycles

A useful mental model is a factory line that can be reconfigured mid-run. Each agent is a station that operates on artifacts. The station list changes with the job, but a typical sequence looks like this:

- Intake and scoping. The platform converts your prompt into a structured specification with user stories, data entities, non-functional requirements, and a deployment target. It surfaces ambiguities as questions and records decisions for traceability.

- Plan to graph. It converts the plan into a dependency graph of tasks. For example, build a marketplace front end, create contracts for listings and orders, integrate payments, and configure access control. The graph lets agents work in parallel without stepping on each other.

- Code and compose. Specialized code agents generate components and wire them together. They seed a test suite and basic observability with checks for uptime and key flows. Artifacts are versioned so you can roll back missteps.

- Deploy and iterate. The runtime hosts each component, exposes URLs, and keeps stateful services alive while you refine via prompts. Iterations are tracked as drafts you can revert with one command.
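The plan-to-graph step can be sketched in a few lines of Python. This is an illustration of the general technique, not Caffeine's implementation: the task names and dependencies are assumptions based on the marketplace example, and the scheduler is the standard library's `graphlib.TopologicalSorter`. Each batch it returns contains tasks with no unmet dependencies, which is what lets separate agents work in parallel.

```python
from graphlib import TopologicalSorter

# Hypothetical task graph for the marketplace example. Each key depends on
# the tasks in its set. The names are illustrative, not Caffeine's own.
TASKS = {
    "data_contracts": set(),
    "access_control": {"data_contracts"},
    "payments": {"data_contracts"},
    "front_end": {"data_contracts"},
    "tests": {"front_end", "payments", "access_control"},
    "deploy": {"tests"},
}


def parallel_batches(graph):
    """Group tasks into batches whose members have no unmet dependencies."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the plan is circular
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks that can run concurrently
        batches.append(ready)
        sorter.done(*ready)  # mark the batch finished to unlock dependents
    return batches


if __name__ == "__main__":
    for number, batch in enumerate(parallel_batches(TASKS), start=1):
        print(f"batch {number}: {', '.join(batch)}")
```

In this sketch the front end, payments, and access control all land in the same batch because they depend only on the data contracts. A real planner would also have to handle failed tasks and re-planning, which this omits.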

Time savings come from eliminating handoffs and environment drift. When planning, coding, testing, and deploying live in one fabric, fewer surprises appear at the edges. The factory does not become fast by forcing workers to type quicker. It becomes fast by removing the forklift rides between buildings.

Consider a two-person startup that wants to launch a niche peer-to-peer marketplace. In a classic setup they must choose a stack, scaffold services, set up user management, pick a database, write listing flows, wire payments, stand up a dashboard, and wrap it in a deployment pipeline. Even with a copilot, each stage introduces delays and context switches. With an autonomous builder, the pair can spend the morning specifying the market rules and the afternoon exercising a working alpha, then tighten the flows through guided edits. You still need product taste and domain expertise. You just spend more time deciding what to build and less time fighting pipelines.

Why the runtime is not a footnote

Caffeine does not only generate code. It ships to a decentralized runtime, the Internet Computer, where smart contracts called canisters can serve web applications directly to users. Dfinity previewed the alpha and proposed network expansion to support it in a developer update announcing the alpha. The event matters less than what it signals: the runtime is part of the product.

Consider three founder concerns.

- Cost predictability. On many commercial clouds you pay for compute, storage, bandwidth, and a zoo of services. Bills can spike during growth or misuse. The Internet Computer uses a resource model paid in cycles, which are pre-purchased and then consumed by compute and storage. That turns part of your variable cost into a known budget line. It does not remove cost risk, but it makes it observable and pre-funded.

- Sovereignty and lock-in. If your stack lives across proprietary services, you inherit their policies, outages, and pricing. On a decentralized runtime you deploy to a network governed by protocol rather than a single company. That gives you a different kind of control. You still depend on a community and a foundation, but not on the business priorities of one cloud vendor.

- Serving model. Canisters can serve front ends and back ends without a separate web host. That reduces glue code, eliminates a layer of deployment complexity, and makes end-to-end integrity easier to reason about. You can attest to the origin of the app that users see, which matters for high-trust consumer and enterprise scenarios.
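To make the pre-funded idea concrete, here is a toy model of a budget that is purchased up front and drawn down by usage. Everything in it is an assumption for illustration: the `CycleBudget` class, the unit costs, and the "halt" behavior are hypothetical and do not reflect the Internet Computer's actual pricing or APIs. The point is only that spend is bounded by the balance you funded and that a threshold can be checked on every charge.

```python
from dataclasses import dataclass, field


@dataclass
class CycleBudget:
    """Pre-funded balance drawn down by usage. Units are hypothetical."""

    balance: int                  # cycles purchased up front
    alert_fraction: float = 0.2   # warn when this share of the funding remains
    funded: int = field(init=False)

    def __post_init__(self):
        self.funded = self.balance  # remember the original top-up

    def charge(self, compute: int, storage: int) -> str:
        """Deduct usage and report 'ok', 'alert', or 'halt'."""
        cost = compute + storage
        if cost > self.balance:
            return "halt"  # assumption: work stops rather than overdrawing
        self.balance -= cost
        if self.balance <= self.funded * self.alert_fraction:
            return "alert"
        return "ok"


def days_of_runway(balance: int, daily_burn: int) -> float:
    """Estimate how long the remaining balance lasts at a steady burn rate."""
    if daily_burn <= 0:
        return float("inf")
    return balance / daily_burn


if __name__ == "__main__":
    budget = CycleBudget(balance=1_000)
    print(budget.charge(compute=300, storage=100))
    print(budget.charge(compute=350, storage=100))
    print(days_of_runway(budget.balance, daily_burn=50))
```

Contrast this with a post-paid bill, where the equivalent of `charge` never refuses and the total is only known after the fact.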

None of this makes the decentralized route a universal fit. Debugging, ecosystem libraries, and team familiarity still favor the commercial cloud for many teams. The claim is not that decentralized is cheaper or better for every case. The claim is that a native runtime tailored for agent-built apps can give certain founders cost visibility and control that are hard to match when stitching together many hosted services.

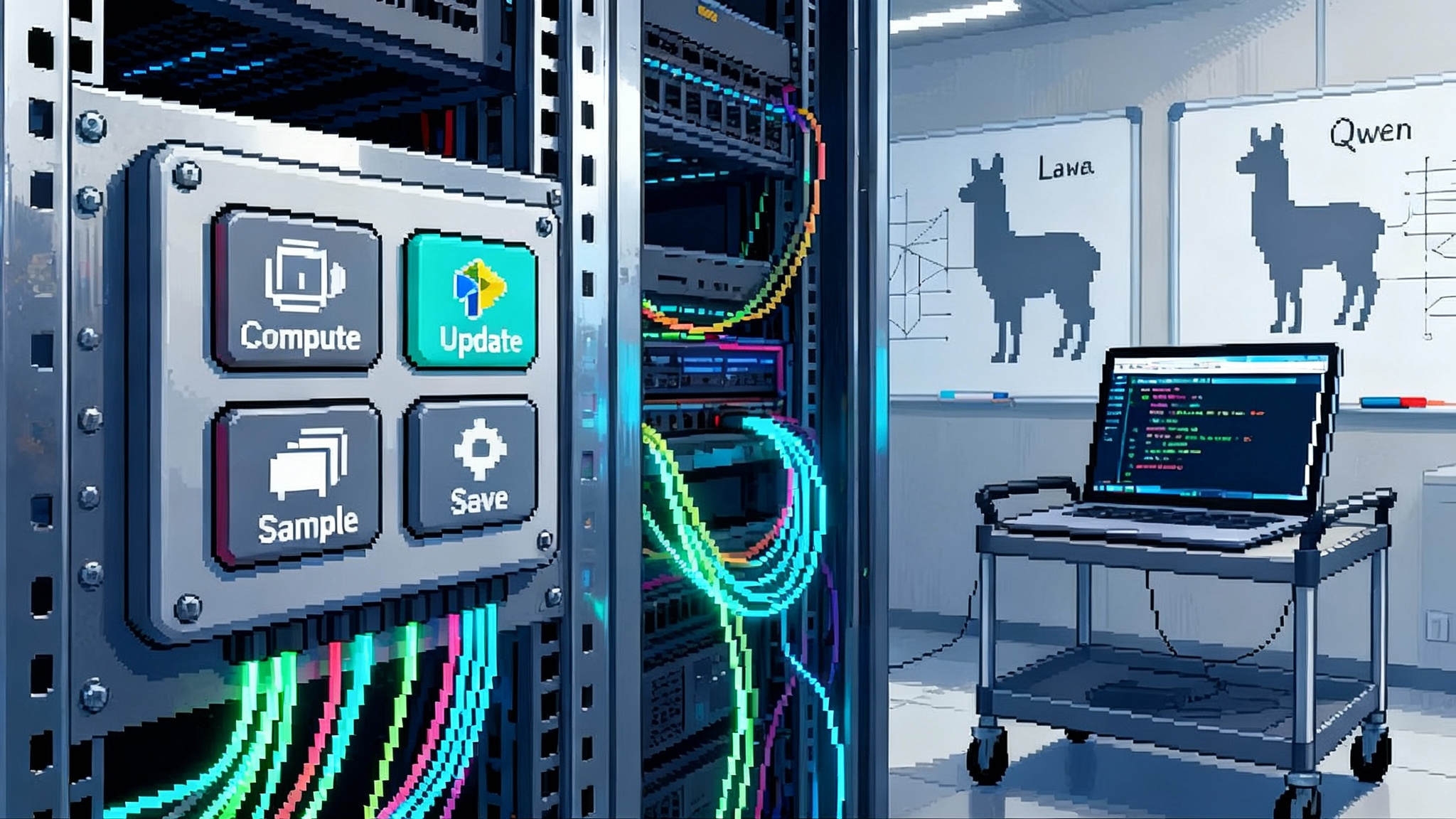

What the agent crew must do well

An app factory is only as good as its stations. Here are the jobs that matter most and how to judge them.

- Planner. Converts messy prompts into a precise plan, asks targeted questions, and keeps a log of decisions. You should see it identify non-functional requirements like page load goals, data retention, and access control.

- Front-end engineer. Produces accessible, responsive interfaces that are easy to restyle. Look for semantic HTML, clear state management, and testable components.

- Back-end engineer. Produces data models, transactional boundaries, and clear interfaces. It should avoid overfitting to one prompt by keeping domain logic modular.

- Integrator. Manages third-party services, secrets, and rate limits. It should propose safer defaults like OAuth for login, tokenized payments, and background jobs for heavy work.

- Tester. Generates unit tests and synthetic journeys for critical flows. It should alert when prompts risk breaking existing behaviors.

- Deployer. Manages environments, versioning, and rollback. It should snapshot data before migrations and annotate releases with prompt history.

When these stations coordinate, the cycle time shrinks. When they do not, you get fast code and slow shipping. Evaluate Caffeine or any agent platform by watching how the crew handles change. Ask it to add a refund workflow, then check whether tests, data, and UI update together.

Guardrails founders should require on day one

Autonomous builders are powerful, and power wants boundaries. Put these guardrails in place before you move beyond experiments.

- Human approvals for privileged actions. Require a short review before data migrations, permissions changes, or public deployments. The review should be inside the tool, not in a separate chat.

- Capability-based access. Give agents only the keys they need. Fine-grained permissioning for data tables, external APIs, and secret retrieval limits the blast radius of mistakes.

- Package hygiene and artifact signing. Pin dependencies, generate a software bill of materials, and verify signatures at build time. Supply chain incidents hide in generated code like they do in human code.

- Prompt hygiene. Treat anything an agent reads from outside, including responses to outbound calls, as untrusted input. Escape and filter wherever prompts cross security boundaries. The platform should make prompt injection attempts visible in logs.

- Policy as code. Encode privacy, retention, and data residency policies so the builder enforces them. If your rule says do not persist raw personally identifiable information, the platform should block schemas and code that violate it.

- Red team prompts. Maintain a catalog of adversarial prompts that try to extract secrets, skip tests, or disable logging. Run them as part of pre-deployment checks.

- Reproducible builds and rollback. You should be able to recreate a release from the same prompts and versioned models, and roll back with one command if metrics fall.

- Transparent cost meters. Show projected cycle or runtime spend per environment before shipping, and alert when usage crosses thresholds.

- Audit trail that humans can read. Store a narrative history of prompts, decisions, code diffs, and deploys. You will need this during incidents and audits.
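As one example of what policy as code could look like, the sketch below rejects a proposed schema that would persist raw personally identifiable information. It is a deliberately crude illustration under stated assumptions: the schema shape, the `storage` attribute, and the name-based pattern are all hypothetical, and a real check would need data classification rather than guessing from field names.

```python
import re

# Hypothetical policy: field names that suggest PII must not be persisted
# unless the schema marks them as hashed or tokenized.
PII_PATTERN = re.compile(r"(email|phone|ssn|birth|address)", re.IGNORECASE)
SAFE_STORAGE = {"hashed", "tokenized"}


def policy_violations(schema):
    """Return the 'table.field' names in `schema` that would store raw PII."""
    violations = []
    for table, fields in schema.items():
        for name, spec in fields.items():
            looks_like_pii = PII_PATTERN.search(name) is not None
            if looks_like_pii and spec.get("storage") not in SAFE_STORAGE:
                violations.append(f"{table}.{name}")
    return sorted(violations)


# Illustrative schema an agent might propose for the marketplace example.
PROPOSED_SCHEMA = {
    "users": {
        "id": {"type": "text"},
        "email": {"type": "text"},
        "phone": {"type": "text", "storage": "tokenized"},
    },
    "orders": {
        "shipping_address": {"type": "text"},
    },
}

if __name__ == "__main__":
    problems = policy_violations(PROPOSED_SCHEMA)
    if problems:
        print("blocked:", ", ".join(problems))
    else:
        print("schema passes policy")
```

Run as a gate before any migration, a check like this turns the written rule into something the builder cannot skip.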

If you want to see how enterprises are adopting approval workflows for agents, read about approval gated agents.

Where Caffeine may be strongest

Caffeine’s bet is that pairing an agent factory with a runtime designed to host it end to end yields a cleaner pipeline. The closer the builder is to the deployment substrate, the fewer seams you must seal. That can show up in three practical ways.

- Lower integration tax. If identity, storage, and serving live in one place, you spend less time wiring and more time shaping features. That is especially helpful for teams that cannot afford bespoke infrastructure.

- Tamper-evident deployments. A runtime rooted in a public protocol makes it easier to prove what code is serving your users. For regulated use cases, that can reduce vendor due diligence time.

- Predictable scaling steps. When your cost model ties directly to compute and storage units rather than a patchwork of services, you can plan go-to-market experiments with clearer breakpoints.

None of these benefits erase the need for good engineering judgment. They can, however, change the slope of your first six months.

Where it will struggle

Autonomous builders still hallucinate. They can choose poor abstractions, introduce subtle security issues, and struggle with nuanced domain logic. Platform vendors often demonstrate classic use cases like blogs, stores, and dashboards. The test for Caffeine will be less glamorous. Can it handle a gnarly enterprise integration with an idiosyncratic identity provider? Can it migrate a live schema without downtime? Can it keep a test suite green over weeks of prompt-led edits?

Teams will also encounter a cultural question. Who owns the app if the builder wrote most of it? The answer should be unambiguous. Your team owns the app and the data, and you should be able to export, self-host, or reproduce it. Push the vendor for a clean exit path. For a view on operationalizing agent builds into a business, see how Appy.AI turns agent demos into revenue.

A practical pilot plan for founders

If you want to try Caffeine without risking your roadmap, take a measured approach.

- Pick a scoped, non-critical product. A partner portal, a content site with light personalization, or an internal tool with known workflows.

- Write prompts like a product requirements document. Include outcomes, metrics, and constraints. Avoid underspecifying. The better the inputs, the better the outputs.

- Set a budget in cycles or runtime credits. Alert on spend. Treat this like any cloud pilot.

- Establish a review cadence. Twice a day, ship to a staging environment and test key flows with humans.

- Create a red team ritual. Before each release, run adversarial prompts and basic security scans. Track findings and fixes in the platform’s history log.

- Define your exit. Before you begin, verify that you can export code, data, and configuration. Document that process.

Run the pilot for two weeks. At the end, ask whether the agent crew handled change gracefully, whether the runtime kept bills predictable, and whether your team felt in control.

The competitive frame

Caffeine does not exist in a vacuum. GitHub Copilot and Cursor make individual developers faster. Replit and Vercel offer hosted environments that connect idea to running app with minimal ceremony. Several startups market autonomous coding agents. The difference here is the alignment between builder and runtime, and the ambition to handle production from the first prompt. If your priority is speed with control, that alignment is not cosmetic.

Two signals to watch during evaluation:

- Cross-cutting changes. Ask the platform to add a refund workflow, then observe whether tests, data models, UI, and deployment scripts all update in a single pass.

- Long-running correctness. Keep the app under iteration for a week. If the test suite stays green as prompts accumulate, the factory’s coordination is real.
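The cross-cutting check can be made mechanical if you can get a list of files changed by a prompt, for instance from an exported diff. The sketch below assumes a hypothetical project layout and simply reports which layers a change did not touch. The directory prefixes and file names are illustrative and would need to match your own export.

```python
# Hypothetical repository layout. Adjust the prefixes to the exported project.
LAYER_PREFIXES = {
    "backend/models/": "data_model",
    "frontend/": "ui",
    "tests/": "tests",
    "deploy/": "deploy",
}


def layer_of(path):
    """Map a changed file path to its layer, or None if it matches nothing."""
    for prefix, layer in LAYER_PREFIXES.items():
        if path.startswith(prefix):
            return layer
    return None


def missing_layers(changed_paths, required=frozenset(LAYER_PREFIXES.values())):
    """Return the required layers that the change set did not touch."""
    touched = {layer_of(path) for path in changed_paths}
    return sorted(required - touched)


if __name__ == "__main__":
    # Illustrative change set for a "add a refund workflow" prompt.
    refund_change = [
        "backend/models/refund.py",
        "frontend/RefundForm.tsx",
    ]
    print("layers not updated:", missing_layers(refund_change))
```

An empty result does not prove the change is correct. It only confirms that every layer moved, which is the first thing to look for when judging coordination.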

A quick note on governance

Agent platforms will become the control plane for many small companies. That entails new governance rituals.

- Treat prompts as code. Store them, review them, and version them.

- Treat agent capabilities as roles. Give finance agents only the keys for billing and reporting. Give engineering agents only the keys for deployments. Rotate those keys on a schedule.

- Treat the platform as a regulated vendor. Expect security questionnaires, uptime reports, and incident postmortems. Ask for them.

- Set a data boundary. Decide what data you will never send to a builder, and enforce that with gates in the platform rather than a policy document alone.
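Treating capabilities as roles amounts to a deny-by-default lookup, optionally combined with a human approval for the most sensitive actions. The following is a minimal sketch under assumptions: the role names, capability strings, and `authorize` function are hypothetical and are not part of any Caffeine API.

```python
# Hypothetical role-to-capability map. Anything not listed is denied.
ROLE_CAPABILITIES = {
    "finance_agent": {"billing:read", "reports:read"},
    "engineering_agent": {"deploy:staging", "deploy:production"},
}

# Capabilities that also need a named human approver.
APPROVAL_REQUIRED = {"deploy:production"}


def authorize(role, capability, approved_by=None):
    """Allow an action only if the role holds the capability and,
    where required, a human has approved it."""
    allowed = ROLE_CAPABILITIES.get(role, set())
    if capability not in allowed:
        return False  # deny by default, including unknown roles
    if capability in APPROVAL_REQUIRED and not approved_by:
        return False  # privileged action without a reviewer
    return True


if __name__ == "__main__":
    print(authorize("finance_agent", "billing:read"))
    print(authorize("finance_agent", "deploy:staging"))
    print(authorize("engineering_agent", "deploy:production"))
    print(authorize("engineering_agent", "deploy:production", approved_by="cto"))
```

Keeping the map in version control alongside your prompts gives reviewers one place to see what each agent can do.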

These habits keep the speed upside while making risks tractable.

The bottom line

The last decade moved from code to components to copilots. Caffeine pushes toward autonomous app factories that negotiate with us in plain language and carry work across the product, engineering, and operations boundary. The bet is that shipping becomes a few hours of crisp prompt writing and a handful of reviews rather than a few weeks of coordination overhead. The other bet is that a decentralized runtime gives founders cost visibility and control that commercial clouds do not always deliver.

Both bets are testable. Pick a small product, wire your guardrails, and measure. If the factory line holds under change, you have a new way to build. If not, you learned cheaply and can fall back to familiar tools. Either way, the line between idea and production just moved closer to the idea.