Publishers Seize AI Search as Gist Answers Goes Live

ProRata's September 2025 launch of Gist Answers moves AI search from aggregators to publisher sites. With licensed corpora, answer-aware ads, and shared revenue, operators gain control over trust, traffic, and monetization.

The breakthrough moment

On September 5, 2025, ProRata launched Gist Answers, a product that lets publishers place an AI answer box directly on their own sites. The timing matters. For two years, the internet has watched large language model results siphon attention to third-party aggregators. Gist Answers flips that flow. It lets a news outlet, a magazine, or a niche publisher keep the AI interaction on its page, powered by a corpus it controls and contributes to, and monetized through a native ad unit that sits beside the answer.

This is not a lab demo. ProRata paired the launch with fresh funding and said its licensed content network now spans hundreds of reputable publications worldwide. Think of recognizable names such as The Atlantic, Boston Globe Media, Vox Media, Fortune, and New York Magazine. The pitch is simple. Publishers contribute licensed content to a shared index, receive attribution and a cut of revenue, then embed a white-label answer experience that speaks in the voice of the brand.

Look closely and you can see the next phase of AI search forming. The internet is moving from a scrape era to a license era, and from off-site answers to on-site answers. The control surface is shifting from aggregators to operators.

From scrape to license

In the scrape phase, a model trained on unlicensed material generates a response, often without permission or attribution. Traffic accrues to the tool that hosts the model, not to the sources. In the license phase, training and retrieval use licensed material. The answer box lives on the publisher’s domain, not on someone else’s platform. Readers stay on the page, see the masthead, and interact with the brand.

This shift changes three levers at once:

- Distribution: The session begins and stays on the publisher site. Search behavior blends with reading behavior, which resets the funnel for newsletters, registrations, and subscriptions.

- Economics: The publisher now sells ads in the answer surface and shares in network revenue from licensed content. Monetization is tied to intent captured in context, rather than a referral from a general search engine.

- Compliance: Content owners opt in to training and retrieval, get attribution, and can audit usage. That reduces legal risk for everyone involved.

How the economics flip

In traditional search, publishers spend to win a click and then hope to monetize with a pageview. In answer-first experiences, the valuable moment is the response itself. It contains the structured summary, recommended links, and suggested follow-ups. Gist Answers adds a new surface that publishers can price on a different axis. Instead of generic display ads, you get answer-aware placements that align with the question and the evidence used to derive the response.

Imagine a local newspaper where a reader asks, “What are the best after-school programs near me?” The answer draws on the paper’s vetted listings and recent reporting. Next to it sits a sponsored placement for a nearby learning center, shown because the model’s retrieval step pulled that center’s open house announcement into the context window. Pricing can evolve from cost per thousand impressions to cost per qualified conversation or cost per guided click, because the system knows which passages informed the answer and what the reader asked next.

The money flow changes too. A publisher that licenses content to the network can earn when its articles fuel an answer on another site. That is off-site revenue. On-site, the same publisher can earn again from native placements around its own answer box or from subscription prompts that appear when a response references premium articles. The idea is to turn AI answers from a leakage point into a flywheel of first-party engagement and diversified revenue.

What Gist Answers changes for readers

Readers do not want a chatbot that shrugs. They want a source-grounded answer they can trust, with clear links to dig deeper. Gist Answers is designed to retrieve from licensed sources, cite those sources in-line, and reflect the host brand’s style guide. The result should feel less like talking to a generic model and more like asking a well-briefed editor who can summarize, point to evidence, and offer next steps.

Two details matter for experience design:

- Suggested follow-ups: After an answer, offer two or three high-yield prompts that map to your site architecture. For a travel publisher, that might be “Three-day Kyoto itinerary,” “Best neighborhoods to stay,” and “Seasonal festivals calendar.” These suggestions convert a single question into a session.

- Guarded ambiguity: When the answer is uncertain, say so. Then show the sources retrieved and ask the reader which direction to explore. It builds trust and gives the brand permission to recommend a newsletter, an app, or a booking widget.
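
The guarded-ambiguity pattern above can be sketched in a few lines. This is a minimal illustration, not how Gist Answers works: the `Draft` type, the `confidence` score, and the 0.6 threshold are all assumptions made for the example, and a real system would need its own calibrated signal.

```python
from dataclasses import dataclass


@dataclass
class Draft:
    text: str
    confidence: float      # hypothetical score in [0, 1]; how it is computed is out of scope
    sources: list[str]     # titles of the passages retrieved for this answer


def render(draft: Draft, threshold: float = 0.6) -> str:
    """Return the answer as-is, or an explicit low-confidence disclosure."""
    if draft.confidence >= threshold:
        return draft.text
    # Below the (assumed) threshold: say so, show the sources, ask for direction.
    source_list = "\n".join(f"- {s}" for s in draft.sources)
    return (
        "We are not certain about this one. Here is what we found:\n"
        f"{source_list}\n"
        "Which of these would you like to explore?"
    )
```

The design choice is that the fallback still shows retrieved sources, so a weak answer becomes a navigation step rather than a dead end.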

The production stack pattern

Gist Answers is a strong reference design for how on-site AI search will ship across media. Whether you adopt ProRata, build in-house, or assemble with a vendor mix, the architecture will look similar. Here is a practical blueprint.

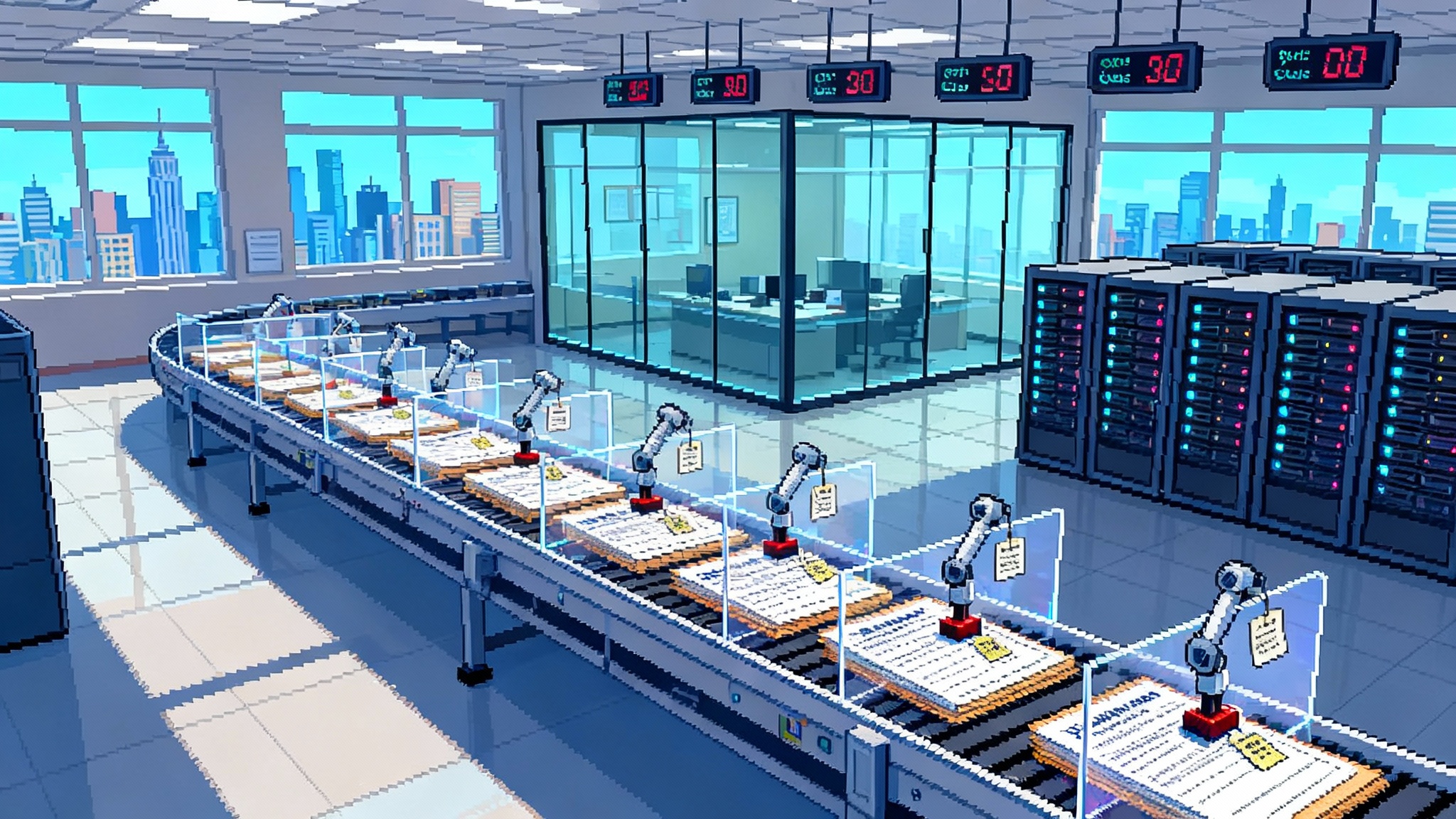

1) Corpus ingestion and licensing

- Contracted feeds from your content management system and archives, with rights metadata attached at the document and paragraph level.

- A connector to a licensed external network that expands breadth and freshness for retrieval while honoring usage terms and revenue share.

- Content fingerprinting to track which fragments appear in answers, so compensation and analytics are accurate.
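
As a rough sketch of the ingestion step above, paragraph-level fingerprinting can start with a normalized hash per paragraph, stored alongside rights metadata. The `Fragment` fields and the rights labels are hypothetical, chosen only for illustration. Exact hashing like this catches verbatim reuse only; tracking paraphrased fragments would need a fuzzier technique.

```python
import hashlib
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Fragment:
    publisher: str         # hypothetical rights-holder identifier
    doc_id: str
    paragraph_index: int
    rights: str            # illustrative label, e.g. "retrieval-only"
    fingerprint: str


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting changes hash the same."""
    return re.sub(r"\s+", " ", text).strip().lower()


def fingerprint(text: str) -> str:
    """SHA-256 hex digest of the normalized paragraph."""
    return hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()


def ingest(publisher: str, doc_id: str, body: str, rights: str) -> list[Fragment]:
    """Split a document on blank lines and fingerprint each non-empty paragraph."""
    paragraphs = [p for p in body.split("\n\n") if p.strip()]
    return [
        Fragment(publisher, doc_id, i, rights, fingerprint(p))
        for i, p in enumerate(paragraphs)
    ]
```

With fragments stored this way, a quoted passage in an answer can be looked up by its fingerprint to find the publisher and the usage terms attached to it.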

2) Retrieval-augmented generation

- Retrieval-augmented generation means the model does not answer from memory alone. It first retrieves relevant passages from your index, then synthesizes a response that quotes, paraphrases, and cites. Expect hybrid retrieval that combines keyword search, semantic vectors, and link structure signals.

- Caching for hot questions. If a city election is tonight, the same three questions will repeat. Cache responses with short time-to-live settings so costs stay flat while facts stay fresh.
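
The hot-question cache described above can be sketched as a small time-to-live store. The class name, the key normalization, and the injectable clock are assumptions made for this example; a production deployment would more likely use a shared cache service than an in-process dictionary.

```python
import time
from typing import Callable


class TTLCache:
    """Cache answers for a short window so repeated hot questions are generated once."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so the expiry logic can be tested
        self._store: dict[str, tuple[float, str]] = {}

    def get_or_compute(self, question: str, compute: Callable[[str], str]) -> str:
        # Normalize case and whitespace so near-identical phrasings share a key.
        key = " ".join(question.lower().split())
        now = self.clock()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]
        answer = compute(question)  # the expensive retrieval and generation call
        self._store[key] = (now, answer)
        return answer
```

A short TTL is the trade-off the text describes: on election night the same question is answered from cache for a minute or so, then regenerated against fresh results.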

3) Model tiering and safety

- Use a stack of models. A fast, smaller model handles routing and classification. A larger, more capable model handles complex synthesis. Model choice can depend on query intent, risk, and monetization potential.

- Guardrails that block unsafe topics, enforce house style, and control claims. For news, use explicit rules that require a citation for any factual statement about a living person or an ongoing legal matter.
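
A minimal sketch of tiering and one guardrail follows. Everything here is illustrative: the tier names, the topic labels, and the word-count heuristic are assumptions, and in practice the routing decision would come from the small classifier model rather than hand-written rules.

```python
from dataclasses import dataclass, field

# Hypothetical topic labels that always route to the larger model.
HIGH_RISK_TOPICS = {"legal", "health", "elections"}


@dataclass
class Sentence:
    text: str
    about_living_person: bool = False
    citations: list[str] = field(default_factory=list)


def choose_tier(query: str, topic: str) -> str:
    """Return "small" or "large" (placeholder tier names)."""
    if topic in HIGH_RISK_TOPICS:
        return "large"
    # Crude complexity proxy standing in for a real intent classifier.
    words = query.lower().split()
    if len(words) > 12 or "compare" in words or "versus" in words:
        return "large"
    return "small"


def uncited_claims(sentences: list[Sentence]) -> list[str]:
    """Guardrail: flag statements about a living person that carry no citation."""
    return [s.text for s in sentences if s.about_living_person and not s.citations]
```

An answer with a non-empty `uncited_claims` result would be blocked or sent back for regeneration, which is one way to make the citation rule for news enforceable rather than aspirational.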

4) Policy and explainability

- Policy engines allow editors to declare what is in-bounds and who is authoritative. For example, on medical coverage, require that responses prefer licensed medical sources and never invent dosing guidance.

- Token-level or passage-level attribution that shows which sources informed the answer. This supports revenue sharing and gives readers confidence.
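
To make the policy and attribution ideas concrete, here is a small sketch that filters retrieved passages against a topic allowlist and then splits credit by passage length. Both rules are assumptions for illustration. The source does not say how ProRata computes shares, and a strict allowlist is a simplification of "prefer licensed medical sources."

```python
from collections import defaultdict
from dataclasses import dataclass
from fractions import Fraction

# Hypothetical editorial policy: topic -> source types allowed in answers.
POLICY = {"medical": {"licensed_medical"}}


@dataclass
class Passage:
    publisher: str
    source_type: str
    tokens: int  # length of the passage that informed the answer


def apply_policy(topic: str, passages: list[Passage]) -> list[Passage]:
    """Keep only passages whose source type is in-bounds for the topic."""
    allowed = POLICY.get(topic)
    if allowed is None:  # no rule declared for this topic: allow everything
        return list(passages)
    return [p for p in passages if p.source_type in allowed]


def attribution_shares(passages: list[Passage]) -> dict[str, Fraction]:
    """Split credit across publishers in proportion to tokens contributed."""
    totals: dict[str, int] = defaultdict(int)
    for p in passages:
        totals[p.publisher] += p.tokens
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {pub: Fraction(t, grand_total) for pub, t in totals.items()}
```

Exact fractions are used so that shares always sum to one, which matters once they feed a payout reconciliation.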

5) Observability and evaluation

- Observability means you can see what the system did. Log every retrieval set, generation trace, and ad decision, then roll up by topic and by source.

- Continuous evaluation loops measure answer quality and business impact. Benchmarks should cover factual precision, helpfulness, citation coverage, and safety. Tie those to engagement, subscription conversion, and ad performance.
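
One way to picture the logging described above is a structured trace per answer, written as a JSON line and rolled up later. The field names are hypothetical, not a schema from any vendor.

```python
import json
from collections import Counter
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class AnswerTrace:
    query: str
    topic: str
    retrieved_sources: list[str]  # everything the retrieval step returned
    cited_sources: list[str]      # the subset the answer actually cited
    model_tier: str
    ad_unit: Optional[str]        # None when no placement was shown
    latency_ms: float


def to_log_line(trace: AnswerTrace) -> str:
    """Serialize one trace as a single JSON line."""
    return json.dumps(asdict(trace), sort_keys=True)


def citations_by_source(log_lines: list[str]) -> Counter:
    """Roll up how often each source was cited across logged answers."""
    counts: Counter = Counter()
    for line in log_lines:
        counts.update(json.loads(line)["cited_sources"])
    return counts
```

Logging the full retrieval set next to the cited subset makes it possible to ask later which sources were retrieved but never used, a useful input for both evaluation and revenue-share disputes.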

6) Monetization surfaces

- Answer-aware placements that can take the form of a native card, a product carousel, or a unit that expands when the reader hovers over a cited source.

- Calls to action that match intent. If the question is about “best running shoes for flat feet,” the next step could be a size quiz, a gear guide, or a retailer’s offer that meets your commerce rules.
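
An answer-aware placement can be sketched as a match between a sponsor's keywords and the passages retrieved for the answer. This is a toy keyword-overlap matcher under assumed names (`Placement`, `pick_placement`); real ad selection would involve auctions, pacing, and commerce rules not modeled here.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Placement:
    sponsor: str
    keywords: set[str]
    excluded_topics: set[str] = field(default_factory=set)  # advertiser brand-safety list


def pick_placement(
    topic: str, retrieved_passages: list[str], placements: list[Placement]
) -> Optional[Placement]:
    """Choose the eligible placement whose keywords best overlap the retrieval context."""
    context_terms = {
        word.strip(".,?!").lower()
        for passage in retrieved_passages
        for word in passage.split()
    }
    best: Optional[Placement] = None
    best_score = 0
    for placement in placements:
        if topic in placement.excluded_topics:
            continue  # the advertiser marked this topic as off-limits
        score = len(placement.keywords & context_terms)
        if score > best_score:
            best, best_score = placement, score
    return best  # None means no placement matched the context
```

Returning `None` when nothing overlaps is deliberate: an unrelated ad beside a grounded answer undercuts the trust the answer is meant to build.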

7) Governance and brand alignment

- Brand voice templates so the model sounds like your publication. Include style decisions such as sentence length, directness, and whether to speak in first or third person.

- Audit trails for compliance teams, including a history of answers that reference premium archives or sensitive reporting.

A short primer on success metrics

If AI search is on-site, the KPIs must also be on-site. Define and track the following, then share the dashboard weekly with editorial, product, and sales.

- Answer satisfaction rate: The percentage of sessions where the reader stops searching and begins exploring suggested follow-ups.

- Citation coverage: The percentage of answers that include at least two citations to licensed sources.

- Revenue per answer: Incremental ad and subscription revenue attributable to the answer surface.

- Latency and cost: Median response time and cost per answer by query class, with a goal of keeping retrieval sub-second and unit economics predictable.

- Escalation rate: The percentage of sessions that require a human editor for sensitive topics.
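
The metrics above can be computed from a simple event log. The sketch below assumes one event per answered session and uses hypothetical field names. In particular, treating "clicked a follow-up and did not search again" as satisfaction is one reading of the definition above, not a standard.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class AnswerEvent:
    citations: int            # citations to licensed sources in the answer
    clicked_follow_up: bool
    searched_again: bool
    revenue: float            # incremental ad plus subscription revenue attributed
    latency_ms: float
    escalated: bool           # required a human editor


def kpis(events: list[AnswerEvent]) -> dict[str, float]:
    """Compute the dashboard metrics over a batch of answer events."""
    n = len(events)
    if n == 0:
        raise ValueError("no events to score")
    return {
        "answer_satisfaction_rate": sum(
            e.clicked_follow_up and not e.searched_again for e in events
        ) / n,
        "citation_coverage": sum(e.citations >= 2 for e in events) / n,
        "revenue_per_answer": sum(e.revenue for e in events) / n,
        "median_latency_ms": median(e.latency_ms for e in events),
        "escalation_rate": sum(e.escalated for e in events) / n,
    }
```

Running the same function per query class or per topic gives the breakdowns that editorial, product, and sales will each want from the weekly dashboard.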

The land grab is on

Expect to see answer widgets across top publishers by early 2026. Why that fast? First, consent-based licensing reduces legal drag. Second, implementation is now a snippet of code rather than a long integration. Third, the early revenue signal from intent-rich placements is stronger than the signal from display ads on many pages, especially for product discovery, local services, education, travel, and business software.

The real bottleneck will be go-to-market discipline. The first wave of publishers will treat AI answers like a new section of the site, with ownership, metrics, and a tight test-and-learn loop. Those teams will win distribution on search-heavy pages, then roll out to the homepage, live blogs, and evergreen hubs. The laggards will wait for perfect safety or perfect measurement. By the time they decide, the query space they cared about will be claimed by peers who trained the model on their style, built a following, and tuned the reply suggestions that keep readers engaged.

What smart startups can ship now

This new surface needs a lot of infrastructure. The openings are concrete:

- Evaluation and replay: Build tools that score answers against ground truth, let editors replay a bad answer with a new policy, and schedule automated audits. Provide red team templates for legal, health, finance, and elections.

- Rights registry and attribution: Offer a neutral ledger that resolves which publisher owns which fragment, tracks cross-site usage, and reconciles payouts. Support paragraph-level hashing and human adjudication for ties.

- Policy orchestration: Give publishers a visual policy builder that maps topics to source allowlists, tone settings, and risk thresholds. Provide a real-time preview of how a policy change would alter an answer.

- Answer-aware ad tech: Create a marketplace for units that read retrieval context and match the claim with the creative. Support brand safety by excluding topics or sources that advertisers mark as off-limits.

- Cross-publisher analytics: Roll up insight on what people ask, which sources win retrieval, and what follow-ups convert. Package that for editorial planning and for sales decks.

- Measurement plumbing: Provide standardized events, cohort analysis, and incrementality tests that isolate the lift from answer surfaces. Help teams decide when to place a sponsor unit versus a paywall prompt.

If you want a crisp starting point, ship an evaluation harness that any publisher can drop in. Return a weekly report that scores truthfulness, citation coverage, response latency, ad visibility, and revenue per answer. Make it easy to benchmark against similar publishers while preserving privacy.

For teams building agent systems in adjacent domains, the same discipline applies. We covered how agent platforms turn demos into real businesses in our look at turning agent demos into revenue. The analytics side of the stack is already evolving toward automated quality checks and semantic instrumentation, as seen in agentic analytics on Cube D3. On the vertical side, Harvey's agent fabric shows how policy and workflow can be codified for specialist use cases.

What media operators should do next

Speed to integrate and speed to measure are the new moats. Here is a punch list for the next 90 days.

- Inventory your corpus and rights: Tag archives with rights metadata. Identify premium collections that can be selectively exposed to the answer engine for subscribers.

- Choose an integration path: Pilot with an out-of-the-box product such as Gist Answers or scope a vendor-supported build. The goal is a live test on real traffic in under four weeks.

- Instrument everything: Log query text, retrieval sources, answer length, citations, ad impressions, and follow-up clicks. Use these to define revenue per answer and subscription conversion per answer.

- Configure policy guardrails: Establish topic-specific rules and a default fallback when confidence is low. Ensure legal, editorial, and ad ops sign off on the same playbook.

- Calibrate brand voice: Provide a style template and a small set of gold answers edited by your best editors. Use these as a reference set for tuning and evaluation.

- Set a revenue plan: Bundle sponsorships around high-volume answer hubs. Package audience segments based on question themes. Price tests weekly, not quarterly.

Risks to manage, not reasons to wait

- Hallucinations and drift: Even with retrieval-augmented generation, models can make mistakes or overstate certainty. Mitigate with strict citation requirements, low-confidence disclosures, and editor-in-the-loop workflows for sensitive topics.

- Misattribution: If multiple sources feed an answer, who gets credit and revenue share? Solve with fragment-level fingerprinting and clear reconciliation rules.

- Traffic cannibalization: A summary can reduce clicks to articles. Counter by designing answers that include suggested deep dives, interactive tools, and short pull quotes that tease the full story available to subscribers.

- Privacy and consent: On-site answers are first-party interactions. Treat them that way. Honor regional privacy laws and give readers control over personalization of answers and ads.

The bigger implication

When answers live on publisher sites and the training set is licensed, the incentives change. The best content is no longer a free raw material for someone else’s model. It becomes a priced input to a shared index and a flywheel that rewards the original reporter or reviewer. Ad tech evolves from spraying banners to placing sponsored guidance at the exact moment a reader is making sense of a topic.

There is still hard work ahead. Policy will need to evolve. Benchmarks will need to mature. Editors will need new muscle memory for writing prompts and for supervising models. But the direction is set. September 2025 will be remembered as the moment AI answers left the aggregator and came home to the masthead.

The opportunity is plain. If you build, ship tools that help teams evaluate, attribute, and monetize answers. If you operate, stand up an on-site answer box, measure it ruthlessly, and tune it every week. The next winners in media will not be the first to deploy a model. They will be the first to turn an answer into a trustworthy product and a profitable business.