Voice agents hit prime time with Hume’s Octave 2 and EVI 4-mini

Hume AI's Octave 2 and EVI 4-mini mark the moment real-time voice agents move from demo to production. See why sub-second, interruptible conversation is the UX unlock and how to ship safer, faster systems.

The week voice went from cool demo to production plan

Real-time voice agents have hovered at the edge of usefulness for years. The voice sounded good, but the conversation did not. Latency stacked up. Turns collided. People waited, interrupted, and then waited again. This week, that changed. With Octave 2 for text-to-speech and EVI 4-mini for speech-to-speech, Hume AI shipped pieces that make live conversation feel natural and controllable.

Hume describes the new release in its Octave 2 launch overview and lays out how EVI 4-mini pairs with it in its overview of EVI 4-mini capabilities. The short version for builders is simple: sub-second start to first audio, coupled with true barge-in, moves voice agents from novelty to production.

Why sub second and interruptible is the unlock

Humans notice delays in tight bands. We tolerate a short pause while someone breathes, but not a long gap that makes us wonder if the line dropped. Likewise, we interrupt each other constantly and expect the other person to stop, listen, and pick up the new thread without confusion.

Two mechanics change everything:

- Start to first audio under one second. When an agent speaks within a heartbeat after you stop, you stay engaged. You do not look for a spinner or a transcript. You keep talking.

- Barge-in that actually works. Interruptions are not a pause button. The system has to stop cleanly, update context to the last spoken token, and route the partial utterance back into the language model so the next sentence is relevant.

When those two conditions hold, a voice agent stops feeling like a voice user interface and starts feeling like a person paying attention.

What changed with Octave 2

Octave 2 is designed as a text-to-speech engine that behaves like a careful performer rather than a generic narrator. Hume positions it as faster and more controllable than its predecessor. For product teams, three shifts matter most:

- Speed you can design around. If speech can begin in roughly 200 milliseconds, you can play short backchannel cues like "yes," "got it," or "let me check" while the longer answer streams. That makes the wait feel shorter and keeps the user in the conversation.

- Deeper control of pronunciation and style. Phoneme-level editing and voice conversion allow precise fixes when a brand name, a medication, or a place name comes out wrong. Instead of prompting your way around mispronunciations, you fix the sound itself.

- Multilingual reach with a stable identity. The same voice can carry across languages. That reduces friction in support and care settings where a single call may switch between English and Spanish, or between English and Hindi, without losing the voice's identity.

Think of Octave 2 as moving from a good audiobook reader to a voice actor who understands timing, stress, and intention.

What EVI 4-mini adds to the stack

EVI 4-mini is a speech-to-speech interface that listens, detects when to speak, and answers in a natural voice while delegating language generation to your chosen large language model. It coordinates timing, prosody, and turn-taking, so your code can focus on tools and policy. Hume’s documentation highlights that EVI 4-mini is always interruptible and supports a growing set of languages in one loop.

If you have ever stitched together a streaming transcriber, a text model, and a separate text-to-speech engine, you know the failure modes. Audio overlaps. Replies continue after the user starts talking. The gap between turns is inconsistent. EVI 4-mini consolidates those edges into one control plane.

A simple mental model for the event loop

You can run a production-ready loop without exotic architecture:

- EVI streams user audio with timestamps and emits a signal when it detects end of turn.

- Your service assembles context and calls a language model with any tools required.

- EVI turns the returned text into speech with Octave 2 and streams it back. If the user interrupts, EVI stops instantly and emits the partial utterance so your service can adapt.

This separation is a feature. It lets you change how the agent thinks without changing how it speaks, and vice versa. It also makes observability cleaner, which matters when you are debugging the difference between a 400-millisecond turn and a 1.4-second one.
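To make the separation concrete, here is a minimal Python sketch of the service side of that loop. The event names (`end_of_turn`, `interrupted`), their fields, and the `call_language_model` stub are hypothetical placeholders, not Hume's EVI message schema. The point is the shape of the handoff: append the user turn, ask the model for text, and on interruption keep only the words the user actually heard.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    # (role, text) pairs; assistant entries hold only what the user heard.
    history: list = field(default_factory=list)


def call_language_model(history):
    """Hypothetical stand-in for your LLM call (and any tool use)."""
    last_user = next(text for role, text in reversed(history) if role == "user")
    return f"You said: {last_user}"


def handle_event(convo, event):
    """Route one event from the speech layer; return text to speak, or None.

    Event names and fields are assumptions for illustration only.
    """
    if event["type"] == "end_of_turn":
        convo.history.append(("user", event["transcript"]))
        reply = call_language_model(convo.history)
        convo.history.append(("assistant", reply))
        return reply  # the speech layer synthesizes and streams this text
    if event["type"] == "interrupted":
        # Replace the drafted reply with the partial utterance actually spoken.
        if convo.history and convo.history[-1][0] == "assistant":
            convo.history[-1] = ("assistant", event["spoken_so_far"])
    return None


if __name__ == "__main__":
    convo = Conversation()
    print(handle_event(convo, {"type": "end_of_turn", "transcript": "Where is my order?"}))
    handle_event(convo, {"type": "interrupted", "spoken_so_far": "You said"})
    print(convo.history)
```

Because the handler only exchanges text with the speech layer, you could swap the model behind `call_language_model` without touching any voice settings.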

The latency budget you can actually hit

Latency is no longer a fuzzy concept. You can plan it. An example budget for start to first audio might look like this on a typical web or mobile session:

- Endpointing and turn detection: 120 to 200 milliseconds.

- Language model draft time: 300 to 600 milliseconds for a short sentence when tools are not needed.

- First token to speech onset: 100 to 250 milliseconds when Octave 2 starts speaking as text streams.

- Transport overhead: 80 to 150 milliseconds depending on the network path.

That puts many turns in the 600 to 1,200 millisecond range, with the best turns landing well under a second. You can shape the perceived experience further by pre-rendering micro-cues and caching common openings like "I can help with that" or "One moment while I look."
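Because the budget is just a sum of stage ranges, it is worth keeping as code next to your dashboards. This Python sketch uses the example ranges above (the stage keys are our own labels) to compute the best and worst case, and to show how much time the language model can take if every other stage hits its worst case and you still want to land under one second.

```python
# Example (min_ms, max_ms) ranges per stage, taken from the budget above.
LATENCY_BUDGET_MS = {
    "endpointing": (120, 200),
    "llm_draft": (300, 600),
    "first_token_to_speech": (100, 250),
    "transport": (80, 150),
}


def budget_range(budget):
    """Return (best_case_ms, worst_case_ms) for start to first audio."""
    best = sum(low for low, _ in budget.values())
    worst = sum(high for _, high in budget.values())
    return best, worst


def headroom_for(stage, budget, target_ms=1000):
    """Time left for `stage` if every other stage hits its worst case."""
    others = sum(high for name, (_, high) in budget.items() if name != stage)
    return target_ms - others


print(budget_range(LATENCY_BUDGET_MS))               # (600, 1200)
print(headroom_for("llm_draft", LATENCY_BUDGET_MS))  # 400
```

With worst-case endpointing, speech onset, and transport, the model has about 400 milliseconds to produce its first sentence, which is why short openings and cached cues matter.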

Safer voice design beats open-ended cloning

Octave 2 supports short-sample voice cloning. That is valuable for personalization and for reading long content with a consistent voice, but it raises obvious risks in the wild. Production teams do not need to open that door to deliver value.

A safer pattern is voice design. Create distinctive synthetic voices with documented style, range, and pronunciation rules. Give them clear names and visible disclosure that they are artificial. Lock configuration behind version control. Keep personal voice cloning behind explicit consent, with logging and human review for sensitive contexts like finance or health.

Treat voices like a brand system, not like a novelty feature. Your goal is trust across millions of interactions, not a one-time wow.
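One way to treat voices as a versioned brand system is to keep each voice as a reviewed configuration object and run a policy check in CI before it ships. The sketch below is hypothetical: the `VoiceProfile` fields and `validate_profile` rules are our own illustration of the practices above, not Hume's configuration format.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class VoiceProfile:
    # Hypothetical schema; store it in version control and review every change.
    name: str
    version: str
    disclosure: str  # spoken at session start, e.g. "I'm an AI assistant."
    style_notes: str = ""
    pronunciations: dict = field(default_factory=dict)  # term -> respelling
    cloned_from_person: bool = False
    consent_record_id: Optional[str] = None  # required for any cloned voice


def validate_profile(profile: VoiceProfile) -> list:
    """Return policy violations; an empty list means the profile may ship."""
    problems = []
    if not profile.disclosure.strip():
        problems.append("missing artificial-voice disclosure")
    if profile.cloned_from_person and not profile.consent_record_id:
        problems.append("cloned voice without recorded consent")
    if not profile.version:
        problems.append("missing version")
    return problems
```

Running the check on every pull request makes a missing disclosure or an unconsented clone a failed build rather than a production incident.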

The builder’s playbook for three real deployments

Below is a field-tested approach for teams that want measurable latency, explicit control, and compliance from day one.

1) Telephony agents that do not feel robotic

- Call routing and audio bridge. Use your telephony provider to receive and place calls, then bridge audio to EVI 4-mini. Compressed audio and extra network hops add latency compared with WebRTC, so tune your backchannel cues accordingly.

- Immediate acknowledgments. Pre-synthesize micro-cues like "mm-hmm" or "I am here and ready" and play them as soon as you detect the end of the caller's turn. This buys your language model a second to think without dead air.

- Smart barge-in. Configure EVI to stop synthesis instantly when a caller interjects. Route the partial utterance and the current state to your model with a tag that the last answer was interrupted, so you do not generate apologies for an answer no one heard.

- DTMF and fallback paths. Offer touch tone fallbacks for account lookups or payments. Provide a short text alternative for noisy lines where speech intent is unclear. Do not trap callers in voice-only flows.

- Measure the four numbers. Track start to first audio, average stop time on interjection, average reply length in seconds, and successful task completion without human escalation. These reveal pacing issues fast.

- Consent and disclosure. The greeting should identify the agent as artificial and explain recording clearly. For outbound calls in the United States, document prior written consent and make opt-out easy.
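The smart barge-in step is mostly bookkeeping. This Python sketch assumes the bridge reports the partial utterance the caller actually heard, and it uses a generic role/content message list rather than any particular vendor's format; the `[interrupted]` tag wording is our own. It records only the heard portion as the assistant turn and tells the model what went unheard, so the next reply neither apologizes for nor repeats content the caller never received.

```python
def context_after_barge_in(history, full_reply, heard_text, caller_text):
    """Rebuild model input after a caller interrupts the agent mid-reply.

    history: prior messages as {"role": ..., "content": ...} dicts (assumed format).
    full_reply: the text the agent had drafted.
    heard_text: the partial utterance reported as actually spoken.
    caller_text: what the caller said when they cut in.
    """
    # Work out what the caller never heard; fall back to the whole reply
    # if the partial transcript does not line up with the draft.
    if full_reply.startswith(heard_text):
        unheard = full_reply[len(heard_text):].strip()
    else:
        unheard = full_reply

    messages = list(history)
    # Record only what was actually spoken as the assistant turn.
    messages.append({"role": "assistant", "content": heard_text})
    messages.append({
        "role": "system",
        "content": (
            "[interrupted] The caller cut in before hearing: "
            f"{unheard!r}. Do not apologize for or repeat unheard content; "
            "respond to the caller's new input."
        ),
    })
    messages.append({"role": "user", "content": caller_text})
    return messages
```

Keeping the unheard remainder in the tag lets the model decide whether any of it still matters after the caller changes direction.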

2) Customer experience copilots that deflect tickets

- WebRTC or mobile audio. Run EVI 4-mini in the browser or app and stream audio to your servers for tool use. Keep your language model close to business systems so tool calls are supervised.

- A tight tool loop. Start with three tools: knowledge base search, order lookup, and ticket creation. Require tool plans in the prompt, cap tool uses per turn, and log every call.

- Latency shaping. If knowledge search sometimes takes a second or two, let the agent say "I am looking that up" and keep talking for a few seconds while you wait. The voice makes the wait feel shorter without hiding real delays.

- Guard the keys. Block refunds, credits, or escalations behind a human-in-the-loop rule. Let the agent draft the action and read it back for approval.

- Measure what matters. Deflection rate only counts if satisfaction holds. Pair deflection with post-interaction satisfaction and redo rate. If customers call back, the metric was vanity.
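The tight tool loop and the human-in-the-loop rule can share one small gate. In this Python sketch, the tool names, the per-turn cap of three, and the `approved` flag are assumptions for illustration: every call is logged, executed calls are capped per turn, and money-moving tools return a draft for read-back and approval instead of running.

```python
from dataclasses import dataclass, field

MAX_TOOL_CALLS_PER_TURN = 3  # assumed cap; tune for your flows
NEEDS_APPROVAL = {"issue_refund", "issue_credit", "escalate"}  # hypothetical names


@dataclass
class ToolGate:
    tools: dict  # tool name -> callable
    log: list = field(default_factory=list)
    calls_this_turn: int = 0

    def new_turn(self):
        """Reset the per-turn counter when the user starts a new turn."""
        self.calls_this_turn = 0

    def call(self, name, args, approved=False):
        if self.calls_this_turn >= MAX_TOOL_CALLS_PER_TURN:
            result = {"status": "blocked", "reason": "tool cap reached for this turn"}
        elif name not in self.tools:
            result = {"status": "blocked", "reason": f"unknown tool {name}"}
        elif name in NEEDS_APPROVAL and not approved:
            # Draft only: the agent reads this back and a human approves it.
            result = {"status": "pending_approval", "draft": {"tool": name, "args": args}}
        else:
            self.calls_this_turn += 1
            result = {"status": "ok", "data": self.tools[name](**args)}
        # Log every attempt, including blocked and pending ones.
        self.log.append({"tool": name, "args": args, "result": result})
        return result
```

Only executed calls count toward the cap, so a pending approval does not starve the rest of the turn.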

For broader CX context, see how agentic AI makes CX autonomous and why that shift sets the first real beachhead for autonomy.

3) Healthcare pilots that respect privacy and context

- Start with structured use cases. Appointment scheduling, pre-visit intake, and post-discharge follow-ups have clear data capture and high patient value.

- Consent in plain language. The agent should introduce itself, ask for permission to proceed, and remind the patient that a human is available on request. Keep scripts readable and available in the patient’s preferred language.

- Data handling by design. Avoid sending protected health information to third parties beyond your contractual boundary. When possible, keep your language model inside a controlled environment. If you must call a vendor, minimize payloads, encrypt in transit, and put a business associate agreement in place.

- Clinical tone and empathy. Use calm, clear voices and include empathy phrases that fit sensitive contexts. Make it explicit that the agent cannot provide medical advice and must escalate for symptoms or safety concerns.

- Evidence through measurement. Track abandonment rate, time to complete intake, and percent of escalations. Have clinicians review a random sample of transcripts to catch subtle misunderstandings.
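Minimizing payloads can start as an allow-list per vendor call. This Python sketch is illustrative only: the field names are hypothetical, and the digit-run regex catches phone numbers and numeric dates in free text but is not a complete de-identification method.

```python
import re

# Hypothetical allow-list: only the fields a scheduling vendor needs.
SCHEDULING_FIELDS = {"preferred_date", "preferred_time", "visit_type", "language"}

# Runs of 7+ digits, hyphens, or spaces that start and end with a digit
# (phone numbers, record numbers, numeric dates).
DIGIT_RUN = re.compile(r"\b\d[\d\-\s]{5,}\d\b")


def minimize_payload(record, allowed=SCHEDULING_FIELDS):
    """Drop every field not explicitly allowed before a third-party call."""
    return {key: value for key, value in record.items() if key in allowed}


def redact_digits(text):
    """Mask long digit runs in free text before it leaves your boundary."""
    return DIGIT_RUN.sub("[REDACTED]", text)
```

An allow-list fails closed: a new field added to the patient record stays inside your boundary until someone deliberately adds it.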

Observability and governance that last beyond the demo

Production voice runs on evidence, not vibes. Instrument everything and treat prompts and configuration like code.

- Prompt and tool reviews. Use change management, diff reviews, and rollbacks for prompts. Write least privilege scopes for tools and log input and output.

- Data retention policy. Store audio only as long as needed for quality improvement and compliance. Prefer transcripts for long-term analytics and remove sensitive attributes where possible.

- Incident playbook. If the agent says something it should not, you should know within minutes. Stream logs to alerts for banned phrases, repeated apologies, or unusual escalation spikes.

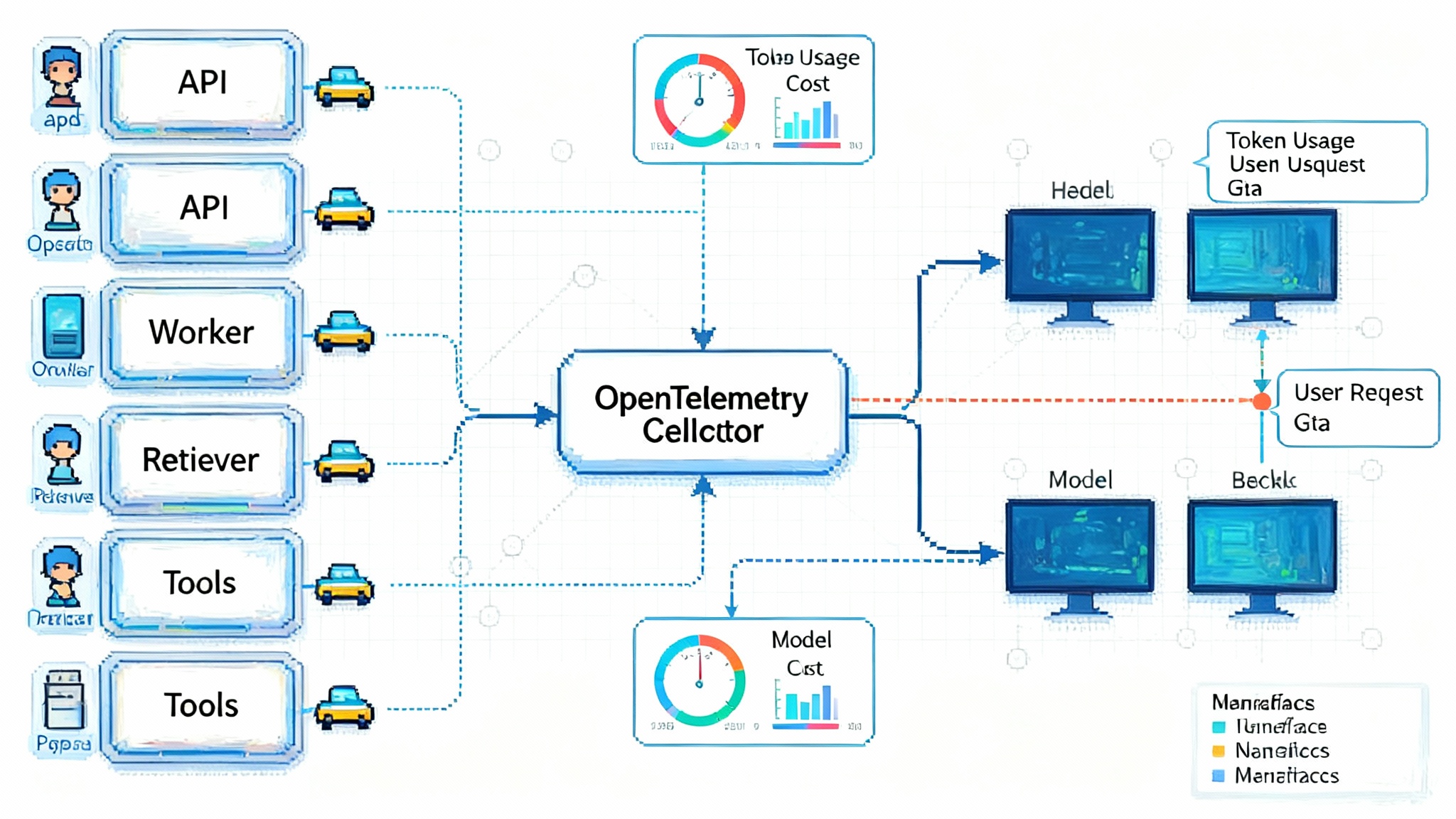

- Visibility without friction. Adopt lightweight tracing and metrics first so you can find latency hotspots in a day, not a month. For a deeper dive on visibility patterns, read our zero-code LLM observability guide.
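A first version of the incident alerts can be a per-conversation monitor over agent utterances. In this Python sketch, the banned patterns, the five-turn window, and the limit of two apologies are placeholder values to replace with your own policy; the returned strings would feed whatever alerting system you already run.

```python
import re
from collections import deque

# Placeholder policy patterns; replace with your own banned-phrase list.
BANNED = [
    re.compile(r"\bguarantee(d)?\b", re.IGNORECASE),
    re.compile(r"\bdiagnos(e|is)\b", re.IGNORECASE),
]
APOLOGY = re.compile(r"\b(sorry|apologi[sz]e)\b", re.IGNORECASE)


class TranscriptMonitor:
    """Flags banned phrases and runs of apologies in one conversation."""

    def __init__(self, apology_limit=2, window=5):
        self.apology_limit = apology_limit
        # True/False per recent agent turn: did it contain an apology?
        self.recent_apologies = deque(maxlen=window)

    def check(self, agent_utterance):
        alerts = []
        for pattern in BANNED:
            if pattern.search(agent_utterance):
                alerts.append(f"banned phrase: {pattern.pattern}")
        self.recent_apologies.append(bool(APOLOGY.search(agent_utterance)))
        if sum(self.recent_apologies) > self.apology_limit:
            alerts.append("repeated apologies in recent turns")
        return alerts
```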

Practical patterns to hit sub second more often

- Cache predictable phrases. Pre-render introductions, confirmations, and policy statements so the first 300 to 500 milliseconds are ready to stream.

- Shape answers. Encourage short opening sentences. The agent can follow with a longer explanation if the user stays silent.

- Prefer streaming everywhere. Stream transcription to your service, stream tokens from the language model, and stream speech back to the user. Avoid batch waits.

- Tune endpointing to the medium. On mobile connections over WebSockets, allow a slightly longer silence threshold so the agent does not cut in when the user shifts the phone. On desktop, you can be more aggressive.

- Respect accents and code switching. Keep language auto-detection on, but allow user override in the UI. Log mispronunciations for later fixes with phoneme controls or custom dictionaries.
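EVI handles turn detection for you, but a simple silence-threshold endpointer shows what tuning to the medium means. This Python sketch assumes a voice-activity detector that emits one boolean per 20 ms frame; the 160 ms desktop and 200 ms mobile thresholds are illustrative values chosen to sit inside the example endpointing budget, not tuned recommendations.

```python
# Illustrative silence thresholds per medium (assumed values).
SILENCE_THRESHOLD_MS = {"desktop": 160, "mobile": 200}
FRAME_MS = 20  # assumed VAD frame size


def detect_end_of_turn(voiced_frames, medium="desktop", frame_ms=FRAME_MS):
    """Return the frame index where the user's turn ends, or None.

    voiced_frames: one boolean per frame from a voice-activity detector.
    A turn ends after speech has been heard and then enough consecutive
    silent frames accumulate to cover the medium's threshold.
    """
    needed = SILENCE_THRESHOLD_MS[medium] // frame_ms
    heard_speech = False
    silent = 0
    for i, voiced in enumerate(voiced_frames):
        if voiced:
            heard_speech = True
            silent = 0  # any speech resets the silence run
        elif heard_speech:
            silent += 1
            if silent >= needed:
                return i
    return None
```

Production endpointers also weigh prosody and semantics, but even this version makes the trade-off visible: a longer threshold avoids false starts at the cost of every turn's latency.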

How to explain this shift to stakeholders

Different leaders care about different proofs. Here is how to frame it.

- Product leaders. You can forecast handle time savings and design reliable self-service flows. Sub-second response times and barge-in support are specific, testable commitments.

- Engineering leaders. You can own the control plane. With EVI 4-mini handling speaking and listening, your services focus on models, tools, and data. Latency budgets become measurable, not mystical.

- Compliance leaders. You can put voice under the same policies you already use for chat. Consent language, retention periods, and escalation rules are testable and auditable.

If your stakeholders want examples of agent systems moving into production outside voice, point them to our report on publisher-owned AI search with Gist to see how teams ship control and accountability along with speed.

A test plan you can run this week

- Latency envelope. In a quiet room on a normal connection, measure start to first audio over 100 turns. Your target is under one second for most turns. Note outliers, then repeat on a mobile connection and a busy Wi-Fi network.

- Interrupt tests. Ask long questions, then interrupt twice in quick succession. A good agent stops within a heartbeat and resumes with the right context. If it apologizes or repeats itself, your state handoff needs work.

- Accent and language flips. Switch languages mid-conversation. A good agent keeps the same voice identity while changing language output. Log mispronunciations and fix them with phoneme controls.

- Stress with tool use. Have the agent look up an order, reschedule it, and send a confirmation while you interject. Verify that tool results appear in the spoken answer without leaking internal identifiers.

- Governance checks. Verify disclosure language, consent capture, data retention, and escalation rules against policy. Run a simulated incident to test alerting.
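For the latency envelope test, a small report over the measured turns makes runs on different networks easy to compare. This Python sketch uses only the standard library's `statistics` module; the one-second target mirrors the goal above, and the samples are whatever start-to-first-audio times you record.

```python
import statistics


def latency_report(samples_ms, target_ms=1000):
    """Summarize start-to-first-audio samples (milliseconds) for one run."""
    if len(samples_ms) < 2:
        raise ValueError("need at least two samples")
    ordered = sorted(samples_ms)
    # 99 cut points; index k is the (k + 1)th percentile.
    cuts = statistics.quantiles(ordered, n=100, method="inclusive")
    return {
        "turns": len(ordered),
        "p50": statistics.median(ordered),
        "p90": cuts[89],
        "p95": cuts[94],
        "share_under_target": sum(s < target_ms for s in ordered) / len(ordered),
        "outliers": [s for s in ordered if s >= target_ms],
    }
```

Run it once per network condition (quiet room, mobile, busy Wi-Fi) and compare the p90 and the outlier list rather than the average, since a few slow turns are what users remember.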

Where this fits in the broader agent landscape

We are watching a clear pattern. The first production wins cluster in narrow, high-value surfaces where speed, control, and policy can be measured. That is true for voice and for non-voice agents. If you are tracking autonomy in customer service, read how agentic AI makes CX autonomous. If you care about search surfaces that keep control in-house, study how publisher-owned AI search with Gist reframes ownership.

Voice is now ready to join that list because the experience crossed the line from acceptable to natural. Octave 2 and EVI 4-mini did not just improve fidelity. They gave builders timing and control, which are the real UX levers.

The bottom line

Octave 2 and EVI 4-mini move voice agents out of the lab and into day-to-day service. The speed gains make speech feel live. Interruptibility makes it feel considerate. The pairing model gives teams control without locking them into a single language model. If you design voices instead of cloning them, measure the seconds that matter, and put clear governance in place, your first production voice agent will not just sound good. It will give users time back and earn trust with every turn.