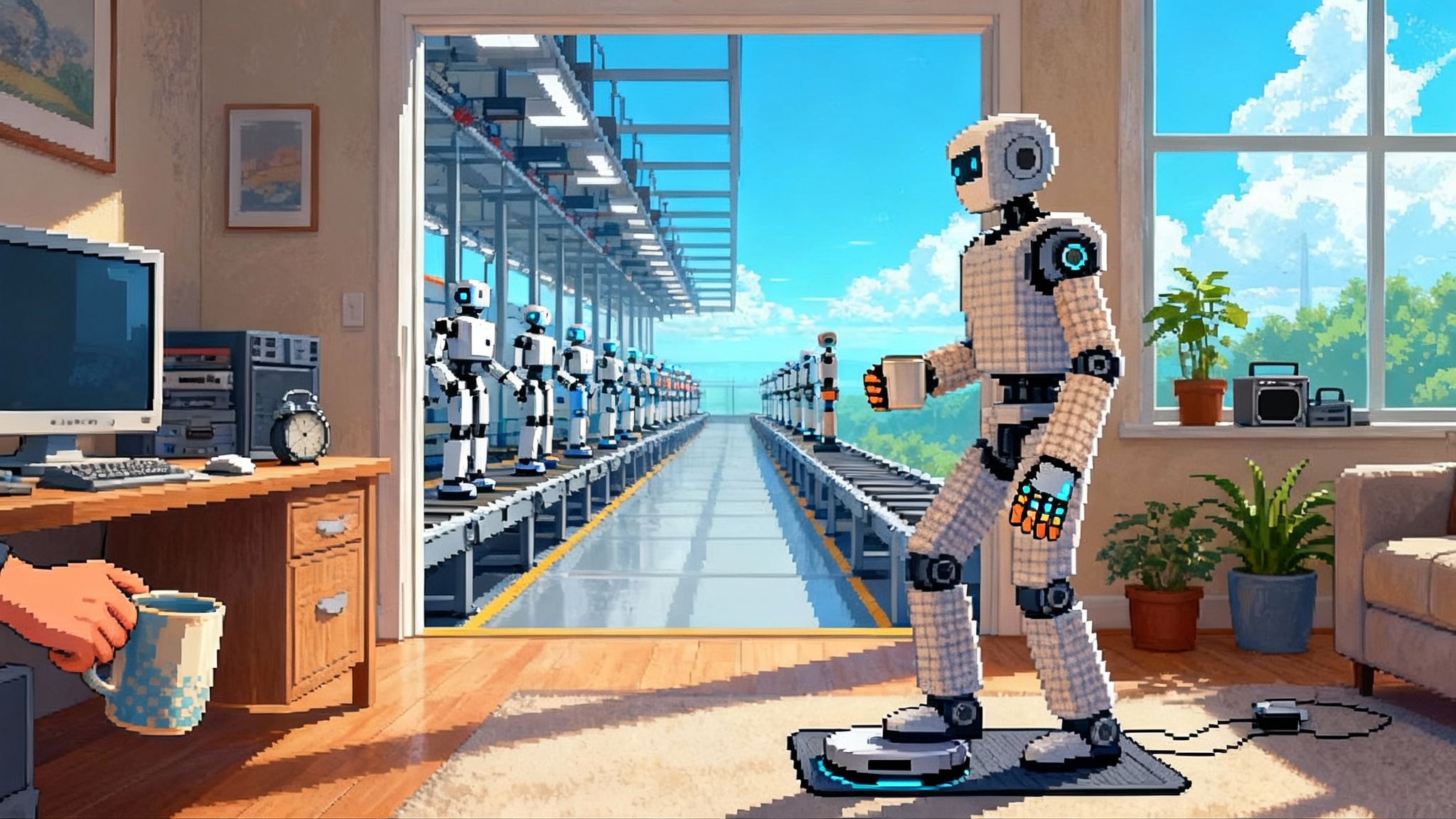

Figure 03 Moves Agents Off Screen And Into The World

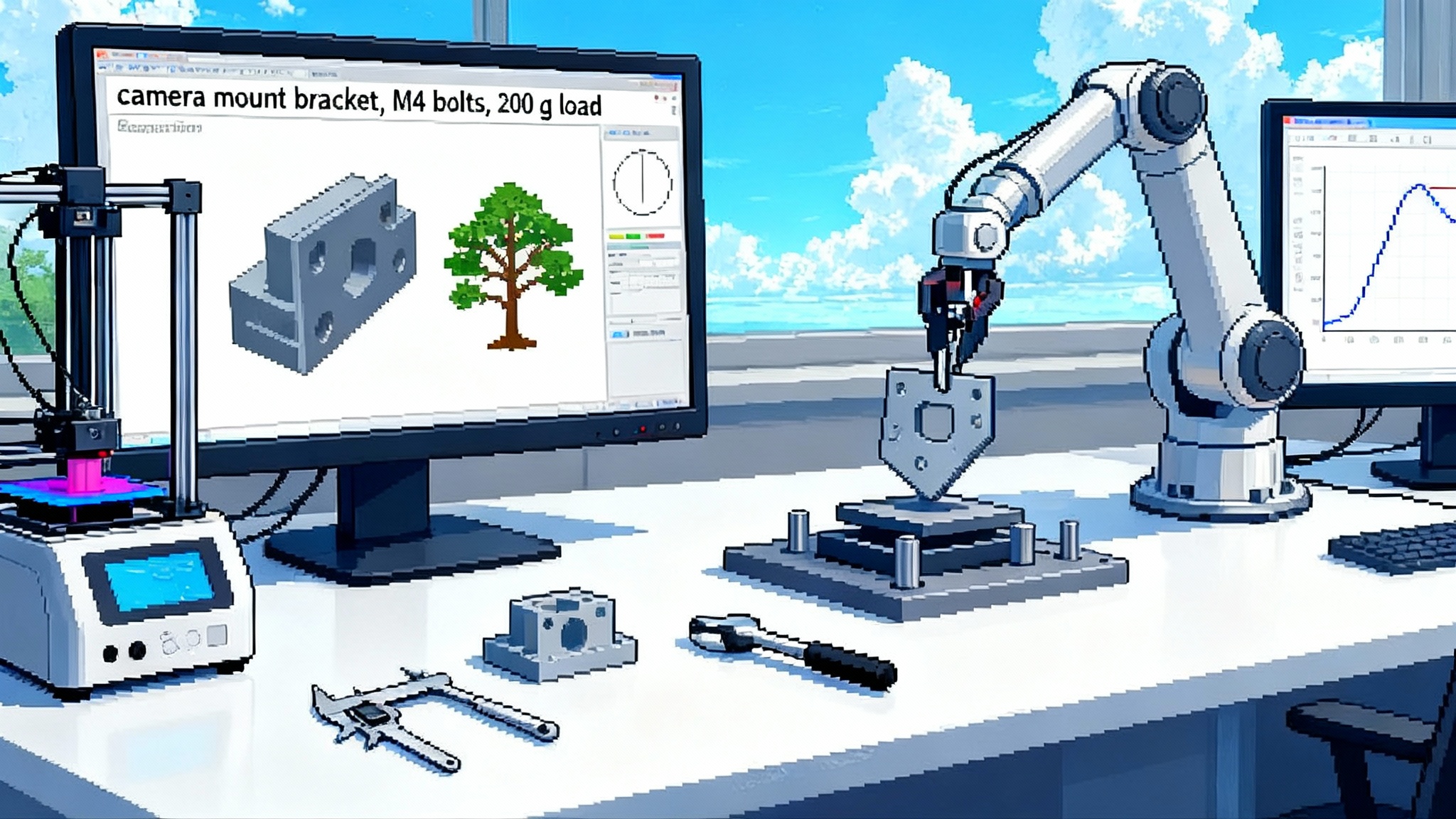

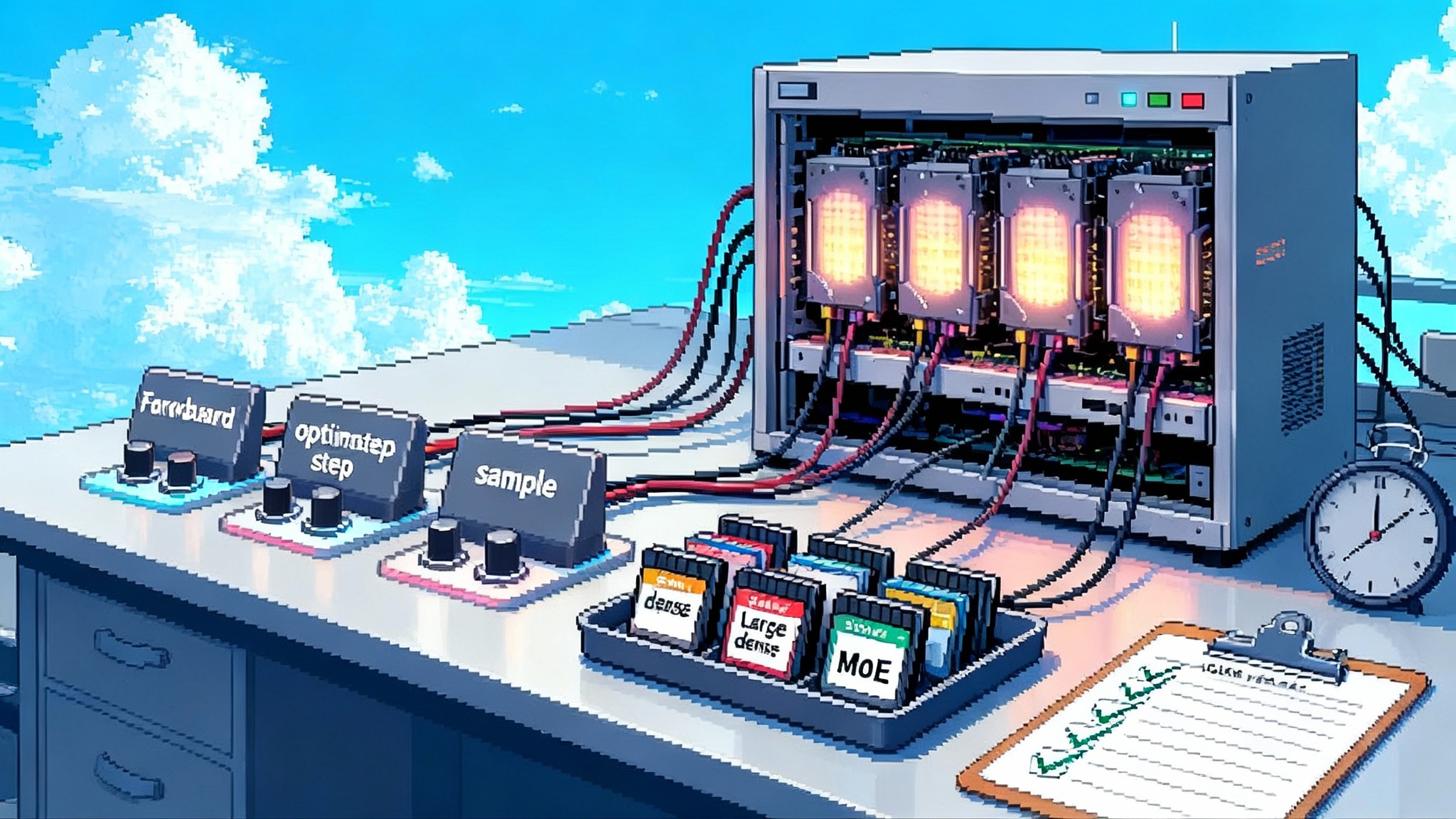

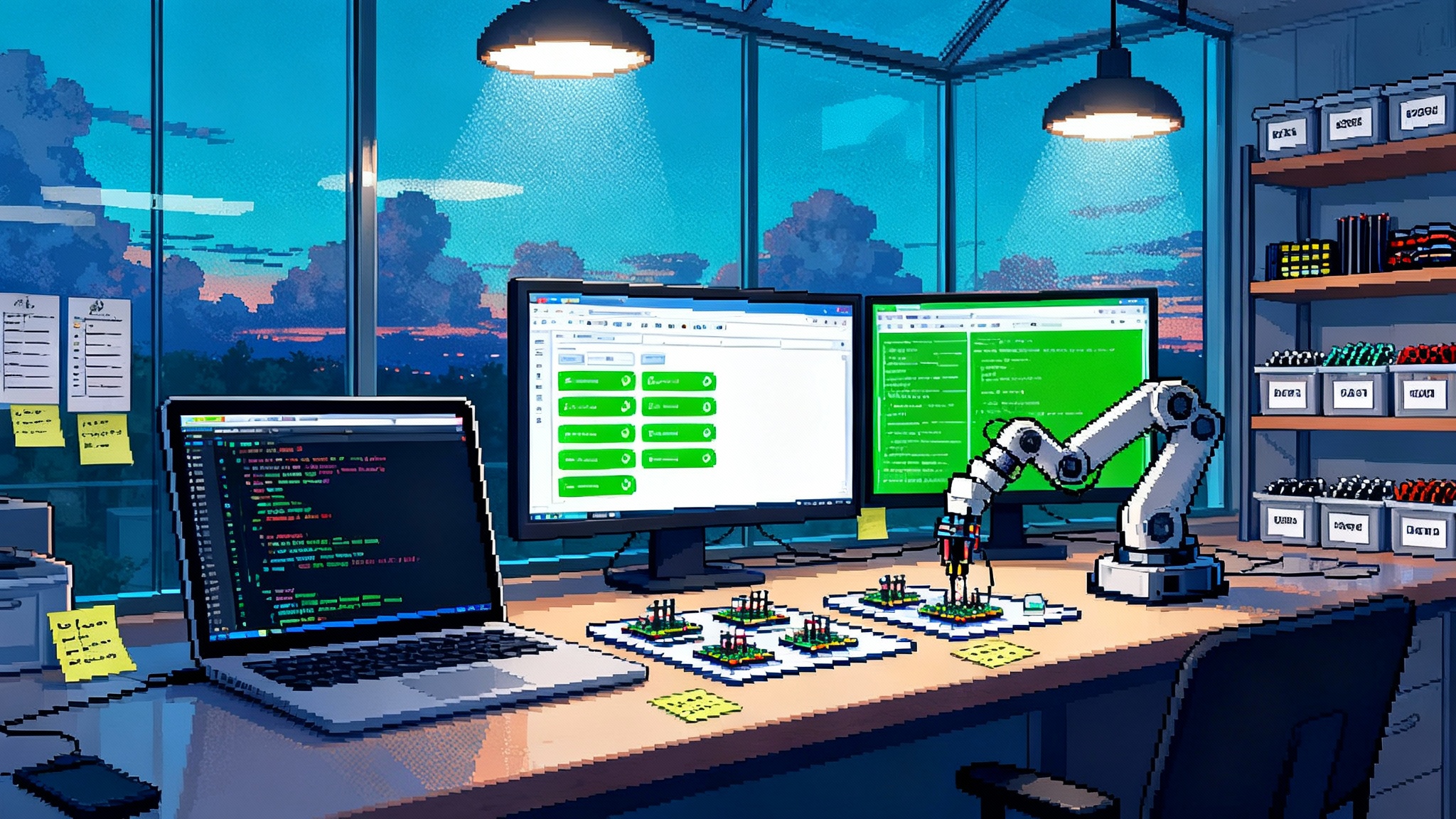

Figure 03 moves embodied agents from demo to deployment with a Helix-native control stack, tactile hands, 2 kW wireless charging, and a BotQ supply chain built for volume production. Here is why that matters now.