Replit Agent 3 crosses the production threshold for apps

Replit's Agent 3 moves autonomy from demo to delivery with browser testing, long running sessions, and the ability to spawn specialized agents. Here is how it reshapes speed, safety, and the economics of shipping software.

Breaking: autonomy that actually ships software

Something important changed in September 2025. On September 10, Replit introduced Agent 3, and with it the conversation about autonomous coding moved from demos to delivery. The new agent designs, builds, tests, and self-corrects full stack apps with light supervision. It does not just click around a screen. It operates inside a development environment that can compile, run, and validate the code it writes. If you have followed agents that mimic a user and press buttons on your behalf, this is different in kind, not just degree. It is closer to dropping a junior engineer into your repo, giving them a spec, and watching them run a loop of write, test, fix, repeat while you supervise. Replit outlines the approach in its announcement, “Introducing Agent 3, our most autonomous agent yet.”

Replit’s product moment arrived alongside a capital one. The company disclosed a new round that values it at $3 billion, a signal that investors see autonomy crossing into production viability. Reuters summarized the raise as $250 million at a $3 billion valuation.

From screen clickers and no code catalogs to a builder

For the past year, two approaches dominated non-expert software creation:

- Screen clicker agents that remote control a browser or desktop to trigger a series of actions. They are great for automation and lightweight chores, but fragile for building complex applications. One dialog change and the flow snaps. For a deeper look at this pattern, see our piece on Caesr clicks across every screen.

- No code catalogs that offer prebuilt templates and drag and drop components. They are fast for familiar shapes like a to do list or a customer intake form, but composing real product logic beyond the catalog often requires engineers.

Agent 3 aims to be a builder, not a clicker or a catalog. It operates on code, not on pixels. It proposes an architecture, scaffolds a full stack project, sets up routes and databases, writes tests, runs those tests in a live browser, observes failures, and loops until green. In practical terms, it looks at the thing it just built the way a human does, only it does not get bored of repetition. Where screen clickers rely on the layout of a page, Agent 3 relies on the structure and behavior of your application.

What crossed the production threshold

Think of the production threshold as a simple question: can I trust this to move my codebase forward without babysitting every step. Agent 3 adds three capabilities that tilt the answer toward yes.

- It self tests in a real browser. Instead of assuming that a generated feature works, the agent spins up your app, drives the flows, asserts success on forms and buttons, and records what failed. Then it patches the code and tries again. This narrows the gap between a working demo and a shippable change.

- It runs for a long time without constant prompting. In Max Autonomy, Replit reports sessions that last hours, not minutes. That lets teams assign a well specified feature and step away. The agent keeps track of its own task list and reports back with artifacts and diffs rather than waiting after every micro instruction.

- It can generate other agents and automations. The tool you use to build an app can also build a Slack helper to triage support tickets or a scheduled job that backfills analytics every night. Autonomy compounds. Once the builder can build builders, your surface area grows even if your team does not.

These shifts matter because they close the loop between generation and verification. In older workflows, the human had to validate every step. In this one, the agent validates, and the human supervises the validation.
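The generate-and-verify loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Replit's implementation: `verify_loop`, `run_checks`, `propose_patch`, and `max_attempts` are hypothetical names, and the "app" is a plain dictionary standing in for a codebase.

```python
from typing import Callable, Dict, List, Tuple

App = Dict[str, object]  # stand-in for a codebase (assumption for this sketch)


def verify_loop(
    app: App,
    run_checks: Callable[[App], List[str]],
    propose_patch: Callable[[App, List[str]], App],
    max_attempts: int = 5,
) -> Tuple[App, List[List[str]]]:
    """Run checks, patch on failure, and repeat until green or out of attempts.

    Returns the final app state plus the failures seen on each attempt,
    so a supervisor can review what the loop observed along the way.
    """
    history: List[List[str]] = []
    for _ in range(max_attempts):
        failures = run_checks(app)
        history.append(failures)
        if not failures:  # green: stop and hand the result back for review
            return app, history
        app = propose_patch(app, failures)
    raise RuntimeError(f"still failing after {max_attempts} attempts: {history[-1]}")


# Toy check and patch functions (hypothetical): one flow fails until patched.
def checks(app: App) -> List[str]:
    return [] if app.get("form_validates_email") else ["signup form rejects valid email"]


def patch(app: App, failures: List[str]) -> App:
    return {**app, "form_validates_email": True}


final_app, seen = verify_loop({"form_validates_email": False}, checks, patch)
```

The attempt cap matters as much as the loop itself: without it, a flaky check would keep the agent retrying indefinitely, and the recorded history is what lets the human supervise the validation instead of redoing it.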

The economics flip: from prompts to supervision

The economic story is straightforward. The hidden cost of early agentic coding was prompting and re-prompting: writing clever prompts, breaking tasks into tiny steps, and staying glued to the screen.

With Agent 3, the center of gravity moves from prompt crafting to supervision. You write a crisp goal with acceptance criteria, not a play by play. Then you supervise outcomes at the right checkpoints. Imagine your organization measuring two variables:

- Supervised hours per shipped feature: how much human time it took to shepherd the agent to done.

- Agent hours per shipped feature: how much autonomous runtime was needed to complete it.

Your goal is to drive the first number down while budgeting the second. In practice, this means replacing prompt engineers with supervisors who do three things well: write specs with unambiguous acceptance tests, review diffs with a clear standard of quality, and intervene early when the agent drifts. The person is not the robot’s hands. The person is the editor who owns the definition of done.
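As a rough sketch of how those two variables might be computed, the snippet below averages hours over shipped features. The `FeatureRecord` fields and the sample numbers are hypothetical, and the choice to charge abandoned work against shipped features is an assumption of this sketch, not something the metrics above prescribe.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class FeatureRecord:
    name: str
    supervised_hours: float  # human time on specs, review, and intervention
    agent_hours: float       # autonomous runtime consumed
    shipped: bool


def hours_per_shipped_feature(records: List[FeatureRecord]) -> Dict[str, float]:
    """Average supervised and agent hours per shipped feature.

    Hours spent on features that never shipped stay in the numerator,
    so abandoned work shows up as a cost (an assumption of this sketch).
    """
    shipped = sum(1 for r in records if r.shipped)
    if shipped == 0:
        raise ValueError("no shipped features in this period")
    return {
        "supervised_hours_per_feature": sum(r.supervised_hours for r in records) / shipped,
        "agent_hours_per_feature": sum(r.agent_hours for r in records) / shipped,
    }


# Hypothetical week of work.
records = [
    FeatureRecord("usage billing", supervised_hours=3.0, agent_hours=6.0, shipped=True),
    FeatureRecord("admin toggle", supervised_hours=1.0, agent_hours=2.0, shipped=True),
    FeatureRecord("export csv", supervised_hours=2.0, agent_hours=4.0, shipped=False),
]
metrics = hours_per_shipped_feature(records)
```

With this sample data, the six supervised hours and twelve agent hours are spread over the two features that shipped.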

A helpful metaphor is a newsroom. You do not ask a reporter for a perfect first draft. You expect a solid draft that an editor can shape quickly into a publishable piece. Agent 3 is the reporter. Your supervisor is the editor. The test suite is your stylebook.

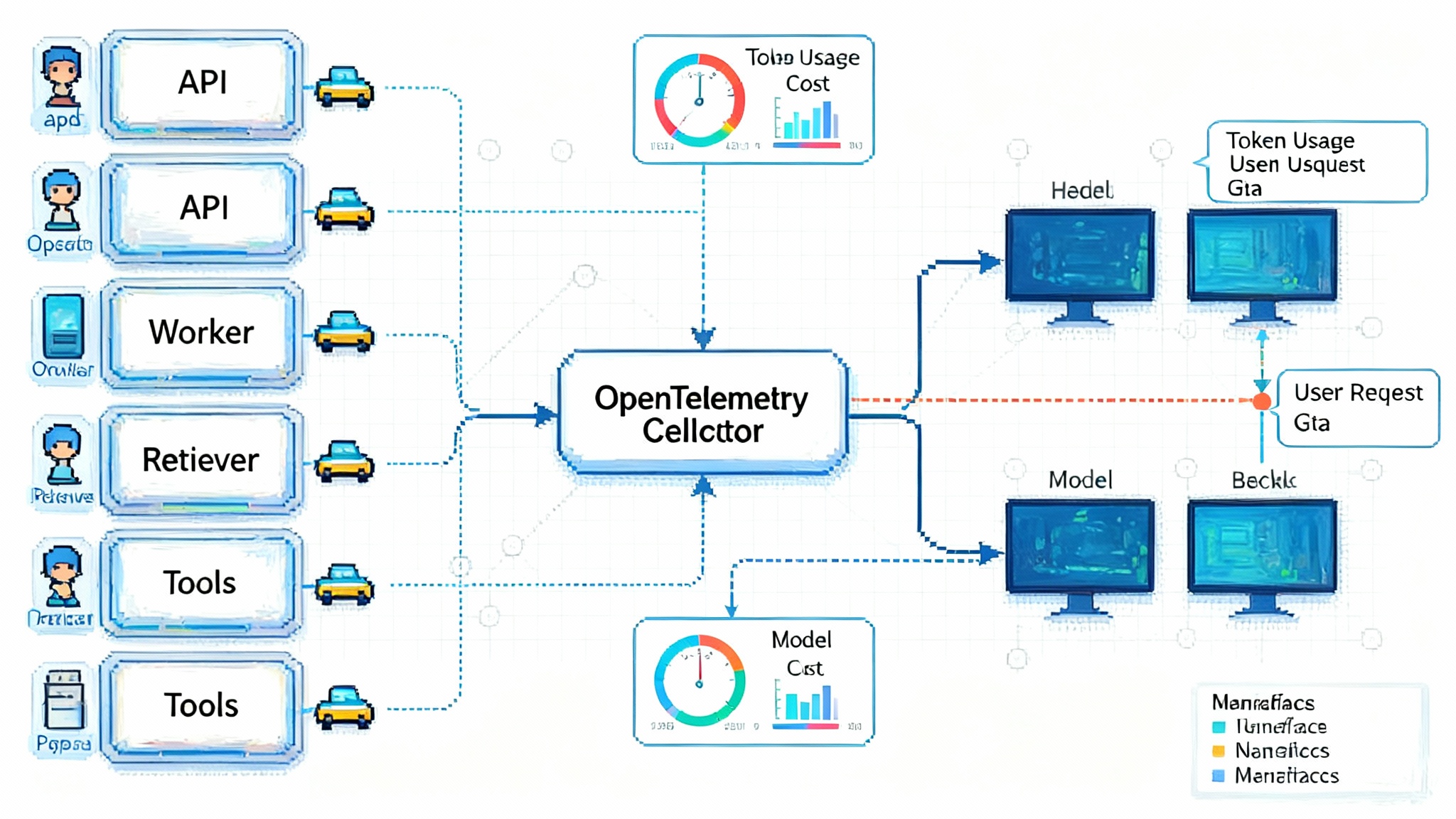

For organizations operationalizing this shift, observability becomes a first class concern. Our primer on zero code LLM observability outlines how to instrument agents without adding brittle glue.

A concrete example: a checkout feature in one afternoon

Picture a small SaaS company with a Python backend and a React frontend. The team wants to add a usage based billing plan. A supervisor gives the agent a ticket that includes:

- New pricing tiers and thresholds.

- Acceptance tests for prorated invoices and free trial conversion.

- A constraint that no secrets may be written to logs.

- A note to add a feature flag and an admin toggle.

Agent 3 scaffolds the backend endpoints, wires a billing provider, writes unit tests, and generates a browser-driven flow that validates sign up, upgrade, and downgrade. The agent runs the app in a browser, notices a mismatch when a React component fails to handle a null plan, patches it, and retests. It toggles the feature flag, generates a migration for a new column, and leaves a dashboard note. The supervisor reviews a clean diff plus a test report and merges.

The difference versus past tools is not that an agent wrote code. It is that the agent wrote code, validated the user journey, self-corrected, and reported changes in a way a reviewer can trust. That chain is the production threshold.
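A constraint like "no secrets may be written to logs" only helps if it can be checked mechanically. The sketch below shows one way such a check might look. The regular expressions, the function name `find_secret_leaks`, and the sample log lines are all illustrative assumptions; a real check would match the formats of the secrets your own stack issues.

```python
import re
from typing import List, Tuple

# Illustrative patterns only (assumptions); they are not exhaustive.
SECRET_PATTERNS = [
    re.compile(r"(?i)\b(api[_-]?key|secret|password|token)\s*[=:]\s*\S+"),
    re.compile(r"(?i)\bbearer\s+[a-z0-9._\-]{16,}"),
]


def find_secret_leaks(log_lines: List[str]) -> List[Tuple[int, str]]:
    """Return (line_number, line) for every log line that matches a pattern."""
    leaks = []
    for number, line in enumerate(log_lines, start=1):
        if any(pattern.search(line) for pattern in SECRET_PATTERNS):
            leaks.append((number, line))
    return leaks


# Hypothetical log captured during the agent's test run.
sample_log = [
    "INFO upgrade requested plan=usage_based user=42",
    "DEBUG calling billing provider api_key=abc123",
    "INFO invoice prorated amount=12.50",
]
leaks = find_secret_leaks(sample_log)
```

Wired into the acceptance suite, a non-empty `leaks` list would fail the run, which turns the ticket's constraint into something the agent has to satisfy rather than something the reviewer has to remember.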

Why this is not just faster but safer when used correctly

Speed alone is a shallow metric. The meaningful improvement is predictability. A supervised agent with a baked in test harness tends to produce smaller, reversible changes, because it learns to ship to the definition of done you enforce. That reduces the risk of silent breakage. You still need guardrails, but the process becomes legible.

Here is a practical checklist to operationalize that legibility:

- Write acceptance criteria first. Express them as executable tests or as scriptable checks the agent must pass.

- Define a change budget. Cap how many files or total lines the agent can touch without approval.

- Require canary deploys and automatic rollback on failed health checks.

- Store all agent actions in an append only log with timestamps and command traces.

- Treat secrets as sealed. The agent can request access tokens through a broker, but it never handles raw keys.

- Allocate a weekly review of agent drift. If supervised hours per feature rise, adjust prompts or tests.
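The change budget from the checklist above is straightforward to enforce in code. The following is a minimal sketch, assuming the diff has already been summarized as lines changed per file; `ChangeBudget`, `check_change_budget`, the file paths, and the limits are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class ChangeBudget:
    max_files: int
    max_lines: int


def check_change_budget(lines_changed_by_file: Dict[str, int], budget: ChangeBudget) -> List[str]:
    """Return budget violations; an empty list means the change is within budget."""
    violations = []
    files = len(lines_changed_by_file)
    lines = sum(lines_changed_by_file.values())
    if files > budget.max_files:
        violations.append(f"{files} files touched, budget is {budget.max_files}")
    if lines > budget.max_lines:
        violations.append(f"{lines} lines changed, budget is {budget.max_lines}")
    return violations


# Hypothetical diff summary for one agent session.
budget = ChangeBudget(max_files=3, max_lines=200)
diff = {
    "billing/api.py": 120,
    "billing/models.py": 60,
    "web/Plan.tsx": 45,
    "migrations/0007.py": 12,
}
violations = check_change_budget(diff, budget)
needs_approval = bool(violations)
```

Returning the reasons rather than a bare boolean gives the supervisor something concrete to approve or reject when the agent exceeds its cap.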

The agents that build agents pattern arrives

Agent 3 introduces a capability that will ripple across software as a service. When a builder can create smaller specialized agents and scheduled automations, you stop treating automations as one off scripts and start treating them as first class features. For example:

- The main agent builds a Slack agent that triages customer feedback into Jira with tags and assigns owners based on on call schedules.

- It builds a weekly data hygiene agent that finds orphaned accounts, runs a cleanup job, and posts a report to finance.

- It builds a documentation agent that watches commit messages for public facing changes and drafts release notes.

This is not science fiction. It is a direct consequence of giving an autonomous builder a runtime and a testing harness, plus the ability to provision small connected services. Once you can do that, internal platform teams will start offering a gallery of pre-approved mini agents with guardrails. Every team will have a folder of automations that the main agent can extend or specialize.

How this differs from a no code template universe

No code catalogs give you a floor plan. Agents that build give you an architect. The architect can reuse components, but is not limited to them. If your app needs an unusual data model or a custom workflow that spans tools, a template often breaks down. A builder agent can sketch a new plan, lay the plumbing, write the tests, and wire the integrations, because it is operating at the level of code and contracts, not just blocks on a canvas.

This does not make no code irrelevant. Templates remain the quickest way to get a standard backend and a basic frontend running. The change is that an agent can pick up where the template stops and keep going without tossing the work over a wall to human engineers. For emerging interoperability patterns, see the USB C moment for agents.

Shipping cadence and the weekly rhythm

When a builder can run for hours at a time, ship to a testable definition of done, and ask for help only at decision points, your weekly rhythm compresses:

- Monday: Supervisors write specs with acceptance checks and guardrails.

- Tuesday and Wednesday: Agent runs long sessions, generating diffs and passing tests.

- Thursday: Review and merge, deploy to canary, monitor.

- Friday: Release notes and a post facto review of supervision metrics.

Teams report that the blocking bottleneck becomes review, not build. That is where you want the bottleneck to be. It is where judgment lives.

Where the costs actually land

Replit’s pitch is that an integrated agent is more cost effective than cobbled together computer use models, because it runs fewer hallucinated steps and spends more time inside a compiler or a test suite than a browser viewport. Whether that holds for your stack depends on two things you control:

- The tightness of your spec and tests. Loose outcomes lead to wandering sessions and higher spend.

- The stability of your environment. Flaky tests teach the agent the wrong lessons and inflate retries.

A useful unit for planning is the agent hour. Budget how many agent hours each feature should consume, benchmark your team’s supervised hours against it, then adjust your spec depth or your environment to hit the target. Over time you will learn which classes of work the agent does cheaply and which it does expensively. Feed that learning into triage.
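One way to learn which classes of work are cheap and which are expensive is to compare budgeted and actual agent hours per class. The sketch below is illustrative: the work classes, the sample runs, and the 1.25 threshold for "expensive" are assumptions, not figures from Replit.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Each run: (work_class, budgeted_agent_hours, actual_agent_hours)
Run = Tuple[str, float, float]


def budget_ratio_by_class(runs: List[Run]) -> Dict[str, float]:
    """Return actual / budgeted agent hours for each class of work."""
    budgeted: Dict[str, float] = defaultdict(float)
    actual: Dict[str, float] = defaultdict(float)
    for work_class, budget_hours, actual_hours in runs:
        budgeted[work_class] += budget_hours
        actual[work_class] += actual_hours
    # Skip classes with a zero budget to avoid dividing by zero.
    return {c: actual[c] / budgeted[c] for c in budgeted if budgeted[c] > 0}


# Hypothetical history of agent sessions.
runs = [
    ("crud endpoint", 2.0, 1.5),
    ("crud endpoint", 2.0, 2.5),
    ("billing integration", 4.0, 9.0),
    ("billing integration", 4.0, 7.0),
]
ratios = budget_ratio_by_class(runs)

# Classes that overran their budget by more than 25% (threshold is an assumption).
expensive = sorted(c for c, ratio in ratios.items() if ratio > 1.25)
```

A ratio well above 1.0 for a class suggests either a deeper spec or a more stable environment is needed before routing more of that work to the agent.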

What stays on the human desk

Autonomy narrows the field of work, but it does not eliminate it. Humans still own:

- The product narrative and the prioritization behind it.

- Security, data governance, and compliance constraints.

- Cross team coordination and handoffs that span systems the agent cannot see.

- The design quality bar and taste decisions that tests cannot capture.

The agent will improve at proposing ideas, but it will not own your roadmap. Treat it like a strong junior engineer who can build quickly within a well framed box.

Competitive pressure is about to intensify

A non-hyperscaler putting a credible autonomous builder in developers’ hands changes market dynamics. It tells every tool vendor to expose a programmable surface an agent can use, not just a user interface a human can click. Expect connectors and stable software development kits to become a purchasing criterion. If your product cannot be assembled by an agent, it risks being skipped by teams that do not want to break the autonomy loop.

What to do next if you run a software team

- Establish a supervision guild. Train a rotating group of engineers and product managers in spec writing, test design, and review norms for agent written code.

- Create an agent runway environment. Preload sample data, seed fixtures, and default credentials through a broker. Make it cheap to spin up and safe to tear down.

- Start with a portfolio of low risk, high benefit tasks. Documentation generation, analytics backfills, internal dashboards, and small feature flags are ideal first candidates.

- Instrument everything. Track supervised hours per feature, agent hours per feature, rework rate, and time to green. Publish a weekly scorecard.

- Treat failures as data. Root cause drift once a week and update your spec templates and tests accordingly.

Do those five things and you will feel the economics flip within a quarter. The hardest part is culture. Teams must get comfortable treating an agent as a colleague who needs coaching and guardrails, not as a vending machine that dispenses perfect features.
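A weekly scorecard can start very small. The sketch below computes two of the metrics named above under explicit assumptions: rework rate is taken to mean the share of merged agent changes that needed a follow-up fix, and time to green is the hours from task start to the first fully passing run. Both definitions, along with `MergedChange` and the sample data, are hypothetical.

```python
from dataclasses import dataclass
from statistics import median
from typing import Dict, List


@dataclass
class MergedChange:
    feature: str
    hours_to_green: float  # task start to first fully passing run (assumed definition)
    reworked: bool         # needed a follow-up fix after merge (assumed definition)


def weekly_scorecard(changes: List[MergedChange]) -> Dict[str, float]:
    """Summarize one week of merged agent changes."""
    if not changes:
        raise ValueError("no merged changes this week")
    return {
        "changes": len(changes),
        "rework_rate": sum(1 for c in changes if c.reworked) / len(changes),
        "median_hours_to_green": median([c.hours_to_green for c in changes]),
    }


# Hypothetical week.
week = [
    MergedChange("release notes draft", hours_to_green=1.0, reworked=False),
    MergedChange("analytics backfill", hours_to_green=3.0, reworked=True),
    MergedChange("internal dashboard", hours_to_green=2.0, reworked=False),
    MergedChange("feature flag", hours_to_green=6.0, reworked=False),
]
scorecard = weekly_scorecard(week)
```

The median is used instead of the mean so that one long, wandering session does not dominate the weekly number.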

The bigger picture for SaaS

As agents that build agents spread, expect platform roadmaps to evolve in three visible ways:

- Products will ship official automations for common back office chores, built and maintained by the platform’s own agent.

- Enterprise plans will advertise execution controls that make autonomy safe: sandboxed runtimes, granular scopes, audit trails, and time limits.

- Marketplaces will list composable mini agents rather than only templates or plug-ins, with clear permissions and cost profiles.

Vendors that lean into this pattern should see two advantages. First, their apps will be easier to assemble by autonomous builders, which means more inclusion in customer projects. Second, their support burden should fall because tests and automations will be co-generated and co-maintained by the same agent that built the integration in the first place.

A note on reliability and trust

Autonomy is power, so mistakes carry weight. The answer is not to avoid autonomy. The answer is supervised autonomy, careful separation of duties, and a culture that treats tests as contracts. You would not give a junior engineer a shell on production without guardrails. Do not do that for an agent either. Use canaries, keep secrets sealed, cap the blast radius, and insist on reversible changes. When your tests are your contracts and your logs are your transcripts, trust becomes auditable instead of aspirational.
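To make "logs as transcripts" concrete, here is a minimal sketch of an append-only action log in which each entry commits to the one before it. The hash chaining is a technique added for this illustration, not something the article or Replit describes, and `AppendOnlyLog` and the sample commands are hypothetical. It keeps entries in memory; a real system would persist them to storage the agent cannot rewrite.

```python
import hashlib
import json
from typing import List

GENESIS = "0" * 64  # placeholder hash for the first entry


def _digest(timestamp: str, command: str, prev_hash: str) -> str:
    body = {"timestamp": timestamp, "command": command, "prev_hash": prev_hash}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AppendOnlyLog:
    """In-memory action log where every entry includes the previous entry's hash."""

    def __init__(self) -> None:
        self._entries: List[dict] = []

    def append(self, timestamp: str, command: str) -> dict:
        prev_hash = self._entries[-1]["hash"] if self._entries else GENESIS
        entry = {
            "timestamp": timestamp,
            "command": command,
            "prev_hash": prev_hash,
            "hash": _digest(timestamp, command, prev_hash),
        }
        self._entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev_hash = GENESIS
        for entry in self._entries:
            expected = _digest(entry["timestamp"], entry["command"], prev_hash)
            if entry["prev_hash"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True


# Hypothetical agent session.
log = AppendOnlyLog()
log.append("2025-09-10T14:00:00Z", "pytest tests/billing")
log.append("2025-09-10T14:05:00Z", "git commit -m 'handle null plan'")
chain_ok = log.verify()
```

Because each hash depends on the previous one, rewriting an earlier command after the fact makes `verify()` fail, which is what makes the transcript auditable.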

Why this matters now

Two lines crossed this fall. A product line crossed from demo to delivery, with an agent that can build and self-correct inside a real development loop. A capital line crossed from curiosity to conviction, with investors underwriting a non-hyperscaler that is productizing autonomy at scale. You can see Replit’s own description in “Introducing Agent 3, our most autonomous agent yet,” which highlights browser testing, long autonomous runs, and agent generation.

Whether you run a startup or a product group inside a large company, the takeaway is the same. The unit of work is shifting. The job is less about telling a model what to type and more about defining what good looks like and supervising the path to get there. Teams that adapt will ship more, with fewer people stuck in glue work, and a cadence that feels like compound interest.

The closing image

Imagine a workshop at night. A single supervisor sits at a bench with a checklist, while a fleet of tireless apprentices move through their tasks, test their work under bright lamps, and return with revisions until the checklist turns green. That is what Agent 3 promises for software. Not magic. A well lit room where good supervision turns autonomy into production.