Meet the Watch-and-Learn Agents Rewriting Operations

A new class of watch-and-learn agents can see your screen, infer intent, and carry out multi-app workflows with human-level reasoning. Here is how they work, where to pilot them first, and what controls to require before you scale.

Breaking: the screen is now an instruction manual

In October 2025, a new class of automation moved from research into the daily toolkit. Google introduced the Gemini 2.5 Computer Use model, which can navigate interfaces by reading the pixels on a screen and taking actions the way people do. Anthropic, OpenAI, Microsoft, UiPath, and others are rolling out similar capabilities that click, type, scroll, and recover from errors while keeping a human in the loop.

Think of these systems as capable new hires. They shadow an expert for a few sessions, draft a standard operating procedure, then offer to run the workflow while you watch. Instead of brittle scripts tied to one app’s internals, they learn from the visible screen, which is the universal interface for work. That shift puts human reasoning at the center of automation and shortens the path from insight to impact.

What "learn by watching" really means

A learn-by-watching agent is a loop of perception, planning, action, and reflection.

- Perception: The model ingests a screenshot or video frame and a short history of clicks and keystrokes. It reads labels, buttons, hints, error messages, and table headers, and segments the interface into actionable regions.

- Planning: It drafts a compact plan that maps a business goal, such as "file an invoice" or "reconcile a record", to concrete steps across one or more applications. The plan can update as the page changes or validations fail.

- Action: It emits structured actions like click, type, select from dropdown, drag, upload, or submit. Each action can be simulated in a dry run or executed for real.

- Reflection: It checks results against expectations, reads any error text, backtracks when needed, and requests human approval for sensitive moves.
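The four stages above can be sketched as a small loop. This is a toy illustration, not any vendor's implementation: the `Screen`, `Action`, `perceive`, `plan`, `act`, `reflect`, and `run` names are all hypothetical, and a dictionary of field labels stands in for the screenshot a real model would read.

```python
from dataclasses import dataclass, field


@dataclass
class Action:
    kind: str            # "type" or "submit" in this toy example
    target: str          # visible label of the element to act on
    value: str = ""
    sensitive: bool = False


@dataclass
class Screen:
    """Toy stand-in for a screenshot: visible field labels and their values."""
    fields: dict = field(default_factory=dict)
    submitted: bool = False


def perceive(screen):
    # Perception: a real agent reads pixels; here we just list visible labels.
    return sorted(screen.fields)


def plan(goal, screen, labels):
    # Planning: one step per field that does not yet hold the goal value,
    # followed by a submit step that is flagged as sensitive.
    steps = [Action("type", label, goal[label])
             for label in labels
             if label in goal and screen.fields[label] != goal[label]]
    if not screen.submitted:
        steps.append(Action("submit", "Submit", sensitive=True))
    return steps


def act(screen, action):
    if action.kind == "type":
        screen.fields[action.target] = action.value
    elif action.kind == "submit":
        screen.submitted = True


def reflect(screen, action):
    # Reflection: check that the screen now shows what the action intended.
    if action.kind == "type":
        return screen.fields[action.target] == action.value
    return screen.submitted


def run(goal, screen, approve, max_steps=10):
    log = []
    for _ in range(max_steps):
        steps = plan(goal, screen, perceive(screen))  # re-plan on every pass
        if not steps:
            break
        action = steps[0]
        if action.sensitive and not approve(action):
            log.append(("paused", action.target))     # wait for a human
            break
        act(screen, action)
        log.append(("ok" if reflect(screen, action) else "retry", action.target))
    return log
```

Re-planning on every pass is the design choice worth noting: the plan is rebuilt from what is currently visible, so a changed page changes the next step instead of breaking a fixed script.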

The loop sounds simple. The magic is the model’s ability to generalize. When the purchase order screen moves a field or an unfamiliar portal uses different labels, the agent still finds the right path by reasoning over what it sees rather than relying on a brittle selector.

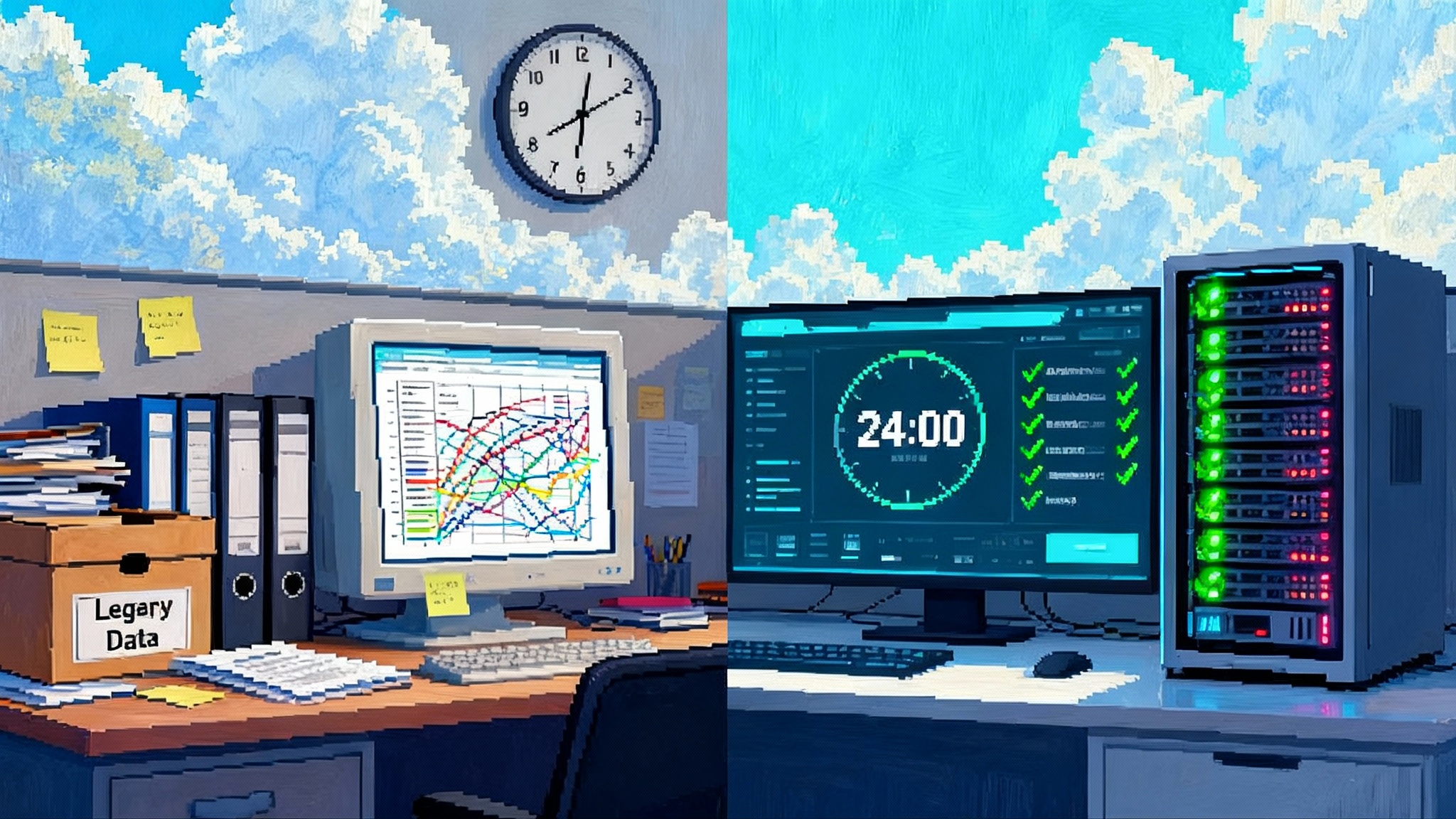

How this differs from classic RPA

Traditional RPA binds a robot to static selectors that identify elements in the Document Object Model or a native app tree. That is accurate when the interface is stable, and fragile when it is not. A minor front-end tweak can break a flow, trigger a ticket, and start a mini project to heal the bot.

Learn-by-watching treats the interface like a person would. It reads text, interprets layout, and uses visual context to decide what to do next. That reduces the dependency on hand-tuned selectors and opens cross-application tasks where no single bot owner has authority. It also changes the deployment math. Instead of weeks spent stabilizing a script, a pilot can start with recordings of a top performer doing the work in a safe sandbox. The agent proposes an SOP and an executable flow. You approve, then run with guardrails and logs.

A helpful metaphor: classic RPA is a precision arm that needs a jig for every part. Learn-by-watching is a careful apprentice with good eyesight and a checklist who can still deliver when the table shifts a few inches.
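To make the selector contrast concrete, here is a minimal sketch. It assumes two hypothetical renderings of the same form (`UI_V1` and `UI_V2`) and uses plain string similarity from Python's `difflib` as a crude stand-in for the visual reasoning a real model performs; it is not how any of the named products locate elements.

```python
from difflib import SequenceMatcher

# Two renderings of the same form. The redesign renamed the element ids
# and changed the capitalization of the button label.
UI_V1 = [{"id": "btn-submit", "text": "Submit invoice"},
         {"id": "btn-cancel", "text": "Cancel"}]
UI_V2 = [{"id": "action-primary", "text": "Submit Invoice"},
         {"id": "action-secondary", "text": "Cancel"}]


def find_by_selector(ui, element_id):
    # Classic RPA style: exact match on an internal identifier.
    return next((e for e in ui if e["id"] == element_id), None)


def find_by_label(ui, wanted, threshold=0.6):
    # Watch-and-learn style (approximated): pick the element whose visible
    # text is closest to what the step asks for, or nothing if none is close.
    def score(element):
        return SequenceMatcher(None, wanted.lower(), element["text"].lower()).ratio()

    best = max(ui, key=score)
    return best if score(best) >= threshold else None
```

The selector lookup succeeds on `UI_V1` and returns `None` on `UI_V2`, while the label lookup still finds the submit button after the redesign. The threshold matters: returning nothing for a weak match is what lets the agent stop and ask instead of clicking the nearest guess.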

From observation to SOP to reversible automation

Teams that are having success follow a practical pathway.

1) Capture the real work

- Record 5 to 15 representative sessions of an expert completing the process in a nonproduction environment. Include failure cases and uncommon branches.

- Annotate the business purpose, inputs, outputs, and acceptance criteria. If policy allows, capture tooltips, validations, and error text, since those help the model reason.

2) Auto-draft the SOP

- The agent clusters recordings into a canonical flow with branches for frequent variants.

- It generates a step list with screenshots, expected inputs and outputs, and success checks. Good systems explain why a step exists, which helps with audits and training.
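As a rough illustration of the clustering step, the sketch below treats each recording as a list of step names and groups identical sequences. The `draft_sop` function and the `min_branch_share` cutoff are hypothetical; a real system would cluster on much richer signals than exact sequence equality.

```python
from collections import Counter


def draft_sop(recordings, min_branch_share=0.2):
    """Group recorded step sequences into a canonical flow plus variants."""
    if not recordings:
        raise ValueError("at least one recording is required")
    counts = Counter(tuple(steps) for steps in recordings)
    total = len(recordings)
    (canonical, _), *others = counts.most_common()
    return {
        # The most frequent sequence becomes the main path.
        "canonical": list(canonical),
        # Frequent variants become documented branches.
        "branches": [list(seq) for seq, n in others if n / total >= min_branch_share],
        # Rare variants are surfaced for a human to accept or discard.
        "needs_review": [list(seq) for seq, n in others if n / total < min_branch_share],
    }
```

Keeping rare paths in a separate `needs_review` bucket, instead of dropping them, reflects the advice to record failure cases and uncommon branches: they may be exactly the exceptions the SOP has to cover.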

3) Propose a safe automation

- The agent turns the SOP into an executable draft. Sensitive steps are flagged for human-in-the-loop approval.

- The draft includes test cases, expected results, and a dry-run mode that only reads and simulates actions in a cloned environment.
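One way to picture an executable draft with approval flags and a dry-run mode is shown below. Everything here is an assumption for illustration: the `SENSITIVE_KINDS` rule, the `compile_sop` and `execute` functions, and the dictionary that stands in for the cloned environment.

```python
import copy

SENSITIVE_KINDS = {"submit", "delete", "pay"}  # hypothetical risk rule


def compile_sop(sop_steps):
    # Turn (kind, target, value) tuples into steps, flagging sensitive ones.
    return [{"kind": kind, "target": target, "value": value,
             "needs_approval": kind in SENSITIVE_KINDS}
            for kind, target, value in sop_steps]


def execute(flow, state, dry_run=True, approve=lambda step: False):
    # A dry run works on a copy, so the real state is never touched.
    working = copy.deepcopy(state) if dry_run else state
    report = []
    for step in flow:
        if step["needs_approval"] and not dry_run and not approve(step):
            report.append(("blocked", step["kind"], step["target"]))
            break  # stop at the first unapproved sensitive step
        if step["kind"] == "type":
            working[step["target"]] = step["value"]
        elif step["kind"] == "submit":
            working["submitted"] = True
        report.append(("simulated" if dry_run else "executed",
                       step["kind"], step["target"]))
    return working, report
```

Two defaults carry the safety argument: `dry_run=True` means a caller has to opt in to real execution, and the default `approve` callback denies everything, so a sensitive step runs only when someone explicitly supplies an approval.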

4) Ship with a brake and a black box recorder

- The runtime records actions, hashed or redacted frames, and system messages for audit. If a step deviates from the SOP or a risk rule triggers, the agent pauses for help.

- A one-click rollback returns the state to the last safe checkpoint. When a process changes, the agent proposes an SOP update and waits for approval to adapt.
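The brake and the recorder can be sketched together. The `GuardedRun` class below is hypothetical and keeps all state in an in-memory dictionary; rolling back a real business system usually needs compensating transactions, which this sketch does not attempt.

```python
import copy
import hashlib


class GuardedRun:
    """Runs SOP steps in order, logs hashed frames, pauses on deviation."""

    def __init__(self, state, sop_steps):
        self.state = state
        self.sop_steps = list(sop_steps)
        self.position = 0
        self.paused = False
        self.log = []
        self._checkpoint = (copy.deepcopy(state), 0)

    def _record(self, event, frame_text=""):
        # Store a hash of the frame, not the frame itself.
        frame_hash = hashlib.sha256(frame_text.encode("utf-8")).hexdigest()
        self.log.append({"event": event, "frame_sha256": frame_hash})

    def step(self, name, mutate, frame_text=""):
        in_range = self.position < len(self.sop_steps)
        expected = self.sop_steps[self.position] if in_range else None
        if self.paused or name != expected:
            self.paused = True            # the brake: deviation from the SOP
            self._record(f"paused before {name!r}", frame_text)
            return False
        mutate(self.state)
        self.position += 1
        self._record(f"executed {name!r}", frame_text)
        return True

    def save_checkpoint(self):
        self._checkpoint = (copy.deepcopy(self.state), self.position)
        self._record("checkpoint saved")

    def rollback(self):
        saved_state, saved_position = self._checkpoint
        self.state.clear()
        self.state.update(copy.deepcopy(saved_state))
        self.position = saved_position
        self.paused = False
        self._record("rolled back")
```

In use, a step whose name does not match the next SOP step is never applied: the run pauses, the attempt is logged, and `rollback()` restores the last saved checkpoint before work resumes.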

The enterprise buy checklist

If you evaluate these systems this quarter, favor quick pilots but buy like a control owner. At minimum, insist on the following.

- Privacy model: Can screen processing run on device, or do screenshots leave your environment for cloud inference? If cloud is required, demand strict retention limits, delete-by-default, and training-use opt-outs. Anthropic has published clear defaults for screenshot lifecycle, which is the level of specificity you want from every vendor. See Anthropic on screenshot processing.

- Security attestations: Require current SOC 2 Type II and ISO 27001. For regulated environments, check ISO 27018, HIPAA, and FedRAMP where applicable.

- Governance and traceability: Ask for per-step audit trails with immutable logs and exportable traces. Reward vendors that provide legible thought summaries or structured traces so reviewers can reconstruct decisions.

- Approvals and roles: Map actions to least-privilege identities. Sensitive steps should require approvals that are versioned, queryable, and easy to test.

- Rollback and containment: Demand dry runs, checkpointing, and transaction-level rollback. Ask for kill switches by user, process, and environment.

- Data boundaries: Use field-level redaction, allowlists, and network egress controls for agent browsers and virtual machines.

- Deployment options: Compare cloud, on premises, and virtual desktop setups. Understand the performance and cost tradeoffs of local inference, private cloud, and vendor-managed sandboxes.
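When a vendor claims per-step audit trails with immutable logs, it helps to know what a tamper-evident log can look like. The sketch below chains each entry to the previous one with a SHA-256 hash; the `append_entry` and `verify` functions and the entry fields are illustrative assumptions, and hash chaining makes edits detectable, not impossible.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry


def _digest(body):
    # Stable serialization so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log, step, actor, detail):
    body = {"step": step, "actor": actor, "detail": detail,
            "prev": log[-1]["hash"] if log else GENESIS}
    log.append({**body, "hash": _digest(body)})
    return log


def verify(log):
    prev_hash = GENESIS
    for entry in log:
        body = {key: entry[key] for key in ("step", "actor", "detail", "prev")}
        if entry["prev"] != prev_hash or entry["hash"] != _digest(body):
            return False  # an entry was altered, removed, or reordered
        prev_hash = entry["hash"]
    return True
```

Because the entries are plain dictionaries, `json.dumps(log)` gives an exportable trace, and a reviewer can rerun `verify` on the export to confirm nothing changed after the fact.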

Where to run your first pilot

Pick a process that is high volume, rule bound, and screen heavy, but not safety critical. Three patterns tend to work.

- Multi-system lookups and entry: For example, pull a customer’s last three invoices from a finance portal, cross-check contract terms, and write a concise exception note in the case system.

- Back-office publishing: Update listings in a marketplace dashboard or maintain a knowledge base across two content management systems.

- Structured onboarding: Vendor onboarding in a procurement portal that needs document collection, field validation, and status updates across two apps.

A practical four-week timeline looks like this: treat the first two weeks as observation and SOP drafting, the third week as sandbox automation with a human in the loop, and the fourth week as monitored production with caps. Many teams report that this compresses a quarter of work into a few measurable weeks without raising risk.

KPIs that change minds

Define clear measures before you start. The right metrics make the debate objective.

- Time to automation: Measure from kickoff to the first supervised run that meets target accuracy. Aim for 21 days or less for an initial pilot. Track the percentage of steps that run unattended by day 7, day 14, and day 21.

- Error rate: Define what "wrong" means per step. For data entry, use a field mismatch rate. For retrieval, use a wrong record rate. Track pre-pilot human error, supervised agent error, and unattended agent error. Beat human error with supervision before removing training wheels.

- Variance reduction: Standardize the path to completion. Measure the spread in step counts and handle times across operators. A good pilot narrows variance, which reduces downstream exceptions.

- Review burden: Track time spent on approvals and interventions per 100 runs. This should decline as the agent learns and as your risk rules improve.

- Rework and rollback: Count how many runs needed a rollback and how long recovery took. This is a proxy for operational risk.
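Several of these measures reduce to simple arithmetic once runs are logged. The sketch below assumes a hypothetical per-run record with `handle_seconds`, `review_minutes`, and `rolled_back` fields, and uses the population standard deviation as one possible measure of spread.

```python
from statistics import pstdev


def field_mismatch_rate(expected, actual):
    """Share of expected fields whose entered value differs."""
    mismatches = sum(1 for name, value in expected.items()
                     if actual.get(name) != value)
    return mismatches / len(expected)


def summarize(runs):
    """Spread of handle times plus review and rollback load per 100 runs."""
    n = len(runs)
    return {
        "handle_time_spread": pstdev([r["handle_seconds"] for r in runs]),
        "review_minutes_per_100_runs": 100 * sum(r["review_minutes"] for r in runs) / n,
        "rollbacks_per_100_runs": 100 * sum(1 for r in runs if r["rolled_back"]) / n,
    }
```

Computing the same summary for the pre-pilot human baseline, the supervised agent, and the unattended agent gives the three-way comparison the error-rate bullet calls for.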

Failure modes to respect and the guardrails that stop them

- User interface drift: The front end changes and the agent’s perception gets confused. Mitigation: nightly synthetic tasks, contract tests for critical fields, and a stop flag if a key locator fails.

- Shadow automation: A power user turns on an agent in production without approvals. Mitigation: signed policies for execution, a governed service that enforces role checks, and logging of every action.

- Data leakage: Screenshots might capture sensitive data, or traces may persist beyond policy. Mitigation: redaction at capture time, ephemeral workers, and vendor contracts with delete-by-default and training opt-outs. The published approach for the Gemini 2.5 Computer Use model and other enterprise products shows how vendors are converging on stronger controls.

- Prompt injection and hostile pages: A page hides instructions designed to hijack an agent. Mitigation: content provenance checks, allowlists for actions on untrusted domains, and human approvals for sensitive steps.

- Misclicks with consequences: An agent clicks the wrong delete button. Mitigation: two-factor checks for destructive actions, read-first patterns, and staging outputs before any commit.
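A few of these mitigations can be expressed as small, testable rules. The sketch below is illustrative only: the allowlisted host, the set of destructive actions, and the card-number pattern are made-up examples, and real redaction needs far broader coverage than one regular expression.

```python
import re
from urllib.parse import urlparse

ALLOWED_HOSTS = {"portal.example.com"}          # hypothetical allowlist
DESTRUCTIVE = {"delete", "submit_payment"}      # hypothetical risk rule
CARD_PATTERN = re.compile(r"\b\d{4}[ -]?\d{4}[ -]?\d{4}[ -]?\d{4}\b")


def redact(text):
    # Redaction at capture time: mask 16-digit card-like numbers before
    # the text is logged or sent anywhere.
    return CARD_PATTERN.sub("[REDACTED]", text)


def gate(action, url, confirmed=False):
    # Decide whether an action may run on the page at `url`.
    host = urlparse(url).hostname
    if host not in ALLOWED_HOSTS:
        return "block"               # untrusted domain: no actions at all
    if action in DESTRUCTIVE and not confirmed:
        return "needs_approval"      # destructive moves wait for a human
    return "allow"
```

Checking the domain before the action type is deliberate: a hostile page should not be able to trigger even an approval prompt, since the prompt itself could be used to mislead the reviewer.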

How this fits with your current RPA estate

No one is asking you to rip out working robots. Treat learn-by-watching as a multiplier on top of your existing portfolio. Use it to automate the long tail of processes that were too variable for selectors or too cross application for a single bot owner. Use it to document what your best operators actually do, then feed those SOPs back into your process and test teams.

When integrated with enterprise suites, you get the best of both worlds. Explainable traces and summaries help with change control and audits. Computer use skills unlock the hard pages that resisted selector-based bots. If you are charting the broader agentic shift in software, the perspective in "Agent 3 marks the shift" pairs well with the operational lens here. If your teams are exploring browser-native agents, the angle in "Comet and the browser agent" offers a useful complement. Security leaders should also review "Agentic security with Akto’s MCP" to see how policy and observability must evolve alongside these agents.

A concrete example that resonates with finance

Today, month-end exception handling in many organizations lives in spreadsheets and screenshots. Different analysts resolve vendor name mismatches in different ways, queues spike, and leadership cannot see where the time goes. With a watch-and-learn pilot, you capture ten real resolutions, the agent drafts a step-by-step SOP, central finance tweaks the policy text, and the agent carries out the steps in the portal under human supervision. Within two weeks, exception handle time drops, variance shrinks, and the audit trail finally becomes searchable and complete.

Extend the same approach to vendor onboarding. The agent collects documents, validates fields across two systems, writes a status note, and flags any exceptions to a queue with screenshots and reasoning attached. Over time, fewer cases need human review, and the ones that do arrive with crisp context.

Operating model for scale

Pilots are simple. Scaling requires discipline and a light operating model.

- Ownership: Assign a product owner for each automated process and name a control owner for the risks involved. Draft a one-page RACI so everyone knows who approves what.

- Standards: Maintain a shared library of risk rules, approval templates, and redaction patterns. Reuse these across processes to reduce variance and speed audits.

- Evaluation: Run continuous evaluation on synthetic tasks to track regression. Combine those with spot checks on real runs to detect drift early.

- Training and enablement: Teach operators how to annotate their screens, explain their steps, and suggest improvements. The better the observation, the better the SOP.
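The continuous evaluation item can start as a simple comparison of pass rates on synthetic tasks. In the sketch below, the task names, the `pass_rate` and `detect_regression` functions, and the 5-point tolerance are all assumptions chosen for illustration.

```python
def pass_rate(results):
    """Fraction of synthetic runs that passed (results are booleans)."""
    return sum(results) / len(results)


def detect_regression(baseline, current, tolerance=0.05):
    """Return tasks whose pass rate dropped by more than `tolerance`,
    plus any baseline task that is missing from the current run."""
    flagged = []
    for task, old_rate in baseline.items():
        if task not in current:
            flagged.append(task)          # a task that stopped running is a signal too
        elif old_rate - current[task] > tolerance:
            flagged.append(task)
    return sorted(flagged)
```

Run against a stored baseline each night, a non-empty result is a natural trigger for the stop flag and the human spot checks described above.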

What to ask vendors in the demo

You can learn a lot from ten minutes of hands-on time. Ask the vendor to:

- Run in a fresh tenant with your test pages, without preloading selectors.

- Explain how the model reasons about ambiguous labels and show how it chooses between two similar buttons.

- Trigger a validation error and recover, with logs that make the decision process legible.

- Demonstrate human approvals on a sensitive step and show you the immutable audit of that approval.

- Execute a rollback and then propose an SOP update when the interface changes.

If the demo cannot cover these asks, the product is probably not ready for production scale.

The near future: quarters to days

When a model can watch a task on Monday, draft the SOP by Tuesday, and run a supervised version on Wednesday, operations timelines compress. That is the acceleration everyone feels this fall. The caution is equally clear. Without governance, you get silent errors, quiet policy drift, and data that wanders outside your boundary.

The platforms are converging on the right features. Google’s recent launch brought a credible browser-first agent with measurable transparency. Anthropic is explicit about screenshot lifecycle and training defaults. OpenAI’s research previews point to general computer use, and Microsoft continues to integrate explainability and governance into its automation suite. Expect the next wave to bring better memory, richer action vocabularies, faster approvals, and safer human-in-the-loop experiences.

What leaders should do next

- Start small in a controlled environment where you can measure outcomes.

- Define time to automation, error rate, and variance reduction before the pilot begins.

- Require auditability and reversibility as table stakes.

- Integrate with your existing RPA estate to capture quick wins without rewriting stable bots.

- Build a culture where careful observation pairs with strong controls. That is how you turn months into weeks, and weeks into days, without trading away trust.