Publishers seize AI search with ProRata.ai’s Gist Answers

A startup-led, on-domain answer engine lets newsrooms run LLM Q&A on their own sites, keep readers and data in-network, and turn curiosity into revenue with contracts, attribution rules, and answer-native UX.

Publisher-owned AI search has arrived

For two years, publishers watched their reporting power off-site chatbots and answer engines. Traffic softened, referral quality dipped, and attribution turned into a guessing game. Now the pendulum is swinging back. With ProRata Gist Answers, newsrooms can run large language model (LLM) question answering directly on their own domains, under their own editorial rules, telemetry, and business model. The idea is both simple and radical: do not let external bots scrape, summarize, and monetize your work. Host the brain at home and keep the value in the building.

Gist Answers is part widget and part infrastructure. To readers, it feels like a conversational answer box that lives on article pages and site search. Under the hood, it is a retrieval and generation pipeline wired into your content management system. It respects access controls and paywalls, cites your pages, logs the data you need, and supports revenue units built for journalism rather than generic display ads. A reader asks a question, gets a crisp, grounded answer, taps through to your sources, and stays on your site.

What on-domain LLM Q&A changes right now

The last search era sent users from search engines to publishers. The new answer era often stops before the click. On-domain Q&A flips that dynamic. It pulls curiosity back to the source of truth and makes the publisher site the first and last stop for understanding a topic. Instead of hoping an external model quotes you, your newsroom runs the model that answers the question.

Here is what changes on day one:

- Session shape: A reader’s journey starts with a question and yields an answer panel tied to your reporting, not a list of blue links. The panel can cite your three most relevant articles and offer follow-ups that expose depth of coverage.

- Data fidelity: You control scope and freshness. Paywalled content stays paywalled. Embargoed content stays out. Corrections propagate as soon as they are published.

- Measurable value: Because the answer experience runs on your page, you can attribute revenue to answers, rank evergreen explainers by answer assist, and price sponsorships around high-intent topics.

The AIO playbook: contracts, attribution, and revenue

Artificial intelligence optimization, or AIO, is to the answer era what SEO was to the link era. For publishers, the playbook already has three pillars: contracts, attribution, and revenue share. Each is concrete. Each is measurable.

1) Contracts that actually bind the model

Treat any answer engine as a distribution partner and write terms like you would for a newswire or video syndication deal.

- Scope of use: Define which sections and feeds are included. Hold back investigative or sensitive coverage until you opt in.

- Storage and caching: Allow short caches for speed. Require immediate invalidation on correction or takedown.

- Training rights: Separate training from inference. Permit retrieval-augmented generation against your index. Withhold general training rights without an explicit addendum.

- Audit and deletion: Reserve the right to audit embeddings and logs for compliance. Require deletion of derived artifacts upon request.

- Safety and liability: Specify refusal categories, incident timelines, and indemnification boundaries.
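These terms can also be mirrored in code so the pipeline checks them before every use, not only at signing. The sketch below is illustrative: `ContractTerms` and `permitted` are hypothetical names, and the fields cover only scope, caching, and training rights from the list above.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class ContractTerms:
    # Hypothetical machine-readable mirror of the signed terms.
    included_sections: FrozenSet[str]   # scope of use
    max_cache_seconds: int              # storage and caching window
    allow_rag: bool = True              # inference against your index
    allow_training: bool = False        # needs an explicit addendum


def permitted(terms: ContractTerms, use: str, section: str,
              cache_seconds: int = 0) -> bool:
    """Return True only if this use of this section is covered by the terms."""
    if section not in terms.included_sections:
        return False  # held-back sections (e.g. investigations) stay out
    if cache_seconds > terms.max_cache_seconds:
        return False  # caching longer than the agreed window
    if use == "rag":
        return terms.allow_rag
    if use == "training":
        return terms.allow_training
    return False  # any use not named in the contract is denied by default
```

Denying unknown uses by default keeps the code aligned with the contract when a partner proposes a new kind of use.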

2) Attribution that favors your brand

Attribution in an answer product is not courtesy. It is navigation. Build rules that make your reporting the default path forward when you are the source of truth.

- Source pins: Fix authoritative sources in a visible position that travels with the text as the user scrolls.

- Canonical preference: When coverage overlaps, prefer your originals and definitive explainers.

- Follow-up prompts: Shape conversation with prompts that map to your coverage. A climate answer can offer a jump to your wildfire tracker, not a generic external link.
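Canonical preference can be expressed as a sort key. The sketch below shows one possible ordering and assumes each retrieved source carries hypothetical `is_original` and `is_explainer` flags plus a relevance score from retrieval. Among sources that clear a relevance floor, it puts the home publisher's coverage ahead of external coverage, originals ahead of rewrites, and explainers next, with relevance breaking ties.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Source:
    url: str
    publisher: str
    is_original: bool    # original reporting rather than a rewrite
    is_explainer: bool   # definitive explainer for the topic
    relevance: float     # score from the retrieval stage


def order_sources(sources: List[Source], home_publisher: str,
                  min_relevance: float = 0.5, limit: int = 3) -> List[Source]:
    """Order overlapping sources so the home publisher's originals lead."""
    # Only sources above the relevance floor count as overlapping coverage.
    eligible = [s for s in sources if s.relevance >= min_relevance]

    def key(s: Source):
        # False sorts before True, so each flag pushes preferred items up.
        return (
            s.publisher != home_publisher,  # home coverage first
            not s.is_original,              # originals before rewrites
            not s.is_explainer,             # then explainers
            -s.relevance,                   # relevance breaks ties
        )

    return sorted(eligible, key=key)[:limit]
```

Because the order is strictly lexicographic, any eligible home source outranks any external one. If that proves too aggressive, a weighted score is the softer alternative.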

3) Revenue share that matches intent

Answer inventory is not display inventory. The unit is a resolved intent.

- Cost per answer session: When a sponsor wants presence on definitive explainers, sell answer sessions with caps and topic safeguards.

- Affiliate and commerce routing: For product journalism, keep commerce links inside the answer component and attribute downstream sales to answer assist.

- Knowledge sponsorship: Allow a brand to underwrite a recurring answer card on a beat, with strict church-and-state controls. The sponsorship buys presence, not influence.
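Selling answer sessions with caps and topic safeguards takes a small amount of bookkeeping. This is a sketch under stated assumptions. `SponsorshipLedger`, the per-topic cap, and the blocked-topic list are hypothetical, and a production system would persist the counts instead of holding them in memory.

```python
from collections import Counter
from typing import Iterable


class SponsorshipLedger:
    """In-memory tally of sponsored answer sessions (hypothetical design)."""

    def __init__(self, cap_per_topic: int, blocked_topics: Iterable[str]):
        self.cap = cap_per_topic
        self.blocked = set(blocked_topics)   # topics never sold, e.g. tragedies
        self.served = Counter()              # (sponsor, topic) -> sessions served

    def try_serve(self, sponsor: str, topic: str) -> bool:
        """Attach the sponsor to this session only if the safeguards allow it."""
        if topic in self.blocked:
            return False
        if self.served[(sponsor, topic)] >= self.cap:
            return False
        self.served[(sponsor, topic)] += 1
        return True

    def invoice(self, sponsor: str, price_per_session: float) -> float:
        """Bill the sponsor for every session actually served."""
        sessions = sum(n for (s, _), n in self.served.items() if s == sponsor)
        return sessions * price_per_session
```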

Cross-publisher knowledge and the network effect

The step change will come from a federation of publishers running compatible Q&A stacks. When multiple trusted outlets opt into a shared knowledge graph, three things happen:

- Coverage expands: If your newsroom owns city hall and a partner owns state policy, the answer engine can traverse both in a single session while preserving attribution and revenue splits.

- Redundancy becomes quality: When three independent explainers converge on how a new law works, the engine can answer with more confidence and cite all three. Contradictions become review queues for editors.

- Long tail resilience: Niche but vital topics, from school budgets to transit changes, stay fresh because authority lives with the reporters closest to the beat.

The network effect is not theoretical. It appears as faster time to first credible answer, higher satisfaction scores, and a corpus of verified statements that the federation can share, license, or protect.
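Preserving revenue splits across a federation requires an explicit allocation rule. The function below is one purely illustrative rule, not ProRata's actual terms. The hosting site keeps a fixed share for running the session (the 20 percent default is an assumption), and cited publishers divide the remainder in proportion to how many citations each received.

```python
from collections import Counter
from typing import Dict, List


def split_revenue(session_revenue: float, cited_publishers: List[str],
                  host: str, host_share: float = 0.2) -> Dict[str, float]:
    """Split one session's revenue between the host and cited publishers."""
    if not cited_publishers:
        return {host: session_revenue}  # nothing cited: host keeps it all
    payout: Counter = Counter()
    payout[host] += session_revenue * host_share
    pool = session_revenue * (1 - host_share)
    counts = Counter(cited_publishers)  # one list entry per citation
    total = sum(counts.values())
    for publisher, n in counts.items():
        payout[publisher] += pool * n / total
    return dict(payout)
```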

From SEO to answer-native experiences

SEO optimized pages for ranking. Answer-native design optimizes for resolution. That pushes product and editorial teams to build for questions, context windows, and follow-ups.

- Information density: Answers must be short enough to scan yet durable under scrutiny. Aim for two tight paragraphs with a source stack and one chart or key stat block.

- Conversational wayfinding: Replace generic related stories with explicit options such as, “Want the timeline, the money trail, or the key players?” Each option deepens mastery.

- Stateful sessions: When a user moves from “What passed last night” to “How will this change property taxes,” carry context across turns and cite fresh sources.

For editorial teams, answer-native means building explainer arsenals and structured facts. For product teams, it means caching plans, index freshness budgets, and human-in-the-loop review. If your organization is exploring lean agent stacks, study how AgentKit compresses the agent stack to cut latency and cost while keeping control.
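Stateful sessions can start simply. Fold recent questions into the retrieval query, and remember which sources were already cited so the next turn favors new ones. This sketch assumes a hypothetical `AnswerSession` object held server-side for each reader. Concatenating questions is a crude stand-in for proper query rewriting.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class AnswerSession:
    """Per-reader conversation state (hypothetical structure)."""
    max_turns: int = 3                                  # prior questions to carry
    turns: List[str] = field(default_factory=list)
    cited_ids: Set[str] = field(default_factory=set)

    def retrieval_query(self, question: str) -> str:
        # Prepend recent questions so "this" can resolve to the earlier topic.
        context = self.turns[-self.max_turns:] if self.max_turns > 0 else []
        return " | ".join(context + [question])

    def record(self, question: str, citations: List[str]) -> None:
        self.turns.append(question)
        self.cited_ids.update(citations)

    def prefer_fresh(self, candidate_ids: List[str]) -> List[str]:
        # Stable sort: sources not yet cited this session move to the front.
        return sorted(candidate_ids, key=lambda cid: cid in self.cited_ids)
```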

How developers can wire it into agent stacks

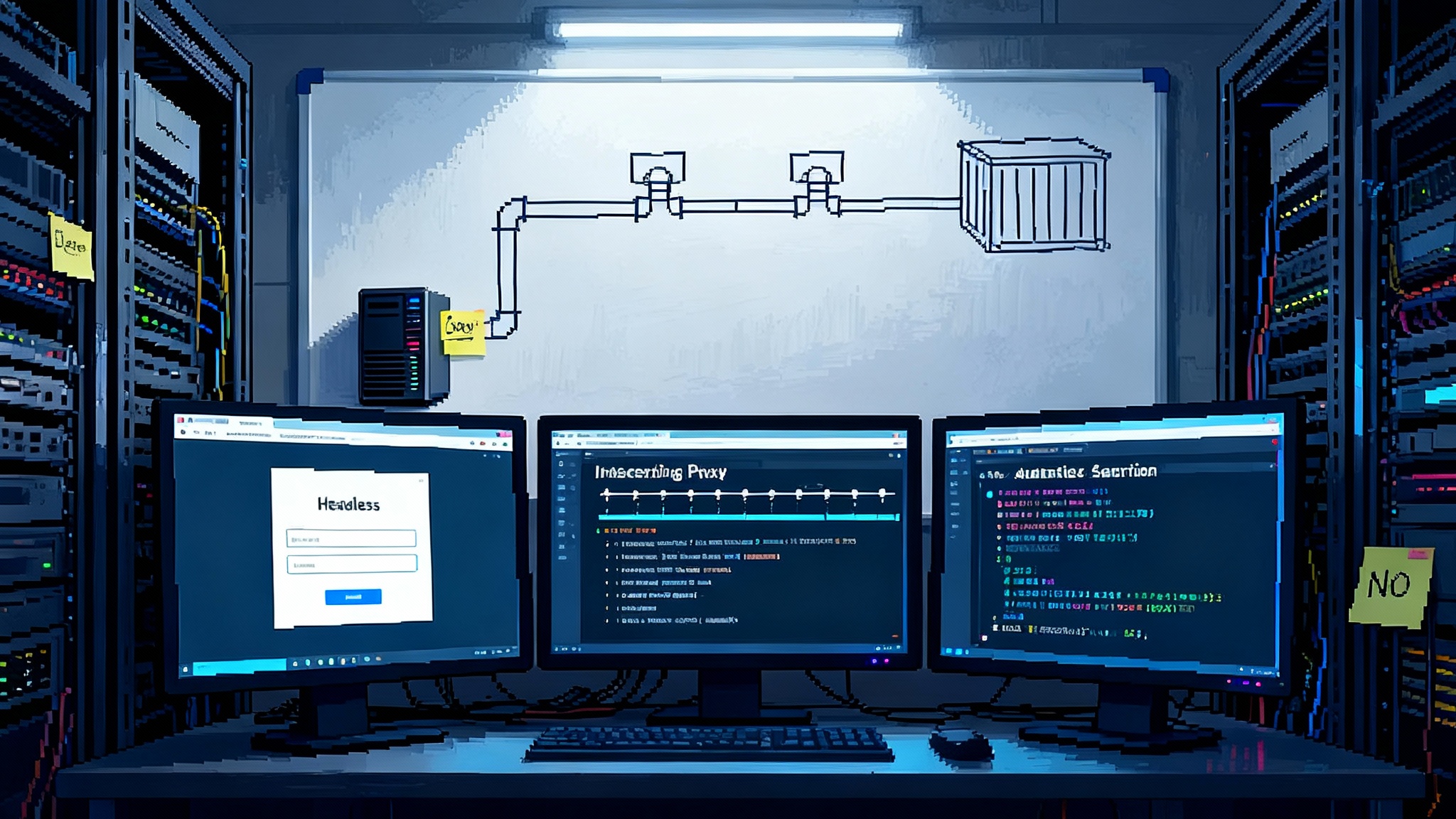

Gist Answers is more than a widget. It is a stack that plugs into your agents and data layer. Below is a blueprint for a first-party retrieval-augmented generation pipeline with telemetry and safety.

Data flow and storage

- Ingest: Pull articles, newsletters, and data graphics from your CMS and archives. Normalize into a canonical schema: id, url, publish_time, section, author, paywall, access_tier, tags, text, tables, media.

- Chunking: Break content into semantically coherent chunks. Prefer natural boundaries like subheads and list items. Keep chunks small enough for efficient retrieval but large enough to preserve argument structure.

- Embeddings and index: Generate embeddings with your preferred model and write to a vector store keyed by canonical id. Track an embeddings_version to enable rollbacks when models change. Teams experimenting with low-cost customization can learn from DIY fine-tuning of open LLMs to align answers with house style.

- Metadata guardrails: Attach safety tags such as legal_sensitive or trauma_content so your answer chain can filter and route to stricter policies when needed.
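Here is a minimal sketch of the canonical schema and a boundary-aware chunker. It assumes article text arrives as paragraphs separated by blank lines, with subheads prefixed by `#`. Real CMS exports will need their own parsing. The field types, the `max_chars` budget, and the `embeddings_version` default are illustrative choices, and tables and media are left out for brevity.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Article:
    # Canonical schema fields; the types are assumptions.
    id: str
    url: str
    publish_time: str             # ISO 8601 timestamp
    section: str
    author: str
    paywall: bool
    access_tier: str
    tags: List[str] = field(default_factory=list)
    text: str = ""                # tables and media omitted in this sketch


@dataclass
class Chunk:
    article_id: str               # canonical id, used as the index key
    ordinal: int                  # position within the article
    text: str
    embeddings_version: str       # lets you roll back after a model change


def chunk_article(article: Article, max_chars: int = 1200,
                  embeddings_version: str = "v1") -> List[Chunk]:
    """Split on blank lines, break at subheads, keep chunks near max_chars."""
    chunks: List[Chunk] = []
    buf: List[str] = []

    def flush() -> None:
        if buf:
            chunks.append(Chunk(article.id, len(chunks), "\n\n".join(buf),
                                embeddings_version))
            buf.clear()

    for para in (p.strip() for p in article.text.split("\n\n")):
        if not para:
            continue
        is_subhead = para.startswith("#")  # assumption: subheads start with '#'
        # A single paragraph longer than max_chars still becomes one chunk.
        if is_subhead or sum(map(len, buf)) + len(para) > max_chars:
            flush()
        buf.append(para)
    flush()
    return chunks
```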

Retrieval and generation

Use a layered retrieval strategy:

- Stage 1 lexical filter: A fast keyword or inverted index to prune candidates.

- Stage 2 semantic retrieval: Vector search for semantic neighbors within policy-compliant scopes.

- Stage 3 re-ranker: A cross-encoder that scores each candidate's relevance to the question, combined with boosts for authority and recency and bonuses for original reporting and policy tags like explainer.
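The three stages can be sketched as a cascade over in-memory chunks. Every component is a stand-in. Lexical matching is plain token overlap rather than an inverted index. The vectors are assumed to be precomputed by your embedding model. The stage 3 scorer is a hand-weighted formula, not a real cross-encoder. The boost weights and the 30-day recency decay are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Set


@dataclass
class Candidate:
    chunk_id: str
    text: str
    vector: Sequence[float]   # assumed precomputed by your embedding model
    tags: Set[str]            # e.g. {"original_reporting", "explainer"}
    age_days: float


BOOSTS = {"original_reporting": 0.15, "explainer": 0.10}  # hypothetical weights


def tokens(s: str) -> Set[str]:
    return {w.strip(".,?!").lower() for w in s.split() if w.strip(".,?!")}


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(query: str, query_vec: Sequence[float], corpus: List[Candidate],
             k_lex: int = 200, k_vec: int = 60, k_final: int = 6) -> List[Candidate]:
    q = tokens(query)
    # Stage 1: lexical prune, keeping chunks that share at least one query term.
    overlap = {c.chunk_id: len(q & tokens(c.text)) for c in corpus}
    lex = sorted((c for c in corpus if overlap[c.chunk_id]),
                 key=lambda c: overlap[c.chunk_id], reverse=True)[:k_lex]
    # Stage 2: semantic similarity against precomputed vectors.
    sem = sorted(((cosine(query_vec, c.vector), c) for c in lex),
                 key=lambda pair: pair[0], reverse=True)[:k_vec]

    # Stage 3: stand-in re-ranker, similarity plus recency and tag boosts.
    def final_score(pair) -> float:
        sim, c = pair
        recency = 0.1 * math.exp(-c.age_days / 30)  # hypothetical 30-day decay
        return sim + recency + sum(BOOSTS.get(t, 0.0) for t in c.tags)

    ranked = sorted(sem, key=final_score, reverse=True)
    return [c for _, c in ranked[:k_final]]
```

Because the stages form a cascade, a chunk with a strong vector match but no shared terms never reaches stage 2. The example chain later in this piece avoids that by running lexical and vector search independently and merging the results.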

Then generate with clear, testable instructions:

- Cite at least two sources with canonical preference rules.

- Do not invent numbers. If a numeric claim is uncertain, surface a range and link to the relevant paragraph.

- Summarize first, then offer three follow-up questions that map to your navigation model.
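These rules become testable only when something checks the draft. This sketch assumes the model marks citations as `[n]`, where n indexes into the list of retrieved chunks. It treats any number in the answer that does not appear verbatim in a source as invented. Verbatim matching is deliberately strict. It will flag "4.2 percent" in a draft whose source says "4.2%", which is usually the safer failure.

```python
import re
from typing import Dict, List

NUMBER = re.compile(r"\d+(?:[.,]\d+)*%?")
CITATION = re.compile(r"\[(\d+)\]")


def check_draft(draft: str, sources: List[str], cite_min: int = 2) -> Dict[str, object]:
    """Check the cite_min and no_new_numbers rules against retrieved sources."""
    cited = {int(n) for n in CITATION.findall(draft)}
    valid = {i for i in cited if 1 <= i <= len(sources)}   # ignore dangling [n]
    body = CITATION.sub("", draft)                          # markers are not claims
    known = {n for s in sources for n in NUMBER.findall(s)}
    invented = [n for n in NUMBER.findall(body) if n not in known]
    enough = len(valid) >= cite_min
    return {"enough_citations": enough, "invented_numbers": invented,
            "ok": enough and not invented}
```

A failed check could trigger one regeneration, or fall back to showing the source list without a summary.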

Telemetry and feedback

Instrument at the token and session levels:

- Query features: intent type, locale, device, and referrer.

- Retrieval features: which chunks won and their scores.

- Answer features: token count, latency, model usage, safety triggers.

- Outcomes: source clicks, scroll depth, satisfaction vote, subscription events, commerce events.

Create a daily quality report that highlights answer satisfaction rate, source click-through rate, contradicted claims, and a freshness score that penalizes stale citations.
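The freshness score needs a concrete definition before it can go into that report. One option, assumed here rather than taken from any product, is exponential decay. Each citation gets a weight that halves every `halving_days` (a hypothetical 30-day default), and the score is the mean weight across citations.

```python
from datetime import datetime
from typing import Sequence


def freshness_score(citation_times: Sequence[datetime], now: datetime,
                    halving_days: float = 30.0) -> float:
    """Mean citation weight, where each weight halves every `halving_days`.

    1.0 means every cited piece was published just now; stale citations
    pull the score toward 0.
    """
    if not citation_times:
        return 0.0
    weights = []
    for published in citation_times:
        age_days = max((now - published).total_seconds(), 0.0) / 86400
        weights.append(0.5 ** (age_days / halving_days))
    return sum(weights) / len(weights)
```

With a 30-day setting, one brand-new citation and one 30-day-old citation score 0.75.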

Safety and compliance

Route every turn through a safety router:

- Content policy classification: adult, self-harm, medical, or elections. Apply stricter prompts or hard stops where required.

- High-risk topics: Health and finance answers should default to conservative prompts that emphasize caution and link to your medical or finance explainers.

- Privacy protection: Redact personal information before indexing. Apply name matching to suppress answers about private individuals who are not newsworthy.
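A safety router can be a small policy table plus a rule for combining categories. In this sketch the categories are assumed to come from a classifier that is not shown. The `Route` names and the category-to-route mapping are hypothetical. When several categories fire, the most restrictive route wins. The redaction regexes are deliberately crude and will produce false positives, such as some dates.

```python
import re
from enum import Enum
from typing import Iterable


class Route(Enum):
    HARD_STOP = "hard_stop"            # refuse and show a fixed message
    STRICT = "strict_prompt"           # tighter prompt, extra citations
    CAUTIOUS = "cautious"              # caution framing plus explainer links
    DEFAULT = "default"


# Hypothetical policy table; a real classifier would supply the categories.
POLICY = {
    "adult": Route.HARD_STOP,
    "self_harm": Route.HARD_STOP,
    "elections": Route.STRICT,
    "medical": Route.CAUTIOUS,
    "finance": Route.CAUTIOUS,
}
SEVERITY = [Route.HARD_STOP, Route.STRICT, Route.CAUTIOUS, Route.DEFAULT]


def route(categories: Iterable[str]) -> Route:
    """When several categories fire, the most restrictive route wins."""
    routes = {POLICY.get(c, Route.DEFAULT) for c in categories} or {Route.DEFAULT}
    return min(routes, key=SEVERITY.index)


# Crude pre-indexing redaction of contact details.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    return PHONE.sub("[phone]", EMAIL.sub("[email]", text))
```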

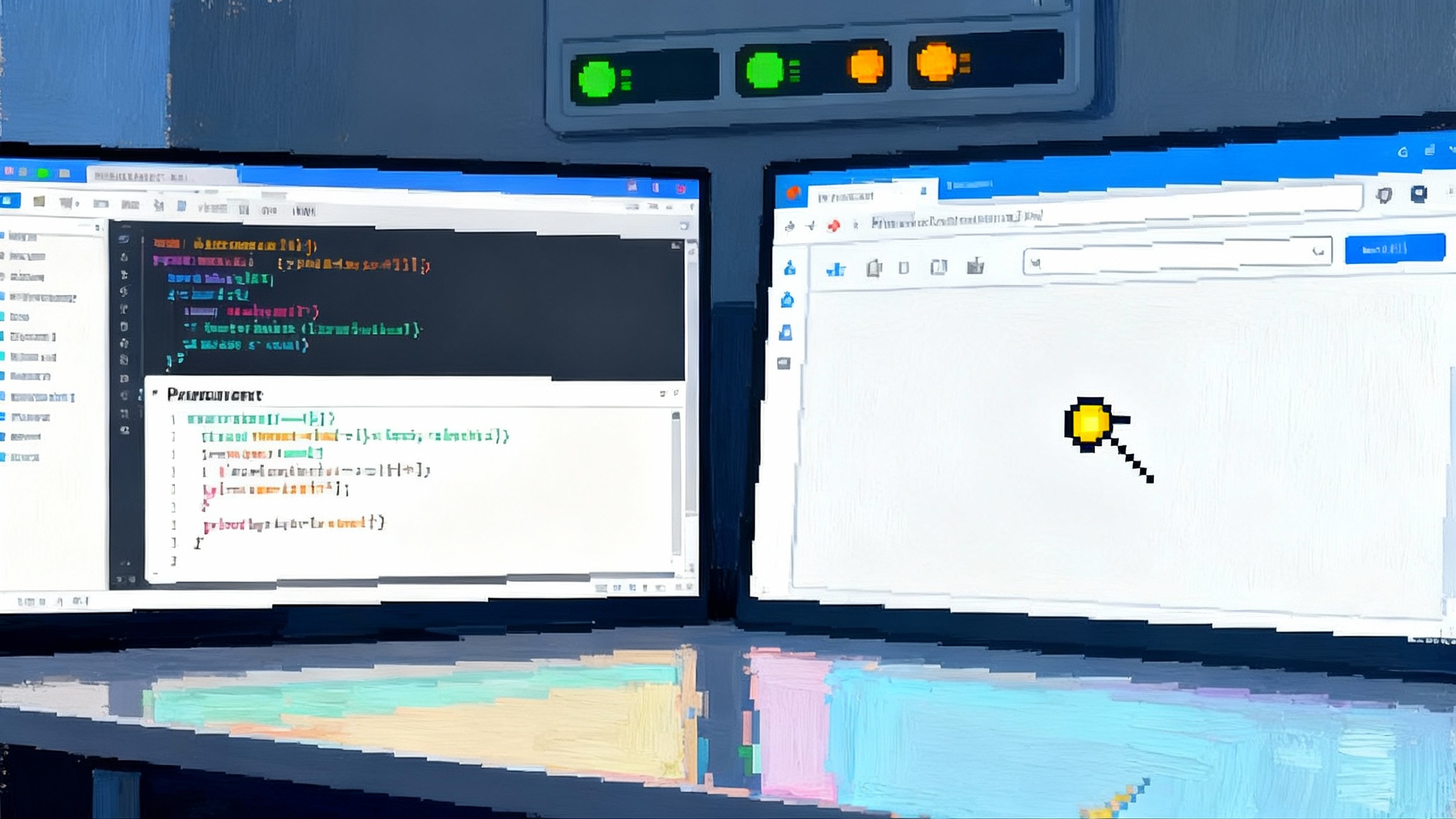

A minimal example chain

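```python
# Pseudocode for illustration only: every helper below is a placeholder
# for a component described in the sections above.
def answer(question, user):
    # Classify the query and decide which content this user may see.
    features = extract_features(question, user)
    scope = policy_scope(features)

    # Lexical and vector retrieval run independently; merge and de-duplicate.
    lex = lexical_search(question, scope, k=200)
    vec = vector_search(question, scope, k=60)
    cands = merge_unique(lex, vec)

    # Re-rank with authority, recency, and explainer boosts.
    ranked = cross_encoder_rank(
        question,
        cands,
        boost=["original_reporting", "recent", "explainer"],
    )

    # Apply canonical-preference rules and keep the top six chunks.
    top = enforce_publisher_rules(
        ranked,
        prefer=scope.preferred_publishers,
        limit=6,
    )

    prompt = build_prompt(
        question,
        top,
        rules={
            "cite_min": 2,
            "no_new_numbers": True,
            "style": "short_then_deeper",
            "followups": 3,
        },
    )

    # Generate, screen, attach attribution, and log before returning.
    draft = generate_llm(prompt)
    safe = safety_review(draft, scope)
    final = postprocess_for_attribution(safe, top)
    log_telemetry(user, question, top, final)
    return final
```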

Editorial workflow that keeps humans in the loop

Answer-native products work best when they match the newsroom’s rhythm.

- Beat briefs: Reporters maintain short, structured briefs with key facts, definitions, and recurring data sources. These briefs become high-authority chunks that the re-ranker loves.

- Correction propagation: When a correction is published, a webhook hits the indexer and invalidates embeddings for that story. The answer component displays a correction badge for 48 hours.

- Launch kits: For recurring events like elections or earnings, create knowledge packs with pre-approved sources and policies. The model switches to the pack on relevant queries.

- Weekly audits: Editors review the top answer sessions by volume and by complaints. Each audit updates a brief, adjusts a policy, or proposes a product experiment.
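The correction-propagation step can be sketched as a webhook handler. It marks every chunk of the corrected story as unservable until that chunk is re-embedded, and it records when the correction landed so the badge can expire after 48 hours. `CorrectionIndex` and its methods are hypothetical names, and the mapping from stories to chunk ids is assumed to be filled in by the indexer.

```python
from datetime import datetime, timedelta
from typing import Dict, Set

BADGE_WINDOW = timedelta(hours=48)


class CorrectionIndex:
    """Tracks which chunks must be re-embedded after a correction (sketch)."""

    def __init__(self) -> None:
        self.chunks_by_story: Dict[str, Set[str]] = {}
        self.stale: Set[str] = set()
        self.corrected_at: Dict[str, datetime] = {}

    def on_correction(self, story_id: str, when: datetime) -> None:
        # Webhook handler: stop serving the story's chunks until re-embedded.
        self.stale.update(self.chunks_by_story.get(story_id, set()))
        self.corrected_at[story_id] = when

    def reindexed(self, chunk_id: str) -> None:
        self.stale.discard(chunk_id)

    def servable(self, chunk_id: str) -> bool:
        return chunk_id not in self.stale

    def show_badge(self, story_id: str, now: datetime) -> bool:
        corrected = self.corrected_at.get(story_id)
        return corrected is not None and now - corrected <= BADGE_WINDOW
```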

Revenue, pricing, and the new ad unit

If the banner ad was the page era’s unit, the answer session is the answer era’s unit. Price it against outcomes and guard it with policy.

- Sponsorship packages: Sell fixed-fee sponsorships for scoped categories such as local weather explainers or school policy explainers. Include impression caps, quality thresholds, and make-goods.

- Performance overlays: For commerce content, attribute sales that originate inside the answer experience. Share revenue with the desk that maintains the evergreen explainer that powered the conversion.

- Time-based boosts: During breaking news, allow a sponsor to support the answer box for a limited window with neutral branding, not message control. Use this to fund the surge in compute and moderation.

Teams that care about clarity and accountability can look at accountable autonomy in email agents for inspiration on how to present model decisions and maintain trust.

Measurement that matters

Track metrics that connect product value to business value.

- Answer satisfaction rate: Percentage of sessions with a positive vote and at least one source click.

- Coverage depth ratio: Share of follow-up questions that stayed on-domain and successfully retrieved a new source.

- Freshness half life: Median hours until a new piece of reporting becomes an answer citation.

- Safety incident rate: Issues per thousand sessions, segmented by policy category.

- Revenue per answer: Sponsor, subscription, or commerce revenue divided by answer sessions, reported by topic.

When these metrics move, you know whether to invest in retrieval, improve briefs, or change layout.
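These definitions translate directly into aggregates over session logs. This sketch assumes a hypothetical `SessionRecord` row per answer session, plus a separate list of hours between a story's publication and its first citation. The field names are illustrative.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass, field
from statistics import median
from typing import Dict, List


@dataclass
class SessionRecord:
    topic: str
    positive_vote: bool
    source_clicks: int
    followups: int                 # follow-up questions asked
    followups_resolved: int        # stayed on-domain and fetched a new source
    revenue: float                 # sponsor + subscription + commerce
    incidents: List[str] = field(default_factory=list)  # policy categories


def metrics(sessions: List[SessionRecord],
            hours_to_first_citation: List[float]) -> Dict[str, object]:
    n = len(sessions)
    satisfied = sum(1 for s in sessions if s.positive_vote and s.source_clicks > 0)
    asked = sum(s.followups for s in sessions)
    incidents = Counter(cat for s in sessions for cat in s.incidents)
    revenue, count = defaultdict(float), Counter()
    for s in sessions:
        revenue[s.topic] += s.revenue
        count[s.topic] += 1
    return {
        "answer_satisfaction_rate": satisfied / n if n else 0.0,
        "coverage_depth_ratio": (
            sum(s.followups_resolved for s in sessions) / asked if asked else 0.0),
        "freshness_half_life_hours": (
            median(hours_to_first_citation) if hours_to_first_citation else None),
        "safety_incidents_per_1k": {c: 1000 * k / n for c, k in incidents.items()},
        "revenue_per_answer": {t: revenue[t] / count[t] for t in count},
    }
```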

Why this is a strategic accelerant

On-domain Q&A compresses the distance between reporting and reader value. It also rebalances the ecosystem toward the people who do the original work. Three strategic effects compound over time:

- Less leakage: If a question starts and ends on your site, you do not rely on external rankings or aggregators for survival.

- Better data exhaust: You learn exactly what your community is trying to understand now, with local nuance. That signal improves assignments, explainers, and product priorities.

- Portable trust: A federation of participating publishers can share a verified statement layer that travels across sites, raising confidence and reducing duplication.

Risks and how to manage them

- Hallucinations and drift: Even grounded models can overgeneralize. Mitigation: a strict no-new-numbers policy, source pinning, and an escalation path that temporarily disables answers on sensitive topics.

- Editorial overload: Reporters are busy. Mitigation: small beat briefs with clear owners. Automate extraction of facts into drafts that editors approve weekly.

- Incentive mismatch: Sales may push for looser controls. Mitigation: codify church and state in both contract and code. Safety gates cannot be toggled by an ad campaign.

- Compute cost spikes: Traffic surges are expensive. Mitigation: elastic caching of popular answers and a low-latency distilled model for follow-ups that do not require full regeneration.
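Caching popular answers is mostly bookkeeping around a time-to-live. The sketch assumes answers can be keyed by a normalized question string, and it uses an injectable clock so expiry is testable. The size limit and TTL are placeholders. The elastic part, scaling cache capacity with traffic, is infrastructure this sketch does not cover.

```python
import time
from collections import OrderedDict
from typing import Callable, Optional, Tuple


class AnswerCache:
    """Size-bounded, TTL-expiring cache of rendered answers (sketch)."""

    def __init__(self, max_items: int = 1000, ttl_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_items = max_items
        self.ttl = ttl_seconds
        self.clock = clock
        self._data: "OrderedDict[str, Tuple[float, str]]" = OrderedDict()

    @staticmethod
    def _key(question: str) -> str:
        # Case- and whitespace-insensitive, so trivial variants share an entry.
        return " ".join(question.lower().split())

    def get(self, question: str) -> Optional[str]:
        key = self._key(question)
        item = self._data.get(key)
        if item is None:
            return None
        stored_at, answer = item
        if self.clock() - stored_at > self.ttl:
            del self._data[key]          # expired: force regeneration
            return None
        self._data.move_to_end(key)      # mark as recently used
        return answer

    def put(self, question: str, answer: str) -> None:
        key = self._key(question)
        self._data[key] = (self.clock(), answer)
        self._data.move_to_end(key)
        while len(self._data) > self.max_items:
            self._data.popitem(last=False)   # evict least recently used

    def invalidate_all(self) -> None:
        # Bluntest way to honor immediate invalidation on a correction.
        self._data.clear()
```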

A practical rollout plan for the next 90 days

Weeks 1 to 2: Legal and data mapping

- Draft and sign terms covering scope, caching windows, and training rights.

- Map content sources and decide what stays out of scope for the pilot.

Weeks 3 to 5: Index and prototype

- Build the canonical content schema and index the last 18 months.

- Launch an internal-only answer box for one desk with a daily quality review.

Weeks 6 to 8: Safety and sponsorship

- Wire moderation categories and an incident pager. Define high-risk topics that require editor approval before answers render.

- Prepare a sponsorship package for a small, well-defined beat.

Weeks 9 to 12: Public beta

- Roll out to 10 percent of users on three sections. Ship the quality dashboard to the newsroom. A/B test layout and follow-up prompts.

- Share results across the federation to compare quality and revenue per answer by topic.
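A 10 percent rollout is commonly done with deterministic hash bucketing, so a reader stays in or out of the beta across visits. In this sketch the salt string and the three section names are hypothetical placeholders for the pilot sections you choose.

```python
import hashlib
from typing import Collection

PILOT_SECTIONS = ("local", "schools", "transit")  # hypothetical pilot sections


def in_beta(user_id: str, section: str, percent: float = 10.0,
            sections: Collection[str] = PILOT_SECTIONS) -> bool:
    """Deterministically assign a stable slice of users to the public beta."""
    if section not in sections:
        return False
    # Salting the hash keeps this experiment's buckets independent of others.
    digest = hashlib.sha256(f"gist-answers-beta:{user_id}".encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000   # 0..9999
    return bucket < percent * 100                          # 10% -> buckets 0..999
```

The same bucketing can assign layout variants for the A/B test, provided each experiment uses its own salt.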

The bigger picture

This shift is not a feature. It is a new distribution contract between reporters and readers. Off-site chatbots will not disappear, but they no longer have to be the first stop. A publisher who can answer quickly, cite cleanly, and maintain trust will earn more attention and better economics. A network of those publishers will build a durable layer of verified knowledge that helps the whole ecosystem, not just the biggest players.

If you are a newsroom leader, choose a beat where you already own the coverage and where readers show up with questions. If you are in product or engineering, wire retrieval-augmented generation with tight policy, full telemetry, and an editorial loop that can absorb the work. If you are in sales, package answer sessions as a distinct unit that respects editorial boundaries and pays for the journalism that makes it possible.

Closing thought

For too long, publishers optimized for someone else’s ranking page. ProRata Gist Answers reframes the game. Build for resolution instead of clicks, keep the session on your site, measure what matters, and negotiate like a distributor of knowledge, not a supplier of raw text. The winners will pair original reporting with an answer engine that readers trust.