Autonomous pentesting goes live as Strix opens its agent

On October 3, 2025, Strix released an open source AI hacking agent that moves autonomous pentesting from research to production. Learn how it works, what it changes for SDLC and governance, and how to ship it safely.

The week autonomous pentesting crossed into production

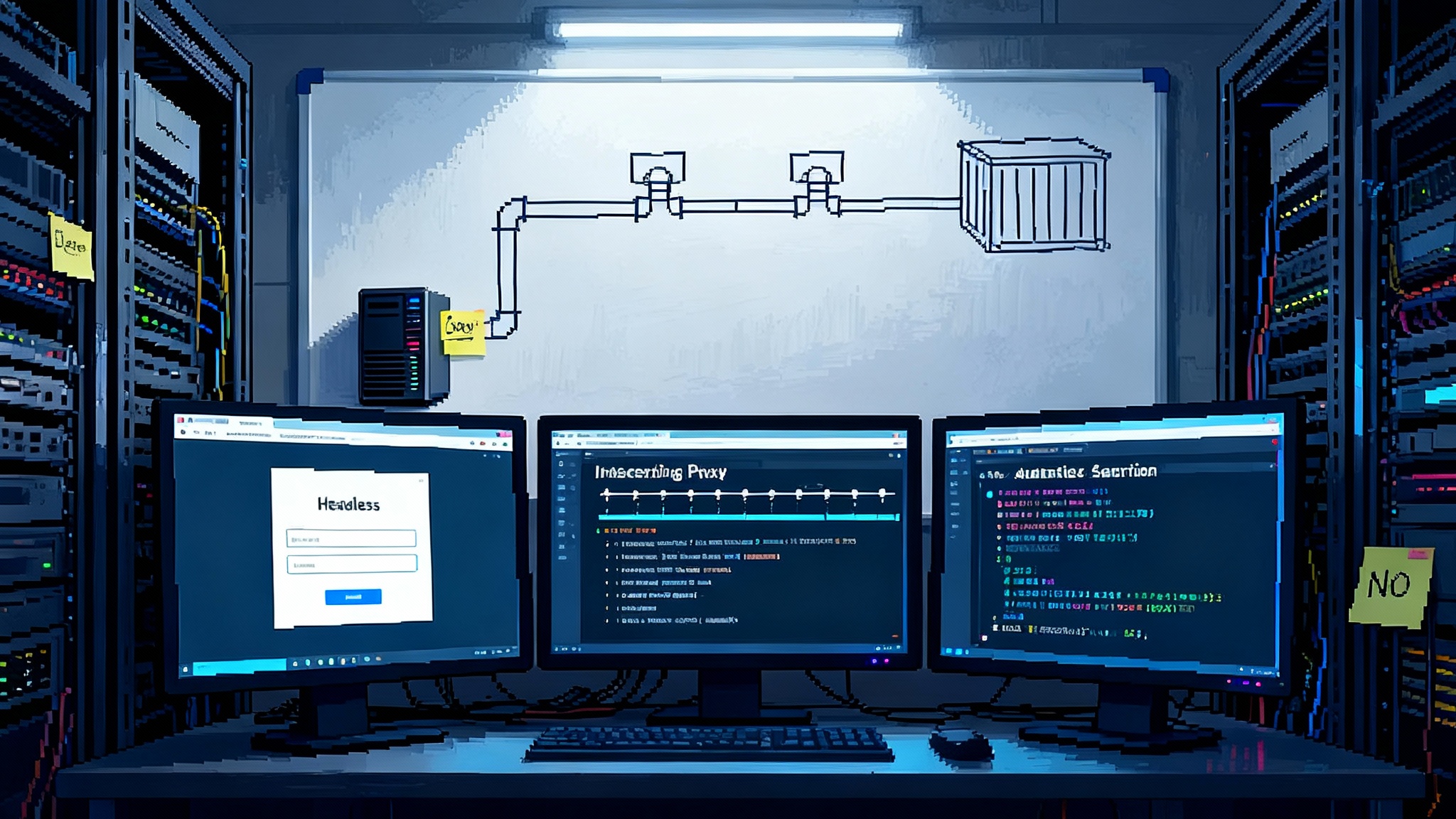

On October 3, 2025, Strix published an open source agent that treats your application like a target and tries to break it the way a professional would. The public Strix GitHub repository shows a developer first tool that chains a browser, an intercepting proxy, a terminal, and exploit code into a single system that can scan, exploit, verify, and report in one run. That simple sentence marks a line in the sand. Agentic security testing has moved from weekend experiments to something a production team can realistically operate.

If you felt the ground shift last year when code assistants began to write boilerplate, this week is the equivalent moment for application security. The difference is not only speed. It is that these agents carry a full toolkit and the judgment to use it in sequence, like a junior tester who never gets tired and always writes down what they did.

What an AI hacking agent actually does

Think of Strix as a patient red teamer who operates a small lab:

- Browser: walks the app, triggers flows, and inspects the Document Object Model. The agent clicks, types, follows redirects, and keeps cookies so it can move through login and role changes.

- Proxy: serves as a magnifying glass. It intercepts requests and responses, rewrites headers, tampers with parameters, and replays sequences to see what changes.

- Terminal: acts as the workbench. Here the agent runs reconnaissance tools, sends crafted payloads, executes scripts, and inspects operating system behavior inside a sandboxed container.

- Exploit runtime: functions like a custom shop. The agent can write proof of concept programs, call out to standard security tools, and stitch together multi step attacks.

This toolkit matters because real vulnerabilities rarely show up from a single scan. Imagine a classic access control flaw. A human tester logs in as a basic user, observes a numeric identifier in a profile link, swaps it for another user’s identifier, and sees whether the server returns forbidden or the other user’s data. Strix follows the same idea. It crawls to collect identifiers, uses the proxy to insert modified requests, monitors the browser for unexpected data, and then builds a proof of concept that shows the exact parameter and payload that leak the record.

The point is not that the agent is magical. It is that it is tireless and procedural. It remembers each step, records artifacts, and generates a report that contains reproduction steps instead of a generic label. That is how false positives collapse, because the report includes a working demonstration, not a guess.

Where this fits with broader agent trends

Security agents benefit from the same primitives that have improved coding and browsing agents. If you are tracking how multi tool agents wire browsers into decision loops, the discussion in Cursor's browser hooks is a useful mental model. Likewise, if you are building minimal stacks to ship agent capabilities fast, the approach in AgentKit compresses the agent stack maps neatly to the needs of a pentesting runner. Expect ongoing gains in search, planning, and test time adaptation similar to the patterns described in the rise of test time evolutionary compute.

Why this is a turning point

Until now, autonomous security work mostly stayed in two buckets. There were clever research demos that solved a single challenge. There were large enterprise scanners that were fast but shallow and often noisy. An agent that orchestrates a browser, a proxy, a terminal, and custom code gives you a third option. It is dynamic enough to validate findings and structured enough to repeat.

The open core is also consequential. When the engine is available under a permissive license, teams can audit what happens, contribute hardening, and build controls around it. In practice, that is what production adoption needs. Security engineering wants both sharp tools and the ability to put them behind guard rails.

What changes for the software development life cycle

Continuous adversarial testing becomes a realistic stage in the software development life cycle, not an annual event.

- Pull request time: run a short, budgeted agent session against the code path touched by the change. The goal is to catch obvious injection, authentication, or authorization mistakes when context is fresh.

- Nightly in staging: run deeper sessions that are allowed to crawl, mutate, and attempt exploitation. Fail the job on confirmed critical findings, not on speculative signals.

- Pre release: run a targeted pass on the high risk surfaces such as authentication flows, file upload endpoints, and administrative paths. Require a clean report before promotion to production.

This cadence flips the usual compromise between speed and depth. Instead of choosing one long manual pentest or a quick but noisy scan, teams get smaller but continuous slices of real adversarial behavior.

A pragmatic maturity curve

- Starter: one nightly staging run with a strict scope and artifact capture. A single owner triages.

- Intermediate: per service policies and separate jobs for auth flows, file uploads, and admin paths. Confirmed highs gate deploys.

- Advanced: environment snapshots for deterministic replay, dynamic test data seeding, and cross env variance analysis to catch unsafe config drift.

Governance moves to center stage

If your agent is allowed to poke, it must be controlled. That is not a legal afterthought. It is engineering work.

- Scoped permissions: define an allow list of domains, ports, and routes. The agent must not wander into production or third party systems unless explicitly permitted.

- Time boxing: enforce fixed windows with idle timeouts so a job cannot loop forever.

- Identity: use dedicated accounts with distinct roles and strong secrets that rotate. Do not reuse human accounts.

- Audit trails: capture a timeline of tool invocations, commands, and network calls. Keep raw artifacts such as request and response pairs and screenshots under retention with access controls.

- Human checkpoints: require an approval for high risk actions such as destructive payloads or privilege escalation. The goal is prevention, not bureaucracy.

Build these controls as code, so they are reviewed and versioned like the application.

The emerging defense stack for agent era testing

You will not only run agents. You will defend against them, even your own.

- Agent honeypots: create fake secrets, fake administrator endpoints, and fake low hanging assets in staging and pre production. If an agent ever presents those in a report, you know it took the wrong path or is overreaching. If an external actor triggers them, you get a high signal alert.

- Capability sandboxes: run the agent inside a container with a minimal profile. Use Linux security modules, seccomp profiles, and network namespace policies to reduce blast radius. Treat the container like a disposable lab, not a shared workstation.

- Signed toolchains: require that any binary or script invoked by the agent is signed by your team and verified at runtime. If a tool is not in the manifest, the policy blocks execution.

- Policy gate for tools: put the agent behind a control plane that authorizes which tools it can call and which resources it can read. The model should ask, and a policy server should answer, not a prompt.

These techniques sound heavyweight, but they are routine in production build systems. The novelty is applying them to an adversarial program that you control.

How Strix chains tools to validate a vulnerability

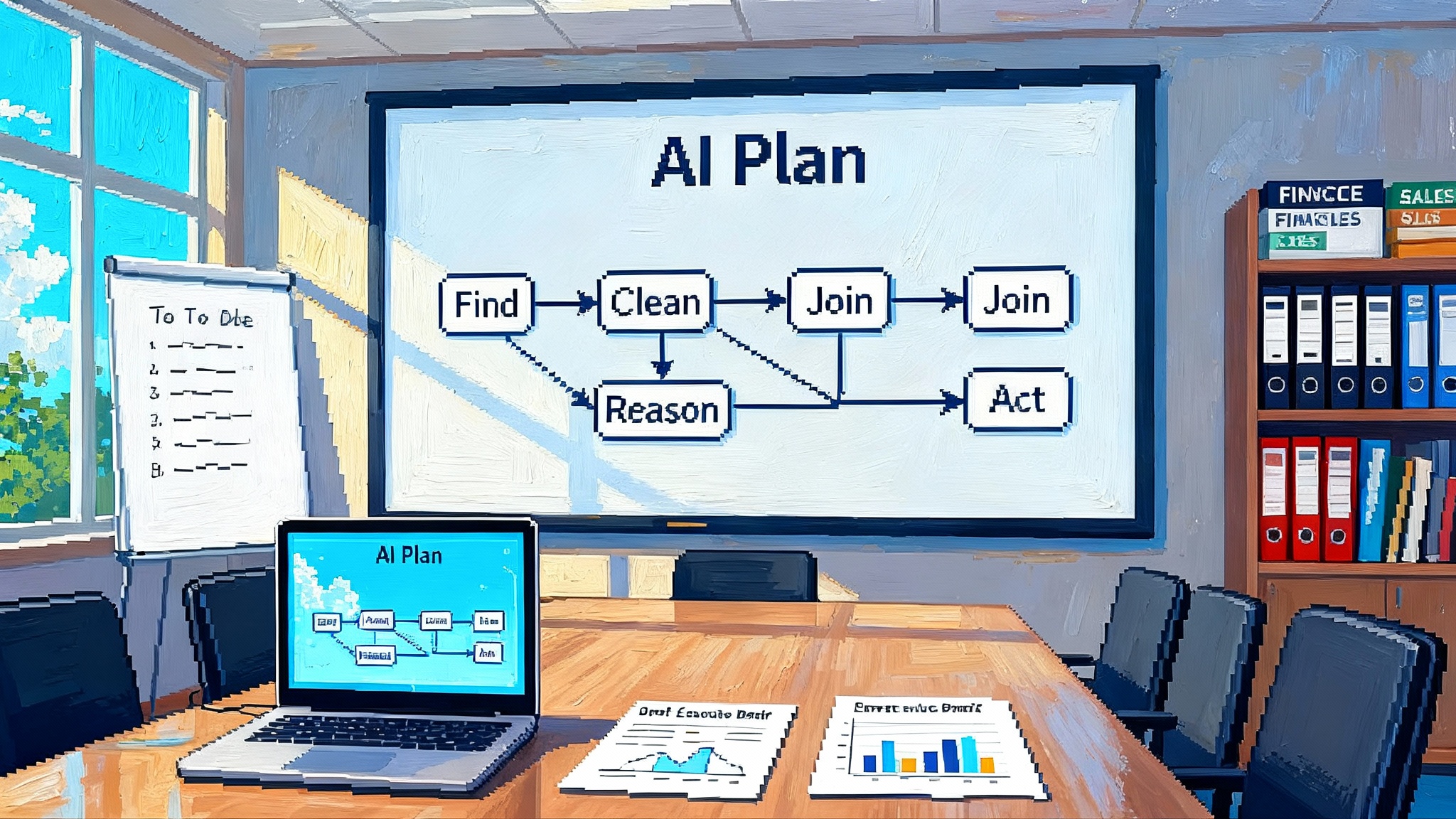

To see why chaining matters, consider how an agent would confirm a server side request forgery.

- Reconnaissance: the browser observes an image upload feature that fetches a preview by URL. The proxy watches for a request such as GET /fetch?url=http://example.com/image.jpg.

- Hypothesis: if the server fetches by itself, it may be able to reach internal addresses that users cannot, such as 169.254.169.254.

- Exploit attempt: the agent sends a crafted URL to an internal metadata endpoint and watches the timing and the headers in the proxy.

- Confirmation: if the response contains a token or metadata shape, the agent saves the evidence, wraps it in a proof of concept script, and records how to reproduce it.

- Report and remediation: the agent explains which validation is missing, suggests a deny list or a network egress rule, and marks the severity.

This is the difference between a signature and a finding you can act on. The system does not guess. It shows what it did and what happened.

The announcement and what to cite when your team asks

The developer behind Strix introduced the project publicly during the first week of October 2025 and pointed to a real codebase, not a slide deck. The Product Hunt launch page places the public launch in that window and links to the same repository your team can try in a controlled environment. It is rare to have both a clear announcement date and a working open source agent to evaluate within days.

Wire Strix into your staging pipeline with gates

Below is a concrete starting point you can adapt this quarter. It assumes you have a staging environment, a secrets store, and a container registry.

Prerequisites

- A dedicated staging domain and database seeded with red team safe data.

- A service account for the agent with a basic role and a separate account with elevated privileges for escalation tests.

- A container image that packages the agent, a proxy, and the minimal toolchain your policy allows.

- A storage bucket for artifacts, encrypted and private.

Policy file

Create a policy file checked into a security repo that defines scope and guard rails.

# security/strix-policy.yaml

scope:

allow_domains:

- staging.example.com

deny_domains:

- prod.example.com

allow_ports: [80, 443]

allow_routes:

- /api/*

- /auth/*

limits:

max_runtime_minutes: 45

max_requests: 6000

concurrency: 4

identities:

accounts:

low_priv:

username: agent_user

role: basic

high_priv:

username: agent_admin

role: admin

approvals:

require_for:

- destructive_actions

- privilege_escalation

artifacts:

retain_days: 30

include:

- http_traces

- screenshots

- poc_scripts

GitHub Actions example

# .github/workflows/strix-staging.yml

name: Strix Staging Security Run

on:

workflow_dispatch:

schedule:

- cron: \"0 2 * * 1-5\" # weekdays at 02:00 UTC

jobs:

strix:

runs-on: ubuntu-latest

permissions:

contents: read

actions: read

id-token: write

env:

STRIX_LLM: openai/gpt-5

LLM_API_KEY: ${{ secrets.LLM_API_KEY }}

STRIX_POLICY: security/strix-policy.yaml

STRIX_TARGET: https://staging.example.com

steps:

- name: Check out policy

uses: actions/checkout@v4

with:

repository: org/security

path: security

- name: Pull agent image

run: docker pull ghcr.io/org/strix-runner:latest

- name: Run Strix

run: |

docker run --rm

-e STRIX_LLM

-e LLM_API_KEY

-v \"$GITHUB_WORKSPACE/security:/policy\"

-v \"$GITHUB_WORKSPACE/artifacts:/artifacts\"

ghcr.io/org/strix-runner:latest

strix --target \"$STRIX_TARGET\"

--policy /policy/strix-policy.yaml

--out /artifacts/run-$(date +%Y%m%d-%H%M)

- name: Upload artifacts

uses: actions/upload-artifact@v4

with:

name: strix-artifacts

path: artifacts

- name: Gate on confirmed vulns

run: |

python scripts/parse_strix_report.py artifacts/latest.json

--fail-on-severity high

The gate fails only on confirmed exploits at high severity. That choice keeps signal high and avoids blocking deployments on heuristics. The parser looks for fields such as exploitable: true and severity: high in the agent’s JSON output.

GitLab continuous integration example

# .gitlab-ci.yml

stages: [security]

strix_security:

stage: security

image: ghcr.io/org/strix-runner:latest

rules:

- if: \"$CI_COMMIT_BRANCH == 'main'\"

script:

- strix --target \"$STAGING_URL\"

--policy security/strix-policy.yaml

--out artifacts/run-$CI_PIPELINE_ID

artifacts:

when: always

paths:

- artifacts/

allow_failure: false

Add human checkpoints with a simple approval step before any task that uses elevated credentials. In GitHub Actions this can be a required review on a deployment environment. In GitLab it can be a manual job that moves the pipeline forward. The key is to encode the rule in the pipeline, not in a team chat.

What good evidence looks like in reports

A useful report is one you can act on without guesswork. Ask for these qualities:

- Exact reproduction steps: the URL, headers, and body used during exploitation.

- Artifacts attached: screenshots, HTTP traces, and proof of concept scripts.

- Scoping context: which identities were used and which environment the finding belongs to.

- Remediation guidance: clear language that links the root cause to a fix, such as input validation, stricter authz checks, or egress rules.

The difference between a scanner alert and an agent finding is accountability. The latter tells you what happened, where, with which identity, and how to fix it.

A team checklist you can ship this quarter

Use this list to move from idea to practice.

- Define staging scope: domain, routes, and services the agent is allowed to touch. Put it in version control.

- Create dedicated agent identities: separate low and high privilege accounts with short lived credentials and clear roles.

- Containerize the runner: a single image that includes the agent, a proxy, and only the signed tools you allow.

- Write a minimal policy: limits, identities, approvals, and artifact retention. Review it like application code.

- Wire a nightly job: run the agent against staging on a fixed schedule and store artifacts under an encrypted bucket.

- Gate on confirmed findings: fail pipelines only on exploited high severity issues. Triage the rest weekly.

- Prepare honeypots: plant honey tokens and fake administrator endpoints in staging to detect overreach and external probing.

- Add audit logging: capture command invocations, network traces, screenshots, and proof of concept scripts with timestamps.

- Rotate secrets: use a managed secrets store and rotate agent credentials every month or on any sign of drift.

- Assign ownership: name one engineer for the pipeline, one for policy, and one for triage. Ship their names in the policy file.

- Drill remediation: pick a weekly issue, reproduce from the agent’s report, patch, and rerun to verify.

- Review legal scope: log permission for each target environment and communicate the testing window to stakeholders.

That is the minimum viable program. You can add richer features later, such as agent specific dashboards, data classification rules that change behavior on sensitive fields, or environment snapshots for deterministic reproductions.

What to watch next

Expect a race on two fronts. First, capability. Teams will keep adding adapters and tools that a permissioned agent can call to extend its reach. Second, control. Enterprises will want stronger policy engines, signed manifests, and audit layers that let them prove who did what and when.

There is a straightforward lesson here. Treat an AI hacking agent like any other powerful piece of infrastructure. Give it a narrow lane, strong identity, a paper trail, and a useful job to do. When you do, you get something rare in security practice. You get a tool that not only finds real problems but shows you exactly how to fix them, and you can run it every day without burning out the team.

The bottom line

Strix’s October 3 launch is not a teaser. It is an invitation to operate adversarial testing with the same discipline you apply to builds and deployments. Put an agent in staging. Give it guard rails. Let it try to break your work and show its notes. If you can make that routine, you will improve security without slowing the ship.