Publishers Go On Offense With ProRata Gist Answers

A startup flips AI search economics by letting publishers host LLM answers on their own sites, license from a 700 plus source network, and share revenue. Here is why it matters and how to ship a production launch fast.

The news that resets the AI search debate

On September 5, 2025, ProRata.ai put AI search squarely on publisher turf. The company raised 40 million dollars and introduced Gist Answers, a tool that lets media brands embed first party, large language model answers on their own domains. The system can answer from a publisher’s content alone or expand to a licensed network of roughly 750 partners with revenue sharing built in. Axios reported the financing and launch. The move reframes AI assistants from external aggregators into on site product features that attribute, recirculate, and pay.

If you squint, the idea is deceptively simple. Instead of hoping general chatbots send traffic back, the publisher becomes the chatbot. Visitors ask questions in plain language. The site returns answers grounded in its own reporting and fills gaps using licensed sources. The whole experience stays on the publisher’s page, credits the underlying work, and compensates the sources that contribute. That turns a defensive posture into an offensive product plan.

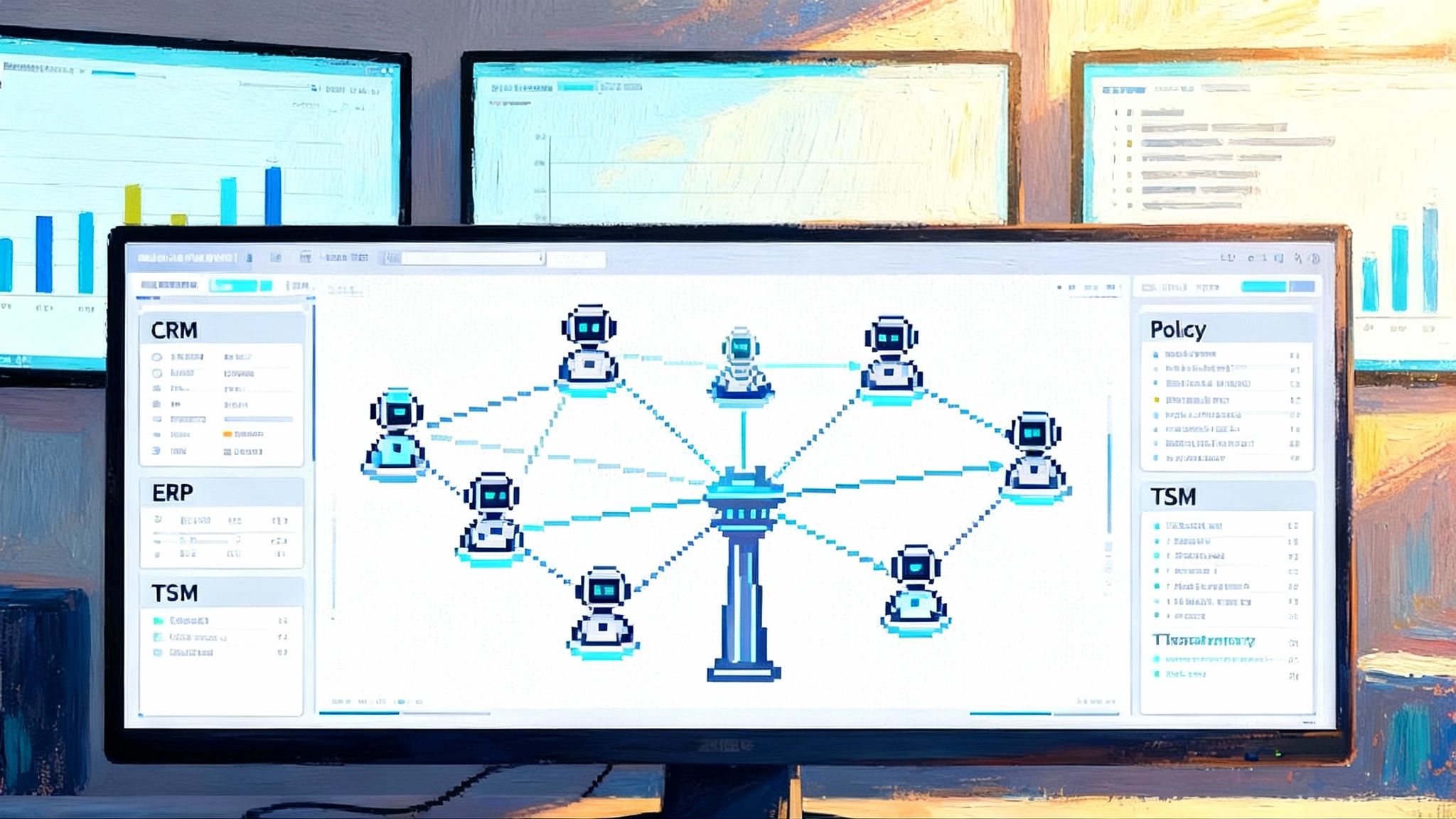

Why this flips AI distribution economics

For two years, most AI answers have appeared elsewhere, with thin attribution and little compensation. Traffic and revenue flowed to aggregators. Gist Answers reverses the flow.

- Distribution moves from hub to edge. The publisher owns the interface, the question, the answer, and the next click. That removes the need to claw back attention from off site assistants.

- Licensing replaces scraping. Rather than ingesting content without permission, the system draws from a network of licensed sources and shares revenue with contributors. The economic relationship is explicit and enforceable.

- First party data becomes a moat. Site taxonomy, topic authority, historical coverage, and editorial flags guide retrieval and ranking. That creates differentiation a general chatbot cannot easily replicate.

- Monetization aligns with quality. If an outlet’s pieces materially shape the answer, the outlet gets paid. ProRata promotes a 50 50 revenue sharing model for content partners that opt in, and early reporting confirms that structure. The better your reporting, the more you appear in answers and the more you earn.

Think of it like bringing the restaurant back to the farm. Instead of selling ingredients to a middleman who controls the dining room, the farm opens a kitchen on site. Diners still get a great meal, but the farm greets the guests, tells the story of how the food was grown, and splits the bill with the neighbors who supplied the herbs.

How it reshapes attribution and traffic flows

Attribution in generative answers has too often been a footnote. On site answers flip that into a first class product feature.

- Source credit is part of the interface. Answers can cite contributing articles inline and encourage deeper reading with suggested follow ups. The path from summary to full story stays inside your environment.

- Recirculation starts in the answer box. A question about a hurricane can route to your live blog, a preparedness checklist, and investigative coverage of infrastructure risk. The answer becomes a router for in depth reporting.

- Cross network lift is measurable. When the model pulls from the licensed pool, contributing partners see compensation and discoverability. The system behaves like a cooperative wire service for AI, where contribution is priced and trackable.

- Quality becomes a ranking signal that pays. Comprehensive and timely reporting shows up more in answers, earns more credit, and draws more sessions back to your site.

The change will not eliminate external distribution. It will, however, make a publisher’s own domain the most defensible place to capture AI driven intent.

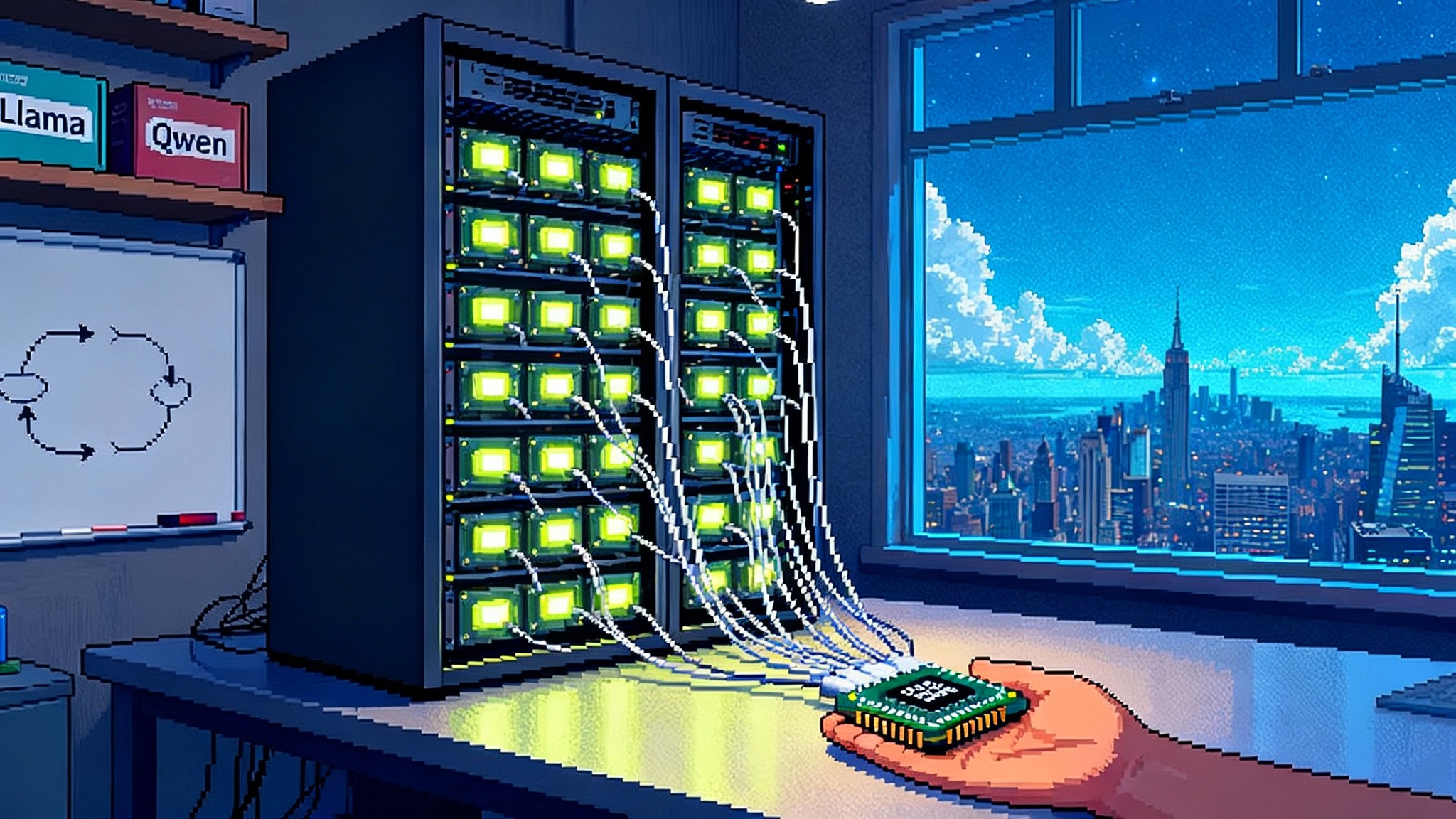

What Gist Answers actually is

Under the hood, Gist Answers is a retrieval augmented generation layer that prioritizes a publisher’s content, then selectively expands to a licensed library when needed. It installs quickly via snippet or plugin and can match a site’s look and feel. ProRata positions the product as accurate by design because it answers from vetted, licensed sources rather than indiscriminate web crawl. The company highlights a library of more than 700 trusted publications and simple setup for common content management systems on the Gist Answers product page.

Publishers can keep answers strictly first party, or allow the model to supplement from the licensed pool. When the supplement mode is active, the platform attributes contributions and shares revenue. Axios reported that Popular Science and The Atlantic are among outlets planning to participate, and that ProRata uses a 50 50 split for licensed content that informs answers. That pairing of on site utility and network economics is what makes the launch consequential.

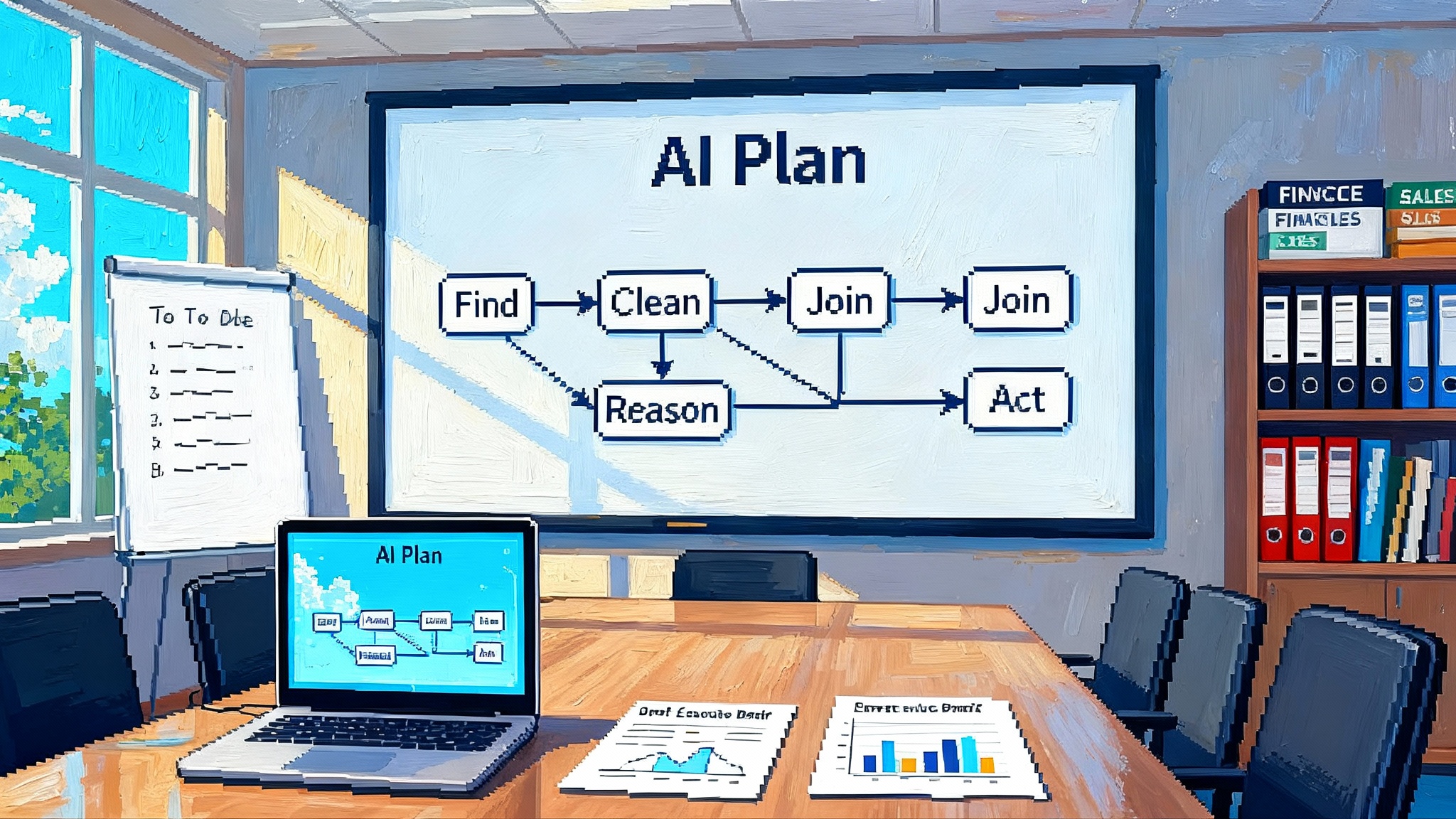

A builder’s playbook to ship on site AI answers in weeks

Here is a step by step plan for media and CMS teams that want to stand up a Gist style experience fast. The goal is first party answers by default, optional licensed expansion, and a revenue ready framework.

1) Start with a data contract, not a data dump

- Define allowed uses. Spell out what content can be used for retrieval, what is excluded, and how paywalled or embargoed material is handled. Make licensed expansion optional and auditable.

- Specify attribution rules. Agree on how sources are displayed, how many citations appear per answer, and how links are ranked when multiple partners contribute.

- Capture commercial terms as structured fields. Include revenue share percentage, minimum reporting cadence, and dispute timelines in machine readable fields so attribution and payouts can be automated.

Pro tip: Put the contract terms in a versioned manifest that lives next to your content feed. Treat it like an application programming interface for rights.

2) Build a clean ingestion path

- Use your best source of truth. Prefer a normalized feed from your content system over scraping your front end. In WordPress, expose a posts endpoint with full text, tags, section, author, timestamp, and canonical URL. In headless systems, export a JSON feed that mirrors your content schema.

- Include editorial metadata. Add desk, beat, story type, and evergreen flags. These fields let you boost explainers over fleeting updates or point breaking queries to live blogs.

- Refresh on the right cadence. Breaking sections can push updates every few minutes, while evergreen archives can refresh daily. Stagger jobs to avoid cache stampedes during major events.

3) Index for retrieval that respects editorial intent

- Use hybrid search. Combine sparse retrieval using inverted indexes with dense vector search. Sparse excels at names and quotes. Dense captures semantic similarity. A hybrid reranker gives you the best of both.

- Partition by section and freshness. Build separate shards for breaking news, features, explainers, and archives. Route queries based on intent signals like question words, date constraints, and entity detection.

- Preserve canonical relationships. Index the canonical field and resolve duplicates so that syndicated pieces or updates point back to the main record.

4) Design ranking that mirrors your newsroom values

- Boost authority. Give weight to pieces with strong editorial signals like an editor’s pick flag, high engagement, or an authoritative byline.

- Penalize stale or superseded stories. Use update timestamps to demote versions that have been meaningfully revised. Offer a banner in the answer when a newer story exists.

- Encourage depth. Prefer comprehensive explainers or hub pages when the question is broad. For narrow questions, rank Q and A or fact checks higher.

5) Make attribution verifiable and payout ready

- Token level contribution. Track how many generated tokens are supported by which sources. Aggregate into fractional credits per source per answer.

- Keep auditable logs. Store the retrieval set, the ranked list, the final selected passages, and the generated answer with a hash. Retain for at least one year.

- Automate splits. Batch payouts using fractional credits multiplied by monetized events such as sponsored answer views or clicks.

6) Ship safe and fast by agreeing on guardrails

- Set coverage bounds. For regulated topics like health and finance, route to human written explainers or display a caution that links to authoritative guides on your site.

- Enforce style and tone. Provide few shot examples that reflect your voice. Ban speculation for breaking stories by requiring an explicit source before claims appear.

- Block unsafe output. Maintain a blocklist and a policy set that rejects slurs, doxxing, or legal advice. Fallback to a search results page when the model cannot answer safely.

7) Create an evaluation plan you can run every day

- Retrieval quality. Measure recall at k, mean reciprocal rank, and normalized discounted cumulative gain on a set of newsroom questions. Populate the set from real search logs and audience research.

- Answer faithfulness. Score groundedness by checking that each sentence is supported by retrieved passages. Use spot audits with editors for high traffic queries.

- User utility. Track time to first useful click, click through to cited stories, and question reformulation rates. Strong answers reduce reformulations and increase deep link clicks.

- Bias and coverage. Run slices by topic, identity terms, and geography to detect skew. Require per slice sign off before shipping to a large audience.

8) Treat monetization as a product choice, not an afterthought

- Decide where sponsored messages appear. A small sponsorship tag in the answer can work if the creative is relevant and clearly labeled.

- Align incentives. If licensed partners contribute, include them in the split for sponsored views derived from their passages. That strengthens the network effect.

- Keep ads context safe. Use your retrieval metadata to block ad creatives that conflict with sensitive topics or ongoing coverage.

9) Wire analytics to prove value fast

- Instrument the full loop. Capture question asked, components retrieved, answer returned, citations shown, clicks to article pages, and revenue events.

- Build an attribution dashboard. Show editors and executives how much of each answer credited your pieces versus the network, and how much revenue and traffic each answer generated.

- Benchmark against legacy search. Compare session duration, page depth, and return rate for users who engaged with the answer box versus those who used the old site search.

10) Launch in phases that build confidence

- Start with a topical beta. Choose one vertical with steady demand such as personal finance or consumer tech. Set strict guardrails and instrument everything.

- Expand by intent. Add navigational and evergreen queries next, then time sensitive news once your freshness and safety workflows are proven.

- Bring partners in last. Enable licensed expansion only after your first party answers demonstrate accuracy, attribution, and monetization uplift.

What this means for the wider ecosystem

Three near term implications are most important for media, platforms, and advertisers.

- The post scraping model has momentum. When attribution and payment are built in, more publishers will license into networks that reward contribution. The result is a supply of high quality, legally clean content that improves answer quality.

- Search fragments into many local maxima. As large outlets and niche experts run on site answers, audiences will find high trust responses across the web, not just at a few general assistants. That fragmentation increases the value of brand authority and schema quality.

- Ads evolve from page slots to answer moments. Marketers buy context, not pixels. That raises the bar for relevance and measurement and favors teams that align creative to questions and intents.

Practical questions leaders should ask this week

- Do we have the rights and infrastructure to answer from our content today, and would we authorize licensed expansion under a clear contract and audit trail?

- Can our CMS export the fields a retrieval model needs, including canonical URL, timestamps, taxonomy, and evergreen flags?

- Which sections would benefit most from on site answers, and what guardrails are required for each?

- What is our bar for citation display and link prominence inside the answer so readers can verify and go deeper?

- How will we measure quality, safety, and revenue, and who owns the daily dashboard?

Edge cases and newsroom realities

- Breaking news surges. During a major event, switch the answer box to a mode that privileges the live blog and verified updates only. Block speculative claims until an editor approves.

- Paywalled content. Permit retrieval from paywalled text for answer grounding, but only show short attributed snippets with clear links to the full story for subscribers.

- Mixed languages. If you serve multilingual audiences, route non English queries to traditional site search or to curated explainers until multilingual retrieval is reliable.

- Syndication overlap. If your story ran in a partner’s feed, prefer the canonical source your audience expects. Keep the partner credited for contribution if their version adds unique passages.

How this intersects with your broader AI strategy

On site answers do not exist in a vacuum. They sit alongside answer optimization, agents, and new funnel mechanics.

- Answer optimization. If your growth team is working on generative engine optimization, study how your own answers surface and how they cite. For a broader view of the playbook, see our guide to win placement in AI answers with GEO tactics.

- Agent infrastructure. As more of the stack becomes agentic, retrieval and attribution will connect to workflows, not just search. That shift is already visible as agents move into the database and closer to business logic.

- Monetization beyond pages. If your go to market team is piloting conversational funnels, on site answers become the top of that path. For how autonomous experiences change conversion mechanics, explore how inbound sales goes autonomous with AI reps.

A smart way forward

The last era of search rewarded whoever could centralize demand. The next era will reward whoever can answer well, attribute clearly, and pay fairly at the point of intent. ProRata’s Gist Answers is one of the first attempts to hard code that alignment into a product. It is not the only model that will emerge, but it shows a credible way beyond scrape, litigate, and wait.

Publishers do not need to win the whole web to change their economics. They need to capture their own audience’s questions, route those questions to their reporting, and make sure everyone who contributes shares in the results. That future is available to ship right now, and the steps above will get you from idea to on site answers in weeks.