Aidnn’s $20M debut and the end of dashboard culture

Isotopes AI introduced Aidnn on September 5, 2025 with a $20 million seed round, signaling that agentic analytics is moving from demo to default. Here is how it rewires FP&A and RevOps and what to build next.

Breaking: a $20 million bet that dashboards have run their course

On September 5, 2025, Isotopes AI introduced Aidnn, an agentic analytics coworker designed to behave like a blended analyst and data engineer. The company also announced a $20 million seed round led by NTTVC. Coverage in the TechCrunch launch report on Aidnn underscored both the scale of the bet and the market’s impatience with dashboards that stop at a chart.

Dashboards still glow on big screens, but behavior around them has stalled. Teams look, then pivot to spreadsheets and messaging threads to figure out what happened and what to do. Aidnn is pitched as the missing colleague who takes a first pass at those follow-on steps: finding the relevant data, fixing the mess, joining sources, reasoning about cause and effect, and drafting a plan with next actions.

From data duels to decision coauthoring

If classic business intelligence is a static map, agentic analytics is a turn-by-turn navigator that also suggests detours. Instead of a manager asking, “What was revenue last month?” and then waiting for an analyst to crunch numbers and paste a chart, Aidnn lets that same manager ask a compound question in plain language, see the proposed plan of attack, approve how data will be cleaned and joined, and receive a draft narrative with visuals and follow-through recommendations.

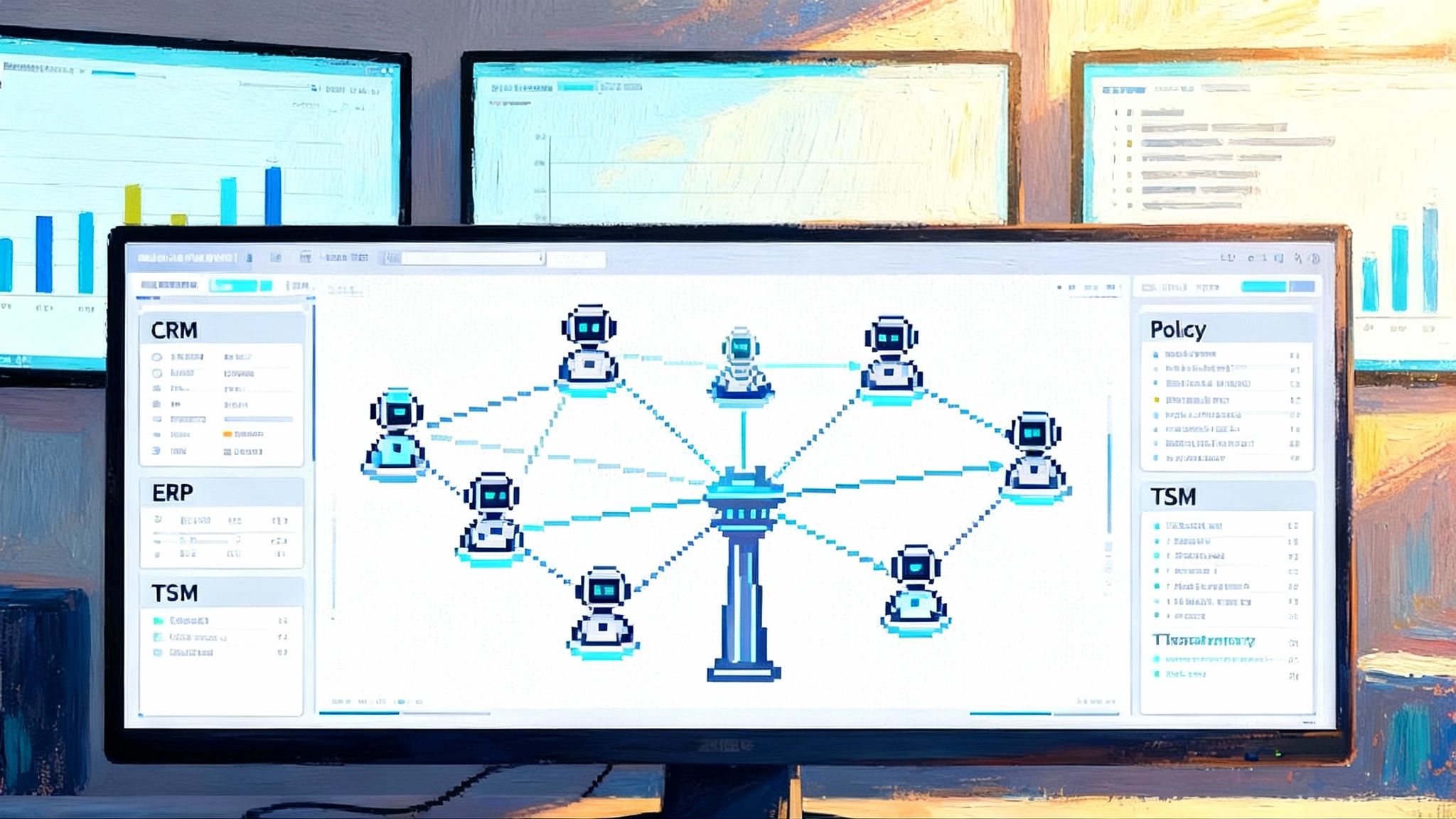

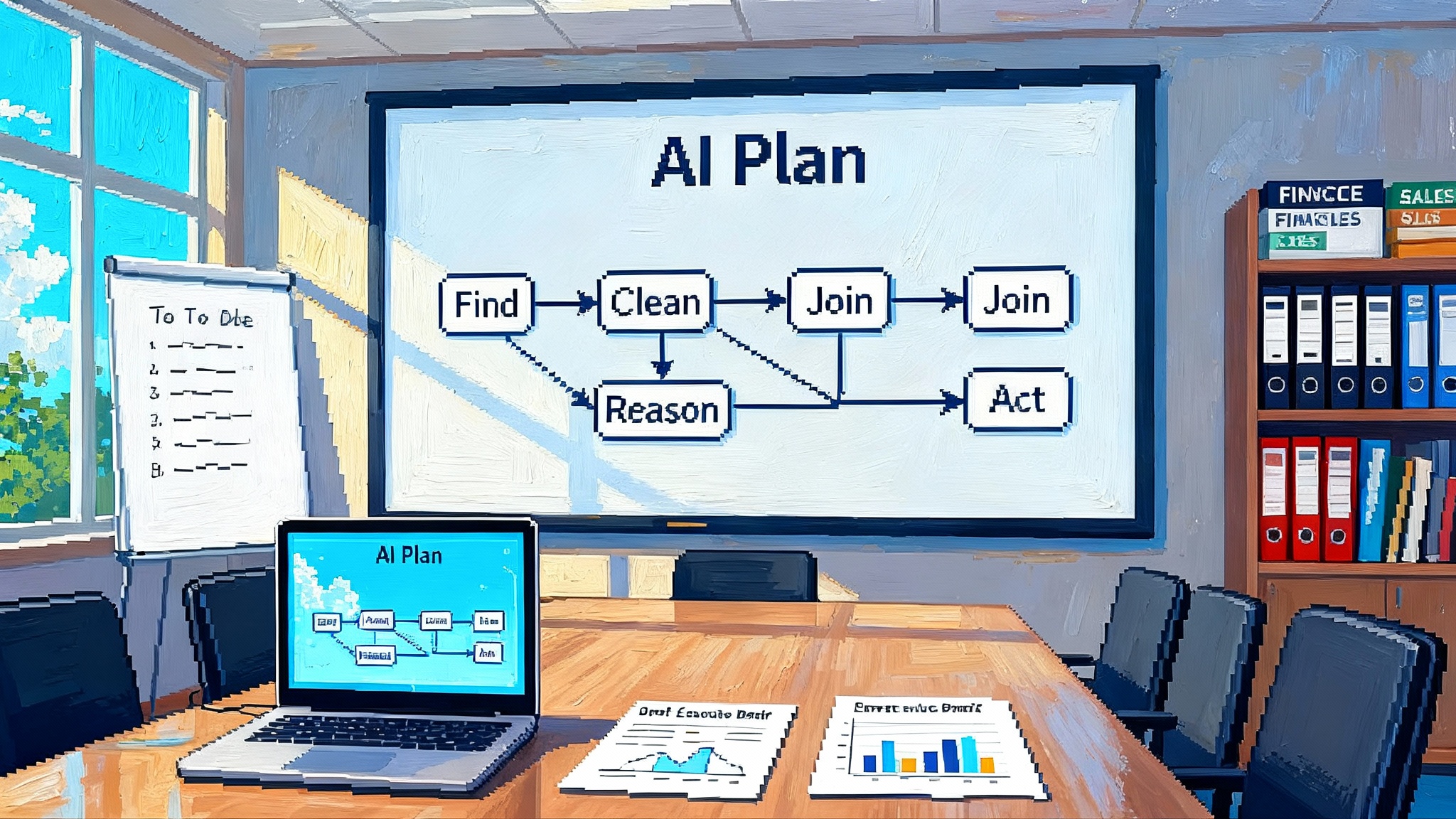

Isotopes AI describes Aidnn as a multi-agent system that cooperates in stages: gather, clean, reason, and operationalize. The product site emphasizes that Aidnn works where the data already lives and keeps data under enterprise control, a requirement for finance, revenue operations, and compliance teams. For examples and positioning, see the Aidnn product overview page.

The shift sounds subtle, but it changes incentives. Dashboards reward looking. Agents reward doing. When the analytics surface includes a recommended action with a rationale, the social friction of moving from insight to decision drops. Managers stop bookmarking charts for later and start editing the proposed plan in the same workspace where the numbers are computed.

What Aidnn actually does, in plain terms

Imagine you join a new company on Monday and by Friday you are expected to explain a sudden dip in net revenue retention and propose a fix. The facts you need live in at least five places: customer contracts in a CRM, billing history in a finance application, product usage in a warehouse, support tickets in a help desk tool, and a spreadsheet of exceptions.

An agentic coworker has three core jobs:

- Find and fetch the right tables, files, and fields. It searches your warehouse and business systems, reads schemas and field descriptions, and builds a working set.

- Clean and join the messy parts. It handles missing fields, inconsistent date formats, duplicate records, and joins events across systems that use different identifiers.

- Reason about cause and effect. It computes relevant metrics, compares periods, runs cohort analysis, and drafts hypotheses that line up with the numbers.

Aidnn’s design targets all three. In product demos and early write-ups, it shows a plan before it runs, explains how it cleaned and joined data, and outputs both charts and a narrative with proposed follow-ups. That is closer to delegating a task to a colleague than to querying a static dataset.
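To make the clean-and-join job concrete, here is a minimal sketch in plain Python. It is not Aidnn’s implementation; the record layouts, the `ID_CROSSWALK` mapping, and the function names are all assumptions made up for illustration. It normalizes two date formats, drops duplicate accounts, joins CRM accounts to billing totals across different identifiers, and keeps a log of every cleaning step so the work can be explained afterward.

```python
from datetime import date, datetime

# Hypothetical sample records; field names are illustrative assumptions.
CRM_ACCOUNTS = [
    {"crm_id": "A-001", "name": "Acme", "renewal_date": "2025-03-31"},
    {"crm_id": "A-002", "name": "Globex", "renewal_date": "03/15/2025"},
    {"crm_id": "A-002", "name": "Globex", "renewal_date": "03/15/2025"},  # duplicate
]
BILLING_INVOICES = [
    {"billing_id": "B-9", "amount": 1200.0},
    {"billing_id": "B-7", "amount": 800.0},
    {"billing_id": "B-7", "amount": 150.0},
]
# Assumed crosswalk between the identifiers the two systems use.
ID_CROSSWALK = {"A-001": "B-9", "A-002": "B-7"}

DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y")


def parse_date(raw: str) -> date:
    """Try each known format in turn; fail loudly on anything else."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw, fmt).date()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {raw!r}")


def clean_and_join(accounts, invoices, crosswalk):
    """Return (joined_rows, cleaning_log)."""
    log, seen, cleaned = [], set(), []
    for row in accounts:
        if row["crm_id"] in seen:
            log.append(f"dropped duplicate account {row['crm_id']}")
            continue
        seen.add(row["crm_id"])
        cleaned.append({**row, "renewal_date": parse_date(row["renewal_date"])})

    # Total invoices per billing identifier.
    billed = {}
    for inv in invoices:
        billed[inv["billing_id"]] = billed.get(inv["billing_id"], 0.0) + inv["amount"]

    joined = []
    for row in cleaned:
        billing_id = crosswalk.get(row["crm_id"])
        if billing_id is None:
            log.append(f"no billing id for {row['crm_id']}")
        joined.append({**row, "billed_total": billed.get(billing_id, 0.0)})
    return joined, log


rows, cleaning_log = clean_and_join(CRM_ACCOUNTS, BILLING_INVOICES, ID_CROSSWALK)
```

The returned log is the important part: it is what lets a reviewer see which records were dropped or left unmatched rather than discovering it later.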

Immediate effects on FP&A workflows

Financial planning and analysis thrives on repeatable answers to constantly changing questions. Aidnn’s launch matters here because it shifts where time is spent.

- Month-end close: Instead of waiting on a parade of CSV exports and spreadsheet reconciliations, a controller asks for the close package, approves the data access plan, and gets an annotated workbook. It includes revenue breakdowns by product and region, variance explanations against budget, and a list of outliers with suggested corrections. The agent cites exactly how it imputed missing invoice dates and how it prorated revenue for partial months.

- Forecast refresh: A chief financial officer requests a rolling 13-week cash forecast and a scenario that assumes a 10 percent slowdown in new bookings and a two-week slip in collections. The agent builds driver-based models, lists assumptions, and highlights which vendors to resequence without breaching service agreements.

- Board prep: Draft pages arrive with footnotes that name join keys and lineage from source to metric. The finance lead can accept or edit the text and lock the queries that must be rerun the night before the meeting.

In every case the dashboard remains, but it becomes an appendix. The primary artifact is the decision document and the operational follow-through.
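The forecast refresh scenario can be sketched as a small driver-based model. This is a deliberately simplified illustration: the dollar figures, the four-week baseline collection lag, and the `cash_forecast` function are assumptions, and the model ignores receivables that existed before week zero. It shows how a 10 percent bookings slowdown and a two-week collections slip translate into weekly ending cash.

```python
def cash_forecast(opening_cash, weekly_bookings, weekly_outflow,
                  collection_lag_weeks, bookings_factor=1.0, weeks=13):
    """Return ending cash for each week of the horizon.

    Cash from a booking lands `collection_lag_weeks` after the booking week.
    Bookings made before week 0 are ignored (a simplifying assumption).
    """
    balances = []
    cash = opening_cash
    for week in range(weeks):
        source_week = week - collection_lag_weeks
        inflow = 0.0
        if 0 <= source_week < len(weekly_bookings):
            inflow = weekly_bookings[source_week] * bookings_factor
        cash += inflow - weekly_outflow
        balances.append(round(cash, 2))
    return balances


# Hypothetical drivers: flat bookings and flat operating outflow.
bookings = [100_000.0] * 13

base = cash_forecast(600_000.0, bookings, 90_000.0, collection_lag_weeks=4)
stress = cash_forecast(600_000.0, bookings, 90_000.0,
                       collection_lag_weeks=6,   # two-week slip in collections
                       bookings_factor=0.9)      # 10 percent slowdown

print("base ending cash:  ", base[-1])
print("stress ending cash:", stress[-1])
print("stress low point:  ", min(stress))
```

Listing the drivers as explicit arguments mirrors the point above about stated assumptions: each one can be reviewed and changed without touching the model logic.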

Immediate effects on RevOps workflows

Revenue operations owns pipeline health, forecast accuracy, and the conversion mechanics between marketing, sales, and customer success. Aidnn’s promise shows up in five routine tasks:

- Pipeline risk map: The agent links CRM stages, email engagement, product telemetry, and competitor mentions from call transcripts. It warns that pipeline coverage in the Northeast looks fine in gross terms but is fragile because it is concentrated in two accounts that share a risk factor, a user adoption dip.

- Attribution sanity check: The agent detects a mismatch between ad platform conversions and first-touch dates in the warehouse, explains the likely cause, and proposes a correction that keeps historical comparisons stable.

- Capacity planning: It recommends territory and quota adjustments, backed by ramp curves from the last three cohorts of account executives.

- Expansion intent: It correlates seat growth signals, support satisfaction, and feature usage to produce a ranked expansion list with an outreach sequence for customer success.

- Churn triage: It shows a daily watchlist with the three factors most predictive of churn next month and the treatments that have worked before.

What used to be a weekly review of dashboards becomes a daily loop of proposed actions that carry their own provenance.
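The pipeline risk map example, where coverage looks fine in gross terms but sits in two accounts, comes down to two numbers: a coverage ratio and a concentration share. The sketch below is one way to compute them. The account names, amounts, and thresholds are hypothetical, and a real agent would draw on far more signals than open pipeline alone.

```python
def coverage_ratio(open_pipeline, quota):
    """Total open pipeline divided by quota for the period."""
    return sum(open_pipeline.values()) / quota


def top_n_share(open_pipeline, n=2):
    """Share of total pipeline held by the n largest accounts."""
    total = sum(open_pipeline.values())
    if total == 0:
        return 0.0
    largest = sorted(open_pipeline.values(), reverse=True)[:n]
    return sum(largest) / total


def is_fragile(open_pipeline, quota, min_coverage=3.0, max_top2_share=0.5):
    """Flag pipeline that meets the coverage target but is concentrated.

    Both thresholds are illustrative assumptions, not industry standards.
    """
    covered = coverage_ratio(open_pipeline, quota) >= min_coverage
    concentrated = top_n_share(open_pipeline, n=2) > max_top2_share
    return covered and concentrated


# Hypothetical Northeast pipeline by account, in dollars.
northeast = {"acct_a": 900_000, "acct_b": 700_000,
             "acct_c": 200_000, "acct_d": 200_000}

print(round(coverage_ratio(northeast, quota=600_000), 2))
print(top_n_share(northeast))
print(is_fragile(northeast, quota=600_000))
```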

Why this is possible now

Several forces converged in 2025 to make agentic analytics viable outside of labs:

- Foundation model progress lowered the cost of reasoning across messy schemas and partial context, which makes the find-and-plan step reliable enough for production use.

- Connectors and integration runtimes matured. Warehouses like Snowflake and BigQuery, lakehouses such as Databricks, and application ecosystems like Salesforce and NetSuite reduced the toil of authenticating, streaming, and monitoring data flows.

- Column-level lineage and metric catalogs moved from aspirational to operational. That lets agents explain themselves in the language auditors expect.

- Enterprise privacy playbooks caught up. Vendors ship configurations that keep data inside a virtual private cloud and prevent model training on customer data by default. That answers the first security question without a custom deal.

Aidnn sits at this intersection: a language interface, a planning and execution engine for analytics tasks, and a governance-aware runtime that fits the risk posture of finance and sales leaders.

For a sense of the broader shift, compare this movement to how agents move into the database. As compute and decision logic migrate closer to data, agents gain both speed and context.

Risks and how to handle them

Agentic analytics raises new questions that leaders should answer before rollout.

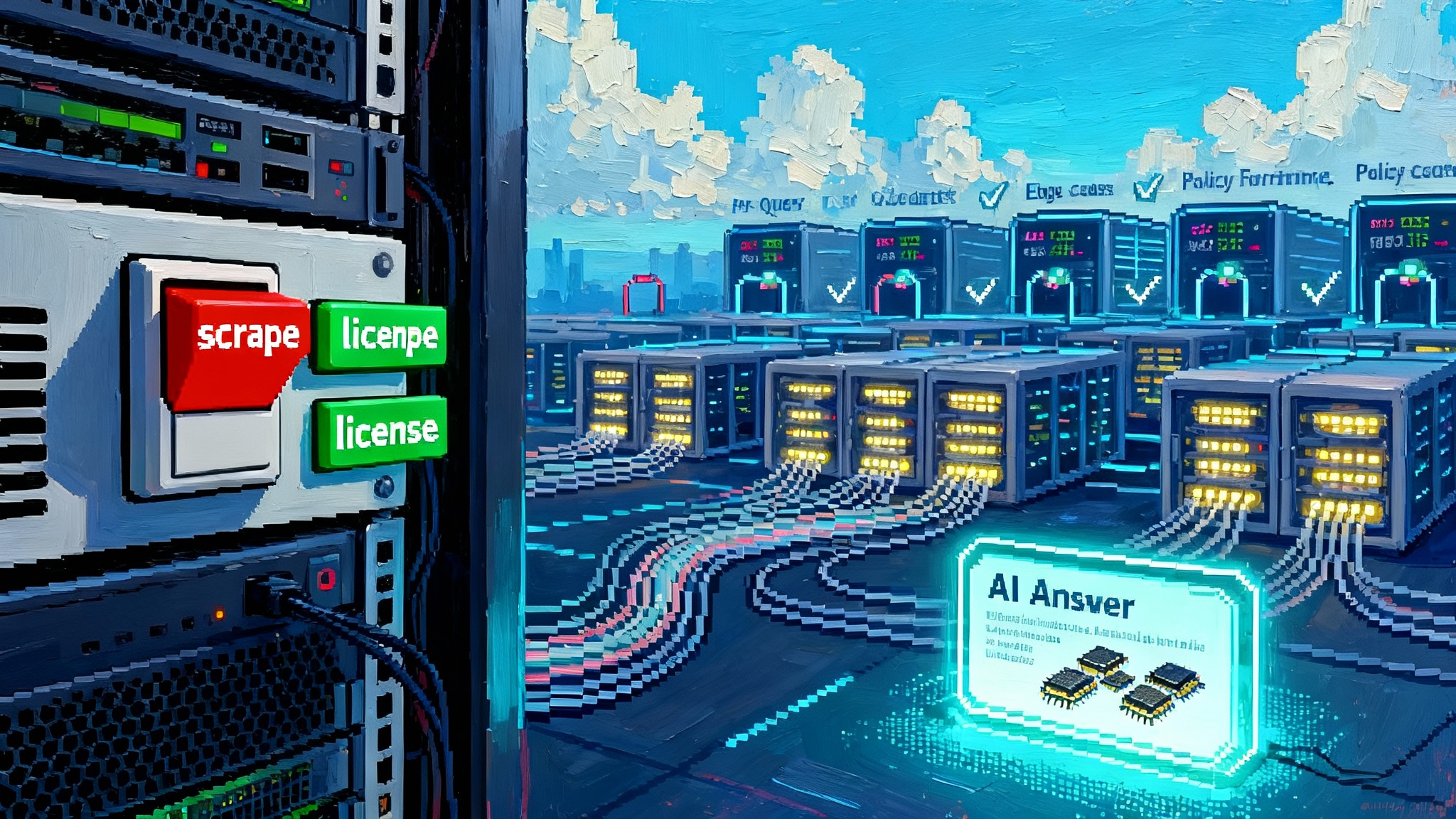

- Hallucination in analysis: If an agent invents a join key or imputes values without declaring it, trust evaporates. Require an explicit plan step where the agent lists joins, cleaning rules, and imputations before execution. Make acceptance mandatory for material reports.

- Policy drift: Finance and revenue rules change. Encode a policy registry that agents must check before proposing a treatment, for example discount thresholds and approval steps. Keep the registry version-controlled and visible in the agent’s plan.

- Access control: Role-based access with row-level security is the default, but agents also need context about why access is denied. Provide masking policies that describe the rule so the agent can plan around it rather than fail silently.

- Latency and freshness: Agents that reason across both warehouse and operational systems can create unpredictable lag. Establish service level objectives for analysis freshness and make agents report whether they met them in the output.
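The first safeguard in the list above, an explicit plan step with mandatory acceptance for material reports, can be sketched as a small gate. Everything here is hypothetical: `AnalysisPlan`, `run_with_gate`, and the runner contract are illustrative names, not part of any vendor API. The gate refuses to run a material analysis without sign-off and rejects a run that used a join the plan never declared.

```python
from dataclasses import dataclass, field
from typing import List, Optional


class PlanNotApproved(Exception):
    """Raised when a material analysis is run without sign-off."""


@dataclass
class AnalysisPlan:
    question: str
    joins: List[str] = field(default_factory=list)
    cleaning_rules: List[str] = field(default_factory=list)
    imputations: List[str] = field(default_factory=list)
    material: bool = False            # could it change a reported number?
    approved_by: Optional[str] = None


def undeclared_joins(plan, executed_joins):
    """Joins the run actually used that the plan never listed."""
    return [j for j in executed_joins if j not in plan.joins]


def run_with_gate(plan, runner):
    """Run `runner(plan)` only if the plan passes the approval gate.

    `runner` is assumed to return (result, executed_joins).
    """
    if plan.material and plan.approved_by is None:
        raise PlanNotApproved(f"plan for {plan.question!r} needs approval")
    result, executed_joins = runner(plan)
    surprises = undeclared_joins(plan, executed_joins)
    if surprises:
        raise RuntimeError(f"run used undeclared joins: {surprises}")
    return result


plan = AnalysisPlan(
    question="Q3 revenue variance",
    joins=["invoices.customer_id = accounts.id"],
    imputations=["missing invoice_date -> billing period end"],
    material=True,
)
plan.approved_by = "controller"  # without this line the gate raises
print(run_with_gate(plan, lambda p: ("variance report", list(p.joins))))
```

Checking executed joins after the run is a design choice in this sketch; a stricter version would block undeclared joins before any query is issued.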

What to build next to ride the shift

Three investments will compound the value of Aidnn-like systems.

1) Governed data contracts at every boundary

Treat each integration between applications and the warehouse as a contract. The producer promises a schema, semantics, and delivery time. The consumer promises validation and feedback. Put contracts in version control, enforce them at ingestion, and surface breaking changes in the agent’s plan view. For finance data, mandate tests for currency codes, time zone handling, and revenue recognition fields. For sales data, standardize stage definitions and ownership fields. This keeps agents from quietly normalizing away real problems.
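A data contract enforced at ingestion can start as a versioned schema plus a validator. The sketch below assumes a hypothetical invoice feed; the field names, the contract layout, and the short currency list (an illustrative subset of ISO 4217 codes) are assumptions. It covers two of the finance tests mentioned above: currency codes and time zone handling.

```python
from datetime import datetime

# Illustrative subset of ISO 4217 codes; a real contract would list all it accepts.
ALLOWED_CURRENCIES = {"USD", "EUR", "GBP", "JPY"}

# Hypothetical contract, kept in version control alongside the pipeline code.
INVOICE_CONTRACT = {
    "version": "1.0.0",
    "required_fields": {
        "invoice_id": str,
        "amount": float,
        "currency": str,
        "issued_at": str,   # ISO 8601 timestamp with an explicit offset
    },
}


def validate_invoice(row, contract=INVOICE_CONTRACT):
    """Return a list of contract violations for one row (empty if clean)."""
    violations = []
    for name, expected_type in contract["required_fields"].items():
        if name not in row:
            violations.append(f"missing field: {name}")
        elif not isinstance(row[name], expected_type):
            violations.append(f"wrong type for {name}")

    currency = row.get("currency")
    if isinstance(currency, str) and currency not in ALLOWED_CURRENCIES:
        violations.append(f"unknown currency code: {currency}")

    issued = row.get("issued_at")
    if isinstance(issued, str):
        try:
            if datetime.fromisoformat(issued).utcoffset() is None:
                violations.append("issued_at has no time zone offset")
        except ValueError:
            violations.append("issued_at is not ISO 8601")
    return violations


good = {"invoice_id": "INV-1", "amount": 120.0, "currency": "USD",
        "issued_at": "2025-09-05T10:00:00+00:00"}
bad = {"invoice_id": "INV-2", "amount": 10.0, "currency": "usd",
       "issued_at": "2025-09-05T10:00:00"}

print(validate_invoice(good))
print(validate_invoice(bad))
```

Returning violations instead of silently coercing values is the point: a lowercase currency code or a naive timestamp is surfaced as a problem rather than normalized away.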

2) Lineage-aware agents that can debug themselves

Add a lineage graph that spans raw sources, transformations, metrics, and published artifacts. Require every agent run to attach a snapshot of the lineage it touched. When a number looks wrong, the agent can trace back to the origin, check the last change to a transformation, and suggest a rollback or a data quality fix. This is the analytics equivalent of a modern software debugger.
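The trace-back step can be illustrated with a lineage graph stored as a plain dictionary. The node names, change dates, and the `suspects` helper are hypothetical. Given a published number that looks wrong, the sketch walks upstream and lists whatever changed since the last known-good date, most recent first.

```python
from collections import deque

# Hypothetical lineage: each node maps to the nodes it reads from.
LINEAGE = {
    "board_deck.nrr_page": ["metric.nrr"],
    "metric.nrr": ["model.subscriptions_monthly"],
    "model.subscriptions_monthly": ["raw.crm_contracts", "raw.billing_invoices"],
    "raw.crm_contracts": [],
    "raw.billing_invoices": [],
}

# Last change per node as ISO dates, which sort correctly as strings.
LAST_CHANGED = {
    "board_deck.nrr_page": "2025-08-01",
    "metric.nrr": "2025-06-10",
    "model.subscriptions_monthly": "2025-09-02",
    "raw.crm_contracts": "2025-05-20",
    "raw.billing_invoices": "2025-08-28",
}


def upstream(node, graph):
    """Breadth-first list of everything `node` depends on."""
    seen, order = set(), []
    queue = deque(graph.get(node, []))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue
        seen.add(current)
        order.append(current)
        queue.extend(graph.get(current, []))
    return order


def suspects(node, graph, last_changed, known_good_date):
    """Upstream nodes changed after the known-good date, newest first."""
    changed = [n for n in upstream(node, graph)
               if last_changed[n] > known_good_date]
    return sorted(changed, key=lambda n: last_changed[n], reverse=True)


print(suspects("board_deck.nrr_page", LINEAGE, LAST_CHANGED, "2025-08-15"))
```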

3) ROI-tested executive briefings

Stop shipping long slide decks that summarize the quarter. Ship executive briefings that include the two decisions that will move the metric and the estimated return on investment for each. Measure decision impact on forecast error, customer acquisition cost, or net revenue retention. Use control groups wherever possible. Reward teams for decisions that improve the metric, not for decks that look good.

A 30-day pilot plan, step by step

- Days 1 to 3: Pick one finance question and one revenue question that recur every week. Examples include cash forecast sensitivity to collections and pipeline fallout by segment. Write down the exact query the business owner asks and the current manual steps to answer it.

- Days 4 to 10: Define minimal data contracts for the sources involved. Lock definitions for customer, product, invoice, opportunity, and stage. Map owners for each table and field. Add freshness expectations and failure notifications.

- Days 11 to 15: Configure the agent to run inside your virtual private cloud with access to the warehouse and read-only access to the needed applications. Turn on lineage capture and logging from day one. Define a plan approval gate for any analysis that could change a financial statement or external forecast.

- Days 16 to 20: Run in shadow mode. The agent produces the close package and the pipeline update without sending it. Analysts compare its work to the human version, annotate discrepancies, and rate plan clarity. Track false positives and false negatives as separate metrics.

- Days 21 to 25: Move to human-in-the-loop operation. Approve agent plans, accept or edit the narratives, and execute one recommended action per day. Start measuring decision cycle time from data refresh to approval.

- Days 26 to 30: Review results. Track time to insight, decision cycle time, number of manual corrections, and the share of recommendations accepted. Decide whether to expand scope to additional workflows or increase automation depth.

If you are evaluating the broader enterprise agent ecosystem, consider how this pilot pattern aligns with “enterprise AI agents go real,” especially on deployment inside controlled environments.

Competitive context without the noise

Aidnn enters a crowded field. The largest vendors have embedded assistants in their suites. Warehouse platforms promote code copilots for data transformations. Startups target specific roles, from revenue analysis to support operations. What distinguishes winning approaches is not a clever interface. It is the chain of custody from data source to decision, the clarity of the agent’s plan, and measurable lift in outcomes.

Aidnn’s founders bring experience building and running large-scale data systems. That background shows up in the product’s emphasis on transparent planning, explainable transformations, and deployment that suits on-premises environments. The market will test whether those choices shorten the path from question to decision and withstand enterprise audits.

We also see a pattern at the edge, where the interface for agents moves closer to users. When the browser itself becomes an automation surface, as in “browser becomes your local agent,” the step between analysis and action shrinks further. In that world, an analytics agent that can open a ticket, schedule a task, or send a vetted brief closes the loop faster than a dashboard ever could.

How FP&A and RevOps should measure impact

Move past vanity metrics for agent adoption. Track the following and tie them to business outcomes.

- Time to first acceptable plan: Days from kickoff to the first agent-produced package you would send to leadership.

- Decision cycle time: Hours from data refresh to decision approval, by workflow.

- Forecast accuracy: Absolute percentage error for revenue or cash versus the human-only baseline.

- Cost to serve: Hours saved per month per analyst, and the mix of work that shifted from manual reconciliation to narrative and synthesis.

- Recommendation acceptance rate: Percentage of agent-proposed actions that were approved, and the realized impact compared to the estimate.

- Plan clarity score: A one-to-five rating from reviewers on whether the agent’s plan and assumptions were explicit and auditable.

These metrics are not theoretical. They reflect where finance and sales leaders feel pain today and they are straightforward to instrument.
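As a rough illustration of how little instrumentation is needed, here is a sketch of three of the metrics above. The function names and sample values are assumptions. Forecast accuracy is computed as mean absolute percentage error across periods, which is one common reading of "absolute percentage error."

```python
from datetime import datetime


def mean_absolute_percentage_error(actuals, forecasts):
    """Average of |actual - forecast| / |actual|, as a percentage.

    Periods with a zero actual are skipped to avoid division by zero.
    """
    pairs = [(a, f) for a, f in zip(actuals, forecasts) if a != 0]
    if not pairs:
        raise ValueError("no periods with a nonzero actual")
    return sum(abs(a - f) / abs(a) for a, f in pairs) / len(pairs) * 100


def decision_cycle_hours(refreshed_at, approved_at):
    """Hours from data refresh to decision approval."""
    return (approved_at - refreshed_at).total_seconds() / 3600


def acceptance_rate(outcomes):
    """Percentage of agent-proposed actions marked 'approved'."""
    if not outcomes:
        return 0.0
    approved = sum(1 for outcome in outcomes if outcome == "approved")
    return approved / len(outcomes) * 100


# Hypothetical pilot data.
print(mean_absolute_percentage_error([100, 200], [90, 220]))
print(decision_cycle_hours(datetime(2025, 9, 1, 6, 0),
                           datetime(2025, 9, 1, 15, 30)))
print(acceptance_rate(["approved", "rejected", "approved", "approved"]))
```

Running the same error function on the agent’s forecasts and on the human-only forecasts gives the baseline comparison the forecast accuracy metric calls for.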

The dashboard is not dead; it is an artifact

Dashboards remain useful as status boards. They show where you are on the map. Agentic analytics turns the screen into a working surface for argument and action. The metric view becomes the appendix, referenced but not the center of gravity. The center is the plan, the narrative, the forecast, and the follow-through. Once teams get used to editing the plan inside the analytics surface, flipping back to a static dashboard feels like losing context.

What this signals for the next year

Aidnn’s launch is a marker. It says that startups can ship analysts powered by large language models that do the boring parts of analytics work and hold up under scrutiny. It says that the boundary between analytics and operations will soften, since the same agent that explains a variance can schedule a follow-up or open a ticket. It says that teams who treat governance as a product, not as an afterthought, will gain compounding advantage because their agents will run faster and fail less.

Expect buyers to probe four things. First, whether the agent’s plan is explorable and editable before execution. Second, whether lineage and policy checks are embedded, not bolted on. Third, whether the agent can operate inside private infrastructure without data egress. Fourth, whether results show up as recommended actions that are easy to accept, schedule, and track.

The move from chatbots to decision coauthoring will not be won by the loudest demo. It will be won by surgical changes to daily work: your close package ready before the meeting, your pipeline risk map that proposes territory fixes, and your executive briefing that tests the return on investment of the two hard choices in front of you.

Closing thought

Dashboards taught us to look. Agents will teach us to decide. The companies that embrace governed data contracts, lineage-aware agents, and ROI-tested briefings will turn that lesson into compounding advantage. If Aidnn’s debut is the opening bell, the next year will reward leaders who treat analytics not as a report to read but as a conversation to finish with a clear decision in hand.