Hume Octave 2 and EVI 4-mini push voice AI mainstream

Hume AI released Octave 2 and EVI 4-mini, bringing fast multilingual voice agents within reach. Learn what improved in prosody and latency, why voice beats text-first UX, and how to ship voice-native copilots now.

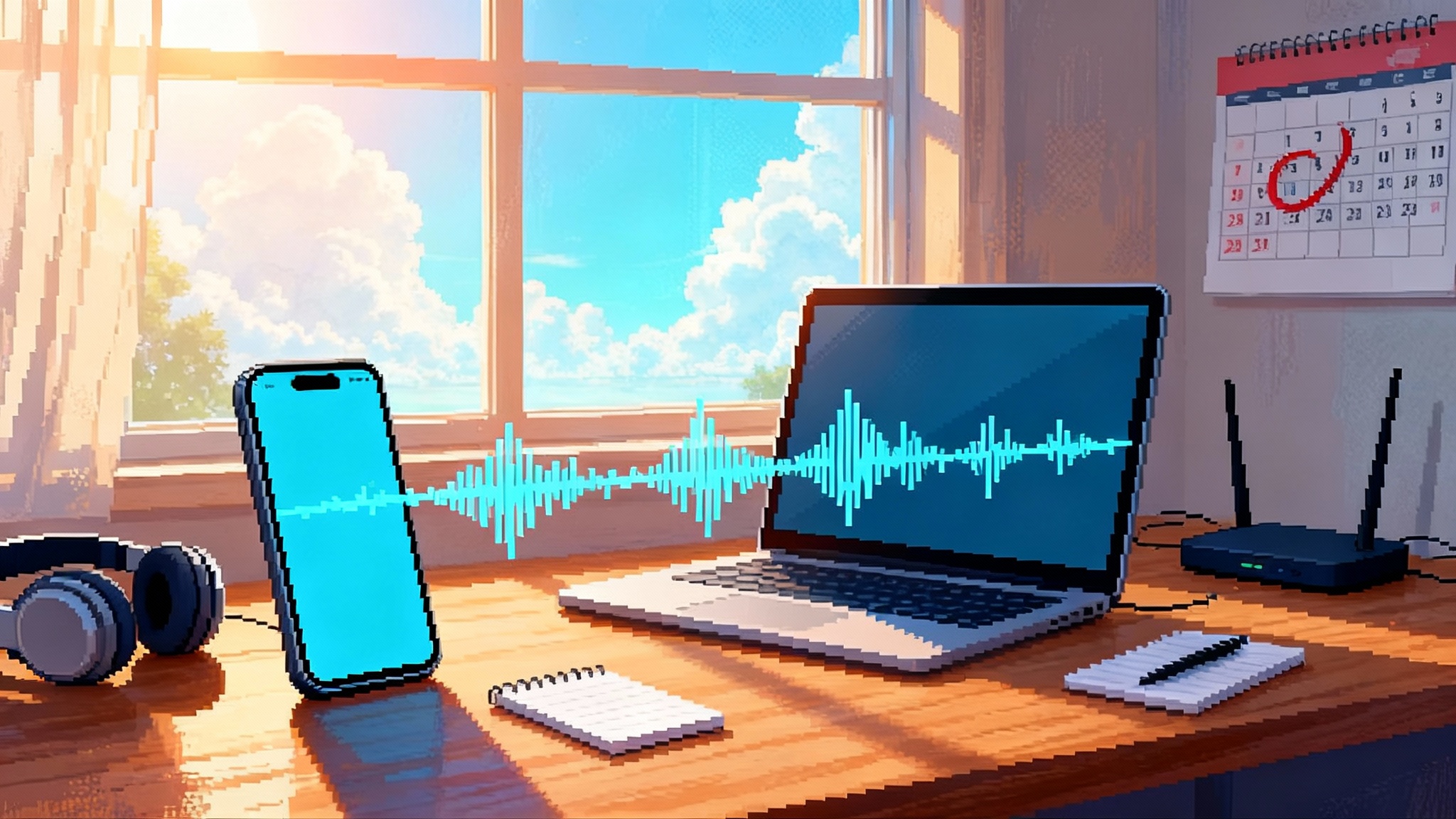

The week voice AI crossed a line

Voice has been the obvious interface for artificial intelligence since the first time we heard a demo that sounded human. The problem was always the same. Latency made conversations feel awkward, and the audio often sounded emotionally flat. With Octave 2 and EVI 4-mini, Hume AI is taking a serious swing at both problems. The result is a stack that makes real-time voice agents feel less like bots and more like collaborators you can talk to without thinking about the machine in the loop.

This article breaks down what changed, why prosody plus low latency beats text-first design, and how teams can ship voice-native copilots that users actually enjoy.

What shipped and why it matters

In the same release cycle, Hume AI announced Octave 2 and published product details for EVI 4-mini. Octave is the company’s affective speech platform focused on the nuances of human expression. EVI 4-mini is a compact model tuned for responsive, natural-sounding dialogue that runs efficiently enough to make always-on voice feel practical for consumer and enterprise apps.

Two themes stand out:

- Prosody and emotion modeling move from novelty to utility. That means better turn-taking, fewer awkward pauses, and responses that match the speaker’s intent.

- Latency budgets tighten to the point where continuous conversation becomes comfortable. Users do not want to wait or repeat themselves. Snappiness wins.

Octave 2 in one paragraph

Octave 2 leans into expressive speech. The goal is not just pronunciation but affect. You get more accurate cues about sentiment, intent, and conversation state, plus tools to influence cadence and emphasis in the model’s voice. It is the difference between reading a line and delivering it. For support, sales, coaching, and creative tasks, that shift changes user trust and completion rates.

EVI 4-mini in one paragraph

EVI 4-mini is designed for fast response and small-footprint deployment. Think lightweight streaming, aggressive token-to-speech efficiency, and a voice that holds up under real-world conditions. The focus is not maximum IQ but maximum EQ at practical speed. This is what you put on a phone, in a kiosk, or inside a browser experience without a tangle of infrastructure.

Why prosody beats text in real products

Text will stay foundational for retrieval and records, but speech wins whenever intent and emotion matter. Prosody gives you signals that text strips away.

- Emotional clarity. Users relax when the agent’s tone mirrors the moment. Coaching sounds encouraging. Troubleshooting sounds calm. Sales sounds engaged.

- Turn-taking that feels natural. Prosodic cues help the agent decide when to speak, when to hold back, and when to jump in with a backchannel like “got it” or “one moment.”

- Higher comprehension when eyes and hands are busy. Speaking and hearing beat reading on the move. Think driving, walking, or multitasking with your hands full.

- Less cognitive overhead. Users do not have to translate their intent into short prompts. They just talk. The agent adapts.

When teams measure task success in the wild, these advantages translate into completion rates and repeat use. The voice that responds in the right rhythm with the right tone becomes sticky.

Latency budgets that feel human

Humans notice gaps. In casual conversation, the pause between one speaker finishing and the next starting is typically only about 200 milliseconds, so even modest extra delay starts to feel sluggish. Product teams that build for voice often set a round-trip target as close to that threshold as their pipeline allows for most exchanges. Even when the full pipeline occasionally spikes, careful buffering and backchannels can keep the interaction smooth.
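A minimal sketch of the backchannel idea in Python: start generating the reply, and if nothing is ready within a short window, speak a filler first. `generate_reply`, `speak`, and the 0.3-second `FILLER_AFTER_S` window are hypothetical placeholders, not part of any Hume API. Wire them to your own model call and audio output, and tune the window against your measured latencies.

```python
import asyncio

# Hypothetical placeholder: how long to wait before covering the silence.
FILLER_AFTER_S = 0.3


async def respond_with_filler(generate_reply, speak, filler="One moment."):
    """Generate a reply; if it is slow, speak a short backchannel first.

    `generate_reply` is a coroutine function returning reply text, and
    `speak` is a coroutine function that plays text. Both are stand-ins.
    """
    task = asyncio.create_task(generate_reply())
    try:
        # shield() keeps the reply task alive if this wait times out.
        reply = await asyncio.wait_for(asyncio.shield(task), timeout=FILLER_AFTER_S)
    except asyncio.TimeoutError:
        await speak(filler)   # fill the gap while generation continues
        reply = await task
    await speak(reply)
```

`asyncio.shield` matters here. Without it, the timeout would cancel the reply itself instead of just the wait.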

A practical voice latency budget often includes:

- Audio capture and voice activity detection

- Low-latency streaming into the model

- Fast intent resolution or planning

- Token-to-speech synthesis that can start speaking early

- Smart barge-in so users can interrupt and correct

EVI 4-mini reduces the largest pain points in that list, and Octave 2 supplies the prosodic intelligence that hides small delays with natural fillers and confirmations. The combination is what makes the experience feel effortless.
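You can track the stages before the agent's first word as an explicit budget. The sketch below assumes you already measure each stage per turn. The stage names and millisecond figures in the example are illustrative placeholders, not measured numbers for EVI 4-mini or Octave 2. Barge-in is left out because it is a reaction time during playback rather than part of the round trip.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    budget_ms: float    # planned allowance for this stage
    measured_ms: float  # what instrumentation observed for one turn


def check_turn(stages: list[Stage], target_ms: float) -> dict:
    """Compare one turn's measured stage latencies against a budget."""
    total = sum(s.measured_ms for s in stages)
    # Stages that exceeded their own allowance, largest overrun first.
    overrun_first = sorted(stages, key=lambda s: s.measured_ms - s.budget_ms, reverse=True)
    return {
        "total_ms": total,
        "within_target": total <= target_ms,
        "over_budget": [s.name for s in overrun_first if s.measured_ms > s.budget_ms],
    }


# Illustrative numbers only.
turn = [
    Stage("endpointing", budget_ms=150, measured_ms=180),
    Stage("upload", budget_ms=50, measured_ms=40),
    Stage("planning", budget_ms=200, measured_ms=260),
    Stage("first_audio", budget_ms=100, measured_ms=90),
]
report = check_turn(turn, target_ms=600)
```

A turn can meet its overall target while individual stages overrun. Tracking both tells you where the slack is going.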

What changed under the hood

You do not need to read a research paper to feel the difference, but the directions are clear.

- Better emotion embeddings. Octave 2 turns raw waveforms into richer features that capture affect and intent. That improves alignment with user goals.

- Tighter streaming decode. EVI 4-mini produces usable speech quickly, not just accurate speech eventually. Early tokens come out fast and are corrected on the fly.

- More robust endpointing. Voice activity detection handles messy audio so you can rely less on push-to-talk. That makes hands-free experiences viable.

- Practical multilingual support. Enough coverage to ship global features without maintaining a zoo of separate models and voices.

The key point is not perfection. It is consistency. If the system is fast and expressive nine times out of ten, users begin to trust it and the tenth time becomes forgivable.
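For intuition about what endpointing decides, here is a textbook energy-threshold endpointer with a "hangover" of silent frames. It is a common baseline, not a description of how Hume's models detect turn ends. `threshold` and `hangover_frames` are placeholders you would tune per device, and frames are assumed to be lists of float samples in [-1, 1].

```python
import math


def frame_rms(frame: list[float]) -> float:
    """Root-mean-square energy of one audio frame."""
    return math.sqrt(sum(x * x for x in frame) / len(frame)) if frame else 0.0


def find_endpoint(frames, threshold: float, hangover_frames: int):
    """Return the index of the frame where the turn is judged over, or None.

    Speech starts at the first frame louder than `threshold`. The turn ends
    once `hangover_frames` consecutive frames after that fall below it.
    """
    in_speech = False
    silent_run = 0
    for i, frame in enumerate(frames):
        if frame_rms(frame) > threshold:
            in_speech = True
            silent_run = 0          # any loud frame resets the silence count
        elif in_speech:
            silent_run += 1
            if silent_run >= hangover_frames:
                return i
    return None                     # user has not finished (or never spoke)
```

A longer hangover cuts users off less often but adds directly to response latency. That trade-off is what more robust endpointing tries to escape.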

Product patterns that win with voice

If you are designing with Octave 2 and EVI 4-mini in mind, a few patterns deliver outsized results.

- Ear-first onboarding

  - Let users choose a voice and pace.

  - Offer a 15-second tutorial that demonstrates barge-in and corrections.

- Micro-feedback loops

  - Have the agent use short acknowledgments like “great question” or “give me two seconds.”

  - Reflect user emotion when appropriate without overdoing it.

- Mixed initiative dialogues

  - Allow the agent to ask clarifying questions proactively.

  - Keep the user in control with easy interruption.

- Context continuity

  - Stream transcripts into your data layer for search and analytics.

  - Use session memory that survives app restarts, within user consent scopes.

- Fail soft

  - If comprehension drops, summarize what you heard, ask for confirmation, then proceed.

  - Offer a visible fallback to text for noisy environments.
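The fail-soft pattern reduces to a small decision function. This sketch assumes your recognizer reports a per-utterance confidence and that you can estimate the signal-to-noise ratio. Both thresholds are placeholders, and the prompt wording is only an example.

```python
from dataclasses import dataclass


@dataclass
class Heard:
    text: str
    confidence: float  # 0..1 recognizer confidence (assumed to be available)
    snr_db: float      # estimated signal-to-noise ratio (assumed to be available)


def next_move(heard: Heard, confirm_below: float = 0.75, text_below_snr_db: float = 5.0):
    """Decide whether to proceed, confirm first, or suggest typing instead."""
    if heard.snr_db < text_below_snr_db:
        # Too noisy for voice to be reliable: point at the text fallback.
        return ("offer_text", "It's noisy on my end. You can also type your request.")
    if heard.confidence < confirm_below:
        # Summarize what was heard and ask before acting on it.
        return ("confirm", f'I heard "{heard.text}". Is that right?')
    return ("proceed", heard.text)
```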

A practical build plan for startups

Voice is deceptively hard because many small decisions add up. Here is a pragmatic path to get from prototype to production.

- Define tasks and thresholds

  - Start with one or two jobs to be done where voice is clearly better than touch or text. Examples include quick triage, hands-free status checks, or guided troubleshooting.

  - Set success metrics before you write code. Track task completion rate, median round-trip time, and user satisfaction after the call.

- Choose your runtime

  - Keep the first build simple. A browser or a mobile client that streams audio to a single back end is enough to learn fast.

  - Add edge inference only if you must reduce cold starts or comply with strict data boundaries.

- Build the happy path

  - Implement streaming in and streaming out.

  - Stand up EVI 4-mini for speech and Octave 2 for prosodic control.

  - Prototype two or three voices that fit your brand.

- Add guardrails and memory

  - Keep short-term memory inside a rolling window.

  - Log transcripts with consent. Encrypt at rest. Rotate keys.

  - For safety and domain constraints, create a narrow toolbelt the agent can call. Start small.

- Instrument everything

  - Track time to first audio, time to final token, barge-in frequency, interruption recovery, and escalation rate to a human.

  - Compare cohorts with and without prosody controls enabled.

- Uplevel the UX

  - Use subtle earcons for listening, thinking, and speaking states.

  - Show a minimal caption of what the agent will say next. Users like the preview and it catches errors early.

- Expand channels

  - Add phone and kiosk after you stabilize the core experience.

  - Keep parity in behavior across channels so users do not have to relearn habits.
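Two of the guardrails from the "Add guardrails and memory" step fit in a few lines: a rolling short-term memory window and a narrow toolbelt. `SessionMemory`, `Toolbelt`, and the `order_status` tool in the example are hypothetical names for illustration. Persistence, consent checks, and encryption are deliberately out of scope.

```python
from collections import deque
from typing import Callable


class SessionMemory:
    """Short-term memory that keeps only the most recent messages."""

    def __init__(self, max_messages: int = 8):
        # A deque with maxlen drops the oldest entry automatically.
        self._messages = deque(maxlen=max_messages)

    def add(self, role: str, text: str) -> None:
        self._messages.append((role, text))

    def window(self) -> list:
        return list(self._messages)


class Toolbelt:
    """Allowlist of tools the agent may call; anything else is refused."""

    def __init__(self):
        self._tools: dict[str, Callable[..., str]] = {}

    def register(self, name: str, fn: Callable[..., str]) -> None:
        self._tools[name] = fn

    def call(self, name: str, **kwargs) -> str:
        if name not in self._tools:
            raise PermissionError(f"tool {name!r} is not on the allowlist")
        return self._tools[name](**kwargs)


memory = SessionMemory(max_messages=2)
for role, text in [("user", "Hi"), ("agent", "Hello!"), ("user", "Where is my order?")]:
    memory.add(role, text)

tools = Toolbelt()
tools.register("order_status", lambda order_id: f"Order {order_id} has shipped.")
```

Refusing unknown tools with an exception, rather than silently ignoring them, makes scope violations visible in your logs.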

Metrics that matter more than a model card

Model cards tell you what a model is good at in a lab. Real users care about whether the assistant helps them finish the job without friction. Consider tracking these:

- Time to first audio

- Median and 95th percentile round trip time

- User interruptions per minute and recovery rate

- Detected sentiment shift after agent responses

- Task completion rate for the top three use cases

- Escalations to human support

- New or returning users per week

When these numbers move in the right direction, you are close to product market fit for voice.
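Several of these numbers fall out of per-turn timestamps. This sketch assumes you log four things for each agent turn: when endpointing decided the user had finished, when the first agent audio played, whether the user barged in, and whether the task recovered afterward. The `Turn` record and its field names are hypothetical.

```python
import statistics
from dataclasses import dataclass


@dataclass
class Turn:
    user_done_ms: int          # endpointing decided the user had finished
    first_audio_ms: int        # first agent audio reached the speaker
    interrupted: bool = False  # user barged in during this agent turn
    recovered: bool = False    # conversation got back on track afterward


def summarize(turns: list[Turn], session_minutes: float) -> dict:
    ttfa = [t.first_audio_ms - t.user_done_ms for t in turns]
    interruptions = sum(t.interrupted for t in turns)
    recovered = sum(t.recovered for t in turns if t.interrupted)
    return {
        "ttfa_p50_ms": statistics.median(ttfa),
        # 99 cut points; index 94 is the 95th percentile.
        # Older Python versions require at least two data points here.
        "ttfa_p95_ms": statistics.quantiles(ttfa, n=100, method="inclusive")[94],
        "interruptions_per_min": interruptions / session_minutes,
        "recovery_rate": recovered / interruptions if interruptions else None,
    }


turns = [
    Turn(user_done_ms=0, first_audio_ms=300),
    Turn(user_done_ms=1000, first_audio_ms=1500, interrupted=True, recovered=True),
    Turn(user_done_ms=2000, first_audio_ms=2400, interrupted=True),
]
stats = summarize(turns, session_minutes=1.5)
```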

Risks, limits, and how to de-risk

Voice raises three categories of risk. None of them are reasons to avoid shipping, but you should plan for each.

- Privacy and consent

  - Make recording status explicit and persistent.

  - Offer immediate deletion on request. Provide a private mode that keeps conversations ephemeral.

- Hallucinations and tone mismatch

  - Limit the agent’s scope and tools. Reward concise answers over creative ones in sensitive domains.

  - Use prosody controls to keep the tone appropriate. Warmth is not the same as overfamiliarity.

- Uneven environments

  - Airports, cars, and kitchens are messy. Test there.

  - Favor conservative endpointing in noise. Provide push-to-talk as a reliable fallback.
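Conservative endpointing in noise can be expressed as a policy keyed on the measured signal-to-noise ratio. The policy waits longer before deciding the user has finished, and switches to push-to-talk when automatic detection is unreliable. All thresholds and timeouts below are placeholder values to tune with field recordings, not recommendations from Hume.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EndpointConfig:
    silence_timeout_ms: int  # silence required before the turn is considered over
    push_to_talk: bool       # True: rely on an explicit button instead of VAD


def endpoint_config(snr_db: float,
                    noisy_below_db: float = 15.0,
                    unusable_below_db: float = 5.0) -> EndpointConfig:
    """Pick endpointing behavior from an estimated signal-to-noise ratio."""
    if snr_db < unusable_below_db:
        # Automatic endpointing is too error-prone: fall back to push-to-talk.
        return EndpointConfig(silence_timeout_ms=900, push_to_talk=True)
    if snr_db < noisy_below_db:
        # Speech/silence decisions are less reliable: wait longer before ending.
        return EndpointConfig(silence_timeout_ms=900, push_to_talk=False)
    return EndpointConfig(silence_timeout_ms=500, push_to_talk=False)
```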

How this fits the broader agent stack

The move to expressive, low-latency voice sits on top of a broader shift toward agentic applications that act rather than chat.

- Browsers and runtimes are becoming agent-aware. See how agentic browsers cross the chasm to enable automation where users already work.

- Teams are teaching assistants to check their own work. Our coverage of self-testing AI coworkers shows why self-verification matters before an agent speaks out loud.

- The data plane is getting smarter and more autonomous. When information flow is tight, voice can trigger action safely. See our coverage of data plane agents that work for the next step beyond voice.

Voice is not a silo. It is the front door to agents that can browse, code, and operate systems. The moment that voice stops being frustrating, everything behind that door gets used more often.

What to watch next

A few signals will tell you whether the voice-first future is arriving on schedule.

- Enterprise pilots that skip the lab and run in real call queues

- Retail or hospitality kiosks that handle more than simple FAQs

- Accessible experiences that serve people with diverse speech patterns

- Creator tools that let non-technical teams author voices, moods, and micro-gestures

- Regulatory guidance around transparent recording and AI disclosures

If these show up in the wild, expect a flood of voice-native apps in productivity, support, and entertainment.

Quick playbook for specific roles

Every team approaches voice from a different angle. Here is a compact guide.

- Product managers

  - Pick one job to be done per release.

  - Instrument until you can answer one question: did the agent save time or not?

- Engineering leads

  - Treat audio as a first-class citizen in your observability stack.

  - Design for graceful degradation when network jitter rises.

- Design and research

  - Test tone and pacing with real users. Small changes in cadence produce big changes in comfort.

  - Use inclusive voice options and explicit consent flows.

- Sales and success

  - Pilot with high-volume but low-risk use cases first.

  - Capture verbatims after calls. Track sentiment deltas.
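For engineering leads designing for jitter, a standard starting point is the interarrival jitter estimator from RFC 3550 (RTP). It smooths the change in packet transit time with a gain of 1/16. The sketch works in milliseconds rather than RTP timestamp units. The playout-buffer rule built on top of it (`base_ms`, `k`, `cap_ms`) is a hypothetical heuristic, not part of the RFC.

```python
class JitterEstimator:
    """Interarrival jitter in the style of RFC 3550, section 6.4.1."""

    def __init__(self):
        self.jitter_ms = 0.0
        self._prev = None  # (send_ms, recv_ms) of the previous packet

    def update(self, send_ms: float, recv_ms: float) -> float:
        if self._prev is not None:
            prev_send, prev_recv = self._prev
            # Difference in transit time between consecutive packets.
            d = (recv_ms - prev_recv) - (send_ms - prev_send)
            self.jitter_ms += (abs(d) - self.jitter_ms) / 16
        self._prev = (send_ms, recv_ms)
        return self.jitter_ms


def playout_buffer_ms(jitter_ms: float, base_ms: float = 40.0,
                      k: float = 3.0, cap_ms: float = 300.0) -> float:
    """Hypothetical rule: buffer more as jitter rises, up to a latency cap."""
    return min(base_ms + k * jitter_ms, cap_ms)
```

The cap is the degradation decision. Past it, adding buffer costs more conversational latency than the smoother audio is worth, so a fallback such as captions becomes the better option.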

Frequently asked build questions

- Do I need a text UI if voice is great?

  - Yes. Users want transcripts for search, compliance, and silent environments. Dual modality wins.

- Can I localize fast?

  - You can, but audit cultural tone shifts, not just phonemes. Work with native reviewers to tune prosody.

- How do I choose a voice?

  - Match the job, not the brand color palette. Support and coaching benefit from warm and steady. Technical walkthroughs benefit from clear and neutral.

- What about accuracy?

  - Keep the agent scoped. Add tool use for facts and numbers. Prefer verification over long monologues.

The bottom line

Octave 2 and EVI 4-mini mark a shift from impressive demos to shippable, enjoyable voice products. Prosody closes the emotional gap that kept voice from feeling trustworthy. Latency improvements close the conversational gap that made voice feel awkward. Put them together and you get an interface that many users would rather use than a keyboard.

If your roadmap includes assistants that work in the background, make voice the front door now. Start with a single use case, keep your latency and tone tight, and let users interrupt whenever they want. The future of agents will not just be seen on a screen. It will be heard in a conversation that feels effortless.