Replit Agent 3 turns AI assistants into self-testing coworkers

Replit Agent 3 upgrades coding agents from helpers to coworkers. Its 200-minute run budget and built-in test-and-fix loop let it plan tasks, write tests, ship code, and open a pull request with evidence.

The breakthrough: an agent that can work alone and check its own work

Replit has pushed the conversation about coding agents forward with Agent 3. The headline capability is simple to state and powerful in practice: the agent can work for up to 200 minutes without handholding. While it runs, it writes tests for what it builds, executes them, reads failures, and patches code until those tests pass. That sounds incremental, yet it shifts where leverage sits inside software teams. We are not talking about another autocomplete tool. We are talking about a coworker that shows up with its own checklist and evidence.

Why does 200 minutes matter? Autonomy is the difference between a helper and a colleague. A helper asks for guidance every few minutes. A colleague takes a scoped goal, disappears to do the work, and returns with a pull request, a test report, and tradeoffs. Agent 3 is designed to behave like the second one.

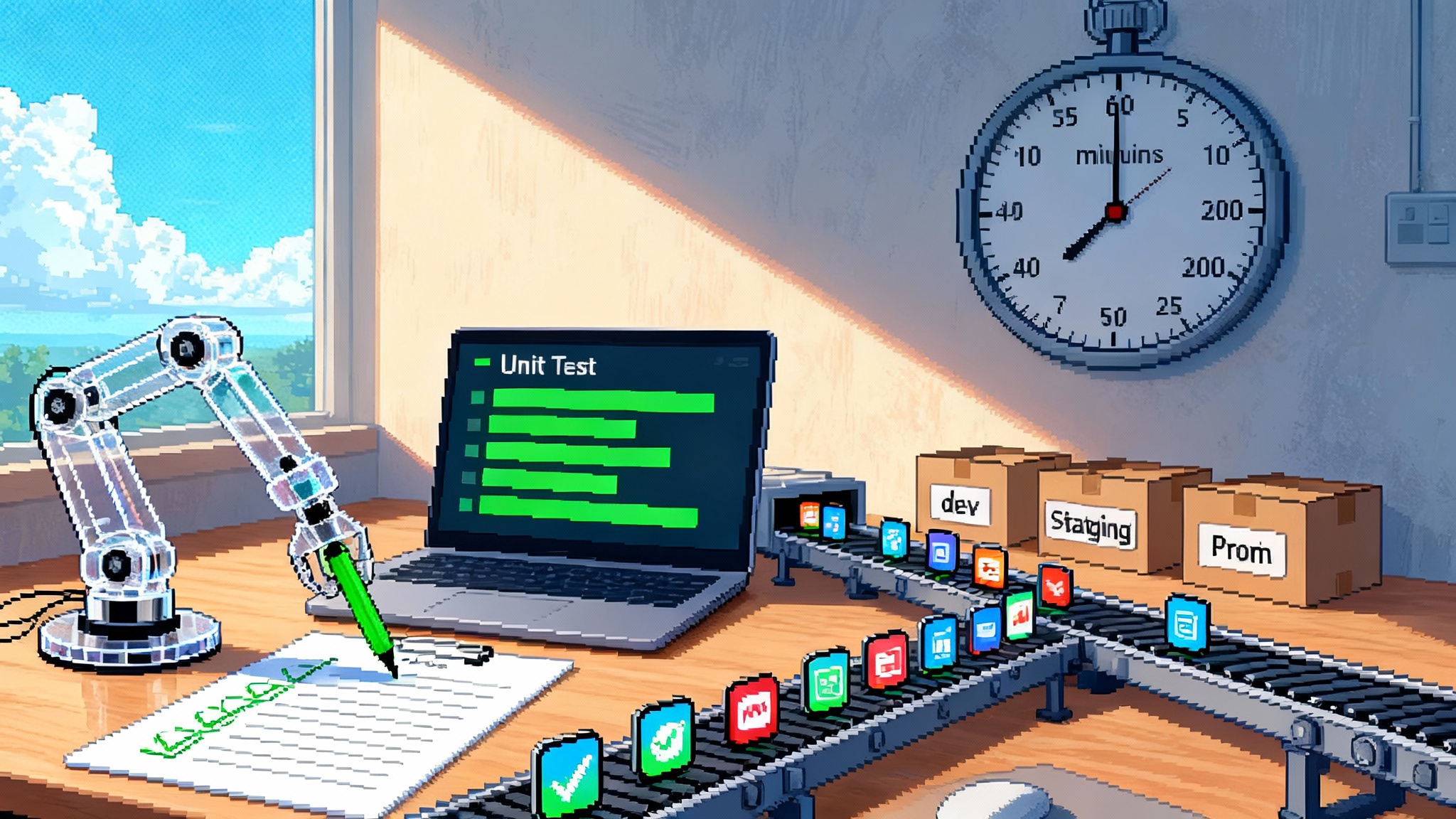

The built-in test-and-fix loop is equally important. In most teams, tests are the wind tunnel where code proves it can fly. If an agent builds both the plane and the wind tunnel, it can iterate without waiting for a human nudge. That is the mechanism that turns a chat-style assistant into a continuous app factory.

From assistants to coworkers

For the past two years, most developer-facing AI felt like a fast pair programmer. It suggested code, explained errors, and filled in boilerplate. Useful, but still a human-first workflow. Agent 3 represents a different shape. You can set a milestone-level task rather than a snippet-level task and let it run. The output is not a single function. It is a working feature, plus a test harness that proves it.

Think of this like hiring a junior developer who learned test-driven development from day one. You give them a user story. They draft tests that describe success, scaffold the code, run the tests, read the failures, and patch until green. The value is not in any single step. The value is the closed loop that runs without your constant presence.

Two things make that loop credible in an agent. First, the run budget is long enough to chain several attempts and corrections. Second, the agent treats test results as its primary feedback channel instead of waiting for a human to score each attempt. The outcome is fewer context switches for the person who wrote the ticket and a tighter inner loop for the agent.

What the loop looks like in practice

Imagine you type the following task into your environment:

- Build a three page web app that lets a teacher create quizzes, collect responses, and export a CSV report.

- Use a lightweight framework, add simple authentication, and ship a single container image.

- Include tests for authentication, quiz creation, submit flow, and CSV export.
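For the last requirement, a behavior-focused test the agent might draft for the CSV export could look like this minimal Python sketch. The `export_quiz_csv` function and its column names are hypothetical, invented for illustration; a real app would likely exercise the export through its web route rather than calling a function directly.

```python
import csv
import io
from typing import Dict, List


def export_quiz_csv(responses: List[Dict[str, object]]) -> str:
    """Hypothetical export: a fixed header plus one row per student response."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["student", "question", "answer", "correct"])
    writer.writeheader()
    for row in responses:
        writer.writerow(row)
    return buffer.getvalue()


def test_csv_export_round_trips_responses() -> None:
    responses = [
        {"student": "ana", "question": "Q1", "answer": "4", "correct": True},
        {"student": "ben", "question": "Q1", "answer": "5", "correct": False},
    ]
    # Parse the export back instead of comparing raw strings, so the test checks
    # behavior (rows and values) rather than quoting or line-ending details.
    rows = list(csv.DictReader(io.StringIO(export_quiz_csv(responses))))
    assert len(rows) == len(responses)
    assert [r["student"] for r in rows] == ["ana", "ben"]
    assert rows[1]["correct"] == "False"


test_csv_export_round_trips_responses()
```

Parsing the output back keeps the test about what the teacher receives instead of exact byte formatting, which is the balance the Patch step below aims for.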

Here is how Agent 3’s cycle typically unfolds:

- Planning. It reads the task and breaks it into subtasks. It outlines scaffolding the project, setting up auth, defining models, building routes, adding client components, writing tests, and containerizing.

- Test scaffolding. It writes basic tests for endpoints and UI interactions. These initial tests are not perfect. They are good enough to expose gaps.

- First build. It generates the initial code, configures a container, builds, and runs tests.

- Read failures. Tests fail. The agent inspects stack traces, diffs, and logs.

- Patch. It changes code and adjusts tests. It aims to be strict enough to catch semantics and flexible enough to avoid locking in trivial implementation details.

- Iterate. Steps three through five repeat until the test suite is green and the app boots cleanly.

- Package. It prepares a pull request with a description of changes, passing test reports, and a summary of remaining risks.
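The cycle above is essentially a bounded loop around the test suite. The Python sketch below models it under stated assumptions: `run_tests` and `propose_patch` are hypothetical stand-ins for what the agent actually does, the 20-attempt cap is an illustrative number, and nothing here describes Replit's internal implementation.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class LoopResult:
    green: bool = False
    attempts: int = 0
    failure_log: List[List[str]] = field(default_factory=list)  # evidence for the PR description


def run_fix_loop(
    run_tests: Callable[[], List[str]],            # hypothetical: returns names of failing tests
    propose_patch: Callable[[List[str]], object],  # hypothetical: edits code or tests
    budget_seconds: float = 200 * 60,              # mirrors the 200-minute run budget
    max_attempts: int = 20,                        # illustrative cap on build-test-patch cycles
) -> LoopResult:
    """Build, test, read failures, and patch until green or out of budget."""
    deadline = time.monotonic() + budget_seconds
    result = LoopResult()
    while result.attempts < max_attempts and time.monotonic() < deadline:
        result.attempts += 1
        failures = run_tests()               # build and run the suite
        if not failures:
            result.green = True              # green: ready to package a pull request
            return result
        result.failure_log.append(failures)  # read failures and keep them as evidence
        propose_patch(failures)              # patch, then loop back to rebuild
    return result                            # budget or attempt cap hit: hand back to a human


# Toy usage: each patch fixes one failing test.
failing = ["test_auth", "test_csv_export"]
result = run_fix_loop(lambda: list(failing), lambda _failures: failing.pop())
print(result.green, result.attempts)  # True 3
```

The attempt cap matters as much as the time budget: a loop that keeps failing the same way is usually a sign that the ticket or the tests need a human, not more minutes.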

The team lead steps in to review the pull request and either merges it or requests a follow-up run with added constraints. The point is not that the agent never needs you. The point is that you only need to be present at gates that matter.

Why 200 minutes changes the rhythm of teams

Autonomy is a budget. With a 200-minute budget, an agent can run several substantial loops before it returns for direction. That allows a team to chunk work differently. Instead of ten interruptions for ten tiny asks, you can write one clear ticket and return to your own work. Standups change. You ask what goals you gave the agent, what was merged, and what is blocked by policy gates.

There is also a psychological shift. When a tool can try, fail, and try again without your supervision, you start to treat it like a teammate with potential rather than a vending machine that must vend the exact code you pictured. You write outcomes, not recipes. You evaluate evidence, not vibes.

Continuous app factories come into view

Factories are sets of steps that turn inputs into consistent outputs. Software never had a true factory because every product is different and the work is creative. Yet modern teams do have repeatable pipelines. Requirements turn into tests. Tests guide code. Code ships behind automated checks. Agent 3 slots into that pipeline and runs large parts of it without you.

Even a tiny startup can run a continuous app factory if it chooses its scope carefully. The factory is not a literal conveyor belt. It is a workflow where a product manager writes clear outcomes in tickets, an agent drafts both code and tests, the tests act as a permission gate, and human review focuses on architecture, security, and product nuance.

Done well, this is how you deliver more features with fewer meetings:

- Tickets are written as measurable behaviors and constraints.

- Agents translate those into tests and code that satisfy the behaviors.

- Gates enforce quality with static analysis, security checks, and performance budgets.

- Humans review the decisions that tests cannot capture.

If you are evaluating patterns across the market, compare this with the rise of data-plane agents that do the work and the emergence of control towers for agents. The story is converging on autonomy, evidence, and guardrails.

New governance patterns for autonomous coding

Autonomy without control is not a plan. If you let an agent run for hours, connect to repositories, and touch infrastructure, you need practical safety. The good news is that the controls you need are already familiar from continuous integration and site reliability engineering. The difference is that you now apply them to a nonhuman teammate.

Here are four walls of safety that smart teams are adopting:

- Capability scoping. Give the agent only the permissions it needs. Default to read-only on production data. Allow write access only to feature branches. Use separate keys for dev, staging, and prod. Prefer short-lived credentials. If the agent must call third-party APIs, place it behind proxies that enforce rate limits and redact secrets.

- Budget and quotas. Treat autonomy minutes and external calls like a budget. Set a per-run cap, a per-day cap, and a cost-per-task target. Put a hard stop on any run that exceeds a threshold number of failed attempts. Require human approval to extend the budget beyond the default.

- Test and policy gates. Require tests to pass, coverage to meet a minimum, static analysis to be clean, and security scanners to report no critical findings. Add policy-as-code rules for data access, dependency licenses, and network egress. The agent is free inside those guardrails and blocked at the edges.

- Audit and observability. Record inputs, plans, code changes, test results, and external calls. Keep a run ledger with timestamps and artifacts. Ship metrics like success rate, mean time to green, and cost per passing pull request. Observability turns incidents into improvements and makes regulatory conversations straightforward.
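The budget-and-quota wall can be reduced to a small check the orchestrator consults before each new attempt. This is a hypothetical sketch: `BudgetGuard`, its field names, and the numbers (a 200-minute per-run cap, a 600-minute daily cap, a hard stop at 8 failed attempts, a 60-minute approved extension) are illustrative assumptions, not Replit settings.

```python
from dataclasses import dataclass


@dataclass
class BudgetGuard:
    """Hypothetical per-run and per-day autonomy budget with a failure hard stop."""

    per_run_minutes: float = 200.0    # default cap for a single run
    per_day_minutes: float = 600.0    # illustrative daily cap across all runs
    max_failed_attempts: int = 8      # hard stop after this many failed attempts
    extension_minutes: float = 60.0   # extra time a human can grant
    extension_approved: bool = False  # flipped only by a human reviewer
    used_today: float = 0.0           # minutes already spent by earlier runs today

    def check(self, run_minutes: float, failed_attempts: int) -> str:
        """Return 'continue', 'needs_approval', or 'stop' for the current run."""
        if failed_attempts >= self.max_failed_attempts:
            return "stop"  # repeated failure: the ticket or tests need a human
        if self.used_today + run_minutes > self.per_day_minutes:
            return "stop"  # the daily cap is absolute; approval does not lift it
        if run_minutes <= self.per_run_minutes:
            return "continue"
        if not self.extension_approved:
            return "needs_approval"  # pause and ask before spending past the default
        if run_minutes <= self.per_run_minutes + self.extension_minutes:
            return "continue"
        return "stop"  # even the approved extension is used up


guard = BudgetGuard()
print(guard.check(run_minutes=45, failed_attempts=2))   # continue
print(guard.check(run_minutes=205, failed_attempts=2))  # needs_approval
```

A `stop` result is the natural trigger for a kill switch, and `needs_approval` is where a human decides whether the run has earned more time.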

These controls do not slow teams. They prevent waste and build trust with customers. They also make the agent better over time because the feedback is crisp and recorded.

A minimal app factory you can stand up this week

If you want to test the new pattern, assemble a small factory. You do not need a platform overhaul. You need a clean path from ticket to green build and a clear decision on what the agent can touch. Use this checklist as a starting point:

- One repository per service with a clear README and run scripts.

- A test harness the agent can run locally and in your continuous integration system.

- A sandbox environment seeded with safe sample data.

- Short-lived credentials with explicit scopes for the agent.

- A branch protection rule that requires passing checks before merge.

- A code owner rule that asks for human review on files that can hurt you, like security configs and billing code.

- A policy file that captures non-negotiable rules such as allowed dependencies and license types.

- A cost dashboard that shows runtime minutes, external calls, and average cloud spend per agent task.

- A kill switch that terminates any run that escapes its budget or touches disallowed resources.

- A post-run template that forces a summary of outcomes, tradeoffs, and next steps.
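The branch protection rule and the policy file meet at merge time: either the run's evidence clears every gate or the merge is blocked with reasons. Here is a hypothetical gate evaluator in Python; the `RunReport` fields, the 80 percent coverage floor, the license allowlist, and the package name `somepkg` are example values, not defaults from any real CI system.

```python
from dataclasses import dataclass, field
from typing import Dict, List

ALLOWED_LICENSES = {"MIT", "Apache-2.0", "BSD-3-Clause"}  # example policy-file content
MIN_COVERAGE = 0.80                                        # example coverage floor


@dataclass
class RunReport:
    tests_passed: bool
    coverage: float                   # fraction of lines covered, 0.0 to 1.0
    static_analysis_errors: int = 0
    critical_findings: int = 0        # from a security scanner
    dependency_licenses: Dict[str, str] = field(default_factory=dict)  # package -> license


def gate_violations(report: RunReport) -> List[str]:
    """Reasons to block the merge; an empty list means every gate passed."""
    reasons = []
    if not report.tests_passed:
        reasons.append("test suite is not green")
    if report.coverage < MIN_COVERAGE:
        reasons.append(f"coverage {report.coverage:.0%} is below {MIN_COVERAGE:.0%}")
    if report.static_analysis_errors:
        reasons.append(f"{report.static_analysis_errors} static analysis errors")
    if report.critical_findings:
        reasons.append(f"{report.critical_findings} critical security findings")
    for package, license_id in sorted(report.dependency_licenses.items()):
        if license_id not in ALLOWED_LICENSES:
            reasons.append(f"{package} uses disallowed license {license_id}")
    return reasons


report = RunReport(tests_passed=True, coverage=0.72, dependency_licenses={"somepkg": "GPL-3.0"})
print(gate_violations(report))
# ['coverage 72% is below 80%', 'somepkg uses disallowed license GPL-3.0']
```

Returning reasons rather than a bare boolean gives the agent crisp feedback for its next attempt and gives the reviewer something to read in the run ledger.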

Adopt this in a narrow slice first. Pick a feature that is new and low risk. Write a precise ticket. Let the agent run. Review the pull request and the run ledger. Capture what went well and what needs hardening before you scale to more services.

What changes in the developer day

Daily work shifts from composing code to composing constraints. Writing a clear ticket is a skill. Review becomes the control point where human taste and risk management live. The best developers will become superb reviewers who shape architecture with minimal hand edits. They will also become toolsmiths who design the tests and policies that encode product truth.

This does not erase the joy of building. It changes where the joy comes from. Creating a crisp contract and seeing a passing build appear can feel as satisfying as a late night coding sprint. It also opens the door to more experiments. When the cost of a prototype falls, you try more ideas and retire more weak ones early.

If you follow how suites and startups approach agents, compare this autonomy-heavy design with suites that serve as a fast on-ramp. Suites optimize for adoption and compliance. Startups optimize for depth and iteration speed. Teams benefit when both approaches exist, but the frontier is being pushed by sharper tools that do one thing very well.

What to measure

To avoid hand-waving, pick metrics that reflect real value and review them weekly:

- Time to green. Minutes from ticket creation to a passing test suite and a reviewable pull request.

- Pull request acceptance rate. Percentage of agent-generated pull requests that merge without major rewrites.

- Defect escape rate. Bugs found in staging or production per merged pull request.

- Cost per passing pull request. Cloud and tool spend divided by the number of merged agent pull requests.

- Coverage delta. Change in test coverage over time on agent-touched areas.

Interpretation matters. If the first three improve while cost stays flat or falls, you have a factory. If cost spikes while acceptance falls, your tests are likely too weak or your policies are too loose. Tighten gates, simplify tickets, and deepen observability before scaling.
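The five metrics are plain ratios over a log of agent pull requests, and the interpretation rule can be written down so it is applied the same way every week. This sketch assumes a hypothetical `AgentPR` record; the field names, and the choice of strict improvement in `looks_like_a_factory`, are illustrative assumptions.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class AgentPR:
    minutes_to_green: float   # ticket creation to passing suite and reviewable PR
    merged: bool
    major_rewrite: bool       # reviewer had to substantially rewrite it
    escaped_defects: int      # bugs later found in staging or production
    cost: float               # cloud and tool spend for the run
    coverage_before: float    # coverage of the touched area before the change
    coverage_after: float


def weekly_metrics(prs: List[AgentPR]) -> Dict[str, float]:
    if not prs:
        raise ValueError("no agent pull requests this period")
    merged = [p for p in prs if p.merged]
    return {
        "time_to_green_min": mean(p.minutes_to_green for p in prs),
        "acceptance_rate": sum(p.merged and not p.major_rewrite for p in prs) / len(prs),
        "defect_escape_rate": sum(p.escaped_defects for p in merged) / len(merged) if merged else 0.0,
        # Spend on every run, including unmerged ones, divided by merged PRs.
        "cost_per_passing_pr": sum(p.cost for p in prs) / len(merged) if merged else float("inf"),
        "coverage_delta": mean(p.coverage_after - p.coverage_before for p in prs),
    }


def looks_like_a_factory(prev: Dict[str, float], curr: Dict[str, float]) -> bool:
    """One reading of the rule: the first three metrics improve and cost does not rise."""
    improved = (
        curr["time_to_green_min"] < prev["time_to_green_min"]
        and curr["acceptance_rate"] > prev["acceptance_rate"]
        and curr["defect_escape_rate"] < prev["defect_escape_rate"]
    )
    return improved and curr["cost_per_passing_pr"] <= prev["cost_per_passing_pr"]
```

Charging unmerged runs to the merged pull requests is deliberate: wasted runs are a real cost, and hiding them would flatter the factory.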

Limits, risks, and how to handle them

No agent replaces deep product judgment or hard architecture calls. You still need humans to decide tradeoffs, design data models, and shape interfaces that fit real workflows. Agents can also overfit to their own tests. Watch for brittle tests that encode implementation details rather than behavior. Periodically rewrite a subset of tests by hand to validate that the behavior is what users need.
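Brittle and behavioral tests are easiest to tell apart side by side. In this hypothetical Python example, `QuizGrader` is an invented class: the first test pins an internal cache, while the second asserts only what a teacher would notice.

```python
from typing import Dict


class QuizGrader:
    def __init__(self, answer_key: Dict[str, str]) -> None:
        # Internal detail: answers normalized once, up front.
        self._normalized = {q: a.strip().lower() for q, a in answer_key.items()}

    def score(self, answers: Dict[str, str]) -> int:
        """Count answers matching the key, ignoring case and surrounding spaces."""
        return sum(1 for q, a in answers.items() if self._normalized.get(q) == a.strip().lower())


def brittle_test() -> None:
    grader = QuizGrader({"Q1": "Paris"})
    # Encodes an implementation detail: breaks if the cache is renamed or removed.
    assert grader._normalized == {"Q1": "paris"}


def behavioral_test() -> None:
    grader = QuizGrader({"Q1": "Paris", "Q2": "4"})
    # Encodes what users need: case and spacing cost nothing, wrong answers do.
    assert grader.score({"Q1": " paris ", "Q2": "5"}) == 1
    assert grader.score({}) == 0


brittle_test()
behavioral_test()
```

Both pass today. Rename or remove `_normalized` during a refactor and only the brittle test fails, even though scoring is unchanged, which is exactly the kind of test worth rewriting by hand.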

Security and privacy require special care. Treat production data as toxic until proven safe. Use synthetic data for most runs. If the agent must see real data, expose only the fields required for the task and strip identifiers. Rotate keys and review access logs. Assume that anything written to a run log might be read later.
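Exposing only the fields a task needs is easiest to enforce as an allowlist projection applied before any record reaches the agent, with identifier fields refused outright. A minimal Python sketch; the `IDENTIFIER_FIELDS` set and the field names are illustrative assumptions, and a real system would derive them from its own data catalog.

```python
from typing import Any, Dict, Set

IDENTIFIER_FIELDS = {"email", "name", "student_id", "ip_address"}  # illustrative list


def minimize_record(record: Dict[str, Any], task_fields: Set[str]) -> Dict[str, Any]:
    """Project a record to the fields a task needs, refusing any listed identifier."""
    leaked = task_fields & IDENTIFIER_FIELDS
    if leaked:
        raise ValueError(f"task requests identifier fields: {sorted(leaked)}")
    return {k: v for k, v in record.items() if k in task_fields}


record = {"email": "a@example.com", "score": 7, "quiz_id": "q1"}
print(minimize_record(record, {"score", "quiz_id"}))  # {'score': 7, 'quiz_id': 'q1'}
```

An allowlist fails closed: a new column added to the source table stays hidden from the agent until someone deliberately adds it to a task.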

There is also the question of scope creep. Give the agent milestones, not vague wishes. The sharper the acceptance criteria, the better the results. A well formed ticket reads like a contract. It defines inputs, outputs, user flows, and edge cases. The goal is a world where tests reflect that contract and the agent is responsible for turning it green.
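A ticket that reads like a contract can also be checked like one before any budget is spent. The `Ticket` structure below is a hypothetical Python sketch, not a Replit format; it simply refuses to call a ticket ready until inputs, outputs, user flows, and edge cases are all filled in.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Ticket:
    title: str
    inputs: List[str] = field(default_factory=list)
    outputs: List[str] = field(default_factory=list)
    user_flows: List[str] = field(default_factory=list)
    edge_cases: List[str] = field(default_factory=list)

    def missing_sections(self) -> List[str]:
        """Contract sections left empty; a run should not start until this is []."""
        sections = {
            "inputs": self.inputs,
            "outputs": self.outputs,
            "user_flows": self.user_flows,
            "edge_cases": self.edge_cases,
        }
        return [name for name, items in sections.items() if not items]


ticket = Ticket(
    title="Export quiz responses as CSV",
    inputs=["quiz id", "teacher session"],
    outputs=["CSV file with one row per response"],
    user_flows=["teacher opens quiz, clicks Export, downloads file"],
)
print(ticket.missing_sections())  # ['edge_cases']
```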

A 30 day playbook to learn and scale

If you lead a team, try this short roadmap:

Week 1

- Pick one service where you can accept small failures.

- Document build and test steps so an agent can run them end to end.

- Set branch protections and short-lived credentials.

Week 2

- Write two outcome-focused tickets with clear acceptance criteria.

- Let the agent run with a strict budget and capture the run ledger.

- Merge if tests pass and review is clean. If not, adjust the ticket, not only the code.

Week 3

- Add policy as code for dependencies and data access.

- Start tracking time to green and cost per passing pull request.

- Expand to a second service if the first shows lift.

Week 4

- Tune gates to reduce false positives and catch real risks.

- Build a small library of reusable prompts and ticket templates that the agent handles well.

- Present results to the team and decide which parts to scale.

By the end of the month, you will know if the factory pattern works for your context. You will also have a list of controls that make you comfortable scaling it.

Frequently asked questions teams are asking

Will Agent 3 create flaky tests to pass its own work? It can, just like a human. That is why review focuses on behavior over implementation and why you periodically rewrite tests. Keep linters and security scanners strict to catch obvious shortcuts.

How do we prevent runaway costs? Treat minutes and external calls as a budget. Cap autonomy by default. Ship dashboards that show cost per passing pull request and success rate. If the slope turns the wrong way, set lower caps and shrink ticket scope until you regain control.

What about secrets and data leaks? Prefer short-lived tokens with minimal scopes. Log all external calls. Redact by default. Rotate keys on a schedule. If your compliance needs are heavy, consider dedicated proxies that scrub sensitive fields.
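Short-lived, minimally scoped tokens reduce to two checks on every call: has the token expired, and does it carry the scope this call needs. A minimal Python sketch; `AgentToken`, the scope strings, and the 15-minute lifetime are illustrative assumptions, and in practice this logic usually lives in an identity provider or secrets manager rather than in application code.

```python
import secrets
import time
from dataclasses import dataclass
from typing import FrozenSet, Optional, Set


@dataclass(frozen=True)
class AgentToken:
    value: str
    scopes: FrozenSet[str]
    expires_at: float  # Unix timestamp in seconds


def issue_token(scopes: Set[str], ttl_seconds: int = 15 * 60) -> AgentToken:
    """Mint a random token carrying only the requested scopes, valid for ttl_seconds."""
    return AgentToken(secrets.token_urlsafe(32), frozenset(scopes), time.time() + ttl_seconds)


def authorize(token: AgentToken, required_scope: str, now: Optional[float] = None) -> bool:
    """Allow a call only if the token is unexpired and carries the required scope."""
    current = time.time() if now is None else now
    return current < token.expires_at and required_scope in token.scopes


token = issue_token({"repo:feature-branches:write"})
print(authorize(token, "repo:feature-branches:write"))  # True
print(authorize(token, "prod-db:write"))                # False
```

Because the token expires on its own, a leaked value is only useful for minutes, and rotation becomes the default rather than a scheduled chore.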

How will this affect hiring? It changes the shape of work. You will still value engineers who can design systems and write clear tests. You will add new value to reviewers who can judge architecture and risk quickly. You will also prize engineers who are great at writing crisp tickets and codifying product truth as policies.

The bottom line

Agent 3’s 200-minute autonomy and self-testing loop do more than speed up coding. They shift the center of gravity from human-in-the-loop assistance to agent-in-the-loop development. That shift unlocks continuous app factories that small teams can run today. It also forces real governance, not slogans. The teams that win will treat autonomy as a budget, tests as contracts, and policies as code. They will give agents clear outcomes, strong guardrails, and honest metrics. Then they will let the work speak for itself.

This is not a future tease. It is a practical way to ship more software with fewer interruptions right now. The change starts when you write a better ticket and let your new coworker prove it can pass its own tests.