Awaiting your topic and angle to begin

Most AI agent pilots impress in demos then falter in production. This playbook shows how to pick one valuable workflow, ship a safe MVP in 30 days, and scale to real adoption by day 90.

Why most agent pilots stall

Agent demos are intoxicating. A few prompts and a shiny UI can make even complex workflows look solved. Then the reality of enterprise deployment arrives. Data is scattered, guardrails are unclear, and success metrics are fuzzy. Teams get stuck in the gap between an impressive proof of concept and reliable production value.

This playbook closes that gap with a simple arc you can run in 90 days. The goal is not a lab demo. It is a live agent that handles a narrowly defined workflow end to end, with measurable impact, auditable behavior, and a path to scale.

You will:

- Pick one high value, low blast radius workflow

- Ship a safe, measurable MVP by day 30

- Hardening and expand the surface area by day 60

- Scale adoption and automate oversight by day 90

The details below assume an enterprise context with data trust requirements, stakeholder complexity, and real-world failure modes. The plan works whether you start greenfield or inside a suite where agents are already emerging.

The 90 day arc at a glance

- Days 1 to 30: Define a single job to be done, wire the data, build the smallest viable agent, and instrument everything

- Days 31 to 60: Close reliability and safety gaps, add one hop of autonomy, and integrate with the system of record

- Days 61 to 90: Scale usage through change management, automate evaluation, and prepare the next two workflows

Each phase culminates in a review that asks one question: does the agent reduce time to value for a specific user by at least 30 percent while keeping risk within agreed limits?

Phase 1: Define and ship a safe MVP (days 1 to 30)

Your first month trades breadth for certainty. Resist the temptation to support every edge case. Pick one workflow that is frequent, structured, and currently painful.

1. Choose the workflow

Good first candidates share three traits:

- High volume with measurable cycle time or cost

- Clear source of ground truth data and a single system of record

- A bounded action set that can be reversed or reviewed

Examples: drafting customer support replies from a knowledge base, triaging inbound sales notes into the CRM, or generating weekly account health summaries for success managers.

2. Define success and guardrails

Write a one page contract that the team can point to when decisions get fuzzy. Include:

- Target metric: cycle time, first response accuracy, deflection rate, or acceptance rate

- Risk limits: maximum allowed hallucination rate, data access scope, and escalation conditions

- Audit needs: log every prompt, decision, and data touch with immutable IDs

Anchor your risk approach in the NIST AI Risk Management Framework. Keep it lightweight in month one, but write down threat categories so you do not miss obvious issues like over-permissioned connectors or silent prompt injections.

3. Assemble the smallest team that can win

You do not need a cast of thousands. You need:

- One product owner who knows the workflow in the real world

- One data engineer who can expose clean views and joins

- One application engineer who can ship a thin UI and integrations

- One security reviewer who signs the access and logging model

4. Design the agent’s job

Write a plain language job description for the agent. Keep it under 12 sentences. Name inputs, tools, and outputs. For example:

- Inputs: customer ticket text, knowledge base snippets, previous reply history

- Tools: retrieval, template library, reply sender with approval queue

- Outputs: a drafted reply with citations, a confidence score, and an action log

This document becomes your prompt skeleton and your evaluation plan.

5. Build the smallest viable system

The MVP needs four layers:

- Data connectors that read from ground truth only

- Retrieval and reasoning that can cite sources and show working

- Tools with explicit permissions for read or write

- A review surface where a human can accept, edit, or escalate

A suite can shorten your runway. For teams already invested in productivity platforms, read how suites as the fastest on ramp can compress setup. If you start outside a suite, keep interfaces thin and observable. Avoid bespoke glue code that hides side effects.

6. Instrument from day one

You cannot improve what you cannot see. Track:

- Input distribution and drift over time

- Retrieval quality: coverage, freshness, and citation precision

- Decision traces: which tool was called, with what parameters, and how long it took

- Human in the loop outcomes: accepted as is, edited, rejected, escalated

Design your dashboards around outcomes, not model internals. For inspiration on outcome-first thinking, see how proactive BI agents move beyond vanity metrics.

7. Ship and review the MVP

By day 30 the agent should handle the happy path end to end. Run a one week shadow period. Compare baseline metrics to MVP outcomes. Capture failure patterns. Decide which gaps you will fix in phase 2 and which you will defer.

Phase 2: Make it reliable and add controlled autonomy (days 31 to 60)

The second month turns a good demo into a dependable teammate. You will address reliability, safety, and integration depth.

1. Close reliability gaps using data and tests

Start with the failures captured in your MVP review. Build regression tests that use real examples pulled from logs. Track pass rates by scenario. Add simple safeguards:

- Rate limit expensive or risky tool calls

- Add timeouts and retries to brittle integrations

- Create templates for common outputs with required fields and format checks

2. Harden security and audit

Move from permissive to least privilege access. Grant the agent only the read or write scopes it needs, nothing more. Centralize secret management and rotate keys on a schedule. Adopt structured logs that tie every decision to a user, dataset, and purpose. For a practical overview, study agent-native security controls and adapt the tactics to your stack.

Map your controls to the OWASP Top 10 for LLM Applications. This turns abstract risks like prompt injection or training data leakage into concrete backlog items.

3. Add one hop of autonomy

In phase 1 the agent likely drafted outputs for human review. In phase 2 allow the agent to perform a limited action without waiting for approval, but only within narrow guardrails. Examples:

- Auto close tickets that match a documented policy with high confidence

- Auto populate CRM fields when the confidence and data provenance meet thresholds

- Auto schedule follow ups when templated rules fire

Each autonomous action must write an explanation and a reversal plan to the log.

4. Integrate with the system of record

The value of an agent compounds when it writes to the place work actually lives. Connect to the system of record with a single source of truth pattern. Avoid sidecar spreadsheets. Put circuit breakers between the agent and any write endpoint. Maintain a quarantine queue for suspicious changes.

5. Expand evaluation

Move from ad hoc checks to a continuous evaluation loop:

- Curate a living test set from real user interactions

- Score outcomes on usefulness, faithfulness, and compliance

- Alert when score distributions drift from your phase 1 baseline

Automate the loop so every prompt, tool change, or model upgrade triggers a run and report.

Phase 3: Scale adoption and prepare the next two workflows (days 61 to 90)

By month three your agent should be useful to a small cohort. Now expand carefully.

1. Nail change management

Agents reshape habits. Users adopt what they trust and abandon what confuses. Invest in:

- Training sessions with live examples and open Q and A

- Embedded help that explains what the agent can and cannot do

- A feedback button that files structured issues back to the team

Set a clear communication rhythm with weekly updates on fixes, wins, and known gaps.

2. Align incentives

Managers should see the same dashboards users see. Celebrate time saved and customer outcomes, not just usage. Tie team goals to adoption milestones that reflect quality, not just volume.

3. Prepare the next two workflows

You will be tempted to add many features to the first agent. Do not. Instead, document the next two workflows using the same criteria you used in phase 1. Sequence them by shared data and tools so you can reuse integrations.

4. Establish a governance cadence

You need a lightweight process that keeps you fast while preventing surprises. Propose a monthly review that covers:

- Access changes and least privilege checks

- Evaluation results and drift analysis

- Incident reviews and mitigations

- Planned model or tool upgrades and their risk assessment

A disciplined cadence builds credibility with security and legal without slowing the product team.

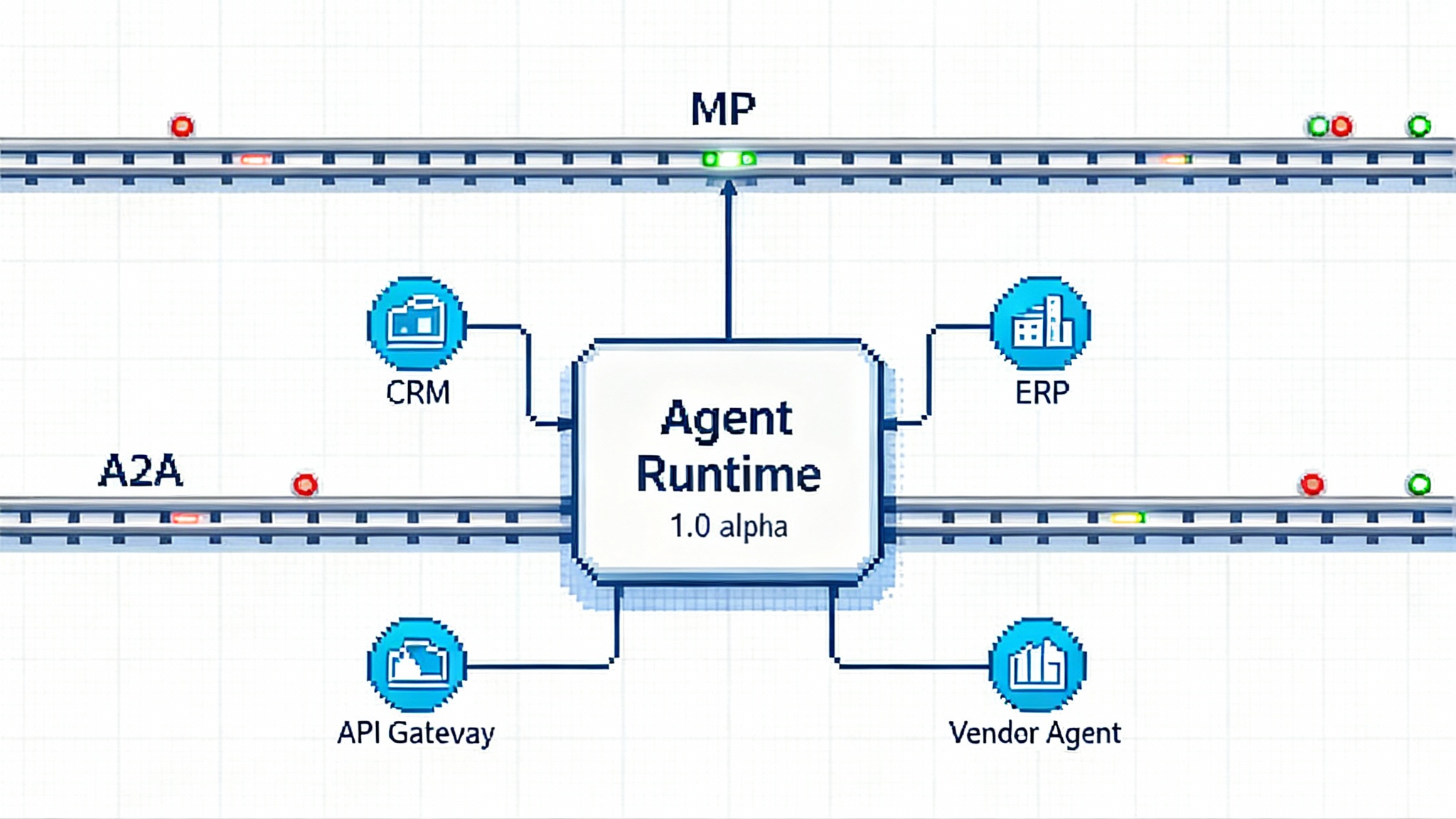

Reference architecture you can start with

Every enterprise is different, but a simple reference pattern works well in most contexts:

- Ingestion: connectors read from curated data products and event streams

- Retrieval: hybrid search over documented collections with freshness policies

- Reasoning: prompt templates with structured outputs and a fallback path

- Tools: a small set of typed actions with per tool permissions

- Orchestration: a workflow engine that logs every step with correlation IDs

- Oversight: evaluation jobs run on a schedule and on every change

- Interface: a thin UI for review and a plug in for the system of record

Keep boundaries explicit. Draw the line between retrieval and reasoning. Draw the line between the agent and tools. This makes failure modes observable and tractable.

Controls that matter most in practice

You do not need a hundred controls to be safe. You need the right ten. Prioritize:

- Authentication and purpose binding for every request

- Row level and column level filters on sensitive data

- Prompt provenance and template versioning

- Output schemas with strict validation

- Safe tool defaults and clear rollback procedures

- Immutable logs and retention policies

- Continuous red teaming aligned to the OWASP categories

These controls map cleanly to the threat list you defined in phase 1 and to the categories in the NIST framework. Keep the list visible in your tracker so security work stays first class.

Cost control without killing usefulness

Cost surprises are common in months two and three. Prevent them with:

- Token budgets per workflow and per user

- A tiered model policy: cheap default, smart upgrade when confidence or complexity warrants

- Caching for frequent prompts and retrieval results with freshness checks

- Batch processing for long running analysis jobs

Finance will love the predictability. Users will love that quality stays high where it matters.

What good looks like on day 90

By the end of this playbook you should be able to present:

- A single workflow where the agent reduces cycle time by 30 to 50 percent

- A dashboard that proves adoption and quality, not just usage counts

- An audit log that shows who did what, when, and why

- An evaluation suite that runs on every change and alerts on drift

- A backlog with two next workflows that reuse today’s plumbing

If you cannot show these artifacts, do not scale. Fix the gaps first. Scale only when the mechanism is sound.

Common pitfalls and how to avoid them

- Picking a vague goal: define one job and one metric or you will chase anecdotes

- Over connecting data: start with the source of truth, not every system that might help

- Shipping a black box: require citations, templates, and tools you can log

- Skipping security reviews: least privilege is cheaper than cleanup

- Measuring inputs, not outcomes: track cycle time, accuracy, and user adoption

The compounding effect of agents as products

Treat your agent like a product, not a project. Products have users, roadmaps, and guardrails. Projects end. Products compound. When you design for a single job, instrument outcomes, and build with explicit boundaries, you create a backbone you can reuse. Each new workflow becomes easier than the last. Your stack becomes simpler, not more tangled.

The organizations that win with agents in 2025 will not be the ones that demo the cleverest prompts. They will be the ones that earn trust through clarity, safety, and measurable outcomes. Start narrow, ship something real, and let results pull you forward.

Further reading inside this site

- Learn how suites accelerate delivery in suites as the fastest on ramp

- See how outcome first analytics evolves in proactive BI agents

- Review practical controls in agent-native security controls

One page checklist you can copy

- Use case: frequent, measurable, reversible

- Success: one metric, one threshold, one review cadence

- Data: source of truth only, documented retrieval policy

- Tools: few, typed, with per tool permissions

- Interface: thin review surface plus system of record plug in

- Security: least privilege, structured logs, mapped to OWASP

- Evaluation: curated test set, automated runs on every change

- Cost: token budgets, tiered models, caching plan

- Adoption: training, in app help, visible roadmap

Print it. Tape it next to your monitor. Run the play.

Conclusion: narrow the scope, widen the impact

Agents become valuable when they make a single workflow faster, safer, and easier the same way, every day. This playbook gives you a repeatable arc to get there. Define a job. Ship a safe MVP. Add a hop of autonomy. Scale with measurement and governance. Use the NIST AI Risk Management Framework to keep risk in bounds and the OWASP Top 10 for LLM Applications to focus your defenses. Do this once and the next two workflows will arrive faster with fewer surprises. That is how pilots turn into production.