LangChain 1.0 alpha: the production shift for agents

The LangChain and LangGraph 1.0 alpha releases signal a real shift from prototypes to production. With stable runtimes, typed messages, and emerging protocol rails like MCP and A2A, teams can ship durable agents with less risk and more control.

The stabilization moment finally arrives

On September 3, 2025, the LangChain team announced the first alpha releases for LangChain and LangGraph 1.0, a milestone that signals a new phase for enterprise agent orchestration. The promise is simple and decisive: stable abstractions for agent runtimes and a backward compatible path for existing apps. That combination reduces risk in a way prototypes never could, and it sets a clearer course for teams that need to ship agents into real environments. The LangChain and LangGraph 1.0 alpha announcement outlines the agent runtime foundations, message content upgrades, and the compatibility plan for both Python and JavaScript users.

This piece is not a changelog. It is a decision guide for product leaders and staff engineers who need to understand what changes with 1.0 alpha, why it matters for production, and how to move without breaking what already works.

Why 1.0 alpha matters more than a version number

Version numbers are easy to dismiss, but 1.0 alpha carries concrete meaning here.

- No breaking changes in LangGraph relative to recent versions. For teams that care about durability, this is a strong signal that long running workflows will not be thrown off track during migration.

- A reorganized LangChain centered on an agent primitive. Instead of a sprawl of loosely coupled patterns, the framework now emphasizes a clear agent abstraction built on LangGraph.

- A standardized message model with typed content blocks. Reasoning steps, citations, tool calls, and multimodal payloads share a common surface, which makes orchestration legible and observability more reliable.

Together, these choices make code paths easier to reason about, keep behavior consistent across environments, and shrink the gap between a demo and a deployment. The legacy package gives you a backward compatible path so older chains and agents keep running while you adopt the new primitives. That is not glamorous, but it is exactly what large systems need to upgrade safely.

From clever demos to dependable infrastructure

LangGraph’s value has always been control and durability. An agent that can pause, resume, checkpoint, and fork is an agent you can trust with real workloads. With human in the loop support, streaming, and multi user sessions, you can map messy business processes to a stateful graph rather than a brittle chain of prompts.
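The pause, resume, checkpoint, and fork behavior described above can be illustrated without any framework. The following is a minimal plain-Python sketch, not the LangGraph API. `GraphRun`, `Checkpoint`, and the refund nodes are hypothetical, and state is held in memory rather than in a durable checkpointer.

```python
import copy
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional, Tuple

State = Dict[str, Any]
Node = Callable[[State], State]


@dataclass
class Checkpoint:
    step: int      # index of the node that runs next
    state: State   # deep copy of the state before that node runs


@dataclass
class GraphRun:
    nodes: List[Node]
    history: List[Checkpoint] = field(default_factory=list)

    def run(self, state: State, start: int = 0,
            pause_before: Optional[int] = None) -> State:
        for step in range(start, len(self.nodes)):
            # Checkpoint before every node so any step can be replayed later.
            self.history.append(Checkpoint(step, copy.deepcopy(state)))
            if step == pause_before:
                return state  # paused: a human can inspect and edit before resuming
            state = self.nodes[step](state)
        return state

    def resume(self, cp: Checkpoint, edits: Optional[State] = None) -> State:
        # Continue from a checkpoint, optionally applying human edits first.
        state = {**copy.deepcopy(cp.state), **(edits or {})}
        return self.run(state, start=cp.step)

    def fork(self, cp: Checkpoint,
             edits: Optional[State] = None) -> Tuple["GraphRun", State]:
        # Branch into a fresh run so the original history stays untouched.
        branch = GraphRun(self.nodes)
        return branch, branch.resume(cp, edits)


# Hypothetical three-step refund workflow.
def draft(state: State) -> State:
    return {**state, "draft": f"refund for {state['order']}"}

def approve(state: State) -> State:
    return {**state, "approved": state.get("approver") is not None}

def send(state: State) -> State:
    return {**state, "sent": state["approved"]}


run = GraphRun([draft, approve, send])
paused = run.run({"order": "A-17"}, pause_before=1)  # stop before approval
cp = run.history[-1]                                 # checkpoint at step 1
final = run.resume(cp, {"approver": "dana"})         # human approves, run completes
branch, alt = run.fork(cp)                           # replay the same point with no approver
```

In LangGraph itself, these responsibilities belong to its persistence (checkpointer) and human in the loop features. Consult its documentation for the actual interfaces.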

Typed message content in LangChain 1.0 unifies how providers expose structured outputs. That reduces the constant friction of schema drift as model APIs evolve. It also gives you a consistent surface for policy enforcement, evaluation, and monitoring. When messages are typed, you can parse them without guesswork, attach guardrails where they belong, and stitch traces across services with confidence.
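A small parser shows why typed content helps. This sketch uses hypothetical block shapes: `text`, `reasoning`, and `tool_call` with the fields shown. LangChain 1.0's actual content block schema may name fields differently. The point is that once blocks are typed, a guardrail attaches to tool calls specifically and trace metadata comes for free.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Union


@dataclass(frozen=True)
class TextBlock:
    text: str


@dataclass(frozen=True)
class ReasoningBlock:
    reasoning: str


@dataclass(frozen=True)
class ToolCallBlock:
    name: str
    args: Dict[str, Any]
    id: str


Block = Union[TextBlock, ReasoningBlock, ToolCallBlock]


def parse_block(raw: Dict[str, Any]) -> Block:
    """Turn a provider-normalized dict into a typed block, rejecting anything unexpected."""
    kind = raw.get("type")
    if kind == "text":
        return TextBlock(str(raw["text"]))
    if kind == "reasoning":
        return ReasoningBlock(str(raw["reasoning"]))
    if kind == "tool_call":
        if not isinstance(raw.get("args"), dict):
            raise ValueError("tool_call args must be an object")
        return ToolCallBlock(str(raw["name"]), raw["args"], str(raw["id"]))
    raise ValueError(f"unknown content block type: {kind!r}")


ALLOWED_TOOLS = {"get_order"}  # hypothetical guardrail configuration


def check_tool_calls(blocks: List[Block]) -> List[ToolCallBlock]:
    """Guardrail attached where it belongs: on tool calls, not on free text."""
    calls = [b for b in blocks if isinstance(b, ToolCallBlock)]
    for call in calls:
        if call.name not in ALLOWED_TOOLS:
            raise PermissionError(f"tool {call.name!r} is not allowed")
    return calls


message = [
    {"type": "reasoning", "reasoning": "Need the order status first."},
    {"type": "tool_call", "name": "get_order", "args": {"order_id": "A-17"}, "id": "call_1"},
    {"type": "text", "text": "Checking your order now."},
]
blocks = [parse_block(raw) for raw in message]
calls = check_tool_calls(blocks)
span_attrs = {"block_types": [type(b).__name__ for b in blocks]}  # structured trace metadata
```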

The real win is incremental adoption. Because the 1.0 alpha is designed to avoid breaking existing flows, you can apply these capabilities step by step, then scale to more complex topologies when you are ready.

Protocol rails that change the game: MCP and A2A

Framework stability is only half the story. The other half is the emergence of shared protocols for how agents connect to tools and to each other.

- MCP, the Model Context Protocol, standardizes how agents discover and call tools, files, and services. It turns ad hoc integrations into a discoverable, monitorable catalog.

- A2A, the Agent to Agent protocol, standardizes how agents delegate tasks and exchange results across organizational boundaries. It brings capability discovery, structured messages, and identity to multi agent collaboration.

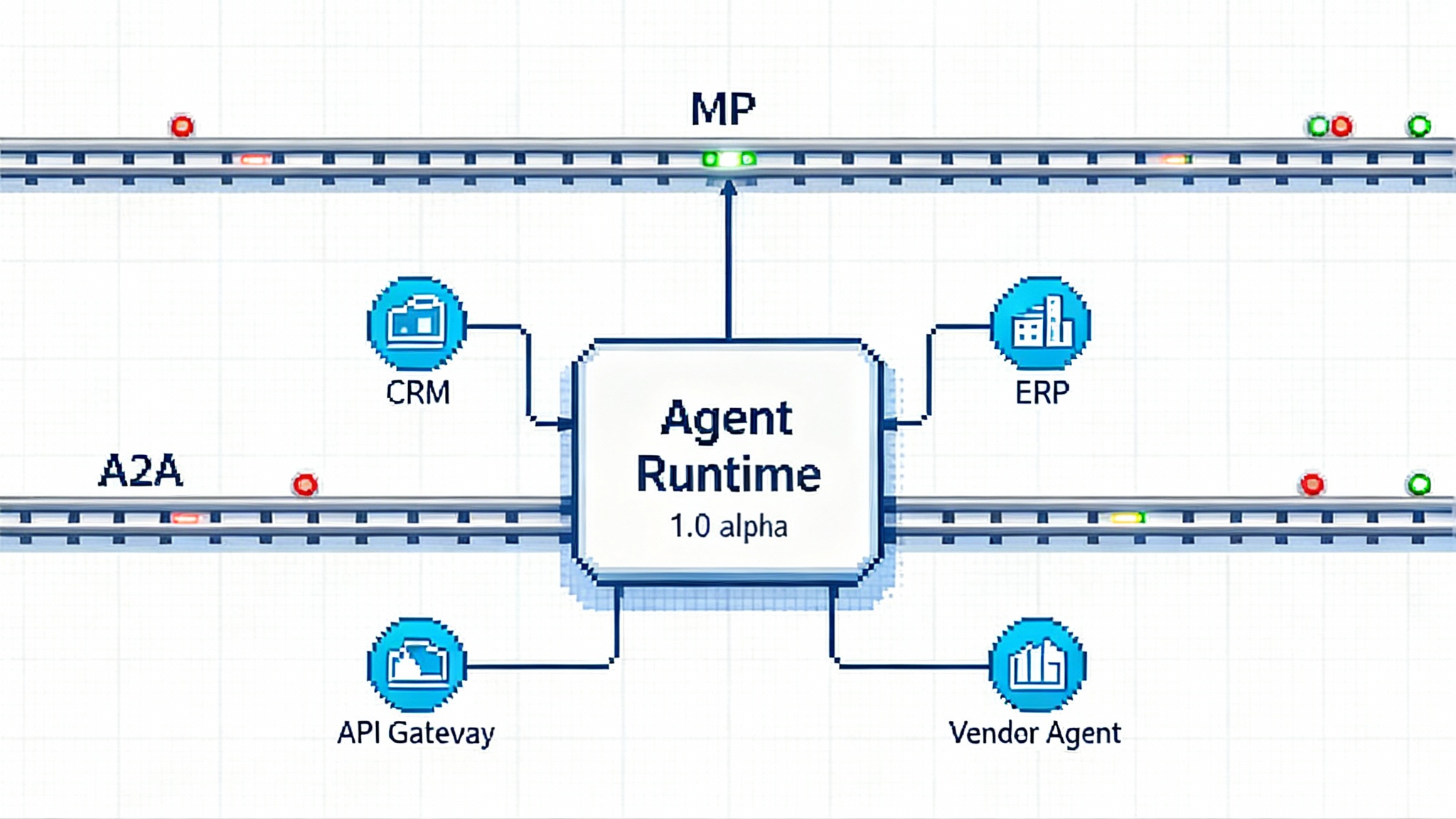

Think of MCP as the vertical rail that connects agents to systems of record and operational tools, and A2A as the horizontal rail that lets specialized agents coordinate. Combined with a stable runtime, these rails let you treat agents like first class distributed components rather than one off experiments.
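The vertical rail is concrete at the wire level. MCP messages are JSON-RPC 2.0, and tools are discovered and invoked with the `tools/list` and `tools/call` methods. The sketch below shows only the message shapes. A toy in-process `handle` function stands in for a real MCP server and transport, and the `lookup_invoice` tool is hypothetical. Production code would use an MCP SDK rather than hand-built messages.

```python
import itertools
import json
from typing import Any, Dict, Optional

_ids = itertools.count(1)


def jsonrpc_request(method: str, params: Optional[Dict[str, Any]] = None) -> str:
    """Serialize a JSON-RPC 2.0 request, the message format MCP is built on."""
    msg: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)


# Hypothetical tool catalog held by a toy in-process server.
TOOLS = {
    "lookup_invoice": {
        "description": "Fetch an invoice status by id",
        "inputSchema": {
            "type": "object",
            "properties": {"invoice_id": {"type": "string"}},
            "required": ["invoice_id"],
        },
    }
}


def handle(raw: str) -> Dict[str, Any]:
    """Toy server: answers tools/list and tools/call; a real MCP server does much more."""
    req = json.loads(raw)
    if req["method"] == "tools/list":
        result = {"tools": [{"name": name, **spec} for name, spec in TOOLS.items()]}
    elif req["method"] == "tools/call":
        if req["params"]["name"] not in TOOLS:
            return {"jsonrpc": "2.0", "id": req["id"],
                    "error": {"code": -32602, "message": "Invalid params"}}
        invoice_id = req["params"]["arguments"]["invoice_id"]
        result = {"content": [{"type": "text", "text": f"invoice {invoice_id} is paid"}],
                  "isError": False}
    else:
        return {"jsonrpc": "2.0", "id": req["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": req["id"], "result": result}


catalog = handle(jsonrpc_request("tools/list"))
reply = handle(jsonrpc_request("tools/call", {"name": "lookup_invoice",
                                              "arguments": {"invoice_id": "INV-9"}}))
```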

Adjacent vendor moves validate the direction

Protocols matter when platforms adopt them. In September, Boomi added MCP support across its integration platform and Agentstudio, letting teams expose APIs as MCP tools and import shared MCP services with straightforward setup. The Boomi September 2025 release means agents built on LangChain or any other framework can tap into Boomi managed APIs without custom wrappers while platform teams enforce policies and telemetry at a centralized control plane.

Adoptions like this reduce integration tax and move governance from code into platform policy. As more API gateways, event streaming platforms, and iPaaS vendors publish MCP endpoints or registries, the cost of connecting agents to enterprise systems drops. Combine that with A2A for inter agent delegation and you start to see an agentic fabric that spans teams, vendors, and clouds.

For a view of how enterprise platforms are evolving to meet production needs, our deep dive on Algolia’s approach to orchestration is a useful companion. It traces how Algolia moves from demo to enterprise agents.

The build versus buy calculus is shifting

A year ago, the safest route for most teams was to buy a point solution per use case. Building meant owning glue code for tools, memory, routing, and observability. Today the calculus is changing:

- Build confidently on a stable runtime. LangGraph provides durable execution, checkpoints, and explicit control over side effects. That lowers the ongoing cost of maintaining your own orchestration.

- Rent integrations instead of hand coding them. MCP support from platforms like Boomi means you can snap agents into curated tool catalogs and inherit authentication, quotas, and audit.

- Mix build and buy. With A2A and MCP, you can treat vendor agents and your own agents as peers. Outsource subtasks to an external agent that advertises its capabilities in an A2A Agent Card, while keeping your core logic in house.

This opens a middle path: build the parts that differentiate your product, buy the rails and catalogs that would otherwise become your maintenance burden.

What production ready must now include

Shipping to production is not the same as passing a demo. Here is a pragmatic checklist for the new baseline.

1) Identity and access

- Agent identity. Assign every agent a unique, attestable identity and a way to sign or verify messages. Treat agent identities like service accounts with rotation and revocation.

- User to agent trust. Map human identities to agent actions through scopes and consent. If an agent books travel or files a case, maintain a chain of custody from the user to the call.

- Tool scoping. Expose least privilege MCP tools with explicit allow lists and per tool policies. Manage tool registries like production infrastructure with change control and audits.
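Least privilege tool scoping reduces to a default deny check that runs before any MCP tool call is forwarded. This is a minimal sketch built on a hypothetical in-memory `ToolRegistry`. In production, the allow lists and per-tool policies would live in your gateway or registry under change control.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, FrozenSet


@dataclass(frozen=True)
class ToolPolicy:
    max_rows: int = 100           # hypothetical per-tool result cap
    writes_allowed: bool = False  # read-only unless stated otherwise


@dataclass
class ToolRegistry:
    policies: Dict[str, ToolPolicy]
    allow_lists: Dict[str, FrozenSet[str]] = field(default_factory=dict)  # agent id -> tools

    def authorize(self, agent_id: str, tool: str, *, write: bool = False, rows: int = 1) -> None:
        # Default deny: unknown agents and unlisted tools get nothing.
        if tool not in self.allow_lists.get(agent_id, frozenset()):
            raise PermissionError(f"{agent_id} may not call {tool}")
        policy = self.policies.get(tool)
        if policy is None:
            raise PermissionError(f"{tool} has no registered policy")
        if write and not policy.writes_allowed:
            raise PermissionError(f"{tool} is read-only")
        if rows > policy.max_rows:
            raise PermissionError(f"{tool} is capped at {policy.max_rows} rows")

    def allows(self, agent_id: str, tool: str, **kwargs: Any) -> bool:
        """Boolean convenience wrapper around authorize()."""
        try:
            self.authorize(agent_id, tool, **kwargs)
            return True
        except PermissionError:
            return False


registry = ToolRegistry(
    policies={"crm.read": ToolPolicy(max_rows=50), "crm.update": ToolPolicy(writes_allowed=True)},
    allow_lists={"agent:support-summarizer": frozenset({"crm.read"})},
)
```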

2) Observability and operations

- Tracing across boundaries. Trace every step from planner to worker to tool. Typed content in LangChain 1.0 makes spans more structured, which helps when you stitch traces across frameworks.

- Durable state and replay. Use LangGraph checkpoints to reproduce incidents, apply hotfixes without losing progress, and persist intermediate artifacts for forensics.

- Live debugging hooks. Expose pause, intervene, and resume controls, plus event streams for analysis and dashboards. Developers and operators should see the same state.
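Tracing across boundaries depends on passing a trace ID and parent span ID along with every hop. The sketch below shows that propagation using only the standard library. `span` and `propagation_headers` are hypothetical helpers. In practice you would use OpenTelemetry with W3C Trace Context headers rather than hand-rolled IDs.

```python
import contextvars
import time
import uuid
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Dict, Iterator, List, Optional


@dataclass
class Span:
    name: str
    trace_id: str
    span_id: str
    parent_id: Optional[str]
    attrs: Dict[str, str] = field(default_factory=dict)
    duration_s: float = 0.0


SPANS: List[Span] = []  # stand-in for an exporter to your observability stack
_current = contextvars.ContextVar("current_span", default=None)


@contextmanager
def span(name: str, *, trace_id: Optional[str] = None,
         parent_id: Optional[str] = None, **attrs: str) -> Iterator[Span]:
    """Open a span; inherit trace and parent from the active span unless given explicitly."""
    parent = _current.get()
    s = Span(
        name=name,
        trace_id=trace_id or (parent.trace_id if parent else uuid.uuid4().hex),
        span_id=uuid.uuid4().hex[:16],
        parent_id=parent_id or (parent.span_id if parent else None),
        attrs=dict(attrs),
    )
    token = _current.set(s)
    start = time.perf_counter()
    try:
        yield s
    finally:
        s.duration_s = time.perf_counter() - start
        _current.reset(token)
        SPANS.append(s)


def propagation_headers() -> Dict[str, str]:
    """Context to send with a cross-service call."""
    s = _current.get()
    return {"trace_id": s.trace_id, "parent_span_id": s.span_id} if s else {}


# Planner and worker run in one process; a tool service then continues the same trace.
with span("planner", session_id="s-42"):
    with span("worker.crm"):
        headers = propagation_headers()
with span("tool.lookup_invoice", trace_id=headers["trace_id"],
          parent_id=headers["parent_span_id"]):
    pass
```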

For more on the operational side, especially when agents run near devices and edge services, our observability guide shows how to turn shadow AI into a productivity engine.

3) Governance and safety

- Policy guardrails. Enforce PII rules and data residency at the tool boundary, not only inside prompts. MCP is a natural choke point for gating access to systems of record.

- Evaluation at deployment. Run scenario based evaluations on every change to models, prompts, or tool catalogs. Focus on failure modes like prompt injection, tool confusion, and over delegation.

- Audit and retention. Record decisions, inputs, outputs, and tool calls with retention policies that match your regulatory domain. Be able to explain why the agent acted.
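Enforcing PII rules at the tool boundary means wrapping the tool itself, so the agent never sees raw values regardless of what the prompt says. This sketch also records an audit entry for every call. The regex patterns are illustrative only. `guarded_tool`, `AUDIT_LOG`, and `lookup_customer` are hypothetical, and real PII detection needs vetted, locale-aware rules.

```python
import re
from datetime import datetime, timezone
from typing import Any, Callable, Dict, List

# Illustrative patterns only; not a complete PII detector.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

AUDIT_LOG: List[Dict[str, Any]] = []  # stand-in for an append-only store with retention rules


def redact(text: str) -> str:
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[REDACTED:{label}]", text)
    return text


def guarded_tool(name: str, fn: Callable[..., str]) -> Callable[..., str]:
    """Wrap a tool so the agent only sees redacted output and every call is audited."""
    def wrapper(agent_id: str, **kwargs: str) -> str:
        output = redact(fn(**kwargs))  # the system of record still receives the real arguments
        AUDIT_LOG.append({
            "ts": datetime.now(timezone.utc).isoformat(),
            "agent": agent_id,
            "tool": name,
            "args": {k: redact(v) for k, v in kwargs.items()},
            "output": output,
        })
        return output
    return wrapper


def lookup_customer(customer_id: str) -> str:
    # Hypothetical system-of-record call.
    return f"Customer {customer_id}: jane@example.com, SSN 123-45-6789"


lookup = guarded_tool("crm.lookup_customer", lookup_customer)
result = lookup("agent:support", customer_id="C-9")
```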

4) Cost and performance discipline

- Bounded loops. Cap steps per task and require explicit approval before an agent runs past that cap. Combine caching with structured retrieval to avoid recomputation.

- SLA aware routing. Choose models and tools based on latency budgets and precision needs. Fail fast when dependencies degrade and surface partial results where appropriate.
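Both disciplines fit in a few lines. This is a minimal sketch with hypothetical route names, latency figures, and quality tiers. `pick_route` fails fast by returning `None` when nothing healthy fits the budget. `run_bounded` stops at the step cap unless a caller-supplied approval allows a single extension.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass(frozen=True)
class Route:
    name: str
    p95_latency_ms: int  # hypothetical observed latency
    quality: int         # hypothetical precision tier, higher is better
    healthy: bool = True


def pick_route(routes: List[Route], budget_ms: int, min_quality: int) -> Optional[Route]:
    """Fastest healthy route meeting the quality bar within budget; None means fail fast."""
    fits = [r for r in routes
            if r.healthy and r.p95_latency_ms <= budget_ms and r.quality >= min_quality]
    return min(fits, key=lambda r: r.p95_latency_ms, default=None)


def run_bounded(step: Callable[[int], bool], max_steps: int,
                approve_extension: Callable[[int], bool],
                extra_steps: int = 2) -> Tuple[bool, int]:
    """Run step(i) until it reports done. At the cap, one extension needs explicit approval."""
    budget, extended, i = max_steps, False, 0
    while True:
        if i == budget:
            if extended or not approve_extension(i):
                return False, i  # stop; the caller surfaces partial results
            budget, extended = budget + extra_steps, True
        if step(i):
            return True, i + 1
        i += 1


routes = [
    Route("large-model", p95_latency_ms=2500, quality=3),
    Route("small-model", p95_latency_ms=400, quality=1),
    Route("mid-model", p95_latency_ms=900, quality=2, healthy=False),
]
tight = pick_route(routes, budget_ms=1000, min_quality=2)    # nothing fits: fail fast
relaxed = pick_route(routes, budget_ms=3000, min_quality=2)
finished = run_bounded(lambda i: i == 3, max_steps=3, approve_extension=lambda i: True)
stopped = run_bounded(lambda i: i == 3, max_steps=3, approve_extension=lambda i: False)
```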

Migration paths that respect reality

Most teams do not rewrite. Here is a sequence that minimizes risk while adopting LangChain 1.0 alpha and the emerging rails.

- Start where you are. Keep existing LangChain flows running through the legacy package while you pilot the new agent primitive for one use case. Measure latency, stability, and trace quality.

- Move memory to the runtime. Replace ad hoc session stores with LangGraph persistence. Checkpoint long running tasks and add resumability to your incident playbooks.

- Wrap tools as MCP. Publish internal APIs as MCP tools behind your API gateway. Bring them into agents through a registry with scopes, quotas, and explicit approvals.

- Introduce A2A selectively. Identify one cross team or cross vendor collaboration where delegation is common. Model it as an A2A task sent to a worker whose Agent Card advertises the needed capability, then monitor the interaction for leakage and drift.

- Raise the governance bar. Add allow lists, redaction, and human in the loop policies at the tool layer. Instrument everything. Make failure visible and reversible.
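Human in the loop policies at the tool layer can start as a gate that lets reads through and parks writes until someone approves them. This is a minimal sketch. `ApprovalGate` and both tools are hypothetical. A real deployment would persist pending tickets, for example in the runtime's checkpoints, instead of holding them in memory.

```python
import uuid
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Set, Tuple


@dataclass
class ApprovalGate:
    tools: Dict[str, Callable[..., Any]]
    needs_approval: Set[str]
    pending: Dict[str, Tuple[str, Dict[str, Any]]] = field(default_factory=dict)
    decisions: List[Dict[str, Any]] = field(default_factory=list)  # visible record for operators

    def call(self, tool: str, **args: Any) -> Dict[str, Any]:
        if tool in self.needs_approval:
            ticket = uuid.uuid4().hex[:8]
            self.pending[ticket] = (tool, args)  # nothing executes yet
            return {"status": "pending_approval", "ticket": ticket}
        return {"status": "ok", "result": self.tools[tool](**args)}

    def decide(self, ticket: str, approved: bool, reviewer: str) -> Dict[str, Any]:
        tool, args = self.pending.pop(ticket)
        self.decisions.append({"ticket": ticket, "tool": tool,
                               "approved": approved, "reviewer": reviewer})
        if not approved:
            return {"status": "rejected", "tool": tool}
        return {"status": "ok", "result": self.tools[tool](**args)}


refunds: List[Tuple[str, int]] = []


def get_order(order_id: str) -> Dict[str, Any]:
    return {"id": order_id, "total": 40}  # hypothetical read tool


def issue_refund(order_id: str, amount: int) -> str:
    refunds.append((order_id, amount))  # hypothetical write tool with side effects
    return "refunded"


gate = ApprovalGate(tools={"get_order": get_order, "issue_refund": issue_refund},
                    needs_approval={"issue_refund"})
order = gate.call("get_order", order_id="A-17")                  # reads run immediately
request = gate.call("issue_refund", order_id="A-17", amount=40)  # writes wait for a human
nothing_yet = list(refunds)
outcome = gate.decide(request["ticket"], approved=True, reviewer="dana")
```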

If you are watching how agent roles evolve from dashboards to doers on the front line, our Space Agent shift analysis pairs well with these migration steps.

Reference architectures you can copy

1) Single agent with a tool belt

- One agent built with the 1.0 agent primitive.

- Tools exposed via MCP and curated in a registry. An API gateway enforces authentication, rate limits, and schema validation.

- LangGraph runtime provides persistence and human in the loop steps.

- Traces flow to your observability stack. Secrets and keys are rotated centrally.

When to use: a focused task with a predictable set of systems, such as marketing ops, procurement approvals, or incident summarization.

2) Planner worker pattern over A2A

- A planner decomposes work and delegates tasks over A2A to specialized worker agents owned by other teams or vendors.

- Workers use MCP tools specific to their domain, like CRM, ERP, or ticketing.

- The planner handles retries, timeouts, and synthesis. Every delegation is signed and scoped.

When to use: cross domain workflows where you do not control all agents, such as quote to cash or supply chain exceptions.
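The planner's responsibilities can be sketched in plain Python, though this is not an A2A SDK. The Agent Card dictionaries are heavily simplified, `fake_send` stands in for a real transport, and `find_agent`, `delegate`, and the worker names are hypothetical. One design choice is worth noting: the timeout is handed to the transport, so a slow worker fails that attempt instead of blocking the planner.

```python
import time
from typing import Any, Callable, Dict, List, Optional

# Simplified stand-ins for A2A Agent Cards; real cards carry more fields,
# such as the service URL and authentication requirements.
AGENT_CARDS: List[Dict[str, Any]] = [
    {"name": "crm-worker", "skills": [{"id": "account_lookup"}]},
    {"name": "erp-worker", "skills": [{"id": "invoice_status"}]},
]

Send = Callable[[str, str, float], str]


def find_agent(skill_id: str) -> str:
    """Capability discovery: pick the first agent whose card advertises the skill."""
    for card in AGENT_CARDS:
        if any(skill["id"] == skill_id for skill in card["skills"]):
            return card["name"]
    raise LookupError(f"no agent advertises {skill_id!r}")


def delegate(send: Send, agent: str, task: str, timeout_s: float, retries: int) -> str:
    """Send one task, passing the timeout to the transport and retrying transient failures."""
    last_error: Optional[Exception] = None
    for attempt in range(retries + 1):
        try:
            return send(agent, task, timeout_s)
        except (TimeoutError, ConnectionError) as exc:
            last_error = exc
            time.sleep(0.01 * 2 ** attempt)  # exponential backoff
    raise RuntimeError(f"{agent} failed after {retries + 1} attempts") from last_error


attempts: Dict[str, int] = {}


def fake_send(agent: str, task: str, timeout_s: float) -> str:
    """Hypothetical transport; the ERP worker times out once, then succeeds."""
    attempts[agent] = attempts.get(agent, 0) + 1
    if agent == "erp-worker" and attempts[agent] == 1:
        raise TimeoutError(f"{agent} exceeded {timeout_s}s")
    return f"{agent}: {task} done"


def plan_and_run(subtasks: Dict[str, str]) -> str:
    results = [delegate(fake_send, find_agent(skill), task, timeout_s=2.0, retries=2)
               for skill, task in subtasks.items()]
    return "; ".join(results)  # synthesis, reduced to concatenation for the sketch


summary = plan_and_run({"account_lookup": "find ACME", "invoice_status": "check INV-9"})
```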

3) Aggregator with policy guardrails

- An aggregator exposes a single surface to end users while brokering calls to internal and vendor agents.

- Policy engines enforce data classification, redaction, and routing choices before any cross agent call.

- Observability stitches all traces under a common session ID.

When to use: customer facing scenarios where privacy, non repudiation, and latency matter, such as support agents and financial assistants.
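The aggregator's policy step can be reduced to one idea: classify the payload before any cross agent call, send it only to agents cleared for that level, and stamp every decision with the session ID. This is a minimal sketch. The classification levels, field-based rules, and agent endpoints are all hypothetical, and a real policy engine would apply far richer rules.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Any, Dict, List


class Level(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2


@dataclass(frozen=True)
class AgentEndpoint:
    name: str
    vendor: bool      # True when the agent runs outside your organization
    max_level: Level  # highest classification it is cleared to receive


SENSITIVE_FIELDS = {"account_number", "ssn", "balance"}  # hypothetical rule set


def classify(payload: Dict[str, str]) -> Level:
    if any(key in payload for key in SENSITIVE_FIELDS):
        return Level.CONFIDENTIAL
    return Level.INTERNAL if "customer_id" in payload else Level.PUBLIC


def route(payload: Dict[str, str], candidates: List[AgentEndpoint],
          session_id: str) -> Dict[str, Any]:
    """Policy check before any cross agent call; every decision carries the session ID."""
    level = classify(payload)
    cleared = [a for a in candidates if a.max_level >= level]
    if not cleared:
        return {"session_id": session_id, "decision": "blocked", "level": level.name}
    chosen = min(cleared, key=lambda a: a.vendor)  # prefer internal agents (False sorts first)
    return {"session_id": session_id, "decision": "send",
            "agent": chosen.name, "level": level.name}


faq_bot = AgentEndpoint("vendor-faq-bot", vendor=True, max_level=Level.PUBLIC)
scheduler = AgentEndpoint("vendor-scheduler", vendor=True, max_level=Level.INTERNAL)
billing = AgentEndpoint("internal-billing", vendor=False, max_level=Level.CONFIDENTIAL)

public_q = route({"question": "store hours"}, [faq_bot], "s-7")
account_q = route({"customer_id": "C-9", "balance": "120"}, [faq_bot, scheduler], "s-7")
booking_q = route({"customer_id": "C-9"}, [faq_bot, scheduler, billing], "s-7")
```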

Risks to manage as you scale

- Prompt and tool injection. Treat every tool output as untrusted input. Validate schemas, sanitize data, and isolate agent sandboxes.

- Identity confusion. Never reuse user tokens as agent identity. Separate the two and sign every delegation.

- Drift and hidden state. Long running sessions collect stale context. Use checkpoint compaction and prefer explicit state machines for critical steps.

- Vendor swing. MCP and A2A reduce lock in, but they do not erase it. Keep an exit strategy for critical tools and agents.
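Keeping agent identity separate from user identity, and signing every delegation, can be sketched with the standard library. Several parts are assumptions: the token format, the claim names (borrowed from JWT conventions), and `AGENT_KEYS` are hypothetical. HMAC also requires the verifier to hold the same secret. Across organizations you would more likely use asymmetric signatures, for example JWS with per-agent key pairs.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Any, Dict, List

# Hypothetical per-agent secrets; real keys live in a secrets manager and rotate.
AGENT_KEYS: Dict[str, bytes] = {"agent:planner": b"planner-demo-key"}


def sign_delegation(agent_id: str, on_behalf_of: str, scopes: List[str],
                    task: str, ttl_s: int = 300) -> str:
    """The agent signs with its own key; the user appears only as the subject."""
    claims = {"iss": agent_id, "sub": on_behalf_of, "scopes": scopes,
              "task": task, "exp": int(time.time()) + ttl_s}
    body = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode()).decode()
    sig = hmac.new(AGENT_KEYS[agent_id], body.encode(), hashlib.sha256).hexdigest()
    return f"{body}.{sig}"


def verify_delegation(token: str, issuer: str, required_scope: str) -> Dict[str, Any]:
    """Check the signature before trusting any claim, then issuer, expiry, and scope."""
    key = AGENT_KEYS.get(issuer)
    if key is None:
        raise PermissionError("unknown issuing agent")
    body, sig = token.rsplit(".", 1)
    expected = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, sig):
        raise PermissionError("bad signature")
    claims = json.loads(base64.urlsafe_b64decode(body))
    if claims["iss"] != issuer or claims["exp"] < time.time():
        raise PermissionError("wrong issuer or expired delegation")
    if required_scope not in claims["scopes"]:
        raise PermissionError(f"scope {required_scope!r} not granted")
    return claims


def is_valid(token: str, issuer: str, required_scope: str) -> bool:
    try:
        verify_delegation(token, issuer, required_scope)
        return True
    except PermissionError:
        return False


token = sign_delegation("agent:planner", "user:dana", ["crm.read"], "summarize ACME account")
claims = verify_delegation(token, "agent:planner", "crm.read")
tampered = token[:-1] + ("0" if token[-1] != "0" else "1")
```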

What to watch between now and GA

The LangChain team has signaled late October 2025 as the target for the stable 1.0 release. Expect additional polish on the content block model, more prebuilt patterns on top of LangGraph, and deeper integrations for popular providers. On the ecosystem side, watch for more MCP registries to appear inside API gateways and iPaaS products, and for A2A discovery and identity to settle into repeatable patterns. The direction is clear. The rails and the runtime are converging.

The bottom line and next steps

The 1.0 alpha of LangChain and LangGraph marks a stabilization moment for enterprise agents. With a durable agent runtime, standardized message content, and a credible compatibility path, teams can take on production use cases without betting the farm. Add MCP for tool access and A2A for cross boundary delegation, and the build versus buy decision no longer forces extremes.

If you have been waiting for the agent stack to settle, your wait is ending. Pick a workflow with measurable value, wrap your systems as MCP tools, delegate where you must over A2A, and let a stable runtime carry the load. Then scale with confidence.

For additional perspective on how enterprise platforms are industrializing agents, revisit how Algolia framed the leap from demo to enterprise agents and how observability upgrades can turn shadow AI into a productivity engine.