ProRata’s Gist Answers Brings Publisher‑Owned AI Search

ProRata's Gist Answers puts AI search on publisher sites with licensed retrieval, citations, and revenue share. Learn how it works, what to ask in due diligence, and how to use a 90-day plan to pilot it and measure impact.

Publisher AI search grows up

On September 5, 2025, ProRata.ai announced Gist Answers, a product that lets publishers embed AI search on their own sites and decide whether answers draw only from their own archive or also from a licensed network of roughly 750 publishers. The company also announced a $40 million raise to accelerate rollout and said publishers share in the revenue these AI experiences generate. Axios detailed the raise and launch specifics, including early partners and a promised 50/50 revenue share.

The timing matters. AI overviews in search engines, chatbots that satisfy intent without a click, and a fog of unsettled licensing disputes have pushed publishers to pick a lane. Either you let aggregators summarize your reporting, or you summarize it yourself and keep readers on your pages. Gist Answers is a bet on the latter.

What Gist Answers is and how it differs

At its simplest, Gist Answers is an on-site search and answer box. A reader types a question, the system retrieves relevant passages from a permissioned corpus, and then it generates a short answer with citations that link back to source pages. The main control sits in the publisher’s hands: you can constrain answers to your own archive, or you can opt into a broader licensed network when you need coverage and breadth.

Three product choices stand out:

- Retrieval first, generation second. Answers are grounded in documents you have rights to use. That lowers hallucination risk and makes attribution straightforward.

- Compensation is built in. ProRata positions the module as free to implement and revenue-positive through ad units that sit beside answers and a share paid to contributing licensors.

- Distribution without losing the relationship. Because the box lives on your site, the session stays in your product. You keep analytics, conversions, and brand context.

This setup is not just another chatbot bolted to a page. It is a search product that treats your archive as the primary knowledge base and uses a network index to raise answer quality where needed.

RAG is the pattern, implementation wins the day

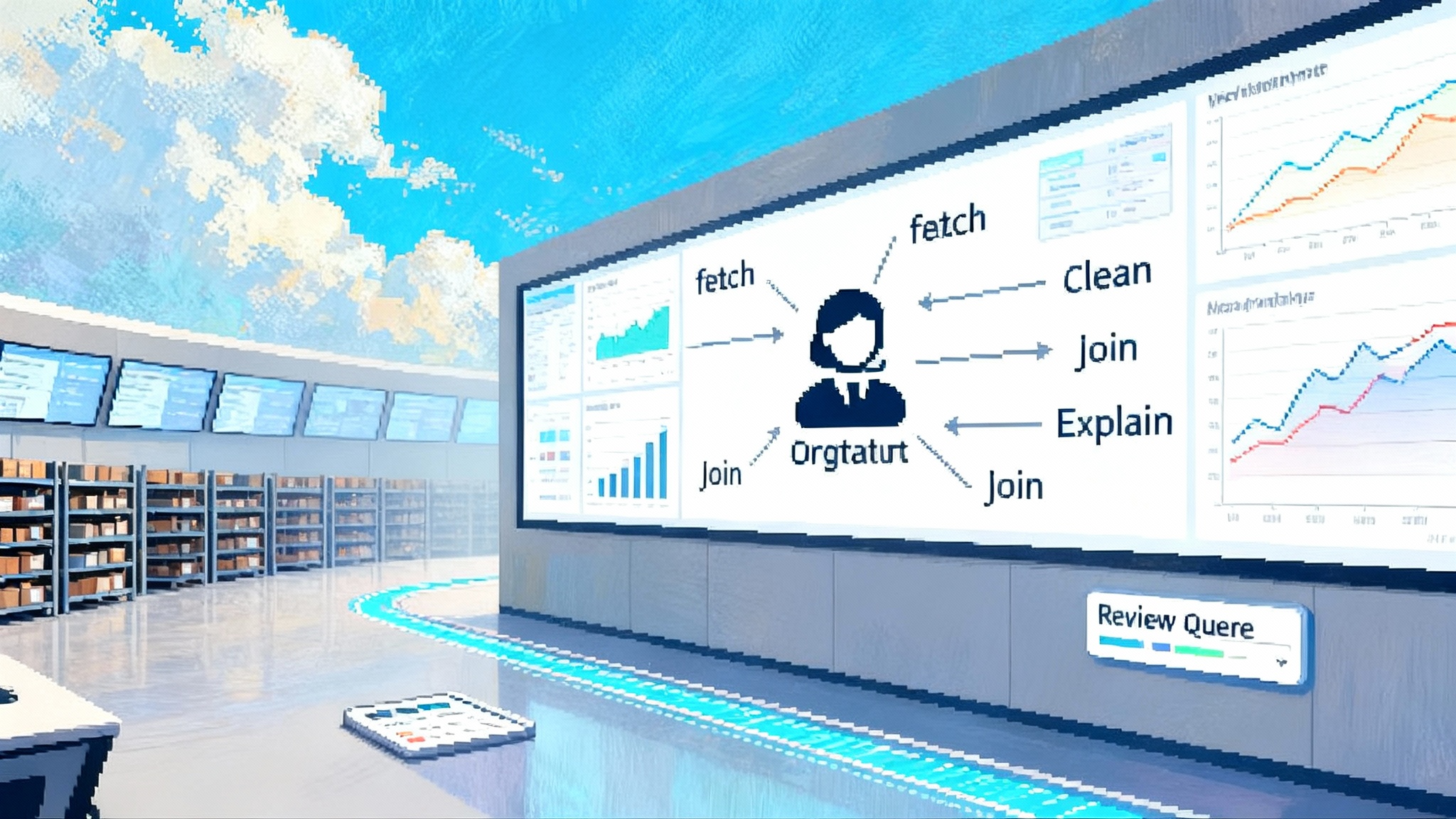

Retrieval-augmented generation (RAG) is now the default pattern for applying large language models to publisher content. The recipe is familiar: index your archive, retrieve the best passages at query time, and prompt a model to synthesize a faithful, source-linked answer.
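Here is a minimal Python sketch of the index, retrieve, and prompt steps. It also includes the own-archive versus licensed-network dial. This is not ProRata's implementation. The word-window chunker, the keyword-overlap scorer (a stand-in for a real dense or hybrid retriever), the `own`/`network` source labels, and the prompt wording are all illustrative assumptions. The model call is left out so the sketch does not depend on any particular API.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    url: str
    source: str  # "own" for your archive, anything else for a network partner (hypothetical labels)
    text: str


def chunk_article(url: str, source: str, text: str, size: int = 80, overlap: int = 20) -> list[Chunk]:
    """Split an article into windows of `size` words that share `overlap` words."""
    assert 0 <= overlap < size
    words = text.split()
    if not words:
        return []
    step = size - overlap
    return [
        Chunk(url, source, " ".join(words[start:start + size]))
        for start in range(0, max(len(words) - overlap, 1), step)
    ]


def score(query: str, chunk: Chunk) -> int:
    # Stand-in relevance: number of distinct query terms that also appear in the chunk.
    return len(set(query.lower().split()) & set(chunk.text.lower().split()))


def retrieve(query: str, index: list[Chunk], k: int = 3, own_only: bool = True) -> list[Chunk]:
    # The scope dial: your own archive only, or your archive plus the licensed network.
    pool = [c for c in index if c.source == "own" or not own_only]
    ranked = sorted(pool, key=lambda c: score(query, c), reverse=True)
    return [c for c in ranked[:k] if score(query, c) > 0]


def build_prompt(query: str, passages: list[Chunk]) -> str:
    # Number each passage so the model can cite [1], [2], ... and the UI can link them.
    numbered = "\n".join(f"[{i}] ({p.url}) {p.text}" for i, p in enumerate(passages, 1))
    return (
        "Answer using only the numbered passages and cite them like [1]. "
        "If they do not answer the question, say so.\n\n"
        f"{numbered}\n\nQuestion: {query}"
    )
```

Switching `own_only=True` to `False` turns the scope dial. The same query can then pull a partner passage when your archive alone is too thin.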

The difference between a good and a great result is in execution. Five gritty decisions determine outcomes:

- Indexing granularity

  - Chunk size, overlap, and deduplication determine whether the retriever returns passages specific enough to answer the question without dropping vital context.

  - For long explainers, bias toward smaller chunks with light overlap. For news briefs, larger chunks can keep entities and dates intact.

- Hybrid retrieval

  - Combine semantic vectors with lexical signals and metadata filters. Dense retrieval shines on concepts; sparse retrieval wins on rare entities, dates, and exact phrases. The best systems blend both and learn when to lean on each.

- Freshness strategy

  - Publisher search must be news-aware. That means fast ingestion for breaking stories, a freshness boost in the ranker, and opinionated fallbacks to canonical explainers when intent is evergreen.

- Answer discipline

  - Guardrails that prioritize quotations and factual bullets before prose reduce drift. If the source is thin, answer narrowly or defer with a link.

- Latency budgets

  - Aim for under two seconds end to end. That pushes you toward caching hot queries, precomputing entity maps, and streaming tokens with progressive disclosure of citations. Users will forgive slightly longer finalization if they see useful text and links arrive early.
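The hybrid retrieval and freshness decisions can be combined in one ranker. The sketch below fuses a dense ranking and a lexical ranking with reciprocal rank fusion, one common fusion method that is swapped in here for illustration. It then applies an exponential recency boost only when a query is classified as news intent. The `k=60` constant, the 48-hour half-life, the 0.5 boost, and the upstream intent classifier are assumptions to tune, not recommended values.

```python
from datetime import datetime, timedelta, timezone


def rrf(rankings: list[list[str]], k: int = 60) -> dict[str, float]:
    """Reciprocal rank fusion: each ranking lists doc ids, best first."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return scores


def freshness(published: datetime, now: datetime, half_life_hours: float = 48.0) -> float:
    # 1.0 for a brand-new story, 0.5 after one half-life, approaching 0 for old pieces.
    age_hours = max((now - published).total_seconds() / 3600.0, 0.0)
    return 0.5 ** (age_hours / half_life_hours)


def hybrid_rank(dense: list[str], sparse: list[str], published: dict[str, datetime],
                now: datetime, news_intent: bool, boost: float = 0.5) -> list[str]:
    fused = rrf([dense, sparse])

    def final_score(doc_id: str) -> float:
        s = fused[doc_id]
        if news_intent:
            # Fresh documents gain up to `boost` (here +50%) on top of the fused score.
            s *= 1.0 + boost * freshness(published[doc_id], now)
        return s

    return sorted(fused, key=final_score, reverse=True)


# Usage with made-up documents: an old explainer both retrievers like, and a fresh brief.
now = datetime(2025, 9, 5, 12, tzinfo=timezone.utc)
published = {
    "explainer": datetime(2023, 9, 1, tzinfo=timezone.utc),
    "brief": now - timedelta(hours=1),
    "old": datetime(2024, 1, 1, tzinfo=timezone.utc),
}
dense = ["explainer", "brief", "old"]
sparse = ["explainer", "old", "brief"]
evergreen = hybrid_rank(dense, sparse, published, now, news_intent=False)
news = hybrid_rank(dense, sparse, published, now, news_intent=True)
print(evergreen, news)
```

The boost applies only to news-intent queries, so an evergreen query still returns the canonical explainer that tops both rankings. That matches the opinionated fallback in the freshness bullet.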

If you have been following agentic product work, these tradeoffs will feel familiar. We saw a similar pattern in our piece Inside Algolia Agent Studio, where product choices around retrieval and orchestration determined whether demos scaled to enterprise expectations.

The three fronts: trust, monetization, distribution

- Trust. First-party answers can show the exact paragraph that supports a statement and always link back to the original article. That is harder for aggregator chatbots that blend many sources, some licensed and some not. When the source list is composed of brands your audience already recognizes, the answer inherits brand trust instead of diluting it.

- Monetization. ProRata couples Gist Answers with a native ad format that sits beside responses and aims to match intent. The closer the answer is to a user task, the better the adjacent placement tends to perform. Publishers also share in revenue when their content contributes to answers served on third-party destinations that use the same stack.

- Distribution. Aggregators still benefit from default placement in browser omniboxes and mobile search fields. First-party AI search does not erase that advantage, but it does reduce leakage. When readers arrive via newsletters, social platforms, or homepages, an on-site box can answer their next question without sending them out to a chatbot. Over time, that compounds into deeper sessions, clearer conversion paths, and more predictable ad yield.

For readers of this blog, the distribution angle echoes what we covered in Space Agent Signals a Shift: products that move from dashboards to doers tend to keep users closer to the brand experience.

The compensation mechanics to inspect

Marketing headlines are simple. The diligence is not. If you evaluate Gist Answers, press for specifics on five mechanics that determine whether the economics work for your newsroom:

- What counts as shareable revenue

  - Is it only ad revenue next to AI answers, or also subscription upsells and commerce referrals triggered by answer interactions?

- Attribution method

  - When multiple sources inform a single answer, how is the split calculated among contributors? Does the model reflect exposure as well as clicks?

- Off-site distribution

  - If your content helps answer a question on a third-party site that uses the same stack, do you get paid even if the reader does not click back?

- Floors and controls

  - Are there minimum effective RPM floors? Can you opt out of low-value categories or block specific brands?

- Reporting transparency

  - Do you see contribution shares at the article level? Can you audit retrieval logs during disputes? Are there controls for sensitive topics and local compliance?

A workable model defines answer views, contributing source weights, and link-through credit. It should also let you tune the balance between answer completeness and click incentives. A recipe site might show a brief ingredient list and withhold the steps unless the user clicks. A service journalism site might provide the complete answer and monetize the adjacent placement.
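One way to express such a model is to split each answer's payout pool by exposure, then shift part of the credit toward sources that earned a link-through. The sketch below is a hypothetical formula for due-diligence conversations, not ProRata's attribution method. The 0.5 `publisher_share` mirrors the reported 50/50 split. The `click_weight` parameter and the use of citation counts as exposure weights are assumptions.

```python
def split_answer_revenue(
    revenue: float,
    exposure: dict[str, float],
    clicks: dict[str, int],
    publisher_share: float = 0.5,
    click_weight: float = 0.3,
) -> dict[str, float]:
    """Split one answer's revenue among the sources that informed it (hypothetical model).

    exposure: source -> weight, e.g. how many answer sentences cite that source.
    clicks:   source -> link-through clicks the answer sent to that source.
    """
    total_exposure = sum(exposure.values())
    if total_exposure <= 0:
        return {}
    pool = revenue * publisher_share  # share of revenue paid out to contributing sources
    total_clicks = sum(clicks.get(s, 0) for s in exposure)
    payouts = {}
    for source, weight in exposure.items():
        credit = weight / total_exposure
        if total_clicks:
            # Move `click_weight` of the credit from exposure toward link-through.
            credit = (1 - click_weight) * credit + click_weight * clicks.get(source, 0) / total_clicks
        payouts[source] = round(pool * credit, 6)
    return payouts


print(split_answer_revenue(10.0, {"own": 3, "partner": 1}, {"partner": 1}))
```

Sources still earn on exposure when nobody clicks, which is the off-site behavior worth asking about. Raising `click_weight` tilts the split toward click incentives.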

Integration and SEO tradeoffs

Adding an AI answer layer changes how search engines and readers experience your site. Plan for operational controls from day one:

- Crawling and indexation. Decide whether answer pages are indexable. Many publishers will mark them noindex to avoid competing with their own articles, but still allow crawling for discoverability signals.

- Canonicals and duplication. If answers paraphrase reporting, set canonical links to the source articles and avoid mirroring answer text across multiple URLs.

- Structured data. Use Q&A or HowTo schema on pages that include AI answers so that structured data remains coherent with human-written content. Avoid exposing transient, query-specific URLs to bots.

- Internal linking. Bias links to your most authoritative evergreen resources. That requires a curated map of canonical explainers by topic, not just a list of recent posts.

- Measurement. Track answer impressions, contributing source exposure, link-through rate, time on site after an answer, and adjacent RPM. Compare these against cohorts that used your legacy internal search.
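For the cohort comparison in the measurement bullet, a minimal approach is to aggregate session records per cohort. The Python sketch below assumes a hypothetical `Session` record and cohort labels. Your analytics export will use different field names. Time on site is averaged per session here for simplicity, and the sample sessions are made up.

```python
from dataclasses import dataclass


@dataclass
class Session:
    cohort: str               # hypothetical labels, e.g. "ai_answers" or "legacy_search"
    impressions: int          # answer (or legacy results) impressions in the session
    source_clicks: int        # clicks through to cited articles or results
    seconds_after: float      # time on site after the first answer/results view
    adjacent_revenue: float   # revenue from units placed beside answers/results


def cohort_metrics(sessions: list[Session]) -> dict[str, dict[str, float]]:
    """Aggregate link-through rate, time on site after answer, and adjacent RPM per cohort."""
    out: dict[str, dict[str, float]] = {}
    for cohort in sorted({s.cohort for s in sessions}):
        group = [s for s in sessions if s.cohort == cohort]
        impressions = sum(s.impressions for s in group)
        revenue = sum(s.adjacent_revenue for s in group)
        out[cohort] = {
            "sessions": float(len(group)),
            "link_through_rate": sum(s.source_clicks for s in group) / impressions if impressions else 0.0,
            "avg_seconds_after": sum(s.seconds_after for s in group) / len(group),
            "adjacent_rpm": 1000 * revenue / impressions if impressions else 0.0,  # per 1,000 impressions
        }
    return out


sessions = [
    Session("ai_answers", 2, 1, 240.0, 0.012),
    Session("ai_answers", 1, 0, 60.0, 0.004),
    Session("legacy_search", 2, 1, 90.0, 0.002),
]
for cohort, metrics in cohort_metrics(sessions).items():
    print(cohort, metrics)
```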

If you are deep in instrumentation conversations, you will find useful patterns in Edge AI Observability, especially around turning messy behavior into a feedback loop the newsroom can act on.

The aggregator context and the licensing cloud

Aggregator chatbots have made progress on licensing, but the landscape remains uneven. Some publishers have struck deals to license their archives to AI platforms, while others are fighting in court. In March 2025, a federal judge allowed The New York Times's copyright case against OpenAI to proceed, keeping core infringement questions alive and signaling that the rules are not settled. NPR's summary of the judge's decision offers a clear recap of that ruling.

This backdrop does not mean aggregator chatbots are doomed. They still control massive distribution and can deliver high intent users to publisher links when answers are citation forward. But it sharpens the contrast. You can deploy a licensed, first party answer box that pays you when your words power the result. Or you can rely on third party answers that may or may not compensate you and that often keep the reader in their interface.

A practical 90-day playbook for mid-market publishers

You do not need a skunkworks team to evaluate first party AI search, but you do need a structured trial. Use this plan:

- Define the jobs to be done

  - List your top 200 on-site queries and FAQs. Group them by task: explain, compare, decide, do. Prioritize sections where you can help a reader complete a task quickly.

- Prepare your corpus

  - Clean up canonical explainers, evergreen guides, and policy pages. Add short summaries and key facts at the top of each article. Mark high-value pages as preferred sources in retrieval filters.

- Decide your scope

  - Phase 1 can use your corpus only. Phase 2 can add the licensed network to boost coverage. Document the uplift you need from the network to justify the complexity of revenue sharing.

- Set guardrails

  - Establish answer length limits by intent. Require at least two citations for most answers. Force a link to your primary evergreen resource where one exists. Create a blocklist for sensitive topics and a human escalation path.

- Instrument evaluation

  - Measure coverage, faithfulness, click-through to sources, and latency. Write a red-team script of adversarial prompts common on your beat. Track errors by cause: retrieval miss, summarization drift, outdated content.

- Model your economics

  - Compare answer-adjacent RPM with display and newsletter ad RPM. Estimate answer views per thousand sessions and your expected contribution share as a licensor. Set a minimum acceptable effective RPM for the pilot.

- Tune the UX

  - Place the box in the site header and the article template. Allow keyboard search from the site chrome. Stream tokens so users see answers build. Keep citations visible by default. Make the path to the underlying article obvious.

- Plan for SEO

  - Mark answer pages noindex at first. Use canonical links to cited articles. Add Q&A schema only if you are comfortable with potential rich result exposure. Monitor crawl stats to catch accidental duplication.

- Close the loop with the newsroom

  - Share weekly insights on which pages power answers. Use that data to prioritize updates, add explainer modules, and align editorial calendars with recurring question patterns.

- Decide go or no-go

  - Weeks 1 to 3: instrument, seed the index, and soft launch on two sections.

  - Weeks 4 to 8: expand to five sections, adjust guardrails, and test the licensed network.

  - Weeks 9 to 12: roll out to the homepage, set commercial terms, and decide on permanent placement.
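The economics step comes down to arithmetic you can rerun each week and check against the floor at the go/no-go decision. The sketch below defines effective RPM as module revenue per 1,000 sessions. That definition, the 0.5 `publisher_share`, and every input in the usage lines are hypothetical placeholders, not observed results.

```python
def effective_rpm(
    sessions: int,
    answer_views: int,
    adjacent_rpm: float,
    publisher_share: float,
    licensor_payouts: float,
) -> float:
    """Module revenue per 1,000 sessions (an assumed definition of effective RPM).

    adjacent_rpm:     gross revenue per 1,000 answer views from the unit beside answers.
    publisher_share:  fraction of that gross the publisher keeps.
    licensor_payouts: revenue earned when your content powers answers elsewhere.
    """
    on_site = answer_views / 1000 * adjacent_rpm * publisher_share
    return 1000 * (on_site + licensor_payouts) / sessions


def go_no_go(rpm: float, floor_rpm: float) -> str:
    # The pilot passes only if the module clears the floor set in the economics step.
    return "go" if rpm >= floor_rpm else "no-go"


# Hypothetical pilot inputs, not observed results.
rpm = effective_rpm(sessions=200_000, answer_views=30_000, adjacent_rpm=12.0,
                    publisher_share=0.5, licensor_payouts=150.0)
print(round(rpm, 2), go_no_go(rpm, floor_rpm=1.5))
```

With these made-up inputs, the module earns about $1.65 per 1,000 sessions, which clears a $1.50 floor.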

Build, buy, or blend

Not every publisher should buy a turnkey solution. Use this quick decision tree:

- Buy if you want speed, a licensed network, and a compensation scheme you cannot replicate quickly. This is the Gist Answers promise: a ready index, shared economics, and ads with proven demand.

- Build if your content is highly specialized, you have strong in-house ML talent, and your data governance needs are strict. You get more control over retrieval and model choice, but you will need to solve monetization yourself.

- Blend if you want to pilot with a vendor while building core retrieval infrastructure in parallel. Keep your own vector store and let the vendor handle ad demand and network licensing during the trial.

The most important non-technical factor is incentives. If the vendor earns when you earn, and if the attribution logs are auditable, you can treat the relationship as an extension of your internal search team rather than a black box.

What success looks like after a quarter

By the end of a 90-day pilot, you should expect to see the following if first-party AI search is working:

- Higher session depth and time on site for users who engage with the answer box.

- Fewer exits to external search and chatbot tabs from article pages.

- Higher RPM next to answers than next to comparable non-AI modules.

- Stable or improved subscription conversion near paywall prompts.

- A weekly list of source articles that merit updates because they drive answer quality and revenue.

If you do not see these signals, simplify. Tighten the corpus to your top evergreen resources, make answers shorter, and raise the bar for when the system is allowed to answer instead of recommending a click.
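Raising the bar can be made explicit with a gate that decides, per query, whether to answer, recommend a click, or route to a human. In this sketch, the blocklist entries, the per-intent word limits, and the 0.6 relevance threshold are all hypothetical. So is the assumption that retriever scores are normalized to 0..1. The two-citation minimum comes from the guardrails step of the playbook.

```python
from dataclasses import dataclass

# Hypothetical per-intent word limits and sensitive-topic labels; tune them to your own beat.
MAX_WORDS = {"explain": 120, "compare": 150, "decide": 100, "do": 80}
BLOCKLIST = {"elections", "self-harm"}


@dataclass
class Passage:
    url: str
    score: float  # retriever relevance, assumed normalized to 0..1


def decide_mode(topic: str, passages: list[Passage], min_score: float = 0.6, min_citations: int = 2) -> str:
    """Return 'escalate', 'answer', or 'recommend_click' for one query."""
    if topic in BLOCKLIST:
        return "escalate"  # route sensitive topics to the human escalation path
    # Count distinct articles that clear the relevance bar; each can back one citation.
    strong = {p.url for p in passages if p.score >= min_score}
    return "answer" if len(strong) >= min_citations else "recommend_click"


def trim_to_limit(text: str, intent: str) -> str:
    # Enforce the per-intent length cap (unknown intents default to 100 words).
    words = text.split()
    limit = MAX_WORDS.get(intent, 100)
    return text if len(words) <= limit else " ".join(words[:limit]) + " ..."


passages = [Passage("https://example.com/a", 0.9), Passage("https://example.com/b", 0.7),
            Passage("https://example.com/c", 0.3)]
print(decide_mode("rates", passages))
print(decide_mode("rates", passages, min_score=0.8))
```

To tighten the pilot, raise `min_score` or lower the `MAX_WORDS` limits. The word cap is a blunt backstop, not a substitute for prompting the model to write short answers in the first place.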

The bottom line

Publisher-owned AI search will not replace the distribution power of aggregator chatbots. It can replace the feeling that your reporting disappears into someone else’s box. ProRata’s Gist Answers is a credible attempt to turn AI search into a first-party product with aligned economics and measurable trust benefits. If you have a clean archive, a clear view of the questions your audience brings to you, and a willingness to tune guardrails, you can ship an experience that earns more from the attention you already win. That is the right bar for adopting any AI layer in publishing: better service for readers, better economics for your newsroom, and better leverage when the next licensing negotiation begins.