Geordie Launches Agent-Native Security for Enterprise AI

Geordie unveils agent-native security for enterprise AI agents, delivering live runtime visibility, contextual policy, and human-in-the-loop control. Discover why AgentSec is becoming its own layer and how to chart a smart build vs buy path.

Breaking: a new security layer for the agent era

A fresh chapter in enterprise AI security just opened. Geordie emerged from stealth with an agent-native platform built to monitor, govern, and intervene in autonomous agents as they work. The company announced a $6.5 million raise and positioned its system as a cockpit for security teams that want to see, shape, and sometimes stop what agents do in production. SecurityWeek covered early details of the funding.

Read that again. A cockpit, not a clipboard. Instead of static guardrails that only filter prompts or mask outputs, Geordie’s pitch bets on runtime governance. It argues that AgentSec is becoming its own layer in the enterprise stack, similar to how Identity and Access Management separated from monolithic apps when services and APIs took off.

Why agents break yesterday’s security assumptions

Traditional application security is built on predictability. Requests arrive, services respond, logs capture the flow, and policies map to roles and endpoints. Agents behave differently. They reason across context, call tools, traverse internal systems, and adapt to feedback. Think of an agent as a new hire who improvises to meet a goal rather than a script that follows a known path.

Two implications follow for security and compliance:

- You cannot infer risk from configuration alone. Two identical agents can make different choices based on context, memory, and tool results.

- Post hoc audits are not enough. If you only discover a risky action after it has happened, your exposure window is too wide.

Consider a purchasing agent that can file vendor tickets, check budgets, and submit purchase orders. A subtle prompt injection in a vendor portal might nudge it to create a second account that routes invoices to a different cost center. Nothing obviously “broke.” The sequence resembles a normal workflow. The risk hides in the emergent chain of actions.

What makes a platform agent-native

Agent-native security is less about hardening inputs and more about governing decisions in flight. Three capabilities define the category:

- Runtime visibility that follows the agent, not just the app. You want a live graph of actions, tool calls, data reads, and writes as the trace unfolds. Treat it like flight data for reasoning systems.

- Policy that evaluates context, not just roles. Rules should consider the principal an agent acts for, the intent of the step, the destination of data, and whether this matches past behavior. That looks more like a policy program than a static allow list.

- Live intervention that can nudge or halt. Sometimes you require human signoff for high-risk steps. Sometimes you ask the agent for stronger justification or force a safer tool. Sometimes you hit a kill switch.

Geordie describes a runtime engine, informally called Beam by some observers, that aims to guide choices rather than only block them. In practice, that might mean lowering privileges mid-run, swapping a sensitive tool for a safe simulator when confidence drops, or escalating a contract edit for human review when it crosses a policy threshold.
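To make "guide rather than only block" concrete, here is a minimal sketch of a graduated intervention function. It is not Geordie's engine or API. The `Step` fields, the `Decision` names, and the thresholds are all hypothetical, chosen only to show how a runtime check can return something softer than a plain allow or deny.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    USE_SIMULATOR = "use_simulator"  # swap the real tool for a safe stand-in
    ESCALATE = "escalate"            # pause and ask a human
    HALT = "halt"                    # kill switch


@dataclass(frozen=True)
class Step:
    tool: str          # tool the agent wants to call (hypothetical name)
    sensitive: bool    # True if the tool has real side effects
    confidence: float  # agent's self-reported confidence, 0.0 to 1.0
    risk_score: float  # score from some upstream policy check, 0.0 to 1.0


def intervene(step: Step, min_confidence: float = 0.7,
              review_threshold: float = 0.8) -> Decision:
    """Pick the mildest intervention that still covers the risk."""
    if step.risk_score >= 1.0:
        return Decision.HALT
    if step.risk_score >= review_threshold:
        return Decision.ESCALATE
    if step.sensitive and step.confidence < min_confidence:
        return Decision.USE_SIMULATOR
    return Decision.ALLOW
```

The ordering is the design choice worth noticing: the harshest outcomes are checked first, so a step that is both low-confidence and high-risk goes to a human instead of quietly running against a simulator.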

From controls to choreography

The metaphor that keeps surfacing is air traffic control. Security tools have long controlled the runway. They validated identities, checked baggage, and watched the perimeter. AgentSec choreographs the airspace above the runway. It tracks each flight’s path, talks to pilots, and can change course when conditions shift.

That shift matters for three reasons:

- Risk detection becomes dynamic. You can spot tool poisoning, confused deputy patterns, and off-policy data flows as they emerge, not only in logs later.

- Policies become executable. You can encode business rules like “draft contracts are fine, send them for review if above $50,000, and never export price lists to external agents” and enforce them during execution.

- Operations become collaborative. Product, legal, and security inspect the same traces, annotate decisions, and tune policies without halting the program.
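The contract and price-list rule quoted above can be written as a small function. This is only a sketch of what "executable policy" might look like. The `Action` fields and the string outcomes are assumptions made for illustration, not any vendor's policy language.

```python
from dataclasses import dataclass

REVIEW_THRESHOLD_USD = 50_000  # threshold taken from the example rule


@dataclass(frozen=True)
class Action:
    kind: str                         # e.g. "draft_contract" or "export_data"
    amount_usd: float = 0.0           # contract value, if any
    data_label: str = ""              # e.g. "price_list"
    recipient_external: bool = False  # True if the data leaves the company


def evaluate(action: Action) -> str:
    """Return "allow", "review", or "deny" for one proposed action."""
    # Never export price lists to external agents.
    if (action.kind == "export_data"
            and action.data_label == "price_list"
            and action.recipient_external):
        return "deny"
    # Draft contracts are fine, but send large ones for review.
    if action.kind == "draft_contract" and action.amount_usd > REVIEW_THRESHOLD_USD:
        return "review"
    return "allow"
```

Because the rule is plain code, it can be unit tested and staged like any other feature before it gates a production agent.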

If your team is moving from dashboards to doers, this is the missing piece between orchestration and compliance. It is the same shift we explored in our look at how Space Agent signals a move from passive analytics to action-taking agents.

AgentSec is breaking out as its own layer

Today’s agent stack is starting to look like this:

- Foundation models and inference infrastructure

- Orchestration frameworks that coordinate tools and workflows

- The emerging AgentSec layer for identity, policy, runtime visibility, and live intervention

- Enterprise systems and data sources where the work happens

Vendors are converging on the middle security band. IBM’s all-in-one posture tools are expanding to cover models and agents. Identity players focus on permissions and audit trails for non-human principals. Large consultancies package orchestration with role-based access and governance. Domain tools like email security platforms are building agentic defenses tuned to specific channels.

Geordie’s claim is that agent security must be runtime-first. That is the difference between a policy that says “sales assistants cannot modify price lists” and a runtime engine that notices an agent copying a price table into a free-form message and steps in before it leaves the network.

The build vs buy playbook for do-work agents

Startups often face a false choice. Either they bolt a few prompt filters onto an agent and hope for the best, or they try to build a full control plane and lose quarters reinventing identity, governance, and logging. There is a middle path that lets you move fast without ignoring risk.

1) Outsource identity, policy, and audit

- What to outsource: agent identity and authentication, permission checks, rate and spend limits, immutable audit logs, and a policy engine that evaluates context at runtime.

- Why outsource: these are horizontal, high-blast-radius capabilities that require specialized engineering and evolve with regulations. Building them in-house slows your roadmap and creates a future compliance tax.

- How to evaluate: look for platforms that issue agent identities distinct from human users, enforce scope by task and data domain, and produce both human-readable and machine-readable logs. Require regional data residency and evidence packages aligned to the European Union Artificial Intelligence Act, SOC 2, and your sector’s controls.

- Practical test: can the platform prevent an agent from reading a customer’s full record when a task only needs anonymized fields, and can it prove that decision to an auditor without custom code?
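For a feel of what that practical test is probing, here is a toy version of task-scoped field minimization that also emits an audit record. The task name, field names, and record shape are hypothetical. A real platform would enforce this outside your code, which is the point of outsourcing it.

```python
import json

# Hypothetical mapping from task to the only fields that task may read.
TASK_FIELDS = {
    "churn_analysis": {"plan", "region", "signup_year"},
}


def minimize(record: dict, task: str) -> tuple[dict, str]:
    """Return the reduced view plus a JSON audit entry describing the decision."""
    allowed = TASK_FIELDS.get(task, set())  # unknown task: release nothing
    view = {key: value for key, value in record.items() if key in allowed}
    audit = {
        "task": task,
        "released": sorted(view),
        "withheld": sorted(set(record) - allowed),
    }
    return view, json.dumps(audit, sort_keys=True)
```

The audit entry lists field names only, never values, so the evidence handed to an auditor does not itself leak the data being protected.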

2) Own domain actions and data contracts

- What to build: the actions that represent real work in your product and the schemas that define inputs, outputs, and side effects. Treat them as signed procedures. This is your moat.

- Why it matters: actions and data contracts encode how your business creates value. You need precise control, versioning, tests, and performance tuning.

- How to build: wrap every external call in a versioned contract. Add preconditions and postconditions that run automatically in your orchestrator. Build a simulator for high-risk tools so you can test policies safely. Run chaos drills that inject malformed or hostile outputs, then watch how your agent and policies respond.

- Practical test: can you swap the payments action from sandbox to live and have your policy layer automatically escalate the first 20 real transactions above a threshold for human review?
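A rough sketch of the two ideas in this step: a versioned action contract with automatic preconditions and postconditions, and a gate that escalates the first 20 live transactions above a threshold. Every name here (`ActionContract`, `LiveEscalation`, `charge_sandbox`) is made up for illustration and assumes a simple dictionary payload.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ActionContract:
    name: str
    version: str
    run: Callable[[dict], dict]          # the wrapped external call
    pre: Callable[[dict], bool]          # must hold before the call
    post: Callable[[dict, dict], bool]   # must hold after the call

    def call(self, payload: dict) -> dict:
        if not self.pre(payload):
            raise ValueError(f"{self.name}@{self.version}: precondition failed")
        result = self.run(payload)
        if not self.post(payload, result):
            raise ValueError(f"{self.name}@{self.version}: postcondition failed")
        return result


@dataclass
class LiveEscalation:
    threshold: float
    limit: int = 20  # how many qualifying live transactions to escalate
    seen: int = 0

    def needs_review(self, amount: float, live: bool) -> bool:
        if live and amount > self.threshold and self.seen < self.limit:
            self.seen += 1
            return True
        return False


def charge_sandbox(payload: dict) -> dict:
    # Stand-in for a real payments call; charges nothing.
    return {"status": "ok", "charged": payload["amount"]}


pay = ActionContract(
    name="payments.charge",
    version="1.0.0",
    run=charge_sandbox,
    pre=lambda p: p.get("amount", 0) > 0,
    post=lambda p, r: r.get("charged") == p["amount"],
)
```

Swapping sandbox for live then means replacing `run` and bumping `version`, while the conditions and the escalation gate stay exactly where they were.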

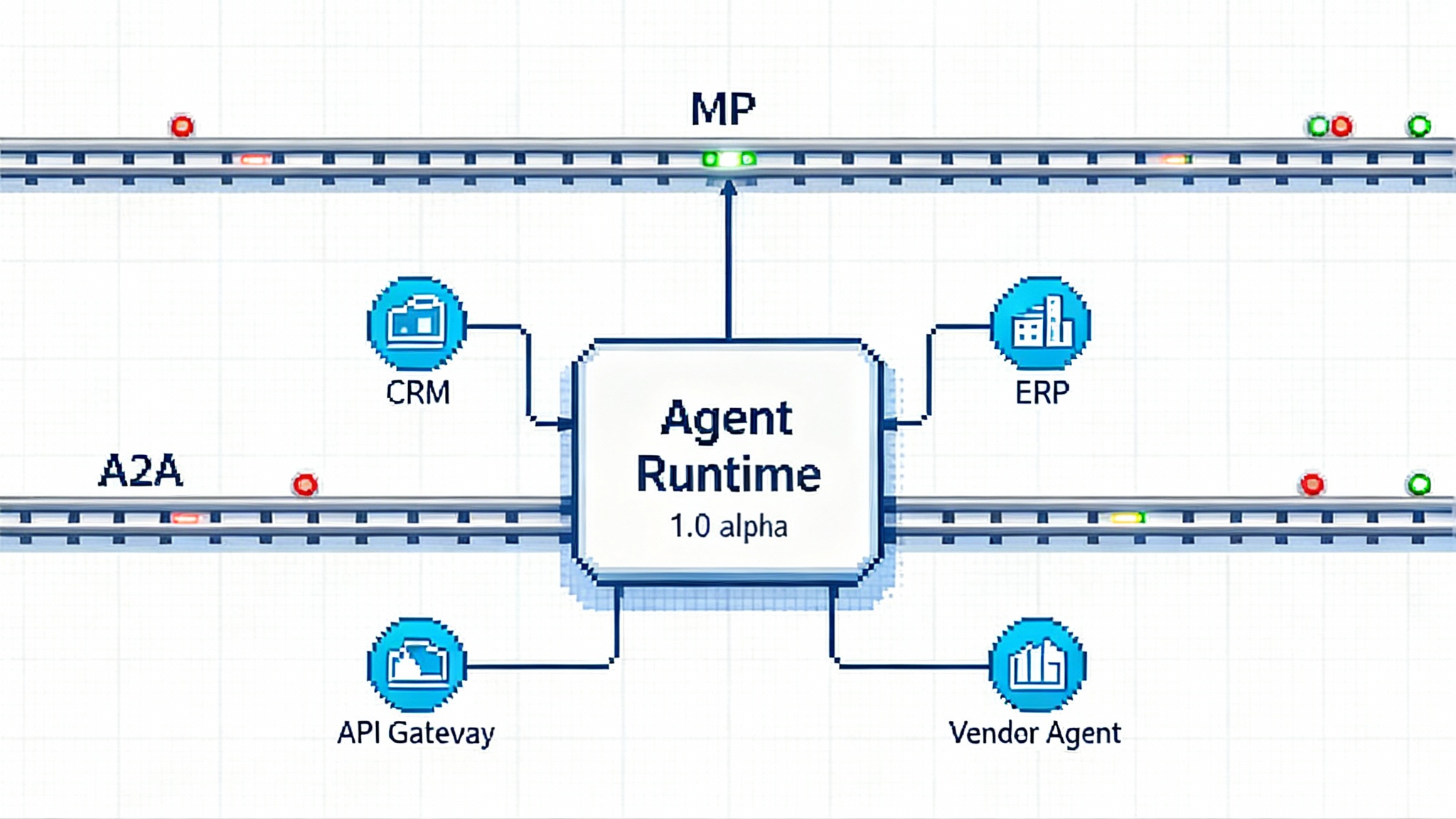

3) Align early with emerging agent protocols

- Why adopt protocols: protocols turn one-off integrations into reusable connectors and make your audit trail portable. They also create a shared surface for security tools to observe and intervene.

- What to use now: the Model Context Protocol (MCP) from Anthropic is gaining adoption across platforms and operating systems. It standardizes how agents discover resources, call tools, and share context. For a mainstream overview, see The Verge’s coverage of MCP. If your resource access rides an MCP-compatible server, your security layer can reason about it consistently.

- What to watch next: proposals for agent-to-agent communication are crystallizing. Whether you adopt a formal A2A protocol or a vendor variant, design actions and policies so that inter-agent messages are typed, logged, and enforceable.

- Practical test: can your agent discover a tool via a protocol registry, request consent to use it, and emit a structured trace that shows which tool was called, with what scope, and under which policy decision?
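The structured trace in that last test could be as small as one JSON object per tool call. The schema below is an assumption for illustration only. It is not part of MCP or any A2A proposal, and the field names are invented.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class TraceEvent:
    agent_id: str   # which agent identity acted
    tool: str       # which tool was called
    scope: str      # the scope granted for this call
    policy_id: str  # the policy that evaluated the call
    decision: str   # e.g. "allow", "review", "deny"
    timestamp: str  # ISO 8601, UTC


def emit(agent_id: str, tool: str, scope: str, policy_id: str,
         decision: str, now: Optional[datetime] = None) -> str:
    """Serialize one tool call as a single machine-readable JSON line."""
    moment = now if now is not None else datetime.now(timezone.utc)
    event = TraceEvent(agent_id, tool, scope, policy_id, decision,
                       moment.isoformat())
    return json.dumps(asdict(event), sort_keys=True)
```

Passing `now` explicitly keeps the function easy to test, and sorted keys keep the lines stable for diffing and evidence packages.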

For teams building orchestration, the production shift around the LangChain 1.0 alpha shows how fast the operational bar is rising. On the go-to-market side, commerce rails like AP2, pitched as open rails for agents, illustrate why standard contracts and policy-aware connectors will matter.

A week-one wiring plan for real-work agents

If you are turning on a new agent next week, here is a concrete setup that gets you to value fast without painting yourself into a corner.

Day 1: name the agent and define the narrowest goal that delivers value. Write down the five actions it needs. Draft input and output schemas for each action. List the data sources involved and mark which contain sensitive fields.

Day 2: plug in an agent-native security platform for identity, policy, and audit. Create a dedicated agent identity with the least scope that still completes the goal. Turn on immutable logging. Set spending and rate limits for external tools.

Day 3: implement two actions end to end with strong preconditions and postconditions. Build a simulator for any tool that can cause harm or cost. Add canary records to datasets that should never leave a safe domain and alert if they move.

Day 4: write three executable policies. Example set: redact personally identifiable information from free-form outputs by default; require human approval if an action touches customer billing; block external messages that contain internal file paths or database identifiers.
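As a starting point for the outbound-message rule, and for the Day 3 canary records, a check might look like the sketch below. The path prefixes, the `db-` identifier format, and the canary token are placeholders. Substitute whatever your own systems actually use.

```python
import re

# Placeholder patterns; adjust to your real path layout and ID format.
INTERNAL_PATH = re.compile(r"/(?:srv|mnt|home)/\S+")
DB_IDENTIFIER = re.compile(r"\bdb-[0-9a-f]{8}\b")
CANARY_TOKENS = {"CANARY-7f3a9c"}  # planted in datasets that must not leave


def outbound_violations(message: str, external: bool) -> list[str]:
    """Return the reasons an outgoing message should be blocked, if any."""
    if not external:
        return []  # internal messages are out of scope for this rule
    reasons = []
    if INTERNAL_PATH.search(message):
        reasons.append("internal_file_path")
    if DB_IDENTIFIER.search(message):
        reasons.append("database_identifier")
    if any(token in message for token in CANARY_TOKENS):
        reasons.append("canary_record")
    return reasons
```

Returning reasons instead of a bare boolean gives the human reviewer in the Day 5 drill something clear to act on.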

Day 5: run a live fire drill. Inject a prompt that tries to route a sensitive document to an external workspace. Watch the trace. Confirm the policy triggers, the agent explanation is logged, and the human-in-the-loop sees clear next steps. Tweak thresholds and repeat.

By the end of the week, you will have a small but real do-work agent with a visible heartbeat, enforceable policy, and clean audit trails. You will also have a template for adding more actions without widening risk.

How this changes the stack for security teams

Security leaders will need to extend three core disciplines to cover agentic systems:

- Identity becomes multi-principal. You will manage human users, service accounts, and autonomous agents that act on behalf of people or teams. Treat agent identities as first-class citizens with dedicated scopes and lifecycles. Rotate keys and privileges per task, not per service.

- Policy becomes dynamic and stateful. Static rules will not capture the nuance of a reasoning system mid-task. Move policy closer to code. Test and stage policy changes like application features. Track policy coverage the way you track test coverage.

- Telemetry becomes narrative. Traces should tell a story your legal and compliance teams can follow. Prefer structured events over free-form logs. Require deterministic labels for risky transitions like data export, cross-domain writes, or off-platform tool calls. Visualize causality, not just timestamps.

Expect vendors to compete on four knobs: breadth of discovery across frameworks and clouds, depth of runtime visibility, expressiveness of the policy language, and ease of human intervention with minimal disruption. Integrations with SIEM and DLP tools will be table stakes. Dedicated auditor portals that produce evidence packages on demand will differentiate winners.

Risks and honest limitations

No runtime system guarantees perfect safety. Three risks deserve ongoing attention:

- False confidence. A beautiful trace is not the same as complete coverage. Make sure visibility includes every tool boundary, not just the ones that speak your orchestrator’s language.

- Policy sprawl. As teams add rules, conflicts and gaps emerge. Borrow from feature flag discipline. Use staged rollouts, default-deny for high-risk actions, and automated tests for critical policies.

- Latency and cost. Live intervention adds hops. Measure added time per step and give product teams budgets. Allow shallow checks for non-sensitive actions with predictable cost. Reserve deep inspection for high-impact events.
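The latency point lends itself to a small sketch: run a cheap check on every step, reserve the expensive one for high-impact steps, and record how long the whole thing took against a budget. The function and its return shape are hypothetical, and the two check callables are whatever your policy layer provides.

```python
import time
from typing import Callable


def tiered_check(action: dict, high_impact: bool,
                 shallow: Callable[[dict], bool],
                 deep: Callable[[dict], bool],
                 budget_ms: float) -> dict:
    """Run the shallow check always and the deep check only when warranted."""
    start = time.perf_counter()
    allowed = shallow(action)
    depth = "shallow"
    if allowed and high_impact:
        allowed = deep(action)  # deep inspection only for high-impact steps
        depth = "deep"
    elapsed_ms = (time.perf_counter() - start) * 1000
    return {
        "allowed": allowed,
        "depth": depth,
        "elapsed_ms": elapsed_ms,
        "over_budget": elapsed_ms > budget_ms,
    }
```

Logging `elapsed_ms` and `over_budget` per step is what turns "give product teams budgets" into something you can chart and alert on.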

These caveats do not weaken the core idea. They focus where to invest in engineering discipline and where to demand maturity from platforms.

What to watch next

- Protocol registries. Expect curated catalogs of MCP servers and approved inter-agent connectors that ship with compliance metadata and risk scores.

- Standard traces. A common schema for agent execution traces will make it easier to switch vendors and prove compliance to auditors.

- Identity federation for agents. SSO for agents will arrive, with cross-tenant trust and scoped delegation similar to human sign-in.

- Task-scoped keys. Short-lived, least-privilege credentials minted per task will reduce blast radius for tool misuse and theft.

- Real-time testing in production. Agents will propose safe rollbacks and auto-escalate when they detect uncertainty, blending self-assessment with policy.
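Task-scoped keys from the list above are easy to prototype with the standard library. The sketch below signs a small claims object with HMAC and checks expiry and scope on use. It is a toy for illustration under an assumed shared secret, not a replacement for a real token service, and the claim names are invented.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def mint(secret: bytes, agent_id: str, task_id: str, scopes: list[str],
         ttl_s: float, now: Optional[float] = None) -> str:
    """Mint a short-lived credential bound to one agent, one task, and its scopes."""
    issued = time.time() if now is None else now
    claims = {"agent": agent_id, "task": task_id,
              "scopes": sorted(scopes), "exp": issued + ttl_s}
    body = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode())
    signature = hmac.new(secret, body, hashlib.sha256).hexdigest()
    return f"{body.decode()}.{signature}"


def verify(secret: bytes, token: str, scope: str,
           now: Optional[float] = None) -> bool:
    """Accept the token only if it is untampered, unexpired, and covers `scope`."""
    current = time.time() if now is None else now
    body, _, signature = token.rpartition(".")
    expected = hmac.new(secret, body.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(signature, expected):
        return False
    claims = json.loads(base64.urlsafe_b64decode(body))
    return current < claims["exp"] and scope in claims["scopes"]
```

A stolen token of this kind is only useful for the named scopes and only until `exp`, which is the blast-radius argument in miniature.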

The bottom line

Geordie’s debut arrives at the moment the industry needs it. Autonomous agents have crossed from demos to do-work. That demands a security layer that understands how agents think, not only what inputs they see. Treat AgentSec as its own runtime layer. Buy identity, policy, and audit so your team can ship faster without a compliance tangle. Own the actions and data contracts that encode your value. Align early with protocols so your logs and policies travel with you as the ecosystem matures. Do this and you will move quickly, stay trustworthy, and keep agents on course even when the air gets choppy above the runway.