Proactive BI Agents Are Here: From Dashboards to Diagnosis

Proactive BI agents just moved from monitoring to explanation. With WisdomAI's September 3 launch, 24/7 metric watching, anomaly triage, and root cause proposals are becoming table stakes. Learn how to build or buy now.

The breakthrough moment

On September 3, 2025, WisdomAI announced Proactive Agents and framed them as an always-on AI analyst that learns your business, monitors metrics, detects changes, and proposes next steps. The message is crisp and serious: spend less time refreshing dashboards and more time acting on explanations. The announcement is worth a careful read because it captures a new floor for expectations in business intelligence. See the details in WisdomAI launches Proactive Agents.

The right metaphor is clinical. Think of your metric stack as a hospital. Dashboards are the monitors that show vitals. Alerts are the beeps that demand a glance. Proactive agents play the attending physician. They watch the vitals in real time, triage which beeps matter, and write an initial differential diagnosis with orders to confirm or roll back. If that sounds ambitious, it is by design. The user experience shifts from reports to reasons, from charts to choices, and from paging to plans.

Why dashboards give way to diagnosis

For years, teams have invested in clean metrics, rich dashboards, and configurable alerts. Those investments were necessary. They are no longer sufficient. The pattern across operations is clear. Monitoring alone does not satisfy decision makers who are measured on outcomes. A dashboard can say conversion fell yesterday. A diagnosis must say where, why, how big, and what to do next. Engineering observability embraced this mindset a decade ago and never looked back. Now revenue, supply, marketing, support, and finance are following the same curve.

This is not a niche upgrade. It is a change in what leaders assume software can do. The baseline is 24/7 monitoring that filters noise, ties anomalies to business impact, and proposes a credible next step. Teams do not want one more chart. They want a ranked list of explanations with evidence, confidence, and a safe action plan.

What buyers will expect by 2026

Buyers will increasingly treat these capabilities as table stakes rather than premium add-ons:

- Always on metric watching across event streams and warehouses

- Anomaly triage that suppresses noise and lifts high impact changes

- Root cause proposals that cite evidence, name responsible dimensions, and suggest verification steps or rollbacks

- Human in the loop controls that keep risky actions reversible

- Cost to serve transparency so finance understands the unit economics of insight

Vendors who cannot demonstrate these behaviors with live demos and backtests will feel out of step by early 2026.

Build or buy in 2025

The practical question is simple. What should you ship or adopt now to meet the higher bar without creating brittle complexity or runaway spend? Four pillars matter most: a spine that joins event streams and the warehouse, an evaluation harness that measures diagnosis quality, human in the loop and rollback controls, and cost to serve management that finance can audit.

1) Event stream plus warehouse integration

Proactive diagnosis starts with connective tissue. Agents need speed and history.

- Event stream for freshness. Publish key business events such as user actions, ad impressions, checkout attempts, inventory changes, deploys, and price updates. Keep schemas versioned and document semantic meaning. Include business identifiers like account, plan, and campaign so the agent can segment correctly.

- Warehouse for depth. Maintain a clean source of truth in Snowflake, BigQuery, or Databricks. Keep dimensional models for product, customer, geography, and channel. Preserve slowly changing dimensions so the agent can attach a plan change or region reassignment to a metric shift.

- Semantic layer as a contract. Define metrics and dimensions in a central layer that the agent can query consistently. Expose a metrics registry, time grains, allowed filters, and ownership. This reduces the odds that two queries compute monthly active users differently.

- Lineage and business events as first class inputs. Diagnosis gets sharper when the agent can join time series with deploys, promotions, price changes, outages, and holidays. Treat these logs like sensors, not afterthoughts.
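The semantic layer contract described above can be sketched in a few lines. This is a minimal illustration, not a reference to any particular semantic layer product: the `MetricContract` class, the `REGISTRY` contents, and the `validate_query` helper are all hypothetical names invented for this example. The idea is only that an agent checks a query against the registry before running it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricContract:
    """One entry in a hypothetical metrics registry."""
    name: str
    owner: str
    time_grains: frozenset[str]
    allowed_filters: frozenset[str]


# Illustrative registry; the metric, owner, grains, and filters are made up.
REGISTRY = {
    "monthly_active_users": MetricContract(
        name="monthly_active_users",
        owner="growth-analytics",
        time_grains=frozenset({"day", "week", "month"}),
        allowed_filters=frozenset({"plan", "region", "channel"}),
    ),
}


def validate_query(metric: str, grain: str, filters: dict[str, str]) -> list[str]:
    """Return contract violations; an empty list means the query is allowed."""
    contract = REGISTRY.get(metric)
    if contract is None:
        return [f"unknown metric: {metric}"]
    problems = []
    if grain not in contract.time_grains:
        problems.append(f"grain '{grain}' not allowed for {metric}")
    for key in sorted(filters):
        if key not in contract.allowed_filters:
            problems.append(f"filter '{key}' not allowed for {metric}")
    return problems


# An allowed query produces no violations.
print(validate_query("monthly_active_users", "week", {"region": "EU"}))
```

Rejecting a query before it runs is one way to keep two agents, or an agent and an analyst, from computing the same metric differently.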

A helpful historical reference is the ThirdEye project, which pairs anomaly detection with interactive root cause analysis (RCA). Your stack may differ, but the lesson is durable. Tie detection, diagnosis, and context into one loop so the agent can explain what changed and why it likely changed.

2) Evaluation harnesses for root cause accuracy

If diagnosis is the feature, measurement is the moat. Without an evaluation harness, root cause proposals degrade into storytelling. You need objective tests that run every week and trend over time.

Build an RCA evaluation harness with these parts:

- Synthetic anomaly injection. Create controlled perturbations in sandboxes. For example, reduce conversion in one region by 8 percent and raise paid clicks in a single channel by 12 percent. Label the ground truth factors. The agent should surface the right segments as top suspects with supporting evidence.

- Historical backtests. Replay real incident windows from the last year. Label the true causes from postmortems. Score precision at k, recall at k, and time to first credible narrative. Track false positives that would have caused unnecessary churn.

- Counterfactual consistency. When the agent claims that a price drop drove conversion up, verify that in a matched control region where price did not change, conversion stayed flat. Penalize explanations that fail basic counterfactual checks.

- Multi source agreement. Compare proposals across aggregation levels. A strong system should reconcile that a national dip was driven by a single carrier outage in three states, not by a nationwide behavior shift. Inconsistency should lower confidence.

- Feature ablations. Routinely turn off certain inputs such as campaign events or deploy logs. If accuracy collapses, you have a key dependency and a reason to improve observability for that signal.
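Synthetic anomaly injection from the list above can be illustrated with a toy table. This is a sketch under stated assumptions: the data is invented, and `top_suspect` is a deliberately naive stand-in for whatever the real agent does. The point is the shape of the test: perturb one segment, keep the ground truth label, and check that the suspect matches it.

```python
import random


def make_rows(seed: int = 7) -> list[dict]:
    """Build a toy daily table of sessions and orders per region (invented values)."""
    rng = random.Random(seed)
    regions = ["north", "south", "east", "west"]
    return [
        {"region": r, "sessions": 10_000, "orders": 500 + rng.randint(-5, 5)}
        for r in regions
    ]


def inject_conversion_drop(rows: list[dict], region: str, pct: float):
    """Return a perturbed copy of rows plus a ground truth label for the injection."""
    perturbed = []
    for row in rows:
        new_row = dict(row)
        if row["region"] == region:
            new_row["orders"] = round(row["orders"] * (1 - pct))
        perturbed.append(new_row)
    label = {"dimension": "region", "value": region, "change": -pct}
    return perturbed, label


def top_suspect(before: list[dict], after: list[dict]) -> str:
    """Naive stand-in for the agent: the region with the largest relative conversion drop."""
    def conversion(row: dict) -> float:
        return row["orders"] / row["sessions"]

    deltas = {
        b["region"]: (conversion(a) - conversion(b)) / conversion(b)
        for b, a in zip(before, after)
    }
    return min(deltas, key=deltas.get)


before = make_rows()
after, label = inject_conversion_drop(before, "east", 0.08)  # 8 percent drop in one region
print(top_suspect(before, after) == label["value"])  # True
```

A real harness would inject several overlapping perturbations and score the agent's ranked suspects rather than a single answer.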

Create a scorecard that the whole company understands. Example targets: top 1 explanation accuracy at 60 percent or better, top 3 at 80 percent or better, median time to credible narrative under 10 minutes, and mean time to rollback decision under 45 minutes. Publish the trend. The scorecard converts aspiration into operating pressure.
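A scorecard like this one reduces to a small scoring function over labeled cases. The sketch below assumes each backtest case is a dictionary with a ranked list of proposed causes, the true cause from the postmortem, and minutes to the first credible narrative. The field names and sample cases are hypothetical. The mean time to rollback decision target is left out to keep the example short.

```python
from statistics import median


def top_k_hit(ranked_causes: list[str], true_cause: str, k: int) -> bool:
    """True if the labeled cause appears among the first k proposals."""
    return true_cause in ranked_causes[:k]


def scorecard(cases: list[dict]) -> dict:
    """Score labeled cases against the example targets: top 1 >= 60%, top 3 >= 80%, median < 10 min."""
    n = len(cases)
    top1 = sum(top_k_hit(c["ranked"], c["truth"], 1) for c in cases) / n
    top3 = sum(top_k_hit(c["ranked"], c["truth"], 3) for c in cases) / n
    med = median(c["minutes_to_narrative"] for c in cases)
    return {
        "top1_accuracy": top1,
        "top3_accuracy": top3,
        "median_minutes_to_narrative": med,
        "meets_targets": top1 >= 0.60 and top3 >= 0.80 and med < 10,
    }


# Invented backtest cases for illustration only.
cases = [
    {"ranked": ["carrier_outage", "price_change", "deploy"], "truth": "carrier_outage", "minutes_to_narrative": 6},
    {"ranked": ["deploy", "promo_end", "holiday"], "truth": "promo_end", "minutes_to_narrative": 9},
    {"ranked": ["price_change", "deploy", "holiday"], "truth": "price_change", "minutes_to_narrative": 4},
    {"ranked": ["holiday", "deploy", "promo_end"], "truth": "tracking_bug", "minutes_to_narrative": 14},
    {"ranked": ["deploy", "holiday", "price_change"], "truth": "deploy", "minutes_to_narrative": 8},
]
print(scorecard(cases))
```

With these five cases, three are correct at top 1 and four at top 3, so the sample run sits exactly on the example targets.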

3) Human in the loop and rollback

Autonomy without brakes is not useful in an enterprise. Design progressive control so people supervise what matters and automation handles the rest.

- Triage queues with confidence thresholds. Route only high confidence, high impact anomalies to auto actions. Send medium confidence items to analysts with proposed steps and prewritten queries. Archive low confidence noise and add it to the learning set.

- Safe actions and rollbacks. Define what the agent may do unassisted. Examples include pausing a campaign segment, lowering a bid cap by 10 percent, or flipping a feature flag to off. Every action needs an automatic rollback that triggers on predeclared thresholds or a manual veto.

- Two phase commit for risky moves. Let the agent prepare a change and a rollback plan, then request human approval within a time window. If approval does not arrive in time, discard the prepared change so that nothing is applied by default.

- Audit trails and reproducibility. Capture every alert, hypothesis, query, and chart as a case file with a permanent link. Rerunning the case on new data should produce the same narrative except for updated numbers.

- Feedback that actually learns. One-click labels such as Correct cause, Partial cause, and Wrong should feed back into ranking models and retrieval. Close the loop weekly during model updates.
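The triage queue rule from the list above is simple enough to write down as a routing function. This is a sketch, not a prescribed policy: the threshold values, the `Anomaly` fields, and the queue names are assumptions chosen for illustration. The text does not say where high confidence but low impact items go, so this example sends them to analyst review.

```python
from dataclasses import dataclass


@dataclass
class Anomaly:
    metric: str
    confidence: float       # 0.0 to 1.0, as scored by the agent
    revenue_at_risk: float  # estimated dollars exposed


# Hypothetical thresholds; tune these against your own backtests.
HIGH_CONFIDENCE = 0.9
MEDIUM_CONFIDENCE = 0.6
HIGH_IMPACT_DOLLARS = 10_000


def route(anomaly: Anomaly) -> str:
    """Pick a queue: auto action, analyst review, or archive for the learning set."""
    if anomaly.confidence >= HIGH_CONFIDENCE and anomaly.revenue_at_risk >= HIGH_IMPACT_DOLLARS:
        return "auto_action"
    if anomaly.confidence >= MEDIUM_CONFIDENCE:
        # Assumption: high confidence but low impact items also land here.
        return "analyst_review"
    return "archive_for_learning"


print(route(Anomaly("checkout_conversion", confidence=0.95, revenue_at_risk=50_000)))  # auto_action
print(route(Anomaly("newsletter_clicks", confidence=0.70, revenue_at_risk=300)))       # analyst_review
print(route(Anomaly("page_views", confidence=0.20, revenue_at_risk=50)))               # archive_for_learning
```

Keeping the thresholds as named constants makes them easy to audit and to adjust after each weekly scorecard review.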

4) Cost to serve that finance can trust

Agentic BI is a service with real costs. Data movement, storage, compute for detection and diagnosis, and model inference all add up. Design for cost clarity from day one.

- Budget by metric and by team. Assign a monthly budget per thousand metrics watched. For example, 10 dollars per thousand metrics for baseline checks and 40 dollars per thousand for diagnosis bursts. This prevents unbounded metric sprawl.

- Load shedding policies. During heavy incident windows, limit deep diagnosis to the most material metrics by revenue exposure. Define a maximum of N concurrent deep dives and a queue policy. This avoids spend spikes and keeps response from degrading for everything else.

- Token and compute optimization. Cache recent slices, collapse duplicative queries, and throttle speculative hypotheses. Aim for the first accurate explanation, not the fiftieth variant.

- Benchmark the fully loaded cost. Include storage, stream processing, feature stores, inference, and analyst time for reviews. Target a ratio such as one hour of human review per ten incidents with auto rollback and two hours per complex incident, then refine.
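The load shedding policy above can be sketched as a ranking plus a cap. The function name, the request format, and the sample metrics are hypothetical. The sketch assumes each deep dive request carries an estimate of revenue exposure and that the queue is served in the same order.

```python
def plan_deep_dives(
    requests: list[tuple[str, float]], max_concurrent: int
) -> tuple[list[str], list[str]]:
    """Split requests into deep dives that run now and an ordered waiting queue.

    Each request is (metric_name, revenue_exposure_in_dollars). The most exposed
    metrics run first; everything beyond the cap waits in exposure order.
    """
    ranked = sorted(requests, key=lambda r: r[1], reverse=True)
    run_now = [metric for metric, _ in ranked[:max_concurrent]]
    queued = [metric for metric, _ in ranked[max_concurrent:]]
    return run_now, queued


# Invented incident window with four competing diagnosis requests.
requests = [
    ("newsletter_clicks", 400.0),
    ("checkout_conversion", 120_000.0),
    ("ad_spend_efficiency", 45_000.0),
    ("support_backlog", 8_000.0),
]
run_now, queued = plan_deep_dives(requests, max_concurrent=2)
print(run_now)  # ['checkout_conversion', 'ad_spend_efficiency']
print(queued)   # ['support_backlog', 'newsletter_clicks']
```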

A simple estimate helps planning. Suppose you monitor 50,000 metrics with a 1 percent daily anomaly rate and an average of two diagnosis runs per anomaly. If a deep run consumes 0.25 dollars of compute and model spend on average, daily diagnosis spend lands near 250 dollars, or roughly 7,500 dollars per 30 day month. Add 2,500 dollars for stream and warehouse overhead and you get a round 10,000 dollars monthly. If the system prevents a single day of misallocated advertising or an overnight checkout issue, the payback is immediate. Your numbers will vary, but the framing should be this explicit.
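The estimate above is easy to keep as a small function so finance can change the inputs and see the result. The function and parameter names are illustrative. `Decimal` is used so the dollar figures stay exact.

```python
from decimal import Decimal


def estimate_monthly_cost(
    metrics_watched: int,
    daily_anomaly_rate: Decimal,
    runs_per_anomaly: int,
    cost_per_run: Decimal,
    fixed_overhead: Decimal,
    days: int = 30,
) -> dict:
    """Reproduce the back-of-envelope diagnosis cost model from the text."""
    daily_runs = metrics_watched * daily_anomaly_rate * runs_per_anomaly
    daily_diagnosis = daily_runs * cost_per_run
    monthly_diagnosis = daily_diagnosis * days
    return {
        "daily_runs": daily_runs,
        "daily_diagnosis": daily_diagnosis,
        "monthly_diagnosis": monthly_diagnosis,
        "monthly_total": monthly_diagnosis + fixed_overhead,
    }


estimate = estimate_monthly_cost(
    metrics_watched=50_000,
    daily_anomaly_rate=Decimal("0.01"),  # 1 percent of metrics flag each day
    runs_per_anomaly=2,
    cost_per_run=Decimal("0.25"),        # dollars of compute and model spend
    fixed_overhead=Decimal("2500"),      # stream and warehouse overhead per month
)
```

With these inputs the function gives 1,000 deep runs per day, 250 dollars per day, 7,500 dollars per month for diagnosis, and 10,000 dollars per month in total, matching the figures in the text.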

A smart buying checklist

RFPs for agentic BI should move past feature checklists and force vendors to prove diagnosis quality in your context. Ask vendors to deliver the following before you sign a statement of work.

- A red team backtest. Provide a set of labeled incidents. Request top three explanations with evidence and counterfactual checks. Score on accuracy and time to first credible narrative. Require that they run it in your environment or a privacy safe replica.

- A deployment plan. Look for connectors to your event stream and warehouse, a semantic contract for metrics, and a plan for lineage and business events. Confirm that your metrics registry stays the source of truth.

- Governance and rollback. Request a concrete list of authorized actions and exact rollback triggers. Ask for an audit trail sample from a real customer case, sanitized for privacy.

- Cost controls. Negotiate a fixed fee for watch, a variable fee for diagnosis bursts with caps, and a switch to pause deeper hunts during budget freezes.

Pilot where business impact is easy to measure. A single domain such as paid growth, supply chain health, or billing risk is enough to generate a strong signal. Measure revenue exposure, false alarm fatigue, and time saved by analysts over four weeks. Expand only if backtests and pilot results both meet the bar.

A minimal build blueprint

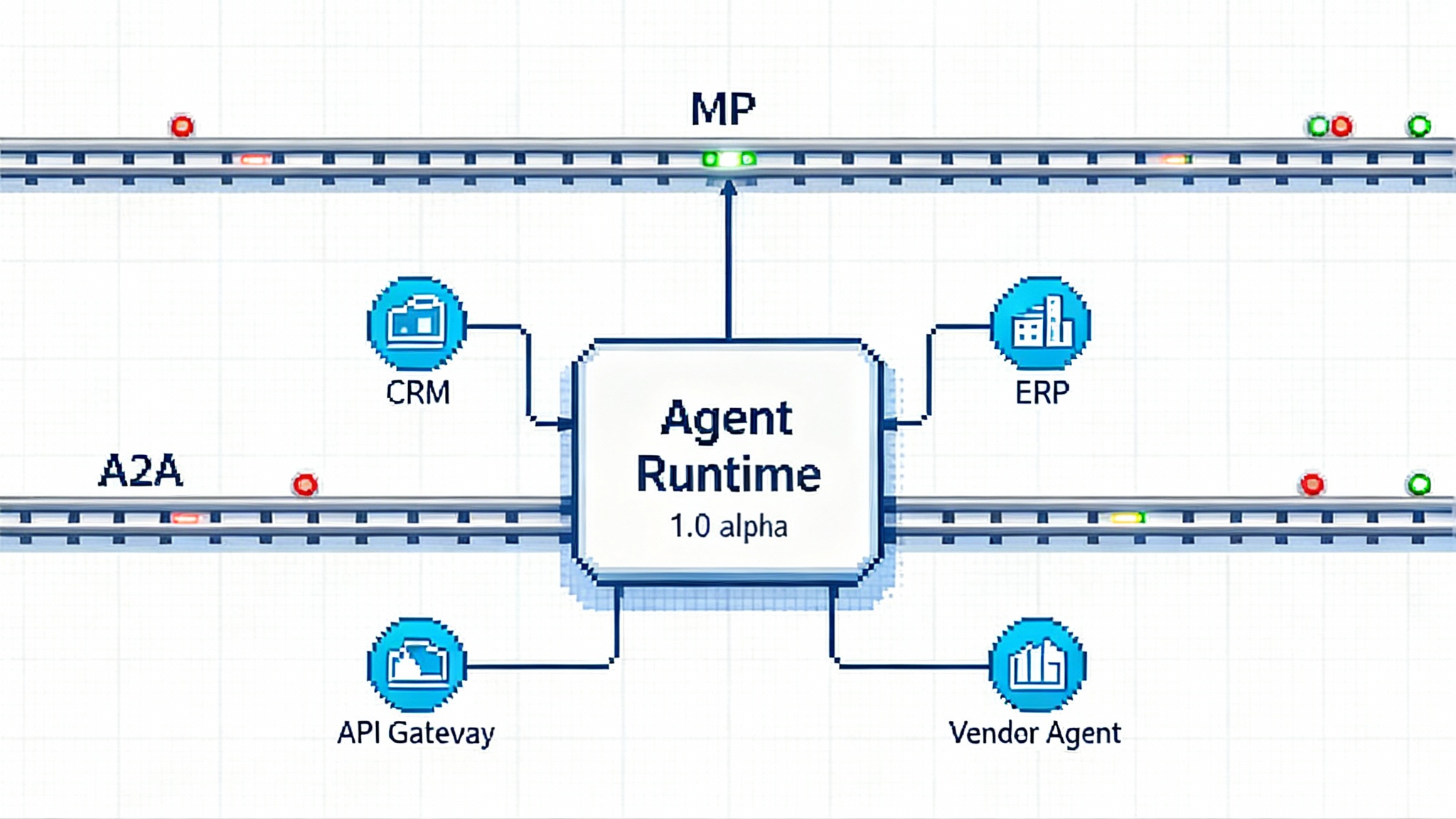

Not every company should build, but some should. If you have unique signals, strict data residency, or very specific actions that are hard to expose to a vendor, building a targeted agent can make sense. Here is a minimal viable architecture that keeps focus on diagnosis rather than yet another dashboard factory.

- Data layer. Stream ingest for key events, warehouse for history, and a feature store for derived aggregates used in detection and diagnosis.

- Detection. Seasonal decomposition or Bayesian change point detection at metric granularity, with impact scoring by revenue or customer harm.

- Root cause engine. A hybrid of top down contribution analysis and bottom up search across dimensions. Pull in business events and deploy logs for causal hypotheses. Use retrieval augmented generation for narratives and to propose verification steps.

- Orchestration. A job scheduler for periodic scans and event driven pipelines for bursts. Treat diagnosis windows like incidents with defined priorities.

- Human loop. Review queues, confirmation steps, and rollback automation wired into the actions your teams already use.

- Evaluation. Synthetic injections, backtests, and weekly scorecards that executives can read without translation.

- Security and compliance. Principle of least privilege for queries, strict secrets management, and a kill switch for the agent.
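The top down half of the root cause engine can be illustrated with a basic contribution analysis. This sketch assumes an additive metric such as order counts, split along one dimension, and ranks segments by their share of the total change. The function name and the sample data are hypothetical. Bottom up search across several dimensions and narrative generation are out of scope here.

```python
def contribution_by_segment(
    before: dict[str, float], after: dict[str, float]
) -> list[tuple[str, float, float]]:
    """Rank segments of an additive metric by how much of the total change they explain.

    Returns (segment, absolute_change, share_of_total_change) tuples, largest
    absolute change first. Returns an empty list when the total did not move.
    """
    total_change = sum(after.values()) - sum(before.values())
    if total_change == 0:
        return []
    rows = []
    for segment in sorted(set(before) | set(after)):
        delta = after.get(segment, 0) - before.get(segment, 0)
        rows.append((segment, delta, delta / total_change))
    rows.sort(key=lambda row: abs(row[1]), reverse=True)
    return rows


# Invented daily order counts by state, before and after a dip.
before = {"TX": 1000, "CA": 2000, "NY": 1500, "FL": 500}
after = {"TX": 700, "CA": 1990, "NY": 1500, "FL": 510}

for segment, delta, share in contribution_by_segment(before, after):
    print(f"{segment}: change {delta:+}, share of total change {share:.0%}")
```

In this example the total falls by 300 orders and one state accounts for all of it, which is the kind of evidence a case file should cite before proposing a cause.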

As you build, borrow proven patterns from the broader agent ecosystem. Suites that centralize context can speed onboarding. See how treating suites as the fastest on-ramp shapes adoption curves. Decision planning also matters. Agents that translate findings into ordered steps outperform chatterboxes, which is why products that move from queries to decision plans tend to win trust in operations.

Operating model shifts by mid 2026

Expect four visible shifts if you implement proactive diagnosis well.

- Daily standups include agent authored cases. Teams review a ranked list of three to five explanations per domain. The habit sticks because the cases cite evidence, quantify impact, and link to reversible actions.

- The line between data observability and BI blurs. Pipeline health checks and business metric triage share inputs and tooling. Deploy logs, campaign calendars, and holiday data sit next to metric timelines inside the same case file.

- Incident response borrows from site reliability engineering. You define service level objectives for explanations, such as time to credible narrative and accuracy at top three. Incident commanders learn when to accept auto rollback and when to escalate for human review.

- Compliance and finance become first class stakeholders. Audit trails and cost caps stop being optional. The agent gets a cost of goods line and a quarterly review like any other service. Security leaders will also insist on agent native security for enterprise patterns so actions remain controlled and traceable.

What startups should ship next

The analytics and agent market is crowded with claims. Teams that ship concrete pieces in the next two quarters will stand out.

- A metrics and events contract. Offer a clean spec for metrics, dimensions, and business events, plus adapters for major streams and warehouses. Make it a one afternoon connection.

- An RCA evaluation kit. Publish a downloadable harness with sample incident windows, a scorer for top k accuracy and time to narrative, and a notebook to reproduce results. Share your median scores on a public benchmark dataset.

- A safe actions catalog. Provide low risk actions with automatic rollbacks and evidence links. Make it easy to add a new action with a single configuration page.

- A case file experience. Deliver a narrative that includes the query, the chart, the evidence log, and the rollback plan. One link per case should be enough context for Slack or email.

- Cost transparency. Provide a usage panel that shows spend drivers by domain and by team. Allow customers to cap diagnosis burst costs and schedule heavy analysis for off peak hours.

Win by making the job to be done obvious. The market has read enough prose about copilots for data. It wants an attending who explains the patient, orders the lab, and calls for a revert when needed.

The bottom line

WisdomAI’s Proactive Agents did not invent monitoring or anomaly detection, but the packaging and timing matter. The launch signals that always on watching, triage, and explanation are no longer differentiators. They are the minimum to be taken seriously. Teams that adopt an event plus warehouse spine, insist on measurable diagnosis accuracy, put humans in the right loop with rollbacks, and manage costs like pros will operate with fewer surprises and faster recovery.

Whether you buy or build, remember the core shift. The value is not another dashboard. The value is a credible why, a clear what next, and a safe way back when things go wrong. Treat diagnosis as a product, not a slide, and you will feel the difference by 2026.