Aidnn ushers in data-plane agents that finally do work

Aidnn signals a shift from chatty BI bots to data-infrastructure-native agents that plan, govern, and execute real work in your stack. Learn how data-plane agents turn insights into actions with audit-ready safety.

The day the data plane learned to act

On September 5, 2025, Isotopes AI introduced Aidnn, an enterprise agent designed to operate like an analyst and a data engineer working in concert. The announcement, covered in a detailed TechCrunch launch report on Aidnn, marked a practical turning point. The era of chat-only dashboards is giving way to data-infrastructure-native agents that plan multi-step work and execute it inside your stack. Aidnn’s site reinforces the posture with a clear emphasis on connectors, planning, and governance in your environment, not theirs. See the Aidnn product overview and connectors for the vendor’s positioning.

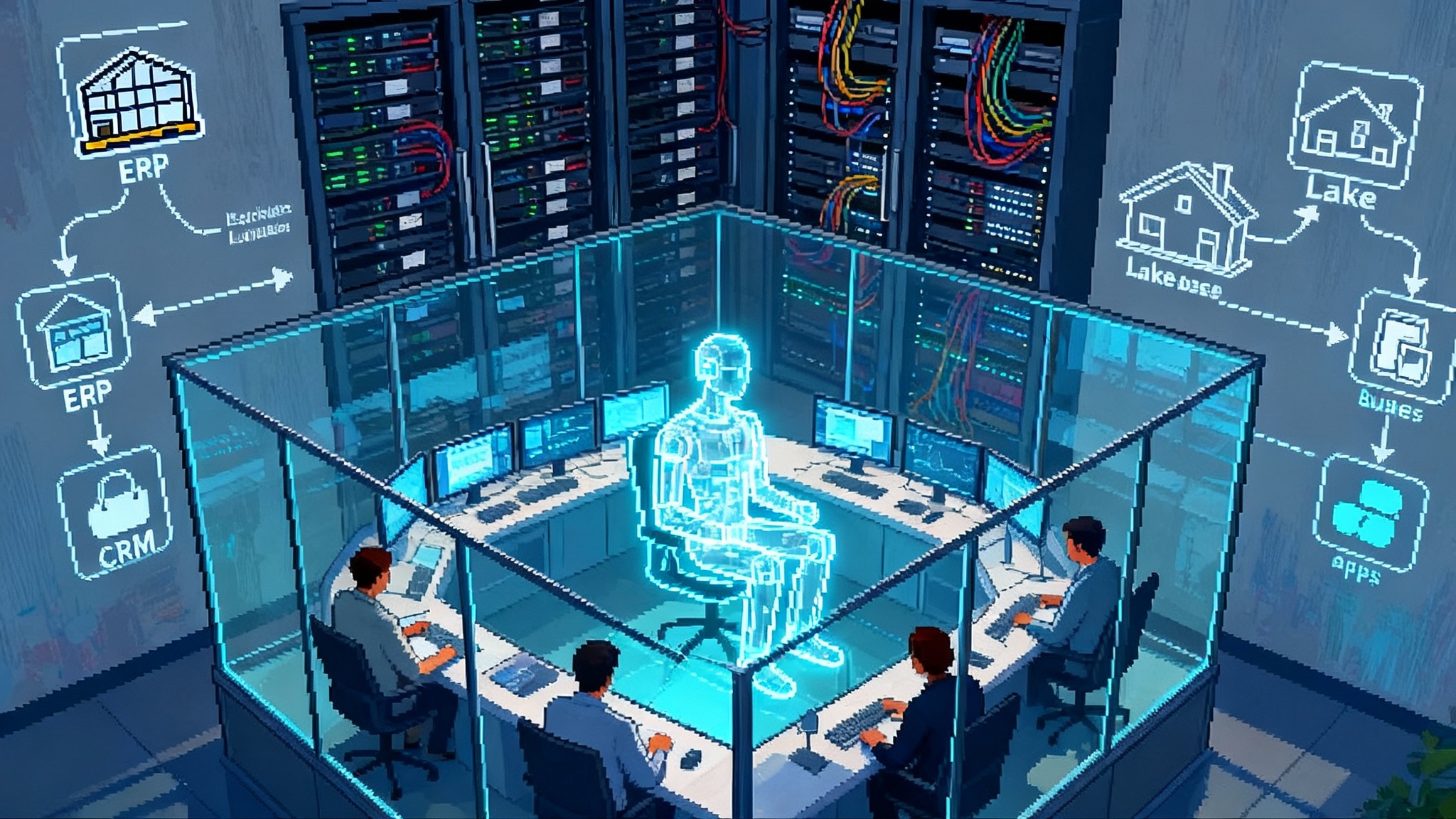

If you felt something shift, you are not imagining it. For a decade, teams tried to talk to data through chat layers on top of dashboards. Most could translate a question into a query, then render a chart. Very few handled the messy work where real analysis lives: reconciling schema drift, normalizing inputs from ERP and CRM systems, annotating lineage, or invoking downstream tools to act on results. Aidnn’s debut signals that agents are moving down into the data plane itself, where identity, policy, and workload management are first-class.

From BI chat to data-plane agents

Think of a BI chatbot as a helpful tour guide in a museum. It can point to paintings, answer questions, and zoom in on a detail. A data-plane agent is more like a curator and a conservator working together. It knows the collection, the storerooms, the humidity settings, and the shipping manifests. It can retrieve works from storage, restore a damaged canvas, decide which pieces go to which exhibition, and coordinate logistics. The difference is not tone. The difference is agency.

This shift builds on the same momentum we have seen in proactive analytic assistants. If you followed the recent move toward proactive BI agents, this is the next logical step. Instead of whispering suggestions into a dashboard, the agent operates where the data lives and where actions are authorized.

Three traits that separate data-plane agents

- They sit over systems of record. Instead of relying on pre-modeled extracts or curated semantic layers, data-plane agents connect to the actual sources where work happens. That includes ERP, CRM, billing, data warehouses, and lakehouses. They prefer gold datasets when available and can fall back to trusted upstreams when needed.

- They plan work, not just queries. A data-plane agent breaks a business request into concrete steps: discover relevant tables, inspect schemas, verify lineage, join across sources, reconcile units and currency, aggregate, materialize an output, and trigger actions when authorized. The plan is explicit and reviewable.

- They act under governance. Because they operate in the data plane, these agents inherit identity, authentication, and authorization models. Row-level rules, masked columns, and audit trails become native features instead of bolt-ons.
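To make "explicit and reviewable" concrete, here is a minimal sketch of a plan as structured data rather than free text. The `PlanStep` and `Plan` classes and the table names are hypothetical illustrations, not Aidnn's format. The point is that a plan which knows which steps write can be rendered for a reviewer and gated before anything runs.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PlanStep:
    """One explicit step in an agent plan (hypothetical schema)."""
    action: str           # e.g. "inspect_schema", "join", "materialize"
    target: str           # table or tool the step touches
    rationale: str        # why the agent chose this step, for the reviewer
    writes: bool = False  # True if the step changes state


@dataclass
class Plan:
    request: str
    steps: List[PlanStep] = field(default_factory=list)

    def requires_authorization(self) -> bool:
        # A single write step means read permissions alone are not enough.
        return any(step.writes for step in self.steps)

    def render(self) -> str:
        # Numbered, human-readable summary that a reviewer can approve or reject.
        lines = [f"Plan for: {self.request}"]
        for i, step in enumerate(self.steps, start=1):
            flag = " [WRITE]" if step.writes else ""
            lines.append(f"{i}. {step.action} {step.target}{flag}: {step.rationale}")
        return "\n".join(lines)


plan = Plan(
    request="Monthly revenue by region",
    steps=[
        PlanStep("inspect_schema", "finance.invoices", "confirm amount and currency columns"),
        PlanStep("join", "crm.accounts", "map invoices to sales regions"),
        PlanStep("materialize", "analytics.revenue_by_region", "publish the result", writes=True),
    ],
)
print(plan.render())
```

Because the write is flagged on the step itself, the approval gate does not have to parse prose to discover side effects.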

What Aidnn signals about the agent pattern

Aidnn’s early materials emphasize that it plugs into existing systems, generates multi-step analysis plans, and executes without sending data outside company walls. The company positions the product as a coworker that can explore, explain, and act across finance, sales, and operations. Two points matter here. First, the tool collapses the gap between the person who knows the metric and the person who can run the pipeline that produces it. Second, it treats governance as a native constraint rather than a compliance afterthought. That combination is what turns a chatbot into a coworker.

Anatomy of a data-infrastructure-native agent

Use this checklist to distinguish a data-plane agent from a BI chatbot. Treat it as a procurement and architecture guide.

- Identity in, identity through. The agent should accept a user or service identity, propagate it to every data source, and prove that reads and writes occurred under that identity. If your warehouse enforces row-level policies for a user, the agent should inherit those rules without custom code.

- Lineage-aware retrieval. Retrieval should consult your catalog and lineage graph before touching data. That means preferring gold tables over ad hoc extracts, understanding upstream freshness, and warning when a column used in a metric was renamed or deprecated.

- Schema introspection, not guessing. The agent must inspect schemas and statistics in situ, walk foreign keys, and sample rows to validate assumptions before it plans any join. If the warehouse provides histograms or profiles, the agent should use them to reduce cost and error.

- Governed tool use. Query engines, transformation frameworks, orchestration, and operational endpoints should be invoked under explicit policies. The agent should present a planned tool chain, including cost and cardinality estimates, then require either an approval or a policy match before execution.

- Pushdown by default. Computation should be pushed down to the engines that own the data whenever possible. Pull data out only when necessary, such as federating across systems that cannot join remotely or applying a model that does not exist in the warehouse.

- Explainable planning. Every run should produce a plan artifact that explains what was read, why it was read, how it was transformed, and where the outputs went. Treat this as a living brief, not a black-box transcript.

- Idempotence and rollback. If the agent writes to operational systems, every write should be idempotent and reversible. That includes staging writes, diffing changes, and preserving a path for manual intervention.
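The governed tool use item amounts to a pre-flight gate: the call runs only if it is allowlisted and either fits within policy limits or carries an explicit approval. This is a minimal sketch under stated assumptions. The `ToolCall` and `ToolPolicy` shapes, the tool names, and the limits are hypothetical, and the estimates are assumed to come from the planner.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional, Tuple


@dataclass(frozen=True)
class ToolCall:
    tool: str                  # e.g. "warehouse.query" (hypothetical tool name)
    identity: str              # identity the call runs under, kept for the audit log
    estimated_rows: int        # cardinality estimate from the planner
    estimated_cost_usd: float  # cost estimate from the planner


@dataclass(frozen=True)
class ToolPolicy:
    allowed_tools: FrozenSet[str]
    max_rows: int
    max_cost_usd: float


def preflight(call: ToolCall, policy: ToolPolicy,
              approved_by: Optional[str] = None) -> Tuple[bool, str]:
    """Allow a call only on a policy match or an explicit human approval."""
    # The allowlist is a hard boundary: an approval cannot add a tool.
    if call.tool not in policy.allowed_tools:
        return False, f"{call.tool} is not on the allowlist"
    within_limits = (call.estimated_rows <= policy.max_rows
                     and call.estimated_cost_usd <= policy.max_cost_usd)
    if within_limits:
        return True, "policy match"
    if approved_by:
        return True, f"approved by {approved_by}"
    return False, "estimates exceed policy limits; approval required"
```

For example, with `ToolPolicy(frozenset({"warehouse.query"}), max_rows=1_000_000, max_cost_usd=5.0)`, a 20,000-row query passes as a policy match. A 50-million-row scan is held until someone approves it. Treating the allowlist as non-overridable is a design choice: it keeps new tools a policy change, not a one-off approval.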

A concrete example: MRR, the hard way, done the right way

Monthly recurring revenue sounds simple until you compute it across billing systems, discounts, mid-cycle upgrades, credit memos, and multi-entity books. A classic BI chatbot can query a revenue table. It will not construct what is often missing: a derived time series that correctly prorates partial months and aligns subscription events from CRM with invoice events from accounting.
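Proration is the piece most often gotten wrong. A minimal sketch follows, assuming a daily proration convention: a price is earned in proportion to active days over the days in that calendar month. Real contracts may use other conventions, such as a fixed 30-day month or second-level precision. The function name is hypothetical.

```python
import calendar
from datetime import date


def prorated_amount(monthly_price: float, start: date, end: date) -> float:
    """Share of a monthly price earned for days start..end (inclusive) in one month.

    Assumes daily proration against the actual length of the calendar month.
    """
    if (start.year, start.month) != (end.year, end.month):
        raise ValueError("start and end must fall in the same calendar month")
    if end < start:
        raise ValueError("end must not precede start")
    days_in_month = calendar.monthrange(start.year, start.month)[1]
    active_days = (end - start).days + 1  # inclusive of both endpoints
    return monthly_price * active_days / days_in_month
```

Consider a customer who upgrades from a $100 plan to a $200 plan on June 16. Under this convention, the customer contributes $50 for June 1 to 15 plus $100 for June 16 to 30, or $150 for the month. Splitting each subscription interval at month boundaries before calling the function keeps the same-month precondition true.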

A data-plane agent can do the following sequence end to end:

- Discover that invoice-level revenue lives in the accounting system, while subscription state lives in the CRM. It locates both, inspects their schemas, and checks lineage to avoid stale extracts.

- Build a join plan that maps subscription identifiers to invoice line items, applies product catalog metadata, and inserts a proration function for mid-month changes.

- Execute the plan inside the warehouse, using a materialization strategy that balances freshness and cost.

- Expose a result set under the same row-level policies that protect the underlying tables.

- Trigger a briefing for the finance leader and, if authorized, create variance tasks for account owners when churn risk spikes.

That sequence is the difference between an answer and an outcome. It turns a metric into a workflow with reversible actions and a clear audit trail.
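"Reversible actions" can be made concrete with a small ledger that shows a diff before writing, applies each action at most once, and records enough state to undo it. This is a sketch with a hypothetical in-memory `ActionLedger` standing in for a real system such as a task tracker. A production adapter would call that system's API and persist the ledger.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Optional, Tuple

Record = Dict[str, Any]


@dataclass
class ActionLedger:
    """In-memory stand-in for a business system plus a ledger of executed actions."""
    records: Dict[str, Record] = field(default_factory=dict)
    # action_id -> (record key, record value before the action, or None if it was new)
    applied: Dict[str, Tuple[str, Optional[Record]]] = field(default_factory=dict)

    def diff(self, key: str, changes: Record) -> Dict[str, Tuple[Any, Any]]:
        """Proposed change as {field: (old, new)}, shown to the approver before writing."""
        current = self.records.get(key, {})
        return {f: (current.get(f), v) for f, v in changes.items() if current.get(f) != v}

    def apply(self, action_id: str, key: str, changes: Record) -> bool:
        """Apply once per action_id. Replays are no-ops, so retries are safe."""
        if action_id in self.applied:
            return False
        prior = self.records.get(key)
        self.applied[action_id] = (key, None if prior is None else dict(prior))
        self.records[key] = {**(prior or {}), **changes}
        return True

    def rollback(self, action_id: str) -> None:
        """Restore the prior value. Roll back in reverse order when actions share a key."""
        key, prior = self.applied.pop(action_id)
        if prior is None:
            self.records.pop(key, None)
        else:
            self.records[key] = prior
```

Keying idempotence on an action identifier from the plan, rather than on the payload, lets a retried run recognize work it already did.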

Why now: the stack is finally ready

Timing matters. Warehouses and lakehouses have matured into operational cores, complete with governance primitives, query acceleration, and workload management. Catalogs have made lineage reliable enough for agents to trust. Large language models have improved at translating natural language into data operations, and tool-use techniques now support multi-step planning with a human in the loop. Teams that invested in these foundations can now move faster without lowering their bar for compliance or cost control.

We have also seen the broader market shift toward agentic stacks that respect enterprise boundaries. Suites are adding entry ramps and control layers, as we explored in our coverage of agent control towers. The common thread is simple. Winning systems live inside your guardrails and prove it with artifacts, not promises.

What this unlocks for teams building do-work agents

For founders and product leaders, the move to data-infrastructure-native agents unlocks capabilities that used to require tickets and brittle pipelines.

- Governed tool use by design. Treat every tool invocation as a policy decision. Define allowlists for connectors and functions, and require pre-flight checks that show expected read volumes, estimated cost, and the exact permissions that will be used. Security reviews become mechanical, not ad hoc.

- Schema introspection as a first step. Before any query or transformation, run a schema survey. Capture column types, null percentages, primary key uniqueness, foreign key coverage, and value distributions. Cache that profile as part of the agent's context so it stops guessing and starts knowing.

- Lineage-aware retrieval as guardrails. Always retrieve from gold or certified datasets when available. If the user asks for a metric that exists in your catalog, resolve to the canonical definition rather than synthesizing a new one. When a model upstream is late, the agent should surface the freshness gap before running anything.

- Compliance by default. By operating within the existing identity and authorization framework, the agent inherits audit logs and access controls. Extend that with signed plan artifacts, row-level access proofs, and write diffs that can be reviewed and reversed. This is useful for Sarbanes-Oxley audits, vendor assessments, and internal change management.

- Human-in-the-loop that actually helps. Replace ambiguous chat confirmations with structured plan approvals. Present a compact plan that lists sources, join keys, filters, expected row counts, materialization strategy, and proposed actions. Allow the approver to tweak parameters, then record the decision.

- Evaluation you can believe. Build a test harness that validates both semantic correctness and governance adherence. For example, verify that a user without access to a restricted segment receives masked results, and confirm that a proration function matches a reference implementation.
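The schema survey described above can be sketched in a few functions. This version assumes the column values are already in memory, which keeps it self-contained. Against a real warehouse the same statistics would be pushed down as SQL aggregates. Function names are hypothetical.

```python
from collections import Counter
from typing import Any, Dict, Iterable, List


def profile_column(values: List[Any]) -> Dict[str, Any]:
    """Summarize one column: observed types, null share, distinctness, top values."""
    total = len(values)
    non_null = [v for v in values if v is not None]
    distinct = len(set(non_null))
    return {
        "types": sorted({type(v).__name__ for v in non_null}),
        "null_pct": 100.0 * (total - len(non_null)) / total if total else 0.0,
        "distinct": distinct,
        # Primary-key candidate: no nulls and no duplicates.
        "is_unique": total > 0 and len(non_null) == total and distinct == total,
        "top_values": Counter(non_null).most_common(3),
    }


def fk_coverage(child_keys: Iterable[Any], parent_keys: Iterable[Any]) -> float:
    """Share of non-null child keys that match a parent key (1.0 means a clean join)."""
    child = [k for k in child_keys if k is not None]
    if not child:
        return 1.0
    parents = set(parent_keys)
    return sum(k in parents for k in child) / len(child)
```

A foreign-key coverage well below 1.0 is exactly the kind of fact the agent should surface before planning an inner join that would silently drop rows.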

These principles align with the broader trend toward database-native agents. If that direction interests you, consider how the database-native agent era changes your connector and planning choices.

Build versus buy: a pragmatic checklist

Use this scoring guide to decide whether to embed Aidnn-like capabilities or assemble your own retrieval-first agent stack. Score each item from 1 to 5 and note the gaps.

- Connectors inside your walls
  - Do you have production-grade connectors to ERP, CRM, billing, warehouse, and lakehouse systems that support service and user identities, incremental syncs, and schema change handling?
  - Action if building: Choose a connector framework with typed schemas, schema evolution hooks, and centralized secrets with rotation. Implement a dry-run mode that inspects permissions before any call.

- Identity propagation
  - Can your agent receive a just-in-time user identity and impersonate it across sources, including row-level policy enforcement and column masking?
  - Action if building: Standardize on single sign-on and short-lived tokens. Use per-tool service accounts only when necessary and log impersonation claims.

- Catalog and lineage
  - Do you have a catalog that marks datasets as gold, silver, or experimental, plus a lineage graph that your agent can query?
  - Action if building: Integrate a catalog that exposes an API for search and lineage traversal. Teach your agent to resolve metrics from the catalog before generating new ones.

- Planning engine
  - Can the agent produce a human-readable plan that includes source selection, join graphs, cost estimates, and a materialization strategy?
  - Action if building: Start with a structured plan schema. Add explain steps that annotate why each source was chosen, and bind expected row counts to prevent runaway scans.

- Execution inside the warehouse
  - Can the agent push compute down to the engines that own the data and choose between ephemeral, incremental, or full-refresh materializations?
  - Action if building: Wrap execution with idempotent staging tables. Only publish to production schemas on success.

- Write-back and operational actions
  - Can your agent post results back into business systems, spin up a brief, open tickets, or trigger alerts under explicit scopes?
  - Action if building: Create per-system adapters that support dry runs, bulk operations, and fine-grained scoping. Maintain a ledger of proposed versus executed actions with diffs.

- Governance and audit
  - Do you have audit logs that tie every read and write to an identity, including the agent's plan identifier and version?
  - Action if building: Emit a signed plan and bind it to log events. Store redacted copies for user review and full copies for audit.

- Evaluation and safety
  - Do you test outputs for correctness, performance, and policy adherence before general availability?
  - Action if building: Create golden tasks with known answers and access patterns. Run shadow mode where the agent plans and evaluates without executing writes.
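"Emit a signed plan and bind it to log events" can be sketched with the standard library. This sketch assumes a symmetric HMAC key for brevity. A production system would more likely use asymmetric signatures or a managed key service, and the demo key below is for illustration only. The plan identifier here is a content hash of the canonical JSON, so the same plan always gets the same id. The function names and event fields are hypothetical.

```python
import hashlib
import hmac
import json
from typing import Any, Dict


def _canonical(plan: Dict[str, Any]) -> bytes:
    # Sorted keys and fixed separators give a stable byte form to hash and sign.
    return json.dumps(plan, sort_keys=True, separators=(",", ":")).encode()


def sign_plan(plan: Dict[str, Any], key: bytes) -> Dict[str, Any]:
    canonical = _canonical(plan)
    return {
        "plan": plan,
        "plan_id": hashlib.sha256(canonical).hexdigest()[:16],  # content-derived id
        "signature": hmac.new(key, canonical, hashlib.sha256).hexdigest(),
    }


def verify_plan(signed: Dict[str, Any], key: bytes) -> bool:
    expected = hmac.new(key, _canonical(signed["plan"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, signed["signature"])  # constant-time compare


def log_event(signed: Dict[str, Any], identity: str, action: str, target: str) -> Dict[str, Any]:
    # Every read or write event carries the identity plus the plan id and version.
    return {
        "plan_id": signed["plan_id"],
        "plan_version": signed["plan"].get("version"),
        "identity": identity,
        "action": action,
        "target": target,
    }
```

Any edit to the plan after signing, even a version bump, fails verification. That failure is what lets an auditor trust that the logged events belong to the approved plan.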

Scoring guidance: if you score below 20 across the first five items (connectors through execution inside the warehouse), buying is likely faster and safer. If you score above 28 across all eight items, with clear owners for identity, catalog, and execution, building is viable. Either way, insist on the same plan artifacts, auditability, and rollback guarantees.
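The scoring guidance can be encoded so teams apply it consistently. This sketch reflects one reading of the thresholds. It assumes the 20-point cutoff applies to the first five items, because those five alone cannot exceed 25. It also assumes the 28-point cutoff applies to the total across all eight items. The item keys are hypothetical shorthand for the checklist headings, and "gaps" are taken to be items scored 2 or lower.

```python
from typing import Dict, List, Tuple

ITEMS = [
    "connectors", "identity", "catalog", "planning", "execution",  # first five items
    "write_back", "governance", "evaluation",
]


def build_or_buy(scores: Dict[str, int], owners_assigned: bool) -> Tuple[str, List[str]]:
    """Return a verdict ("buy", "build", or "unclear") and the low-scoring gaps."""
    missing = [item for item in ITEMS if item not in scores]
    if missing:
        raise ValueError(f"missing scores for: {missing}")
    if any(not 1 <= scores[item] <= 5 for item in ITEMS):
        raise ValueError("each score must be between 1 and 5")
    core = sum(scores[item] for item in ITEMS[:5])
    total = sum(scores[item] for item in ITEMS)
    gaps = [item for item in ITEMS if scores[item] <= 2]
    if core < 20:
        return "buy", gaps
    if total > 28 and owners_assigned:
        return "build", gaps
    return "unclear", gaps
```

The "unclear" band is deliberate. Scores between the two thresholds are a signal to close specific gaps before committing either way.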

Embedding Aidnn-like capabilities into a retrieval-first stack

If you choose to build, a simple reference architecture can help you move fast without boxing yourself in.

- Interface layer. Start with a prompt router that classifies intents into analysis, diagnostics, or operations. Keep prompts thin. Push structured context from your catalog and schema survey instead of dumping raw tables into context windows.

- Retrieval and metadata. Use your catalog and lineage graph first. Resolve metric definitions and gold sources, then construct a candidate join graph. Only then consult embeddings for disambiguation of column names or free-text labels. Avoid treating a vector store as a primary data source when metrics already exist.

- Planner. Train or fine-tune a planner on plan schemas, not free-form text. Reward the model for producing costed, explainable plans. Penalize silent column renames, unbounded scans, and missing rollback strategies.

- Executor. Implement an execution engine that understands your warehouse dialect, can materialize staged tables, and returns measurable artifacts: rows scanned, bytes read, execution time, and estimated cost.

- Tool governance. Store tool policies centrally. Each invocation should include policy checks and identity proofs. Provide a one-click path to request a policy exception that routes to the data governance team.

- Human in the loop. Build a review screen that shows the plan, the policy checks, and a compact diff of expected impact. Offer a reason panel that traces choices back to lineage and metric definitions.

- Observability. Instrument everything. Plans have identifiers. Runs tie to plans. Logs include identity, plan id, and cost. Dashboards show late data, drift, and escalating costs.
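The retrieval rule "catalog and lineage first" can be sketched as a resolver. It picks the highest-tier, non-deprecated source for a metric and reports a freshness gap instead of silently running. The catalog entry shape and the gold/silver/experimental ranking follow the tiers mentioned in the checklist. Field names, table names, and the staleness threshold are assumptions for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Tuple

TIER_RANK = {"gold": 0, "silver": 1, "experimental": 2}  # lower is preferred


@dataclass(frozen=True)
class CatalogEntry:
    name: str
    metric: str
    tier: str
    last_refreshed: datetime
    deprecated: bool = False


def resolve_source(metric: str, catalog: List[CatalogEntry], max_staleness: timedelta,
                   now: datetime) -> Tuple[CatalogEntry, List[str]]:
    """Prefer the best-tier live source; break ties by freshness; collect warnings."""
    candidates = [e for e in catalog if e.metric == metric and not e.deprecated]
    if not candidates:
        raise LookupError(f"no catalog source for metric {metric!r}")
    best = min(candidates, key=lambda e: (TIER_RANK[e.tier], -e.last_refreshed.timestamp()))
    warnings = []
    if best.tier != "gold":
        warnings.append(f"no gold source; falling back to {best.tier} table {best.name}")
    age = now - best.last_refreshed
    if age > max_staleness:
        warnings.append(f"{best.name} was refreshed {age} ago, beyond the {max_staleness} freshness target")
    return best, warnings


now = datetime(2025, 9, 5, 12, 0, tzinfo=timezone.utc)
catalog = [
    CatalogEntry("finance.mrr_certified", "mrr", "gold", now - timedelta(hours=2)),
    CatalogEntry("sandbox.mrr_scratch", "mrr", "experimental", now - timedelta(minutes=5)),
    CatalogEntry("staging.mrr", "mrr", "silver", now - timedelta(minutes=30)),
]
best, warnings = resolve_source("mrr", catalog, timedelta(hours=6), now)
```

Note that the fresher experimental table loses to the certified one. Tier outranks recency, and staleness becomes a warning for the reviewer rather than a reason to switch sources.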

What to watch next

Several factors will determine which data-plane agents win in the market.

- Real identity, not pretend permissions. Agents that cannot impersonate users end up creating side channels and shadow access patterns. Products that inherit and prove row-level enforcement will win security reviews and reduce onboarding friction.

- Lineage maturity. If the agent cannot trust lineage, it will overfetch, miss dependencies, and produce brittle pipelines. Winners will integrate with strong catalogs or ship reliable metadata systems of their own.

- Growth through action, not answers. The winners will connect insights to actions that matter. That includes writing back to CRM, opening operations tickets, and scheduling refreshed briefs. All of this must be governed and reversible.

- Cost discipline. Data-plane agents that can predict and constrain cost will earn trust. Expect pre-flight cost estimation, workload-aware scheduling, and policy-based guardrails for exploratory scans.
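"Prove row-level enforcement" is ultimately a test. The sketch below simulates a row-level rule and a column mask so the test shape is concrete. In a real deployment the warehouse enforces these policies under the propagated identity, and the test would call the agent rather than this hypothetical `governed_read` function. The region names, the `email` column, and the policy fields are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Any, Dict, FrozenSet, List

Row = Dict[str, Any]
MASKED_COLUMNS = ("email",)  # hypothetical list of PII columns


@dataclass(frozen=True)
class AccessPolicy:
    allowed_regions: FrozenSet[str]  # row-level rule
    can_see_pii: bool                # column-masking rule


def governed_read(rows: List[Row], policy: AccessPolicy) -> List[Row]:
    visible = []
    for row in rows:
        if row["region"] not in policy.allowed_regions:
            continue  # row-level filter: drop rows outside the caller's regions
        out = dict(row)  # copy so source rows are never modified
        if not policy.can_see_pii:
            for col in MASKED_COLUMNS:
                if col in out:
                    out[col] = "***"
        visible.append(out)
    return visible


ROWS = [
    {"account": "A1", "region": "EMEA", "email": "ops@a1.example"},
    {"account": "B2", "region": "AMER", "email": "ops@b2.example"},
]


def test_restricted_user_gets_filtered_masked_rows() -> None:
    result = governed_read(ROWS, AccessPolicy(frozenset({"EMEA"}), can_see_pii=False))
    assert [r["account"] for r in result] == ["A1"]
    assert result[0]["email"] == "***"


def test_privileged_user_sees_everything() -> None:
    result = governed_read(ROWS, AccessPolicy(frozenset({"EMEA", "AMER"}), can_see_pii=True))
    assert result == ROWS
```

Running both tests on every release, against the real agent and warehouse, turns "we respect row-level security" into evidence a security reviewer can rerun.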

As these products scale, control layers that orchestrate and supervise many agents will matter even more. If your organization is heading there, study how agent control towers standardize approvals, policies, and audits across teams.

The bottom line

Aidnn’s debut marks a turning point. It reframes the enterprise agent from a chat overlay to a governed coworker that lives in the data plane. The shift rewards teams that treat identity, lineage, and planning as the backbone of agentic work. Whether you buy or build, hold every product to the same standard: operate inside your stack, plan in the open, respect governance by default, and connect insights to actions with rollbacks at the ready. That is how data stops merely answering and starts doing.