Agents Move Into The Database: RavenDB Sparks a Shift

RavenDB’s new AI Agent Creator puts agents inside the database, not outside. Learn why in-database execution can beat external RAG on security, latency, cost, and governance, plus concrete build patterns you can ship in Q4 2025.

The breakthrough: agents cross the data boundary

On September 8, 2025, RavenDB announced AI Agent Creator, a feature that executes autonomous agents inside the operational database rather than as a bolt-on service. The pitch is simple but consequential: build the agent where the data lives, keep policy and security with the data, and remove the network hops that make many Retrieval-Augmented Generation (RAG) deployments fragile and costly. RavenDB’s release signals a broader turn toward database-native agent runtimes. The company’s positioning emphasizes zero-trust scopes, tool gating, and cost controls enforced at the data tier. For primary details, see RavenDB's AI Agent Creator launch announcement.

Why does this matter now? For two years, external RAG services have carried most enterprise agent pilots. They stitched together embeddings, a vector store, a large language model, and a thin application server. That architecture got prototypes into demos, but it rarely made production easy. Every request left the data perimeter. Policy lived in application code instead of at the data boundary. Latency, egress costs, and cascading failures multiplied with each hop. Database-native execution flips the center of gravity. The agent runs in the same trust and performance envelope as your operational data.

Why in-database beats external RAG add-ons

Think of external RAG as a relay race across several tracks. Each baton pass adds time and risk. In-database agents turn the race into a short sprint on a single track. That shift affects three things that matter in production: security, latency and cost, and governance.

1) Security: zero trust scopes instead of blanket access

External RAG typically grants broad read access to indexes or stores that mirror operational data. Guardrails live in app code, which is hard to audit and easy to drift. In-database execution applies the database’s native security model to the agent.

RavenDB’s approach starts from default deny and forces developers to explicitly define the agent’s tools and the data or operations available to those tools. Instead of handing an assistant the whole keyring, you issue a single-use badge with a list of doors it can open and when. Concretely, this allows:

- Tool scoping by collection, index, or stored procedure, not just by endpoint.

- Per-user delegation, where the agent impersonates the caller and inherits least-privilege rules.

- Auditable action logs alongside normal database audit trails, so the same security team and systems can review both human and agent access.
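
The default-deny idea above can be sketched in a few lines. This is a minimal illustration, not RavenDB's API: `ToolScope`, `AgentPolicy`, `caller_grants`, and the tool and collection names are all hypothetical. It shows a tool scope checked per collection, a caller's own grants limiting what the agent can do on their behalf, and a log entry written for every decision.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ToolScope:
    """Hypothetical scope: collections a tool may read or write."""
    read: frozenset = frozenset()
    write: frozenset = frozenset()


@dataclass
class AgentPolicy:
    """Default deny: anything not listed explicitly is refused."""
    tool_scopes: dict = field(default_factory=dict)    # tool name -> ToolScope
    caller_grants: dict = field(default_factory=dict)  # caller -> collections
    audit_log: list = field(default_factory=list)

    def is_allowed(self, caller, tool, action, collection):
        scope = self.tool_scopes.get(tool)
        if scope is None:
            in_tool_scope = False
        elif action == "read":
            in_tool_scope = collection in scope.read
        elif action == "write":
            in_tool_scope = collection in scope.write
        else:
            in_tool_scope = False
        # Delegation: the agent can never exceed what the caller may touch.
        in_caller_scope = collection in self.caller_grants.get(caller, frozenset())
        allowed = in_tool_scope and in_caller_scope
        # Log denials as well as grants so reviewers see both.
        self.audit_log.append((caller, tool, action, collection, allowed))
        return allowed


policy = AgentPolicy(
    tool_scopes={
        "lookup_order": ToolScope(read=frozenset({"Orders"})),
        "issue_credit": ToolScope(read=frozenset({"Orders"}),
                                  write=frozenset({"Credits"})),
    },
    caller_grants={"alice": frozenset({"Orders"})},
)
```

Here `alice` can read `Orders` through `lookup_order`, but the agent cannot write `Credits` on her behalf, and a tool that was never declared is refused outright.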

The result is not a promise of safety. It is a predictable enforcement point that already exists in your security practice. That is the difference between another code path to review and a guardrail your governance team already understands. For a deeper dive on defenses for production agents, see our view on runtime security for agentic apps.

2) Latency and cost: fewer hops, cached memory

External RAG often involves five or more network legs per request: application to vector store, vector store to object storage, application to model gateway, model to tools, and so on. Each leg adds milliseconds and jitter. Each leg can fail independently.
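
A rough calculation shows why the number of legs matters. The sketch below assumes legs fail and slow down independently, and the per-leg figures (99.9 percent success, a 1 percent chance of a slow response) are illustrative assumptions, not measurements from any system.

```python
def chance_all_legs_succeed(per_leg_success: float, legs: int) -> float:
    """Probability that every leg of a request succeeds, assuming independence."""
    return per_leg_success ** legs


def chance_any_leg_is_slow(per_leg_slow: float, legs: int) -> float:
    """Probability that at least one leg is slow, assuming independence."""
    return 1.0 - (1.0 - per_leg_slow) ** legs


five_leg_success = chance_all_legs_succeed(0.999, 5)  # about 0.995
two_leg_success = chance_all_legs_succeed(0.999, 2)   # about 0.998
five_leg_slow = chance_any_leg_is_slow(0.01, 5)       # about 0.049
two_leg_slow = chance_any_leg_is_slow(0.01, 2)        # about 0.020
```

Under these assumptions, going from five legs to two cuts the share of requests that hit at least one slow leg from roughly 4.9 percent to roughly 2 percent.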

In-database execution cuts those legs. The agent queries the live data where it sits and executes gated tools without extra round trips. Two practical benefits follow:

- Lower tail latency. Users judge systems by their slowest interactions. Cutting cross-service calls reduces the chance that a remote dependency drags down the entire exchange.

- Lower model spend. RavenDB’s design, as described in its launch materials, focuses on summarizing agent memory and caching reasoning artifacts so the agent does not re-pay for identical context on every turn. With the agent inside the data tier, those caches sit next to the source of truth and inherit the database’s locality and eviction policies.
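
One way to picture the caching benefit is a summary cache keyed by a hash of the context. This is a sketch under stated assumptions: `SummaryCache` and `fake_summarize` are hypothetical names, the in-memory dictionary stands in for storage next to the data, and nothing here reflects how RavenDB implements its own caching.

```python
import hashlib
import json


class SummaryCache:
    """Hypothetical cache of context summaries keyed by a hash of the inputs."""

    def __init__(self, summarize):
        self._summarize = summarize  # stand-in for a paid model call
        self._store = {}
        self.model_calls = 0

    @staticmethod
    def _key(agent_id, documents):
        # sort_keys makes the hash stable regardless of dict key order.
        payload = json.dumps({"agent": agent_id, "docs": documents}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get_summary(self, agent_id, documents):
        key = self._key(agent_id, documents)
        if key not in self._store:
            self.model_calls += 1
            self._store[key] = self._summarize(documents)
        return self._store[key]


def fake_summarize(documents):
    """Placeholder for a model call; returns a deterministic string."""
    return f"{len(documents)} documents summarized"


cache = SummaryCache(fake_summarize)
docs = [{"id": "orders/1", "status": "shipped"}]
first = cache.get_summary("returns-agent", docs)
second = cache.get_summary("returns-agent", docs)  # served from cache
```

The second call returns the stored summary without invoking the model again, which is the effect the design aims for on repeated turns over identical context.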

3) Governance: policy co-located with data and lineage

When policy engines live at the data layer, you can version, test, and roll back rules as easily as you manage schemas and indexes. Putting agents there lets you:

- Keep lineage intact. When an agent reads a document and writes a decision or enrichment back, lineage is captured without exporting audit logs from external tools.

- Apply data residency and retention automatically. The agent never moves raw data across regions unless the database allows it, which matters for cross-border workloads.

- Reuse existing change management. Schema change reviews now include agent tool changes. The same gate you use to approve a new index can approve an expanded agent scope.
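
Versioning and rollback of agent scopes can be as simple as a history of scope definitions with an approval check. The sketch below is hypothetical: `PolicyHistory`, the `approved_by` parameter, and the scope layout are illustrative names, not part of any product.

```python
class PolicyHistory:
    """Hypothetical version history of agent scope definitions."""

    def __init__(self, initial):
        self._versions = [dict(initial)]

    @property
    def current(self):
        return dict(self._versions[-1])

    @property
    def version(self):
        return len(self._versions)

    def propose(self, changes, approved_by):
        # Same gate as a schema change: no approver, no change.
        if not approved_by:
            raise PermissionError("scope changes need an approver")
        self._versions.append({**self._versions[-1], **changes})
        return self.version

    def rollback(self):
        if len(self._versions) == 1:
            raise ValueError("nothing to roll back")
        self._versions.pop()
        return self.version


history = PolicyHistory({"lookup_order": ["Orders"]})
history.propose({"issue_credit": ["Credits"]}, approved_by="dba-team")
expanded = history.current
history.rollback()
restored = history.current
```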

What exactly changes for developers

If you have built with external RAG, the development model feels familiar but moves inward. Instead of wiring an orchestrator to a vector database and a model gateway, you:

- Define an agent object in the database. You set its purpose, instructions, and the tools it can call. Tools are query capabilities, existing procedures, or approved actions that mutate data.

- Bind identity and scope. You choose whether the agent runs as the caller or a service identity, then restrict the scope by collection, index, or function.

- Deploy and monitor with database primitives. You use the database’s studio, logs, and metrics, rather than a separate agent platform, to track usage, cost, and quality.
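
The steps above can be sketched as a declarative agent definition plus a validation pass. All names here (`AgentDef`, `ToolDef`, `validate`, the tool kinds, and the identity values) are assumptions made for illustration and do not mirror RavenDB's actual object model.

```python
from dataclasses import dataclass

ALLOWED_KINDS = {"query", "procedure", "action"}
ALLOWED_IDENTITIES = {"caller", "service"}


@dataclass(frozen=True)
class ToolDef:
    name: str
    kind: str           # "query", "procedure", or "action"
    collections: tuple  # collections the tool is scoped to


@dataclass(frozen=True)
class AgentDef:
    name: str
    purpose: str
    instructions: str
    run_as: str         # "caller" or "service"
    tools: tuple


def validate(agent):
    """Return a list of problems; an empty list means the definition is usable."""
    problems = []
    if agent.run_as not in ALLOWED_IDENTITIES:
        problems.append(f"run_as must be one of {sorted(ALLOWED_IDENTITIES)}")
    if not agent.tools:
        problems.append("agent declares no tools")
    for tool in agent.tools:
        if tool.kind not in ALLOWED_KINDS:
            problems.append(f"{tool.name}: unknown kind {tool.kind!r}")
        if not tool.collections:
            problems.append(f"{tool.name}: no collection scope")
    return problems


returns_agent = AgentDef(
    name="returns-assistant",
    purpose="Answer return questions and issue approved credits",
    instructions="Use only the listed tools. Ask for an order ID first.",
    run_as="caller",
    tools=(
        ToolDef("lookup_order", "query", ("Orders",)),
        ToolDef("issue_credit", "procedure", ("Credits",)),
    ),
)
```

Keeping the definition declarative means a reviewer can diff it like a schema change, and a validation step can reject an unscoped tool before deployment.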

The first time you do this, it feels like moving from a home-built message queue to a managed one. The components are the same. The failure modes and the maintenance surface shrink. And because tools live where governance lives, your rollout conversations with security and compliance become shorter and clearer.

Build patterns you can ship in Q4 2025

Below are concrete reference patterns and where they shine. Each includes pitfalls to watch for before you ship.

Cloud pattern: multi-tenant agents on managed clusters

- Problem: You serve many customers from a single cloud deployment and need an agent to act on per-tenant data without risking cross-tenant leaks.

- Pattern: Run the agent inside the database cluster that already enforces tenant data partitioning. Define one agent template and instantiate per tenant with scoped tools that can only read and write that tenant’s collections. Bind identity to the tenant’s user role and log per-tenant usage for chargeback.

- Why this works: You reuse your existing role hierarchy and schema-based sharding. Every tenant gets a separate agent identity without operating another microservice tier.

- Pitfalls:

  - Do not share vector embeddings across tenants unless they are derived from sanitized, non-sensitive features.

  - Budget for concurrency spikes. Cloud tenants can burst together. Use admission control that prioritizes latency-sensitive tools over batch-like ones.
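
A minimal sketch of the cloud pattern follows, covering both per-tenant instantiation and priority-based admission. It assumes a hypothetical `tenant/Collection` naming scheme, and `instantiate`, `can_access`, and `AdmissionQueue` are illustrative names rather than features of any database.

```python
import heapq
import itertools

TEMPLATE = {"lookup_order": ("Orders",), "issue_credit": ("Credits",)}


def instantiate(template, tenant_id):
    """Bind each tool in a shared template to one tenant's collections."""
    return {
        tool: frozenset(f"{tenant_id}/{name}" for name in collections)
        for tool, collections in template.items()
    }


def can_access(agent_scopes, tool, collection):
    """Default deny: unknown tools and foreign collections are refused."""
    return collection in agent_scopes.get(tool, frozenset())


class AdmissionQueue:
    """Serve latency-sensitive tool calls before batch-like ones."""

    def __init__(self):
        self._heap = []
        self._order = itertools.count()  # keeps FIFO order within a priority

    def submit(self, tool_call, latency_sensitive):
        priority = 0 if latency_sensitive else 1
        heapq.heappush(self._heap, (priority, next(self._order), tool_call))

    def next_call(self):
        return heapq.heappop(self._heap)[2]


acme = instantiate(TEMPLATE, "acme")
queue = AdmissionQueue()
queue.submit("nightly_reindex", latency_sensitive=False)
queue.submit("lookup_order", latency_sensitive=True)
first_served = queue.next_call()
```

The agent instantiated for `acme` can reach `acme/Orders` but not `globex/Orders`, and the interactive lookup is served ahead of the batch job even though it arrived later.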

On-prem pattern: sealed-perimeter agent for regulated data

- Problem: You cannot move regulated data to the public cloud, but teams still want agent capabilities for workflow automation and assisted analysis.

- Pattern: Deploy the database and agent runtime inside the controlled network. Allow the agent to call only local tools that wrap approved procedures. If you must use external models, terminate through an on-prem model gateway with private routing. Cache summaries and decisions in the database to minimize model calls.

- Why this works: The data never leaves the perimeter. Security review focuses on new tools and scopes, not new services. Latency is predictable on the internal network.

- Pitfalls:

  - Keep a model fallback plan. If external models become unavailable, your agent should degrade gracefully and surface a clear error to users.

  - Audit tool outputs. In regulated settings, the action log must show not just what the agent saw but why it chose a tool and what the tool returned.
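
Graceful degradation might look like the following sketch. `ModelUnavailable`, `answer`, and the cache layout are hypothetical; the point is only that the agent falls back to cached summaries, tells the user clearly when it cannot help, and records which path it took.

```python
class ModelUnavailable(Exception):
    """Raised by the (hypothetical) model gateway client when it cannot respond."""


def answer(question, call_model, cached_summaries, audit_log):
    """Try the model, fall back to cached summaries, else fail clearly."""
    try:
        reply = call_model(question)
        source = "model"
    except ModelUnavailable:
        reply = cached_summaries.get(question)
        if reply is None:
            source = "unavailable"
            reply = "The assistant is temporarily unavailable. No action was taken."
        else:
            source = "cache"
    # Record which path produced the reply so auditors can reconstruct it.
    audit_log.append({"question": question, "source": source, "reply": reply})
    return reply


def offline_model(question):
    raise ModelUnavailable("gateway down")


log = []
cached = answer("status of order 1", offline_model,
                {"status of order 1": "Shipped on Monday"}, log)
missing = answer("refund policy", offline_model, {}, log)
```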

Edge pattern: offline-first with periodic sync

- Problem: You need agents to operate where connectivity is intermittent, for example in a factory cell, a ship, or a retail store.

- Pattern: Run the database and agent locally on edge hardware. Allow read tools and a limited set of write actions to function offline. Sync summaries, events, and state back to the central cluster when a link is available. Use small, locally hosted models for low-risk tasks and queue high-risk actions for central review.

- Why this works: The agent remains useful without a network and still benefits from the same tool and policy model as the cloud or data center. Sync brings edge decisions into the enterprise audit trail.

- Pitfalls:

  - Be explicit about conflict resolution when the edge and core disagree. Decide which fields are authoritative and record merge results.

  - Watch disk pressure. Edge nodes can fill up with cached context. Set hard caps and test eviction under stress.
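
Field-level conflict resolution can be made explicit with a small merge function. This is a sketch: the `AUTHORITATIVE` map, the field names, and the rule that unlisted fields default to the core are assumptions chosen for the example.

```python
# Hypothetical ownership map: which side wins for each field.
AUTHORITATIVE = {"status": "core", "sensor_reading": "edge"}


def merge(edge_doc, core_doc, authoritative, default="core"):
    """Merge two versions of a document and record how conflicts were settled."""
    merged, decisions = {}, []
    for name in sorted(set(edge_doc) | set(core_doc)):
        owner = authoritative.get(name, default)
        if owner == "edge":
            primary, secondary = edge_doc, core_doc
        else:
            primary, secondary = core_doc, edge_doc
        merged[name] = primary[name] if name in primary else secondary[name]
        # Only a real disagreement counts as a conflict worth logging.
        if name in edge_doc and name in core_doc and edge_doc[name] != core_doc[name]:
            decisions.append((name, owner))
    return merged, decisions


edge = {"status": "open", "sensor_reading": 71}
core = {"status": "closed", "sensor_reading": 65, "owner": "ops"}
merged, decisions = merge(edge, core, AUTHORITATIVE)
```

The `decisions` list is what you would persist alongside the merged document so the audit trail shows which side won each disputed field.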

From RAG add-on to in-database agent: a migration path

You do not have to rewrite everything at once. Move the highest-value, lowest-risk pieces first.

- Inventory the tools your external agent calls. Classify them as read, write, or side effects. Start by moving read tools into the database as parameterized queries.

- Wrap existing business logic as database tools. If you have stored procedures or domain services, expose them through an adapter that enforces input schemas and rate limits.

- Put policy in the database. Express the same access rules you enforce in your application as roles and scopes the agent understands. Test with shadow traffic.

- Introduce memory and cache near data. Persist conversation state and retrieval results as first-class entities so they can be reused across sessions and safely evicted.

- Phase out redundant infrastructure. As you remove external hops, decommission duplicate caches, gateways, and vector indexes to cut cost and operational risk.
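
Wrapping existing business logic behind an input schema might be sketched like this. `make_tool` and `issue_credit` are hypothetical, and the schema is a plain mapping of parameter names to Python types rather than any particular schema language.

```python
def make_tool(func, schema):
    """Wrap existing logic so inputs are checked before it runs."""

    def tool(**kwargs):
        unknown = set(kwargs) - set(schema)
        missing = set(schema) - set(kwargs)
        if unknown or missing:
            raise ValueError(
                f"unexpected={sorted(unknown)} missing={sorted(missing)}"
            )
        for name, expected in schema.items():
            if not isinstance(kwargs[name], expected):
                raise TypeError(f"{name} must be {expected.__name__}")
        return func(**kwargs)

    return tool


def issue_credit(order_id, amount_cents):
    """Stand-in for an existing stored procedure or domain service."""
    return {"order_id": order_id, "credited": amount_cents}


credit_tool = make_tool(issue_credit, {"order_id": str, "amount_cents": int})
result = credit_tool(order_id="orders/1", amount_cents=500)
```

A call with a string amount or an extra argument is rejected before the underlying logic runs, which keeps malformed model output away from write paths.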

Pro tip: treat your agent like a new kind of user. Give it the same review gates, the same change windows, and the same observability that you give to humans who can read and write important data. For examples of agents that operate close to the data plane, compare this strategy with our coverage of data-plane agents that work.

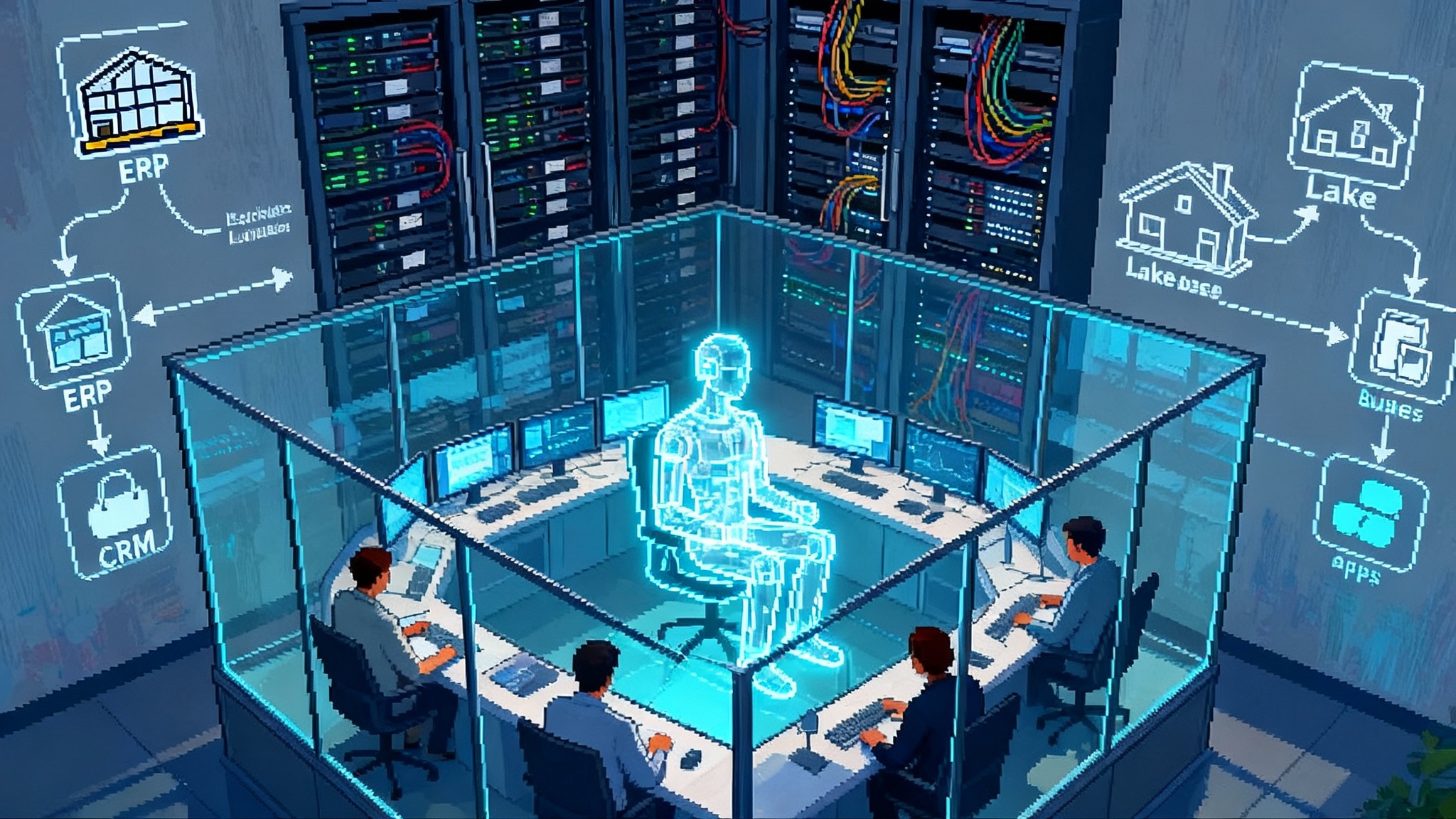

The emerging landscape: databases absorb agent runtimes

RavenDB’s move is part of a clear pattern. Snowflake documents Cortex Agents for planning, tool use, and policy inside its data platform, a sign that warehouse-centric stacks are also pulling agents into the data layer. To see how a major warehouse frames this, read the Snowflake Cortex Agents orchestration section of its documentation. Databricks continues to expand agent frameworks and evaluation tooling around the lakehouse. Whether your center is a document store, a warehouse, or a lakehouse, the gravitational pull is the same. Agents are moving toward the data and the governance layer.

Three implications follow for builders:

- Vendor lock-in looks different. The risk is not only which model you choose. It is which policy and tool model you adopt at the data tier. Favor platforms that keep tools declarative and transportable.

- Observability will converge. You will not maintain one system for data lineage and another for agent traces. Expect database studios to add token cost, tool selection steps, and quality counters alongside query plans and index health.

- The line between transaction and analysis will blur further. As agents make decisions inside the database, you must draw clear boundaries between what an agent may read, what it may write, and what must remain human-approved.

What to build now: concrete use cases

- Returns and credits assistant for ecommerce. The agent verifies order status, checks shipping timelines, and issues approved credits through a stored procedure. All actions are scope-enforced and logged with the customer’s identity.

- Field service triage for equipment makers. An edge agent summarizes error logs locally, cross-references known incidents in the database, and queues parts orders. When online, it syncs summaries and proposed actions for supervisor review.

- Patient intake enrichment for healthcare. Inside the on-prem database, the agent normalizes free-text symptoms into coded fields and flags risk criteria. Any writes must pass existing validation rules before commit.

These examples share a theme. The agent is not another server to call. It is a new kind of user acting within the same guardrails as your existing users. For a parallel shift in how builders think about agent capability and quality, see how coding assistants evolved into self-testing AI coworkers.

Pitfalls and how to avoid them

- Letting prompts smuggle policy. If you allow free-form instructions that expand an agent’s scope, you are re-creating entitlement drift. Keep policy in roles and tools, not prompts.

- Over-centralizing tool catalogs. A single global catalog makes discovery easy but multiplies blast radius. Partition tools by team or domain, then explicitly opt in cross-domain use.

- Treating caches as invisible. Cached memory and reasoning artifacts must be governed like any other data. Set retention, encryption, and redaction rules.

- Ignoring backpressure. Agents can generate more tool calls than humans. Rate limit tools, prioritize idempotent reads over writes, and surface backpressure to the caller.

- Skipping replay testing. Save failed interactions as reproducible traces with inputs, selected tools, and outputs, then use them to regression test agent changes.
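
For the backpressure pitfall, a token bucket is one common way to rate limit tool calls and tell the caller when to retry. The sketch below is illustrative: `TokenBucket` and its parameters are hypothetical, and time is passed in explicitly so the behavior is deterministic.

```python
class TokenBucket:
    """Rate limiter that reports a retry delay instead of silently dropping calls."""

    def __init__(self, capacity, refill_per_second, now=0.0):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.tokens = float(capacity)
        self.last = now

    def try_acquire(self, now):
        """Return (allowed, retry_after_seconds)."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity,
                          self.tokens + elapsed * self.refill_per_second)
        self.last = max(self.last, now)
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True, 0.0
        # Surface backpressure: tell the caller how long to wait.
        return False, (1.0 - self.tokens) / self.refill_per_second


bucket = TokenBucket(capacity=2, refill_per_second=1.0)
results = [bucket.try_acquire(now=0.0) for _ in range(3)]
after_wait = bucket.try_acquire(now=1.0)
```

With a capacity of two and one token per second, the third immediate call is refused with a one-second retry hint, and a call one second later succeeds.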

A short design checklist

- Identity and scope

  - Does the agent impersonate the end user or a service identity?

  - Are tool scopes least privilege by default and time-boxed when possible?

- Data and cache

  - Where is agent memory stored, and how is it redacted or expired under your retention rules?

  - Are caches co-located with the data and covered by the same encryption?

- Safety and audit

  - Are tool inputs and outputs validated against schemas?

  - Can you replay a decision from logs without external systems?

- Operations

  - How do you cap concurrent tool calls per tenant?

  - What is the fallback path when the model gateway is unavailable?

Why to prioritize in-database runtimes now

If you are moving from pilot to production in Q4 2025, the trade-offs favor running agents in your operational data layer. You get fewer moving parts to break, a security model your auditors already trust, and cost dynamics you can predict. Importantly, you do not have to give up flexibility. The agent can still call out to external models or services when needed. The difference is that the database, not the application, decides who can do what, where, and when.

The upshot is concrete. Treat the database as the runtime, not just the store. Start by moving the smallest useful tool into the database and scope it tightly. Prove the latency and governance gains on one path, then expand. The teams that make this shift will spend less time gluing systems together and more time shaping agents that actually solve business problems.

The bottom line

The industry tried stapling agents onto applications, and the seams showed. RavenDB’s AI Agent Creator and similar moves across data platforms show a better way. Keep the agent where the data, policy, and audit live. Make each tool explicit. Cache what you can near the source of truth. If you want agent systems that are fast, governed, and ready for production, bring them inside the database and ship from there.