AI‑DR Arrives: Runtime Security for Agentic Apps Goes Mainstream

September marked a clear shift. Runtime security for language models and agentic apps is now expected, not optional. This guide defines the AI‑DR stack, shows how to ship it in weeks, and offers a practical build versus buy playbook.

The moment runtime security became the default

Ask an engineering leader what changed in September and you will hear a common refrain. The center of gravity moved from proof‑of‑concept guardrails to runtime security for large language models and do‑work agents. Buyers started asking a new first question: how do we control and observe every prompt, tool call, and model output while the system is working, not just before or after the fact?

That question is the origin of AI‑DR, short for AI Detection and Response. If endpoint detection and response modernized workstation security, AI‑DR is its analogue for models, tools, and agents. It is the runtime layer that keeps your systems safe while they read, write, browse, book, update, and deploy.

This article is a field guide. We will define the AI‑DR stack, show how to ship a credible version in three sprints, walk through a build versus buy decision, and close with a checklist you can take to your next planning meeting.

What AI‑DR is and why it exists

AI‑DR is the control plane and data plane that sit between users, agents, models, tools, and the outside world. It watches traffic, decides what is allowed, logs what matters, and reacts quickly when something goes wrong. Think of AI‑DR as your agent runtime copilot that is fast, consistent, and auditable.

A simple metaphor helps. Imagine a factory floor. Your agents are robotic arms that fetch parts, read instructions, and assemble products. Guardrails are the fences and emergency stop lines. Policy is who can start which machine and for how long. Telemetry is the camera and sensor network that records what each arm did. Response is the supervisor who pauses a line, swaps a tool, or rolls back a change when something looks unsafe. AI‑DR is that combination for software agents.

Why now? Three forces converged:

- Real adoption of do‑work agents that browse, edit code, update tickets, run procurement, or answer customers with private data.

- A maturing catalog of failure modes, from indirect prompt injection and data exfiltration to wallet abuse and excessive tool agency.

- Platform moves that made runtime enforcement part of the default stack. Once buyers can source AI runtime controls next to endpoint, identity, and cloud security, expectations change quickly.

If you are building agents that actually act, AI‑DR is no longer a nice‑to‑have. It is the difference between a demo and a dependable system.

The AI‑DR stack at a glance

Think of AI‑DR as four layers you can implement and measure: guardrails, policy, telemetry, and response.

1) Guardrails

Guardrails are runtime checks on inputs, outputs, and tool invocations. Input guardrails inspect prompts, instructions, and retrieved context. Output guardrails review the model’s response, including the tool results and any data the agent plans to write. Tool guardrails validate that the requested action is allowed for the current user, on the current resource, within a safe range.

Concrete patterns:

- Inbound: Reject prompts that carry jailbreak templates or attempt to override system instructions.

- Retrieval: Strip secrets and tokens from retrieved documents before they meet the model.

- Tooling: Enforce an allow list per role and validate arguments, such as blocking file deletion outside a scoped directory.

- Outbound: Block outputs that contain regulated data or that would post sensitive text to a public destination.

Guardrails should be model‑agnostic and tool‑aware. Do not hardcode for a single model if you can avoid it. Go deep on the tools that matter in your domain, such as code execution, databases, email, chat, ticketing, and payments.
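
As an illustration of the tooling pattern above, here is a minimal sketch of a tool guardrail that combines a per‑role allow list with a scoped‑directory check. The role names, tool names, and the `check_tool_call` helper are hypothetical and chosen only for this example. A real adapter would validate far more than a path.

```python
from pathlib import Path

# Hypothetical per-role allow list; role and tool names are illustrative only.
ALLOWED_TOOLS = {
    "support_agent": {"read_file", "delete_file"},
    "viewer": {"read_file"},
}


def check_tool_call(role: str, tool: str, target: str, scope_dir: str) -> tuple[bool, str]:
    """Return (allowed, reason) for a file tool call."""
    if tool not in ALLOWED_TOOLS.get(role, set()):
        return False, f"tool '{tool}' is not on the allow list for role '{role}'"
    scope = Path(scope_dir).resolve()
    # resolve() collapses '..' segments, and joining with an absolute target
    # replaces the scope entirely, so both escape attempts fail the check below.
    resolved = (scope / target).resolve()
    if not resolved.is_relative_to(scope):
        return False, "target path is outside the scoped directory"
    return True, "ok"


print(check_tool_call("support_agent", "delete_file", "../../etc/passwd", "/srv/workspace"))
```

The check runs before the tool executes and returns a reason string, so the same result can feed both enforcement and telemetry.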

2) Policy

Policy answers who can do what, where, and when. In an AI context, policy adds dimensions that classic web access control never had to consider:

- Actor dimensions: end user identity, agent identity, and the impersonation chain between them.

- Capability dimensions: which tools the agent can call, with which arguments, on which resources.

- Data dimensions: which data classes can be read or written, with masking or redaction rules.

- Context dimensions: time of day, environment, channel, and risk scores from detectors.

Express policy as code and version it. Store policies alongside infrastructure code. Test policy changes against replayed traffic before rollout, exactly as you would test firewall rules.
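
One way to picture policy as code is a small, testable evaluation function over the four dimensions listed above. The sketch below is an assumption‑laden illustration, not a reference design: the `Request` fields, the `POLICY` document, and the reason strings are all invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    user: str
    agent: str
    tool: str
    tenant: str        # tenant that owns the target resource
    user_tenant: str   # tenant the end user belongs to
    data_class: str    # e.g. "public", "internal", "regulated"
    risk_score: float  # 0.0 to 1.0, supplied by upstream detectors


# Hypothetical policy document; in practice it would live in version control.
POLICY = {
    "support_agent": {
        "tools": {"read_ticket", "update_ticket"},
        "data_classes": {"public", "internal"},
        "max_risk": 0.7,
    },
}


def evaluate(req: Request, policy: dict = POLICY) -> str:
    """Return 'allow' or 'deny:<reason>' for a single request."""
    rules = policy.get(req.agent)
    if rules is None:
        return "deny:unknown_agent"
    if req.tool not in rules["tools"]:
        return "deny:tool_not_allowed"
    if req.tenant != req.user_tenant:
        return "deny:cross_tenant"
    if req.data_class not in rules["data_classes"]:
        return "deny:data_class"
    if req.risk_score > rules["max_risk"]:
        return "deny:risk_too_high"
    return "allow"
```

Because the policy is plain data and the evaluator is a pure function, both can be code reviewed and exercised against replayed traffic before rollout.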

3) Telemetry

Telemetry is the lifeblood of AI‑DR. Without complete, structured logs, you cannot debug incidents, train better detectors, or meet audit requirements.

High‑quality telemetry captures:

- Prompts, responses, and tool calls, tagged with actor, agent, resource, and trace identifiers.

- Risk signals from detectors, such as jailbreak likelihood, sensitive data exposure, and tool misuse.

- Token usage and cost, to tie risk to spend.

- Environmental context, including model version, knowledge base version, and gateway path.

A common pattern is to adopt OpenTelemetry traces and spans, then attach AI‑specific attributes like prompt hash, content class, detector verdicts, and enforcement outcomes.
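
To make the attribute idea concrete, the sketch below builds one structured event using only the standard library. It does not call the OpenTelemetry SDK. The `ai.*` attribute names are hypothetical, not an established semantic convention, and the same dictionary could be attached to a span in whatever tracing library you use.

```python
import hashlib
import json
import uuid
from datetime import datetime, timezone
from typing import Optional


def build_event(prompt: str, actor: str, agent: str, tool: str,
                verdicts: dict, outcome: str,
                trace_id: Optional[str] = None) -> dict:
    """Build a structured telemetry event that stores a prompt hash, not the prompt."""
    return {
        "trace_id": trace_id or uuid.uuid4().hex,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "actor": actor,
        "agent": agent,
        "tool": tool,
        # Hypothetical attribute names for AI-specific fields.
        "ai.prompt_hash": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "ai.prompt_length": len(prompt),
        "ai.detector_verdicts": verdicts,
        "ai.enforcement_outcome": outcome,
    }


event = build_event("update ticket 42", "user-1", "support_agent",
                    "update_ticket", {"jailbreak": 0.05}, "allow")
print(json.dumps(event, indent=2))
```

Hashing the prompt lets you correlate repeated inputs across traces without copying sensitive text into the log pipeline. Whether to also store the raw prompt is a privacy and retention decision.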

4) Response

Response is everything you can do when risk crosses a threshold. Build a spectrum from gentle to firm so you do not have to choose between staying silent and blocking outright.

- Soft responses: redact or mask, ask the user to confirm, drop citations, remove unsafe tool arguments, or switch to read‑only mode.

- Firm responses: deny, quarantine an agent, rotate credentials, revoke a token, lock a channel, or isolate a workspace.

- Human‑in‑the‑loop: page an owner, require managerial approval for a high‑risk tool, or switch to a safe fallback path.

Response must be low latency. If an agent is waiting, you have milliseconds to decide. Precompute as much as possible and keep policies close to the enforcement point.
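
A graded response can be as simple as a precomputed lookup from risk score to action. The sketch below assumes a single risk score between 0 and 1. The thresholds and the action names are placeholders to tune per use case, not recommended values.

```python
from bisect import bisect_right
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    REDACT = "redact"
    CONFIRM = "ask_user_to_confirm"
    READ_ONLY = "switch_to_read_only"
    DENY = "deny"
    QUARANTINE = "quarantine_agent"


# Hypothetical thresholds; BOUNDS[i] is the lowest score that maps to LADDER[i + 1].
BOUNDS = [0.30, 0.50, 0.70, 0.85, 0.95]
LADDER = [Action.ALLOW, Action.REDACT, Action.CONFIRM,
          Action.READ_ONLY, Action.DENY, Action.QUARANTINE]


def choose_response(risk: float) -> Action:
    """Map a risk score to an action using a binary search over fixed bounds."""
    return LADDER[bisect_right(BOUNDS, risk)]


print(choose_response(0.6))
```

Keeping the table in memory next to the enforcement point means the decision is a lookup with no network call on the hot path.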

How to ship AI‑DR in three sprints

You do not need a yearlong program. With three one‑week sprints, you can reach meaningful runtime safety if you focus.

Week 1: Map and instrument

- Inventory agents and tools. List every model, gateway, and tool with production access. Note which agents are user‑triggered and which are autonomous.

- Insert a gateway or middleware that can observe prompts, tool calls, and responses. If you already use an AI gateway, enable logging and policy hooks. If not, a lightweight proxy in the application layer works.

- Start with input and output guardrails. Add redaction for secrets and regulated data. Enable a basic jailbreak and injection detector with thresholds for audit and block.

- Emit structured telemetry. Adopt a standard schema and ship events to your log pipeline. Tag each event with a trace identifier and the impersonation chain from user to agent to tool.
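
For the redaction step in Week 1, a pattern‑based pass is a reasonable starting point. The sketch below is illustrative only: the three patterns are simplified assumptions that will miss many real secrets and may flag harmless text, so treat them as placeholders for a proper detector.

```python
import re

# Simplified, illustrative patterns; real deployments need broader coverage.
PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "aws_access_key": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "bearer_token": re.compile(r"(?i)\bbearer\s+[a-z0-9._\-]{20,}"),
}


def redact(text: str) -> tuple[str, list[str]]:
    """Replace matches with labeled placeholders and report which labels fired."""
    findings = []
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[REDACTED:{label}]", text)
        if count:
            findings.append(label)
    return text, findings


clean, found = redact("Invoice for SSN 123-45-6789")
print(clean, found)
```

Returning the list of labels alongside the cleaned text gives telemetry something to record without logging the sensitive value itself.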

Week 2: Establish policy and tool safety

- Define policy for the top three agent use cases. For each, write an allow list of tools, argument schemas, and per‑resource scopes. For example, a support agent can update the current customer’s ticket but cannot export all tickets.

- Add budget controls. Put daily and per‑session token and cost limits by user, agent, and tenant. Emit alerts at 70 and 90 percent of budget.

- Harden tool adapters. Add argument validation, safe defaults, rollbacks, and dry‑run modes. If the tool can write, ensure it can also undo or create a draft for approval.

- Attack your own system. Run a small red team exercise with prompt injections and tool misuse attempts. Capture the gaps. Update rules and test again.
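
The budget control described above, with alerts at 70 and 90 percent, can be sketched as a small counter. The `Budget` class and its event names are hypothetical. A production version would also need persistence, a reset schedule, and separate keys for user, agent, and tenant.

```python
from dataclasses import dataclass, field


@dataclass
class Budget:
    limit_tokens: int
    used_tokens: int = 0
    alerts_sent: set = field(default_factory=set)

    def charge(self, tokens: int) -> list[str]:
        """Record usage and return any newly triggered events."""
        if self.used_tokens + tokens > self.limit_tokens:
            return ["blocked"]  # usage is not recorded when the call is refused
        self.used_tokens += tokens
        events = []
        for pct in (70, 90):
            crossed = self.used_tokens * 100 >= self.limit_tokens * pct
            if crossed and pct not in self.alerts_sent:
                self.alerts_sent.add(pct)  # alert once per threshold
                events.append(f"alert_{pct}")
        return events


session = Budget(limit_tokens=1000)
print(session.charge(750))
```

Each threshold alerts only once, which keeps the owner from being paged on every call after the line is crossed.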

Week 3: Automate response and operationalize

- Wire alerts to owners. Page the product owner for agent incidents and the security owner for cross‑tenant incidents. Include a replay link with the full trace.

- Turn on automated responses. Start in shadow mode for a few days, then block the top three verified risky patterns. Build runbooks with customer messaging and rollback steps.

- Version policies and detectors. Put them under code review and require a replay test before merging.

- Review outcomes with product, legal, and go‑to‑market. Decide what to expose to end users for clarity and trust. For example, show a banner when an agent redacts or refuses due to policy.
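
A replay test, as required before merging policy changes, can start as a function that re‑runs recorded requests through the candidate policy and reports what would change. The record shape, the `replay` and `safe_to_merge` helpers, and the 1 percent tolerance are assumptions made for this sketch.

```python
from typing import Callable


def replay(recorded: list[dict], candidate: Callable[[dict], str]) -> dict:
    """Re-run recorded requests through a candidate policy and summarize changes."""
    newly_blocked, newly_allowed = [], []
    for record in recorded:
        old_decision = record["decision"]
        new_decision = candidate(record["request"])
        if old_decision == "allow" and new_decision != "allow":
            newly_blocked.append(record["request"])
        elif old_decision != "allow" and new_decision == "allow":
            newly_allowed.append(record["request"])
    return {
        "total": len(recorded),
        "newly_blocked": newly_blocked,
        "newly_allowed": newly_allowed,
    }


def safe_to_merge(report: dict, max_newly_blocked_ratio: float = 0.01) -> bool:
    """Gate a merge on how much previously allowed traffic would now be blocked."""
    if report["total"] == 0:
        return False  # no evidence, so do not auto-approve
    return len(report["newly_blocked"]) / report["total"] <= max_newly_blocked_ratio
```

The newly allowed list deserves a human look as well, since a loosened rule can be riskier than a tightened one.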

By the end of week three, agents should have basic immunity to common attacks, visibility that a security team can work with, and a response loop that learns from every incident.

Build versus buy: a pragmatic playbook

There is no single right answer, but there is a right question. Which parts of AI‑DR are strategic to your product and demand deep control, and which parts are utility where a vendor can move faster than you can?

Evaluate using nine criteria:

- Coverage. Does the solution protect the full runtime path across prompts, tools, and outputs, not just a model wrapper?

- Latency budget. Can it decide within your agent’s service level objective at peak load?

- Policy model. Can you express policies as code with tests, versioning, and staged rollout?

- Tool depth. Can it validate the tools that matter in your domain rather than just string match on prompts?

- Telemetry. Will you get complete, structured logs with clear schemas and privacy controls?

- Model and cloud independence. Can it support the models and providers you will use next quarter, not only today?

- SOC integration. Does it produce incidents your operations team can act on with reasonable signal to noise?

- Privacy posture. Can you keep sensitive prompts and outputs in your tenant, with redaction at the edge and appropriate retention?

- Total cost and ownership. Count people and time to run it, not just license price.

Buy if:

- You need coverage now across multiple apps and teams.

- You do not have dedicated security engineers to write and maintain detectors and policies.

- You must meet audit or customer expectations this quarter and want a supported path.

Build if:

- Your agents use domain‑specific tools that require deep validation, such as custom trading systems or industrial control.

- Your latency budget is tight enough that every millisecond matters and you own the path end to end.

- You treat AI‑DR as a core competency and plan to invest in a security research loop.

Best practice for most teams: start with a vendor for baseline guardrails, policy, and telemetry plumbing, then build custom validations for your unique tools and data. Keep a copy of all logs in your environment. Wrap vendor policies with your own tests and version control to preserve speed without losing control.

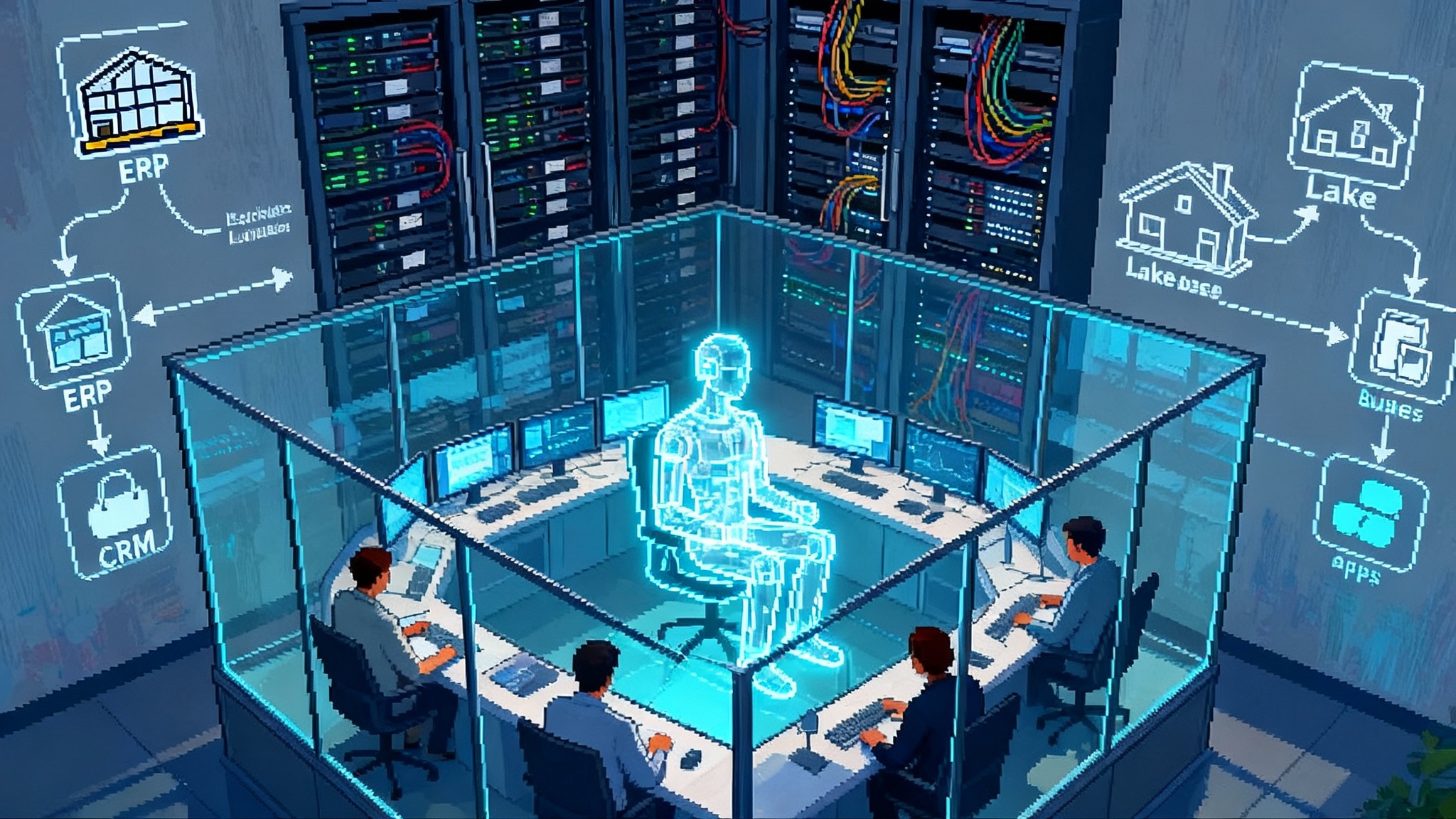

Reference architecture you can implement this quarter

- Ingress: All agent traffic flows through a gateway or middleware that supports inspection and policy enforcement.

- Detectors: A chain of detectors for secrets, jailbreaks, injections, and data classification. Each outputs a score and a reason.

- Policy engine: Reads actor, agent, tool, resource, and detector scores to choose allow, redact, transform, approve, or block.

- Tool adapters: Per‑tool modules with strong argument validation, safe defaults, and undo paths. Each adapter emits its own telemetry.

- Telemetry bus: Structured events sent to your logging platform with traces and spans. Include privacy filters at the edge.

- Response automation: Hooks to block, quarantine, rotate credentials, and page owners. This can be a workflow engine or simple functions with runbooks.
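
The detector chain and policy engine in this architecture can be sketched as plain functions that each return a score and a reason. The two detectors below are toy keyword and pattern heuristics invented for illustration, and real detectors would typically be classifiers. The thresholds in `decide` are likewise assumptions.

```python
import re
from typing import Callable, NamedTuple


class Verdict(NamedTuple):
    detector: str
    score: float
    reason: str


def secrets_detector(text: str) -> Verdict:
    if re.search(r"\b\d{3}-\d{2}-\d{4}\b", text):
        return Verdict("secrets", 0.8, "SSN-like pattern found")
    return Verdict("secrets", 0.0, "no match")


def injection_detector(text: str) -> Verdict:
    phrases = ("ignore previous instructions", "disregard the system prompt")
    lowered = text.lower()
    for phrase in phrases:
        if phrase in lowered:
            return Verdict("injection", 0.95, f"matched phrase: {phrase!r}")
    return Verdict("injection", 0.0, "no match")


def run_chain(text: str, detectors: list[Callable[[str], Verdict]]) -> list[Verdict]:
    """Run every detector so telemetry captures all scores, not just the first hit."""
    return [detect(text) for detect in detectors]


def decide(verdicts: list[Verdict]) -> str:
    """Tiny policy engine: the firmest applicable action wins."""
    scores = {v.detector: v.score for v in verdicts}
    if scores.get("injection", 0.0) >= 0.9:
        return "block"
    if scores.get("secrets", 0.0) >= 0.5:
        return "redact"
    return "allow"


verdicts = run_chain("Please ignore previous instructions", [secrets_detector, injection_detector])
print(decide(verdicts), verdicts)
```

Separating detection from decision lets you tune thresholds in policy without touching detector code.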

If you are exploring how agents coordinate at scale, read about agent control towers for a view of orchestration patterns that complement AI‑DR.

What great looks like at runtime

Three realistic scenarios set the bar.

- Customer support agent. A user shares an invoice with a social security number. The retrieval layer redacts it before the model sees it. The output guardrail double checks that no protected numbers leave the system. Policy allows the agent to update the customer’s own ticket but prevents cross‑tenant searches. Telemetry records the redaction and the policy decision. If repeated exposures occur, the response layer escalates to the data protection lead.

- Procurement assistant. The agent browses vendor sites and requests a quote. A malicious page hides a prompt injection in a comment. The input guardrail scores the injection attempt, policy downgrades tool permissions for the session, and the agent switches to read‑only mode. The user must confirm vendor identity through a separate channel.

- Code agent. The agent proposes a dependency upgrade that introduces a license risk. Tool guardrails block the pull request merge and open a ticket with the composition team. The agent offers an alternative compatible version. Telemetry links the event to the dependency database and the policy rule that triggered the block.

If your roadmap includes autonomous code changes, compare these controls to the practices described for self‑testing coworkers in Replit so you pair runtime enforcement with self‑verification.

Metrics that prove AI‑DR is working

Security that cannot be measured will get dialed down the first time a deadline hits. Establish a simple scoreboard.

- Mean time to respond for agent incidents.

- Rate of risky prompts or tool calls blocked per thousand requests.

- False positive rate by detector type and by use case.

- Percentage of agent sessions with full trace coverage.

- Percentage of write actions protected by argument validation and rollback.

- Token and cost exposure prevented by redaction and policy.

Target directional goals, then refine per team. The aim is not to eliminate risk. It is to make risk observable, manageable, and steadily cheaper to handle.
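
Several of these metrics fall out of the telemetry stream directly. The sketch below computes three of them from a list of event dictionaries. The field names (`outcome`, `review`, `trace_id`) are assumptions about your event schema, and it counts events rather than sessions for simplicity.

```python
def scoreboard(events: list[dict]) -> dict:
    """Compute a few scoreboard metrics from structured telemetry events."""
    total = len(events)
    blocks = [e for e in events if e["outcome"] == "block"]
    # Only blocks that a human has labeled count toward the false positive rate.
    reviewed = [e for e in blocks if e.get("review") in ("true_positive", "false_positive")]
    false_positives = [e for e in reviewed if e["review"] == "false_positive"]
    traced = [e for e in events if e.get("trace_id")]
    return {
        "blocked_per_thousand": 1000 * len(blocks) / total if total else 0.0,
        "false_positive_rate": len(false_positives) / len(reviewed) if reviewed else None,
        "trace_coverage": len(traced) / total if total else 0.0,
    }
```

Returning `None` for the false positive rate when nothing has been reviewed avoids reporting a misleading zero.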

Anti‑patterns to avoid

- Blind spots. Agents that bypass your gateway with direct model calls or unlogged tools.

- Static rules. Hardcoding for a single model or detector without versioning or tests.

- All block or all allow. Lacking soft responses that reduce harm without stopping work.

- Unbounded autonomy. Agents with write access across tenants or systems without scoped permissions.

- Logging without privacy. Capturing sensitive prompts and outputs without redaction or retention controls.

If your agents operate on operational data or across systems of record, study how data‑plane agents approach safe execution paths within strict boundaries.

The market signal in plain language

In recent months, runtime AI security moved from specialty tooling to a standard expectation inside broader platforms. The practical effect is simple. Security teams now expect AI telemetry in the consoles they already use. Procurement prefers a shorter vendor list. Product teams still need depth for their unique tools and data, but the baseline capabilities are becoming nonnegotiable. That is what table stakes looks like.

For builders, this shift is helpful. It means you can align AI‑DR with existing incident management, logging, and audit rhythms. It also means your launch checklists can include AI‑specific policies and detectors without inventing new processes.

A checklist for teams shipping do‑work agents

- Inventory agents, tools, data classes, and external destinations. Map the impersonation chain for each workflow.

- Put a gateway or middleware in the path that can read, redact, and decide. Avoid blind spots.

- Enforce allow lists for tools and schemas for arguments. Validate before you execute.

- Redact secrets and regulated data at ingress and egress. Prefer detection at the edge.

- Log prompts, responses, and tool calls with trace identifiers. Keep logs in your tenant.

- Start with audit mode, then graduate to block for the top three risky patterns.

- Budget tokens and spend with per‑user and per‑tenant limits, with alerts.

- Test with adversarial prompts and tool misuse playbooks each release.

- Version policies, detectors, and runbooks. Require replay tests before merge.

- Make response humane. Tell users what happened and why, and offer a safe alternative path.

The bottom line

Runtime security for models and agents is no longer an optional add‑on. It is the layer that lets you move from demos to dependable systems, from scripted assistants to agents that safely do real work.

If you are a startup, you can stand up a credible AI‑DR posture in weeks. If you are an enterprise, you can fold AI‑DR into the same operational rhythms as your other controls and give builders a safe highway to ship faster. The teams that combine guardrails, policy, telemetry, and response into a single, tested system will ship agents that customers trust, and they will keep that trust when something novel happens. It will.