OutSystems Agent Workbench GA makes enterprise AI agents real

OutSystems has taken Agent Workbench to general availability, helping formalize the agent workbench as a category: a low code control plane to design, govern, and deploy multi agent systems across live enterprise data and workflows with real controls.

The news, and why it matters

On October 1, 2025, OutSystems announced that Agent Workbench is generally available, positioning it as a production hub for building and orchestrating enterprise AI agents. The company paired the release with an agent marketplace, cross model flexibility, and compliance features aimed at moving agents from demos to durable software. If you want the primary source, see the company’s GA press release on October 1, 2025.

Why does this matter? Because it puts structure around a fast growing concept. Teams are past the stage of clever prototypes. They need a common control plane to design, govern, deploy, and observe agents across real systems of record. A platform that treats agents like first class software units is the difference between a slick demo and an auditable, supportable application.

What is an agent workbench

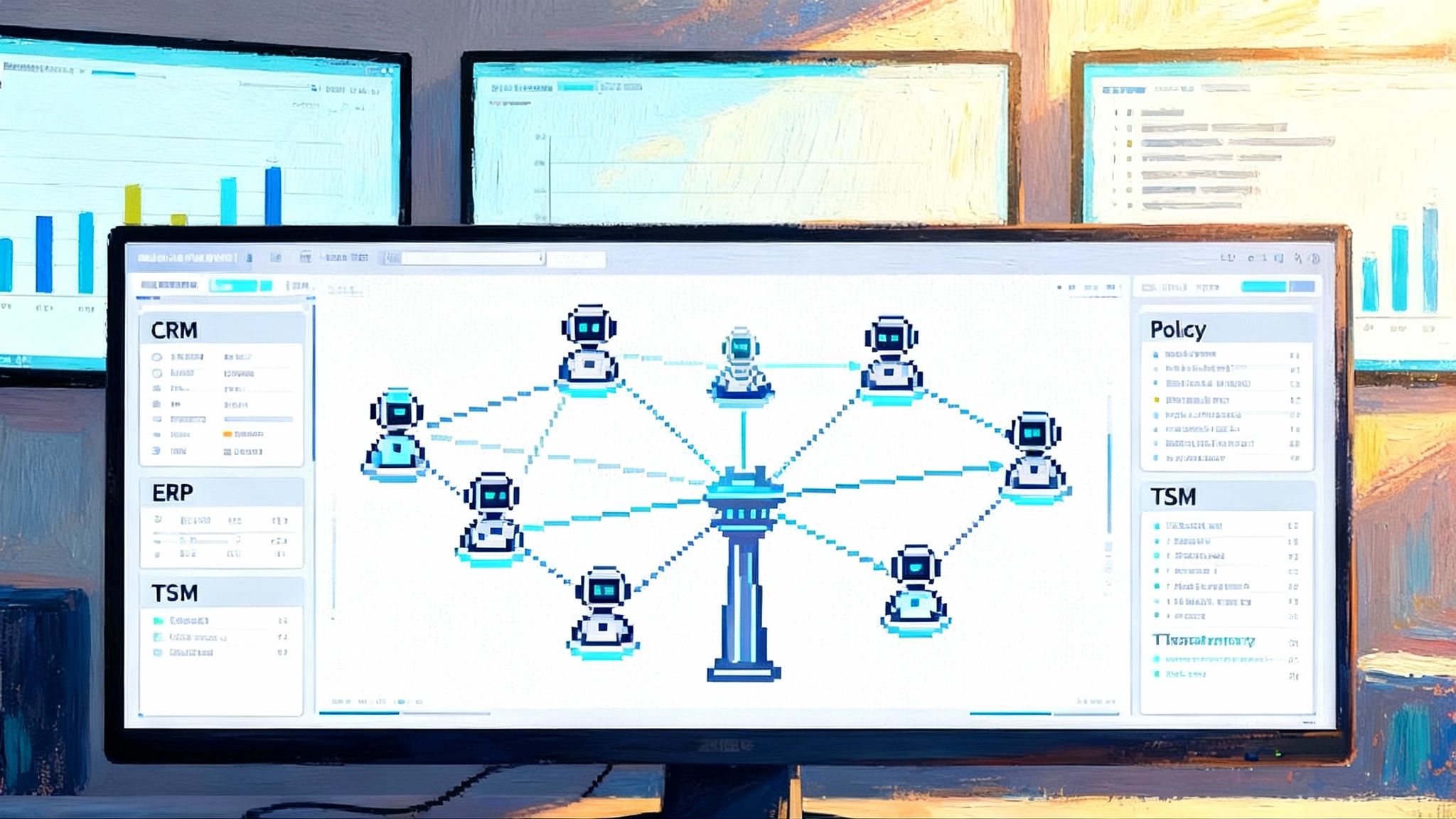

Think of an agent workbench as an air traffic control tower for software agents. The pilots are agents that triage tickets, fetch data from enterprise systems, and route work to humans. The tower coordinates takeoffs, landings, and handoffs so the flight plan is safe and repeatable. In practical terms, a workbench provides five pillars:

- Design: visual and low code canvases to define multi agent roles, tools, handoffs, and guardrails.

- Governance: policy, role based access control, audit logs, and versioning across the agent lifecycle.

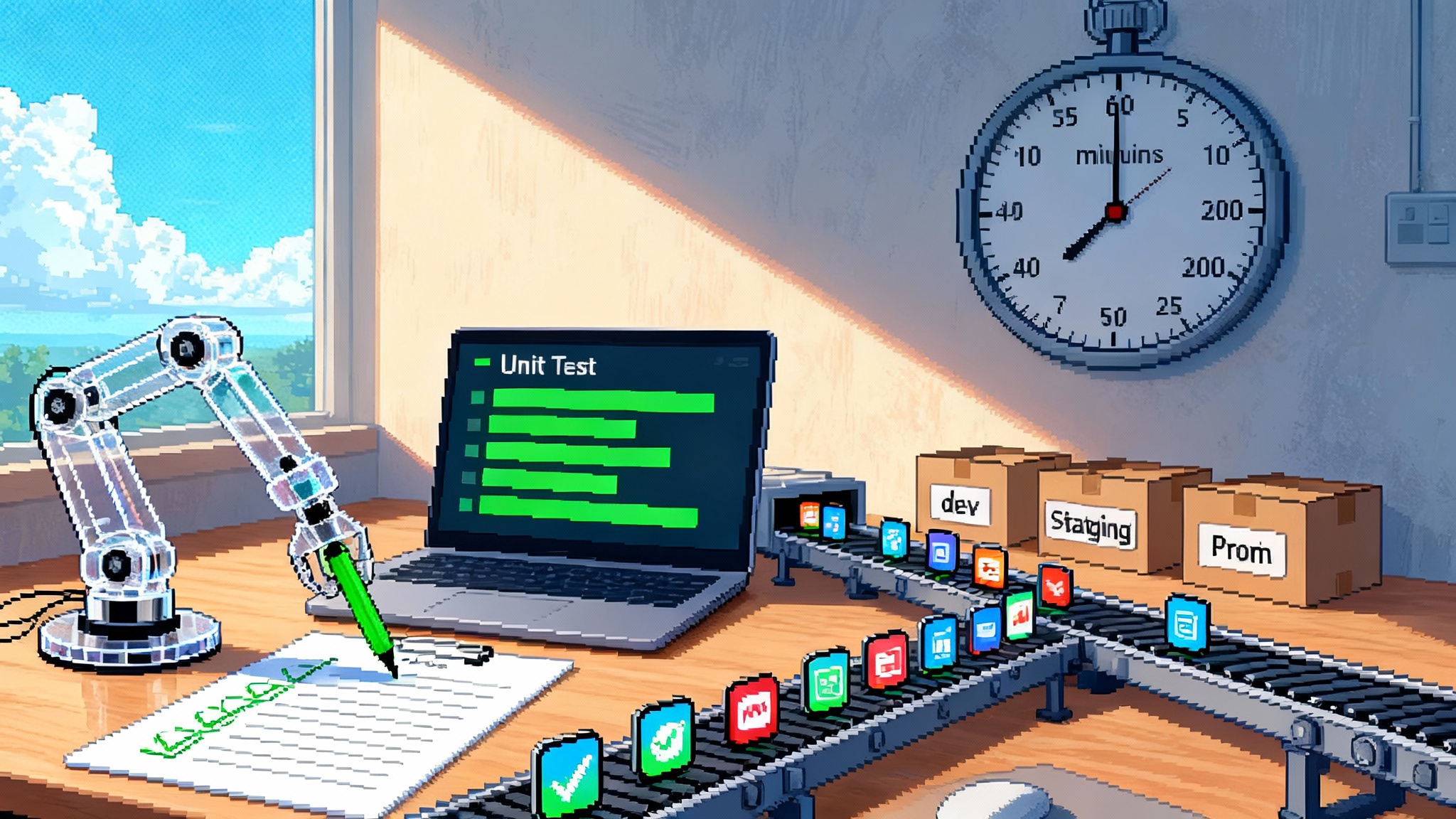

- Deployment: packaging, promotion, canary releases, and monitoring so agents move from sandbox to production.

- Integration: standard connectors and protocol support to reach data, applications, and workflows.

- Observability: traces, metrics, evaluation harnesses, and red team simulators to spot regressions and unsafe behavior.

Together, these elements let teams graduate from one off pilots to a portfolio of reliable agents tied to measurable outcomes.
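Of the five pillars, deployment is the easiest to show in code. A canary release sends a small, stable slice of traffic to a new agent version before full promotion. The sketch below is a generic illustration under stated assumptions, not an OutSystems API: the version labels and the percentage are hypothetical, and the technique shown is hash based bucketing, so the same ticket or user always lands on the same version.

```python
import hashlib


def canary_bucket(request_key: str) -> int:
    """Map a request key (ticket ID, user ID) to a stable bucket from 0 to 99."""
    digest = hashlib.sha256(request_key.encode("utf-8")).digest()
    # Interpret the first 8 bytes as an unsigned integer, then reduce modulo 100.
    return int.from_bytes(digest[:8], "big") % 100


def choose_agent_version(request_key: str, canary_percent: int,
                         stable: str = "triage-agent@1.4",
                         canary: str = "triage-agent@1.5") -> str:
    """Send roughly canary_percent of keys to the canary version.

    The version labels are hypothetical placeholders.
    """
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    return canary if canary_bucket(request_key) < canary_percent else stable


if __name__ == "__main__":
    keys = [f"ticket-{i}" for i in range(1000)]
    routed = [choose_agent_version(k, canary_percent=10) for k in keys]
    share = routed.count("triage-agent@1.5") / len(routed)
    print(f"canary share: {share:.1%}")  # close to 10%, not exact
```

Hashing instead of random sampling keeps each key pinned to one version for the whole rollout, which makes traces from the two versions comparable; promotion then amounts to raising `canary_percent` step by step.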

Why Model Context Protocol and a marketplace matter

Two design choices stand out.

First, OutSystems highlights support for the Model Context Protocol (MCP), a community standard that defines how language model applications connect to external tools and data. Standards reduce the integration tax that has haunted agent projects. Instead of building a one off connector for every vendor, teams can rely on a consistent interface for tools, resources, and prompts. That lowers switching costs between models and vendors and enables tighter permissioning and auditing because the surface area is predictable. If you want the technical underpinnings, read the Model Context Protocol specification on GitHub.
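To make the "consistent interface" point concrete, here is a minimal sketch of the message shapes the Model Context Protocol (MCP) uses. MCP runs over JSON-RPC 2.0: a server advertises each tool with a name, a description, and a JSON Schema `inputSchema`, and a client invokes it with a `tools/call` request. The `lookup_ticket` tool and the ticket ID are hypothetical, and the required field check is a simplified stand-in for full JSON Schema validation.

```python
import json

# A tool definition in the shape an MCP server returns from tools/list:
# a name, a human readable description, and a JSON Schema for the arguments.
# The tool itself ("lookup_ticket") is a hypothetical example.
LOOKUP_TICKET_TOOL = {
    "name": "lookup_ticket",
    "description": "Fetch a service ticket by ID from the ticketing system.",
    "inputSchema": {
        "type": "object",
        "properties": {"ticket_id": {"type": "string"}},
        "required": ["ticket_id"],
    },
}


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 tools/call request as an MCP client would send it."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


def missing_required(tool: dict, arguments: dict) -> list[str]:
    """Return required argument names the caller left out (not full schema validation)."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


if __name__ == "__main__":
    args = {"ticket_id": "INC-1042"}
    if not missing_required(LOOKUP_TICKET_TOOL, args):
        print(make_tool_call(1, LOOKUP_TICKET_TOOL["name"], args))
```

Because every call shares the same envelope, a gateway can log, permission, or rate limit tool calls without knowing what each tool does internally.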

Second, an agent marketplace helps IT leaders say yes faster. A marketplace is more than a catalog. It is a trust framework that pairs vetted templates with enterprise policies. A procurement ready marketplace means a bank can adopt a complaint escalation template that already conforms to segregation of duties and logging, then adapt it to the bank’s own data and rules. It also nudges the ecosystem toward shared definitions for tool permissions, evaluation criteria, and telemetry. That is how a category matures.

Early wins point to repeatable patterns

OutSystems cites early customers using agents to automate escalations, classify and route tickets, analyze error logs, and speed up data entry. Those stories map to three patterns that most enterprises can repeat:

- Routing and enrichment at the edge of a workflow: when a customer email or sensor alert arrives, an agent can enrich it with context, classify urgency, and route it to the right resolver group. The measurable outcome is faster time to first response and fewer misrouted tickets. In regulated industries, the workbench’s policies ensure enrichment does not leak sensitive data.

- Log and knowledge analysis for operations: operations teams drown in application logs and long troubleshooting guides. An agent can summarize error clusters, propose likely root causes, and link the exact runbook step. The workbench provides a harness to test those suggestions against known incidents so reliability improves rather than drifts.

- Document triage for back office throughput: agents can extract fields from invoices or application forms, validate them against master data, and flag exceptions for humans. The win is higher throughput with consistent controls. Centralized policies define who approves deviations and how long data is retained.
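The first pattern, routing and enrichment, fits in a few lines. In this illustration a keyword rule stands in for the model based classifier, and a regular expression masks email addresses before the text is forwarded; the resolver group names, keywords, and redaction rule are all hypothetical and far simpler than real enterprise policies.

```python
import re
from dataclasses import dataclass

# Hypothetical routing table: (keyword, urgency, resolver group).
# A real agent would classify with a model; keywords keep the sketch deterministic.
ROUTING_RULES = [
    ("outage", "high", "sre-oncall"),
    ("refund", "medium", "billing"),
    ("password", "medium", "identity-desk"),
]
DEFAULT_ROUTE = ("low", "general-support")

# Simplified email pattern used as a stand-in for a real PII redaction policy.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


@dataclass
class RoutedTicket:
    urgency: str
    resolver_group: str
    redacted_text: str


def redact(text: str) -> str:
    """Mask email addresses before the text is enriched or forwarded."""
    return EMAIL_PATTERN.sub("[EMAIL]", text)


def route(message: str) -> RoutedTicket:
    """Classify urgency and pick a resolver group from the first matching rule."""
    lowered = message.lower()
    for keyword, urgency, group in ROUTING_RULES:
        if keyword in lowered:
            return RoutedTicket(urgency, group, redact(message))
    urgency, group = DEFAULT_ROUTE
    return RoutedTicket(urgency, group, redact(message))


if __name__ == "__main__":
    print(route("Checkout outage reported by jane.doe@example.com"))
```

Redacting before routing means the resolver group, and any downstream model call, only ever sees the masked text.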

A key point: the line separating pilots from production is not the model. It is the scaffolding around the model: permissions, lineage, observability, and rollback. A workbench puts that scaffolding in one place.

What this means for CIOs wrestling with legacy modernization

CIOs face a familiar dilemma: modernize core systems slowly and safely, or move fast with sidecar tools that risk duplicating logic and data. An agent workbench offers a third path: treat agents as controlled intermediaries that sit on top of legacy systems, pull context through standard interfaces, and codify business rules in tools that are visible and auditable.

- Modernization through orchestration: unlock high value workflows that span a mainframe, a CRM, and an ERP backbone without refactoring all three at once. Start at the workflow edges where cycle times and error rates are easiest to measure.

- Policy first integration: map the workbench’s policies to your identity and secrets management so each tool call carries the right scope and is recorded with a human readable purpose.

- Evolutionary replacement: as you replace a legacy component, keep the agent contract stable. Swap the connector, not the agent behavior. This reduces change management for frontline teams.
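The policy first and evolutionary replacement ideas fit together: the agent depends on a stable contract, the connector behind it can be swapped, and every call is checked against a scope and logged with a human readable purpose. The sketch below uses a Python `Protocol` for the contract; the connector classes, the `customer:read` scope, and the audit record fields are hypothetical, and the in memory list stands in for a real audit log.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Protocol


class CustomerLookup(Protocol):
    """The stable contract the agent depends on."""

    def get_customer(self, customer_id: str) -> dict: ...


class MainframeConnector:
    """Hypothetical legacy connector."""

    def get_customer(self, customer_id: str) -> dict:
        return {"id": customer_id, "source": "mainframe"}


class CrmConnector:
    """Hypothetical replacement connector that honors the same contract."""

    def get_customer(self, customer_id: str) -> dict:
        return {"id": customer_id, "source": "crm"}


@dataclass
class AuditedToolRunner:
    granted_scopes: set[str]
    audit_log: list[dict] = field(default_factory=list)

    def lookup(self, connector: CustomerLookup, customer_id: str, purpose: str) -> dict:
        required_scope = "customer:read"  # hypothetical scope name
        allowed = required_scope in self.granted_scopes
        # Record every attempt, allowed or not, with its human readable purpose.
        self.audit_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "tool": "get_customer",
            "scope": required_scope,
            "allowed": allowed,
            "purpose": purpose,
        })
        if not allowed:
            raise PermissionError(f"missing scope {required_scope}")
        return connector.get_customer(customer_id)


if __name__ == "__main__":
    runner = AuditedToolRunner(granted_scopes={"customer:read"})
    # Swapping the connector does not change the agent facing call.
    for connector in (MainframeConnector(), CrmConnector()):
        print(runner.lookup(connector, "C-77", purpose="Verify address for complaint escalation"))
    print(len(runner.audit_log), "audit entries")
```

When the mainframe component is retired, only the connector passed to `lookup` changes; the agent's call, its scope, and its audit trail stay the same.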

The near term payoff is time. You can move a high impact workflow into a governed agentic flow without waiting for a multiyear rewrite. The long term payoff is a rationalized integration layer that is easier to test and audit.

Standardized interfaces will accelerate adoption

In the first wave of agent pilots, teams built bespoke connectors and prompt wrappers. Every pilot had different error semantics and monitoring. That approach works for demos and fails at scale. Standardized interfaces change the adoption curve in three ways:

- Interoperability: MCP style tool contracts allow you to swap models or vendors without rewriting business logic. This aligns with the broader trend we discussed in our piece on agents moving into the database, where standardized interfaces pull agents closer to core data paths.

- Security posture: a predictable interface enables allow lists, rate limits, and scoped tokens that can be audited by the same teams that inspect API gateways. For more on this shift, see our piece on runtime security for agentic apps.

- Reliability engineering: once every agent reports the same traces and metrics, you can compare agents fairly, set service level objectives, and run canary rollouts with confidence. The move toward self testing AI coworkers illustrates how evaluation becomes part of the development loop.
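The security posture point can be illustrated with two checks a gateway might run before forwarding a tool call: a per agent allow list and a token bucket rate limit. This is a generic sketch, not any specific gateway's API; the agent and tool names and the limits are hypothetical, scoped tokens are left out for brevity, and time is passed in explicitly so the behavior is easy to test.

```python
from dataclasses import dataclass, field


@dataclass
class TokenBucket:
    """Token bucket: holds up to `capacity` tokens, refilled continuously."""
    capacity: float
    refill_per_second: float
    last_refill: float
    tokens: float = field(init=False)

    def __post_init__(self) -> None:
        self.tokens = self.capacity  # start with a full bucket

    def allow(self, now: float) -> bool:
        """Refill for the elapsed time, then spend one token if one is available."""
        elapsed = max(0.0, now - self.last_refill)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last_refill = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# Hypothetical allow list: which tools each agent may call.
ALLOW_LIST: dict[str, set[str]] = {
    "triage-agent": {"lookup_ticket", "update_ticket_priority"},
}


class ToolGateway:
    """Checks the allow list first, then a per agent rate limit."""

    def __init__(self, capacity: float = 5.0, refill_per_second: float = 1.0) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.buckets: dict[str, TokenBucket] = {}

    def check(self, agent: str, tool: str, now: float) -> str:
        """Return "ok", "denied" (tool not allowed for this agent) or "throttled"."""
        if tool not in ALLOW_LIST.get(agent, set()):
            return "denied"
        bucket = self.buckets.get(agent)
        if bucket is None:
            bucket = TokenBucket(self.capacity, self.refill_per_second, last_refill=now)
            self.buckets[agent] = bucket
        return "ok" if bucket.allow(now) else "throttled"


if __name__ == "__main__":
    gateway = ToolGateway(capacity=2, refill_per_second=1.0)
    print([gateway.check("triage-agent", "lookup_ticket", now=0.0) for _ in range(3)])
    # ['ok', 'ok', 'throttled']
    print(gateway.check("triage-agent", "delete_customer", now=0.0))  # denied
    print(gateway.check("triage-agent", "lookup_ticket", now=1.0))  # ok after refill
```

Denied calls never touch the bucket, so a misbehaving agent probing for disallowed tools cannot exhaust its own budget for legitimate calls.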

The result is pressure on bespoke pilots. Pilots that cannot conform to shared interfaces will stall. Pilots that adopt shared interfaces will be candidates for promotion to a platform. This mirrors how microservices matured a decade ago, moving from prototypes to platforms as contracts solidified.

The next 6 to 12 months: what to expect

This category is moving fast. Here is a grounded view of what shifts between now and mid 2026.

- Agent IDEs dock into operational backbones: expect native bidirectional hooks into systems like IT service management, ERP, and CRM. A ticket created by an agent carries deep links to trace IDs, prompt versions, and tool permissions. An order update from ERP triggers an agent plan with deterministic fallbacks.

- Compliance and observability become table stakes: regulators and internal audit teams will ask what the agent knew, which tools it invoked, and why. Workbenches will ship with prompt version catalogs, red team simulators, and exportable audit packs that align with common frameworks. Expect built in detectors for data residency, secret exfiltration, prompt injection, and tool escalation.

- Workbenches become the new development surface: product managers will sketch an agent’s goal, tools, and handoff criteria in the workbench before any code is written. Developers will build tools, not monoliths, exposing approved actions that agents can call. Quality engineers will own evaluation suites and safety tests. Operations will watch agent runbooks with the same rigor they use for microservices health.

- Multi model agility is normalized: a pricing agent uses one model family while a summarization agent uses another, and both share a common policy, logging, and cost budget. The platform enforces model substitution rules during incidents or cost spikes.

- Agent marketplaces converge: marketplaces will publish not only templates but also standardized safety attestations, evaluation scores against public test suites, and integration badges. Expect internal marketplaces behind the firewall that mirror the public catalog.

- Cost governance gets a seat at the table: agent runs will be tagged to cost centers. Workbenches will enforce monthly budgets and unit cost ceilings per workflow. This will separate projects with measurable savings from those that are merely interesting.
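The multi model agility and cost governance predictions reduce to a small policy function: skip an unhealthy model, respect a unit cost ceiling per run, and stay inside the cost center's monthly budget. In this sketch the model names, prices, budget, and ceiling are hypothetical numbers chosen for illustration; a real workbench would read them from its own configuration and billing data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    cost_per_1k_tokens: float  # hypothetical price in dollars


# Hypothetical primary and fallback models for one workflow, in preference order.
PRIMARY = ModelOption("large-model", 0.010)
FALLBACK = ModelOption("small-model", 0.002)


@dataclass
class CostCenterBudget:
    monthly_budget: float
    spent: float = 0.0


def choose_model(budget: CostCenterBudget, expected_tokens: int,
                 unit_cost_ceiling: float, primary_healthy: bool = True) -> ModelOption:
    """Pick the first model that is healthy, under the per run ceiling, and within budget."""
    remaining = budget.monthly_budget - budget.spent
    for option in (PRIMARY, FALLBACK):
        if option is PRIMARY and not primary_healthy:
            continue  # substitution rule during an incident
        run_cost = expected_tokens / 1000 * option.cost_per_1k_tokens
        if run_cost <= unit_cost_ceiling and run_cost <= remaining:
            return option
    raise RuntimeError("no model fits the cost policy; hand off to a human")


def record_run(budget: CostCenterBudget, option: ModelOption, tokens_used: int) -> None:
    """Charge a completed run to the cost center."""
    budget.spent += tokens_used / 1000 * option.cost_per_1k_tokens


if __name__ == "__main__":
    budget = CostCenterBudget(monthly_budget=500.0)
    print(choose_model(budget, expected_tokens=4000, unit_cost_ceiling=0.05).name)  # large-model
    print(choose_model(budget, expected_tokens=8000, unit_cost_ceiling=0.05).name)  # small-model
    print(choose_model(budget, 4000, 0.05, primary_healthy=False).name)  # small-model
```

Raising an error instead of silently overspending keeps the "human handoff" path explicit, which is what lets finance compare workflows on unit cost.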

A concrete path to production in 90 days

If you lead a platform or application team, here is a focused plan to adopt a workbench without overreaching.

- Week 1 to 2: define a narrow, measurable workflow such as incident triage for a single application, claim intake for one region, or order exceptions for a single product line. Write the agent’s contract: inputs, permitted tools, success criteria, and human handoff rules. Assign an executive sponsor who commits to a numeric target such as a 20 percent reduction in time to first response.

- Week 3 to 4: integrate identity, secrets, and logging. Do not write custom connectors if a marketplace tool exists. Add a synthetic test harness with golden cases and failure cases. Define production gates; for example, keep write actions on non sandbox systems disabled until the agent achieves a pass rate above 95 percent in staging.

- Week 5 to 6: run a controlled pilot with the involved teams. Measure unit costs and error rates per step, not just aggregate outcomes. Expand the agent’s tools only when existing ones are stable. Capture user feedback directly in the workbench as labeled events.

- Week 7 to 9: productionize. Turn on continuous evaluation. Add alerts for drift in model behavior and for cost spikes. Make rollback a documented, single click action inside the workbench. Publish the agent’s usage guide and escalation path. Move to a second workflow only after the first one meets its objectives for two consecutive weekly cycles.

This sequence avoids the two classic failure modes. You sidestep the sprawling pilot that never lands and the brittle launch that lacks guardrails.
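The contract from weeks 1 to 2 and the production gate from weeks 3 to 4 can be captured as plain data plus one check. This is a minimal sketch: `AgentContract`, `GoldenCase`, and the stub agent are hypothetical names, the keyword stub stands in for a real agent, and the 0.95 threshold mirrors the example pass rate in the plan.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class AgentContract:
    """Hypothetical contract: inputs, permitted tools, success gate, handoff rule."""
    name: str
    inputs: tuple[str, ...]
    permitted_tools: frozenset[str]
    min_staging_pass_rate: float     # write actions stay off until this is exceeded
    handoff_below_confidence: float  # route to a human below this model confidence


@dataclass(frozen=True)
class GoldenCase:
    ticket_text: str
    expected_group: str


def pass_rate(agent: Callable[[str], str], cases: list[GoldenCase]) -> float:
    """Fraction of golden and failure cases the agent routes correctly."""
    if not cases:
        return 0.0
    passed = sum(1 for case in cases if agent(case.ticket_text) == case.expected_group)
    return passed / len(cases)


def write_actions_enabled(contract: AgentContract, rate: float) -> bool:
    """Gate: write actions on non sandbox systems require a rate above the threshold."""
    return rate > contract.min_staging_pass_rate


def stub_agent(text: str) -> str:
    """Stand-in for the real agent under test."""
    return "sre-oncall" if "outage" in text else "billing"


if __name__ == "__main__":
    contract = AgentContract(
        name="incident-triage",
        inputs=("ticket_text",),
        permitted_tools=frozenset({"lookup_ticket", "update_ticket_priority"}),
        min_staging_pass_rate=0.95,
        handoff_below_confidence=0.7,
    )
    cases = [GoldenCase("database outage", "sre-oncall"),
             GoldenCase("refund request", "billing")]
    rate = pass_rate(stub_agent, cases)
    print(rate, write_actions_enabled(contract, rate))  # 1.0 True
```

Keeping the contract as data makes it reviewable by the executive sponsor and auditors, while the gate itself stays a single, testable comparison.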

What to watch in OutSystems’ approach

Three signals will show whether Agent Workbench becomes the control plane many enterprises adopt:

- Depth of marketplace curation: templates that ship with robust tests, PII redaction patterns, and default policies reduce setup time from weeks to days. Watch for vertical packages in banking, manufacturing, and public sector.

- First class observability: native traces from plan to tool to human handoff, with correlation IDs and clean export to existing observability stacks, will decide whether operations teams trust the platform.

- Breadth of protocol and connector coverage: strong MCP support and high quality connectors into systems like service management, ERP, and CRM will separate production platforms from demoware.
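To illustrate what "native traces from plan to tool to human handoff, with correlation IDs" means in practice: every step of one agent run shares a correlation ID and points to its parent step, so an operator can pull the whole chain from any single event. The sketch below emits such spans as plain dictionaries; the field names and steps are hypothetical and not tied to OutSystems or any specific observability product.

```python
import uuid
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class Span:
    """One step of an agent run; field names are hypothetical."""
    correlation_id: str
    step: str  # e.g. "plan", "tool:lookup_ticket", "handoff"
    parent: Optional[str]
    span_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    attributes: dict = field(default_factory=dict)


class RunTracer:
    """Records every step of one agent run under a shared correlation ID."""

    def __init__(self) -> None:
        self.correlation_id = uuid.uuid4().hex
        self.spans: list[Span] = []

    def record(self, step: str, parent: Optional[Span] = None, **attributes) -> Span:
        parent_id = parent.span_id if parent is not None else None
        span = Span(self.correlation_id, step, parent_id, attributes=attributes)
        self.spans.append(span)
        return span

    def export(self) -> list[dict]:
        """Flatten spans into plain dicts for an existing observability pipeline."""
        return [asdict(span) for span in self.spans]


if __name__ == "__main__":
    tracer = RunTracer()
    plan = tracer.record("plan", prompt_version="v12")
    tool = tracer.record("tool:lookup_ticket", parent=plan, permission="ticket:read")
    tracer.record("handoff", parent=tool, reason="low confidence")
    for row in tracer.export():
        print(row["correlation_id"][:8], row["step"], row["parent"])
```

Attaching the prompt version and tool permission as span attributes is what makes the deep links described earlier, from a ticket back to the exact run, possible.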

Practical buying criteria for CIOs and platform teams

Use this checklist to compare agent workbenches, including OutSystems, and to keep vendors honest.

- Governance: role based access control mapped to your identity provider, policy as code for prompts and tools, red team simulation, and auditable decision logs.

- Observability: step level tracing, error taxonomies, cost telemetry per tool call, model version pinning, and canary deploys.

- Protocols and connectors: MCP compatibility and vendor supported connectors for your top five systems of record.

- Safety and compliance: data residency controls, secret sanitization, prompt injection defenses, and human in the loop gates per action type.

- Lifecycle: versioning of agents, tools, and prompts with rollback, staging to production promotion, and automated evaluation gates.

- Economics: unit cost ceilings, model selection policies, and native budgeting by cost center.

If a platform cannot demonstrate these on a pilot connected to your systems within two weeks, assume you will be the integrator and plan accordingly.

The bottom line

Agent workbenches are the missing layer that turns agentic ideas into production software. OutSystems has made a strong bet with a general availability release backed by a marketplace, standardized interfaces, and early customer results. The next year will determine which vendors combine low code speed with enterprise grade control. For CIOs, the strategic move is to treat the workbench as a first class development surface, not an add on, and to pick a narrow, high value workflow to prove out governance, integration, and observability. Do that, and agents will stop being a slide nine demo and start becoming software your business can trust.