Gist Answers goes live: licensed AI search for publishers

On September 5, 2025, ProRata.ai lit up a 750-publisher network with Gist Answers, a licensed, revenue-sharing AI search service. Here is how rights-aware retrieval, attribution, and pay-per-query pricing can reshape the web.

September 5 flipped a switch

ProRata.ai’s launch of Gist Answers on September 5, 2025, looks like a tidy product release until you trace the wiring underneath. A startup, not a Big Tech incumbent, has stitched together a 750‑publisher network that feeds licensed content directly into retrieval-augmented generation. Retrieval-augmented generation, often shortened to RAG, means a model does not answer from memory alone. It looks up relevant documents first, then composes a response that cites them. Gist Answers makes that lookup a licensed act with attribution and revenue sharing, not a free ride on scraped text.

The best analogy is refueling. Yesterday’s systems siphoned gas from parked cars and argued about the rules later. Gist Answers pulls into a station, pays by the gallon, prints a receipt, and reconciles the books. Same fuel, different economics, and suddenly the neighborhood runs on agreed rules instead of gray areas.

The moment answers carry receipts, incentives change for publishers, builders, and readers.

What Gist Answers actually is

Gist Answers presents as a search experience, but its core invention is the contract. Behind the interface sits a broker that routes user questions to a permissioned index. That index contains structured content provided by publishers through feeds or an API. Each item carries policy metadata that can be enforced, not just hoped for.

Each content item is tagged with four building blocks:

- A license that defines allowed uses in answers and in model training.

- A price schedule for pay per query, per thousand queries, or per session.

- A time to live for caching plus refresh rules for breaking news.

- Attribution requirements that specify how brand and byline appear.
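
As a rough illustration, the four building blocks could be modeled as a small typed record. This is a minimal sketch, not ProRata.ai's actual schema: every class and field name below (`ContentPolicy`, `ttl_seconds`, and so on) is an assumption made for the example, and the sample values are invented.

```python
from dataclasses import dataclass
from enum import Enum


class PricingUnit(Enum):
    PER_QUERY = "per_query"
    PER_THOUSAND_QUERIES = "per_thousand_queries"
    PER_SESSION = "per_session"


@dataclass(frozen=True)
class License:
    allow_answers: bool    # may the item appear in generated answers?
    allow_training: bool   # may the item be used for model training?


@dataclass(frozen=True)
class PriceSchedule:
    unit: PricingUnit
    price_cents: float     # price for one unit, in cents (assumed currency)


@dataclass(frozen=True)
class CachePolicy:
    ttl_seconds: int                    # time to live for cached copies
    breaking_news_refresh_seconds: int  # hypothetical refresh interval


@dataclass(frozen=True)
class Attribution:
    show_brand: bool
    show_byline: bool


@dataclass(frozen=True)
class ContentPolicy:
    license: License
    price: PriceSchedule
    cache: CachePolicy
    attribution: Attribution


def permits(policy: ContentPolicy, use: str) -> bool:
    """Return True if the license allows the use ("answers" or "training")."""
    if use == "answers":
        return policy.license.allow_answers
    if use == "training":
        return policy.license.allow_training
    return False  # unknown uses are denied by default


# Invented sample values, for illustration only.
policy = ContentPolicy(
    license=License(allow_answers=True, allow_training=False),
    price=PriceSchedule(PricingUnit.PER_QUERY, 0.2),
    cache=CachePolicy(ttl_seconds=24 * 3600, breaking_news_refresh_seconds=300),
    attribution=Attribution(show_brand=True, show_byline=True),
)
```

Denying unknown uses by default is a design choice in this sketch; it matches the idea that policy metadata is enforced rather than hoped for.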

On top of that policy layer, Gist Answers runs retrieval models that fetch the most relevant passages. The generation model composes an answer with inline citations and expandable context. Crucially, citations are not a courtesy. They are a condition of access. If the answer is rendered, the meter runs and the publisher gets paid.

From scraping to licensing

The last decade of search and summarization leaned on crawling websites, scraping text, and arguing later about fair use. That era was already wobbling as lawsuits, robots directives, and content blocklists gained teeth. The September 5 launch marks a break. Content enters the model’s field of view on purpose, under terms that can be measured and enforced.

The practical effects show up immediately:

- A publisher can allow its content to power answers for medical terms but withhold premium recipes or subscriber investigations.

- A local news outlet can set special rates for queries that mention a specific region, where accurate coverage is both scarce and valuable.

- A trade publication can demand paragraph-level attribution and an image credit whenever a chart is reused.

Instead of scraping and apologizing, systems will negotiate and comply.

Why a startup moved first

Incumbent platforms face a classic channel conflict. If a large search engine pays publishers per answer, it risks cannibalizing its own advertising revenue while conceding that long-form answers reduce clicks. A startup can place the opposite bet: maximize answer quality and share the upside with sources. ProRata.ai is betting that a rights-aware index increases trust, improves accuracy, and compresses legal risk in one move.

There is also a speed advantage. Integrating hundreds of publishers requires hands-on business development, data plumbing, and messy edge cases. It is easier to start clean than to retrofit a sprawling crawler and ad stack with license checks at every hop.

How the network plugs into RAG

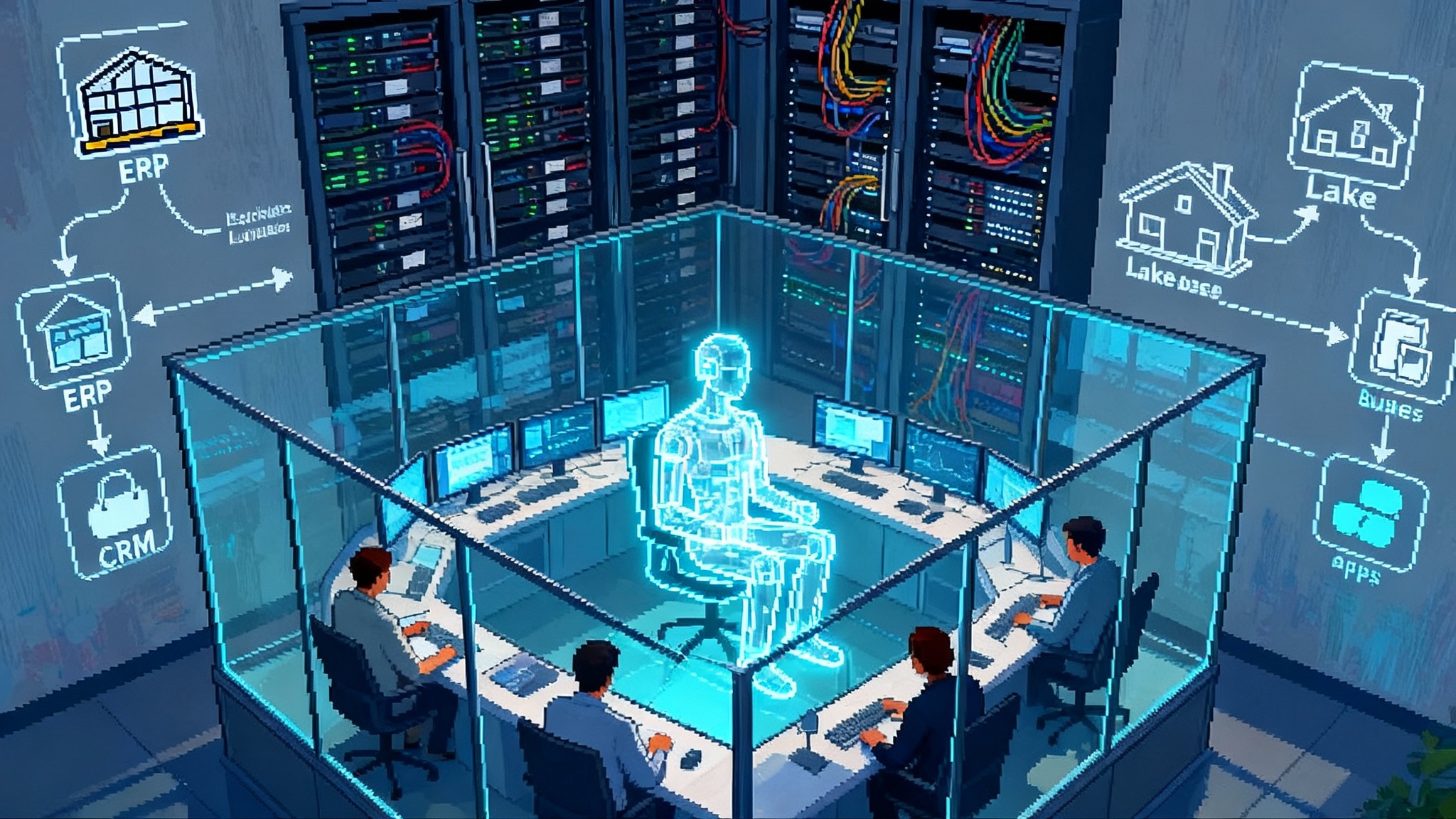

Under the hood, the 750‑publisher network looks less like one database and more like a mesh of tenants. Each publisher’s content passes through five steps that preserve provenance and enforce policy:

- Ingestion: content arrives through a structured feed or a push API with article text, images, captions, author, section, tags, and permission flags.

- Normalization: the system cleans markup, resolves references, deduplicates near‑identical versions, and segments each article into passages of a few hundred words with their own metadata.

- Embedding: each passage is converted into a vector that captures meaning. These vectors go into an index that keeps boundaries intact so passages never lose their source.

- Policy binding: licenses, prices, and attribution rules are attached to each passage like a baggage tag that travels with the text.

- Retrieval and composition: when a user asks a question, the broker pulls only passages that match the policy for that use. The generator composes an answer and stitches in the required citations.

Because each tenant is isolated, publishers can turn knobs without affecting the rest of the network. One might allow full caching for a week to improve speed. Another might forbid caching for financial tickers and enforce real-time refresh.
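
The flow from segmentation through policy-filtered retrieval can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the Gist Answers implementation: the names `Passage`, `ingest`, and `retrieve` are hypothetical, and a toy word-overlap score stands in for real vector embeddings so the example stays self-contained.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    publisher: str
    article_id: str
    index: int               # position of the passage within its article
    text: str
    allowed_uses: frozenset  # policy tag that travels with the text


def segment(text: str, max_words: int = 200) -> list[str]:
    """Split article text into passages of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def ingest(publisher, article_id, text, allowed_uses, max_words=200):
    """Segment an article and bind the publisher's policy to every passage."""
    return [
        Passage(publisher, article_id, i, chunk, frozenset(allowed_uses))
        for i, chunk in enumerate(segment(text, max_words))
    ]


def score(query: str, passage: Passage) -> int:
    """Toy relevance: count of distinct query words present in the passage."""
    return len(set(query.lower().split()) & set(passage.text.lower().split()))


def retrieve(index, query, use, top_k=3):
    """Filter by policy first, then rank only the eligible passages."""
    eligible = [p for p in index if use in p.allowed_uses]
    ranked = sorted(eligible, key=lambda p: score(query, p), reverse=True)
    return [p for p in ranked[:top_k] if score(query, p) > 0]


# Two invented publishers: one licenses answers, one does not.
index = (
    ingest("Health Daily", "a1", "Aspirin is a common pain reliever", {"answer"})
    + ingest("Recipe Vault", "r9", "Premium aspirin free soup recipe", {"subscriber_only"})
)
hits = retrieve(index, "what is aspirin", use="answer")
print([p.publisher for p in hits])
```

The policy filter runs before ranking, so a passage that is not licensed for the requested use never becomes a candidate, however relevant it is.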

Attribution that travels with the answer

Attribution is the sorest point in the move from pages to answers. Gist Answers ties attribution to payment and to the interface. Answers include:

- Inline source lines that name the publication and section.

- A one-click expand control that reveals the exact passages used.

- A path to read more that respects each publisher’s paywall or free tier.

When a user expands a source or clicks through, the event is logged for the publisher and the platform. If an answer renders with any required attribution element missing, the broker flags the failure and withholds access on the next attempt. Attribution moves from decorative flourish to routing rule.
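
One way to picture attribution as a routing rule is a broker that compares what was rendered against what was required, and refuses the next request after a failure. The sketch below is hypothetical: the class name, the element labels, and the choice to flag per client are all assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class AttributionBroker:
    # Hypothetical labels for the three answer elements listed above.
    required_elements: frozenset = frozenset(
        {"source_line", "expand_passages", "read_more_link"}
    )
    flagged: set = field(default_factory=set)

    def authorize(self, client_id: str) -> bool:
        """Access is withheld from clients with a recorded attribution failure."""
        return client_id not in self.flagged

    def report_render(self, client_id: str, rendered_elements) -> set:
        """Record a render and return whichever required elements were missing."""
        missing = set(self.required_elements) - set(rendered_elements)
        if missing:
            self.flagged.add(client_id)
        return missing


broker = AttributionBroker()
missing = broker.report_render("demo-app", {"source_line"})
print(sorted(missing), broker.authorize("demo-app"))
```

A real system would presumably need a way to clear the flag once the client fixes its rendering; that path is left out here.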

From search engine optimization to answer index optimization

Search engine optimization trained teams to write for snippets and headlines. Answer index optimization changes the unit of optimization from page to passage. Rather than packing every keyword into a single page, teams will design content that can be safely reused as a two-hundred-word building block.

Practical steps for publishers:

- Use question-first headings so lead paragraphs plainly answer the likely user query.

- Provide clean paragraph identifiers so passages can be referenced without guesswork.

- Publish structured metadata for licensing, including whether a passage can be quoted in full or summarized only.

- Maintain living explainers that can be sliced into current, background, and evergreen sections.
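
For the paragraph identifiers mentioned above, one possible approach is to combine the paragraph's position with a short hash of its text, so a reference can be checked against the content it points to. This is only a sketch: the `passage_id` helper and its `slug#pN-hash` format are invented for illustration and do not come from any published standard.

```python
import hashlib


def passage_id(article_slug: str, paragraph_index: int, text: str) -> str:
    """Build an identifier from position plus a hash of whitespace-normalized text."""
    normalized = " ".join(text.split())
    digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()[:12]
    return f"{article_slug}#p{paragraph_index}-{digest}"


print(passage_id("laptop-review", 3, "The laptop weighs 1.2 kg."))
```

Because the hash covers the text, an edited paragraph gets a new identifier, which makes stale references detectable. Whether that is desirable depends on how a publisher wants corrections to propagate.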

New metrics join the dashboard. Instead of focusing only on click-through rate, teams will track effective query revenue, average passages per answer, and conversion from answer views to onsite reads. It is still storytelling, but the new distribution channel wants puzzle pieces rather than posters.

This shift aligns with broader changes in how users interact with AI-powered interfaces. If you are exploring agentic UIs, see how agentic browsers cross the chasm and what that means for content that must be composable, auditable, and fast.

Training economics get a new denominator

The old choice in data licensing was to allow training or not. That binary matters less as models rely on retrieval for precision at inference time. The meaningful choices become: what to license for live answers, what to license for fine tuning, and how to price caching.

A simple example for a tech review site:

- Inference use for answers about product specs: 0.2 cents per query, caching allowed for 24 hours.

- Inference use for editorial recommendations: 0.5 cents per query, no caching.

- Fine tuning on historic reviews: a monthly fee based on tokens used for training, with revocation rights if competitors misuse quotes.

On the buyer side, a product team can set a cost ceiling per answer. If a query requires expensive sources, the agent can ask permission to proceed, switch to an open-source fallback, or reframe the question to reduce cost. Pricing becomes a knob in the planning loop. Some knowledge is better memorized. Some is better rented at the moment it is needed.
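
To make the cost ceiling concrete, here is a minimal planning sketch. The 0.2 and 0.5 cent prices come from the example rate card above; the relevance scores, the free fallback source, the 0.6 cent ceiling, and the `plan_sources` function are assumptions for illustration. The selection is a simple greedy pass, not an optimal solver.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Source:
    name: str
    price_cents: Decimal  # price per query, in cents
    relevance: float      # hypothetical relevance score, higher is better


def plan_sources(candidates, ceiling_cents):
    """Greedily keep the most relevant sources whose total price fits the ceiling."""
    chosen = []
    total = Decimal("0")
    for source in sorted(candidates, key=lambda s: s.relevance, reverse=True):
        if total + source.price_cents <= ceiling_cents:
            chosen.append(source)
            total += source.price_cents
    return chosen, total


candidates = [
    Source("product-specs", Decimal("0.2"), relevance=0.9),
    Source("editorial-recommendations", Decimal("0.5"), relevance=0.8),
    Source("open-data-fallback", Decimal("0"), relevance=0.4),
]
chosen, total = plan_sources(candidates, ceiling_cents=Decimal("0.6"))
print([s.name for s in chosen], total)
```

With a 0.6 cent ceiling, the sketch keeps the 0.2 cent specs source and the free fallback, and skips the 0.5 cent editorial source because 0.2 + 0.5 would exceed the ceiling. Prices use `Decimal` so that sub-cent amounts add up exactly.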

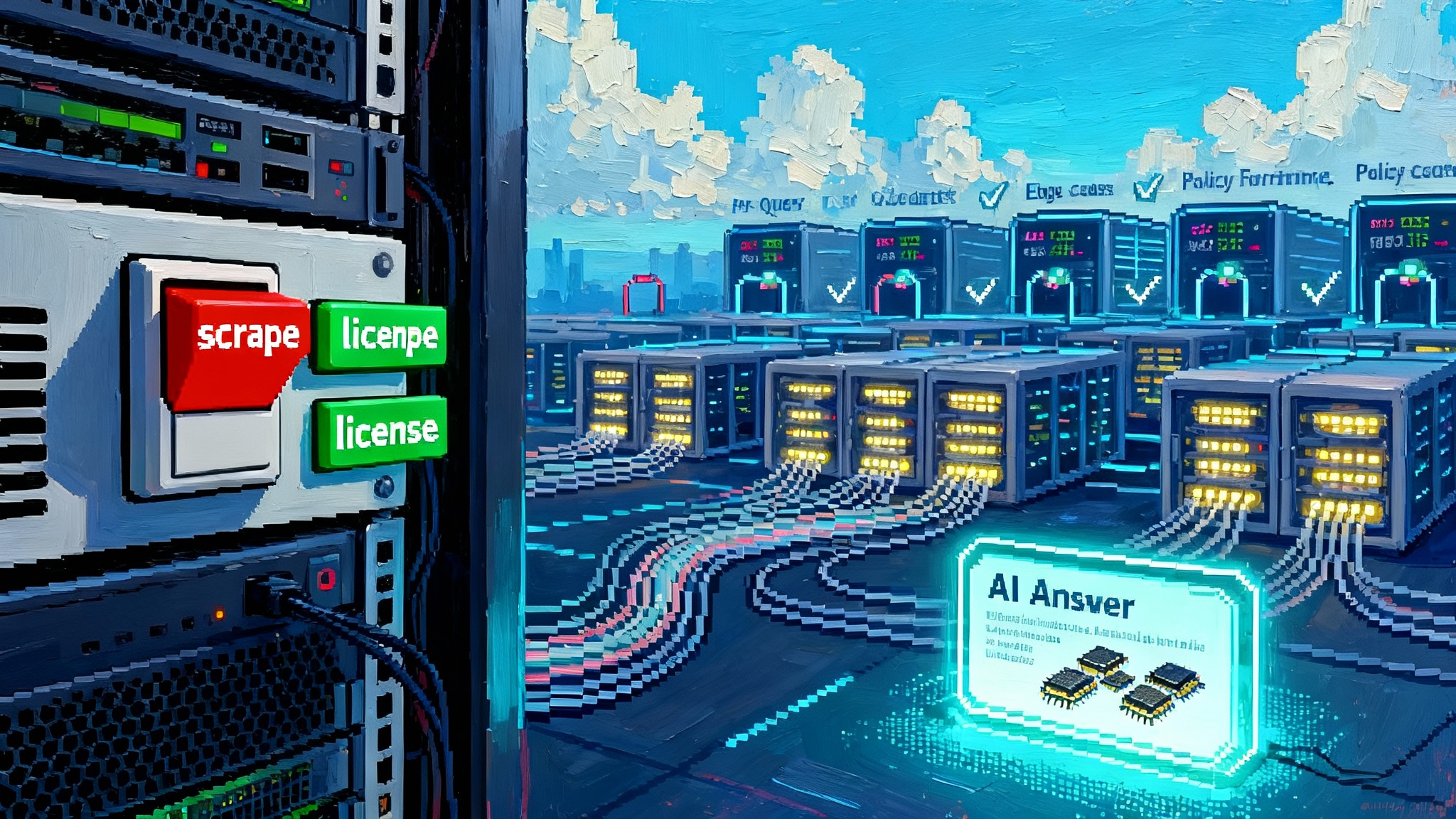

A content delivery network for LLMs

If licensed retrieval becomes the norm, the infrastructure will start to look like a content delivery network designed for model inputs rather than image files. Think of it as a CDN for LLMs.

- The origins are publisher systems that emit signed content objects with policy tags.

- The edge lives near inference endpoints in cloud regions. It caches hot passages locally to cut latency, but only within each passage’s time to live.

- A policy gateway sits in front of the cache. It checks whether the requesting agent has a valid license token, whether the use fits the policy, and whether the meter is running.

- A clearinghouse settles payments to publishers and provides audit logs linking passages to specific answers.

This model upgrades the web’s cache rules with a rights layer. Instead of exchanging only If-Modified-Since and ETag headers, agents also negotiate allowed uses, rates, and retention windows. The payoff is speed with compliance by default.
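
The edge cache behavior described above, serving hot passages only within each passage's time to live, can be sketched as follows. The `PassageCache` class is hypothetical, and a real edge would also sit behind the license and metering checks of the policy gateway, which this example omits.

```python
import time


class PassageCache:
    """In-memory cache that never serves a passage past its time to live."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock  # injectable so expiry can be tested without waiting
        self._store = {}     # passage_id -> (text, expires_at)

    def put(self, passage_id: str, text: str, ttl_seconds: float) -> None:
        if ttl_seconds <= 0:
            return  # a non-positive TTL means the publisher forbids caching
        self._store[passage_id] = (text, self._clock() + ttl_seconds)

    def get(self, passage_id: str):
        entry = self._store.get(passage_id)
        if entry is None:
            return None
        text, expires_at = entry
        if self._clock() >= expires_at:
            del self._store[passage_id]  # expired: evict and report a miss
            return None
        return text


cache = PassageCache()
cache.put("review-42#p3", "The laptop weighs 1.2 kg.", ttl_seconds=24 * 3600)
cache.put("ticker-7#p1", "Price at close: ...", ttl_seconds=0)  # caching forbidden
print(cache.get("review-42#p3") is not None, cache.get("ticker-7#p1") is None)
```

A miss returns `None`, which signals the caller to go back to the origin and, in the model described here, pass through the policy gateway again.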

Pay per query licensing and default compliance

Pay per query sounds simple, but three details make or break the experience.

- Granularity: pricing at the passage level avoids overpaying for whole articles when a single paragraph answers the question.

- Bundling: publishers should offer volume bundles for high-demand categories to stabilize revenue and reduce spend variance for buyers.

- Policy clarity: rights flags must be machine-readable. If a passage is embargoed until an hour after publication, the gateway must enforce that window automatically.

An agent’s request includes four parts: the query, the intended use, the expected display context, and the budget. The gateway replies with an allow or deny plus the price. If allowed, the agent retrieves passages, composes the answer, and posts a receipt on render. The receipt triggers payout.
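
The exchange described above, a four-part request, an allow or deny reply with a price, and a receipt on render, might look like this in code. It is a sketch under stated assumptions: the type names, field names, and deny reasons are invented, and a single flat price stands in for a real rate card.

```python
from dataclasses import dataclass, field
from decimal import Decimal


@dataclass(frozen=True)
class AccessRequest:
    query: str
    intended_use: str      # e.g. "answer" or "training"
    display_context: str   # e.g. "chat" or "search_results"
    budget_cents: Decimal  # the most the agent will pay for this request


@dataclass(frozen=True)
class Decision:
    allowed: bool
    price_cents: Decimal
    reason: str


@dataclass
class Gateway:
    allowed_uses: frozenset
    price_cents: Decimal
    owed_cents: Decimal = Decimal("0")
    receipts: list = field(default_factory=list)

    def decide(self, request: AccessRequest) -> Decision:
        """Reply with allow or deny plus the price."""
        if request.intended_use not in self.allowed_uses:
            return Decision(False, self.price_cents, "use not licensed")
        if self.price_cents > request.budget_cents:
            return Decision(False, self.price_cents, "price exceeds budget")
        return Decision(True, self.price_cents, "ok")

    def post_receipt(self, decision: Decision, passage_ids: list) -> None:
        """Record a render; only an allowed decision can trigger a payout."""
        if not decision.allowed:
            raise ValueError("cannot post a receipt for a denied request")
        self.receipts.append(tuple(passage_ids))
        self.owed_cents += decision.price_cents


gateway = Gateway(frozenset({"answer"}), Decimal("0.5"))
request = AccessRequest("best budget laptop", "answer", "chat", Decimal("1.0"))
decision = gateway.decide(request)
if decision.allowed:
    gateway.post_receipt(decision, ["review-42#p3"])
print(decision.allowed, gateway.owed_cents)
```

Returning the price even on a deny lets the agent decide whether to raise its budget or ask the user for permission before retrying.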

Compliance becomes first class. Answers carry a manifest of which passages were used. That manifest is stored for auditing and can be replayed to reproduce the answer. If a dispute arises, both sides compare manifests and event logs instead of trading accusations.
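
A manifest that both sides can compare could be as simple as a list of passage identifiers and content hashes, plus a digest over the whole record. The structure below is an assumption for illustration, not a published receipt schema, and the sample passage is invented.

```python
import hashlib
import json


def sha256_hex(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def build_manifest(answer_text: str, passages) -> dict:
    """passages is a list of (passage_id, passage_text) pairs, in the order used."""
    return {
        "answer_sha256": sha256_hex(answer_text),
        "passages": [
            {"id": pid, "sha256": sha256_hex(text)} for pid, text in passages
        ],
    }


def manifest_digest(manifest: dict) -> str:
    """Hash a canonical JSON encoding so both parties derive the same digest."""
    canonical = json.dumps(manifest, sort_keys=True, separators=(",", ":"))
    return sha256_hex(canonical)


passages = [("review-42#p3", "The laptop weighs 1.2 kg.")]
manifest = build_manifest("It weighs 1.2 kg [review-42].", passages)
print(manifest_digest(manifest))
```

In a dispute, the publisher and the platform would each recompute the digest from their own logs; a mismatch points to the passage or answer whose hash differs.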

What this means for publishers

The playbook for publishers shifts from page views to passage economics.

- Build a content API that exposes article text, images, captions, and section metadata at the paragraph level. Include licensing flags and pricing schedules.

- Define pricing tiers by use case. Background facts, time-sensitive scoops, and opinion pieces may deserve different rates.

- Adopt a clear caching policy. Some content benefits from rapid answers even if a reader never clicks through. Other content requires fresh context on every view.

- Instrument attribution so brand, byline, and section labels travel with each passage and generate event data when displayed.

- Create inventory views for sales and editorial. Sales needs high-demand categories and query volumes. Editorial needs to see which passages drive answers and where gaps exist.

Treat content like a product line with both wholesale and retail channels. Wholesale feeds the answer market. Retail serves loyal readers who want the full story.

What this means for product teams and agents

If you build applications that answer questions, assume the world becomes licensed by default. That requires a rights-aware retrieval layer that can:

- Evaluate whether a question requires licensed sources or can be satisfied with public data.

- Negotiate and cache within a budget.

- Produce an answer manifest for auditing.

- Degrade gracefully when a source is unavailable or overpriced.

In practice, configure your agent with a cost-per-answer ceiling and a library of fallback strategies. When licensed coverage is thin, the agent can ask a clarifying question to shrink scope, then request only the specific passage needed. This aligns with patterns we see in self‑testing AI coworkers, where agents learn to reason about constraints, not just content.

Security also rises in importance. When answers trigger payments and move licensed material, you need guardrails that detect prompt injection, exfiltration, and misuse. Our coverage of runtime security for agentic apps outlines how policy enforcement, tracing, and isolation become part of the default stack.

Risks and how to reduce them

- Latency: rights checks and cache misses can slow answers. Mitigate with regional caches, prefetching for trending topics, and batch retrieval of related queries.

- Coverage gaps: some important sources will hold out. Reduce gaps with strong open data fallbacks and transparent reporting that earns publisher trust.

- Price spikes: breaking news can be expensive when demand surges. Negotiate surge caps and offer a premium tier where buyers opt in for higher rates with guaranteed freshness.

- Adversarial use: bad actors may try to exfiltrate full articles with crafted prompts. Use rate limits, passage quotas, and anomaly detection to stop extraction patterns.

None of these risks are unique to Gist Answers. They are the realities of turning the web’s knowledge into a metered utility.
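
The passage quotas mentioned under adversarial use could be enforced by tracking how much of each article a client has already pulled. The sketch below is one hypothetical approach: the `ExtractionGuard` class and its 50 percent default threshold are assumptions, and a production system would pair it with rate limits and anomaly detection.

```python
from collections import defaultdict


class ExtractionGuard:
    """Deny requests that would let one client collect too much of one article."""

    def __init__(self, max_fraction: float = 0.5):
        self.max_fraction = max_fraction
        self._seen = defaultdict(set)  # (client_id, article_id) -> passage indexes

    def allow(self, client_id, article_id, passage_index, article_passage_count):
        seen = self._seen[(client_id, article_id)]
        if passage_index in seen:
            return True  # repeats do not reveal new text
        if (len(seen) + 1) / article_passage_count > self.max_fraction:
            return False  # this passage would push the client over the quota
        seen.add(passage_index)
        return True


guard = ExtractionGuard(max_fraction=0.5)
results = [guard.allow("agent-1", "article-9", i, article_passage_count=4) for i in range(4)]
print(results)
```

For a four-passage article and a 50 percent quota, the first two distinct passages are served and the rest are refused, while a different client starts with its own fresh quota.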

The next six months

Expect three waves to build quickly:

- Contracts: large publishers will test pay-per-query bundles tied to categories such as health, finance, and local government. Regional networks will appear as opt‑in packages that agents can purchase in one click.

- Standards: expect practical drafts that define a machine-readable policy format and a receipt schema for answer manifests. The winner will be whatever the first three big buyers and sellers can implement quickly.

- New roles: editorial teams will assign a passages editor to structure explainers for answer reuse. Sales teams will create an answer partnerships lead to negotiate bundles and seasonal rate cards.

On the user side, interfaces will grow explicit about sources and prices. When an answer requires premium sources, users may authorize a small spend that is folded into a subscription or paid on the spot.

Conclusion: the crawl era ends, the contract era begins

The web grew on crawling. Generative systems stretched that habit past its limits. Gist Answers marks a line you can circle on a calendar. On September 5, 2025, licensed retrieval became a product with a price tag and a receipt. That may sound like plumbing, but it changes incentives in ways readers will feel.

Publishers no longer have to choose between being invisible to answers or giving it all away. Builders no longer have to pretend that every piece of text on the internet is fair game. And users get answers that make it clear where knowledge comes from.

The next phase is simple. Treat content like electricity. Pay for what you use. Meter it fairly. Cache it when allowed. Cite the source every time. The web will not lose its openness. It will gain a backbone that lets us build faster, argue less, and make the business of knowledge work for everyone involved.