The Inflection for Coding Agents: Cursor’s Browser Hooks

Cursor's late September update added browser control and runtime hooks, shifting coding agents from helpers to reliable operators. This guide shows how to build guardrails, policy, and evidence loops that ship fixes faster.

The week coding agents grew legs

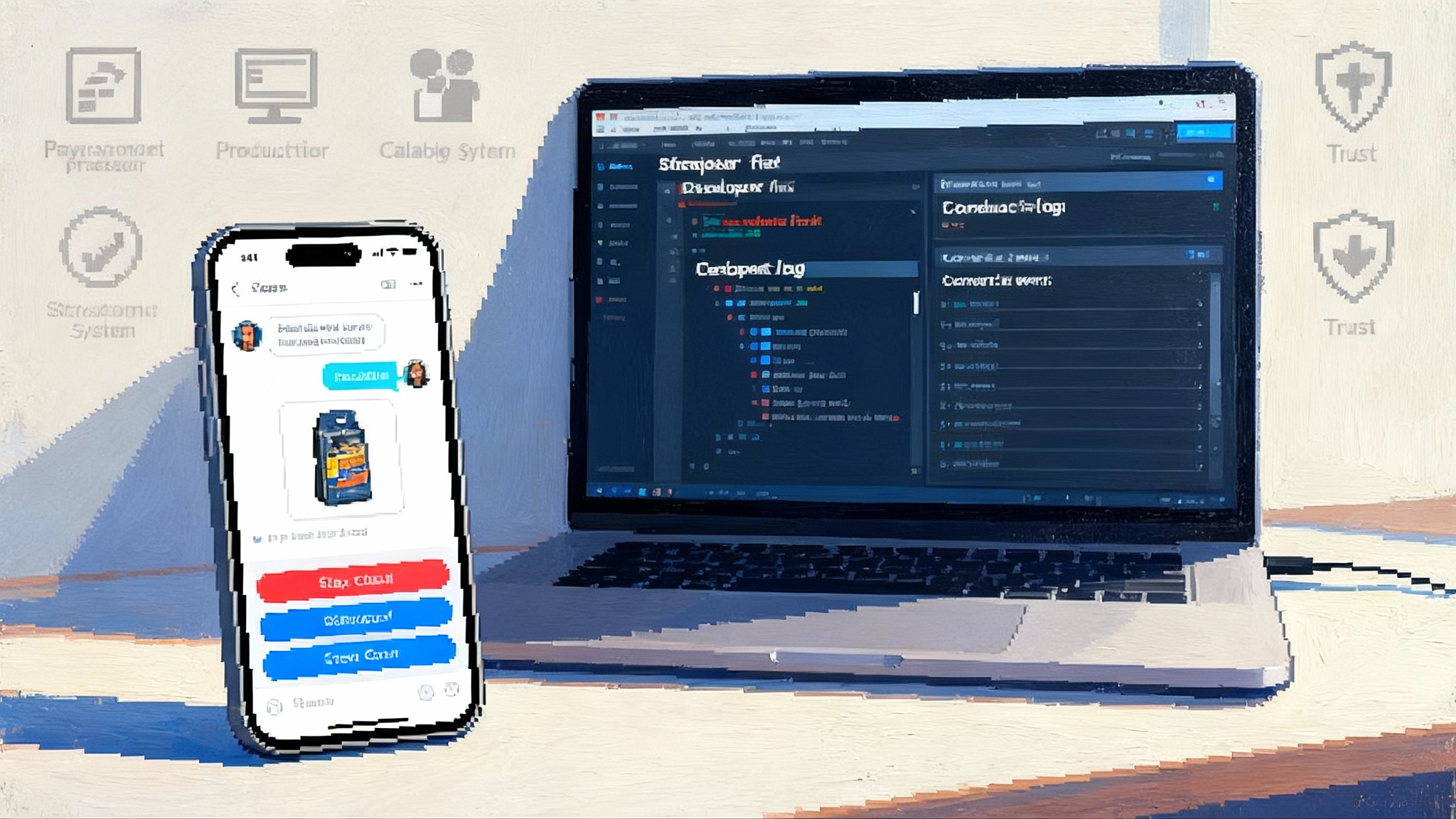

In late September 2025, Cursor shipped an update that moved coding agents from clever sidekicks to dependable operators. Two additions mattered more than they first appeared: browser control and runtime hooks. In practical terms, agents can now look at your product the way users do, click through real flows, capture evidence, and then operate under live policy while you observe and intervene.

If you want the official rundown, read Cursor's late September release notes. The shift is simple to describe. It is profound in effect.

Picture a teammate flagging a login bug that only shows up in Safari with a specific cookie state. Yesterday, an agent could scan source files and propose a patch. Today, an agent can start a browser, reproduce the issue, snap screenshots at the failing step, record console and network traces, gate risky actions through hooks, and open a pull request with a failing test and the proposed fix. The loop moves from suggestive to operative.

What actually changed

Three new surfaces are worth close attention.

- Browser control. Agents can navigate, click, type, evaluate the DOM, gather screenshots, and analyze layouts. That means they can reproduce client bugs rather than guessing from code alone. Cursor provides a short overview in the browser control documentation.

- Hooks at runtime. You can intercept the agent loop before and after tool calls. Observe, restrict, redact, or modify behavior in flight. Hooks add the belt and suspenders that transform a helpful tool into a safe, auditable system.

- Team rules and sandboxed terminals. Policy can be shared across repositories. Risky shell actions default to an offline sandbox with an explicit retry path when needed.

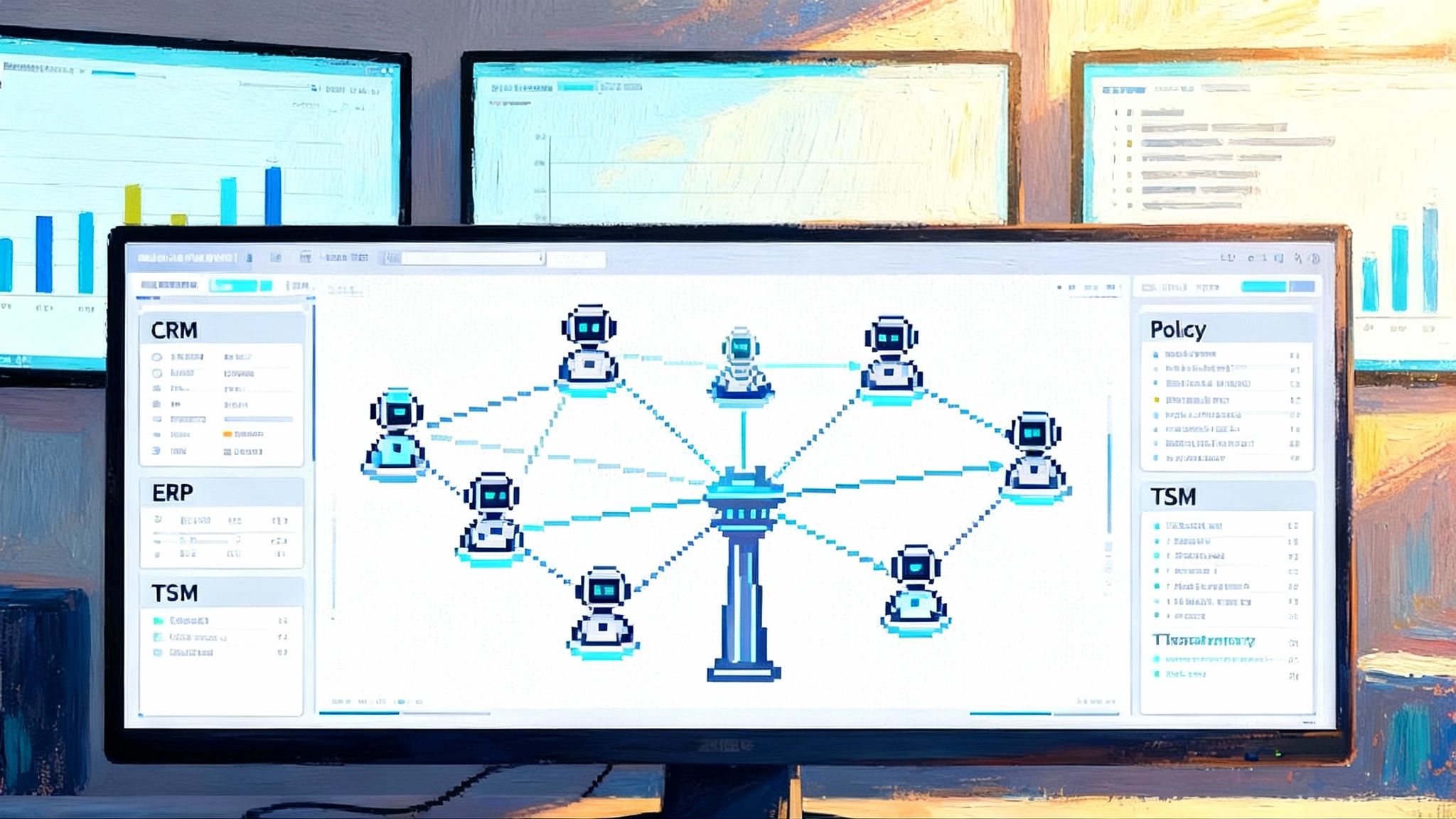

Together these are not just features. They form a platform for managed fleets of software agents.

From editor copilot to a managed fleet

For the last year, most teams treated coding assistants like smarter autocomplete. You kept them inside the editor, watched diffs, and hoped they saved time. That model topped out fast. Complex fixes often require:

- Reproducing a bug in a real browser

- Running end-to-end checks or visual diffs

- Coordinating changes across server, client, and tests

- Opening a pull request with evidence that convinces reviewers

Human engineers move across tools to do this. Without browser control and runtime hooks, agents could not. That gap just closed.

Here is what the new setup enables immediately:

- Agents validate fixes by interacting with the live interface, not just reading code.

- Hooks provide the guardrails to let agents run repeatedly without babysitting.

- Team rules keep behavior consistent across repositories at scale.

- Menubar monitoring and background agents turn status into a glanceable signal instead of guesswork.

The result is a repeatable bug-to-pull-request loop. The word repeatable is the point. A single lucky win is useful. A hundred safe runs per week is a new capability that compounds.

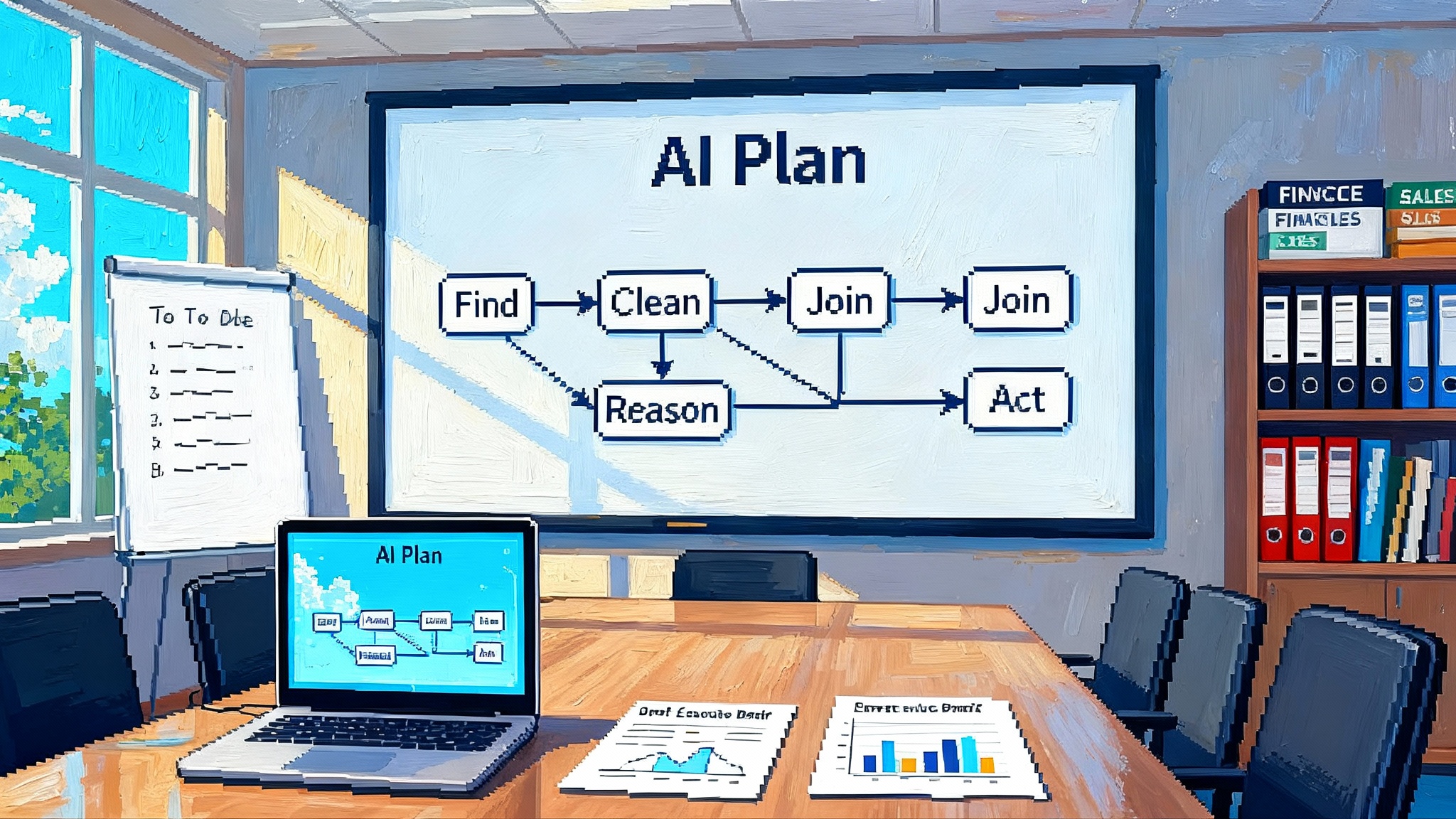

A practical stack you can run this quarter

If you want a serious agent program, assemble a simple, opinionated stack. Keep it boring and observable.

1) Identity and permissions

- Use per-agent identities tied to your identity provider, with least privilege by default.

- Store credentials in your existing secret manager. Do not expose secrets an agent does not need. Rotate tokens tied to agent identities on a fixed schedule.

- Give agents separate commit signatures. Require signed commits for everything they touch.

2) Runtime hooks

- Before action. Inspect the planned tool call and decide if it is allowed, needs redaction, or requires human approval. For example, block unknown domains or external POSTs by default.

- After action. Capture outputs, normalize logs, and emit metrics. If the action changed code, attach a diff fingerprint and the test outcomes.

- On error. Route retryable errors to the agent, and escalate policy violations to a human queue with context.
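The before-action check described above can be sketched as a small decision function. This is a minimal illustration, not Cursor's hook API: the `ToolCall` shape, the domain lists, and the three return values are all assumptions made for the example, and the rule that internal POSTs need approval is one possible strict starting point.

```python
from dataclasses import dataclass
from urllib.parse import urlparse

# Hypothetical domain lists; substitute your own.
INTERNAL_DOMAINS = {"staging.example.com"}
KNOWN_DOMAINS = INTERNAL_DOMAINS | {"docs.example.com"}


@dataclass(frozen=True)
class ToolCall:
    """A planned agent action. Illustrative shape, not Cursor's schema."""
    tool: str            # e.g. "browser.navigate"
    url: str = ""        # empty for non-network tools
    method: str = "GET"


def before_action(call: ToolCall) -> str:
    """Return "allow", "block", or "needs_approval" for a planned call."""
    if not call.url:
        # This sketch only evaluates network-facing calls.
        return "allow"
    host = urlparse(call.url).hostname or ""
    if host not in KNOWN_DOMAINS:
        return "block"                # unknown domain: blocked by default
    if call.method.upper() == "POST":
        if host not in INTERNAL_DOMAINS:
            return "block"            # external POST: blocked by default
        return "needs_approval"       # internal write: assumed to need a human
    return "allow"
```

A real hook would also log each decision so the after-action step can attach it to the audit trail.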

3) Policy and rules

- Keep org rules in a central dashboard rather than scattered config files. Enforce code owner paths, dependency allowlists, commit message conventions, and branch protections.

- Define an escalation ladder. A simple example: unknown domain access by browser leads to an automatic block and a Slack ping to the agent owner.

- Document default approvals. For example, doc fixes on internal markdown can proceed automatically, while build script changes require human sign-off.
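Default approvals like these are easier to review when they live in one ordered rule table. The sketch below assumes hypothetical path patterns and decision names. It treats anything unmatched as needing sign-off, which matches a "start strict" posture but is a choice, not a requirement.

```python
from fnmatch import fnmatch

# Ordered rules, first match wins. Patterns are hypothetical examples.
# Note: fnmatch's "*" also matches "/" so "docs/*.md" covers nested folders.
APPROVAL_RULES = [
    ("Makefile", "human_sign_off"),
    ("scripts/build/*", "human_sign_off"),
    ("docs/*.md", "auto_proceed"),
]
DEFAULT_DECISION = "human_sign_off"


def decide_path(path: str) -> str:
    """Return the decision for a single changed file."""
    for pattern, decision in APPROVAL_RULES:
        if fnmatch(path, pattern):
            return decision
    return DEFAULT_DECISION


def decide_change(paths: list) -> str:
    """A change proceeds automatically only if every file allows it."""
    if not paths:
        return DEFAULT_DECISION
    decisions = {decide_path(p) for p in paths}
    return "auto_proceed" if decisions == {"auto_proceed"} else "human_sign_off"
```

For instance, `decide_change(["docs/intro.md", "Makefile"])` returns `"human_sign_off"` because one file in the change touches the build.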

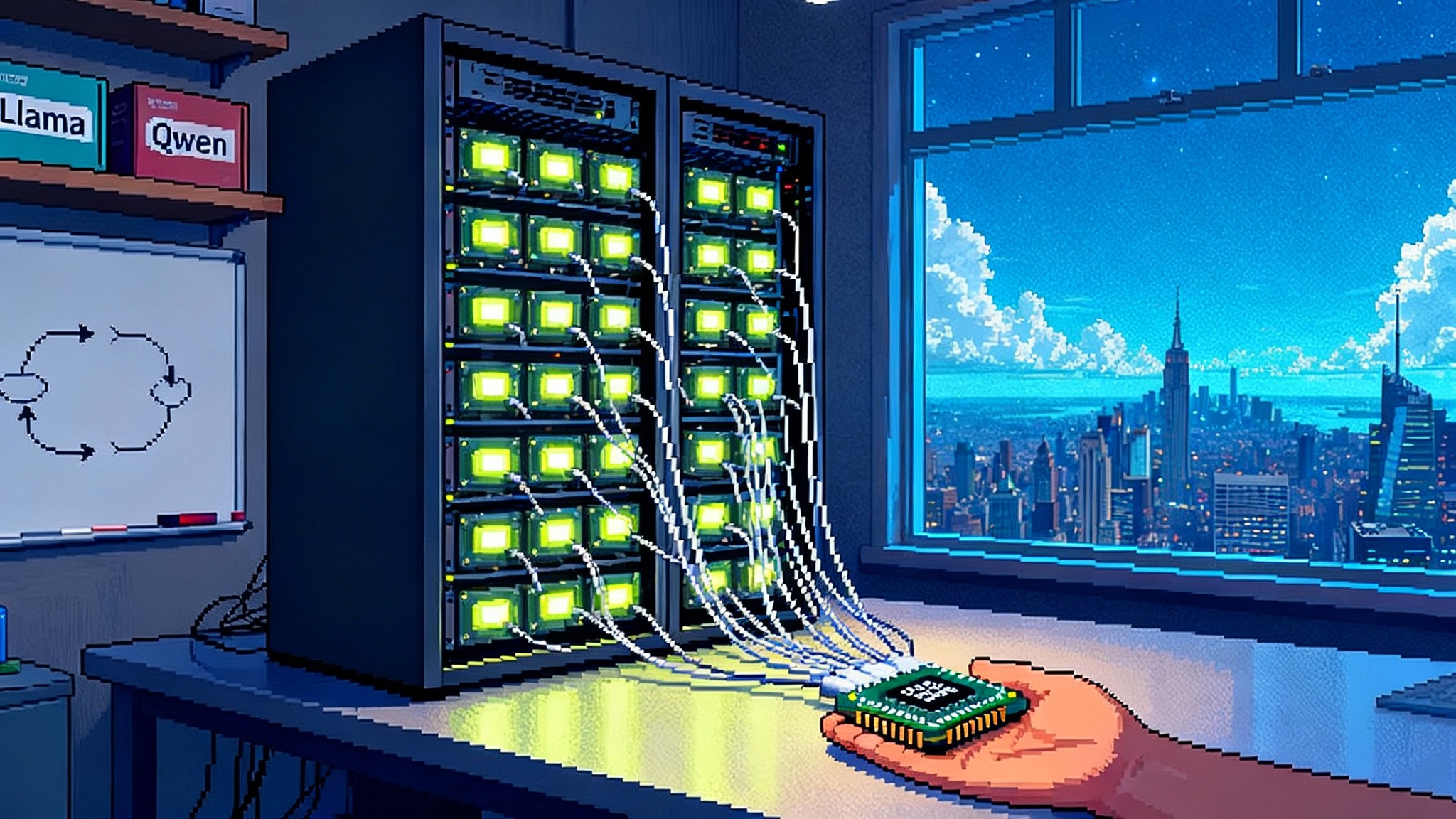

4) Sandboxing and network control

- Default to a command allowlist. When an agent attempts a non-allowlisted command, run it in a local sandbox with no internet access and write access only to the workspace.

- Maintain an egress allowlist for web actions. Keep a dedicated list for analytics and error reporting domains so agents can collect evidence without spraying data.

- Standardize browser versions, fonts, and extensions. Bake them into an image and pin it so runs are comparable.
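One way to express the command allowlist is a planner that decides how a command should run before anything executes. The allowlist contents, the `ExecutionPlan` fields, and the rule that chained shell commands always fall back to the sandbox are assumptions for this sketch. Granting network access to allowlisted commands is likewise an illustrative default.

```python
import shlex
from dataclasses import dataclass

ALLOWED_COMMANDS = {"git", "ls", "npm", "pytest"}  # hypothetical allowlist
SHELL_METACHARACTERS = set(";&|`$<>")


@dataclass(frozen=True)
class ExecutionPlan:
    argv: tuple
    sandboxed: bool
    network: bool
    write_scope: str


def plan_command(command_line: str) -> ExecutionPlan:
    """Decide how a command should run; this function executes nothing."""
    argv = tuple(shlex.split(command_line))
    if not argv:
        raise ValueError("empty command")
    # Conservative heuristic: chaining or substitution could smuggle in a
    # second command, so such lines are never treated as allowlisted.
    chained = any(ch in SHELL_METACHARACTERS for ch in command_line)
    if argv[0] in ALLOWED_COMMANDS and not chained:
        return ExecutionPlan(argv, sandboxed=False, network=True,
                             write_scope="workspace")
    # Non-allowlisted: offline sandbox, writes limited to the workspace.
    return ExecutionPlan(argv, sandboxed=True, network=False,
                         write_scope="workspace")
```

The explicit retry path then becomes a human decision to rerun a sandboxed plan with broader permissions, rather than something the agent can grant itself.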

5) Evidence and audit

- Require the same artifact bundle for every task: repro steps, screenshots, console logs, network traces, code diffs, test results, and a short narrative. Attach the bundle to the pull request.

- Keep a structured audit log. Record who approved what, which hook modified which step, and how policy was applied. This helps both compliance and agent debugging.
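A fixed bundle schema makes it easy to reject incomplete evidence before a pull request opens. The field names below mirror the artifacts listed above; the class, the `missing` check, and the JSON-lines audit format are assumptions for illustration.

```python
import json
from dataclasses import dataclass, field

REQUIRED_ARTIFACTS = (
    "repro_steps", "screenshots", "console_logs",
    "network_traces", "code_diff", "test_results", "narrative",
)


@dataclass
class ArtifactBundle:
    task_id: str
    repro_steps: list = field(default_factory=list)
    screenshots: list = field(default_factory=list)     # file paths
    console_logs: list = field(default_factory=list)
    network_traces: list = field(default_factory=list)
    code_diff: str = ""
    test_results: dict = field(default_factory=dict)
    narrative: str = ""

    def missing(self) -> list:
        """Names of required artifacts that are still empty."""
        return [name for name in REQUIRED_ARTIFACTS if not getattr(self, name)]


def audit_line(timestamp: str, actor: str, step: str,
               hook: str, decision: str) -> str:
    """Serialize one audit event as a JSON line with stable key order."""
    event = {"timestamp": timestamp, "actor": actor, "step": step,
             "hook": hook, "decision": decision}
    return json.dumps(event, sort_keys=True)
```

A gate could refuse to open the pull request while `bundle.missing()` is non-empty, and append one `audit_line` per hook decision to an append-only log.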

6) Human in the loop

- Add fast checkpoints. A two-minute review gate can prevent hours of bad automation.

- Provide a per-agent kill switch and a global emergency stop.

- Publish a short on-call playbook for agent incidents so responders know how to pause, roll back, and escalate.

The new blast radius: security and governance

Agents that can click through the web and open pull requests have real power. They inherit risk along with it. Address three classes of impact up front.

- Data exposure. If an agent can fetch staging pages and upload screenshots, it can leak secrets. Strip tokens from headers in captured network logs. Blur or redact sensitive areas in screenshots using the hooks layer. Scrub PII by default.

- Supply chain changes. Agents that bump dependencies will alter your bill of materials. Enforce dependency policies, run security scanners on every agent pull request, and require signed commits for agent identities.

- Environment drift. A browser session on a developer laptop is not the same as a session in a container. Standardize the browser runtime, headless settings, fonts, and extensions. Pin them and record the image hash in the artifact bundle.
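Stripping tokens from captured network logs, as described in the data exposure item above, can start with two small helpers. The header list and the bearer-token pattern are assumptions and will not catch every secret format, so treat this as a first layer rather than a complete scrubber.

```python
import re

# Headers that commonly carry credentials; extend for your stack.
SENSITIVE_HEADERS = {
    "authorization", "proxy-authorization", "cookie", "set-cookie", "x-api-key",
}
BEARER_PATTERN = re.compile(r"(?i)\bBearer\s+[A-Za-z0-9._~+/=-]+")


def redact_headers(headers: dict) -> dict:
    """Return a copy of the headers with credential values masked."""
    return {
        name: "[REDACTED]" if name.lower() in SENSITIVE_HEADERS else value
        for name, value in headers.items()
    }


def redact_text(text: str) -> str:
    """Mask bearer tokens that appear in free-form log text."""
    return BEARER_PATTERN.sub("Bearer [REDACTED]", text)
```

Running both in the after-action hook, before artifacts are written to disk, keeps raw secrets out of the bundle entirely.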

Keep this contained by defining a small, named blast radius for each agent. Write it down and keep it in version control.

- Which codebases can it edit

- Which domains can it browse

- Which secrets can it read

- Which tests can it run

- Which environments can it touch
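The five questions above map naturally onto a small, version-controlled manifest per agent. The following sketch uses hypothetical names throughout. Every scope defaults to empty, so anything not written down is denied.

```python
from dataclasses import dataclass

SCOPES = ("repos", "domains", "secrets", "test_suites", "environments")


@dataclass(frozen=True)
class BlastRadius:
    """Declared limits for one agent. Empty scopes deny everything."""
    agent: str
    repos: frozenset = frozenset()
    domains: frozenset = frozenset()
    secrets: frozenset = frozenset()
    test_suites: frozenset = frozenset()
    environments: frozenset = frozenset()

    def permits(self, scope: str, name: str) -> bool:
        """True only if the name is explicitly listed under the scope."""
        if scope not in SCOPES:
            raise ValueError(f"unknown scope: {scope}")
        return name in getattr(self, scope)


# Example manifest for a hypothetical QA agent.
qa_bot = BlastRadius(
    agent="qa-bot",
    repos=frozenset({"web-app"}),
    domains=frozenset({"staging.example.com"}),
    test_suites=frozenset({"e2e"}),
    environments=frozenset({"staging"}),
)
```

Hooks can then call `qa_bot.permits("environments", "production")` and block the action when it returns `False`.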

Three build opportunities you can ship now

1) Agent QA farms

Goal: catch visual and interaction regressions before users do.

- Setup. Create an agent pool that runs scripted journeys across supported browsers and form factors. Example journeys include onboarding, purchase, and profile settings. Provide the journeys as plain text steps with selectors and expected outcomes.

- Evidence. For each run, collect screenshots, layout metrics, console output, and network traces. Save a short video when a failure occurs so reviewers can see the issue without rerunning.

- Hooks. Block navigation to unknown domains, redact tokens, and annotate screenshots with element hashes for comparison later.

- Output. When a regression is detected, open a pull request with the failing journey as a test, a suggested fix, and a link to the artifact bundle. Assign the pull request automatically using your code owners file.

- Why it wins. You turn expensive manual checks into a constant background process. Failures arrive with repro steps and a patch rather than a vague bug report.

What to measure in month one:

- Repro time: median minutes from failure to a pull request with a failing test

- Flake rate: percent of failures without a consistent repro on rerun

- Visual diff noise: false positives per one hundred comparisons
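These three metrics are simple enough to compute from run records. A minimal sketch, assuming you already collect the raw counts and durations; the function names are illustrative:

```python
from statistics import median


def repro_time_minutes(durations: list) -> float:
    """Median minutes from failure to a pull request with a failing test."""
    return median(durations)


def flake_rate_percent(failures: int, without_repro: int) -> float:
    """Percent of failures that did not reproduce on rerun."""
    if failures == 0:
        return 0.0
    return 100 * without_repro / failures


def diff_noise_per_hundred(false_positives: int, comparisons: int) -> float:
    """False positives per one hundred visual comparisons."""
    if comparisons == 0:
        return 0.0
    return 100 * false_positives / comparisons
```

With 40 failures of which 6 did not reproduce, `flake_rate_percent(40, 6)` gives 15.0.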

2) CI and CD triage bots

Goal: reduce time spent deciphering broken pipelines and noisy alerts.

- Setup. Subscribe an agent to your continuous integration events. When a pipeline fails, the agent reads logs, maps errors to the nearest code changes, and groups failures by root cause. For common errors, the agent proposes a patch and opens a pull request with an explanation.

- Hooks. Rate limit interventions so that one root cause triggers one pull request. Require human approval for changes to build scripts or deployment manifests. Block secrets in logs.

- Output. A daily digest lists flakiest tests, slowest steps, and the top three failure causes. For each flaky test, the agent opens an issue with a minimal reproduction and tags the owner.

- Why it wins. Teams get time back from yak shaving. The pipeline becomes more predictable, and noisy tests get fixed instead of ignored.
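The "one root cause, one pull request" rule from the hooks item above needs some notion of a root cause key. One rough approach, sketched here under the assumption that error messages differ mostly in numbers and addresses, is to normalize the message and hash it. Real grouping usually needs stack frames or test names as well.

```python
import hashlib
import re

# Replace hex addresses and decimal numbers so similar errors collapse.
VOLATILE = re.compile(r"0x[0-9a-f]+|\d+")


def fingerprint(error_message: str) -> str:
    """Return a short, stable key for a normalized error message."""
    normalized = VOLATILE.sub("N", error_message.strip().lower())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()[:12]


class PullRequestLimiter:
    """Allows at most one pull request per root cause fingerprint."""

    def __init__(self) -> None:
        self._seen = set()

    def should_open(self, error_message: str) -> bool:
        key = fingerprint(error_message)
        if key in self._seen:
            return False
        self._seen.add(key)
        return True
```

Two timeouts that differ only in duration share a fingerprint, so the second call to `should_open` returns `False` and the agent comments on the existing pull request instead.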

What to measure in month one:

- Mean time to green: time from first failure to successful rerun on main

- Flake quarantine time: time from first flaky signal to quarantine or fix

- Pull request latency: time from agent-opened pull request to merge or rejection

3) Autonomous accessibility testing

Goal: ship accessible labels, focus order, and contrast so your product works for everyone.

- Setup. Maintain a library of accessibility journeys, including keyboard-only flows and screen reader hints. On every significant pull request, the agent launches the browser, runs those journeys, and flags violations with clear selectors and screenshots.

- Hooks. Enforce a zero-tolerance policy for regressions in critical flows. Automatically block merges when a severity one accessibility issue is detected. Redact user identifiers in captured artifacts.

- Output. A pull request comment lists violations with suggested code changes. When feasible, the agent submits a patch with ARIA attributes, alt text, or role fixes and attaches a short video of the corrected flow.

- Why it wins. You move accessibility from a late-stage audit to a continuous, demonstrable practice. You also create a living library of examples that new engineers can learn from.
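Contrast is the most mechanical of the checks named in the goal, so it makes a good first automated rule. The sketch below follows the WCAG 2.x relative luminance and contrast ratio formulas, with the 4.5:1 threshold for normal text and 3:1 for large text at level AA. It assumes six-digit hex colors. How an agent extracts foreground and background colors from the page is left out.

```python
def _linear(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a six-digit hex color such as "#1a2b3c"."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linear(r) + 0.7152 * _linear(g) + 0.0722 * _linear(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """Contrast ratio between two colors, from 1.0 up to 21.0."""
    a = relative_luminance(foreground)
    b = relative_luminance(background)
    lighter, darker = max(a, b), min(a, b)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """Check the WCAG level AA contrast threshold."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)
```

Gray `#767676` on white passes for normal text, while the slightly lighter `#777777` does not, which is the kind of near miss that is hard to catch by eye.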

What to measure in month one:

- Violations per pull request over time

- Time to remediate severe issues

- Coverage of key user journeys under keyboard-only navigation

A two-quarter plan to build an advantage

Quarter 1: build the paved road.

- Pick two high-value loops to automate. Flaky test triage and a visual regression check are reliable starters.

- Define your hooks policy. Decide what is blocked by default, what needs approval, and what is allowed automatically. Start strict, then relax as you gain confidence.

- Ship the evidence bundle. Decide exactly which artifacts attach to every agent pull request. Make the bundle consistent and machine-readable.

- Train owners. Give each team an agent owner who is accountable for policy, metrics, and incident response. Keep the feedback loop tight.

Quarter 2: expand and productize.

- Add one new loop per team, such as accessibility checks or documentation drift fixes.

- Turn the paved road into a simple platform. Publish a template so any team can add an agent task with the same guardrails.

- Integrate with planning. When an agent opens a pull request, it should update the linked ticket, add a status, and post in the right Slack channel.

- Track outcome metrics, not just usage. Focus on time to reproduce, time to merge, change failure rate, and the share of pull requests fully handled by agents.

Related reads from the AgentsDB community

The move from sidekicks to operators is showing up across the ecosystem. If you are assembling your first stack, this primer on how to compress the agent stack quickly pairs well with the approach above. For enterprise leaders, see how global shops make enterprise AI agents real. If you are exploring how browsers become control planes, we covered what it takes to turn the browser into a local agent.

What to watch next

- Multi-agent orchestration. Once each loop is reliable, teams will want agents that split work across reproduction, patch, and proof. Keep the interface between them simple and logged.

- Cross-environment parity. Expect requests for the same browser runs on macOS, Windows, and Linux images, and for mobile simulators. Bake parity tests into QA farms early.

- Evidence-rich pull requests. Leaders will start to require screenshots, traces, and tests before they review any agent-generated change. Treat this as a forcing function for quality.

- Policy surfaced in the editor. The line between editor and fleet manager will blur. Expect rule and hook previews in the editing experience so developers can see policy effects before an agent runs.

The bottom line

Browser control and runtime hooks complete a missing circuit for coding agents. Agents can now touch the real interface, verify results, and operate under live policies with clear evidence. That turns assistants into predictable actors. Teams that assemble the simple stack above should see the loop tighten quickly. Bugs arrive reproduced. Fixes arrive with proof. Pull requests carry the context reviewers need.

The investment is not in a magical agent. The leverage is in the guardrails, defaults, and artifacts that make automation safe. The update from late September is an inflection and an invitation. Use the next two quarters to pave one road well, then let your agents run it often. The teams that productize around this surface will not just keep up. They will set the pace.