Replit Agent 3 Goes Autonomous: Build, Test, Ship

Replit Agent 3 turns coding assistants into autonomous builders that write, run, and fix apps in a real browser. See what changed, what to build first, and a practical playbook for safe and cost-aware adoption.

Breaking: coding agents just crossed the builder line

On September 10, 2025, Replit introduced Agent 3 with real browser testing and long autonomous runs that move coding agents from autocomplete helpers to autonomous builders. The headline change is not a tweak to prompts. Agent 3 composes full apps, runs them in a real browser, observes failure, applies fixes, and reports progress with artifacts and logs. It can also generate task-specific agents and recurring automations, then run for extended periods without a human babysitting each step.

For most teams over the past two years, agents felt like clever interns that draft code and wait for review. Agent 3 acts more like a contractor who shows up with a toolbox, uses your staging environment, drives the app, files a bug against itself, applies the fix, and tries again until the tests pass.

What actually changed under the hood

- Real browser testing. The agent opens a browser inside the workspace, clicks through flows, fills forms, and calls APIs like a user would. This is not a synthetic simulation. The feedback loop is the feature.

- Self correction by default. Because it can watch the app fail in preview, the agent proposes edits, applies them, and retests without waiting for triage.

- Longer autonomous runs. Sessions can span hours, which lets the agent tackle multi-step jobs such as scaffolding a backend, wiring a frontend, integrating a datastore, and hardening flows with tests.

- Agent generation. Agent 3 can create other agents and scheduled automations that work in chat tools or on timers. That shifts value from single app builds to workflow engineering across your stack.

- Connectors and imports. New connectors let apps and automations log in to popular services with minimal key wrangling. A Vercel import flow pulls existing projects into Replit so the agent can refactor, test, and ship with live logs.

Think of this as continuous integration with hands. Historically, you merged a pull request, a pipeline failed, and you found out later. With Agent 3, the pipeline runs during creation. The agent fixes failing steps before a pull request appears.
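The build, test, and fix loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Replit's implementation: `build_test_fix`, `run_checks`, and `apply_fix` are hypothetical names, and the "app" is a toy set of routes standing in for a real browser session.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class LoopResult:
    passed: bool
    attempts: int
    log: List[str] = field(default_factory=list)


def build_test_fix(
    run_checks: Callable[[], Optional[str]],
    apply_fix: Callable[[str], None],
    max_attempts: int = 5,
) -> LoopResult:
    """Run checks, apply a fix after each failure, retry until green or out of attempts.

    run_checks returns None on success or a short failure description.
    apply_fix receives that description and edits the app (hypothetical hook).
    """
    log: List[str] = []
    for attempt in range(1, max_attempts + 1):
        failure = run_checks()
        if failure is None:
            log.append(f"attempt {attempt}: all checks passed")
            return LoopResult(True, attempt, log)
        log.append(f"attempt {attempt}: {failure}")
        if attempt < max_attempts:
            apply_fix(failure)
    return LoopResult(False, max_attempts, log)


# Toy stand-in for an app: one required route is missing until it is "fixed".
routes = {"/login"}


def check_routes() -> Optional[str]:
    missing = {"/login", "/reset-password"} - routes
    return f"404 on {sorted(missing)[0]}" if missing else None


def add_missing_route(failure: str) -> None:
    routes.add(failure.split(" on ")[1])


result = build_test_fix(check_routes, add_missing_route)
print(result.passed, result.attempts)
```

The attempt cap matters as much as the loop itself: without it, a fix that never lands turns into an unbounded run.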

From assisted coding to compiled by agents

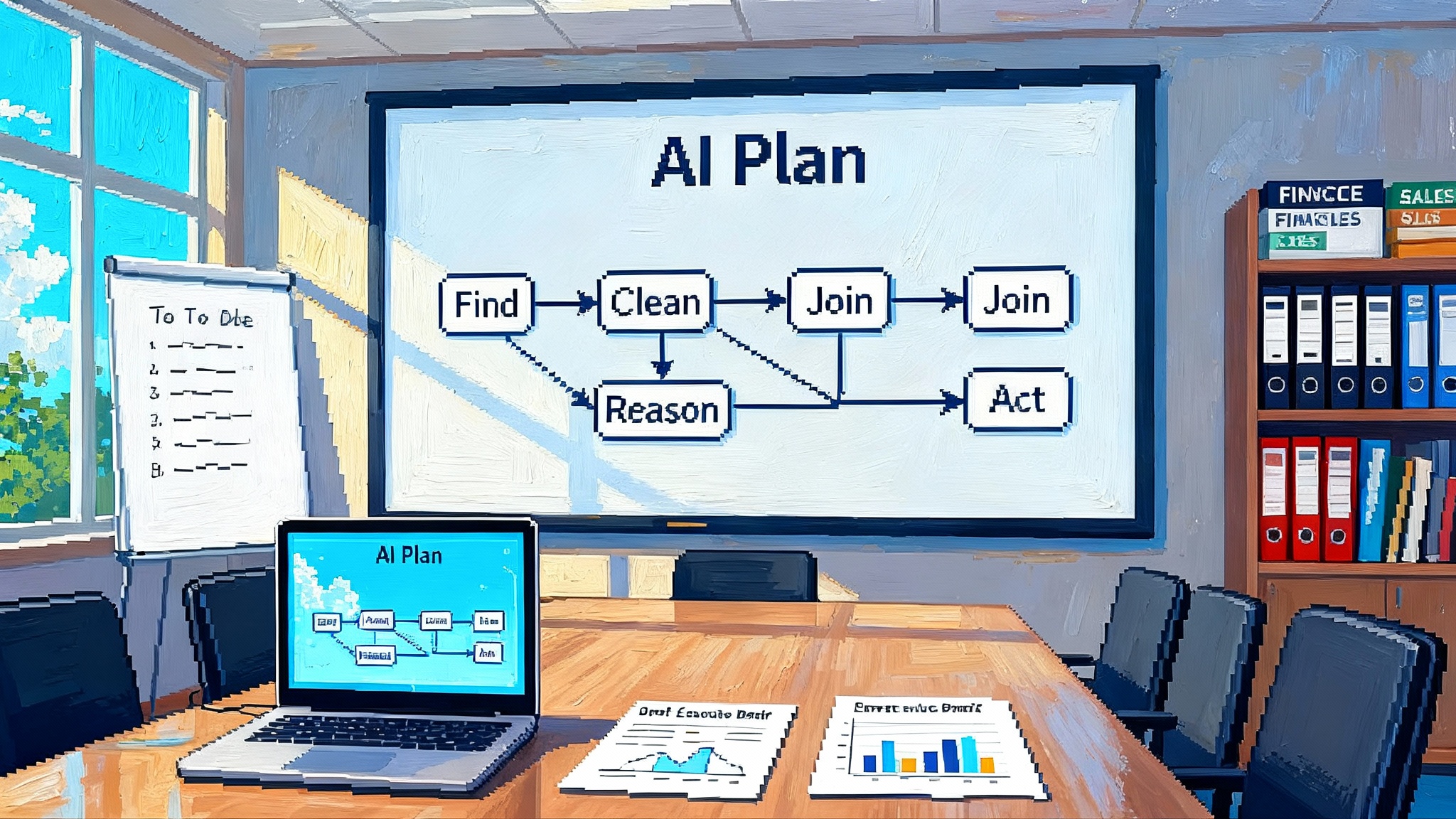

This shift is as much conceptual as it is technical. Traditional compilers turn human readable programs into machine code. An autonomous agent turns a short specification into a running app with tests and automations. The new target is not only a package or binary. The target is an artifact that boots, renders, authenticates, calls external services, and passes interaction tests that mimic user behavior.

In practical terms, a solo founder can describe an admin dashboard with authentication, two database tables, and a metrics panel. The agent scaffolds the backend, generates the frontend, connects the data store, opens the browser, creates a user, tests the login flow, notices the password reset route returns 404, adds the missing handler, and retries. Minutes later there is a working staging app with a checklist of what was tested and changed.

Connectors and the distribution pipeline

Two new building blocks push Agent 3 past the demo stage and into production intent.

- Connectors. Authenticate once with services like Google Drive, Salesforce, Notion, or Linear, then treat those connections as first-class building blocks. The agent can fetch records, write updates, and handle typed errors through a common interface. Your app code stays cleaner and the agent does not need to juggle one-off credentials.

- Vercel imports. If your team deploys with Vercel, you can import a project into Replit by linking the repository. The agent scans the codebase, requests the minimum secrets, boots the app with live logs, and begins refactoring and testing. Existing projects become agent editable, agent testable, and agent shippable without tearing down your toolchain.

Put the two together and you get a credible path to production. The agent builds the app, validates the surface area, stitches in your systems, and ships to a runtime the team can observe and control.

Why the timing matters

Momentum matters for platform shifts. On the same day Agent 3 arrived, Replit secured new financing that Reuters reported at a three billion dollar valuation. That injection of capital, paired with a visible shipping cadence, suggests the company is aligning resources behind autonomy, connectors, and import flows rather than incremental editor features.

When capital, release velocity, and developer on ramps line up, production usage tends to follow. The friction that stalled many agent demos in 2023 and 2024 was integration and deployment. That is the exact friction this release targets.

What to build first: internal tools and stitched automations

Start with jobs where latency, scale, and compliance risks are modest and where value is easy to see.

- Sales pipeline triage. Pull deals and stages from Salesforce, join to product usage, and post a daily risk list to Slack. The agent builds the dashboard and the posting automation. You monitor one configuration file.

- Marketing content ops. Draft landing pages for a campaign, connect to a content store, auto check links and images in the browser, and send a review URL. The agent retries tests until every section renders.

- Support tooling. Build a case deflection page that queries known issues from Notion and a search index. The agent validates that the search result view behaves as expected and that the contact form still submits cleanly.

- Finance reconciler. Pull expenses from a card provider export, match to contracts in storage, and raise exceptions for vendor mismatches. The agent creates a nightly automation with a single connector.

- Engineering runbooks. Stand up a simple on-call tool that receives webhooks from your incident platform, renders status, and opens a follow-up issue with a prefilled template. The agent handles auth and end-to-end form tests.

Treat each as a mini product. Write a short spec, define two or three acceptance tests the agent must pass, and assign a time budget. Early wins build trust in how the loop behaves on your stack.

A practical playbook for team adoption

- Choose a constrained target. Pick one internal tool or automation that touches at most two external systems. Fewer moving parts means better feedback loops.

- Write acceptance tests first. Define what the agent must prove in the browser. Examples: a new user can sign up and log in, a form submit returns a success state, a database record persists and renders in a list.

- Set budgets and autonomy. Give the agent a time budget and enable longer runs only when the spec is crisp. Track whether extra autonomy created more value than cost.

- Instrument everything. Surface agent actions in a timeline. When it edits code, commits, runs tests, or invokes connector actions, you want logs and screenshots.

- Lock down secrets. Use scoped credentials for connectors and least privilege by default. Treat each automation like a service account.

- Phase releases. Ship to a staging URL first. Promote only after a short soak where agent tests run on schedule and pass.

- Require readable diffs. When the agent modifies code, require a commit message that references the failing test and includes a concise rationale.

- Train on your patterns. Seed the repo with example tests, stable selectors, and naming conventions so the agent learns your idioms.
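The "readable diffs" rule above can be checked mechanically, for example in a pre-merge hook. The sketch below assumes a made-up convention: a header of the form `fix(<test-id>): <summary>` plus a `Why:` line. Neither the format nor the function name comes from Replit, so adapt both to whatever your team already uses.

```python
import re

# Hypothetical convention: "fix(<test-id>): <summary>" header, then a "Why:" line.
HEADER = re.compile(r"^fix\((?P<test>[\w./:-]+)\): \S.{0,71}$")


def check_commit_message(message: str, failing_tests: set) -> list:
    """Return a list of problems; an empty list means the message is acceptable."""
    problems = []
    lines = message.strip().splitlines()
    match = HEADER.match(lines[0]) if lines else None
    if match is None:
        problems.append("header must look like 'fix(<test-id>): <summary>'")
    elif match.group("test") not in failing_tests:
        problems.append(f"unknown test id: {match.group('test')}")
    rationale = [line for line in lines[1:] if line.startswith("Why: ")]
    if not rationale:
        problems.append("missing 'Why:' rationale line")
    elif len(rationale[0]) > 200:
        problems.append("rationale should be concise (200 characters or fewer)")
    return problems


good = (
    "fix(test_password_reset): add missing reset handler\n"
    "\n"
    "Why: the /reset-password route returned 404 in the browser test."
)
print(check_commit_message(good, {"test_password_reset"}))
print(check_commit_message("update stuff", {"test_password_reset"}))
```

Returning a list of problems rather than a boolean lets the agent read the rejection and rewrite the message on its next attempt.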

Evals, guardrails, and what remains open

- Coverage for interactive tests. Browser tests are powerful, but you still need confidence that the agent touched the flows that matter. Require a test plan that maps to user stories and track gaps over time.

- Non-determinism. Two runs should not produce two incompatible apps. Request reproducible builds with container snapshots, pinned dependencies, and seedable test data.

- Contract tests for connectors. Each external service deserves a narrow schema of expectations. A Notion connector, for example, should raise a typed error when a database is missing and should support a dry run mode.

- Human approvals for risky actions. Let the agent propose schema migrations and environment variable changes, but gate the final step behind a button with context and a short diff.

- Rollback paths. If a deploy fails after passing agent tests, you need a one click rollback and a snapshot of the last known good state.
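As a rough illustration of the connector contract idea above, the sketch below defines a narrow interface, a typed error for a missing database, and a dry run flag, then checks any implementation against those expectations. All names here (`RecordConnector`, `DatabaseNotFound`, `InMemoryConnector`, `run_contract`) are hypothetical. The in-memory class stands in for a real Notion or Salesforce client and does not reflect either service's API.

```python
from typing import Dict, List, Protocol


class DatabaseNotFound(Exception):
    """Typed error: the requested database does not exist or is not shared."""


class RecordConnector(Protocol):
    def fetch(self, database: str) -> List[dict]: ...
    def write(self, database: str, record: dict, dry_run: bool = False) -> dict: ...


class InMemoryConnector:
    """Stand-in for the sketch; a real connector would call the external service."""

    def __init__(self, databases: Dict[str, List[dict]]):
        self._databases = databases

    def fetch(self, database: str) -> List[dict]:
        if database not in self._databases:
            raise DatabaseNotFound(database)
        return list(self._databases[database])

    def write(self, database: str, record: dict, dry_run: bool = False) -> dict:
        if database not in self._databases:
            raise DatabaseNotFound(database)
        if not dry_run:
            self._databases[database].append(record)
        return {"database": database, "record": record, "applied": not dry_run}


def run_contract(connector: RecordConnector, known_db: str) -> List[str]:
    """Return descriptions of failed contract checks (empty means all passed)."""
    failures = []
    try:
        connector.fetch("__missing__")
        failures.append("missing database must raise DatabaseNotFound")
    except DatabaseNotFound:
        pass
    before = len(connector.fetch(known_db))
    connector.write(known_db, {"title": "probe"}, dry_run=True)
    if len(connector.fetch(known_db)) != before:
        failures.append("dry run must not persist records")
    return failures


print(run_contract(InMemoryConnector({"issues": []}), "issues"))
```

Running the same `run_contract` against the stand-in and against the live connector in staging is what makes it a contract: both must satisfy the same small set of expectations.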

Manage cost without killing momentum

Replit says Agent 3's testing system is faster and cheaper than general computer use models, which matters when the agent is clicking around a browser for minutes at a time. Still, autonomy consumes runtime, test environments, and external API calls. A few habits keep costs predictable.

- Budget by objective, not token. Set a ceiling per task measured in wall clock minutes and connector calls. If a task exceeds the ceiling, capture state and stop.

- Cache knowledge, not only artifacts. Persist discovered configuration like runtime versions and verified routes so the agent does not rediscover basics on every run.

- Replay tests selectively. If the agent only touched frontend styles, do not rerun heavy backend tests. Use change aware plans.

- Prefer events over schedules. Nightly automations are simple, but event triggers waste less time. React to a new record, a merged pull request, or a webhook.

- Share connectors with scopes. Reuse a single authenticated connection where possible, but fence each app to its own scoped view of data.
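To make "budget by objective" concrete, here is a minimal sketch of a per-task ceiling measured in wall clock minutes and connector calls. `TaskBudget` and `BudgetExceeded` are hypothetical names, not part of any Replit API, and the injectable `clock` parameter is an assumption added so the example can be exercised without waiting.

```python
import time
from dataclasses import dataclass, field
from typing import Callable


class BudgetExceeded(Exception):
    """Raised when a ceiling is crossed; carries the captured task state."""

    def __init__(self, reason: str, snapshot: dict):
        super().__init__(reason)
        self.snapshot = snapshot


@dataclass
class TaskBudget:
    max_minutes: float
    max_connector_calls: int
    clock: Callable[[], float] = time.monotonic  # injectable for tests
    connector_calls: int = 0
    started_at: float = field(init=False, default=0.0)

    def __post_init__(self) -> None:
        self.started_at = self.clock()

    def elapsed_minutes(self) -> float:
        return (self.clock() - self.started_at) / 60.0

    def record_connector_call(self) -> None:
        self.connector_calls += 1

    def check(self, state: dict) -> None:
        """Raise BudgetExceeded with a copy of state once either ceiling is crossed."""
        if self.elapsed_minutes() > self.max_minutes:
            reason = "wall clock ceiling exceeded"
        elif self.connector_calls > self.max_connector_calls:
            reason = "connector call ceiling exceeded"
        else:
            return
        raise BudgetExceeded(reason, dict(state))


# Usage with a fake clock so the example finishes instantly.
fake_now = [0.0]
budget = TaskBudget(max_minutes=10, max_connector_calls=2, clock=lambda: fake_now[0])
budget.check({"step": "scaffold"})  # within budget, nothing happens
fake_now[0] = 11 * 60  # pretend eleven minutes have passed
try:
    budget.check({"step": "wire frontend"})
    stopped = None
except BudgetExceeded as exc:
    stopped = (str(exc), exc.snapshot)
print(stopped)
```

Calling `check` between agent steps, rather than on a timer, means the stop always lands at a point where the state snapshot is meaningful.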

Failure modes to watch and how to mitigate them

- Flaky end-to-end tests. Interactive tests sometimes pass by luck. Require explicit waits, stable selectors, and screenshots for auditing.

- Drift in permissions. OAuth scopes and enterprise policies change. Add a daily dry run that checks connection health for each automation and posts a summary to the team channel.

- Silent loops on long runs. When sessions last hours, costly loops can hide. Add a heartbeat, a hard stop with a state snapshot, and alerts for repeated retries.

- Upstream outages. Build fallbacks that switch an automation to safe mode or queue work locally. Show retries and backoffs in the agent’s timeline.
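The silent loop mitigation above (a heartbeat, a hard stop with a state snapshot, and an alert on repeated retries) could look roughly like the following. `RetryWatchdog` is a hypothetical helper written for illustration. A real setup would also push the heartbeat and the alert to whatever monitoring the team already runs.

```python
from collections import Counter


class RetryWatchdog:
    """Counts repeated failures and signals a hard stop when one keeps recurring."""

    def __init__(self, max_repeats: int = 3):
        self.max_repeats = max_repeats
        self.failures = Counter()
        self.heartbeats = 0

    def heartbeat(self) -> None:
        # Call once per agent step; a stalled counter means the run is stuck.
        self.heartbeats += 1

    def record_failure(self, signature: str) -> bool:
        """Return True once the same failure has been seen max_repeats times."""
        self.failures[signature] += 1
        return self.failures[signature] >= self.max_repeats

    def snapshot(self) -> dict:
        return {"heartbeats": self.heartbeats, "failures": dict(self.failures)}


# Simulated long run in which every step hits the same error.
watchdog = RetryWatchdog(max_repeats=3)
stopped_with = None
for step in range(10):
    watchdog.heartbeat()
    if watchdog.record_failure("TimeoutError: #submit not found"):
        stopped_with = watchdog.snapshot()  # hard stop: capture state, then exit
        break
print(stopped_with)
```

Keying the counter on a failure signature rather than a raw retry count separates an agent making progress through different errors from one repeating the same mistake.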

Multi agent patterns on the near horizon

Expect specialization. One agent focuses on browser testing, another on data migrations, another on connector health. A lightweight coordinator delegates and reconciles. Over time, a built in eval suite will act like unit tests for agents so you can measure autonomy gains release by release. Project memory with provenance will let the agent recall past failures and fixes with links to the diffs it made. Richer staging controls will supply ephemeral preview environments seeded with example data so browser tests simulate real usage.

Templates will improve too. Marketplace quality starters will include connectors, tests, and deployment playbooks tuned for specific jobs such as a sales pipeline dashboard or a support triage bot. That shrinks the gap from greenfield idea to production ready internal tool.

How this compares with other agent stacks

Replit’s bet on real browser execution fits a broader pattern. Earlier this year, Cursor's browser hooks changed expectations about how much of the developer loop an agent should own inside the editor. On the stack side, AgentKit compresses the agent stack so teams can ship faster with fewer moving parts. Security-sensitive teams can look to autonomous pentesting with Strix as a reminder that autonomy needs strong guardrails when systems touch production.

Taken together, these moves suggest the market is standardizing around agents that do real work in real environments, with clear traces, typed errors, and testable contracts.

Bottom line

Agent 3 is the first mainstream path to apps that are built, tested, and shipped by agents rather than humans babysitting a cursor. The killer feature is not a smarter prompt. It is an execution loop that composes code, runs it in a real browser, observes failure, and fixes itself. With connectors and a simple import path for existing projects, the platform meets teams where they already are.

Start small. Pick one internal tool, write three acceptance tests, and give the agent a hard budget for both time and cost. If the loop repeats and the numbers hold, scale to the next app. At that point you are not just using an agent. You are practicing compiled-by-agents development, where the artifact of your spec is a running product that proves it works.